Elementary, Watson: you integrate with Voximplant


Natural language processing (NLU, NLP) is an area of intense competition between IT giants, and so is the development of AI. Accordingly, the intersection of the two is a hot niche that is interesting to watch and learn from. Voximplant has long been friends with Google Dialogflow, so much so that we built a wrapper for the integration - Dialogflow Connector. IBM has a counterpart, the Watson-based Voice Agent, which does the same job: automating flexible, sensible conversations with customers to replace the classic IVR. We tried the technology on a simple demo, and today we will show you step by step how to do the same. While you are reading this, our developers are continuing to work on a wrapper for this integration ...

What do we do?

No rocket science - we’ll create the simplest bot: we call it, it says hello and offers two options. One option loops the conversation; the other ends it, and the bot says goodbye. A nuance: at the time of writing, Watson supports only four languages - English, Arabic, Portuguese and Chinese (Simplified) - so the demo will be in English, as the most familiar of them.

Resource Creation

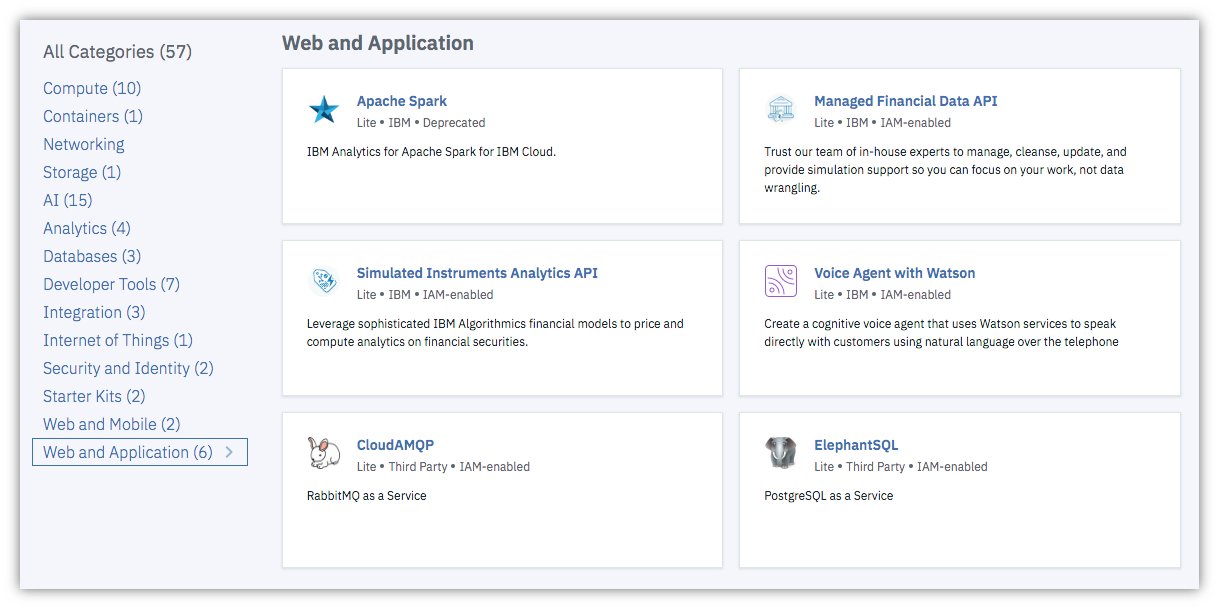

If you do not have an IBM Cloud account, register at cloud.ibm.com. Log in to your account and click Create resource in the upper right corner; the resource catalog will open. On the left, select the Web and Application category, then click Voice Agent with Watson on the right side of the screen.

You can leave all the fields (Service name, region, etc.) at their defaults. At the bottom of the screen, click Create and go make some tea (we are joking because IBM Cloud is not always quick to respond). When the resource is created, you will be taken to its dashboard, where you will see the resource name, its location (Washington, DC) and, on the left, the resource management menu. Soon we will need the Manage item from this menu, but first we need to buy ...

Voximplant Number

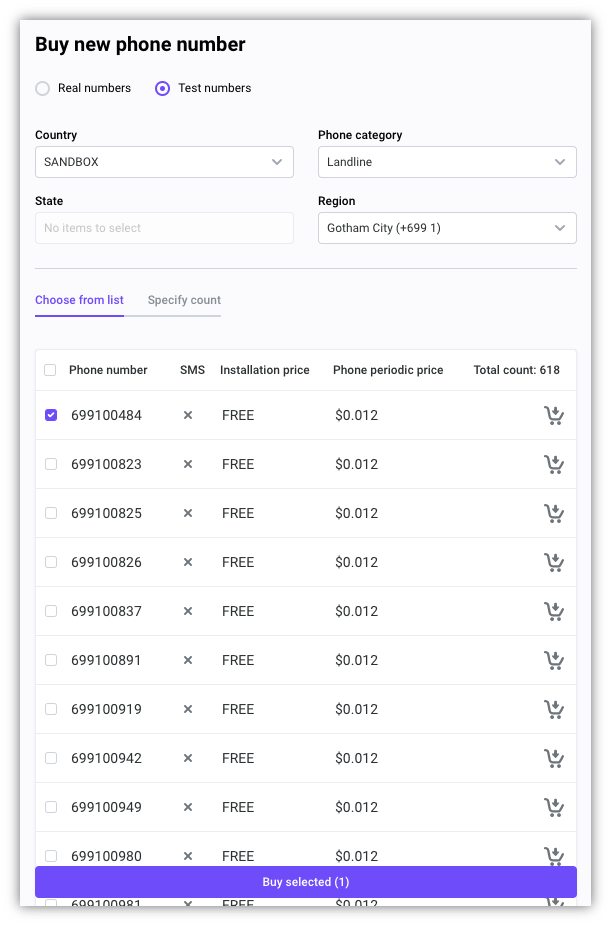

The agent needs a number at which it can be reached. To get one, go to the Voximplant control panel, open the Numbers -> My Phone Numbers section and click Buy new phone number in the upper right corner. A virtual number is enough for our purposes: turn on the Test numbers switch, check one number in the list, click Buy selected at the bottom, then Buy in the window that opens.

You will immediately see this number in the list of your numbers. Copy it and return to the IBM Cloud, to the Voice Agent with Watson resource.

Agent Creation

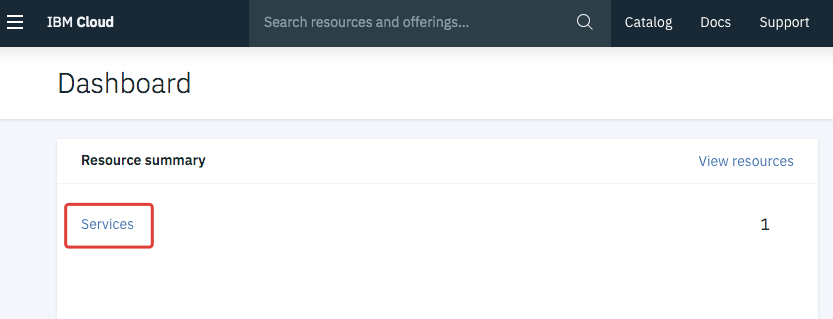

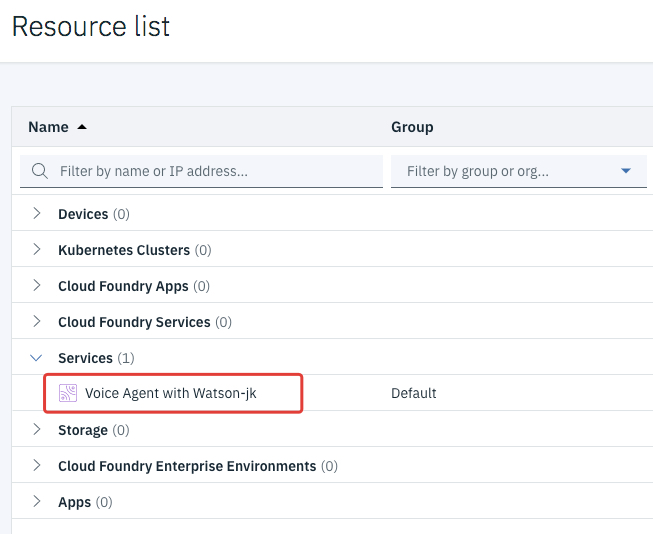

Tip. If you have closed the tab with the resource, here is how to reopen it quickly from the main page. On the cloud.ibm.com dashboard, click the word Services; a list of your account's current resources will open.

The Services group will already be expanded, and the Voice Agent will be visible in it. Click it and you are back in the resource you need.

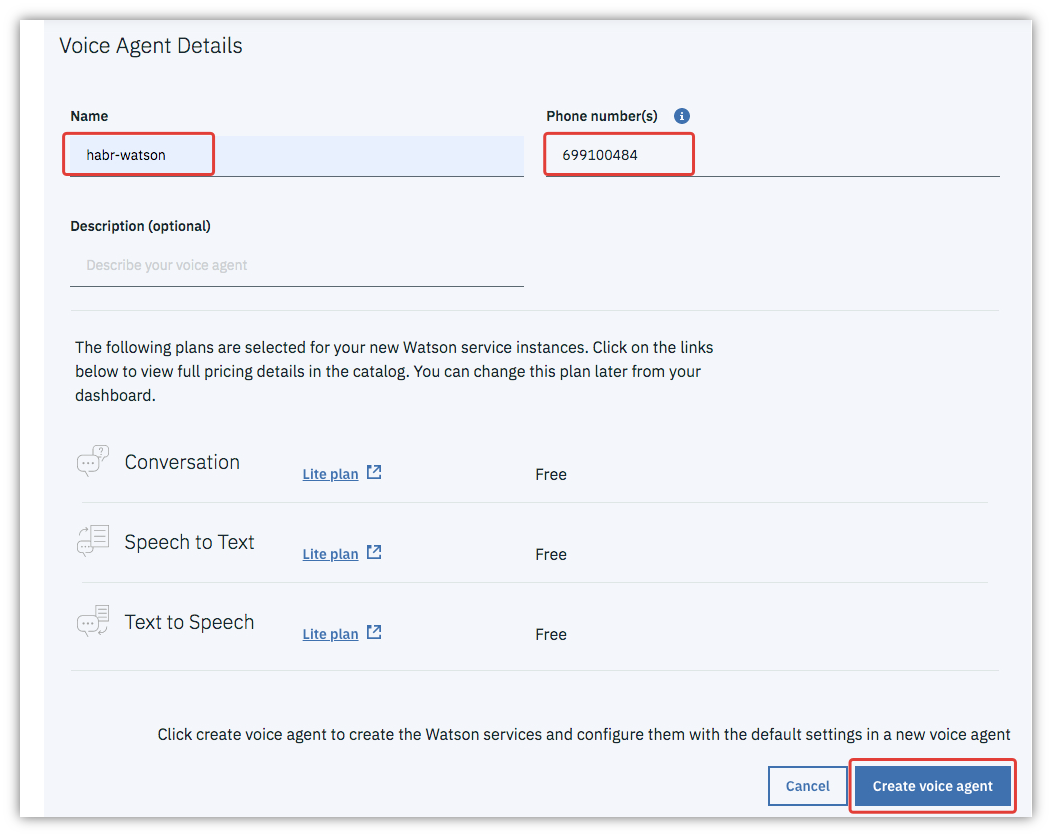

In the left menu, select Manage, then click the Create a voice agent button on the right side of the screen. Enter a name for the agent, paste the purchased virtual number, scroll down and click Create voice agent.

IBM Cloud will think for a moment and then report that the agent and 3 embedded services were created successfully. Well, now we need to teach the agent how to ...

Talk to meatbags

In the upper left corner, click the hamburger icon and select Resource list. In the list of resources, select VoiceAgent-WatsonAssistant (at the bottom of the list), then click Launch tool on its dashboard. The Watson Assistant control panel will open. Select the Skills tab at the top. You will see that a VoiceGatewayConversation skill already exists - it is a set of phrases and a dialog flowchart that the wizard added when the agent was created. You can use this skill, but for the sake of interest we suggest creating your own.

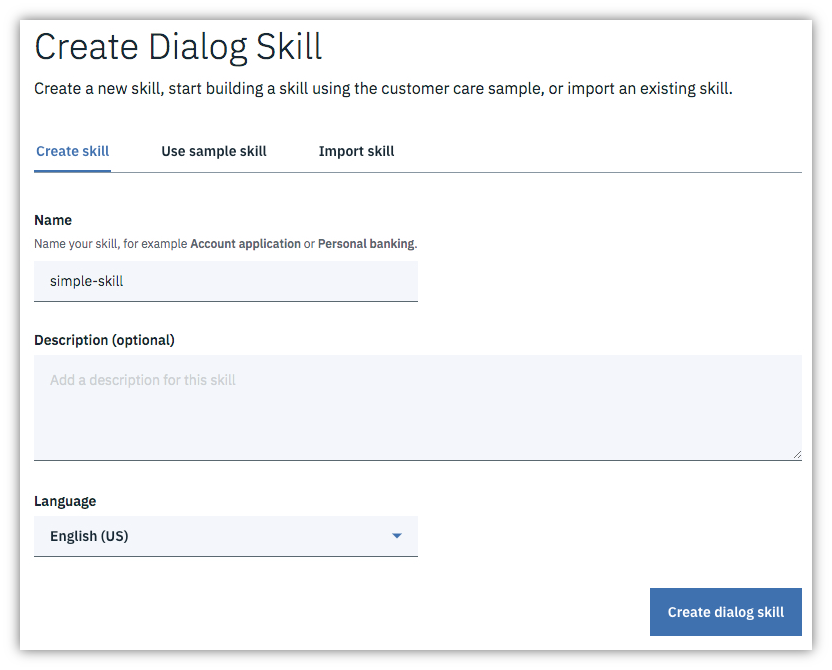

To do this, click Create skill on the Skills tab. Name it simple-skill, leave the language as English (US) and click Create dialog skill.

Inside the skill you will need three tabs:

- Intents - the intentions of the client. In essence, these are phrases and their variations;

- Entities - keywords for recognition and their synonyms;

- Dialog - a dialog flowchart.

On the Intents tab, add the intent #whatcanido and give it a few example phrases (“What can I do?”, “Show me the options”, etc.).

On the Entities tab, add:

- continue with the synonyms "go on", "proceed";

- options with the synonyms "options", "option", "choices";

- stop with the synonyms "stopped", "quit".
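
To make the idea of entities and synonyms more tangible, here is a minimal sketch in plain JavaScript. It is not Watson's API and says nothing about how Watson matches text internally: the `ENTITIES` table simply mirrors the list above, and `detectEntities` is a hypothetical helper that does a naive whole-word, case-insensitive lookup.

```javascript
// Entities from the tutorial: each entity name maps to its value plus synonyms.
const ENTITIES = {
  continue: ["continue", "go on", "proceed"],
  options: ["options", "option", "choices"],
  stop: ["stop", "stopped", "quit"],
};

// Hypothetical helper (an assumption, not part of Watson): returns the names
// of all entities whose value or synonym occurs in the phrase as whole words.
function detectEntities(phrase) {
  // Lowercase, replace punctuation with spaces, collapse whitespace,
  // and pad with spaces so that " term " only matches whole words.
  const cleaned = phrase
    .toLowerCase()
    .replace(/[^a-z\s]/g, " ")
    .replace(/\s+/g, " ")
    .trim();
  const padded = ` ${cleaned} `;
  return Object.keys(ENTITIES).filter((name) =>
    ENTITIES[name].some((term) => padded.includes(` ${term} `))
  );
}
```

For example, `detectEntities("Please go on")` finds the continue entity through its synonym, while a word such as "unstoppable" matches nothing because only whole words count.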

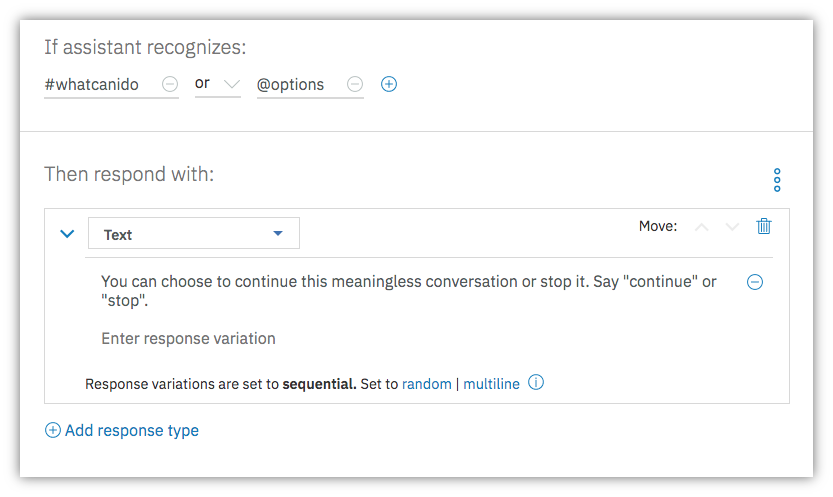

On the Dialog tab, click Create dialog; the Welcome and Anything else blocks will appear. Open Anything else and configure it as in the screenshot (text: "You can choose to continue this meaningless conversation or stop it. Say "continue" or "stop"."). Note that the recognition condition includes not only the intent but also a keyword, to be on the safe side.

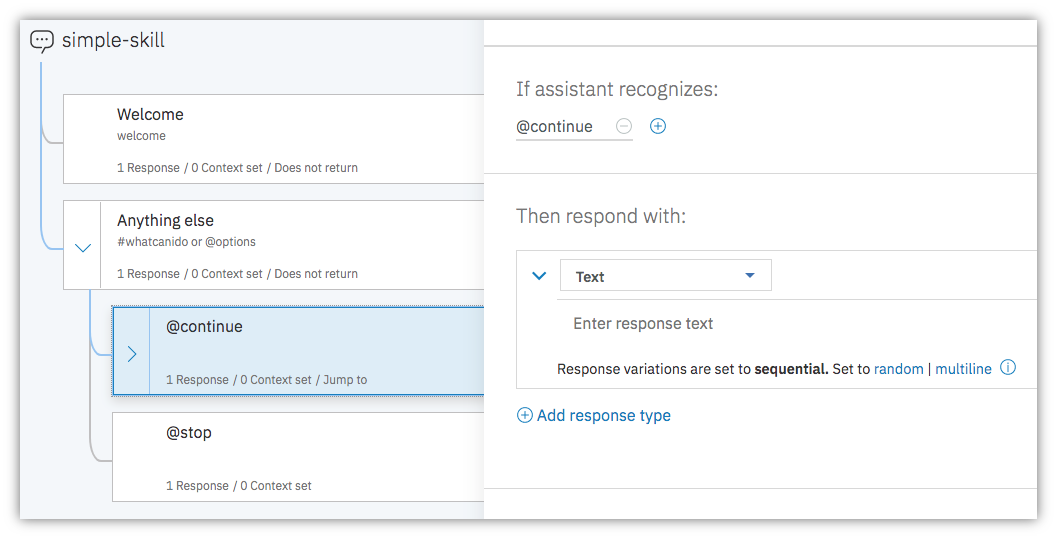

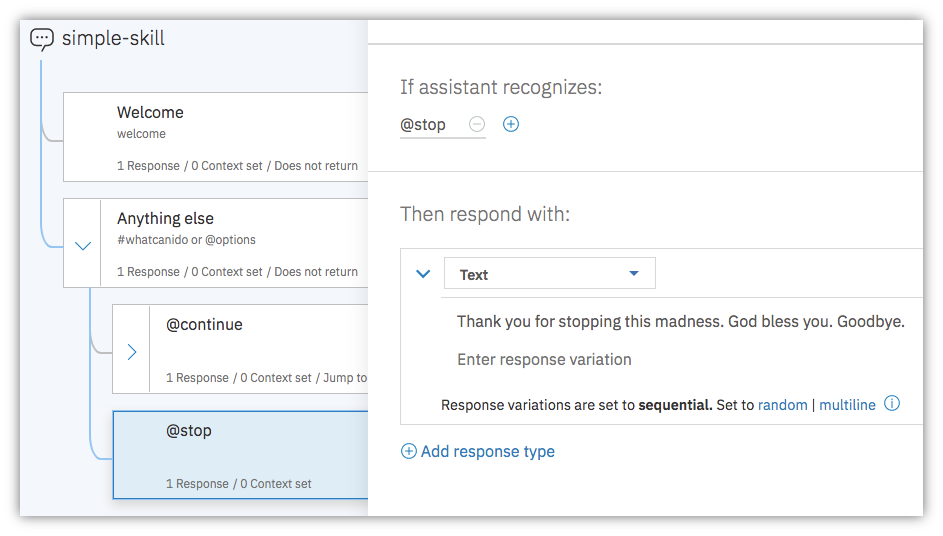

Then click the three dots on the Anything else block, select Add child node and create a continue block. In the same way, create another child block inside Anything else - stop. Block settings:

Thus, the bot will either repeat the text about the choice for as long as the person says “continue”, or end the dialog when the person says “stop”. A meaningless conversation indeed.
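
The branching described above can be modeled in a few lines of plain JavaScript. This is only an illustration of the logic, not Watson's dialog engine. The prompt text comes from the tutorial; the farewell text `FAREWELL` and the functions `reply` and `runConversation` are assumptions made for the sketch. For simplicity, any utterance without a stop word gets the prompt again.

```javascript
// Prompt text from the tutorial's Anything else block.
const PROMPT =
  'You can choose to continue this meaningless conversation or stop it. Say "continue" or "stop".';
// Assumed farewell text: the tutorial only says that the bot says goodbye.
const FAREWELL = "Goodbye!";

// Hypothetical model of one dialog turn: a stop word ends the dialog,
// anything else (including "continue") repeats the prompt.
function reply(utterance) {
  const text = utterance.toLowerCase();
  if (/\b(stop|stopped|quit)\b/.test(text)) {
    return { text: FAREWELL, ended: true };
  }
  return { text: PROMPT, ended: false };
}

// Feeds the caller's utterances to the bot one by one and collects the bot's
// replies; utterances after the farewell are ignored because the call is over.
function runConversation(utterances) {
  const transcript = [];
  for (const utterance of utterances) {
    const turn = reply(utterance);
    transcript.push(turn.text);
    if (turn.ended) break;
  }
  return transcript;
}
```

Calling `runConversation(["what can I do?", "continue", "stop", "continue"])` yields the prompt twice followed by the farewell, and the trailing "continue" is never processed.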

If you are too lazy to do all this, you can download our JSON file and import it as a skill. To do this, click Create skill on the Skills tab, switch to the Import skill tab, click Choose JSON file, select the downloaded file and finally click Import.

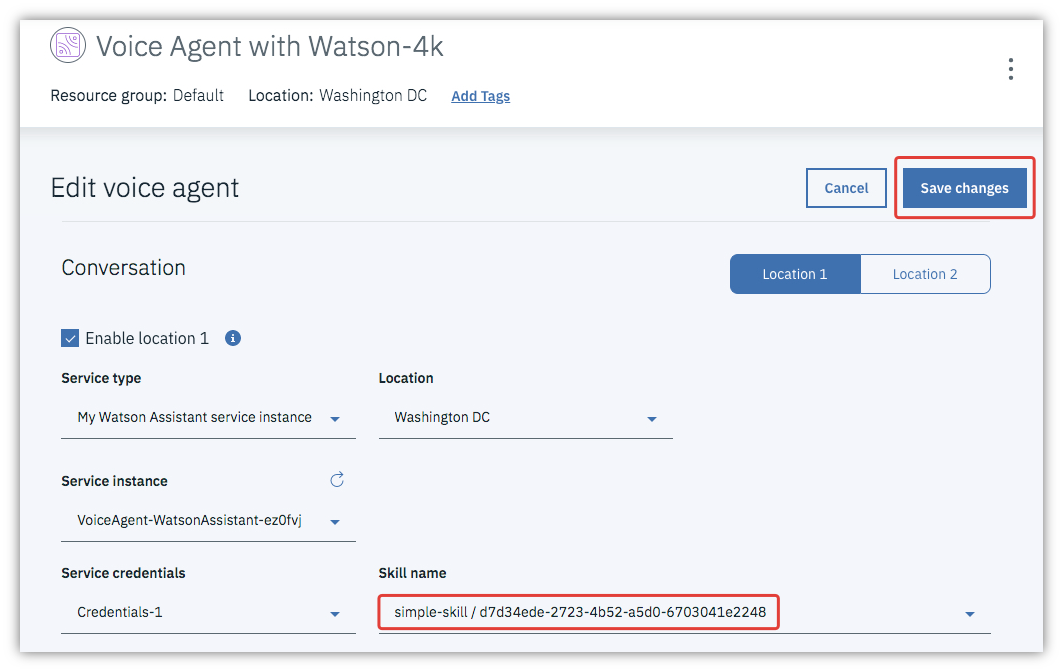

Last but not least at this stage: go back to cloud.ibm.com, open the Resource list, click Voice Agent with Watson and go to the Manage tab. Next to the habr-watson agent, click the three dots and select Edit agent. In the Conversation section, change the Skill name to the skill you just created:

Done, now the agent can talk to people! One last touch remains, namely ...

Voximplant app and script

In the Voximplant control panel, create an application named watson. All of the following steps are done inside this application. Go to the Scenarios tab and create a scenario named watson-scenario with the following code:

    require(Modules.ASR)

    VoxEngine.addEventListener(AppEvents.CallAlerting, (e) => {
      // Forward the incoming call to the Watson voice agent over SIP
      let call2 = VoxEngine.callSIP("sip:699100484@us-east.voiceagent.cloud.ibm.com")

      // Speech recognizer that listens to what the bot says
      const recognition = VoxEngine.createASR({
        lang: ASRLanguage.ENGLISH_US
      })

      let botSpeech = ""

      recognition.addEventListener(ASREvents.Result, e => {
        botSpeech += e.text
        // End the session once the bot has said goodbye
        botSpeech.includes("goodbye") ? VoxEngine.terminate() : Logger.write("There is no 'goodbye' yet.")
      })

      // Send the bot's audio to the recognizer once the outgoing call is connected
      call2.addEventListener(CallEvents.Connected, () => call2.sendMediaTo(recognition))

      // Connect the two call legs and handle the standard call events
      VoxEngine.easyProcess(e.call, call2)
    })

In the callSIP method call, do not forget to substitute the exact phone number that you bought earlier and specified in the IBM agent settings!
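
Two details of the scenario are easy to get wrong: the SIP address built from the purchased number and the check for the farewell word. The sketch below isolates both as plain JavaScript functions that can be tried outside VoxEngine. `buildAgentUri` and `saidGoodbye` are hypothetical helpers, not part of the Voximplant API. The host is the one used in the scenario above; we assume it is specific to the agent's region and may differ for yours. Unlike the scenario, the check here ignores letter case, since we do not know how the recognizer capitalizes its output.

```javascript
// Host taken from the scenario above; other regions may use a different one (assumption).
const VOICE_AGENT_HOST = "us-east.voiceagent.cloud.ibm.com";

// Hypothetical helper: builds the SIP URI for callSIP from the purchased number,
// keeping digits only so that "+", spaces and dashes do not end up in the address.
function buildAgentUri(phoneNumber, host = VOICE_AGENT_HOST) {
  const digits = String(phoneNumber).replace(/\D/g, "");
  if (digits.length === 0) {
    throw new Error("Phone number contains no digits");
  }
  return `sip:${digits}@${host}`;
}

// Hypothetical helper: true once the accumulated bot speech contains the
// farewell keyword, regardless of letter case.
function saidGoodbye(botSpeech, keyword = "goodbye") {
  return botSpeech.toLowerCase().includes(keyword.toLowerCase());
}
```

Inside the scenario, the result of `buildAgentUri` would be passed to `VoxEngine.callSIP`, and `saidGoodbye(botSpeech)` would replace the inline `includes` check.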

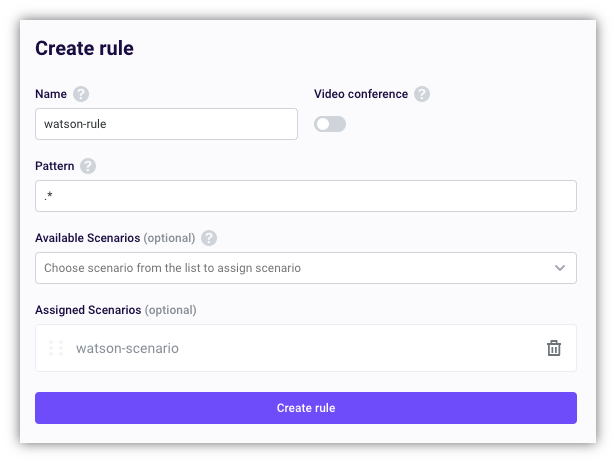

Then go to the Routing tab and create a rule named watson-rule. Assign the watson-scenario scenario to it:

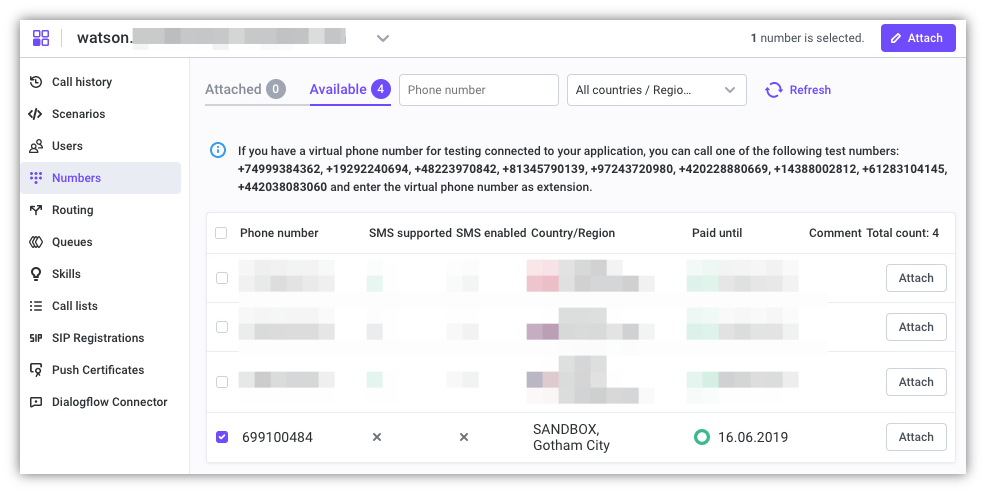

Finally, go to the Numbers tab, which has two sections: Attached (empty so far) and Available. Switch to Available, select the purchased number and click Attach.

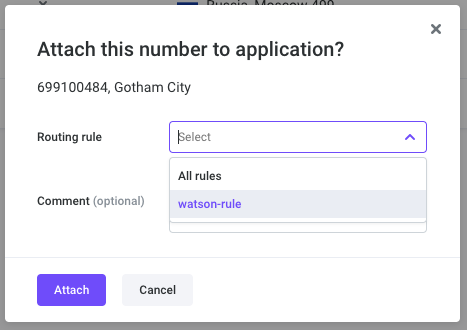

In the window that opens, specify the watson-rule, then Attach.

Now the number will appear in the Attached section. Here you will also see the numbers you can call: dial one of them, then enter the purchased virtual number in tone mode and start a highly intelligent conversation with the IBM bot.