A brief history of 3D texturing in games


In this post I will talk about the history of texturing in 3D video games. We have come a long way since real-time 3D first appeared on home consoles, but even today some of the practices used to create game textures have their roots in those early years.

To get started, let's cover the basics: the difference between real-time rendering and pre-rendered scenes. Most 3D games use real-time rendering, where the machine draws each image as the game runs. A pre-rendered scene, by contrast, requires a large amount of computing power to produce even a single frame.

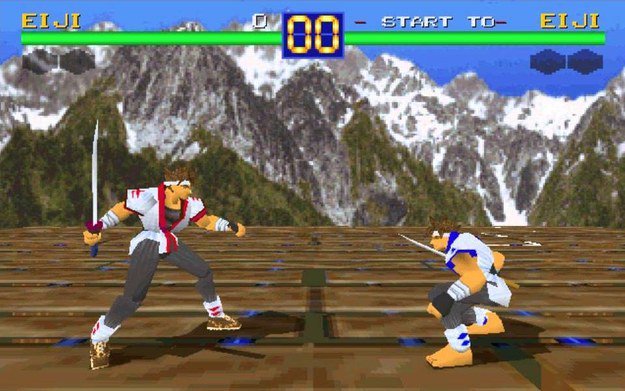

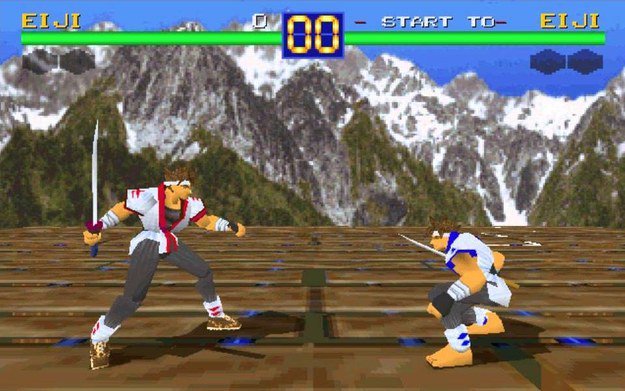

Because of this, the two approaches deliver different levels of quality. Games need real-time rendering for interactivity, while static elements such as cutscenes or fixed backgrounds can be pre-rendered. The difference in results was huge. Compare a pre-rendered background with a real-time character from a 1999 game.

Pre-rendering made it possible to create scenes that were very expensive to render, where a single frame could take hours or even days. For a still image or a film, this is quite normal. But games need to render 30 to 60 frames every second, so early 3D games had to make drastic simplifications.

On 16-bit consoles, Star Fox was one of the first examples of real-time 3D. The same consoles also had Donkey Kong Country, in which pre-rendered 3D graphics were converted into sprites (with greatly reduced color palettes). For a long time, nothing rendered in real time could look as good.

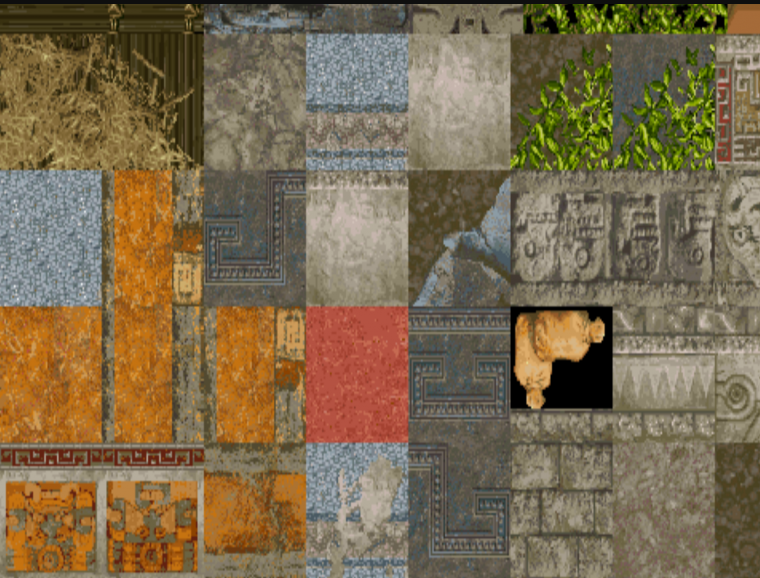

When we moved to true 3D consoles (such as the N64 and PS1), we finally saw what real-time rendering could not do. Light sources could not be used to produce shadows or lighting in the scene, materials did not respond to light, and there was no bump mapping, only low-resolution geometry and textures. How did artists cope with this?

For example, lighting information was either painted into the textures (shadows, highlights, depth), painted onto each vertex of a triangle, or both. Character shadows were usually simple textures that followed the character around. Casting correct shadows was impossible.

Simple shading on models was possible, but it usually lacked correct lighting information. Games such as Ocarina of Time and Crash Bandicoot relied on a lot of lighting information that was painted into textures and onto the vertices of the geometry. This made it possible to make areas lighter or darker, or to tint them with a particular color.
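The vertex-painting approach described above can be sketched roughly as follows. This is only an illustration, not how any particular console or game implemented it: the function names `interpolate_vertex_colors` and `shade_texel`, the sample triangle, and the 0.0 to 1.0 color range are all assumptions made for the example. Colors painted on the three vertices of a triangle are blended across its surface with barycentric weights, and the blended color then multiplies the color sampled from the texture.

```python
def interpolate_vertex_colors(colors, weights):
    """Blend three per-vertex RGB colors using barycentric weights."""
    return tuple(
        sum(w * c[channel] for w, c in zip(weights, colors))
        for channel in range(3)
    )


def shade_texel(texel, vertex_color):
    """Modulate (multiply) a texture color by the interpolated vertex color."""
    return tuple(t * v for t, v in zip(texel, vertex_color))


# Hypothetical triangle: the third vertex is painted dark to fake a shadow.
vertex_colors = [(1.0, 1.0, 1.0), (1.0, 1.0, 1.0), (0.2, 0.2, 0.2)]
texel = (0.8, 0.6, 0.4)  # color sampled from the texture

# A point sitting exactly on the first (bright) vertex.
lit = shade_texel(texel, interpolate_vertex_colors(vertex_colors, (1.0, 0.0, 0.0)))
# A point sitting exactly on the third (dark) vertex.
shadowed = shade_texel(texel, interpolate_vertex_colors(vertex_colors, (0.0, 0.0, 1.0)))
```

Points between the vertices get a smooth gradient from light to dark, which is why a handful of painted vertices could suggest lighting without any light source in the scene.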

In those days, overcoming such limitations took a great deal of creative work. Painting or baking lighting information into textures is still used today to varying degrees, but as real-time rendering improves, the need for such techniques decreases.

The next generation of hardware therefore had many more problems to solve. The consoles of that generation (PS2, Xbox and GameCube) tried to address some of them. The first notable leap in quality came from higher texture resolution and better lighting.

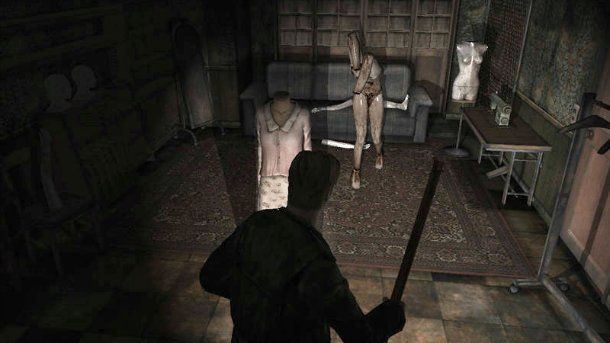

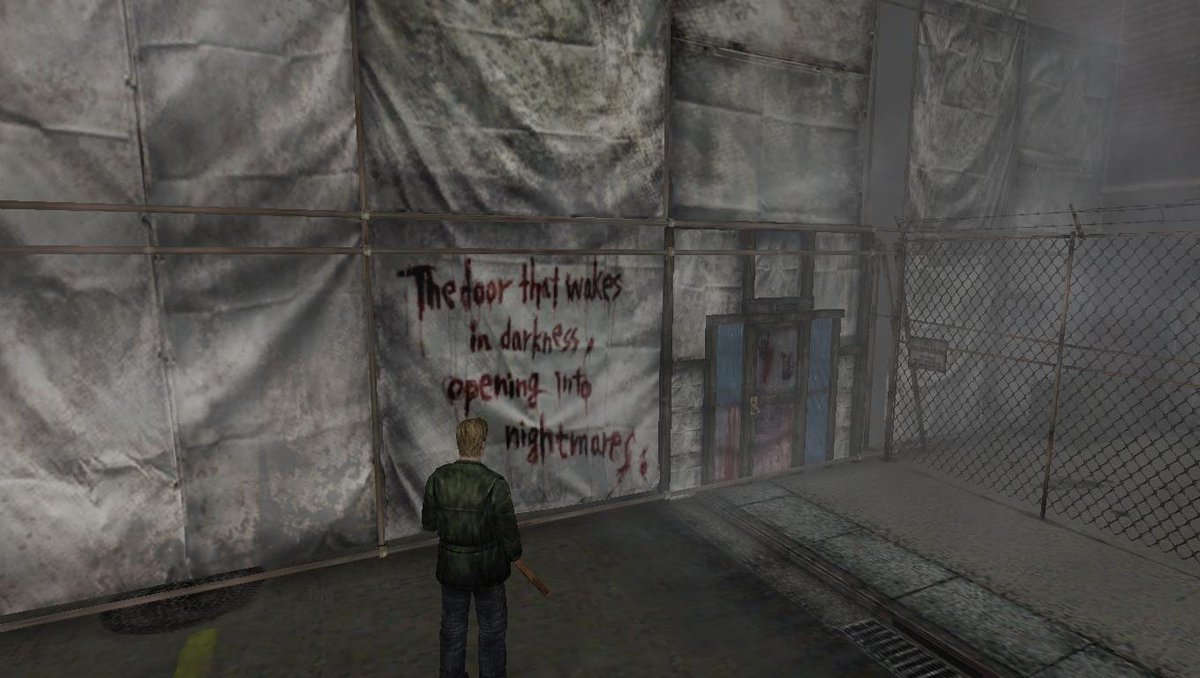

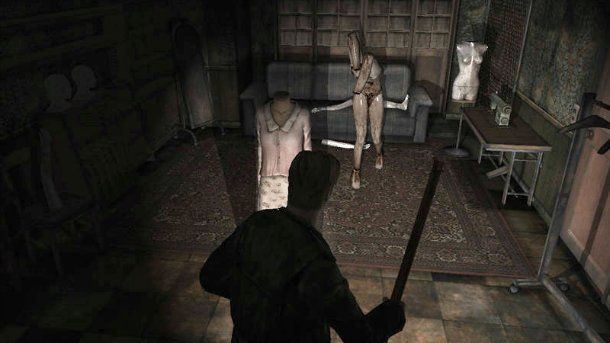

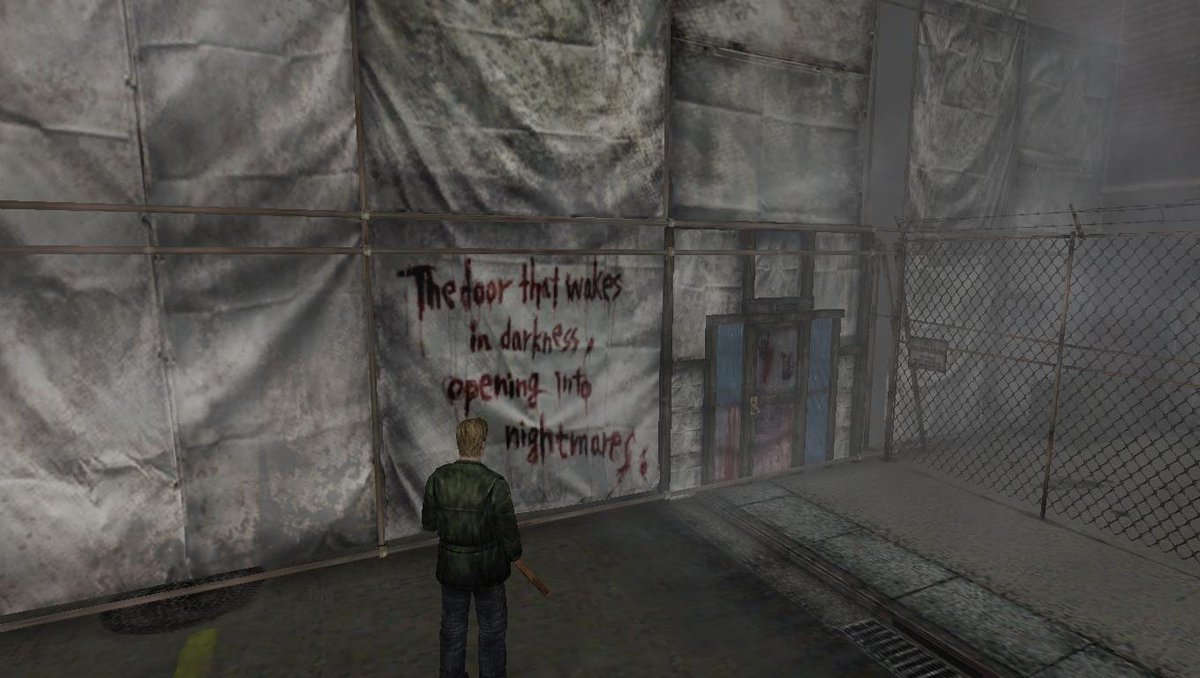

One important game in this regard was Silent Hill 2. Its most significant breakthrough, in 2001, was real-time shadow casting. This meant that some of the lighting information stored in textures could be dropped, although for the most part baked lighting was still heavily used in this generation.

The decisive factor for this and other games of that era was resolution. With more pixels available, textures could store much more fine detail. But so far that detail was only color and diffuse lighting information. Bump maps and specular maps were rarely used then, so materials could not be made to react correctly to light.

This was another reason baking information into textures was so popular. Pre-rendered scenes did not have this problem: in them, clothes really looked like fabric, and glass, hair and skin were convincing. Real-time rendering needed bump mapping, which did arrive, but only toward the end of this generation (and only on Xbox).

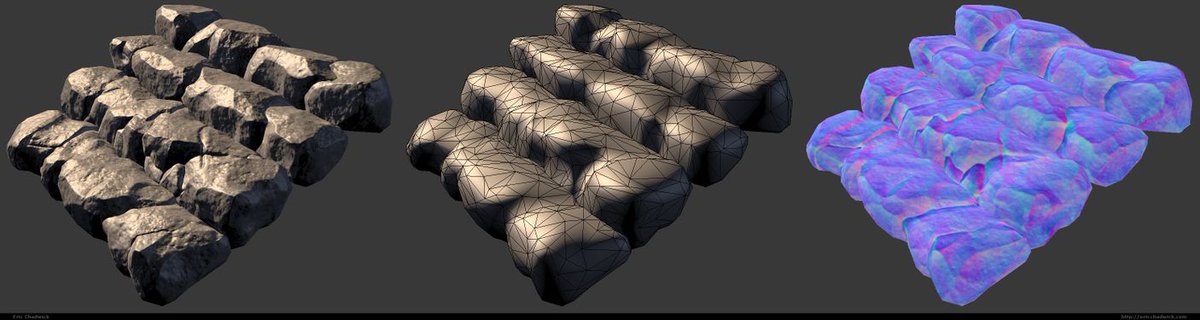

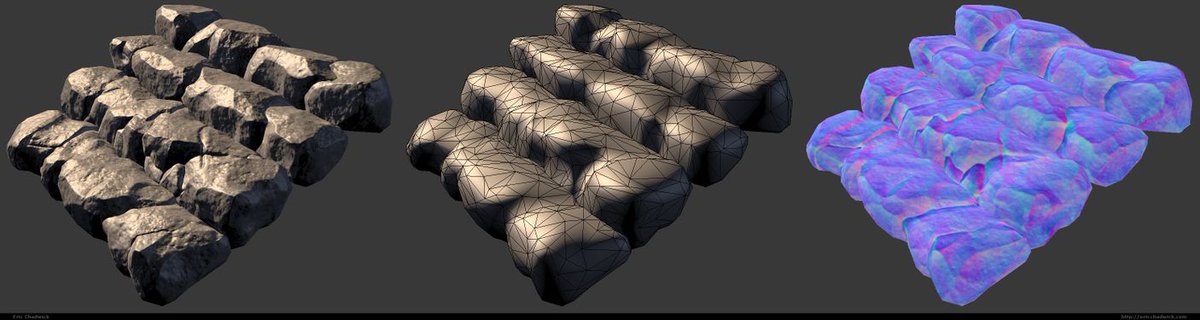

Specular and normal maps appeared in games such as Halo 2 and Doom 3. Specular maps allowed surfaces to respond to lighting much more naturally: metal, for example, could really shine. Normal maps made it possible to store far more detail than could be modeled in objects with such low polygon counts.

If you work with 3D, you know what a normal map is. It is a type of bump mapping that allows a surface to respond to lighting in much more detail than the model's geometry contains. It is the most important texture type, used in almost every game released after this generation.
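To make the idea concrete, here is a minimal sketch of how a normal-map texel can change the lighting of a flat surface. It assumes the common convention of storing a tangent-space normal in 8-bit RGB (each channel remapped from 0..255 to -1..1) and uses simple Lambert diffuse shading. The function names `decode_normal` and `lambert` and the sample texel values are invented for this illustration and are not taken from any particular engine.

```python
import math


def decode_normal(rgb):
    """Map an 8-bit RGB texel to a unit-length tangent-space normal."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in rgb)
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


def lambert(normal, light_dir):
    """Diffuse intensity: clamped dot product of normal and light direction."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


light_dir = (0.0, 0.0, 1.0)  # light pointing straight at the surface

# The typical "flat" bluish texel leaves the surface normal nearly unchanged.
flat = lambert(decode_normal((128, 128, 255)), light_dir)
# A texel leaning along +X tilts the normal away from the light.
tilted = lambert(decode_normal((200, 128, 200)), light_dir)
```

Both texels belong to the same flat polygon, yet `tilted` receives less light than `flat`. That per-pixel variation is what makes a low-poly surface appear to have bumps and grooves.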

With the advent of normal maps, the way artists created textures changed. Producing normal maps means spending much more time on the model. It became the norm to use sculpting tools such as ZBrush to build high-poly models, which are then baked into textures that can be used on low-poly objects.

Before this technology, most textures were either hand-painted or assembled from photos in Photoshop. In the era of the Xbox 360 and PS3, this method became a thing of the past for many games, because the quality of models improved along with the increase in resolution.

In addition, thanks to pre-calculated shading, the behavior of materials improved greatly. For many artists, this was a turning point: materials became much more complex than before. This 2005 demo surpassed everything that came before it. At that time, the Xbox 360 had not even been released.

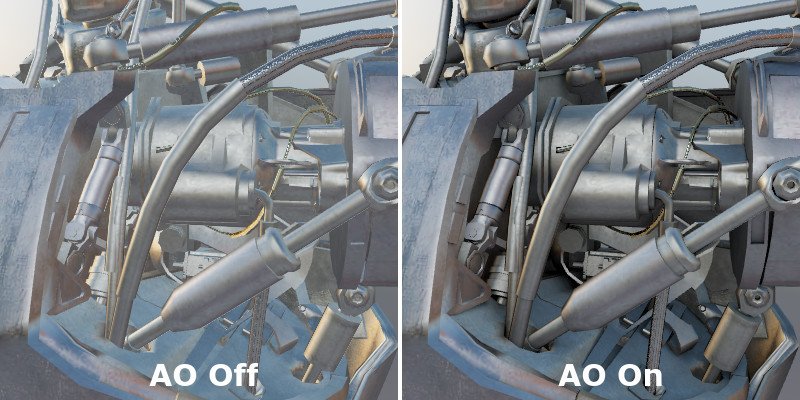

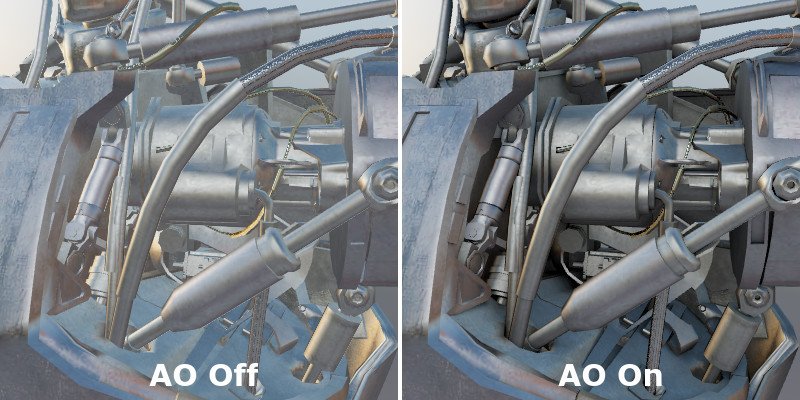

A new approach to scene lighting also appeared: ambient occlusion (AO). Real-time rendering once again had to catch up with pre-rendering. AO was too expensive to compute in real time, so artists simply started baking it into textures! AO approximates the indirect shadowing of light in details that are too small to be lit individually.

Even today, real-time AO is not fully achievable, but we are getting close! Thanks to techniques such as SSAO and DFAO, the situation is much better than it was 10 years ago. Baked AO maps are still in use, but they will probably be dropped once renderers get better.
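Writing ambient occlusion into a texture can be as simple as multiplying each texel of the color texture by a precomputed occlusion value. The sketch below assumes that both textures are small nested lists of floats and that an AO value of 1.0 means fully open while 0.0 means fully occluded. The function name `bake_ao_into_diffuse` and the sample data are hypothetical, and how the AO values themselves are computed (normally by an offline renderer) is outside the scope of this example.

```python
def bake_ao_into_diffuse(diffuse, ao):
    """Multiply each diffuse texel by its occlusion value (1.0 = fully open)."""
    return [
        [tuple(channel * a for channel in texel) for texel, a in zip(row, ao_row)]
        for row, ao_row in zip(diffuse, ao)
    ]


# Hypothetical 2x2 grey texture and a matching AO map with one occluded corner.
diffuse = [
    [(0.5, 0.5, 0.5), (0.5, 0.5, 0.5)],
    [(0.5, 0.5, 0.5), (0.5, 0.5, 0.5)],
]
ao = [
    [1.0, 1.0],
    [1.0, 0.5],
]

baked = bake_ao_into_diffuse(diffuse, ao)
```

The result is an ordinary color texture, so it costs nothing extra at runtime. The trade-off is that the darkening is fixed and cannot react to moving objects or changing lights.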

To summarize: in the era of the PS3 and Xbox 360, we saw an even greater jump in resolution over the previous generation, along with new texture types for shaded surfaces. And, of course, lighting quality improved! You could get real-time shadows for the whole scene or bake the lighting for extra detail.

So everything was just fine? Not quite; there were still flaws: low-resolution models and textures, plus the high cost of the new shaders. And do not forget the resolution games were output at: only 720p! (Font rendering on CRT televisions also became a problem.)

Specular maps remained another problem. At that time, each object had only a single map for its shininess. This was a big limitation, and materials looked unrealistic. Some developers therefore began to split up their specular maps. One of the first examples was BioShock Infinite.

Specular maps were now split by material type (wood, gold, concrete and so on) and by wear (cracks, scuffs and so on). This coincided with the arrival of a new type of shading model: physically based rendering (PBR).

This brings us to the current generation. PBR has become the standard for many games. The technique was popularized by Pixar, which standardized it as a way to create plausible materials in computer graphics. And it can be applied in real time!

In addition, the industry has improved a pipeline that appeared in the previous generation: screen-space post-processing effects. Aspects such as tone mapping and color grading have improved in the current generation. In the previous generation, achieving the same result would have meant spending a long time adjusting the textures.
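As a rough illustration of such a screen-space step, here is a sketch of tone mapping using the well-known Reinhard operator followed by gamma encoding. This is one textbook choice, not necessarily what any particular game or engine uses. The function names `reinhard` and `tone_map`, the `exposure` parameter, and the gamma value of 2.2 are assumptions made for the example.

```python
def reinhard(value):
    """Compress an unbounded HDR value into the range [0, 1)."""
    return value / (1.0 + value)


def tone_map(pixel, exposure=1.0, gamma=2.2):
    """Apply exposure, Reinhard compression and gamma encoding per channel."""
    return tuple(reinhard(c * exposure) ** (1.0 / gamma) for c in pixel)


# Hypothetical HDR pixel: the red channel is four times brighter than "white".
hdr_pixel = (4.0, 1.0, 0.0)
ldr_pixel = tone_map(hdr_pixel)
```

Because the adjustment runs on the final image, changing `exposure` or the curve alters the look of the whole frame at once, with no need to repaint individual textures.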

If you are interested in learning more about old games and their rendering techniques, I highly recommend the DF Retro series by Digital Foundry. The author has done a fantastic job of analyzing individual games, such as Silent Hill 2.

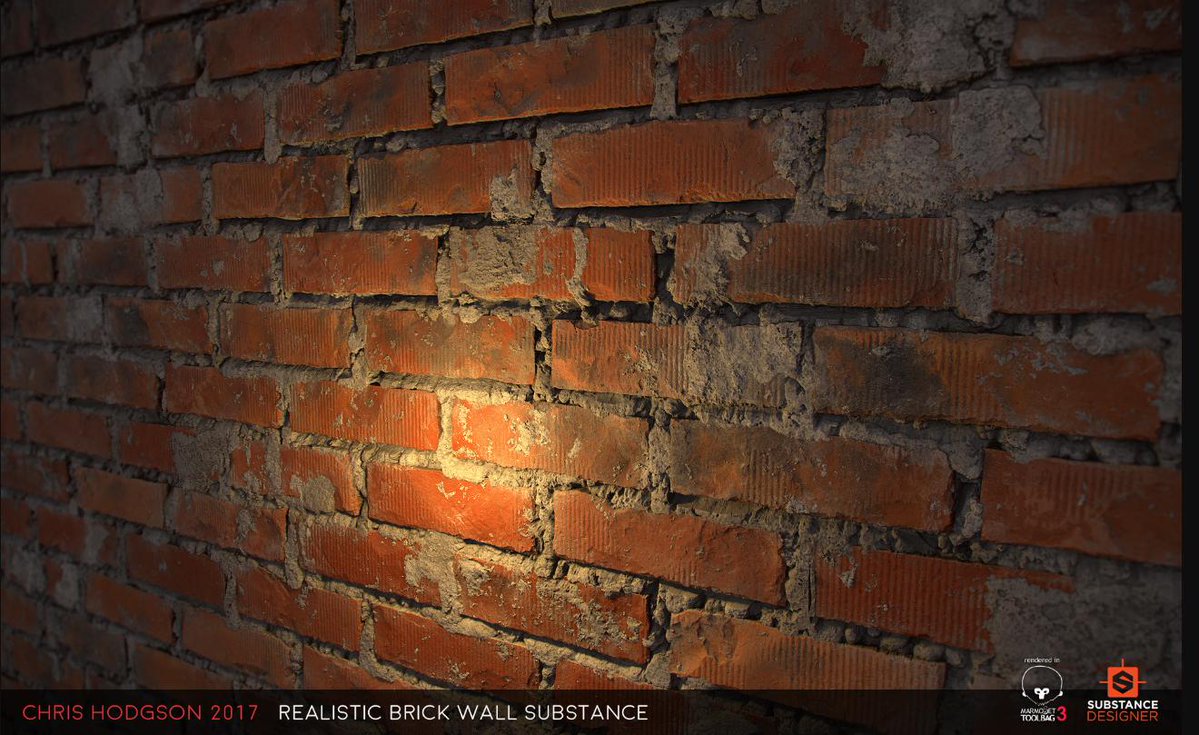

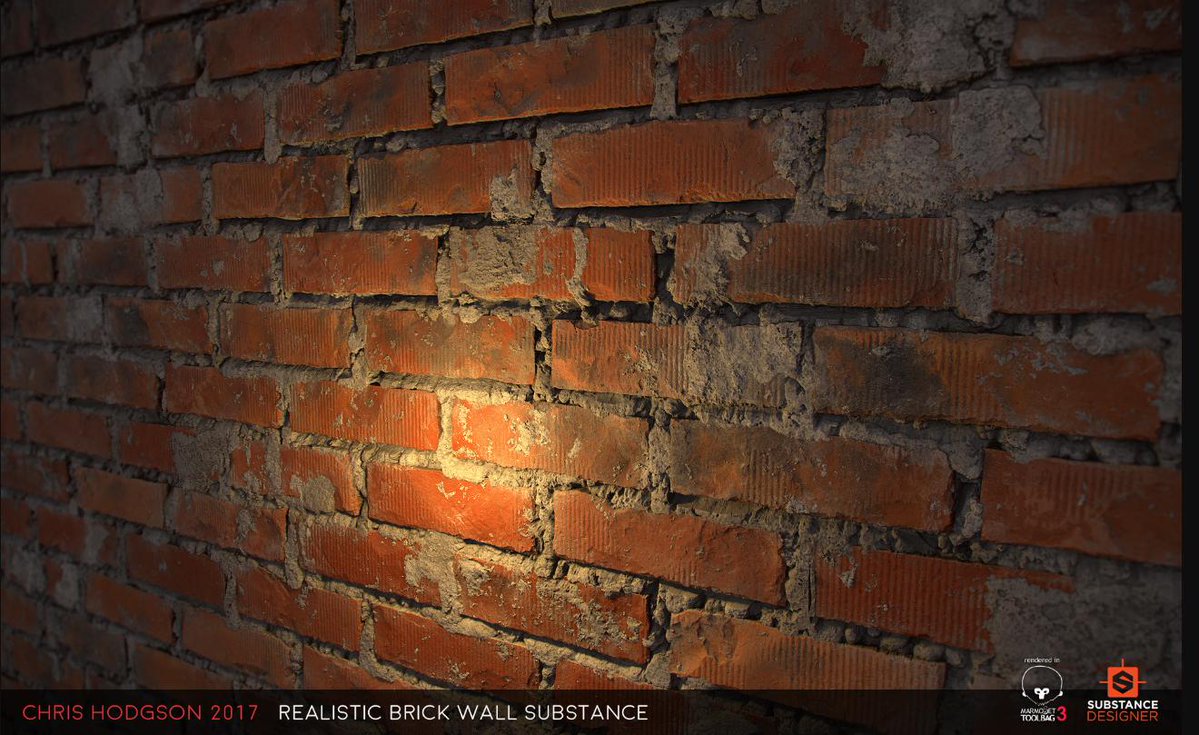

Just for comparison, I'll show you what the first 3D games looked like and how much work it takes today to create a single texture for a game.

Let us also briefly mention some techniques developed in earlier eras that are still in use today! There are many “stylized” textures with lighting information painted into them. Blizzard makes the most active use of them.

This company combines technical limitations with a thoughtful approach to graphics and achieves amazing results. It may not use the same stack of textures as other AAA games, but the results certainly cannot be called bad.

And sometimes combining PBR with hand-painted or simplified textures can take you very far. Having a modern, feature-rich engine helps with this.
