Keybase and true TOFU

In end-to-end encrypted (E2E) messengers, users are responsible for their own keys. When they lose them, they are forced to reset their accounts.

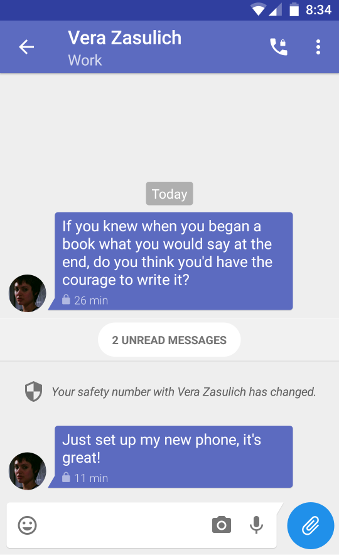

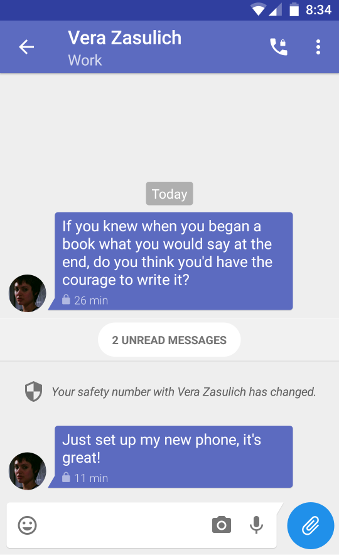

Resetting an account is dangerous. You wipe your public keys and become a cryptographic stranger in every conversation. You need to re-establish your identity, and in almost all cases that means meeting each of your contacts in person and comparing "security numbers". How often do you actually go through that check, which is your only protection against MitM?

Even if you take security numbers seriously, you see many of your chat partners only once a year at a conference, so you're stuck.

But this happens infrequently, right?

How often do resets happen? In most E2E chat applications, all the time.

In these messengers, you drop the cryptography and simply start trusting the server: (1) whenever you switch to a new phone; (2) whenever someone you talk to switches to a new phone; (3) whenever you factory-reset your phone; (4) whenever someone you talk to factory-resets theirs; (5) whenever you uninstall and reinstall the application; or (6) whenever someone you talk to uninstalls and reinstalls it. If you have dozens of contacts, a reset will occur every few days.

Resets happen so regularly that these applications act as if they were not a problem:

It looks like we have a security upgrade! (But not quite)

Is it really TOFU?

In cryptography, the term TOFU ("trust on first use") describes taking a gamble the first time two parties talk. Instead of meeting in person, you trust an intermediary to vouch for each side. Then, after that initial introduction, each side carefully tracks the other's keys to make sure nothing has changed. If a key changes, each side sounds the alarm.

In SSH, if a remote host's key changes in such a situation, it does not "just work"; it becomes downright belligerent:

    @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
    @ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @
    @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
    IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!
    Someone could be eavesdropping on you right now (man-in-the-middle attack)!
    It is also possible that a host key has just been changed.
    The fingerprint for the RSA key sent by the remote host is
    00:11:22:33:44:55:66:77:88:99:aa:bb:cc:dd:ee:ff
    Please contact your system administrator.
    Add correct host key in /Users/rmueller/.ssh/known_hosts to get rid of this message.
    Offending RSA key in /Users/rmueller/.ssh/known_hosts:12
    RSA host key for 8.8.8.8 has changed and you have requested strict checking.
    Host key verification failed.

Here is the correct behavior. And remember: this is not TOFU if it allows you to work further with a small warning. You should see a giant skull with crossbones.
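The pin-then-refuse behavior described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `TofuStore` class, its method names, and the use of a SHA-256 fingerprint are all hypothetical and are not taken from SSH or from Keybase.

```python
import hashlib


class KeyChangedError(Exception):
    """Raised when a peer presents a key that differs from the pinned one."""


class TofuStore:
    """Hypothetical store that pins the first key seen for each peer."""

    def __init__(self):
        self._pins = {}  # peer name -> hex fingerprint of the pinned key

    @staticmethod
    def fingerprint(public_key: bytes) -> str:
        return hashlib.sha256(public_key).hexdigest()

    def check(self, peer: str, public_key: bytes) -> str:
        fp = self.fingerprint(public_key)
        pinned = self._pins.get(peer)
        if pinned is None:
            # First use: take the gamble once and remember the key.
            self._pins[peer] = fp
            return "pinned"
        if pinned != fp:
            # Hard failure, not a dismissible banner.
            raise KeyChangedError(f"key for {peer} changed")
        return "ok"


store = TofuStore()
first = store.check("bob", b"bob-key-1")   # first contact: key is pinned
second = store.check("bob", b"bob-key-1")  # same key again: accepted
try:
    store.check("bob", b"eve-key")         # different key: refused
    third = "accepted"
except KeyChangedError:
    third = "refused"
```

The point of raising instead of returning a warning value is that the caller cannot continue by accident; carrying on requires a deliberate decision to replace the pin.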

Of course, these messengers will claim that everything is fine because the user is warned. If users want to, they can check the security numbers. Here is why we disagree:

- Nobody verifies, because resets happen too often.

- Verifying sucks.

- Even a cursory survey of our security-conscious friends showed that no one bothers with this check.

- So it all comes down to trusting the server and trusting SMS (ugh!), again and again and again.

- Finally, these applications do not have to work this way, especially when changing devices. The common, normal case can be handled smoothly and safely, and the rarer the situation, the scarier it should look. We will show Keybase's solution in a minute.

Stop calling it TOFU

There is a very effective attack. Suppose Eve wants to break into Alice and Bob's existing conversation and stand between them. Alice and Bob have been in contact for many years, having long since passed TOFU.

Eve just makes Alice think that Bob bought a new phone:

Bob (Eve): Hey, hey!

Alice: Yo, Bob! It looks like you have new security numbers.

Bob (Eve): Yes, I bought the iPhone XS, a good phone, very pleased with it. Let's compare security numbers at RWC 2020. Hey, do you have Caroline's current address? I want to surprise her while I'm in San Francisco.

Alice: Can't compare, Android 4 life! Yes, 555 Cozy Street.

So most encrypted messengers hardly deserve to be called TOFU. This is more like TADA: trust after device additions. It is a real problem, not a hypothetical one, because it opens the door to malicious infiltration of a pre-existing conversation. With real TOFU, by the time someone takes an interest in your conversation, they can no longer infiltrate it. With TADA, they can.

In group chats, the situation is even worse. The more people in the chat, the more often someone's account will be reset. In a company of just 20 people, this will happen roughly every two weeks, by our estimate. And every person in the company has to meet that person. In person. Otherwise, the entire chat is compromised by a single mole or hacker.
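To see how the "every two weeks" and "every few days" figures relate, here is a back-of-the-envelope calculation. It assumes resets are independent and evenly spread over time, and the figure of 280 days between resets per person is not a Keybase number: it is simply what the article's own estimate (20 people, one reset every two weeks) implies.

```python
def days_between_resets(people: int, days_per_person_reset: float) -> float:
    """Expected days between resets somewhere in a group of `people`.

    Assumes each person resets independently, on average once every
    `days_per_person_reset` days.
    """
    return days_per_person_reset / people


# Assumption: 280 days per person, back-computed from 20 people * 14 days.
DAYS_PER_PERSON = 280

team_of_20 = days_between_resets(20, DAYS_PER_PERSON)   # about two weeks
contacts_50 = days_between_resets(50, DAYS_PER_PERSON)  # a few days
```

Under the same assumption, someone with 50 contacts would see a reset roughly every five to six days, which is consistent with the earlier "every few days" claim.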

Solution

Is there a good solution, one that does not involve trusting servers with private keys? We think there is: real multi-device support. This means you manage a chain of devices that together represent your identity. When you get a new device (phone, laptop, desktop computer, iPad, etc.), it generates its own key pair, and one of your existing devices signs it. If you lose a device, you "remove" it from one of the remaining ones. Technically, such a removal is a revocation, and it also triggers a key rotation that happens automatically.
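The device chain described above can be modeled as a list of "add" and "revoke" links that a client replays to find the currently trusted devices. The sketch below is a simplified model, not Keybase's actual sigchain format: the `Link` type and `active_devices` function are hypothetical, and real signature verification is replaced by simply recording which device signed each link.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    kind: str    # "add" or "revoke"
    device: str  # device being added or revoked
    signer: str  # device whose key signed this link (signature check omitted)


def active_devices(chain):
    """Replay the chain and return the set of devices currently trusted."""
    active = set()
    for i, link in enumerate(chain):
        if i == 0:
            # The very first device has nobody to vouch for it: it signs itself.
            if link.kind != "add" or link.signer != link.device:
                raise ValueError("chain must start with a self-signed device")
        elif link.signer not in active:
            raise ValueError(f"link {i} signed by untrusted device {link.signer!r}")
        if link.kind == "add":
            active.add(link.device)
        elif link.kind == "revoke":
            active.discard(link.device)
        else:
            raise ValueError(f"unknown link kind {link.kind!r}")
    return active


chain = [
    Link("add", "phone", "phone"),      # first device
    Link("add", "laptop", "phone"),     # phone vouches for laptop
    Link("revoke", "phone", "laptop"),  # phone is lost; laptop revokes it
]
trusted = active_devices(chain)
```

A contact replaying this chain ends up trusting only the laptop, without meeting anyone in person, because every step was vouched for by a device that was already trusted.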

As a result, you do not need to trust the server or meet in person when a contact or colleague gets a new device. Likewise, you do not need to trust the server or meet in person when they remove a device, as long as it was not their last. The only time you need to see a warning is when someone really has lost access to all of their devices. And in that case you will see a serious warning, as you should:

Intentionally as ugly as possible.

As a result, far fewer accounts are reset and reinstalled. Historically, on Keybase, the total number of device additions and revocations is ten times the number of account resets (you do not have to take our word for it; this is publicly visible in our Merkle tree). Unlike other messengers, we can show a truly terrifying warning when you are talking to someone who has recently reset their keys.

Device management is a complex engineering problem that we have reworked several times. An existing device signs the new device's public keys and encrypts all the important secret data for the new device's public key. This operation has to be fast (within a second), since the user's attention span is at stake. For that reason, Keybase uses a key hierarchy, so that by transferring 32 bytes of secret data from an old device, the new device can see all long-lived cryptographic data (see the FAQ below for more details). This may seem a little surprising, but it is exactly the point of cryptography. It does not solve your secret-management problems; it just makes them more scalable.

The full picture of security

Now we can formulate four basic security properties for the Keybase application:

- long-lived secret keys never leave the devices on which they were created

- full multi-device support minimizes account resets

- key revocation cannot be maliciously delayed or rolled back

- forward secrecy via time-based ephemeral messages

The first two should be clear. The third becomes important in a design where device revocation is expected and considered normal. The system must have checks to ensure that malicious servers cannot delay device revocations, which we wrote about earlier.

See our article on ephemeral messaging for more information on the fourth security property.

That is a lot of new cryptography. Is it all implemented correctly?

Nothing like Keybase had been implemented before, or even described in academic papers. We had to invent some of the cryptographic protocols ourselves. Fortunately, there are plenty of off-the-shelf, standardized, widely used cryptographic algorithms for every situation. All of our client code is open. In theory, anyone can find design or implementation errors. But we wanted to shine a light on the internals, so we hired a top security audit firm to perform a full analysis.

Today we present the NCC Group's report, and we are extremely encouraged by the results. Keybase spent over $100,000 on the audit, and the NCC Group staffed it with top-tier security and cryptography experts. They found two important bugs in our implementation, and we quickly fixed them. These bugs could only be exploited if our servers acted maliciously. We can assure you that they will not act that way, but you have no reason to believe us. That is the whole point!

We believe the NCC team did an excellent job. We respect the time they took to fully understand our architecture and implementation. They found subtle bugs that had escaped our developers' attention, even though we had recently reviewed that part of the codebase several times. We encourage you to read the report, or to jump to our FAQ.

FAQ

How dare you attack an XYZ product?

We have already removed references to specific products from the article.

What else?

We are proud that Keybase does not require phone numbers and can cryptographically verify Twitter, HackerNews, Reddit and GitHub identities, if that is how you know someone.

And ... very soon ... there will be support for Mastodon.

What about phone number redirection attacks?

Many applications are susceptible to phone number redirection attacks. Eve walks up to a kiosk in a shopping center and convinces Bob's mobile carrier to redirect Bob's phone number to her device. Or she convinces a representative over the phone. Now Eve can authenticate to the messenger's servers, claiming to be Bob. The result looks like our Alice, Bob and Eve example above, except that Eve does not need to compromise any servers. Some applications offer "registration locks" to protect against this attack, but because they are annoying, they are off by default.

I heard Keybase sends some private keys to the server?

In the early days (2014 and early 2015), Keybase worked as a PGP web application, and users could opt in to storing their private PGP keys on our servers, encrypted with their passphrases (which Keybase did not know).

In September 2015, we introduced the new Keybase model. PGP keys are not used (and never have been used) in Keybase chat or the Keybase file system.

How do old chats instantly appear on new phones?

In some other applications, new devices do not see old messages, because syncing old messages through the server would conflict with forward secrecy. The Keybase app lets you mark certain messages, or entire conversations, as "ephemeral". They are destroyed after a set time and are encrypted twice: once with the long-lived chat encryption keys, and once with frequently rotated ephemeral keys. Thus ephemeral messages provide forward secrecy and cannot be synced between phones.

Non-ephemeral messages persist until the user explicitly deletes them, and they sync E2E to new devices, Slack-style, only encrypted! So when you add someone to a team, or add a new device for yourself, the messages are unlocked.

More on synchronization in the next answer.

Tell us about PUKs!

Two years ago, we introduced per-user keys (PUKs). The public half of a PUK is advertised in the user's public sigchain. The secret half is encrypted for each device's public key. When Alice provisions a new device, her old device knows the secret half of her PUK and the public key of the new device. It encrypts the secret half of the PUK for the new device's public key, and the new device downloads this ciphertext via the server. The new device decrypts the PUK and can immediately access all long-lived chat messages.

Whenever Alice revokes a device, she rotates her PUK, so that all of her devices except the one just revoked receive the new PUK.
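The bookkeeping behind PUK provisioning and rotation can be sketched as follows. This models only who receives which PUK generation: the `PukState` class and its methods are hypothetical, a "box" is just a record rather than real public-key encryption, and the sketch does not model how older generations are reached after a rotation.

```python
import secrets


class PukState:
    """Hypothetical model of PUK generations and which devices hold a box."""

    def __init__(self, first_device: str):
        self.generation = 1
        self.secret = secrets.token_bytes(32)  # 32 bytes of fresh entropy
        self.devices = {first_device}
        self.boxes = {(first_device, 1)}       # (device, generation) pairs

    def add_device(self, new_device: str):
        # An existing device boxes the current secret for the new device's key.
        self.devices.add(new_device)
        self.boxes.add((new_device, self.generation))

    def revoke_device(self, device: str):
        # Rotate: new secret, boxed only for the devices that remain.
        self.devices.discard(device)
        self.generation += 1
        self.secret = secrets.token_bytes(32)
        for remaining in self.devices:
            self.boxes.add((remaining, self.generation))

    def can_read(self, device: str, generation: int) -> bool:
        return (device, generation) in self.boxes


state = PukState("phone")
state.add_device("laptop")
old_secret = state.secret
state.revoke_device("phone")
```

After the revocation, the laptop holds a box for generation 2 and the phone does not, so anything encrypted from now on is out of the revoked device's reach.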

This synchronization scheme is fundamentally different from the early Keybase PGP system. Here, every key involved has 32 bytes of true entropy, so they cannot be brute-forced if the server is compromised. Granted, if Curve25519 or Go's PRNG is broken, then everything breaks. But PUK synchronization makes no other significant cryptographic assumptions.

What about big group chats?

tl;dr: Teams have their own auditable signature chains for changing roles and for adding and removing members.

Security researchers have written about phantom user attacks on group chats. If users' clients cannot cryptographically verify group membership, malicious servers can insert spies and moles into group chats. Keybase has a very robust system here in the form of its dedicated teams feature, which we will write about in the future.

Can you talk about NCC-KB2018-001?

We believe this bug is the most significant finding of the NCC audit. Keybase makes heavy use of immutable data structures to protect against server equivocation. When a bug occurs, even an honest server may be tempted to equivocate: "I told you A earlier, but there was a bug, I meant B". But our clients have a blanket policy of not allowing the server that flexibility: they carry hard-coded exceptions for known bugs.

We also recently introduced Sigchain V2, which solves scalability problems that we failed to anticipate in the first version. Clients are now more economical with the cryptographic data they pull from the server, fetching only one signature from the tail of the signature chain rather than a signature for every intermediate link. As a result, clients lost the ability to scan the chain for a specific signature hash, yet we had previously used those hashes to look up bad chainlinks in the list of hard-coded exceptions. We prepared the Sigchain V2 release having forgotten about this detail, buried under several layers of abstraction, so the system simply trusted a field from the server's response.

Once NCC had found this bug, the fix was simple enough: look up hard-coded exceptions by chainlink hash rather than by chainlink signature hash. The client can always compute these hashes directly.
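The shape of that fix can be illustrated with a short sketch. Everything here is an assumption made for illustration: the exception list, the link body, the use of SHA-256, and the function names are hypothetical and do not reflect the actual Keybase client code.

```python
import hashlib

# Hypothetical list of known-bad links, keyed by the hash of the link body.
KNOWN_BAD_LINK_HASHES = {
    hashlib.sha256(b'{"seqno":7,"body":"legacy buggy link"}').hexdigest(),
}


def link_hash(link_body: bytes) -> str:
    """Hash the client computes itself from the bytes it actually received."""
    return hashlib.sha256(link_body).hexdigest()


def is_hardcoded_exception(link_body: bytes, server_claimed_sig_hash: str) -> bool:
    # The server-supplied field is deliberately ignored: trusting it was the bug.
    del server_claimed_sig_hash
    return link_hash(link_body) in KNOWN_BAD_LINK_HASHES


bad_body = b'{"seqno":7,"body":"legacy buggy link"}'
other_body = b'{"seqno":8,"body":"ordinary link"}'
```

Because the lookup key is derived from data the client holds, a malicious server cannot make an ordinary link pass as a whitelisted exception by lying in a response field.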

We can also attribute this bug to the additional complexity of supporting Sigchain V1 and Sigchain V2 at the same time. Modern clients write Sigchain V2 links, but all clients must support legacy V1 links indefinitely. Recall that clients sign links with their per-device private keys. We cannot coordinate having all clients rewrite historical data in any reasonable amount of time, since those clients may simply be offline.

Can you talk about NCC-KB2018-004?

As with 001 (see above), we were let down by a combination of simultaneous support for legacy decisions and an optimization that seemed important as we gained more experience with the system.

In Sigchain V2, we reduce the size of chains in bytes in order to cut the bandwidth needed to look up users. The savings matter most on mobile phones. So we encode chainlinks with MessagePack rather than JSON, which yields good savings. Clients, in turn, sign and verify signatures over these chains. Researchers at NCC found clever ways to craft "signatures" that look like JSON and MessagePack at the same time, which leads to a conflict. We inadvertently introduced this decoding ambiguity during an optimization, when we switched from the standard Go JSON parser to a more efficient one. The fast parser quietly skipped over a bunch of garbage before finding the actual JSON, which is what made this polyglot attack possible. The bug is fixed with additional input validation.
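The article does not say what the additional input validation looks like. One plausible form, shown here purely as an assumption, is to refuse any payload that is not a single JSON object from its first byte to its last; the function name `parse_signed_json` is hypothetical.

```python
import json


def parse_signed_json(raw: bytes) -> dict:
    """Accept only input that is, in its entirety, a single JSON object."""
    if not raw.startswith(b"{"):
        # No skipping over leading bytes to hunt for the "real" JSON.
        raise ValueError("payload does not start with a JSON object")
    # json.loads raises (a ValueError subclass) on trailing garbage.
    return json.loads(raw.decode("utf-8"))


clean = parse_signed_json(b'{"seqno": 1}')
```

A payload with MessagePack-looking bytes in front of the JSON, or with extra bytes after it, is rejected outright instead of being silently trimmed into something that parses.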

In Sigchain V2, we also adopted Adam Langley's suggestion that signers prefix the payloads they sign with a context string and a \0 byte, so that verifiers cannot be confused about the signer's intent. There were bugs on the verifying side of this context-prefix idea that could have led to other polyglot attacks. We quickly fixed this flaw with a whitelist. With both bugs fixed, the server rejects the malicious payloads of the polyglot attack, so exploiting these vulnerabilities would require a compromised server.
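The context-prefix idea and the whitelist check can be sketched as follows. To stay within the standard library, HMAC stands in for the per-device public-key signature; that substitution, the context string `example-sigchain-v2`, and the function names are all assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical context string; the real one is not given in the article.
ALLOWED_CONTEXTS = {b"example-sigchain-v2"}


def sign(key: bytes, context: bytes, payload: bytes) -> bytes:
    """Authenticate context + NUL + payload (HMAC stands in for a signature)."""
    if b"\0" in context:
        raise ValueError("context must not contain a NUL byte")
    return hmac.new(key, context + b"\0" + payload, hashlib.sha256).digest()


def verify(key: bytes, context: bytes, payload: bytes, tag: bytes) -> bool:
    # Whitelist first: an unexpected context is rejected before any crypto runs.
    if context not in ALLOWED_CONTEXTS:
        return False
    expected = hmac.new(key, context + b"\0" + payload, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


key = b"k" * 32
tag = sign(key, b"example-sigchain-v2", b"link body")
```

Since the context cannot contain a NUL byte, the first \0 unambiguously separates it from the payload, and a tag produced for one purpose cannot be replayed as a valid tag for another.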

Where is the documentation?

https://keybase.io/docs

In the coming months we will devote more time to working on the documentation.

Can you say more about this statement from NCC: "However, an attacker is able to refuse to update sigchain or roll back a user's sigchain to a previous state by truncating subsequent links in the chain"?

Keybase makes extensive use of immutable, append-only public data structures that force the server infrastructure to commit to one true view of users' identities. We can guarantee device revocations and team member removals in such a way that a compromised server cannot roll them back. If the server decides to show an inconsistent view, that deviation becomes part of the immutable public record. Keybase clients or a third-party auditor can detect the mismatch at any time after the attack. We believe these guarantees far exceed those of competing products and are close to optimal, given the practical constraints of mobile phones and clients with limited computing power.

Simply put, Keybase cannot forge anyone else's signatures. Like any server, it can withhold data. But our transparent Merkle tree is designed so that data can be withheld only for a very short period of time, and so that the withholding is always discoverable.
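A client-side check against truncation and forking can be sketched with a simple hash chain. This is a simplified model under stated assumptions: the `ChainView` class is hypothetical, links are opaque byte strings, and the real system additionally anchors chains in the Merkle tree.

```python
import hashlib


def tail_hash(links) -> bytes:
    """Fold the links into one hash that commits to the whole sequence."""
    h = b"\0" * 32
    for body in links:
        h = hashlib.sha256(h + body).digest()
    return h


class ChainView:
    """Remembers the length and tail hash of the longest chain seen so far."""

    def __init__(self):
        self.length = 0
        self.tail = tail_hash([])

    def update(self, fetched):
        if len(fetched) < self.length:
            raise ValueError("rollback: server returned a shorter chain")
        if tail_hash(fetched[: self.length]) != self.tail:
            raise ValueError("fork: previously seen chain is not a prefix")
        self.length = len(fetched)
        self.tail = tail_hash(fetched)


view = ChainView()
view.update([b"add phone", b"add laptop", b"revoke phone"])
```

Once a client has seen the revocation, a server that later serves only the first two links, or a chain with a different second link, fails the check, which is why a rollback can be refused or at least detected by anyone who saw the earlier state.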

How does Keybase handle account resets?

When Keybase users really do lose all of their devices (as opposed to adding new ones or losing some of them), they need to reset. After an account reset, the user is essentially a new user with the same username. They cannot sign a "reset statement", because they have lost all of their keys. So instead, the Keybase server commits an indelible statement to the Merkle tree that signifies the reset. Clients enforce that these statements cannot be rolled back. A future article will describe the specific mechanisms in detail.

This user will have to re-add their identity proofs (Twitter, GitHub, whatever) with the new keys.

Can a server simply swap someone's Merkle tree leaf to advertise a completely different set of keys?

The NCC authors consider a hostile Keybase server that swaps out a Merkle tree leaf entirely, replacing Bob's true key set with a completely new, fake one. An attacking server has two options. First, it can fork the state of the world, placing Bob in one fork and those it wants to fool in another. Second, it can "cheat" (equivocate), publishing one version of the Merkle tree with Bob's correct key set and other versions with the fake set. Users who interact with Bob regularly will detect this attack, because they check that previously downloaded versions of Bob's history are valid prefixes of the new versions they download from the server. Third-party validators that scan all Keybase updates will also notice it. If you write a third-party Keybase validator that we like, we can offer a significant reward. Talk to max on Keybase. Otherwise, we hope to schedule the creation of a standalone validator soon.

Can you believe I read all the way to the end?

Did you read it or just scroll down?