DDoS to help: how we conduct stress and stress tests

Variti develops protection against bots and DDoS attacks, and also conducts stress and load testing. At the HighLoad ++ 2018 conference, we talked about how to secure resources from various types of attacks. In short: isolate parts of the system, use cloud services and CDN and update regularly. But without specialized companies, you still won’t be able to protect :)

Before reading the text, you can familiarize yourself with short abstracts on the conference website .

And if you do not like to read or just want to watch a video, the recording of our report is below under the spoiler.

Report video

Many companies already know how to do stress testing, but not all do stress testing. Some of our customers think that their site is invulnerable because they have a highload system and it protects against attacks. We show that this is not entirely true.

Of course, before conducting the tests, we get permission from the customer, signed and stamped, and with our help we can’t do a DDoS attack on anyone. Testing is carried out at the time chosen by the customer, when the attendance of his resource is minimal, and access problems will not affect customers. In addition, since something can always go wrong during the testing process, we have constant contact with the customer. This allows not only to report on the results achieved, but also to change something during the testing. At the end of testing, we always draw up a report in which we point out the discovered flaws and give recommendations for eliminating the weaknesses of the site.

How we are working

During testing, we emulate a botnet. Since we work with clients who are not located in our networks, so that the test does not end in the first minute due to triggering of limits or protection, we load the load not from one IP, but from our own subnet. Plus, to create a significant load, we have our own rather powerful test server.

Postulates

A lot doesn’t mean good.

The less load we can bring a resource to failure, the better. If you manage to make the site stop functioning from one request per second, or even from one request per minute, that’s fine. Because according to the law of meanness, users or attackers accidentally fall into this vulnerability.

Partial failure is better than full failure.

We always recommend making systems heterogeneous. Moreover, it is worth separating them at the physical level, and not just containerization. In the case of physical separation, even if something fails on the site, it is likely that it will not stop working completely, and users will still have access to at least part of the functionality.

The right architecture is the basis of stability.

Resilience of a resource and its ability to withstand attacks and loads should be laid at the design stage, in fact, at the stage of drawing the first block diagrams in a notebook. Because if fatal errors creep in, you can correct them in the future, but it is very difficult.

Not only the code should be good, but also the config.

Many people think that a good development team is a guarantee of the fault tolerance of the service. A good development team is really necessary, but there must also be good operation, good DevOps. That is, we need specialists who correctly configure Linux and the network, correctly write configs in nginx, configure limits and more. Otherwise, the resource will work well only on the test, and in production at some point everything will break.

Differences between stress and stress testing.

Load testing allows you to identify the limits of the system. Stress testing is aimed at finding the weaknesses of the system and is used to break this system and see how it will behave in the process of failure of certain parts. At the same time, the nature of the load usually remains unknown to the customer until the start of stress testing.

Distinctive features of L7 attacks

We usually divide the types of load into loads at the level of L7 and L3 & 4. L7 is a load at the application level, most often it is understood only as HTTP, but we mean any load at the TCP protocol level.

L7 attacks have certain distinguishing features. Firstly, they come directly to the application, that is, it is unlikely that they can be reflected by network means. Such attacks use logic, and due to this they consume CPU, memory, disk, database and other resources very efficiently and with little traffic.

HTTP Flood

In the case of any attack, the load is easier to create than to handle, and in the case of L7 this is also true. Attack traffic is not always easy to distinguish from legitimate one, and most often it can be done by frequency, but if everything is planned correctly, then it’s impossible to understand where the attack is and where legitimate requests are from the logs.

As a first example, consider an HTTP Flood attack. The graph shows that usually such attacks are very powerful, in the example below the peak number of requests exceeded 600 thousand per minute.

HTTP Flood is the easiest way to create a load. Usually, some kind of load testing tool is taken for it, for example, ApacheBench, and the request and purpose are set. With such a simple approach, it is likely to run into server caching, but it is easy to get around. For example, adding random lines to the query, which forces the server to constantly give a fresh page.

Also, do not forget about user-agent in the process of creating a load. Many user-agents of popular testing tools are filtered by system administrators, and in this case, the load may simply not reach the backend. You can significantly improve the result by inserting a more or less valid header from the browser into the request.

For all its simplicity, HTTP Flood attacks have their drawbacks. Firstly, large capacities are required to create a load. Secondly, such attacks are very easy to detect, especially if they come from the same address. As a result, requests immediately begin to be filtered either by system administrators, or even at the provider level.

What to look for

To reduce the number of requests per second and still not lose in efficiency, you need to show a little imagination and explore the site. So, you can load not only the channel or server, but also individual parts of the application, for example, databases or file systems. You can also search for places on the site that do great calculations: calculators, product selection pages, and more. Finally, it often happens that there is a php script on the site that generates a page of several hundred thousand lines. Such a script also heavily loads the server and can become a target for attack.

Where to looking for

When we scan a resource before testing, we first of all look, of course, at the site itself. We are looking for all kinds of input fields, heavy files - in general, everything that can create problems for a resource and slows down its operation. Here, commonplace development tools in Google Chrome and Firefox help show the response time of the page.

We also scan subdomains. For example, there is a certain online store, abc.com, and it has a subdomain admin.abc.com. Most likely, this is the admin panel with authorization, but if you put a load into it, then it can create problems for the main resource.

The site may have a subdomain api.abc.com. Most likely, this is a resource for mobile applications. The application can be found in the App Store or Google Play, put a special access point, dissect the API and register test accounts. The problem is that often people think that everything that is protected by authorization is immune to denial of service attacks. Allegedly, authorization is the best CAPTCHA, but it is not. Making 10-20 test accounts is simple, and by creating them, we get access to complex and undisguised functionality.

Naturally, we look at the history, at robots.txt and WebArchive, ViewDNS, we are looking for old versions of the resource. Sometimes it happens that the developers rolled out, say, mail2.yandex.net, but the old version, mail.yandex.net, remained. This mail.yandex.net is no longer supported, development resources are not allocated to it, but it continues to consume the database. Accordingly, using the old version, you can effectively use the resources of the backend and everything that is behind the layout. Of course, this does not always happen, but we still encounter something like this quite often.

Naturally, we dissect all request parameters, cookie structure. You can, say, push some value into the JSON array inside the cookie, create more nesting and make the resource work unreasonably long.

Search load

The first thing that comes to mind when researching a site is to load the database, since almost everyone has a search, and almost everyone has it, unfortunately, is poorly protected. For some reason, the developers do not pay enough attention to the search. But there is one recommendation - do not make the same type of requests, because you may encounter caching, as is the case with HTTP flood.

Making random queries into the database is also not always efficient. It is much better to create a list of keywords that are relevant to the search. If you go back to the example of an online store: let's say the site sells car tires and allows you to set the radius of the tires, the type of car and other parameters. Accordingly, combinations of relevant words will make the database work in much more complex conditions.

In addition, it’s worth using pagination: it’s much harder for a search to return the penultimate page of an issue than the first. That is, with the help of pagination, you can diversify the load a little.

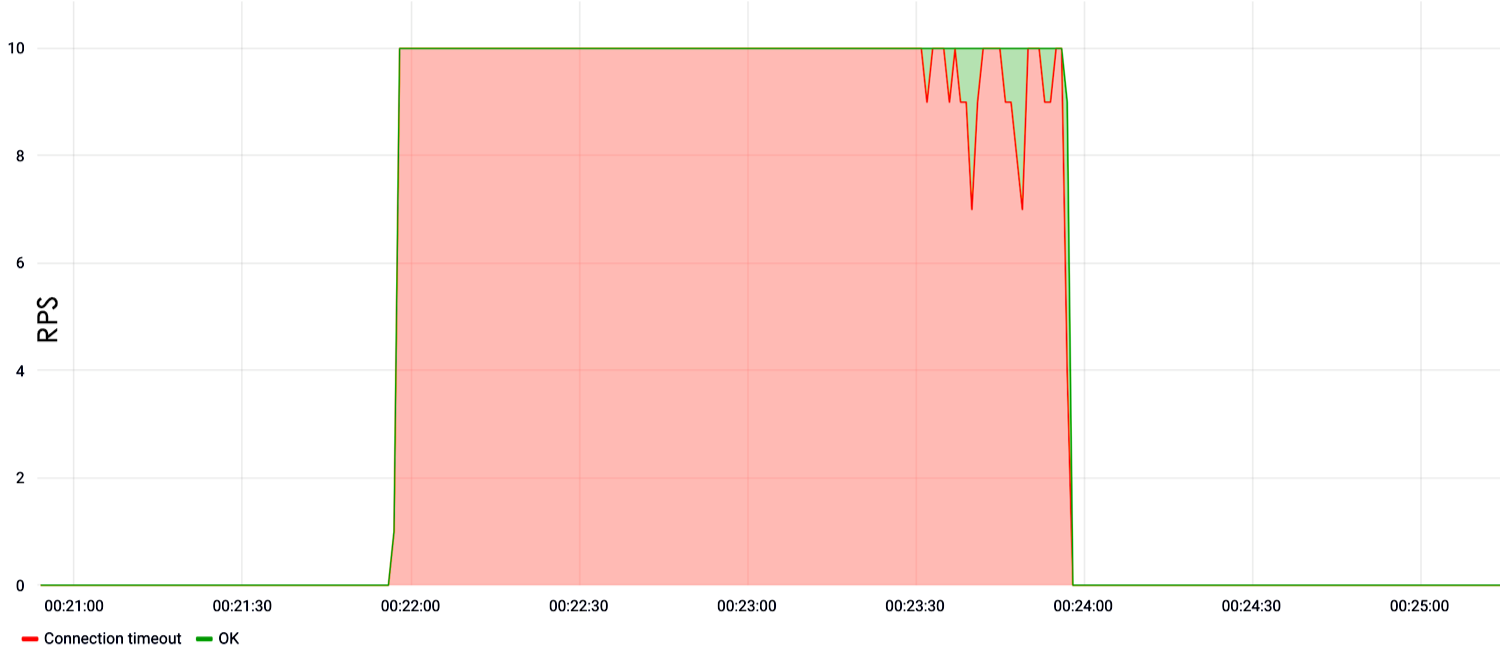

In the example below, we show the load in the search. It can be seen that from the first second of the test at a speed of ten requests per second, the site went down and did not respond.

If there is no search?

If there is no search, this does not mean that the site does not contain other vulnerable input fields. This field may be authorization. Now developers like to create complex hashes in order to protect the login database from attacks on rainbow tables. This is good, but such hashes consume large CPU resources. A large stream of false authorizations leads to a processor failure, and as a result, the site stops working on output.

The presence on the site of all kinds of forms for comments and feedback is an occasion to send very large texts there or simply create a massive flood. Sometimes sites accept file attachments, including in gzip format. In this case, we take a 1TB file, using gzip we compress it to a few bytes or kilobytes and send it to the site. Then it is unzipped and a very interesting effect is obtained.

Rest API

I would like to pay a little attention to such popular services as the Rest API. Protecting the Rest API is much more difficult than a regular site. For the Rest API, even trivial methods of protection against password cracking and other illegitimate activity do not work.

The Rest API is very easy to break because it accesses the database directly. At the same time, the failure of such a service entails quite serious consequences for the business. The fact is that the Rest API usually involves not only the main site, but also the mobile application, some internal business resources. And if all this falls, then the effect is much stronger than in the case of the failure of a simple site.

Heavy Content Load

If we are offered to test some ordinary one-page application, landing page, business card website, which do not have complex functionality, we are looking for heavy content. For example, large pictures that the server gives, binary files, pdf-documentation - we are trying to pump it all out. Such tests load the file system well and clog channels, and therefore are effective. That is, even if you don’t put the server down, downloading a large file at low speeds, you will simply clog the channel of the target server and then a denial of service will occur.

An example of such a test shows that at a speed of 30 RPS the site stopped responding, or generated 500 server errors.

Do not forget about setting up servers. You can often find that a person bought a virtual machine, installed Apache there, configured everything by default, located a php application, and below you can see the result.

Here the load went to the root and amounted to only 10 RPS. We waited 5 minutes and the server crashed. To the end, however, it is not known why he fell, but there is an assumption that he simply was full of memory, and therefore stopped responding.

Wave based

In the last year or two, wave attacks have become quite popular. This is due to the fact that many organizations buy certain pieces of hardware for protection against DDoS, which require a certain amount of statistics to start filtering attacks. That is, they do not filter the attack in the first 30-40 seconds, because they accumulate data and learn. Accordingly, in these 30-40 seconds, you can launch so much that the resource will lie for a long time until all requests are raked.

In the case of the attack, there was an interval of 10 minutes below, after which a new, modified portion of the attack arrived.

That is, the defense trained, started filtering, but a new, completely different portion of the attack arrived, and the defense began training again. In fact, filtering stops working, protection becomes ineffective, and the site is inaccessible.

Wave attacks are characterized by very high values at the peak, it can reach one hundred thousand or a million requests per second, in the case of L7. If we talk about L3 & 4, then there can be hundreds of gigabits of traffic, or, accordingly, hundreds of mpps, if you count in packets.

The problem with such attacks is synchronization. Attacks come from a botnet, and in order to create a very large one-time peak, a high degree of synchronization is required. And this coordination does not always work: sometimes the output is some kind of parabolic peak, which looks rather pathetic.

Not HTTP Unified

In addition to HTTP at the L7 level, we love to exploit other protocols. As a rule, a regular website, especially a regular hosting, has mail protocols and MySQL sticking out. Mail protocols are less affected than databases, but they can also be loaded quite efficiently and get an overloaded CPU on the server at the output.

With the help of the 2016 SSH vulnerability, we were quite successful. Now this vulnerability has been fixed for almost everyone, but this does not mean that SSH cannot be loaded. Can. Just a huge load of authorizations is served, SSH eats up almost the entire CPU on the server, and then the website is already composed of one or two requests per second.

Accordingly, these one or two log queries cannot be distinguished from a legitimate load.

The many connections that we open in the servers remain relevant. Previously, Apache sinned, now nginx actually sinned, since it is often configured by default. The number of connections that nginx can keep open is limited, so we open this number of connections, the new nginx connection no longer accepts, and the site does not work on output.

Our test cluster has enough CPU to attack SSL handshake. In principle, as practice shows, botnets also sometimes like to do this. On the one hand, it’s clear that you can’t do without SSL, because Google’s issuance, ranking, and security. SSL, on the other hand, unfortunately has a CPU problem.

L3 & 4

When we talk about an attack at the L3 & 4 levels, we usually talk about an attack at the channel level. Such a load is almost always distinguishable from legitimate if it is not a SYN flood attack. The problem of SYN flood attacks for security features is large. The maximum value of L3 & 4 was 1.5-2 Tb / s. Such traffic is very difficult to handle even for large companies, including Oracle and Google.

SYN and SYN-ACK are the packages that are used to establish the connection. Therefore, it is difficult to distinguish SYN-flood from a legitimate load: it is not clear that this is SYN, which came to establish the connection, or part of the flood.

UDP flood

Typically, attackers do not have the capabilities that we have, so amplification can be used to organize attacks. That is, an attacker scans the Internet and finds either vulnerable or improperly configured servers, which, for example, in response to one SYN packet, respond with three SYN-ACKs. By faking the source address from the address of the target server, you can use one package to increase the capacity, say, three times, and redirect traffic to the victim.

The problem with amplifications is their complex detection. From the latest examples we can cite the sensational case with the vulnerable memcached. Plus, now there are a lot of IoT devices, IP cameras, which are also mostly configured by default, and by default they are configured incorrectly, therefore, through such devices, attackers most often make attacks.

Difficult SYN-flood

SYN-flood is probably the most interesting view of all attacks from the point of view of the developer. The problem is that often system administrators use IP blocking for protection. Moreover, IP blocking affects not only sysadmins that operate according to scripts, but, unfortunately, some security systems that are bought for a lot of money.

This method can turn into a catastrophe, because if the attackers change their IP addresses, the company will block its own subnet. When Firewall blocks its own cluster, external interactions will crash at the output, and the resource will break.

And to achieve blocking your own network is easy. If the client’s office has a Wi-Fi network, or if the health of the resources is measured using various monitoring, then we take the IP address of this monitoring system or office Wi-Fi client, and use it as a source. At the output, the resource seems to be available, but the target IP addresses are blocked. So, the Wi-Fi network of the HighLoad conference, where a new product of the company is presented, can be blocked, and this entails certain business and economic costs.

During testing, we cannot use amplification through memcached by some external resources, because there are agreements to supply traffic only to allowed IP addresses. Accordingly, we use amplification via SYN and SYN-ACK, when the system responds with two or three SYN-ACKs to send one SYN, and the output is multiplied by two to three times.

Instruments

One of the main tools that we use for the load at the L7 level is Yandex-tank. In particular, a phantom is used as a gun, plus there are several scripts for generating cartridges and for analyzing the results.

Tcpdump is used to analyze network traffic, and Nmap is used to analyze server traffic. To create a load at the L3 & 4 level, OpenSSL is used and a little of its own magic with the DPDK library. DPDK is a library from Intel that allows you to work with a network interface, bypassing the Linux stack, and thereby increase efficiency. Naturally, we use DPDK not only at the L3 & 4 level, but also at the L7 level, because it allows you to create a very high load flow, within a few million requests per second from one machine.

We also use certain traffic generators and special tools that we write for specific tests. If we recall the vulnerability under SSH, then with the above set, it cannot be escaped. If we attack the mail protocol, we take mail utilities or just write scripts on them.

conclusions

As a result, I would like to say:

- In addition to the classical load testing, stress testing must also be carried out. We have a real-world example where a partner subcontractor conducted only load testing. It showed that the resource withstands the standard load. But then an abnormal load appeared, site visitors began to use the resource a little differently, and at the output the subcontractor laid down. Thus, it’s worth looking for vulnerabilities even if you are already protected from DDoS attacks.

- It is necessary to isolate some parts of the system from others. If you have a search, it must be taken out on separate machines, that is, not even in the docker. Because if the search or authorization fails, then at least something will continue to work. In the case of the online store, users will continue to find products in the catalog, switch from the aggregator, buy if they are already authorized, or log in through OAuth2.

- Do not neglect all kinds of cloud services.

- Use the CDN not only to optimize network latency, but also as a means of protection against attacks on the exhaustion of the channel and just flood into static.

- You must use specialized security services. You won’t defend yourself from L3 & 4 attacks at the channel level, because you most likely simply do not have a sufficient channel. You are also unlikely to beat off L7 attacks, since they are very large. Plus, the search for small attacks is still the prerogative of special services, special algorithms.

- Update regularly. This applies not only to the kernel, but also to SSH daemon, especially if you have them open to the outside. In principle, everything needs to be updated, because you are unlikely to be able to track any vulnerabilities yourself.