VMware NSX for the smallest. Part 5. Configuring the load balancer


Part One. Introduction

Part Two. Configuring Firewall and NAT Rules

Part Three. DHCP Configuration

Part Four. Configuring routing

Last time, we talked about the capabilities of NSX Edge in terms of static and dynamic routing; today we will deal with the load balancer.

Before proceeding with the setup, I would like to briefly recall the main types of load balancing.

Theory

Today's load balancing solutions are most often divided into two categories: balancing at the fourth (transport) and the seventh (application) layers of the OSI model. The OSI model is not the best reference point for describing balancing methods. For example, if an L4 balancer also supports TLS termination, does it then become an L7 balancer? Still, this is the conventional division.

- An L4 balancer most often sits between the client and a set of available backends. It terminates TCP connections (that is, it answers the SYN itself), selects a backend, and opens a new TCP session to it by sending its own SYN. This is one of the basic designs; other variants are possible.

- An L7 balancer distributes traffic among the available backends in a “more sophisticated” way than an L4 balancer. It can choose a backend based on, for example, the contents of an HTTP message (URL, cookie, etc.).
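To make the L4/L7 difference concrete, here is a minimal Python sketch. The pool names and routing rules are hypothetical and are not NSX configuration syntax; the point is only which information each type of balancer can see when it picks a backend.

```python
from typing import Dict


def choose_l4_pool(dst_port: int) -> str:
    # An L4 balancer sees only addresses and ports, never URLs or cookies.
    return "https_pool" if dst_port == 443 else "web_pool"


def choose_l7_pool(path: str, cookies: Dict[str, str]) -> str:
    """Pick a backend pool from HTTP-level data, as an L7 balancer can."""
    # A cookie can pin a user to a dedicated pool (e.g. a beta group).
    if cookies.get("group") == "beta":
        return "beta_pool"
    # The URL path can send whole sections of a site to dedicated servers.
    if path.startswith("/static/"):
        return "static_pool"
    if path.startswith("/api/"):
        return "api_pool"
    return "web_pool"
```

For example, `choose_l7_pool("/api/v1/users", {})` returns `"api_pool"`, a decision an L4 balancer cannot make because it never parses the HTTP request.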

Regardless of the type, the balancer can support the following functions:

- Service discovery is the process of determining the set of available backends (Static, DNS, Consul, Etcd, etc.).

- Checking the health of discovered backends (actively “pinging” a backend with an HTTP request, passively detecting problems in TCP connections, noticing several consecutive HTTP 503 responses, etc.).

- The balancing itself (round robin, random selection, source IP hash, URI hash).

- TLS termination and certificate verification.

- Security-related options (authentication, DoS attack prevention, rate limiting) and much more.
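Passive health checking, such as reacting to several 503 responses in a row, can be sketched as follows. The threshold of three and the class name are assumptions for illustration; this is not how NSX Edge implements it.

```python
from collections import defaultdict
from typing import Dict, List, Set


class PassiveHealthChecker:
    """Marks a backend down after N consecutive HTTP 503 responses (sketch)."""

    def __init__(self, threshold: int = 3):
        self.threshold = threshold  # assumed value for illustration
        self.consecutive_503: Dict[str, int] = defaultdict(int)
        self.down: Set[str] = set()

    def record(self, backend: str, status: int) -> None:
        if status == 503:
            self.consecutive_503[backend] += 1
            if self.consecutive_503[backend] >= self.threshold:
                self.down.add(backend)
        else:
            # Any other status breaks the streak; bringing a backend back
            # into service is left to an active health check.
            self.consecutive_503[backend] = 0

    def healthy(self, backends: List[str]) -> List[str]:
        return [b for b in backends if b not in self.down]
```

Only unbroken runs of 503s count, so an occasional error does not take a server out of rotation.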

NSX Edge supports two balancer deployment modes:

Proxy mode, or one-arm mode. In this mode, when sending a request to one of the backends, NSX Edge uses its own IP address as the source address. Thus, the balancer performs both source and destination NAT. The backend sees all traffic as coming from the balancer and responds directly to it. In this scheme, the balancer must be in the same network segment as the internal servers.

Here's how it goes:

- The user sends a request to the VIP address (balancer address) that is configured on Edge.

- Edge selects one of the backends and performs destination NAT, replacing the VIP address with the address of the selected backend.

- Edge performs source NAT, replacing the address of the user who sent the request with its own.

- The packet is sent to the selected backend.

- The backend responds not to the user directly but to Edge, since the user's original address has been replaced with the balancer's address.

- Edge sends the server response to the user.
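The proxy-mode address rewriting described in the steps above can be modeled in a few lines of Python. The addresses are hypothetical documentation/private ranges, and a real Edge keeps per-connection state to map replies back to users; here the user's address is simply passed in.

```python
from dataclasses import dataclass, replace

# Hypothetical addresses for illustration only.
VIP = "203.0.113.10"      # balancer address the user connects to
EDGE_IP = "192.168.10.1"  # Edge address used as the source towards backends


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


def proxy_inbound(pkt: Packet, backend: str) -> Packet:
    """User -> backend: destination NAT (VIP -> backend) and source NAT (user -> Edge)."""
    return replace(pkt, src=EDGE_IP, dst=backend)


def proxy_outbound(reply: Packet, user: str) -> Packet:
    """Backend -> user: the backend answered Edge, so Edge rewrites both addresses back.

    A real balancer looks the user up in its connection table; here it is passed in.
    """
    return replace(reply, src=VIP, dst=user)
```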

See the diagram below.

Transparent, or inline, mode. In this scenario, the balancer has interfaces in both the internal and external networks, but the internal network is not directly reachable from the external one. The built-in load balancer acts as a NAT gateway for the virtual machines on the internal network.

The mechanism is as follows:

- The user sends a request to the VIP address (balancer address) that is configured on Edge.

- Edge selects one of the backends and performs destination NAT, replacing the VIP address with the address of the selected backend.

- The packet is sent to the selected backend.

- The backend receives the request with the user's original source address (source NAT was not performed) and replies directly to that address.

- The reply is nevertheless intercepted by the load balancer, since in the inline scheme the balancer usually acts as the default gateway for the server farm.

- Edge performs source NAT on the reply, using its VIP as the source IP address, and sends it to the user.
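For comparison, here is a similar sketch of the transparent (inline) mode: only the destination is rewritten on the way in, and the source is rewritten to the VIP on the way out. The addresses are again hypothetical.

```python
from dataclasses import dataclass, replace

VIP = "203.0.113.10"  # hypothetical balancer address on the external interface


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


def inline_inbound(pkt: Packet, backend: str) -> Packet:
    """User -> backend: destination NAT only, so the backend sees the real user address."""
    return replace(pkt, dst=backend)


def inline_outbound(reply: Packet) -> Packet:
    """Backend -> user: the reply already targets the user, but it is routed through
    Edge (the servers' default gateway), which puts the VIP back as the source."""
    return replace(reply, src=VIP)
```

Without the last step the user would receive a reply from an internal address it never contacted, which is why the servers must route through Edge in this mode.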

See the diagram below.

Practice

On my testbed, three Apache servers are configured to serve HTTPS. Edge will balance HTTPS requests using the round robin method, sending each new request to the next server.

Let's get started.

Generating an SSL Certificate for NSX Edge

You can import a valid CA-signed certificate or use a self-signed one. For this test, I will use a self-signed certificate.

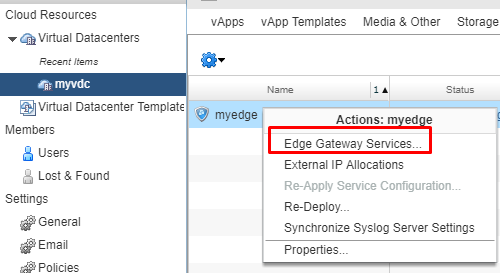

- In the vCloud Director interface, go to the Edge services settings.

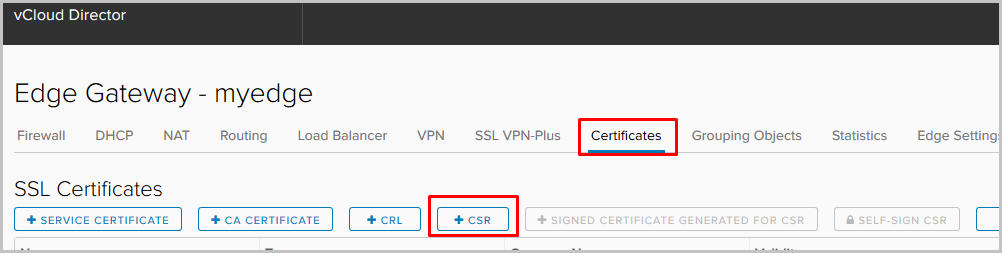

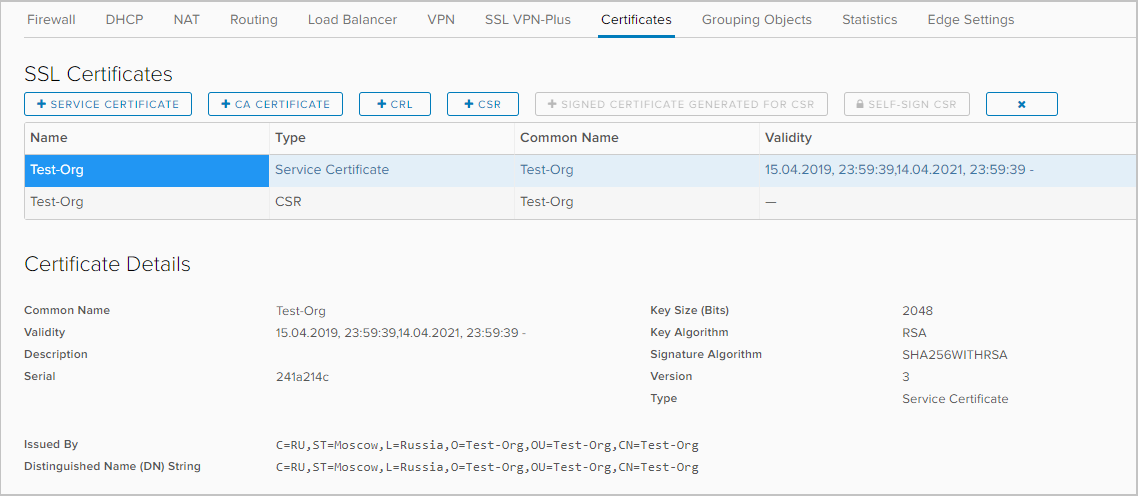

- Go to the Certificates tab. From the list of actions, choose to add a new CSR.

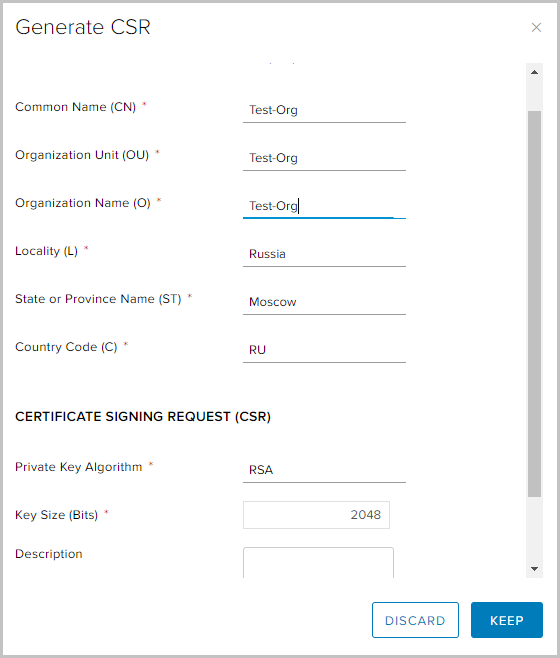

- Fill in the required fields and click Keep.

- Select the newly created CSR and choose the self-sign CSR option.

- Select the certificate validity period and click Keep.

- The self-signed certificate now appears in the list of available certificates.

Configure Application Profile

Application profiles give you more control over network traffic and make managing it simple and efficient. With their help, you can determine the behavior for specific types of traffic.

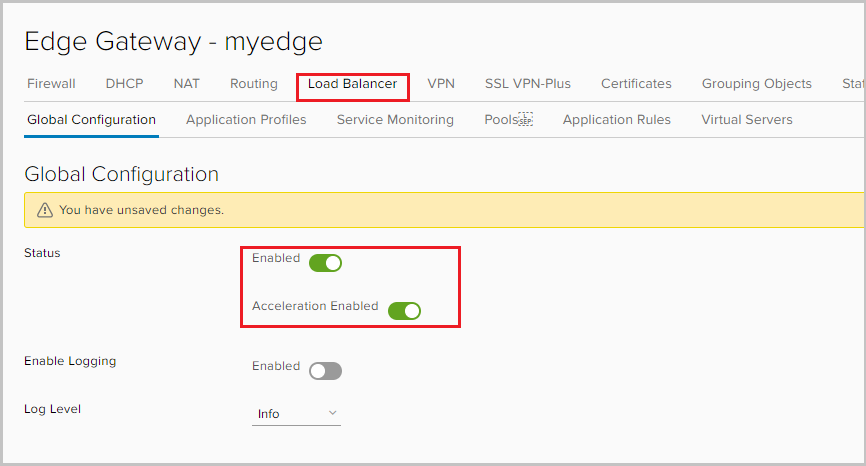

- Go to the Load Balancer tab and enable the balancer. The Acceleration Enabled option here lets the balancer use faster L4 balancing instead of L7.

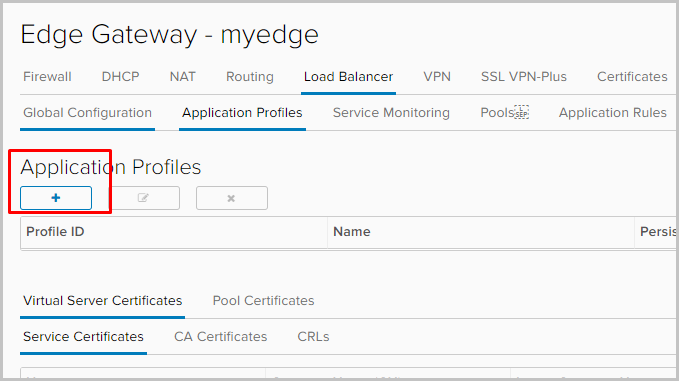

- Go to the Application profile tab to create an application profile. Click +.

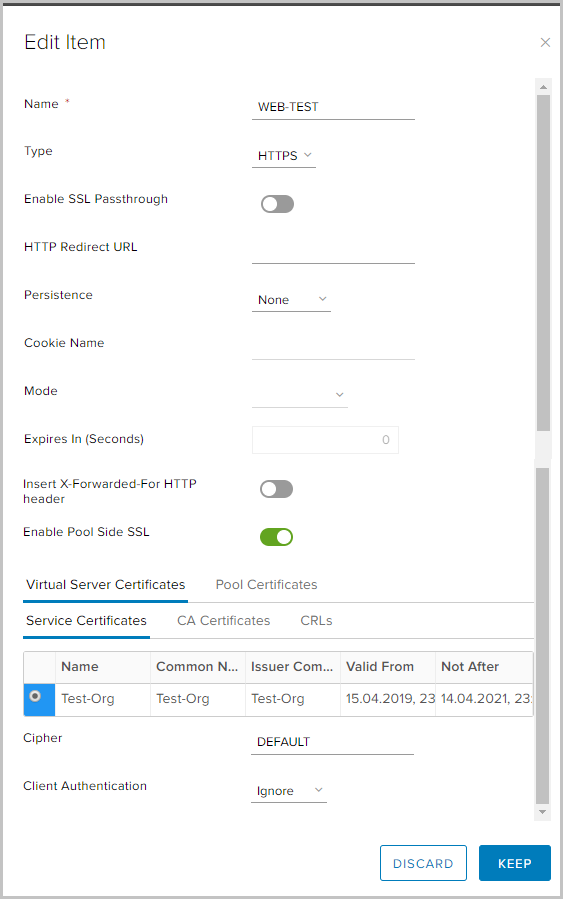

- Set the profile name and select the type of traffic the profile will apply to. Some of the parameters are explained below.

Persistence - saves and tracks session data, for example, which specific server in the pool is serving a user's request. This ensures that the user's requests are sent to the same pool member for the lifetime of the session or for subsequent sessions.

Enable SSL passthrough - when this option is selected, NSX Edge does not terminate SSL. Instead, termination happens directly on the servers being balanced.

Insert X-Forwarded-For HTTP header - allows you to determine the source IP address of the client connecting to the web server through the balancer.

Enable Pool Side SSL - allows you to specify that the selected pool consists of HTTPS servers.
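The Persistence and Insert X-Forwarded-For options can be illustrated with a short sketch. It assumes source-IP persistence layered on round robin and appends the client address to the header the way proxies commonly do; the class and function names are hypothetical, not NSX internals.

```python
from typing import Dict, List


class SourceIpPersistence:
    """Round robin for new clients, then stick each client IP to its member."""

    def __init__(self, members: List[str]):
        self.members = members
        self.next_index = 0
        self.sessions: Dict[str, str] = {}  # client IP -> pool member

    def pick(self, client_ip: str) -> str:
        # Returning clients go back to the member that served them before.
        if client_ip in self.sessions:
            return self.sessions[client_ip]
        member = self.members[self.next_index % len(self.members)]
        self.next_index += 1
        self.sessions[client_ip] = member
        return member


def insert_x_forwarded_for(headers: Dict[str, str], client_ip: str) -> Dict[str, str]:
    """Append the client IP to X-Forwarded-For so the backend can see it."""
    updated = dict(headers)
    previous = updated.get("X-Forwarded-For")
    updated["X-Forwarded-For"] = f"{previous}, {client_ip}" if previous else client_ip
    return updated
```

On the backend, the leftmost address in X-Forwarded-For is the original client, which matters in proxy mode because the TCP source address is the balancer's.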

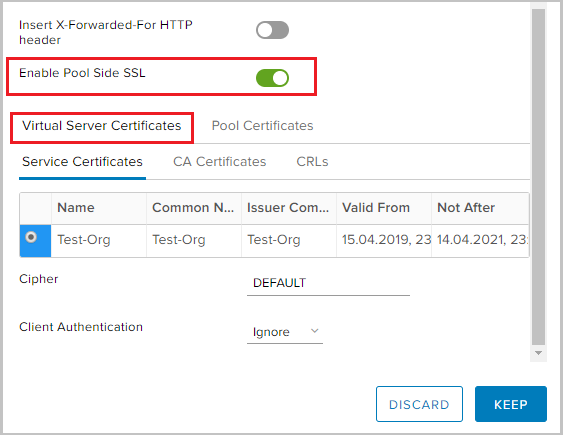

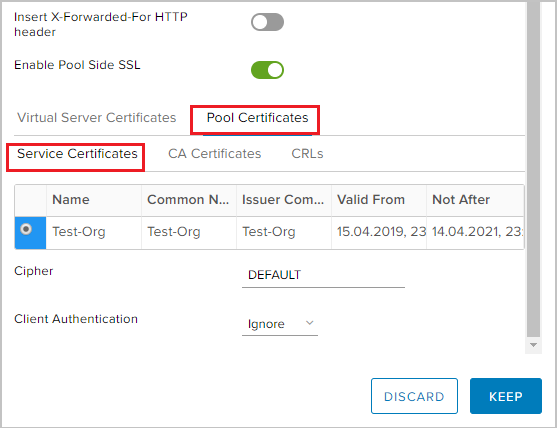

- Since I will be balancing HTTPS traffic, I need to enable Pool Side SSL and select the previously generated certificate on the Virtual Server Certificates -> Service Certificate tab.

- Do the same on the Pool Certificates -> Service Certificate tab.

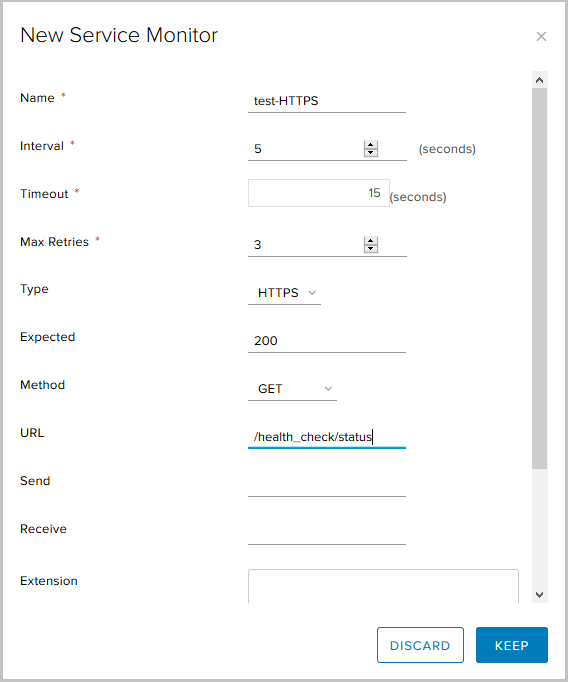

Create the Pool of Servers to Balance Traffic Across

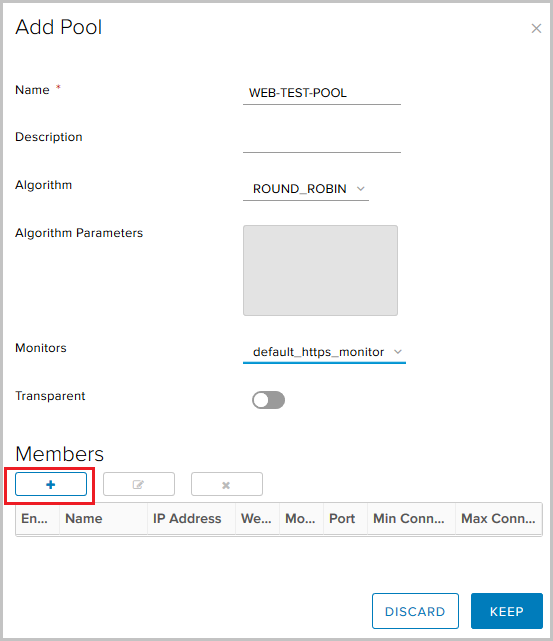

- Go to the Pools tab. Click +.

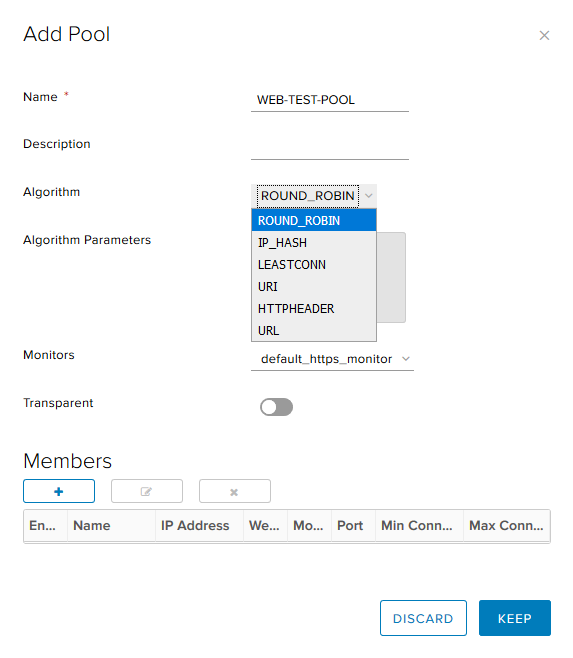

- Set the pool name, select the algorithm (I will use round robin) and the monitor type for health-checking the backends. The Transparent option controls whether the internal servers see the clients' original source IP addresses.

- If this option is disabled, traffic for internal servers comes from the source IP of the balancer.

- If this option is enabled, internal servers see the clients' source IP addresses. In this configuration, NSX Edge must act as the default gateway so that return packets pass through it.

NSX supports the following balancing algorithms:

- IP_HASH - the server is selected based on a hash of the source and destination IP addresses of each packet.

- LEASTCONN - incoming connections are distributed according to the number of connections each server already has. New connections go to the server with the fewest connections.

- ROUND_ROBIN - new connections are sent to each server in turn, according to its weight.

- URI - the left part of the URI (before the question mark) is hashed and divided by the total weight of the servers in the pool. The result determines which server receives the request, so a given URI is always routed to the same server as long as all servers remain available.

- HTTPHEADER - balancing based on a specific HTTP header, which is specified as a parameter. If the header is missing or has no value, the ROUND_ROBIN algorithm is used.

- URL - every HTTP GET request is searched for the URL parameter specified as an argument. If the parameter is followed by an equals sign and a value, the value is hashed and divided by the total weight of the running servers. The result determines which server receives the request. This is used to track user identifiers in requests and to ensure that the same user ID is always sent to the same server, as long as all servers remain available.
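The algorithms above can be sketched in Python as follows. This is an illustration under stated assumptions: CRC32 stands in for whatever hash NSX actually uses, "divided by the total weight" is read as taking the remainder, and falling back to round robin for a missing URL parameter is assumed (the text states this fallback only for HTTPHEADER). Member names and weights are hypothetical.

```python
import zlib
from typing import Dict, List, Tuple
from urllib.parse import parse_qs, urlsplit

# (member name, weight) pairs; the names and weights are hypothetical.
Pool = List[Tuple[str, int]]


def stable_hash(text: str) -> int:
    # CRC32 is a stand-in; NSX's internal hash function is not assumed here.
    return zlib.crc32(text.encode("utf-8"))


def pick_by_weight(pool: Pool, value: int) -> str:
    """Take the hash modulo the total weight and map the result onto a member."""
    slot = value % sum(weight for _, weight in pool)
    for name, weight in pool:
        if slot < weight:
            return name
        slot -= weight
    raise RuntimeError("unreachable for a non-empty pool")


class RoundRobin:
    """ROUND_ROBIN: each member appears in the cycle as many times as its weight."""

    def __init__(self, pool: Pool):
        self.cycle = [name for name, weight in pool for _ in range(weight)]
        self.position = 0

    def next(self) -> str:
        name = self.cycle[self.position % len(self.cycle)]
        self.position += 1
        return name


def ip_hash(pool: Pool, src_ip: str, dst_ip: str) -> str:
    """IP_HASH: the same source/destination pair always lands on the same member."""
    return pick_by_weight(pool, stable_hash(f"{src_ip}>{dst_ip}"))


def least_conn(active: Dict[str, int]) -> str:
    """LEASTCONN: pick the member with the fewest current connections."""
    return min(active, key=active.__getitem__)


def uri_hash(pool: Pool, uri: str) -> str:
    """URI: only the part before the question mark is hashed."""
    return pick_by_weight(pool, stable_hash(uri.split("?", 1)[0]))


def header_hash(pool: Pool, headers: Dict[str, str], name: str, rr: RoundRobin) -> str:
    """HTTPHEADER: hash the header value; fall back to round robin if it is empty."""
    value = headers.get(name)
    return pick_by_weight(pool, stable_hash(value)) if value else rr.next()


def url_param_hash(pool: Pool, url: str, param: str, rr: RoundRobin) -> str:
    """URL: hash the value of a query parameter such as a user ID."""
    values = parse_qs(urlsplit(url).query).get(param)
    # Falling back to round robin when the parameter is absent is an assumption.
    return pick_by_weight(pool, stable_hash(values[0])) if values else rr.next()
```

Because every hash-based function goes through the same `pick_by_weight` helper, a member with a larger weight owns a proportionally larger share of the hash space.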


- In the Members block, click + to add servers to the pool.

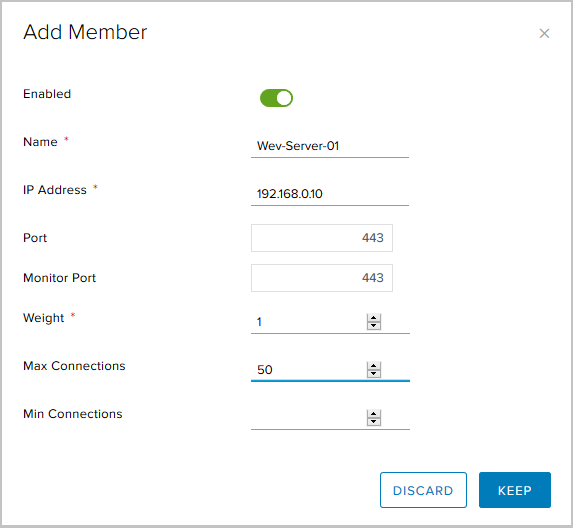

Here you need to specify:

- the server name;

- the server IP address;

- the port on which the server will receive traffic;

- the port for health checks (Monitor healthcheck);

- Weight - adjusts the proportional amount of traffic a specific pool member receives;

- Max Connections - the maximum number of connections to the server;

- Min Connections - the minimum number of connections the server must process before traffic is redirected to the next pool member.
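One way to see how Weight and Max Connections interact is a weighted least-connections sketch. This is a swapped-in technique for illustration, not the pool's ROUND_ROBIN algorithm; the `Member` fields loosely mirror the dialog fields above, treating `max_conn = 0` as unlimited is an assumption, and Min Connections is left out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Member:
    name: str
    ip: str
    port: int
    weight: int = 1
    max_conn: int = 0  # 0 means "no limit" in this sketch (an assumption)
    active: int = 0    # connections currently open to this member

    def has_capacity(self) -> bool:
        return self.max_conn == 0 or self.active < self.max_conn


def pick_member(members: List[Member]) -> Optional[Member]:
    """Weighted least connections over the members that are below Max Connections."""
    candidates = [m for m in members if m.has_capacity()]
    if not candidates:
        return None  # every member is full
    # A weight-2 member is "as loaded" as a weight-1 member at half its connections.
    return min(candidates, key=lambda m: m.active / m.weight)


def open_connection(members: List[Member]) -> Optional[Member]:
    member = pick_member(members)
    if member is not None:
        member.active += 1
    return member
```

With web1 at weight 2 and Max Connections 2, it receives twice the share until it hits its cap, after which all new connections go to web2.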

This is what the final pool of three servers looks like.

Add Virtual Server

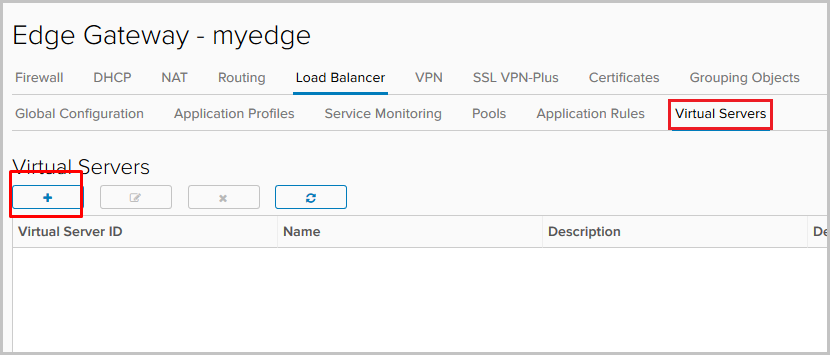

- Go to the Virtual Servers tab. Click +.

- Activate the virtual server with the Enable Virtual Server option.

Give it a name, select the previously created Application Profile and Pool, and specify the IP address on which the Virtual Server will accept requests from outside. Specify the HTTPS protocol and port 443.

The optional parameters here are:

Connection Limit - the maximum number of simultaneous connections the virtual server can handle;

Connection Rate Limit (CPS) - the maximum number of new incoming connection requests per second.
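The two optional limits can be sketched as a counter of open connections plus a sliding one-second window for CPS. The class name and the exact window semantics are assumptions; NSX may count differently.

```python
import time
from collections import deque
from typing import Deque, Optional


class VirtualServerLimits:
    """Sketch of Connection Limit and Connection Rate Limit (CPS) checks."""

    def __init__(self, connection_limit: int, rate_limit_cps: int):
        self.connection_limit = connection_limit
        self.rate_limit_cps = rate_limit_cps
        self.open_connections = 0
        self.recent: Deque[float] = deque()  # accept times within the last second

    def try_accept(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Forget connections accepted one second ago or earlier.
        while self.recent and now - self.recent[0] >= 1.0:
            self.recent.popleft()
        if self.open_connections >= self.connection_limit:
            return False  # too many simultaneous connections
        if len(self.recent) >= self.rate_limit_cps:
            return False  # too many new connections in the last second
        self.recent.append(now)
        self.open_connections += 1
        return True

    def close(self) -> None:
        self.open_connections = max(0, self.open_connections - 1)
```

The two checks are independent: a burst can hit the CPS limit while few connections are open, and long-lived connections can hit the connection limit at a low rate.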

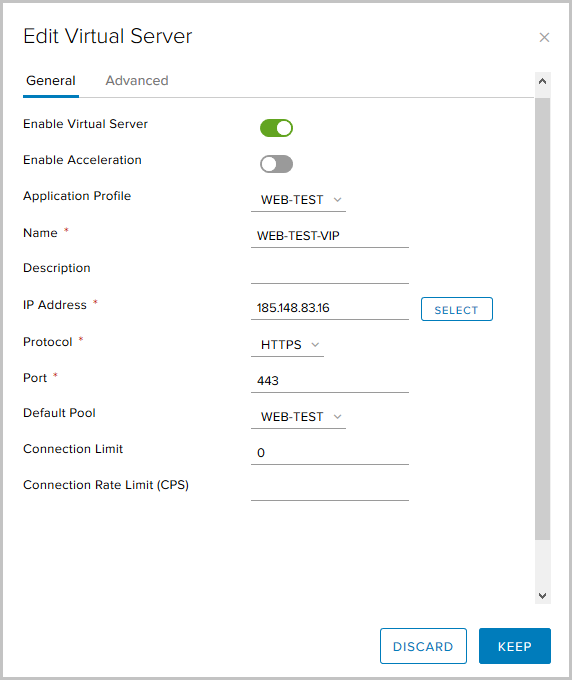

This completes the balancer configuration, and we can check that it works. The servers have the simplest possible configuration, which shows which server in the pool processed the request. During the setup, we selected the Round Robin balancing algorithm, and the Weight of each server is 1, so each subsequent request will be processed by the next server in the pool.

Enter the external address of the balancer in the browser and we see:

After the page is refreshed, the request is processed by the next server:

And once more, to check the third server in the pool:

When checking, you can see that the certificate Edge presents is the same one we generated at the very beginning.

Check the status of the balancer from the Edge gateway console. To do this, enter `show service loadbalancer pool`.
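To compare certificates more precisely than by eye in the browser, you can compute the SHA-256 fingerprint of the certificate the VIP serves. The sketch below uses only the Python standard library; the function names and the VIP address in the usage note are hypothetical, and it assumes you have a PEM copy of the Edge certificate to compare against.

```python
import hashlib
import ssl


def certificate_fingerprint(pem: str) -> str:
    """SHA-256 fingerprint of a PEM certificate, in the usual AA:BB:... form."""
    der = ssl.PEM_cert_to_DER_cert(pem)
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def served_fingerprint(vip: str, port: int = 443) -> str:
    # Without ca_certs, no CA verification is done, so a self-signed
    # certificate is fetched without errors.
    pem = ssl.get_server_certificate((vip, port))
    return certificate_fingerprint(pem)
```

For example, `served_fingerprint("203.0.113.10")` should return the same value as `certificate_fingerprint()` applied to the certificate generated on Edge.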

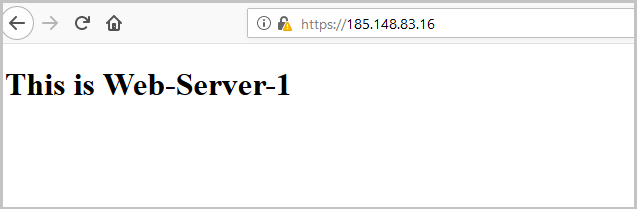

Configure Service Monitor to check the status of servers in the pool

Using Service Monitor, we can monitor the status of the servers in the backend pool. If the response to a request does not match what was expected, the server can be taken out of the pool so that it receives no new requests.

By default, three verification methods are configured:

- TCP monitor;

- HTTP monitor;

- HTTPS monitor.

Let's create a new one.

- Go to the Service Monitoring tab, click +.

- Choose:

- a name for the new method;

- the interval at which requests will be sent;

- the response timeout;

- the monitoring type: an HTTPS request using the GET method, the expected status code 200 (OK), and the request URL.

- This completes the configuration of the new Service Monitor; now we can use it when creating a pool.
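The HTTPS monitor configured above boils down to a periodic GET with a timeout and an expected status code. Here is a minimal standard-library sketch of a single probe and a few monitoring rounds; the function names and the 5 s interval / 15 s timeout values are illustrative assumptions, and certificate verification is disabled because the test servers use self-signed certificates.

```python
import ssl
import time
import urllib.error
import urllib.request
from typing import Dict, List


def https_get_monitor(url: str, expected_status: int = 200, timeout: float = 15.0) -> bool:
    """One probe: GET the URL and compare the status code with the expected one."""
    context = ssl.create_default_context()
    # The lab servers use self-signed certificates, so verification is disabled.
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout, context=context) as response:
            return response.status == expected_status
    except urllib.error.HTTPError as error:
        # Non-2xx statuses arrive as HTTPError; they count only if expected.
        return error.code == expected_status
    except OSError:
        return False  # refused, timed out, TLS failure: treat the member as down


def run_monitor(urls: List[str], interval: float = 5.0, timeout: float = 15.0,
                rounds: int = 3) -> Dict[str, bool]:
    """Probe every URL once per interval and return the latest status of each."""
    status: Dict[str, bool] = {}
    for round_number in range(rounds):
        status = {url: https_get_monitor(url, timeout=timeout) for url in urls}
        if round_number < rounds - 1:
            time.sleep(interval)
    return status
```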

Configure Application Rules

Application Rules are a way to manipulate traffic based on specific triggers. With this tool, we can create advanced load balancing rules that may not be possible to configure through Application profiles or other services available on the Edge Gateway.

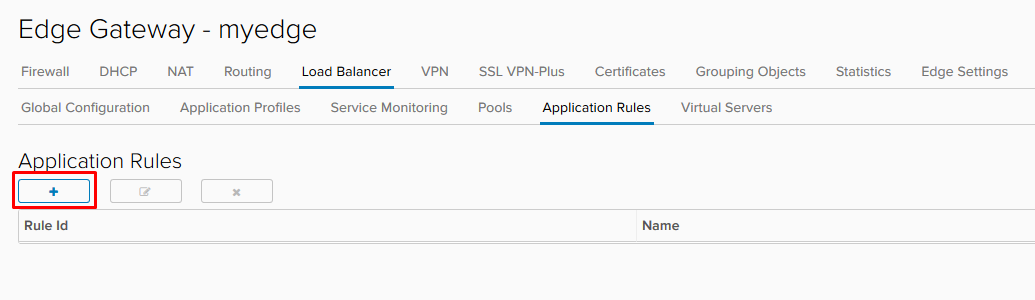

- To create a rule, go to the Application Rules tab of the balancer.

- Enter a name and the script the rule will use, and click Keep.

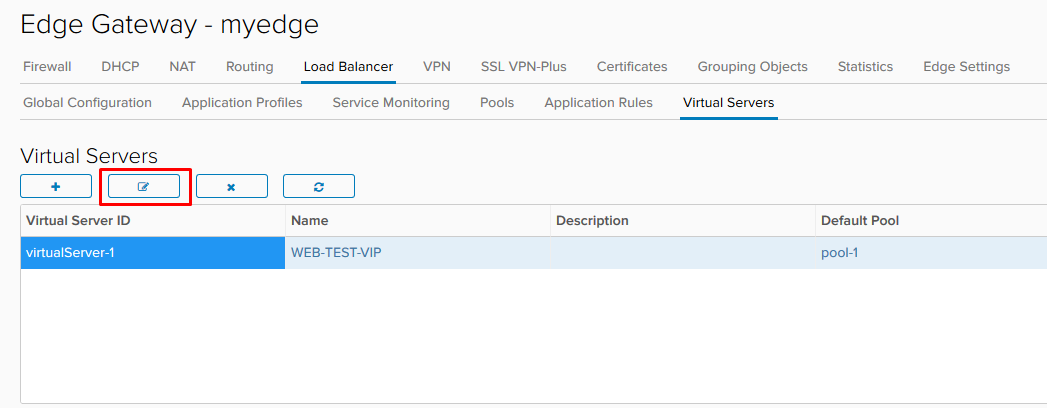

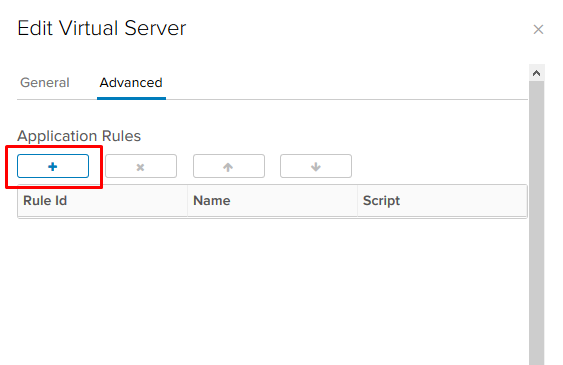

- After the rule is created, we need to edit the already configured Virtual Server.

- In the Advanced tab, add the rule we created.

In the example above, we have enabled TLSv1 support.

A couple more examples:

Redirecting traffic to another pool.

With this script, we can redirect traffic to another balancing pool if the main pool is down. For the rule to work, several pools must be configured on the balancer, and all members of the main pool must be in the down state. Specify the pool's name, not its ID.

acl pool_down nbsrv(PRIMARY_POOL_NAME) eq 0

use_backend SECONDARY_POOL_NAME if pool_down
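The logic of this failover rule can be mirrored in Python to show what `nbsrv()` checks. This is a hypothetical model for illustration, not how NSX evaluates rules; the pool and server names are placeholders.

```python
from typing import Dict, List


def nbsrv(pool: List[str], up: Dict[str, bool]) -> int:
    """Number of usable servers in a pool, like HAProxy's nbsrv() fetch."""
    return sum(1 for server in pool if up.get(server, False))


def choose_backend(pools: Dict[str, List[str]], up: Dict[str, bool],
                   primary: str, secondary: str) -> str:
    # acl pool_down nbsrv(PRIMARY_POOL_NAME) eq 0
    pool_down = nbsrv(pools[primary], up) == 0
    # use_backend SECONDARY_POOL_NAME if pool_down; otherwise the default pool serves
    return secondary if pool_down else primary
```

As long as at least one member of the primary pool is up, traffic stays there; the secondary pool takes over only when the count drops to zero.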

Redirecting traffic to an external resource.

Here we redirect traffic to an external website if all members of the main pool are in the down state.

acl pool_down nbsrv(NAME_OF_POOL) eq 0

redirect location http://www.example.com if pool_down

More examples here.

That's all about the balancer. If you have any questions, ask; I'm ready to answer.