Why silicon and why CMOS?

The very first transistor was a bipolar germanium device, but the vast majority of modern integrated circuits are made of silicon using CMOS (complementary metal-oxide-semiconductor) technology. How did silicon become the dominant material among the many known semiconductors? Why has CMOS become almost the only technology in use? Were there processors built on other technologies? And what awaits us in the near future, now that the physical limit of MOS transistor miniaturization has effectively been reached?

If you want to know the answers to all these questions, read on. At the request of readers of previous articles, a warning: there is a lot of text, about half an hour's worth.

At the turn of 1947 and 1948, John Bardeen and Walter Brattain, working under William Shockley at Bell Labs, were studying the field distribution in germanium diodes and accidentally discovered the transistor effect. Although the potential usefulness of the discovery looked obvious (urban legend, however, has it that the discovery was declassified after military experts saw no practical use in it), the first transistor looked like this:

Figure 2. Replica of the first transistor

Not much like a device suited to industrial production, is it? It took two years to turn the capricious point-contact bipolar transistor into a junction transistor built from pn junctions, which was far more convenient to manufacture, and after that the days (well, not days, but years) of vacuum tubes in mass-market electronics were numbered.

Of the three pioneers of the transistor, only Shockley continued to work on transistors. He had little to do with the original work (he was a theoretician and the boss, not an experimenter), but he took all the fame for himself and quarreled with Bardeen and Brattain so badly that they never worked in microelectronics again. Brattain went on to study electrochemistry, and Bardeen superconductivity, for which he received his second Nobel Prize, becoming the only person in history with two prizes in physics.

Shockley, having successfully broken up his research team with his ambitions, left Bell Labs and founded his own Shockley Semiconductor Laboratory. The working climate there also left much to be desired, which led to the emergence of the famous “traitorous eight”, who fled from Shockley and founded Fairchild Semiconductor. Fairchild, in turn, became the parent of what we now know as Silicon Valley, including companies such as Intel, AMD, and Intersil.

Figure 3. “Fairchildren”: companies founded by former Fairchild employees

Shockley himself never recovered from the betrayal of the eight and went downhill: he was fired from his own company, got carried away with racism and eugenics, became an outcast in the scientific community, and died forgotten by everyone. Even his children learned of his death from the newspapers.

The history of the invention of the transistor is widely known and much described. It is much less well known that the first patent application for a transistor was filed not in 1947 but more than twenty years earlier, in 1925, by Julius Lilienfeld, an American of Austro-Hungarian origin. Moreover, unlike the 1947 bipolar transistor, the devices described in Lilienfeld's patents were field-effect devices: the patent granted in 1930 describes a MESFET with a metal gate, and the 1933 patent a MOSFET almost the same as we know it today. Lilienfeld intended to use aluminum for the gate and aluminum oxide as the gate dielectric.

Unfortunately, the level of technology at the time did not allow Lilienfeld to realize his ideas in prototypes, but experiments carried out by Shockley in 1948 (by then on his own) showed that Lilienfeld's patents described devices that could work in principle. In fact, all the work of Shockley's group on the properties of diodes, which led to the accidental invention of the bipolar transistor, was part of research aimed at a field-effect transistor, which is much more similar in its properties to vacuum tubes and was therefore more understandable to physicists of those years. Nevertheless, despite the successful confirmation that Lilienfeld's ideas were workable, in 1948 there was still no technology for the stable production of thin, defect-free dielectric films, while the bipolar transistor turned out to be easier to manufacture and more commercially promising.

A moment of terminology

A bipolar transistor is a transistor that needs both types of charge carriers, electrons and holes, to operate, and that is controlled by the base current (multiplying it by the transistor's gain). It is usually made using pn junctions or heterojunctions, although the very first transistor, while bipolar, was not a junction transistor. The popular English acronym is BJT, bipolar junction transistor.

For transistors based on heterojunctions (junctions between different materials, for example gallium arsenide and aluminum gallium arsenide), the acronym HBT (heterojunction bipolar transistor) is used.

A unipolar or field-effect transistor (FET) is a transistor whose operation is based on the field effect and requires only one type of charge carrier. A field-effect transistor has a channel controlled by the voltage applied to the gate. Field-effect transistors come in quite a few varieties.

The familiar MOS transistor, or MOSFET, is a transistor whose gate is isolated from the channel by a dielectric, usually an oxide, forming a metal-oxide-semiconductor structure. If the dielectric is not an oxide, the device may be called a MISFET (I for insulator) or, in Russian-language literature, an MDP transistor (D for dielectric).

A JFET (J for junction) is a transistor with a control pn junction. In such a transistor, the field that pinches off the channel is created by applying a voltage to the control pn junction.

A Schottky-gate field-effect transistor, or MESFET (ME for metal; PTSh in Russian-language literature), is a kind of JFET that uses a Schottky barrier (between a semiconductor and a metal) instead of a pn junction as its control junction. The barrier has a lower voltage drop and a higher operating speed.

A HEMT (high electron mobility transistor) is an analog of the JFET and MESFET that uses a heterojunction. Such transistors are the most popular ones in compound semiconductors.

Figure 4. BJT, MOSFET, JFET

The first transistor was made of germanium, but technologists at various companies quickly switched to silicon. The reason is that pure germanium is actually rather poorly suited to electronic applications (although germanium transistors are still used in vintage-style audio equipment). Germanium's advantages include the high mobility of electrons and, most importantly, of holes, as well as a pn junction turn-on voltage of 0.3 V versus 0.7 V for silicon, although the latter advantage can be evened out by using Schottky junctions (as was done in Schottky TTL logic). But because of its smaller band gap (0.67 eV versus 1.14 eV), germanium diodes have large reverse currents that grow strongly with temperature, which limits both the temperature range of germanium circuits and their permissible power levels (at low power the influence of reverse currents is too great, at high power self-heating starts to interfere). To top off germanium's temperature problems, its thermal conductivity is much lower than that of silicon, so it is harder to remove heat from power transistors.
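To get a feel for how much the band gap matters, here is a minimal sketch that compares only the Boltzmann factor exp(-Eg / 2kT), which dominates the intrinsic carrier concentration and therefore the leakage. It uses the band gaps quoted above and assumes that they do not change with temperature and that all material-dependent prefactors can be ignored, so the result is an order-of-magnitude illustration rather than a device model. The function names are made up for this example.

```python
import math

K_B_EV_PER_K = 8.617333e-5  # Boltzmann constant in eV/K


def boltzmann_factor(band_gap_ev: float, temp_k: float) -> float:
    """Return exp(-Eg / (2 k T)), the factor that dominates intrinsic carrier density."""
    return math.exp(-band_gap_ev / (2.0 * K_B_EV_PER_K * temp_k))


def ge_to_si_ratio(temp_k: float, eg_ge: float = 0.67, eg_si: float = 1.14) -> float:
    """Ratio of the germanium and silicon Boltzmann factors at one temperature.

    Assumption: band gaps are temperature-independent and prefactors cancel.
    """
    return boltzmann_factor(eg_ge, temp_k) / boltzmann_factor(eg_si, temp_k)


if __name__ == "__main__":
    for temp_k in (300.0, 350.0, 400.0):
        ratio = ge_to_si_ratio(temp_k)
        print(f"T = {temp_k:.0f} K: Ge/Si factor ratio ~ {ratio:.0f}")
```

Under these assumptions the germanium factor comes out thousands of times larger than the silicon one at room temperature, which is consistent with the much larger reverse currents described above.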

In the early period of semiconductor electronics, germanium devices also had serious yield problems because of the difficulty of obtaining pure crystalline germanium free of screw dislocations, and because of poor surface quality: unlike silicon, germanium is not protected from external influences by an oxide. More precisely, germanium does have an oxide, but its crystal lattice matches the lattice of pure germanium much worse than silicon oxide matches silicon, which leads to an unacceptably large number of surface defects. These defects seriously reduce carrier mobility, negating germanium's main advantage over silicon. And, to top it off, germanium oxide reacts with water, both during chip manufacturing and during operation. The other semiconductors, however, were even less fortunate: they have no usable oxide at all.

Trying to solve the problem of germanium's poor surface, which prevented a field-effect transistor from being made, Shockley came up with the idea of moving the channel deep into the semiconductor. This is how the field-effect transistor with a control pn junction, the JFET, came about. These transistors quickly found their place in analog circuits, primarily because of their very small input current (compared with bipolar transistors) and good noise characteristics. This combination makes the JFET an excellent choice for the input stage of an operational amplifier, as can be seen, for example, in this article by Ken Shirriff. Moreover, when integrated circuits began to replace discrete components, it turned out that JFETs are quite compatible with bipolar technology (in the figure above I even drew the JFET as made from a bipolar transistor), and they became commonplace in analog bipolar manufacturing processes. But all this was already on silicon, and germanium remained forgotten for many years, until the time came for it to strengthen silicon's position instead of fighting it. But more on that later.

What about MOS transistors? Seemingly forgotten for almost a decade because of the rapid progress of their bipolar counterparts, they nevertheless kept developing. At the same Bell Labs, in 1959, Dawon Kahng and Martin Atalla created the first working MOS transistor. On the one hand, it almost directly realized Lilienfeld's idea; on the other, it immediately turned out to be almost identical to many later generations of transistors that use silicon oxide as the gate dielectric. Unfortunately, Bell Labs did not recognize the commercial potential of the invention at the time: the prototype was significantly slower than the bipolar transistors of the day. But the potential of the novelty was recognized by the Radio Corporation of America (RCA) and Fairchild, and as early as 1964 MOS transistors hit the market. They were slower than their bipolar counterparts, had lower gain, were noisy and suffered badly from electrostatic discharge, but they had zero input current, low output resistance and excellent switching capabilities. That is not much, but it was only the beginning of a very long journey.

In the early stages of semiconductor electronics, analog and radio-frequency applications dominated: for a long time the word “transistor” meant not only the transistor itself but also a radio receiver based on it. Digital computers built from chips containing one or two gates were huge (although nothing like their vacuum-tube predecessors), so there were even attempts to do calculations in analog form: a single operational amplifier can integrate or differentiate in place of a whole scattering of digital chips. But digital computing turned out to be more convenient and practical, and so began the era of digital electronic computers, which continues today (although quantum computing and neural networks have already achieved significant success).

The main advantage of the MOS technology of that time was simplicity (recall that until the eighties every microelectronics company had to run its own fab): the simplest working nMOS or pMOS circuit needs only four photolithography steps and CMOS needs six, while a bipolar circuit needs seven lithography steps for just one type of transistor, plus more precise control of diffusion and, ideally, epitaxy. The big minus was speed: MOS transistors were more than an order of magnitude slower than bipolar transistors and JFETs. At a time when CMOS could reach 5 MHz, ECL could reach 100-200 MHz. Analog applications were out of the question: MOS transistors were very poorly suited to them because of their low speed and low gain.

While the degree of integration was low and nobody paid much attention to power consumption, the advantage of emitter-coupled logic (ECL) for high-performance applications was obvious, but MOS technology had trump cards up its sleeve that came into play a little later. In the sixties, seventies and eighties, MOS and bipolar manufacturing processes developed in parallel: MOS was used exclusively for digital circuits, while bipolar technology was used both for analog circuits and for logic based on the TTL (transistor-transistor logic) and ECL families.

Figure 5. Cray-1, the first Seymour Cray supercomputer, introduced to the public in 1975, weighed 5.5 tons, consumed 115 kW and delivered 160 MFLOPS at 80 MHz. It was built from four types of ECL chips and contained about 200 thousand gates. The chip on which the logic was built is the Fairchild 11C01, a dual gate containing a 4-input NOR and a 5-input NOR element and drawing 25-30 mA from a -5.2 V supply.

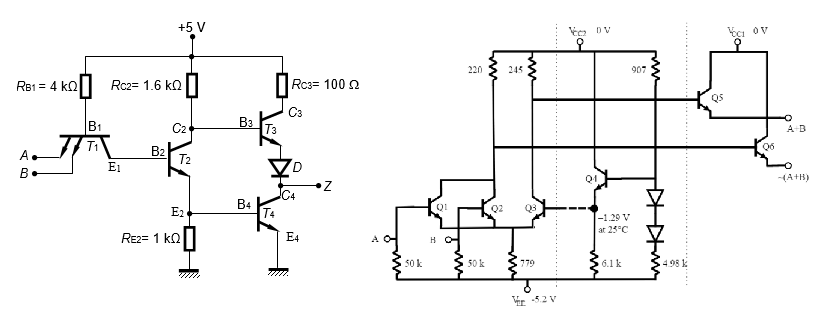

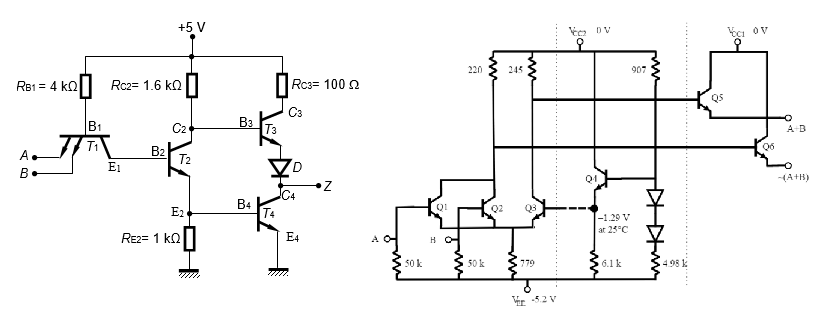

Figure 6. A 2-input NAND gate in TTL and a 2-input OR/NOR gate in ECL

Note that the ECL logic element is simply a feedback amplifier arranged so that the switching transistors always stay in the “fast” linear mode and never enter the “slow” saturation mode. The price of speed is the current that flows through the circuit continuously, regardless of the operating frequency and the state of the inputs and outputs. Amusingly, some time ago people began trying to turn this drawback into an advantage: because the consumed current is constant, cryptographic circuits built on ECL are much more resistant to attacks that “listen” to the supply current than CMOS circuits, where the consumed current is proportional to the number of gates switching at a given moment. If the bipolar transistors are replaced with field-effect transistors (JFETs or MESFETs), the result is SCL, source-coupled logic.

An obvious advantage of nMOS or pMOS logic is its simplicity of manufacture and small number of transistors, which means a small area and the ability to place more elements on a chip. For comparison, a 2-input NAND or 2-input NOR gate consists of three transistors in nMOS/pMOS and four in CMOS. In TTL, these gates contain 4-6 transistors, 1-3 diodes and 4-5 resistors; in ECL, 4 transistors and 4 resistors (and in ECL it is convenient to build OR and NOR but inconvenient to build AND and NAND). Note, by the way, that all the transistors in the TTL and ECL circuits are npn. This is because a pnp transistor is harder to make in a p-substrate than an npn one, and its structure is different, unlike CMOS technology, where the two transistor types are almost identical. In addition, both pMOS and bipolar pnp transistors, which rely on holes, are slower than their electron-based counterparts, which means in bipolar logic,

The second important advantage of MOS technology, which showed itself fully with the transition to CMOS and largely determined that technology's dominance, is low power consumption. A CMOS gate consumes energy only while switching and has no static power consumption (this is no longer true of modern processes, but we will skip the details). The typical operating current of an ECL gate is from 100 μA to 1 mA (0.5-5 mW from a 5.2 V supply). Multiply that by, say, the billion gates that make up a modern Intel processor and you get megawatts. You have already seen the power consumption of the Cray-1 above. In the eighties, however, the numbers in question were usually thousands or tens of thousands of gates, which in theory made it possible to stay within a reasonable power budget even with bipolar logic. In practice, however,
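The multiplication in the paragraph above can be written out as a short sketch. It uses only the figures quoted in the text (100 μA to 1 mA per ECL gate, a 5.2 V supply) and assumes that every gate draws its static current all the time; the function name is hypothetical.

```python
def static_power_w(gates: float, current_per_gate_a: float, supply_v: float = 5.2) -> float:
    """Total static power of a block of ECL gates, in watts.

    Assumption: every gate draws the same constant current from the supply.
    """
    return gates * current_per_gate_a * supply_v


if __name__ == "__main__":
    cases = (("tens of thousands of gates", 1e4), ("a billion gates", 1e9))
    for label, gates in cases:
        low = static_power_w(gates, 100e-6)   # 100 uA per gate
        high = static_power_w(gates, 1e-3)    # 1 mA per gate
        print(f"{label}: {low:,.1f} W to {high:,.1f} W")
```

For ten thousand gates the range stays within tens of watts, while for a billion gates it lands between roughly half a megawatt and several megawatts, which is the point the text is making.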

The Intel 8008 (1972), built on a ten-micron pMOS process, ran at 500 kHz (versus 80 MHz for the much more complex Cray-1 system); the Intel 8086 (1978), on three-micron nMOS and later CMOS, reached 10 MHz; and the original 80486 (1989) reached 50 MHz.

What made designers keep trying bipolar designs, despite the rapidly shrinking gap between them and CMOS, and despite the power consumption? The answer is simple: speed. In the early days, an additional huge advantage of ECL was its minimal loss of performance when driving large capacitive loads or long lines, so a system assembled from many packages of ECL logic was much faster than one assembled from CMOS or TTL. Higher integration allowed CMOS to partially overcome this drawback, but computer systems were still multi-chip, and every signal that left the die (for example, to an external cache) slowed everything down. Even in the late eighties bipolar gates were still significantly faster, for example thanks to a voltage difference between logic zero and logic one that was several times smaller (600-800 mV for ECL versus 5 V for CMOS), and this at a time when transistor sizes in bipolar technologies had already begun to lag behind CMOS. But while CMOS scaling proceeded in such a way that the power per unit of chip area stayed constant (this phenomenon is a “consequence” of Moore's law and is called “Dennard scaling”), the power of ECL hardly fell at all, because fast operation requires static operating currents. As a result, digital designers began to prefer CMOS for implementing increasingly sophisticated computer architectures, even where higher performance was needed.
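The contrast between the two scaling behaviors can be illustrated with a small sketch. It assumes ideal Dennard scaling by a factor k (capacitance and supply voltage shrink by k, frequency grows by k, area shrinks by k squared) and the textbook dynamic power formula P = C·V²·f for a CMOS gate. For ECL it assumes, as a simplification of "hardly fell at all", that the static current and supply do not change. All numeric values and function names are illustrative, not taken from any real process.

```python
def cmos_dynamic_power_w(cap_f: float, vdd_v: float, freq_hz: float) -> float:
    """Dynamic power of one CMOS gate that switches every cycle: P = C * V^2 * f."""
    return cap_f * vdd_v ** 2 * freq_hz


def scaled_cmos_density(cap_f: float, vdd_v: float, freq_hz: float,
                        area_m2: float, k: float) -> float:
    """Power density (W/m^2) of a CMOS gate after ideal Dennard scaling by k."""
    power = cmos_dynamic_power_w(cap_f / k, vdd_v / k, freq_hz * k)
    return power / (area_m2 / k ** 2)


def scaled_ecl_density(current_a: float, supply_v: float,
                       area_m2: float, k: float) -> float:
    """Power density of an ECL gate whose static current is assumed not to scale."""
    return current_a * supply_v / (area_m2 / k ** 2)


if __name__ == "__main__":
    # Hypothetical starting point: 10 fF, 5 V, 10 MHz, 1000 um^2 per gate.
    cap, vdd, freq, area = 10e-15, 5.0, 10e6, 1e-9
    for k in (1.0, 2.0, 4.0):
        cmos = scaled_cmos_density(cap, vdd, freq, area, k)
        ecl = scaled_ecl_density(1e-3, 5.2, area, k)
        print(f"k = {k:.0f}: CMOS {cmos:.3g} W/m^2, ECL {ecl:.3g} W/m^2")
```

Under these assumptions the CMOS power density stays the same for every k, while the ECL density grows as k squared, so packing ECL gates more tightly only makes heat removal harder.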

Help for digital bipolar technology came from an unexpected quarter. In the early eighties the RISC concept was invented, which implies a significant simplification of the microprocessor and a reduction in the number of elements in it. Bipolar technologies lagged somewhat behind CMOS in degree of integration, because bipolar LSI circuits were mostly analog and there was no great reason to chase Moore's law. Nevertheless, the beginning of RISC development coincided with the moment when it became realistic to fit an entire processor on one chip, or at least on two or three (the cache was usually external). In 1989 the Intel 80486 was released, with the FPU on the same chip as the main processor; it was the first chip to use more than a million transistors.

By that time, many chip makers had begun switching to the fabless model, handing production over to other companies. One of these manufacturing companies ended up developing integrated ECL microprocessors. The company was called Bipolar Integrated Technology and was never particularly successful, from its founding in 1983 to its sale to PMC-Sierra in 1996. There is a suspicion that the reason for the failure was precisely the bet on bipolar digital products, but in the late eighties this was not so obvious, and the company had bipolar processes that were advanced in feature size and degree of integration. Their first product of their own was an FPU coprocessor chip, and BIT actively collaborated with two RISC pioneers, MIPS Computer Systems and Sun Microsystems, to create chips based on RISC architectures for which this coprocessor would be useful. The first implementation of the MIPS II architecture, the R6000, R6010 and R6020 chipset, was built on ECL and produced at BIT's facilities. They also produced the SPARC B5000 processor.

Somewhat later, DEC implemented MIPS II on a single chip using Motorola's bipolar technology. So, imagine: the year is 1993, and Intel's flagship product is the Pentium (800 nm CMOS process, 66 MHz clock, 15 W TDP, three million transistors on a chip). The IEEE Journal of Solid-State Circuits publishes an article entitled “A 300-MHz 115-W 32-b Bipolar ECL Microprocessor”. Three hundred (!) megahertz and one hundred and fifteen (!!!!) watts. A separate article, of course, was devoted to the package and heat sink of this monster. I highly recommend reading both articles if you have access to the IEEE library. They are an excellent document of an era in which phrases like “the chip was designed largely with CAD tools developed by members of the design team” and “circuit performance has been increased significantly by using different signal swings in different applications, and by using circuit topologies (such as low-swing cascode and wired-OR circuits)” appear. OK, CAD: in 1993 only the lazy did not write their own (ask YuriPanchul, he will confirm), but wired-OR!

Figure 7. Photograph of a DEC processor chip and its heatsink

Gallium arsenide was one of the first compound semiconductors to attract the attention of the microelectronics industry. Its main advantage over both germanium and silicon is its enormous electron mobility. It also has a fairly wide band gap, which allows operation at high temperatures. The ability to work at hundreds of MHz or even several GHz at a time when tens of MHz could barely be squeezed out of silicon: is this not a dream? Gallium arsenide was long considered the “material of the future” that was about to replace silicon. The first MESFET on it was created in 1966, and the last active attempts to build LSI circuits on it were made in the mid-nineties at Cray Computer Corporation (which this finally buried) and at Mikron (the K6500 series of chips).

An important problem that had to be solved was the absence of a native oxide in gallium arsenide. But is this really a problem? After all, if there is no oxide, there are no problems with radiation hardness! It was for this reason that the development of gallium arsenide technology was heavily funded by the military. The radiation hardness results were indeed excellent, but the technology itself turned out to be more complicated. The need to use JFETs means either using SCL (fast, but power-hungry) or using JFETs in place of MOSFETs in an imitation of nMOS logic (simpler, but not as fast and still rather power-hungry). Another nuisance: if nothing special is done, JFETs on gallium arsenide come out normally on, that is, their threshold voltage is below zero, which means more power consumption than with MOSFETs. To make normally-off transistors, technologists have to try quite hard. However, this problem was solved relatively quickly, and E/D JFET technologies with normally-off (E, enhancement) active transistors and normally-on (D, depletion) load transistors came into wide use in GaAs logic. Another initially underestimated drawback is that gallium arsenide has a very high mobility of electrons, but not of holes. You can do a lot of interesting things with nJFETs (high-frequency amplifiers, for example), but at 1 mW per gate it is hard to talk about VLSI, and if you build low-power complementary circuits, the low hole mobility makes them even slower than silicon ones.

And again, as with bipolar circuits, the RISC concept, in the form of the same MIPS architecture, came to the aid of the military, who badly wanted radiation hardness. In 1984 DARPA signed three contracts for the development of GaAs MIPS microprocessors: with RCA, with McDonnell Douglas and with the CDC-TI collaboration. One of the important requirements of the specification was a limit of 30 thousand transistors, with the wording “so that processors can be mass-produced with an acceptable yield”. In addition, there were gallium arsenide conversions of AMD's Am2900 family, radiation-hardened gallium arsenide versions of the legendary 1802 microprocessor from the same RCA, gate arrays of several thousand gates and static memory chips of several kilobits.

A little later, in 1990, the MIPS architecture was also considered for space applications in Europe, but SPARC was chosen there; otherwise LEON could have been MIPS too. By the way, ARM was also a candidate architecture for the future LEONs, but it was rejected because of poor software support. As a result, the first European space ARM processor will appear only next year. If you are interested in space processors and architectures for them, here is a link to an article by Maxim Gorbunov of NIISI RAS about space processors in general and about the KOMDIV processors in particular. The link, as befits a scientist, is to a peer-reviewed Elsevier journal.

The most interesting solution, in my opinion, came from the McDonnell Douglas group. I traced the history of their project through publications in IEEE Transactions on Nuclear Science (search for the surname Zuleeg), from the first transistors in 1971 to their own complementary JFET technology and chips based on it in 1989. Why complementary? Because most of a microprocessor (in terms of both transistor budget and power budget) is cache memory, and the delay of the memory cell itself is by no means always the factor that limits speed, whereas the gain in power consumption from a complementary cell is obvious. By combining a complementary cache with nJFET logic, McDonnell Douglas got an excellent ratio of speed to power consumption, with radiation hardness literally thrown in as a bonus, without any additional effort.

And everything would have been fine, except that at the time when gallium arsenide microprocessors counted their transistors in tens of thousands, relatively inexpensive silicon CMOS chips with millions of transistors were already available on the commercial market, and the gap was not shrinking but continuing to grow. The developers of the “material of the future”, among numerous accounts of their achievements, wrote in some articles phrases such as “a yield of 3%, that is, one good chip per 75 mm wafer” or “if we reduce the defect density to such-and-such a level, we can raise the yield from 1% to 10%”, and similar figures appear in the work of unrelated research groups from different countries. The capriciousness of gallium arsenide and the fragility of its crystal lattice, which hinders the growth of large-diameter crystals and limits doping levels, are well known, and this, combined with the desire to minimize the number of transistors on a microprocessor chip, makes me think that such a low yield really was the norm for gallium arsenide, not only in the laboratory but also in serial production. Moreover, according to Soviet data, the final cost was practically independent of the complexity of the process, because the gallium arsenide wafers themselves cost more than any processing. It is not surprising that no one but the military was interested in such VLSI circuits.
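To see what a claim like "reduce the defect density and raise the yield from 1% to 10%" implies, here is a minimal sketch based on the simple Poisson yield model Y = exp(-A·D), where A is the die area and D is the density of killer defects. The choice of model is an assumption made for illustration; the quoted articles do not say which yield model their authors used, and the function names are hypothetical.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of good dies under the Poisson model: Y = exp(-A * D)."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


def defect_density_for_yield(die_area_cm2: float, target_yield: float) -> float:
    """Defect density (per cm^2) that gives the target yield for a given die area."""
    return -math.log(target_yield) / die_area_cm2


def defect_reduction_factor(yield_before: float, yield_after: float) -> float:
    """How many times D must drop to move between two yields (die area cancels out)."""
    return math.log(yield_before) / math.log(yield_after)


if __name__ == "__main__":
    factor = defect_reduction_factor(0.01, 0.10)
    print(f"Defect density must fall by a factor of {factor:.2f}")
```

In this model, going from 1% to 10% yield requires only halving the defect density, which shows how steeply yield depends on defects when most dies fail.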

So far the article has talked about the successes and failures of American companies, but microelectronics did not exist only in America, did it? Unfortunately, little can be told about the difficult path of Soviet microelectronics in choosing technologies. The first reason is that the history of American (and, for example, Japanese) developments is well documented in specialized IEEE journals whose archives are now digitized, and studying them is a real pleasure for the connoisseur. Soviet microelectronics, by contrast, was extremely closed throughout its history. There were few publications even in Russian, let alone reports of its successes to the whole world (as was done, for example, in fundamental physics). And even the little that was published is now very hard to find and, of course, exists only on paper, not in electronic form. That, by the way, is why I find it encouraging to see Russian colleagues at international scientific conferences and industry exhibitions today not only as guests but also as speakers. The second reason is that for most of its history Soviet microelectronics lagged behind the Americans, albeit not by much, and was actively engaged in copying successful Western developments. Moreover, from the early eighties, just when the most interesting things were beginning in the world, the USSR Ministry of Electronic Industry officially set a course toward abandoning original designs and copying American chips: production designs and methods rather than experimental ones. Perhaps, given limited resources, this was the right decision, but its result was a growing lag (ideological rather than technological).

As a result, although medium-scale-integration GaAs chips were used in the early nineties both in Cray supercomputers and in ES EVM computers, the USSR never had the RISC processors that played an important role in the final stages of the struggle between CMOS, ECL, and gallium arsenide. From a technological point of view, at the same time that the Americans were developing single-chip microprocessors, Mikron in Zelenograd put into serial production the K6500 series of gallium arsenide chips, which included memory of up to 16 kbit, gate arrays with up to ten thousand gates, and a five-chip microprocessor set - that is, chips as complex as the American processors. McDonnell Douglas, for its part, used normally-off JFETs of both conductivity types to imitate nMOS and CMOS circuits on GaAs; its inverters are shown next to the K6500 inverter in Figure 8.

Figure 8. Two variants of inverters from the McDonnell Douglas process technology and an inverter of K6500 series microcircuits.

Work on gallium arsenide continued at Mikron from 1984 until at least 1996, but I could not find any information about what happened after that. Today all of Mikron's developments, including radiation-hardened and radio-frequency ones, are made on silicon.

Developers of special-purpose silicon CMOS circuits, meanwhile, did not stand still; by the beginning of the nineties it became clear that achieving radiation hardness on a slightly modified commercial silicon CMOS process is not much harder than on expensive and capricious gallium arsenide. This deprived GaAs of its last important advantage and confined it to very narrow and specific niches - mainly discrete microwave and power devices. Moreover, even in these applications, gallium arsenide is increasingly giving way to gallium nitride or various heterostructures with better temperature characteristics, higher mobility and a higher breakdown field.

Figure 9. Comparison of the main properties of silicon, gallium arsenide and gallium nitride for power and microwave applications

So, you may ask, can VLSI be made on gallium nitride? Unfortunately, gallium nitride also has low hole mobility, and it is not alone in that. Only indium antimonide has radically higher hole mobility than silicon, but its band gap is so narrow that devices based on it can work only at cryogenic temperatures.

Do not get me wrong: other semiconductors are needed too, and they have many useful applications. When in 2000 the Nobel Committee finally decided to give a prize for electronics, Jack Kilby received one half for creating the first integrated circuit, and the other half went to Zhores Alferov and Herbert Kroemer for "developing semiconductor heterostructures used in high-frequency circuits and optoelectronics." It is already hard to imagine our life without heterostructure lasers; the markets for power devices based on gallium nitride and silicon carbide are growing by leaps and bounds (riding on the electrification of vehicles); and the rapid deployment of 5G networks operating at frequencies up to 39 GHz is impossible to imagine without III-V (A3B5) semiconductors. But only silicon CMOS technology turned out to have all the properties needed to build computational VLSI.

However, even silicon microelectronics is much broader than high-performance microprocessors alone. Right now TSMC, in parallel with bringing up its 5 nm process, is launching a new fab with 180 nm design rules on 200 mm wafers - because there is demand for them and it is growing steadily. Yes, this market is much smaller than the market for mobile phone chips, but the investment needed to enter it is also much more modest. The same can be said about the markets for silicon carbide and gallium nitride. And it is precisely compound semiconductors, microwave and power electronics that, in my humble opinion, can become a real driver for the revival of Russian microelectronics and its entry into the world market. In these areas the competencies and equipment of Russian companies are very strong and quite close to those of the world leaders. Everybody knows about 180, 90 and 65 nm at Mikron, but few have heard of 200 nm at Istok or 150 nm at Mikran. Few have heard that the STM fab in Catania, from which the 180 nm process at Mikron was copied, has now completely switched to producing silicon carbide, a market that should reach three billion dollars in five years. STM recently bought a SiC substrate manufacturer in order to own the entire production chain, and in general they are doing everything to become leaders in the growing market.

Articles from the late eighties and early nineties on promising technologies - ECL on silicon, complementary JFETs on GaAs, attempts to make germanium great again - almost invariably end with the words “we have shown that our idea has great prospects, and in literally a couple of years, when the technology develops a little more and allows more transistors on a chip / lower consumption / higher yield, we will conquer the world.” Except that the promised progress on DARPA money never came. Why? Because chip manufacturing technology gets more expensive with every shrink, and no research grants could outpace the volume of investment by Intel, which was working in the huge consumer market and was well aware that technological leadership is one of the keys to commercial leadership. That is why Intel raised the banner of Moore's law and made itself responsible for carrying it out, after which all the other manufacturers were drawn into a crazy arms race that small companies and other technologies predictably could not afford. As a result, Intel has exactly one competitor in the personal computer niche, and only three companies in the whole world have technologies below 14 nm - TSMC, Intel and Samsung. You could say that Intel was very lucky to have started working with MOS transistors rather than ECL a long time ago, but had it not been lucky, someone else would have been, and the result would have been roughly the same.

By the end of the nineties it became clear that the advantage of CMOS on silicon was undeniable, and the disproportion between the resources invested in it and in everything else became such that, instead of developing new technologies for specific needs, it became easier and more profitable to bolt the corresponding add-ons onto CMOS. BiCMOS technology with bipolar npn transistors appeared for analog designers, non-volatile memory for embedded electronics, high-voltage DMOS transistors for power applications, SOI substrates for high temperatures or high speeds, integrated photodiodes for optoelectronics. An important driver for integrating additional options into CMOS technology was the concept of a system on a chip. Previously, a system designer chose the right chips based on how well they performed their target functions, paying no attention to their manufacturing technology (in the worst case level translators were needed, but that is not scary). With the growing degree of integration it became possible to place all the components of a system on one chip and thus kill many birds with one stone: increase speed and reduce consumption because the capacitance of printed circuit board traces no longer has to be charged and discharged, increase accuracy thanks to better matching of elements, and increase reliability by reducing the number of solder joints. But for this, all parts of the system had to be CMOS-compatible. The fabs answered “anything you like, just pay for the additional masks and process options” and began to launch specialized processes one after another. Extra masks are expensive and complicated, and the chip has to be cheap? And so the analog design textbooks are rewritten from good, fast bipolar transistors to bad, slow field-effect ones. Not nearly enough speed for microwave? Shall we try gallium arsenide again? No, let's stretch the silicon crystal lattice with germanium to locally increase electron mobility. Sounds complicated? But it is CMOS-compatible! A cheap microcontroller with flash memory and an ADC on a single chip sounds much nicer than the same thing on three chips, right? Digital data processing and control on the same chip as the analog part of the system became a key achievement that allowed microcontrollers to penetrate everywhere, from deep space to the electric kettle.

Figure 10. Schematic section of BCD technology.

My favorite example of this kind is BCD technology. BCD stands for Bipolar (for the analog part), CMOS (for the digital part) and DMOS (high-voltage switches on the same chip as the control logic). Such processes can work with voltages up to 200 V (and sometimes more) and let you implement on a single chip everything needed to control electric motors or to do DC/DC conversion.

Figure 11. Cross-section of SOI BCD with a high-voltage LDMOS transistor in an isolated pocket

SOI BCD technology adds full dielectric isolation of the elements to all of the above, which improves latch-up immunity and noise isolation, raises the operating voltage, and makes it easy to place high-side switches on the chip or, for example, to work with negative voltages (needed for power GaN switches with a threshold below zero volts). Manufacturers offer to put non-volatile memory, IGBTs, Zener diodes on the same chip... the list is long, you can play bullshit bingo at presentations. Pay attention to the thickness of the silicon layer: unlike “ordinary” SOI technologies, where it is made as thin as possible to get rid of the bottom of the drain and source pn junctions and increase speed, in BCD the silicon layer is very thick, which helps ensure acceptable ESD and thermal performance. The transistors then behave exactly like bulk ones, only with full dielectric isolation. Besides the target audience of automotive electronics manufacturers, this is also used by makers of radiation-hardened (rather than high-voltage) CMOS chips, for example Milandr or Atmel, who get the main advantage of SOI without its usual drawbacks.

Even when Moore's law began to falter because shrinking silicon transistors ran into physical limits, it turned out that continuing to refine CMOS was more profitable than looking for something fundamentally new. Money was of course invested in alternatives and escape routes, but the main effort went into improving silicon CMOS and ensuring continuity of development. Novoselov and Geim received the Nobel Prize for graphene almost ten years ago; and where is that graphene? That's right, in the same place as carbon nanotubes and all the other materials of the future, while silicon has already entered production at the 5 nm node, and everything points to 3 or even 2 nm following. Of course, these are not quite real nanometers (which I have already written about on Habr), but packing density continues to grow; slowly, yes, but it is still silicon CMOS.

Figure 12. Samsung Gate-All-Around transistors for 5 nm and below. The next step after FinFET and the answer to the question “why not stack transistors in several layers?” All other methods have been exhausted, so now it is the turn of several layers. Stack seven such transistors vertically and we get one nanometer instead of seven!

Even silicon oxide, for whose sake everything was originally started, fell victim to progress in CMOS! It was replaced by complex multilayer structures based on hafnium oxide. Germanium began to be added to the channel to increase mobility (a technique already proven in microwave BiCMOS); things have even gone as far as testing (only testing, for now) an n-type channel made of III-V (A3B5) materials, which have high electron mobility, and a p-type channel made of germanium, which has high hole mobility, in “silicon” transistors. As for trifles like changing the channel shape from planar to three-dimensional (FinFET) and marketing tricks with process node numbers, they hardly need mentioning.

What awaits us in the future? On the one hand, with the introduction of EUV lithography and Gate-All-Around transistors, the progress of silicon technology has essentially exhausted itself: the lag behind the ITRS plans of twenty years ago is already about ten years, Intel has long abandoned its famous “tick-tock”, and GlobalFoundries has given up on going below 14 nm altogether. The cost per transistor on a chip passed its minimum at the 28 nm node and has been rising since. And most importantly, the target markets have changed. For many years the driver of shrinking design rules was the personal computer market, then personal computers gave way to mobile phones (around that time TSMC and Samsung caught up with Intel). But the mobile phone market is now in recession and stagnation. There was a short-lived hope for mining chips, but it does not seem to have materialized.

The chip makers' new favorite is the Internet of Things. Indeed, the market is large, fast-growing and has good long-term prospects. And most importantly, for the Internet of Things performance and the number of elements on a chip are not critical competitive advantages, while low power consumption and low cost are. This means that the main reason for shrinking design rules has disappeared, but there are now reasons to optimize technology for specific tasks. Sounds interesting, doesn't it? Something like... the GlobalFoundries press release about stopping work on 7 nm and concentrating on 14/12 nm and on 28/22 nm FDSOI. Moreover, the rising cost of new technologies, combined with fierce price competition, has led chip manufacturers to stop rushing to new design rules simply because they can, and instead to stay on the old ones for as long as it is reasonable, and to use heterogeneous chips in a system - only now not on a board but inside a package. The “system on a chip” has been succeeded by the “system in package” (which I have also written about in more detail). The arrival of systems in package and the Internet of Things, among other things, gives compound semiconductors a new chance, because nothing now prevents putting a gallium arsenide chip in one package with a silicon one, and the need for a radio path in an Internet of Things system is quite obvious. The same applies to all kinds of optical devices, MEMS, sensors - and in general to everything in microelectronics that exists besides CMOS on silicon.

So my forecast for the further development of silicon CMOS technology and its substitutes is that we will see a radical slowdown in progress, up to a complete stop - simply because it is no longer needed - and we will not see anything fundamentally new in mass production (carbon nanotubes, graphene, memristor logic) - again, because it is not needed. But the existing technological baggage will undoubtedly be used more widely. Microelectronics continues to penetrate all areas of our lives, the number of available niches is huge, new markets appear, grow and will keep growing. The world's leading manufacturers are expanding production not only at the latest design rules but also at older ones: TSMC is building a fab for 200 mm wafers for the first time in 15 years, and GlobalFoundries introduced a new 180 nm BCD process last year. The leading manufacturers are optimistic about new niches that, for modest investments, promise huge benefits in the foreseeable future. In general, despite the lack of progress in nanometers, it will not be boring.

Start

Figure 3. “Fairchildren” - companies founded by Fairchild alumni

Shockley himself never recovered from the betrayal of the eight and went downhill: he was fired from his own company, got carried away with racism and eugenics, became an outcast in the scientific community and died forgotten by everyone. Even his children learned of his death from the newspapers.

Before the beginning

The history of the discovery of the transistor is widely known and much described. It is much less known that the first patent application for a transistor was filed not in 1947 but twenty-odd years earlier, in 1925, by Julius Lilienfeld, an American of Austro-Hungarian origin. Moreover, unlike the bipolar transistor of 1947, the devices described in Lilienfeld's patents were field-effect ones: the patent granted in 1930 describes a MESFET with a metal gate, and the 1933 patent a MOSFET, almost the same as we know it today. Lilienfeld intended to use aluminum for the gate and aluminum oxide as the gate dielectric.

Unfortunately, the level of technology of the time did not allow Lilienfeld to turn his ideas into prototypes, but experiments carried out by Shockley in 1948 (by then on his own) showed that Lilienfeld's patents described devices that work in principle. In fact, all the work of Shockley's group on the properties of diodes, which led to the accidental invention of the bipolar transistor, was part of research aimed at creating a field-effect transistor, which is much closer in its properties to vacuum tubes and was therefore easier for physicists of those years to understand. Nevertheless, despite the successful confirmation of Lilienfeld's ideas, in 1948 there was still no technology for reliably producing thin defect-free dielectric films, while the bipolar transistor turned out to be more manufacturable and commercially promising.

A moment of terminology

A bipolar transistor is a transistor that needs both types of charge carriers, electrons and holes, to operate, and that is controlled by the base current (multiplying it by the transistor's gain). It is usually made with pn junctions or heterojunctions, although the very first transistor, while bipolar, was not a junction transistor. The popular English acronym is BJT, bipolar junction transistor.

For heterojunction transistors (junctions between different materials, for example gallium arsenide and aluminum gallium arsenide), the acronym HBT (Heterojunction Bipolar Transistor) is used.

A unipolar, or field-effect, transistor (FET) is a transistor whose operation is based on the field effect and requires only one type of charge carrier. A field-effect transistor has a channel controlled by the voltage applied to the gate. Field-effect transistors come in quite a few varieties.

The ordinary MOSFET is a transistor whose gate is isolated from the channel by a dielectric, usually an oxide, forming a Metal-Oxide-Semiconductor structure. If the dielectric is not an oxide, such devices may be called MISFETs (I for Insulator; the Russian equivalent uses D for Dielectric).

JFET (J for Junction) is a transistor with a control pn junction. In such a transistor, the field that pinches off the channel is created by applying a voltage to the control pn junction.

A Schottky-gate field-effect transistor, or MESFET (ME for Metal), is a variety of JFET that uses a Schottky barrier (between a semiconductor and a metal) rather than a pn junction as the control junction; it has a lower voltage drop and a higher operating speed.

HEMT (High Electron Mobility Transistor) is an analog of the JFET and MESFET that uses a heterojunction. These are the most popular transistors in compound semiconductors.

Figure 4. BJT, MOSFET, JFET

Germanium

The first transistor was made of germanium, but technologists at various companies quickly switched to silicon. The reason is that pure germanium is actually rather poorly suited for electronic applications (although germanium transistors are still used in vintage audio equipment). The advantages of germanium include high electron mobility and, most importantly, high hole mobility, as well as a pn junction forward voltage of 0.3 V versus 0.7 V for silicon, although the latter can be offset by using Schottky junctions (as was done in Schottky TTL logic). But because of the smaller band gap (0.67 eV versus 1.14 eV), germanium diodes have large reverse currents that grow strongly with temperature, which limits both the temperature range of germanium circuits and the permissible power levels (at low power the influence of reverse currents is too great, at high power self-heating gets in the way). To top off germanium's temperature problems, its thermal conductivity is much lower than that of silicon, so it is harder to remove heat from power transistors.
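To get a feel for how strongly the band gap affects reverse currents, here is a rough back-of-the-envelope sketch. It assumes that the reverse current simply scales as exp(-Eg/kT) and ignores everything else (density-of-states prefactors, doping, geometry), so the results are order-of-magnitude estimates at best. The function names are mine; the band gap values are the ones quoted above.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K

EG_GE = 0.67  # germanium band gap in eV, as quoted in the text
EG_SI = 1.14  # silicon band gap in eV, as quoted in the text


def boltzmann_factor(band_gap_ev: float, temp_k: float) -> float:
    """exp(-Eg / kT): the dominant temperature-dependent term (assumed model)."""
    return math.exp(-band_gap_ev / (K_B_EV * temp_k))


def leakage_ratio(eg_a: float, eg_b: float, temp_k: float) -> float:
    """Rough ratio of reverse currents of material a to material b at one temperature."""
    return boltzmann_factor(eg_a, temp_k) / boltzmann_factor(eg_b, temp_k)


for temp in (300.0, 350.0, 400.0):
    ratio = leakage_ratio(EG_GE, EG_SI, temp)
    growth = boltzmann_factor(EG_GE, temp) / boltzmann_factor(EG_GE, 300.0)
    print(f"T = {temp:.0f} K: Ge/Si leakage ratio ~ {ratio:.1e}, "
          f"Ge leakage vs 300 K ~ x{growth:.0f}")
```

Even this crude model gives a difference of many orders of magnitude at room temperature and a steep rise of germanium leakage with heating, which is the effect the paragraph describes.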

On top of that, in the early period of semiconductor electronics, germanium devices had serious yield problems because of the difficulty of obtaining pure crystalline germanium free of screw dislocations, and because of the poor quality of the surface, which, unlike that of silicon, is not protected from external influences by an oxide. More precisely, germanium does have an oxide, but its crystal lattice matches the lattice of pure germanium much worse than is the case for silicon, which leads to an unacceptably large number of surface defects. These defects seriously reduce carrier mobility, negating germanium's main advantage over silicon. And, to top it off, germanium oxide reacts with water - both during chip manufacturing and during operation. The other semiconductors, however, were even less fortunate: they have no oxide at all.

Trying to solve the problem of germanium's poor surface, which prevented a field-effect transistor from being made, Shockley came up with the idea of moving the channel deep into the semiconductor. This is how the field-effect transistor with a control pn junction, aka the JFET, appeared. These transistors quickly found their place in analog circuits, first of all thanks to their very small input current (compared with bipolar transistors) and good noise characteristics. This combination makes the JFET an excellent choice for the input stage of an operational amplifier, as can be seen, for example, in this article by Ken Shirriff. Moreover, when integrated circuits began to replace discrete components, it turned out that JFETs are quite compatible with bipolar technology (in the figure above I even drew the JFET from a bipolar transistor), and they became commonplace in analog bipolar processes. But all this was already on silicon, and germanium stayed forgotten for many years, until its time came to strengthen silicon's position instead of fighting it. But more on that later.

Field effect transistors

What about MOS transistors? It might seem that they were forgotten for almost a decade because of the rapid progress of their bipolar counterparts, but they did keep developing. At the same Bell Labs, in 1959, Dawon Kahng and Martin (Mohamed) Atalla created the first working MOS transistor. On the one hand, it implemented Lilienfeld's idea almost directly; on the other, it immediately turned out to be almost identical to many subsequent generations of transistors that use silicon oxide as the gate dielectric. Unfortunately, Bell Labs did not recognize the commercial potential of the invention at the time: the prototype was significantly slower than the bipolar transistors of the day. But the potential of the novelty was recognized by Radio Corporation of America (RCA) and Fairchild, and as early as 1964 MOS transistors hit the market. They were slower than their bipolar counterparts, had lower gain, were noisy and suffered badly from electrostatic discharge, but they had zero input current, low output resistance and excellent switching capabilities. Not that much, but it was only the beginning of a very long journey.

Bipolar Logic and RISC

In the early stages of semiconductor electronics, analog and radio-frequency applications dominated: for a long time the word “transistor” meant not only the transistor itself but also a radio receiver based on it. Digital computers built from chips containing one or two gates were huge (though nothing compared with vacuum-tube ones), so there were even attempts to do the calculations in analog form - after all, one operational amplifier can integrate or differentiate in place of a whole scattering of digital chips. But digital computing turned out to be more convenient and practical, and so began the era of digital electronic computers, which continues today (although quantum computing and neural networks have already achieved significant success).

The main advantage of MOS technology at that time was simplicity (let me remind you that until the eighties every microelectronics company had to run its own fab): the simplest working nMOS or pMOS circuit needs only four photolithography steps, CMOS needs six, while a bipolar circuit needs seven for just one type of transistor, plus more precise control of diffusion and, ideally, epitaxy. The big minus was speed: MOS transistors lost to bipolar ones and JFETs by more than an order of magnitude. At a time when CMOS could reach 5 MHz, ECL could do 100-200 MHz. As for analog applications, there is nothing to discuss: MOS transistors are very poorly suited to them because of their low speed and low gain.

While the degree of integration was small and nobody paid much attention to power consumption, the advantage of emitter-coupled logic (ECL) for high-performance applications was obvious, but MOS technology had trump cards up its sleeve that came into play a little later. In the sixties, seventies and eighties, MOS and bipolar processes developed in parallel: MOS was used exclusively for digital circuits, while bipolar technology was used both for analog circuits and for logic of the TTL (transistor-transistor logic) and ECL families.

Figure 5. Cray-1, the first Seymour Cray supercomputer, presented to the public in 1975, weighed 5.5 tons, consumed 115 kW and delivered 160 MFLOPS at a clock frequency of 80 MHz. It was built on four types of discrete ECL chips and contained about 200 thousand gates. The chip on which the logic was built is the Fairchild 11C01, a dual gate containing a 4-input and a 5-input NOR element and drawing 25-30 mA from a -5.2 V supply.

Figure 6. A 2-input NAND gate in TTL and a 2-input OR/NOR gate in ECL

Note that an ECL gate is simply a feedback amplifier built so that the switching transistors always stay in the “fast” linear mode and never fall into the “slow” saturation mode. The price of speed is the current that flows through the circuit continuously, regardless of the operating frequency and the state of the inputs and outputs. Funnily enough, some time ago people started trying to turn this drawback into an advantage: because the current drawn is constant, cryptographic circuits in ECL are much more resistant to attacks that “listen” to the supply current than CMOS, where the current drawn is proportional to the number of gates switching at a given moment. If we replace the bipolar transistors with field-effect ones (JFET or MESFET), we get SCL, source-coupled logic.
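The point about “listening” to the supply current can be illustrated with a toy model. This is only a sketch under crude assumptions of my own: every bit of a register is treated as one gate, a CMOS gate draws one unit of current only when its bit flips, and an ECL gate draws one unit all the time. Real power-analysis attacks and real gates are far more subtle, and all the names below are hypothetical.

```python
def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two register states differ."""
    return bin(a ^ b).count("1")


def cmos_current_trace(states, i_per_toggle=1.0):
    """Toy CMOS model: current at each step is proportional to the number of toggled bits."""
    return [i_per_toggle * hamming_distance(prev, nxt)
            for prev, nxt in zip(states, states[1:])]


def ecl_current_trace(states, n_gates, i_static=1.0):
    """Toy ECL model: every gate draws its bias current regardless of the data."""
    return [i_static * n_gates for _ in states[1:]]


# A 4-bit register stepping through some data-dependent states.
states = [0b0000, 0b1111, 0b1110, 0b0001]

print("CMOS:", cmos_current_trace(states))            # varies with the data
print("ECL: ", ecl_current_trace(states, n_gates=4))  # flat, leaks nothing
```

In this model the CMOS trace follows the data, while the ECL trace is a flat line from which nothing can be read, at the price of always paying the maximum current.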

An obvious advantage of nMOS or pMOS logic is simplicity of manufacture and a small number of transistors, which means a small area and the ability to place more elements on a chip. For comparison: a 2-input NAND or 2-input NOR gate in nMOS/pMOS consists of three elements, in CMOS of four. In TTL these gates contain 4-6 transistors, 1-3 diodes and 4-5 resistors; in ECL, 4 transistors and 4 resistors (and in ECL it is convenient to make OR and NOR but inconvenient to make AND and NAND). Note, by the way, that all the transistors in the TTL and ECL gates are npn. This is because making a pnp transistor in a p-substrate is harder than making an npn one, and their structures differ - unlike in CMOS technology, where the two types of transistors are almost identical. In addition, both pMOS and bipolar pnp transistors, which rely on holes, are slower than their “electron” counterparts, which gives bipolar logic one more reason to avoid them.

The second important advantage of MOS technology, which showed itself fully with the transition to CMOS and largely determined the dominance of that technology, is low power consumption. A CMOS gate consumes energy only while switching and has no static power consumption (for modern processes this is no longer true, but we will skip the details). The typical operating current of an ECL gate is from 100 μA to 1 mA (0.5-5 mW from a 5.2 V supply). Multiplying this number by, say, the billion gates that make up a modern Intel processor, we get megawatts... And you have already seen the consumption of the Cray-1 above. However, in the eighties we were usually talking about thousands or tens of thousands of gates, which, in theory, made it possible to stay within a reasonable power budget even with bipolar logic. In practice, however,
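The static power estimate for bipolar gates is simple arithmetic and can be reproduced in a few lines. This is a rough sketch that uses only the figures quoted in the text (100 μA to 1 mA per gate, a 5.2 V supply, a billion gates); the function name is made up for the example, and the model ignores everything except the static gate current.

```python
def static_power_watts(gate_current_a: float, supply_v: float, gate_count: int) -> float:
    """Static power of gate_count gates, each drawing a constant current."""
    return gate_current_a * supply_v * gate_count


SUPPLY_V = 5.2               # ECL supply voltage magnitude from the text
GATE_COUNT = 1_000_000_000   # "a billion gates", as in the text

for current_a in (100e-6, 1e-3):  # 100 uA and 1 mA per gate
    per_gate_mw = static_power_watts(current_a, SUPPLY_V, 1) * 1e3
    total_mw = static_power_watts(current_a, SUPPLY_V, GATE_COUNT) / 1e6
    print(f"{current_a * 1e6:.0f} uA per gate: "
          f"{per_gate_mw:.2f} mW per gate, {total_mw:.2f} MW per chip")
```

The result lands between roughly half a megawatt and five megawatts, which is the “megawatts” the text refers to.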

The Intel 8008 (1972), built on a ten-micron pMOS process, ran at 500 kHz (versus 80 MHz for the much more complex Cray-1 system); the Intel 8086 (1978), on three-micron nMOS and later CMOS, reached 10 MHz; and the original 80486 (1989) went up to 50 MHz.

What made designers keep trying bipolar designs, despite the rapidly shrinking gap between them and CMOS, and despite the power consumption? The answer is simple: speed. Early on, a huge additional advantage of ECL was the minimal loss of performance when driving large capacitive loads or long lines, that is, a board assembled from many ECL packages was much faster than one assembled from CMOS or TTL. Increasing integration allowed CMOS to partially overcome this drawback, but computer systems were still multi-chip, and every time a signal left the die (for example, to an external cache) it slowed everything down. Even in the late eighties, bipolar gates were still significantly faster, for example because the voltage difference between logic zero and logic one was several times smaller: 600-800 mV for ECL versus 5 V for CMOS, and that at a time when transistor sizes in bipolar processes had already begun to lag behind CMOS. But while CMOS scaling proceeded in such a way that the power per unit area of the chip remained constant (this phenomenon is a “consequence” of Moore’s law and is called “Dennard scaling”), the power of ECL hardly fell at all, because static operating currents are needed for fast operation. As a result, digital circuit designers began to prefer CMOS for implementing increasingly sophisticated computing architectures, even where higher performance was needed.
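The contrast between the two scaling behaviours can be sketched numerically. The model below is an idealised assumption, not data from any real process: classic Dennard scaling by a factor k shrinks dimensions, voltage and capacitance by 1/k and raises frequency by k, while the ECL gate is assumed to keep its static power unchanged as its area shrinks. All function names are hypothetical.

```python
def cmos_power_density(k: float) -> float:
    """Relative power per unit area of CMOS after ideal Dennard scaling by k."""
    capacitance = 1.0 / k      # gate capacitance shrinks with dimensions
    voltage = 1.0 / k          # supply voltage scales down
    frequency = k              # clock frequency scales up
    area = 1.0 / k ** 2        # gate area shrinks quadratically
    power_per_gate = capacitance * voltage ** 2 * frequency  # dynamic power ~ C * V^2 * f
    return power_per_gate / area


def ecl_power_density(k: float) -> float:
    """Relative power per unit area of ECL, assuming static power per gate does not scale."""
    power_per_gate = 1.0       # assumption: operating current and supply stay the same
    area = 1.0 / k ** 2
    return power_per_gate / area


for k in (1, 2, 4):
    print(f"k={k}: CMOS density x{cmos_power_density(k):.2f}, "
          f"ECL density x{ecl_power_density(k):.2f}")
```

Under these assumptions the CMOS power density stays flat while the ECL density grows as k squared, which is why packing more bipolar gates onto a die quickly ran into the heat problem.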

Help for digital bipolar technology came from an unexpected quarter. In the early eighties the RISC concept was invented, which implies a significant simplification of the microprocessor and a reduction in the number of elements in it. Bipolar technologies lagged somewhat behind CMOS in degree of integration, because bipolar LSIs were mostly analog and there was no strong reason to chase Moore’s law. Nevertheless, the beginning of RISC development coincided with the moment when it became realistic to fit an entire processor on one chip, or at least on two or three (the cache was usually external). In 1989 the Intel 80486 was released, with the FPU on the same chip as the main processor; it was the first chip to use more than a million transistors.

By the time in question, many chip makers had begun to switch to the fabless model, handing manufacturing over to other companies. One result of the work of one such manufacturer was the development of integrated microprocessors on ECL. The company was called Bipolar Integrated Technology and was never particularly successful, from its founding in 1983 to its sale to PMC-Sierra in 1996. There is a suspicion that the reason for the failure was precisely the bet on bipolar digital products, but in the late eighties this was not so obvious, and the company had bipolar processes that were advanced in feature size and degree of integration. Its first product of its own was an FPU coprocessor chip, and BIT actively collaborated with two RISC pioneers, MIPS Computer Systems and Sun Microsystems, to create chips based on RISC architectures for which this coprocessor would be useful. The first implementation of the MIPS II architecture, the R6000, R6010 and R6020 chipset, was built on ECL and manufactured at BIT's facilities. BIT also produced the SPARC B5000 processor.

Somewhat later, DEC implemented MIPS II on a single chip using Motorola's bipolar technology. So, imagine: it is 1993, and Intel's flagship product is the Pentium (800 nm CMOS process, 66 MHz clock frequency, 15 W TDP, three million transistors on a chip). The IEEE Journal of Solid-State Circuits publishes an article entitled “A 300-MHz 115-W 32-b Bipolar ECL Microprocessor”. Three hundred (!) megahertz and one hundred and fifteen (!!!!) watts. A separate article, of course, was devoted to the package and heat sink of this monster. I highly recommend reading both articles if you have access to the IEEE library: they are an excellent document of the era, with phrases on the scale of “the chip was designed largely with CAD tools developed by members of the design team” and “circuit performance has been increased significantly by using different signal swings in different applications, and by using circuit topologies (such as low-swing cascode and wired-OR circuits).” Fine, CAD tools: in 1993 only the lazy did not write their own (ask YuriPanchul, he will confirm). But wired OR!

Figure 7. Photograph of a DEC processor chip and its heatsink

We had 2 levels of logic zeros and ones, 75 elements in the library, 5 proprietary CAD systems, half of the circuits in C and a whole host of routing methods of all kinds and colors, topological primitives, as well as a block tree, three layers of metallization, radiation hardness, kilobytes of cache and two dozen testbenches. Not that it was a necessary supply for the design, but once you start assembling a microprocessor, it becomes difficult to stop. The only thing that worried me was wired OR. Nothing in the world is more helpless, irresponsible and vicious than wired OR. I knew that sooner or later we would move on to this rubbish.

Speaking of radiation hardness and other special features. The story of the invention of the transistor in 1948, as well as many lesser-known events (for example, the creation of Silicon Valley with US military money), shows us that the myth of the military as people who are ready to rivet fifth-generation fighters together out of loose 74-series chips and TL431s, and who have heard of 28 or 16 nm design rules only on television, is unfair, to say the least. The real military not only constantly adopt new technologies (after appropriate certification, which sometimes takes considerable time), but also finance their creation. The well-known “74” series of TTL chips is a simplified version of the “54” series, originally created for military applications. The same can be said of silicon-on-insulator technology, which AMD used successfully for many years, and of many other technologies that have long been a firm part of our everyday life. So, the radiation hardness of ECL was on average higher than that of its CMOS counterparts (and probably still is), because when you have a large constant operating current in the gate, you do not worry much about leakage or a drop in transistor gain. This fact further prolonged the life of both ECL designs and the hero of the next part of my story.

Gallium arsenide - the material of the future

Gallium arsenide is one of the first compound semiconductors to attract the attention of the microelectronics industry. Its main advantage over both germanium and silicon is its enormous electron mobility. It also has a fairly wide band gap, which makes it possible to work at high temperatures. The ability to work at frequencies of hundreds of MHz or even several GHz, at a time when tens of MHz are barely squeezed out of silicon: is this not a dream? Gallium arsenide was long considered the “material of the future”, about to replace silicon. The first MESFET on it was created in 1966, and the last active attempts to build LSI on it were made as late as the mid-nineties at Cray Corporation (they finally buried the company) and at Mikron (the K6500 series of chips).

An important problem that had to be solved was the absence of a native oxide on gallium arsenide. But is this a problem? After all, if there is no oxide, then there are no problems with radiation hardness! It was for these reasons that the development of gallium arsenide technology was heavily funded by the military. The hardness results were indeed excellent, but the technology itself turned out to be somewhat more complicated. The need to use JFETs means either using SCL, which is fast but power-hungry, or using JFETs in place of MOSFETs in an imitation of nMOS logic, which is simpler but not as fast and still rather power-hungry. Another unpleasant detail: if nothing special is done, a JFET on gallium arsenide comes out normally on, that is, its threshold voltage is below zero, which means more power consumption than with a MOSFET. To make normally off transistors, process engineers have to try pretty hard. However, this problem was solved relatively quickly, and E/D JFET technologies, with normally off (E, enhancement) active transistors and normally on (D, depletion) transistors as loads, came into active use in GaAs logic. Another initially underestimated drawback is that gallium arsenide has a very high electron mobility, but not hole mobility. You can do a lot of interesting things on nJFETs (for example, high-frequency amplifiers), but at a consumption of 1 mW per gate it is quite difficult to talk about VLSI, and if you make low-power complementary circuits, then because of the low hole mobility they will be even slower than silicon ones.

And again, as with bipolar circuits, the RISC concept, in the form of the same MIPS architecture, came to the aid of the military, who really wanted to get radiation hardness. In 1984, DARPA signed three contracts for the development of GaAs MIPS microprocessors: with RCA, with McDonnell Douglas and with the CDC-TI collaboration. One of the important requirements of the specification was a limit of 30 thousand transistors, with the wording “so that processors can be mass-produced with an acceptable yield”. In addition, there were gallium arsenide ports of AMD's Am2900 family, radiation-hardened gallium arsenide versions of the legendary 1802 microprocessor from the same RCA, gate arrays of several thousand gates, and static memory chips of several kilobits.

A little later, in 1990, the MIPS architecture was also considered for space applications in Europe, but SPARC was chosen there; otherwise LEON could also have been MIPS. By the way, ARM also took part in the selection of the architecture for the future LEONs, but was rejected because of poor software support. As a result, the first European space ARM processor will appear only next year. If you are interested in the topic of space processors and architectures for them, here is a link to an article by Maxim Gorbunov from NIISI RAS about space processors in general and about KOMDIV in particular. The link, as befits a scientist, is to a peer-reviewed Elsevier journal.