The Impact of Transparent Huge Pages on System Performance

The article is published on behalf of John Akhaltsev, Jiga

Tinkoff.ru today is not just a bank, it is an IT company. It not only provides banking services but also builds an ecosystem around them.

At Tinkoff.ru we partner with various services to improve the quality of customer service and to help those services get better. For example, we carried out load testing and performance analysis for one such service, which helped us find a bottleneck in the system: Transparent Huge Pages enabled in the OS configuration.

If you want to know how to analyze system performance and what came of it in our case, read on.

Description of the problem

At the moment, the service architecture is:

- Nginx web server for handling HTTP connections

- PHP-FPM for PHP process control

- Redis for caching

- PostgreSQL for data storage

- One-stop shopping solution

The main problem we found during a recent sale under high load was high CPU utilization: processor time in kernel mode (system time) grew and exceeded the time in user mode (user time).

- User time - the time the processor spends on the user's tasks. This is the main thing you pay for when buying a processor.

- System time - the time the system spends on paging, context switching, launching scheduled tasks, and other system tasks.
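The split between user time and system time can be read from the aggregate `cpu` line of `/proc/stat` on Linux. The sketch below is a minimal illustration, not the monitoring used in the article: the counters are cumulative, so it compares two snapshots, and the sample numbers are invented for the example.

```python
# Field order of the aggregate "cpu" line in /proc/stat (Linux).
CPU_FIELDS = ["user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal"]


def parse_cpu_line(line: str) -> dict:
    """Turn a 'cpu ...' line from /proc/stat into a name -> ticks mapping."""
    parts = line.split()
    return dict(zip(CPU_FIELDS, (int(v) for v in parts[1:1 + len(CPU_FIELDS)])))


def cpu_shares(before: str, after: str) -> dict:
    """Percentage of CPU time per mode between two /proc/stat snapshots."""
    first, second = parse_cpu_line(before), parse_cpu_line(after)
    delta = {name: second[name] - first[name] for name in CPU_FIELDS}
    total = sum(delta.values())
    if total == 0:
        return {name: 0.0 for name in CPU_FIELDS}
    return {name: 100.0 * ticks / total for name, ticks in delta.items()}


# Illustrative snapshots (hypothetical values, not measurements from the article).
snapshot_1 = "cpu 1000 0 1000 8000 0 0 0 0 0 0"
snapshot_2 = "cpu 1200 0 1600 8150 20 0 30 0 0 0"

shares = cpu_shares(snapshot_1, snapshot_2)
print(f"user={shares['user']:.1f}% system={shares['system']:.1f}%")
```

On a real host the two snapshots would be the first line of `/proc/stat` read a few seconds apart; system time persistently above user time is the symptom described here.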

Determination of the primary characteristics of the system

To begin with, we assembled a load test environment with resources close to production and compiled a load profile corresponding to normal load on a typical day.

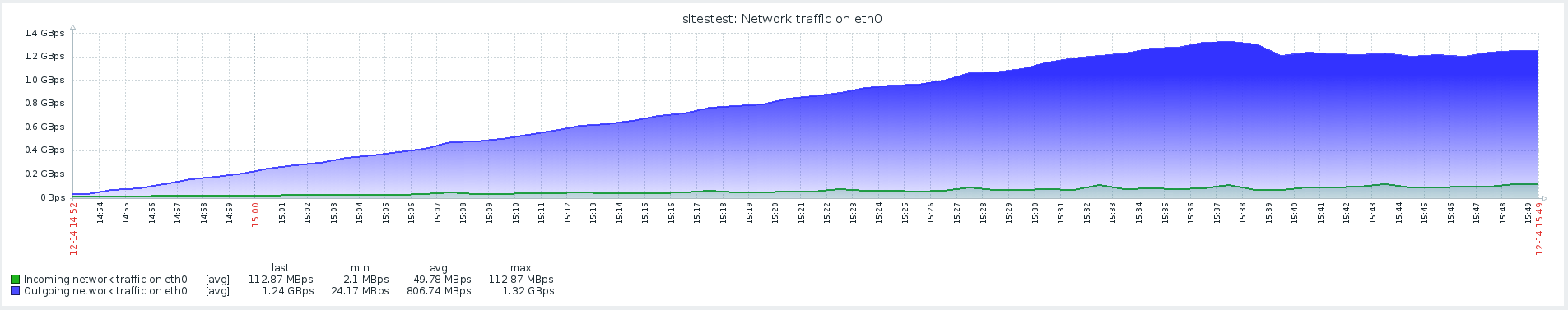

Gatling version 3 was chosen as the load-generation tool, and the load was generated inside the local network via gitlab-runner. Agents and targets were placed in the same local network to reduce network overhead, so the focus is on the execution of the code itself rather than on the performance of the infrastructure where the system is deployed.

To determine the primary characteristics of the system, a scenario with linearly increasing load is suitable, with the following HTTP configuration:

val httpConfig: HttpProtocolBuilder = http

.baseUrl("https://test.host.ru")

.inferHtmlResources() // Downloads all resources found on the page

.disableCaching // Disables the cache; each new scenario iteration runs as a "new" user

.disableFollowRedirect // Disables following redirects

// MULTIPLIER is set via JAVA_OPTS

setUp(

Scenario.inject(

rampUsers(100 * MULTIPLIER) during (200 * MULTIPLIER seconds))

).protocols(httpConfig)

.maxDuration(1 hour)

At this stage, we implemented a scenario that opens the main page and downloads all resources.
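The injection step scales both the user count and the ramp duration by the same MULTIPLIER, so users are started at a constant 0.5 per second and MULTIPLIER only sets how long the ramp lasts and how high it ends. The sketch below works through that arithmetic. It assumes every started user keeps looping through the scenario until the test ends, which the configuration shown does not confirm; the function name is hypothetical.

```python
def active_users(t_s: float, multiplier: int) -> float:
    """Users started by time t_s for rampUsers(100 * M) during (200 * M seconds).

    Assumption: users never finish, so started users equal active users.
    """
    total_users = 100 * multiplier
    ramp_s = 200 * multiplier
    rate = total_users / ramp_s  # users started per second, always 0.5
    return rate * min(max(t_s, 0.0), ramp_s)


for m in (1, 5):
    # Same slope early in the ramp, different peak at the end of the ramp.
    print(m, active_users(100, m), active_users(200 * m, m))
```

Under that assumption a larger MULTIPLIER extends the same linear ramp to a higher peak rather than making it steeper.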

The results of this test showed a maximum throughput of 1500 rps. A further increase in load intensity led to degradation of the system, associated with growing softirq time.

Softirq is a deferred interrupt mechanism implemented in the kernel/softirq.c file. Softirqs occupy the processor's instruction queue, preventing it from doing useful computation in user mode. Interrupt handlers can also defer additional work with network packets to OS threads (system time). A brief overview of the network stack and its optimizations can be found in a separate article.
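On Linux, per-type softirq counters are exposed in `/proc/softirqs`, one column per CPU. As a rough sketch of how growing network softirq activity could be tracked, the function below sums the counters per softirq type. The sample text is a shortened, made-up excerpt rather than output from the system under test.

```python
def softirq_totals(text: str) -> dict:
    """Sum the per-CPU counters of each softirq type from /proc/softirqs text."""
    lines = text.strip().splitlines()
    totals = {}
    for line in lines[1:]:  # the first line is the CPU column header
        name, _, counts = line.partition(":")
        totals[name.strip()] = sum(int(c) for c in counts.split())
    return totals


# Hypothetical two-CPU excerpt in the /proc/softirqs layout.
sample = """\
                    CPU0       CPU1
          HI:          0          1
       TIMER:       1200       1100
      NET_TX:         10         12
      NET_RX:      50000      48000
"""

totals = softirq_totals(sample)
print(totals["NET_RX"], totals["NET_TX"])
```

Comparing two readings taken during a load test shows which softirq type grows fastest; for a web server under HTTP load, NET_RX is the usual suspect.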

The suspicion that this was the main problem was not confirmed: production showed much higher system time with less network activity.

User scenarios

The next step was to develop user scenarios that do more than open a page with pictures. The profile included heavy operations that fully exercised the site code and the database, rather than the web server serving static resources.

The stability test was run at an intensity below the maximum, and following redirects was enabled in the configuration:

val httpConfig: HttpProtocolBuilder = http

.baseUrl("https://test.host.ru")

.inferHtmlResources() // Downloads all resources found on the page

.disableCaching // Disables the cache; each new scenario iteration runs as a "new" user

// MULTIPLIER is set via JAVA_OPTS

setUp(

MainScenario

.inject(rampUsers(50 * MULTIPLIER) during (200 * MULTIPLIER seconds)),

SideScenario

.inject(rampUsers(100 * MULTIPLIER) during (200 * MULTIPLIER seconds))

).protocols(httpConfig)

.maxDuration(2 hours)

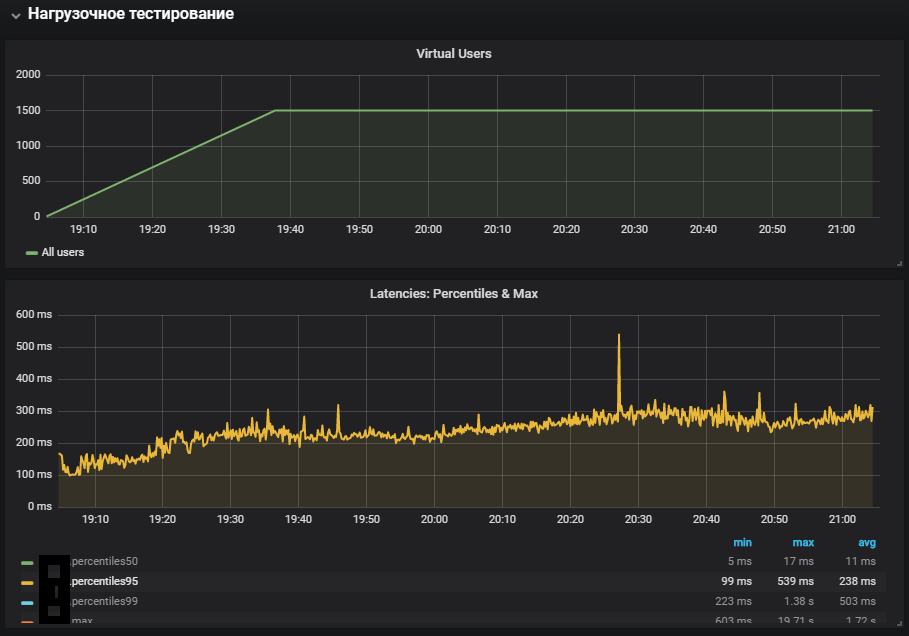

Exercising the system more fully showed increased system time, which also kept growing during the stability test. The issue from the production environment was reproduced.

Networking with Redis

When analyzing problems, it is very important to have monitoring of all components of the system in order to understand how it works and what impact the supplied load has on it.

Once Redis monitoring was in place, it became possible to look not only at the general metrics of the system but at its specific components. The stress-testing scenario was also changed, which, together with the additional monitoring, helped narrow down the problem.

Redis monitoring showed a similar picture of CPU utilization: system time was significantly higher than user time, and most of the CPU utilization came from the SET operation, that is, allocating RAM to store a value.

To eliminate the impact of network interaction with Redis, we decided to test the hypothesis by switching Redis from a TCP socket to a UNIX socket. This was done directly in the framework through which php-fpm connects to Redis. In the file /yiisoft/yii/framework/caching/CRedisCache.php, we replaced the host:port string with a hardcoded path to redis.sock. Read more about socket performance in the article.

/**

* Establishes a connection to the redis server.

* It does nothing if the connection has already been established.

* @throws CException if connecting fails

*/

protected function connect()

{

$this->_socket=@stream_socket_client(

// $this->hostname.':'.$this->port,

"unix:///var/run/redis/redis.sock",

$errorNumber,

$errorDescription,

$this->timeout ? $this->timeout : ini_get("default_socket_timeout"),

$this->options

);

if ($this->_socket)

{

if($this->password!==null)

$this->executeCommand('AUTH',array($this->password));

$this->executeCommand('SELECT',array($this->database));

}

else

{

$this->_socket = null;

throw new CException('Failed to connect to redis: '.$errorDescription,(int)$errorNumber);

}

}

Unfortunately, this did not have much effect. CPU utilization stabilized a little, but this did not solve our problem: most of the CPU time was still spent in kernel mode.

Benchmark using stress and identifying THP problems

The stress utility helped localize the problem. It is a simple workload generator for POSIX systems that can load individual system components such as CPU, memory, and I/O.

Testing was performed on the following hardware and OS version:

Ubuntu 18.04.1 LTS

12 Intel® Xeon® CPU

The utility is installed using the command:

sudo apt-get install stress

We look at how the CPU is utilized under load by running a test that creates workers calculating square roots for 300 seconds:

-c, --cpu N spawn N workers spinning on sqrt()

> stress --cpu 12 --timeout 300s

stress: info: [39881] dispatching hogs: 12 cpu, 0 io, 0 vm, 0 hdd

The graph shows complete utilization in user mode - this means that all processor cores are loaded and useful calculations are performed, not system service calls.

The next step is to look at resource usage under intensive I/O. We run the test for 300 seconds with 12 workers that call sync(). The sync command writes data buffered in memory to disk. The kernel keeps data in memory to avoid frequent (and usually slow) disk reads and writes. The sync() call ensures that everything buffered in memory is written to disk.

-i, --io N spawn N workers spinning on sync()

> stress --io 12 --timeout 300s

stress: info: [39907] dispatching hogs: 0 cpu, 0 io, 0 vm, 12 hdd

We see that the processor is mainly busy handling calls in kernel mode, with a little iowait; there are also more than 35k write ops to disk. This behavior resembles the high system time problem we are investigating, but there are differences: iowait and IOPS are higher than in the production environment, so this does not match our case.

It is time to check memory. We launch 20 workers that allocate and free memory for 300 seconds using the command:

-m, --vm N spawn N workers spinning on malloc()/free()

> stress -m 20 --timeout 300s

stress: info: [39954] dispatching hogs: 0 cpu, 0 io, 20 vm, 0 hdd

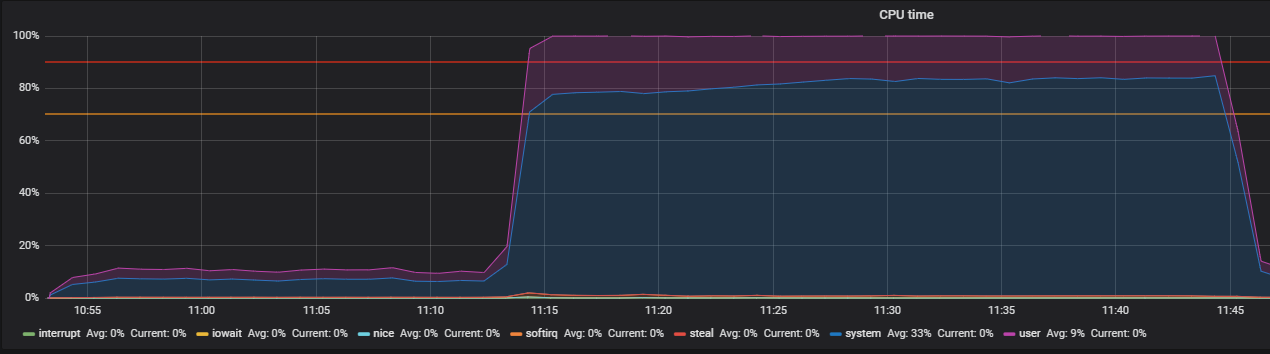

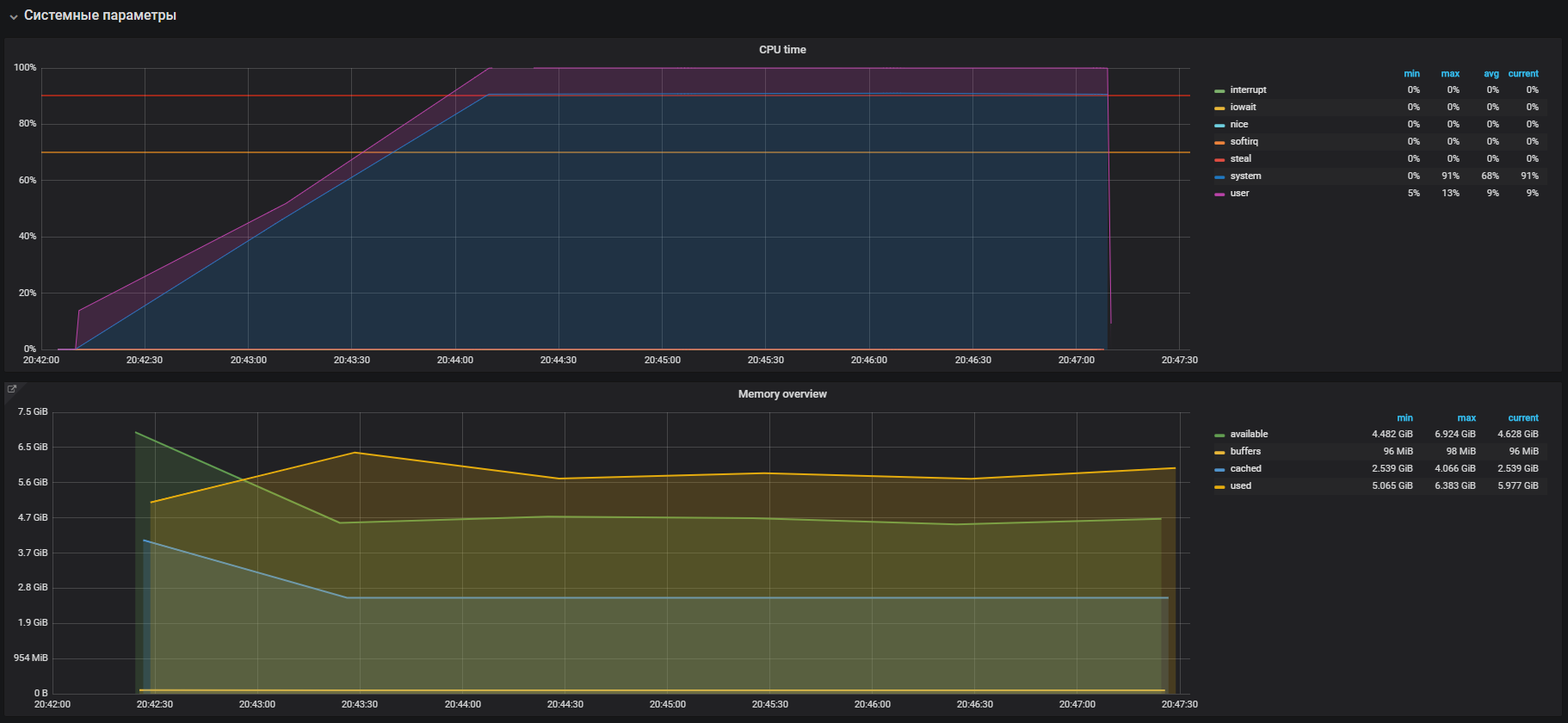

We immediately see high CPU utilization in system mode and a little in user mode, as well as RAM usage of more than 2 GB.

This case is very similar to the production problem, which is confirmed by the heavy memory usage in the load tests. The problem must therefore be sought in memory handling. Memory is allocated and freed with malloc and free calls, which are eventually served by kernel system calls and so show up in CPU utilization as system time.
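The effect can be observed from inside a process by comparing its own user and system CPU time around an allocation-heavy loop. The sketch below is a rough stand-in for the stress memory workers, not a reimplementation of them: the buffer size, the number of rounds, and the assumed 4096-byte page size are arbitrary choices, and the numbers depend on the allocator, the Python version, and the THP setting.

```python
import os

PAGE_SIZE = 4096  # assumed base page size


def touch_memory(size_bytes: int, rounds: int) -> tuple:
    """Allocate and release a buffer repeatedly; return (user_s, system_s) spent."""
    start = os.times()
    for _ in range(rounds):
        buf = bytearray(size_bytes)  # large zero-filled allocation
        for offset in range(0, size_bytes, PAGE_SIZE):
            buf[offset] = 1  # write one byte in every page
        del buf  # release the buffer before the next round
    end = os.times()
    return end.user - start.user, end.system - start.system


user_s, system_s = touch_memory(32 * 1024 * 1024, rounds=3)
print(f"user={user_s:.3f}s system={system_s:.3f}s")
```

Running it with THP set to `always` and then to `never` on the same host gives a quick, if crude, way to see whether the system share changes.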

In most modern operating systems, virtual memory is organized using paging. With this approach, the entire memory area is divided into pages of a fixed size, for example 4096 bytes (the default on many platforms). When allocating, for example, 2 GB of memory, the memory manager has to handle more than 500,000 pages. This creates significant management overhead, and the Huge Pages and Transparent Huge Pages technologies were invented to reduce it. They let you increase the page size, for example to 2 MB, which significantly reduces the number of pages in the heap. The only difference between the two is that Huge Pages require explicitly configuring the environment and teaching the program to work with them, while Transparent Huge Pages work “transparently” for programs.
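The page counts behind these figures are easy to verify. The short calculation below uses the sizes named in the text (2 GB of memory, 4096-byte pages, 2 MB huge pages); the helper name is only illustrative.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def pages_needed(size_bytes: int, page_size: int) -> int:
    """Number of pages required to cover size_bytes (rounded up)."""
    return -(-size_bytes // page_size)


regular = pages_needed(2 * GIB, 4096)
huge = pages_needed(2 * GIB, 2 * MIB)
print(regular, huge, regular // huge)  # 524288 1024 512
```

With 2 MB pages the memory manager tracks 512 times fewer pages for the same 2 GB.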

THP and problem solving

If you search for information about Transparent Huge Pages, the results contain many pages asking "How to turn off THP".

As it turned out, this “cool” feature was introduced into the Linux kernel by Red Hat. Its essence is that applications can work with memory transparently, as if they were working with real huge pages. According to the benchmarks, THP speeds up an abstract application by 10%; more details are in the presentation. In reality, things are different: in some cases THP causes an unreasonable increase in CPU consumption. For more information, see Oracle’s recommendations.

We went and checked this parameter. As it turned out, THP is turned on by default. We turned it off with the command:

echo never > /sys/kernel/mm/transparent_hugepage/enabled

We confirmed the effect with a test before and after turning off THP, using the load profile:

setUp(

MainScenario.inject(

rampUsers(150) during (200 seconds)),

Peak.inject(

nothingFor(20 minutes), rampUsers(5000) during (30 minutes))

).protocols(httpConfig)

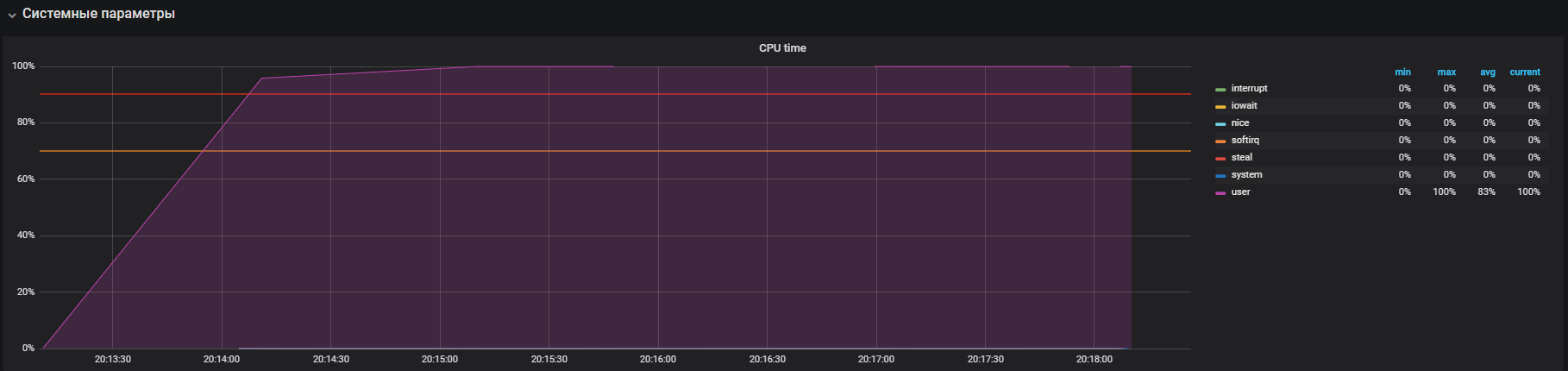

This is what we observed before turning off THP.

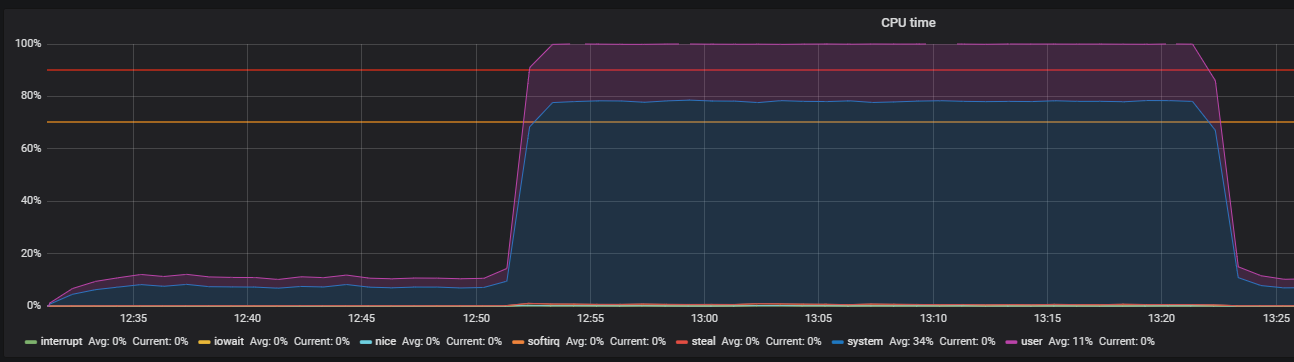

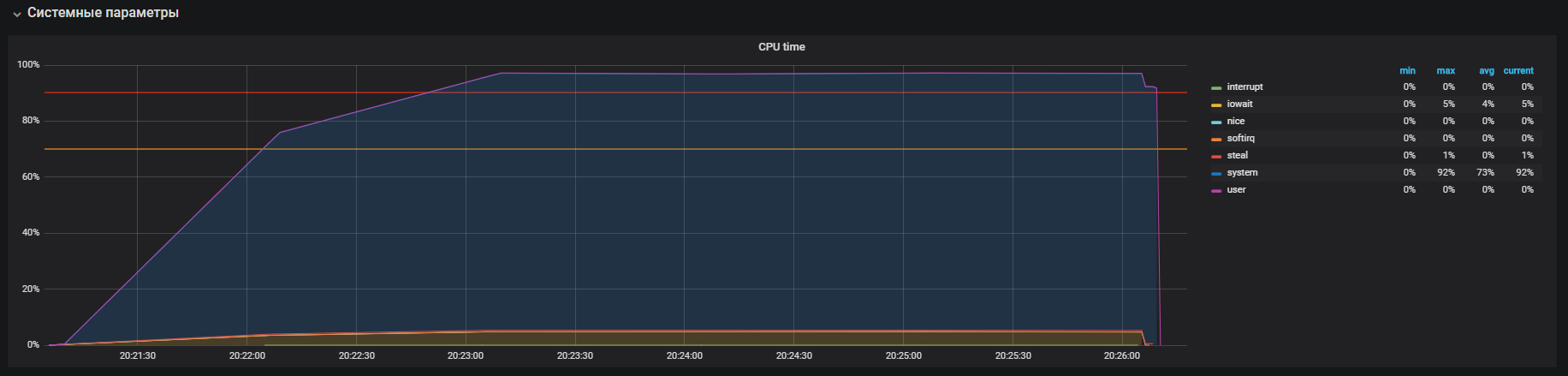

After turning off THP, resource utilization is noticeably lower.

The main problem was localized. The cause was the Transparent Huge Pages mechanism, which is enabled by default in the OS. After disabling THP, CPU utilization in system mode dropped by at least a factor of two, which freed up resources for user mode. During the analysis of the main problem, we also found bottlenecks in the interaction with the OS network stack and Redis, which warrant deeper study. But that is a completely different story.
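To verify which THP mode is active, the same sysfs file can be read back: the kernel marks the current mode with square brackets, for example `always madvise [never]`. The sketch below shows one way to parse it; the function names are hypothetical, and on hosts without THP support the file may not exist.

```python
from pathlib import Path
from typing import Optional

THP_ENABLED = Path("/sys/kernel/mm/transparent_hugepage/enabled")


def active_thp_mode(content: str) -> str:
    """Return the bracketed (active) mode from the sysfs file content."""
    for token in content.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active mode found in {content!r}")


def read_thp_mode(path: Path = THP_ENABLED) -> Optional[str]:
    """Read the active THP mode, or None if the file is absent on this host."""
    if not path.exists():
        return None
    return active_thp_mode(path.read_text())


print(active_thp_mode("always madvise [never]"))  # never
print(read_thp_mode())  # depends on the host
```

A value written with `echo` into sysfs does not survive a reboot, so a check like this is worth running after every restart or configuration rollout.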

Conclusion

In conclusion, I would like to give some tips for tracking down performance problems:

- Before investigating system performance, study its architecture and the interaction of its components carefully.

- Configure and watch monitoring for all system components; if the standard metrics are not enough, dig deeper and extend them.

- Read the manuals for the systems you use.

- Check the default settings in the configuration files of the OS and system components.