How artificial intelligence is changing science

- Transfer

The latest AI algorithms understand the evolution of galaxies, calculate the functions of quantum waves, discover new chemical compounds, and so on. Is there anything in the work of scientists that cannot be automated?

No person or even a group of people can keep up with the waterfall information produced by a huge number of experiments in physics and astronomy. Some of them leave terabytes of data daily, and this flow is only increasing. The Square Kilometre Array antenna array, a radio telescope that they plan to turn on in the mid-2020s, will produce annually a volume of data comparable to the entire Internet.

This flood of data has led many scientists to turn to artificial intelligence (AI) for help. With minimal human involvement, AI systems such as neural networks — computer-simulated networks of neurons that mimic brain function — are able to wade through mountains of data, finding anomalies and recognizing sequences that people would never have noticed.

Of course, the help of computers in scientific research has been used for about 75 years, and the method of manually sorting data in search of meaningful sequences was invented thousands of years ago. But some scholars argue that the latest technology in machine learning and AI represent a fundamentally new way of doing science. One of these approaches, generative modeling (GM), can help determine the most probable theory among competing explanations of observed data, based only on these data, and without any pre-programmed knowledge of what physical processes can occur in the system under study . Proponents of GM consider it innovative enough to be seen as a potential “third way” to study the universe.

Usually we gain knowledge of nature through observation. How Johannes Kepler studied the tables of the position of the planets of Tycho Brahe, trying to find the underlying pattern (he eventually realized that the planets move in elliptical orbits). Science has also moved forward through simulations. An astronomer can simulate the movement of the Milky Way and the neighboring galaxy, Andromeda, and predict that they will collide in a few billion years. Observations and simulations help scientists create hypotheses that can be verified using future observations. GM is different from both of these approaches.

“Essentially, this is the third approach, between observation and simulation,” says Kevin Shavinsky, astrophysicist and one of the most active supporters of GM, until recently, worked at the Swiss Federal Institute of Technology. “This is a different way to attack the task.”

Some scientists consider GM and other technologies to be simply powerful tools for practicing traditional science. But most agree that AI will significantly affect this process, and its role in science will only grow. Brian Nord , an astrophysicist at the Fermi National Accelerator Laboratory who uses artificial neural networks to study space, is one of those who fear that none of the human scientist’s activities will escape automation. “The thought is pretty terrifying,” he said.

Generation discovery

Even at the institute, Shavinsky began to build a reputation in science based on data. While working on his doctorate, he met with the task of classifying thousands of galaxies based on their appearance. There were no ready-made programs for this task, so he decided to organize crowdsourcing for this purpose - that was how the Galactic Zoo project was born . Since 2007, ordinary users have been able to help astronomers make assumptions about which galaxy belongs to which category, and usually most of the voices correctly classified the galaxy. The project was successful, however, as Shavinsky notes, the AI made it pointless: “Today, a talented scientist with experience in the Moscow Region and access to cloud computing can make such a project in half a day.”

Shavinsky turned to GM's new powerful tool in 2016. In fact, the GM asks the question: how likely is it that under condition X we get the result Y? This approach has proven incredibly effective and universal. For example, let's say you fed GM a set of images of human faces, and for each person his age is affixed. The program combs these training data and begins to find a connection between old faces and the increased likelihood of wrinkles appearing on them. As a result, she can give out the age of any given person - that is, to predict what physical changes a given person of any age is likely to undergo.

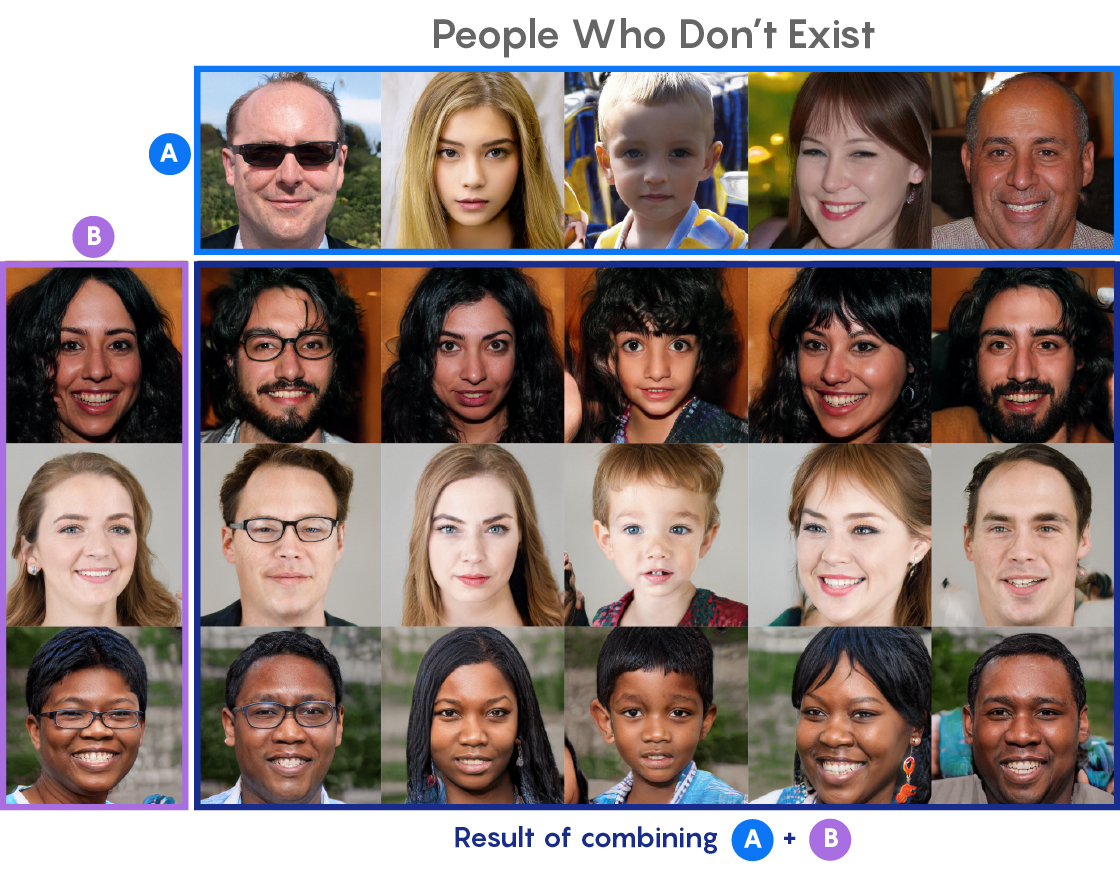

None of these individuals are real. The top row (A) and the left column (B) are created by a generative-adversarial network (GSS) using building blocks derived from elements of real persons. Then the GSS combined the main facial features of series A, including gender, grow and face shape, with smaller facial features of column B, for example, hair and eye color, and created faces in the rest of the table.

Of the GM systems, generative adversarial networks (GSS) are most known. After processing adequate training data, the GSS can restore images with missing or damaged pixels or make blurry photos clear. GSSs are trained to extract the missing information on the basis of competition (hence the "adversarial"): one part of the network, the generator, generates false data, and the second, the discriminator, tries to distinguish false data from real ones. While the program is running, both parts of it gradually work better. You may have seen some super-realistic "faces" created by the GSS - images of "incredibly realistic people who do not exist in reality," as they wrote in one of the headlines.

In a more general case, a GM takes a dataset (usually images, but not necessary), and breaks them into subsets of the basic abstract building blocks - scientists call them the "hidden space" of the data. The algorithm manipulates elements of the hidden space to see how this will affect the initial data, which helps to reveal the physical processes that ensure the operation of the system.

The idea of hidden space is abstract and hard to imagine, but as a crude analogy, think about what your brain can do when you try to determine the gender of a person by face. Perhaps you notice a hairstyle, a nose shape, and so on, as well as patterns that are not easy to describe in words. A computer program also looks for hidden signs in the data: although it has no idea what a mustache or gender is, if it was trained on a data set in which some images are labeled “man” or “woman”, and some have the label “mustache” ", She will quickly understand the relationship.

Kevin Shavinsky, astrophysicist, head of AI-company Modulos

In a paper published in December in the journal Astronomy & Astrophysics, Shavinsky and his colleagues, Denis Tharp and Che Zhen, used GM to study the physical changes in galaxies during evolution (the software they use calculates the hidden space a bit differently from GSS, so it’s technically impossible call GSS, although it is quite close in properties). Their model created artificial data sets to test hypotheses about physical processes. They, for example, asked how the “attenuation” of star formation — a sharp decrease in the speed of their formation — is associated with an increase in the density of the galaxy.

For Shavinsky, the key question is how much information about stellar and galactic processes can be extracted on the basis of only one data. “Exclude everything we know about astrophysics,” he said. “To what extent can we rediscover this knowledge using only data?”

First, galaxy images were reduced to hidden space; then Shavinsky could correct one element of this space so that it corresponds to a certain change in the environment of the galaxy - for example, the density of its environment. Then he could regenerate the galaxy and see what differences would appear this time. “And now I have a machine for generating hypotheses,” he explained. “I can take a bunch of galaxies that were originally surrounded by low density, and make it seem like their density is high.” Shavinsky, Tarp and Zhen found that moving from a lower to a higher density of the environment they become redder, and their stars are concentrated more densely. This is consistent with existing observations of galaxies, said Shavinsky. The only question is why.

The next step, says Shavinsky, has not yet been automated. “I, man, need to intervene and say: Well, what kind of physics can explain this effect?” There are two possible explanations for this process: it is possible that galaxies become redder in denser environments because they contain more dust, or because there is a decline in the formation of stars (in other words, their stars are usually older). Using the generative model, we can test both ideas. We change the elements of hidden space associated with dust and the speed of star formation, and see how this affects the color of galaxies. “And the answer is clear,” said Shavinsky. Redder galaxies are those "where the speed of star formation has fallen, and not those where there is more dust. Therefore, we are inclined in favor of the first explanation. ”

The top row are real galaxies in low-density regions.

Second row - reconstruction based on hidden space.

Next come the transformations made by the network, and below are the generated galaxies in high-density regions.

The approach is associated with traditional simulations, but has cardinal differences. The simulation, in fact, "is based on assumptions," said Shavinsky. “This one is the same as saying:“ I think I understood what physical fundamentals underlie everything that I observe in the system. ” I have a recipe for forming stars, for the behavior of dark matter, and so on. I place all my hypotheses and start the simulation. And then I ask: Does this look like reality? ” And with generative modeling, this, he said, looks “in a sense, the exact opposite of simulation.” We do not know anything, we do not want to assume anything. We want the data to tell us what can happen. ”

The apparent success of generative modeling in such a study, obviously, does not mean that astronomers and graduate students are no longer needed - but it seems to demonstrate a shift in the degree to which AI can learn anything about astrophysical objects and processes, having almost only a huge amount of data. “This is not a fully automated science, but it demonstrates that we are able to create tools that automate scientific progress at least partially,” said Shavinsky.

Generative modeling is obviously capable of much - but whether it really represents a new approach to science, this is a moot point. For David Hogg, a cosmologist from New York University and the Flatiron Institute, this technology, although impressive, is, in fact, a very complex way to extract sequences from data - and astronomers have been doing this for centuries. In other words, it is an advanced method of observation and analysis. The work of Hogg, like Shavinsky, is highly dependent on AI; he uses neural networks to classify stars by spectrum and draw conclusions about other physical propertiesstars using data-driven models. But he considers his work, and the work of Shavinsky, to be an old, kind, proven scientific method. “I don't think this is the third way,” he said recently. “I just think that we, as a community, are increasingly using our data. In particular, we are much better at comparing data. But from my point of view, my work fits perfectly into the framework of the observation regime. ”

Zealous assistants

Whether AI and neural networks are conceptually new tools or not, it is obvious that they began to play a critical role in modern astronomy and physical research. At the Heidelberg Institute for Theoretical Research, physicist Kai Polsterer leads a group on astroinformatics - a team of researchers working with new methods in astrophysics based on data processing. They recently used an algorithm with MOs to extract redshift information from galaxy datasets — a task that used to be debilitating.

Polsterer considers these new AI-based systems “zealous assistants,” capable of combing data for hours without getting bored and complaining about working conditions. These systems can do all the monotonous and hard work, he said, leaving us with a “cool, interesting science."

But they are not perfect. In particular, Polsterer warns, algorithms can only do what they have been trained. The system is indifferent to the input. Give her a galaxy and she will be able to appreciate her redshift and age. But give her a selfie or a photo of rotten fish, she will appreciate their age (naturally, wrong). In the end, he said, people’s oversight remains necessary. “Everything closes on us, the researchers. We are responsible for the interpretation. ”

For his part, Nord from Fermilab warns that it is important that the neural networks produce not only results, but also work errors, as any student is accustomed to. It is so accepted in science that if you take a measurement but don’t give an error, no one will take your results seriously.

Like many AI researchers, Nord is also worried that the results from neural networks are hard to understand; the neural network gives an answer without providing a clear way to get it.

However, not everyone believes that a lack of transparency is a problem. Lenka Zdeborova, a researcher at the Institute of Theoretical Physics in France, points out that human intuition is also sometimes impossible to understand. You look at the photo and find out that the cat is depicted on it - “but you don’t know how you know this,” she says. “Your brain, in a way, is also a black box.”

Not only astrophysicists and cosmologists migrate to the side of science using AI and data processing. Quantum physics specialist Roger Melko of the Institute for Theoretical Physics of Perimeter and the University of Waterloo used a neural network to solve some of the most complex and important problems in this area, for example, representing a wave function describing a system of many particles. AI is necessary because of what Melko calls the “exponential curse of dimension”. That is, the number of possible forms of the wave function increases exponentially with an increase in the number of particles in the described system. The difficulty is similar to trying to choose the best move in a game such as chess or go: you try to calculate the next move by imagining how your opponent will go and choose the best answer, but with each move the number of opportunities grows.

Of course, AI mastered both of these games, learning to play chess a few decades ago, and beating the best go player in 2016 - this was done by the AlphaGo system. She finely says that they are also well adapted to the problems of quantum physics.

Machine mind

Whether Shavinsky is right in declaring that he has found a “third way” to engage in science, or, as Hogg says, these are just traditional observations and data analysis “on steroids,” it is clear that AI changes the essence of a scientific discovery and clearly accelerates it. How far will the AI revolution go in science?

Periodically loud statements are made about the achievements of the "robo-scientists." Ten years ago, the Adam robot chemist examined the yeast genome and determined which genes are responsible for the production of certain amino acids. He did this by observing yeast strains that lacked certain genes and comparing the results of their behavior with each other. Wired magazine wrote, "The robot made a scientific discovery on its own ."

A little later, Lee Cronin, a chemist at Glazko University, used a robotto randomly mix chemicals to see if any new compounds appear. By tracking reactions in real time using a mass spectrometer, a nuclear magnetic resonance machine, and an infrared spectrometer, the system eventually learned to predict the most reactive combinations. Even though this did not lead to discoveries, Cronin said, a robotic system could allow chemists to speed up their research by 90%.

Last year another team of scientists from Zurich used neural networksto derive physical laws based on data sets. Their system, a kind of robotic Kepler, rediscovered the heliocentric model of the solar system, based on records of the location of the Sun and Mars in the sky visible from Earth, and also deduced the law of conservation of momentum from observations of collisions of balls. Since physical laws can often be expressed in several ways, researchers are interested in whether this system can offer new, and possibly simpler ways of working with known laws.

All these are examples of how AI accelerates scientific discoveries, although in each case it can be argued how revolutionary the new approach was. Perhaps the most controversial will be the question of how much information can be obtained from data alone - an important issue in the era of vast, ever-growing, mountains of data. In The Book of Why, 2018, computer science specialist Jadi Pearl and popular science writer Dana Mackenzie suggest that data is an “incredibly dumb” thing. Questions about causation "can never be answered based solely on data," they write. “Each time you see a work or study analyzing data without taking models into account, you can be sure that the output of this work sums up, and possibly transforms, but does not interpret the data.” Shawinsky sympathizes with Pearl’s position, but he describes the idea of working only with data as something like a “little man of dashes”. He said that he had never stated the possibility of deriving causes and effects from the data. "I just said that we can do much more with data than is usually the case."

Another common argument is that creativity is needed for science, and at least for now, we have no idea how to program it. A simple enumeration of all the possibilities, as the robot chemist Cronin did, does not look particularly creative. “I think that in order to come up with a theory, logical constructions, creativity is required,” said Polsterer. “Every time you need creativity, you need a person.” And where does creativity come from? Polsterer suspects that it is connected with boredom - the fact that, according to him, the car was not given to test. “To be creative, one must not love boredom. And I don’t think that the computer will ever get bored. " On the other hand, words like “creativity” and “inspiration” are often used to describe programs such as Deep Blue and AlphaGo. And futile attempts to describe

Shavinsky recently left academia in favor of the commercial sector; He now runs the Modulos startup, where many scientists from the Swiss Technical Institute work, and, according to their website, “works in the eye of a storm of developments in the field of AI and machine learning.” Whatever obstacles lie between modern AI and full-fledged artificial intelligence, he and other experts believe that machines are destined to do more and more work of scientists. Are there limits to this, we only have to find out.

“Will it be possible in the foreseeable future to create a machine capable of making discoveries in physics or mathematics that the smartest of living people using biological equipment are not capable of? - thinks Shavinsky. - Will the science of the future develop thanks to machines operating at a level inaccessible to us? I dont know. This is a good question".