Long-distance machine orientation using reinforced learning

- Transfer

In the United States alone, there are 3 million people with disabilities who cannot leave their homes. Helper robots that can automatically navigate long distances can make these people more independent by bringing them food, medicine, and packages. Studies show that deep learning with reinforcement (OP) is well suited for comparing raw input and actions, for example, for learning to capture objects or move robots , but usually OP agents lack the understanding of large physical spaces necessary for safe orientation to distant distances without human help and adaptation to a new environment.

In three recent works, "Orienteering training from scratch with AOP "," PRM-RL: implementation of robotic orientation at long distances by using samples on the basis of a combination of reinforcement learning and planning "and" Orientation at long distances from the PRM-RL", we study autonomous robots that adapt easily to a new environment, combining deep OP with long-term planning. We train local scheduling agents to perform the basic actions necessary for orientation, and to move short distances without collisions with moving objects. Local planners make noisy observations the environment using sensors such as one-dimensional lidars that provide distance to an obstacle and provide linear and angular velocities for controlling the robot. eat local scheduler in simulations using automatic reinforcement learning (AOP), the method for automating searches awards OP and architecture of the neural network. Despite the limited range, 10-15 m, the local schedulers are well adapted for use in both real robots and to new, previously unknown environments. This allows you to use them as building blocks for orientation on large spaces. Then we build a road map, a graph where the nodes are separate sections, and the edges connect the nodes only if local planners, well imitating real robots using noisy sensors and controls, can move between them.

In our first work, we train a local planner in a small static environment. However, when learning with the standard deep OP algorithm, for example, the deep deterministic gradient ( DDPG ), there are several obstacles. For example, the real goal of local planners is to achieve a given goal, as a result of which they receive rare rewards. In practice, this requires researchers to spend considerable time on step-by-step implementation of the algorithm and manual adjustment of awards. Researchers also have to make decisions about the architecture of neural networks without having clear, successful recipes. Finally, algorithms such as DDPG learn unstably and often exhibit catastrophic forgetfulness .

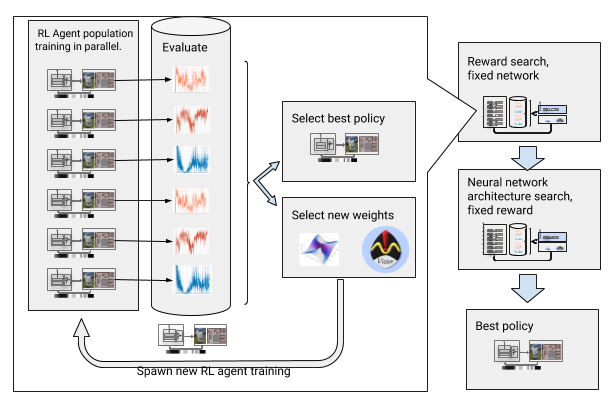

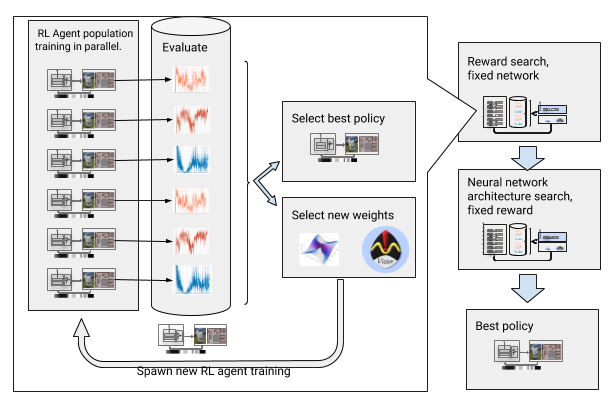

To overcome these obstacles, we automated deep learning with reinforcement. AOP is an evolutionary automatic wrapper around deep OP, looking for rewards and neural network architecture through large-scale hyperparameter optimization. It works in two stages, the search for rewards and the search for architecture. During the search for rewards, AOP has been simultaneously training the DDPG agent population for several generations, each of them having their own slightly changed reward function, optimized for the true task of the local planner: reaching the endpoint of the path. At the end of the reward search phase, we select one that most often leads agents to the goal. In the search phase of neural network architecture, we repeat this process, for this race using the selected award and adjusting the network layers, optimizing the cumulative award.

AOP with the search for award and architecture of the neural network

However, this step-by-step process makes AOP ineffective in terms of the number of samples. AOP training with 10 generations of 100 agents requires 5 billion samples, equivalent to 32 years of study! The advantage is that after AOP, the manual learning process is automated, and DDPG does not have catastrophic forgetting. Most importantly, the quality of the final policies is higher - they are resistant to noise from the sensor, drive and localization, and are well generalized to new environments. Our best policy is 26% more successful than other orientation methods at our test sites.

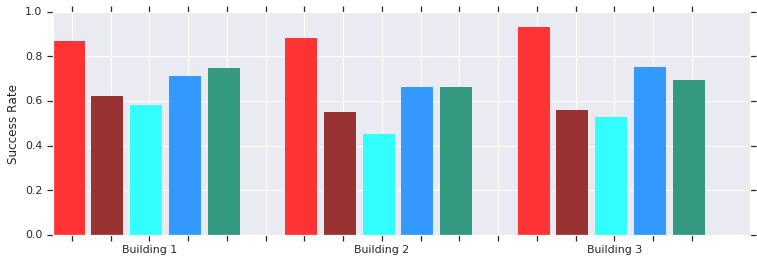

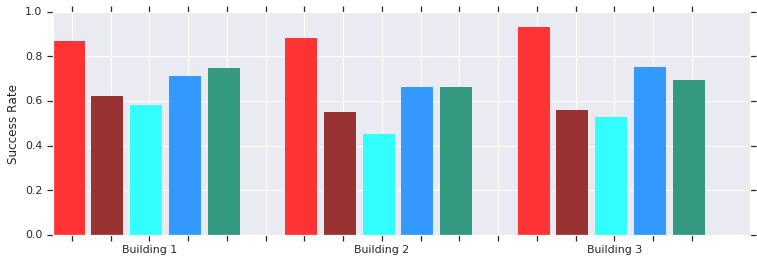

Red - AOP successes at short distances (up to 10 m) in several previously unknown buildings. Comparison with manually trained DDPG (dark red), artificial potential fields (blue), dynamic window (blue) and behavior cloning (green).

The local AOP scheduler policy works well with robots in real unstructured environments

. Although these policies can only be locally oriented, they are resistant to moving obstacles and are well tolerated for real robots in unstructured environments. And although they were trained in simulations with static objects, they effectively cope with moving ones. The next step is to combine AOP policies with sample-based planning in order to expand their area of work and teach them how to navigate long distances.

Pattern-based planners work with long-range orientation, approximating robot movements. For example, a robot builds probabilistic roadmaps (PRMs) by drawing transition paths between sections. In our second work , which won the award at the ICRA 2018 conference , we combine PRM with manually tuned local OP-schedulers (without AOP) to train robots locally and then adapt them to other environments.

First, for each robot, we train the local scheduler policy in a generalized simulation. Then we create a PRM taking into account this policy, the so-called PRM-RL, based on a map of the environment where it will be used. The same card can be used for any robot that we wish to use in the building.

To create a PRM-RL, we combine nodes from samples only if the local OP-scheduler can reliably and repeatedly move between them. This is done in a Monte Carlo simulation. The resulting map adapts to the capabilities and geometry of a particular robot. Cards for robots with the same geometry, but with different sensors and drives, will have different connectivity. Since the agent can rotate around the corner, nodes that are not in direct line of sight can also be turned on. However, nodes adjacent to walls and obstacles will be less likely to be included in the map due to sensor noise. At run time, the OP agent moves across the map from one section to another.

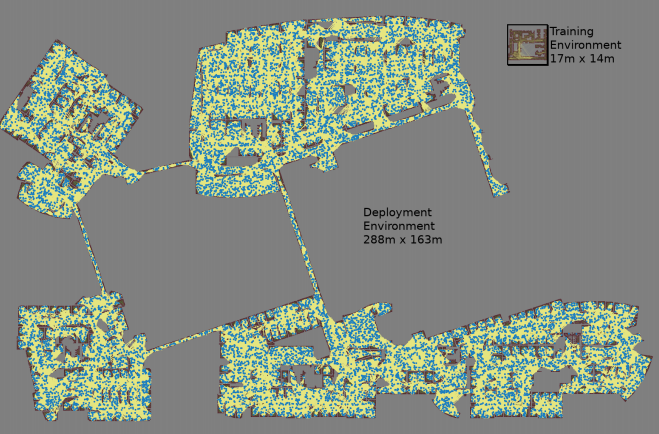

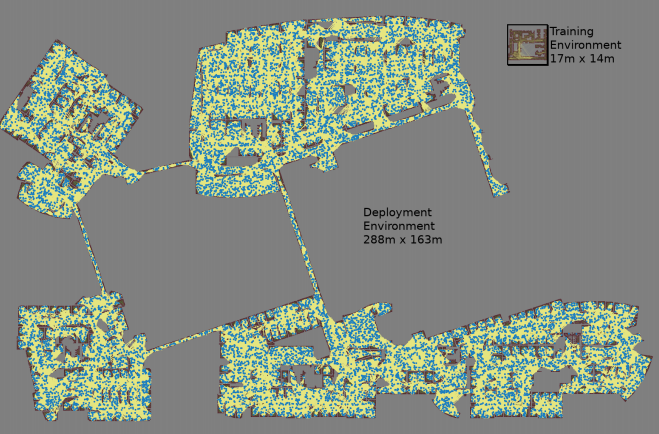

A map is created with three Monte Carlo simulations for each randomly selected pair of nodes

The largest map was 288x163 m in size and contained almost 700,000 edges. 300 workers collected it for 4 days, having carried out 1.1 billion collision checks.

The third work provides several improvements to the original PRM-RL. Firstly, we are replacing the manually tuned DDPG with local AOP schedulers, which gives an improvement in orientation over long distances. Secondly, maps of simultaneous localization and markup are added ( SLAM) that robots use at runtime as a source for building roadmaps. SLAM cards are subject to noise, and this closes the “gap between the simulator and reality”, a well-known problem in robotics, due to which agents trained in simulations behave much worse in the real world. Our level of success in the simulation coincides with the level of success of real robots. And finally, we added distributed building maps, so we can create very large maps containing up to 700,000 nodes.

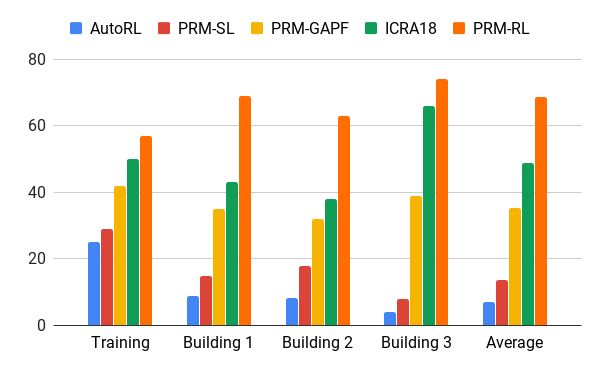

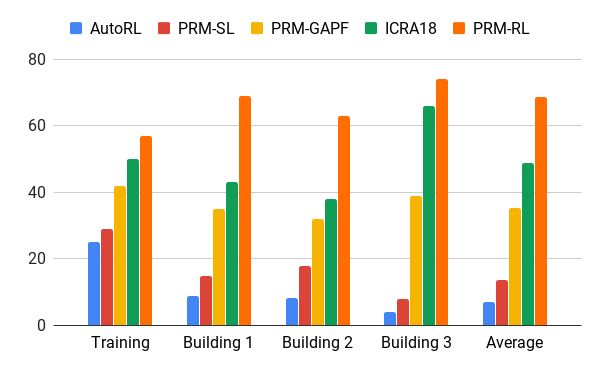

We evaluated this method using our AOP agent, who created maps based on drawings of buildings that exceeded the training environment by 200 times in area, including only the ribs, which were successfully completed in 90% of cases in 20 attempts. We compared PRM-RL with various methods at distances up to 100 m, which significantly exceeded the range of the local planner. PRM-RL achieved success 2-3 times more often than conventional methods due to the correct connection of nodes, suitable for the capabilities of the robot.

Success rate in moving 100 m in different buildings. Blue - local AOP scheduler, first job; red - original PRM; yellow - artificial potential fields; green is the second job; red - the third job, PRM with AOP.

We tested PRM-RL on many real robots in many buildings. Below is one of the test suites; the robot reliably moves almost everywhere, except for the most messy places and areas that go beyond the SLAM card.

Machine orientation can seriously increase the independence of people with mobility impairments. This can be achieved by developing autonomous robots that can easily adapt to the environment, and the methods available for implementation in the new environment based on existing information. This can be done by automating basic orientation training for short distances with AOP, and then using the acquired skills together with SLAM cards to create roadmaps. Roadmaps consist of nodes connected by ribs, on which robots can reliably move. As a result, a robot behavior policy is developed that, after one training, can be used in different environments and issue roadmaps specially adapted for a particular robot.

In three recent works, "Orienteering training from scratch with AOP "," PRM-RL: implementation of robotic orientation at long distances by using samples on the basis of a combination of reinforcement learning and planning "and" Orientation at long distances from the PRM-RL", we study autonomous robots that adapt easily to a new environment, combining deep OP with long-term planning. We train local scheduling agents to perform the basic actions necessary for orientation, and to move short distances without collisions with moving objects. Local planners make noisy observations the environment using sensors such as one-dimensional lidars that provide distance to an obstacle and provide linear and angular velocities for controlling the robot. eat local scheduler in simulations using automatic reinforcement learning (AOP), the method for automating searches awards OP and architecture of the neural network. Despite the limited range, 10-15 m, the local schedulers are well adapted for use in both real robots and to new, previously unknown environments. This allows you to use them as building blocks for orientation on large spaces. Then we build a road map, a graph where the nodes are separate sections, and the edges connect the nodes only if local planners, well imitating real robots using noisy sensors and controls, can move between them.

Automatic reinforcement learning (AOP)

In our first work, we train a local planner in a small static environment. However, when learning with the standard deep OP algorithm, for example, the deep deterministic gradient ( DDPG ), there are several obstacles. For example, the real goal of local planners is to achieve a given goal, as a result of which they receive rare rewards. In practice, this requires researchers to spend considerable time on step-by-step implementation of the algorithm and manual adjustment of awards. Researchers also have to make decisions about the architecture of neural networks without having clear, successful recipes. Finally, algorithms such as DDPG learn unstably and often exhibit catastrophic forgetfulness .

To overcome these obstacles, we automated deep learning with reinforcement. AOP is an evolutionary automatic wrapper around deep OP, looking for rewards and neural network architecture through large-scale hyperparameter optimization. It works in two stages, the search for rewards and the search for architecture. During the search for rewards, AOP has been simultaneously training the DDPG agent population for several generations, each of them having their own slightly changed reward function, optimized for the true task of the local planner: reaching the endpoint of the path. At the end of the reward search phase, we select one that most often leads agents to the goal. In the search phase of neural network architecture, we repeat this process, for this race using the selected award and adjusting the network layers, optimizing the cumulative award.

AOP with the search for award and architecture of the neural network

However, this step-by-step process makes AOP ineffective in terms of the number of samples. AOP training with 10 generations of 100 agents requires 5 billion samples, equivalent to 32 years of study! The advantage is that after AOP, the manual learning process is automated, and DDPG does not have catastrophic forgetting. Most importantly, the quality of the final policies is higher - they are resistant to noise from the sensor, drive and localization, and are well generalized to new environments. Our best policy is 26% more successful than other orientation methods at our test sites.

Red - AOP successes at short distances (up to 10 m) in several previously unknown buildings. Comparison with manually trained DDPG (dark red), artificial potential fields (blue), dynamic window (blue) and behavior cloning (green).

The local AOP scheduler policy works well with robots in real unstructured environments

. Although these policies can only be locally oriented, they are resistant to moving obstacles and are well tolerated for real robots in unstructured environments. And although they were trained in simulations with static objects, they effectively cope with moving ones. The next step is to combine AOP policies with sample-based planning in order to expand their area of work and teach them how to navigate long distances.

Long-distance orientation with PRM-RL

Pattern-based planners work with long-range orientation, approximating robot movements. For example, a robot builds probabilistic roadmaps (PRMs) by drawing transition paths between sections. In our second work , which won the award at the ICRA 2018 conference , we combine PRM with manually tuned local OP-schedulers (without AOP) to train robots locally and then adapt them to other environments.

First, for each robot, we train the local scheduler policy in a generalized simulation. Then we create a PRM taking into account this policy, the so-called PRM-RL, based on a map of the environment where it will be used. The same card can be used for any robot that we wish to use in the building.

To create a PRM-RL, we combine nodes from samples only if the local OP-scheduler can reliably and repeatedly move between them. This is done in a Monte Carlo simulation. The resulting map adapts to the capabilities and geometry of a particular robot. Cards for robots with the same geometry, but with different sensors and drives, will have different connectivity. Since the agent can rotate around the corner, nodes that are not in direct line of sight can also be turned on. However, nodes adjacent to walls and obstacles will be less likely to be included in the map due to sensor noise. At run time, the OP agent moves across the map from one section to another.

A map is created with three Monte Carlo simulations for each randomly selected pair of nodes

The largest map was 288x163 m in size and contained almost 700,000 edges. 300 workers collected it for 4 days, having carried out 1.1 billion collision checks.

The third work provides several improvements to the original PRM-RL. Firstly, we are replacing the manually tuned DDPG with local AOP schedulers, which gives an improvement in orientation over long distances. Secondly, maps of simultaneous localization and markup are added ( SLAM) that robots use at runtime as a source for building roadmaps. SLAM cards are subject to noise, and this closes the “gap between the simulator and reality”, a well-known problem in robotics, due to which agents trained in simulations behave much worse in the real world. Our level of success in the simulation coincides with the level of success of real robots. And finally, we added distributed building maps, so we can create very large maps containing up to 700,000 nodes.

We evaluated this method using our AOP agent, who created maps based on drawings of buildings that exceeded the training environment by 200 times in area, including only the ribs, which were successfully completed in 90% of cases in 20 attempts. We compared PRM-RL with various methods at distances up to 100 m, which significantly exceeded the range of the local planner. PRM-RL achieved success 2-3 times more often than conventional methods due to the correct connection of nodes, suitable for the capabilities of the robot.

Success rate in moving 100 m in different buildings. Blue - local AOP scheduler, first job; red - original PRM; yellow - artificial potential fields; green is the second job; red - the third job, PRM with AOP.

We tested PRM-RL on many real robots in many buildings. Below is one of the test suites; the robot reliably moves almost everywhere, except for the most messy places and areas that go beyond the SLAM card.

Conclusion

Machine orientation can seriously increase the independence of people with mobility impairments. This can be achieved by developing autonomous robots that can easily adapt to the environment, and the methods available for implementation in the new environment based on existing information. This can be done by automating basic orientation training for short distances with AOP, and then using the acquired skills together with SLAM cards to create roadmaps. Roadmaps consist of nodes connected by ribs, on which robots can reliably move. As a result, a robot behavior policy is developed that, after one training, can be used in different environments and issue roadmaps specially adapted for a particular robot.