Server analytics systems

This is the second part of a series of articles on analytics systems (link to part 1).

Today there is no doubt that accurate data processing and interpretation of the results can help almost any type of business. In this regard, analytical systems are becoming increasingly loaded with parameters, the number of triggers and user events in applications is growing.

Because of this, companies give their analysts more and more “raw” information for analysis and turning it into the right decisions. The importance of the analytics system for the company should not be underestimated, and the system itself must be reliable and stable.

Client analytics is a service that a company connects to its website or application through the official SDK, integrates into its own code base and selects event triggers. This approach has an obvious drawback: all the collected data cannot be fully processed as you would like, due to the limitations of any selected service. For example, in one system it will not be easy to run MapReduce tasks, in another you will not be able to run your model. Another drawback will be a regular (impressive) bill for services.

There are many client analytics solutions on the market, but sooner or later, analysts are faced with the fact that there is no one universal service suitable for any task (while prices for all these services are constantly growing). In this situation, companies often decide to create their own analytics system with all the necessary custom settings and capabilities.

Server analytics is a service that can be deployed internally in a company on its own servers and (usually) by its own efforts. In this model, all user events are stored on internal servers, allowing developers to try different databases for storage and choose the most convenient architecture. And even if you still want to use third-party client analytics for some tasks, it will still be possible.

Server analytics can be deployed in two ways. First: select some open source utilities, deploy on your machines and develop business logic.

Second: take SaaS services (Amazon, Google, Azure) instead of deploying it yourself. About SaaS in more detail we will tell in the third part.

If we want to get away from using client analytics and assemble our own, first of all we need to think over the architecture of the new system. Below I will tell you step by step what to consider, why each of the steps is needed and what tools can be used.

Just as in the case of client analytics, first of all, company analysts choose the types of events that they want to study in the future and collect them into a list. Usually, these events take place in a certain order, which is called the “event pattern”.

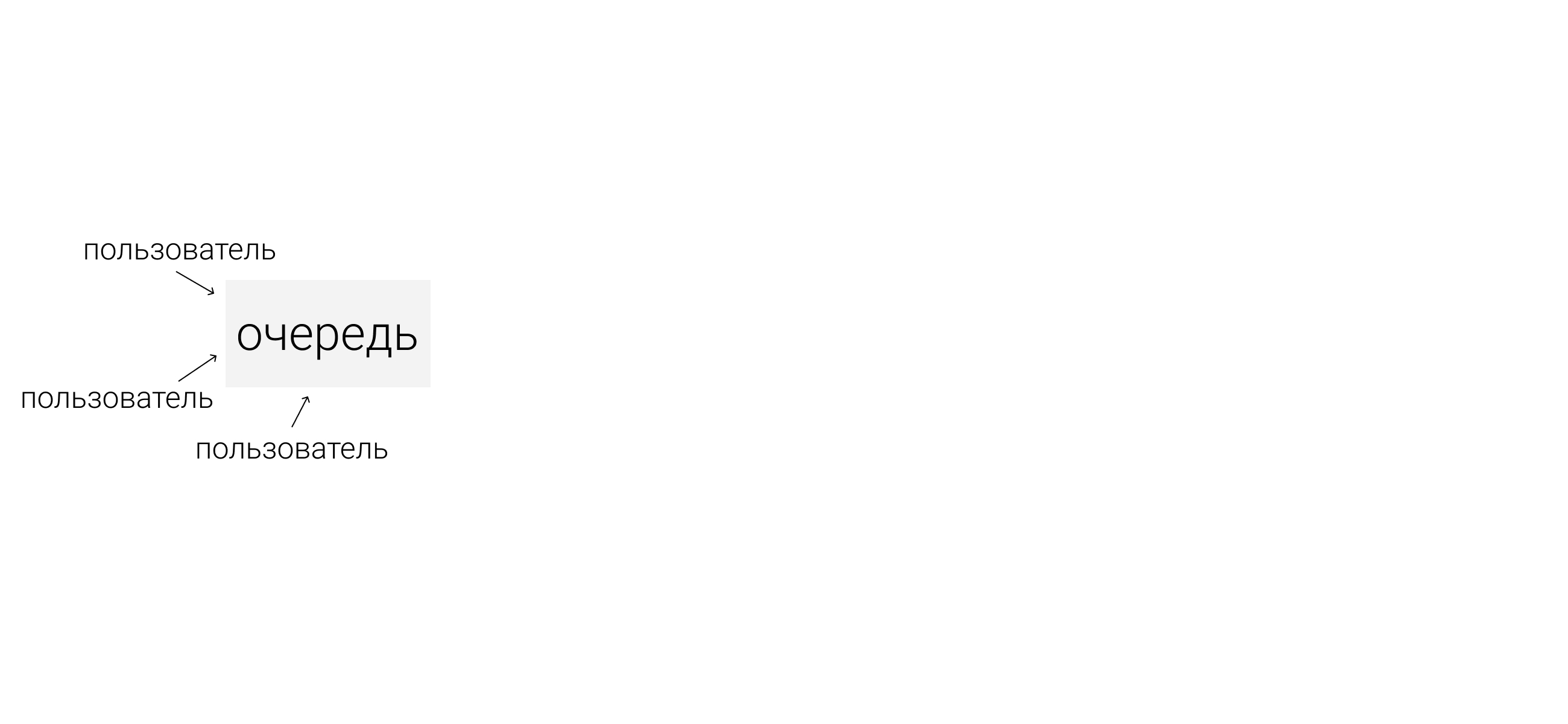

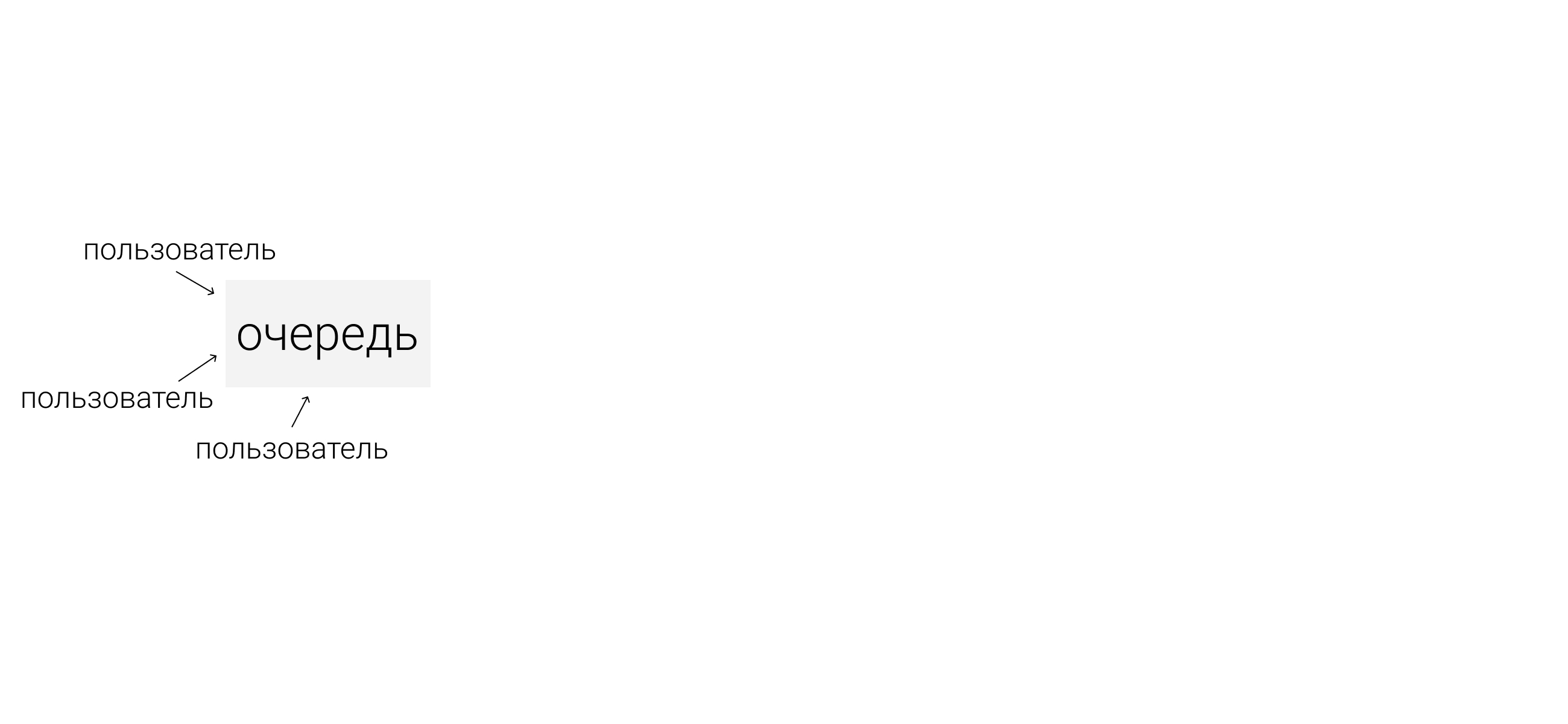

Next, imagine that a mobile application (website) has regular users (devices) and many servers. To securely transfer events from devices to servers, an intermediate layer is needed. Depending on the architecture, several different event queues may occur.

Apache Kafka is a pub / sub queue that is used as a queue for collecting events.

In our example, there are many data producers and their consumers (devices and servers), and Kafka helps to connect them to each other. Consumers will be described in more detail in the next steps, where they will be the main actors. Now we will consider only data producers (events).

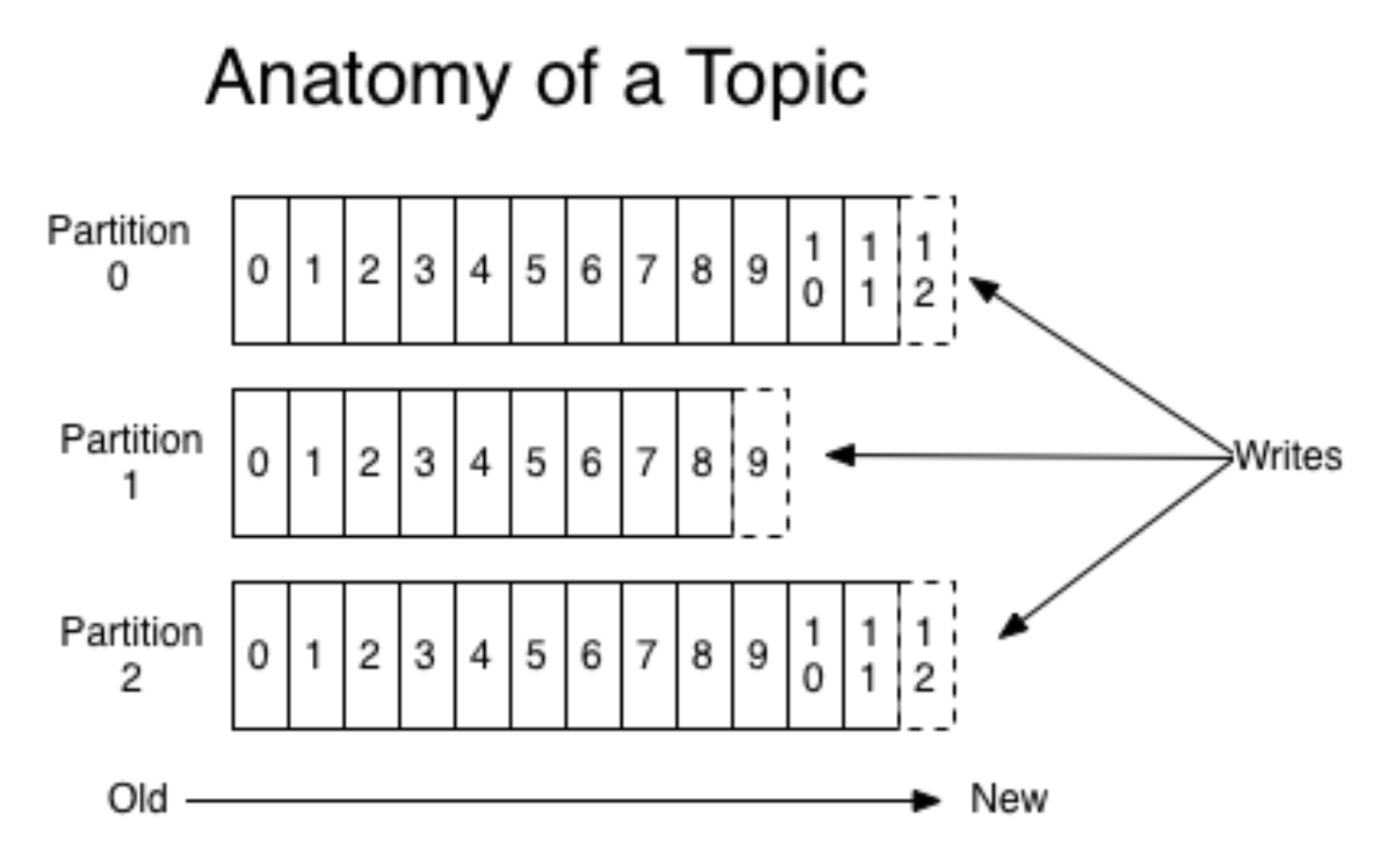

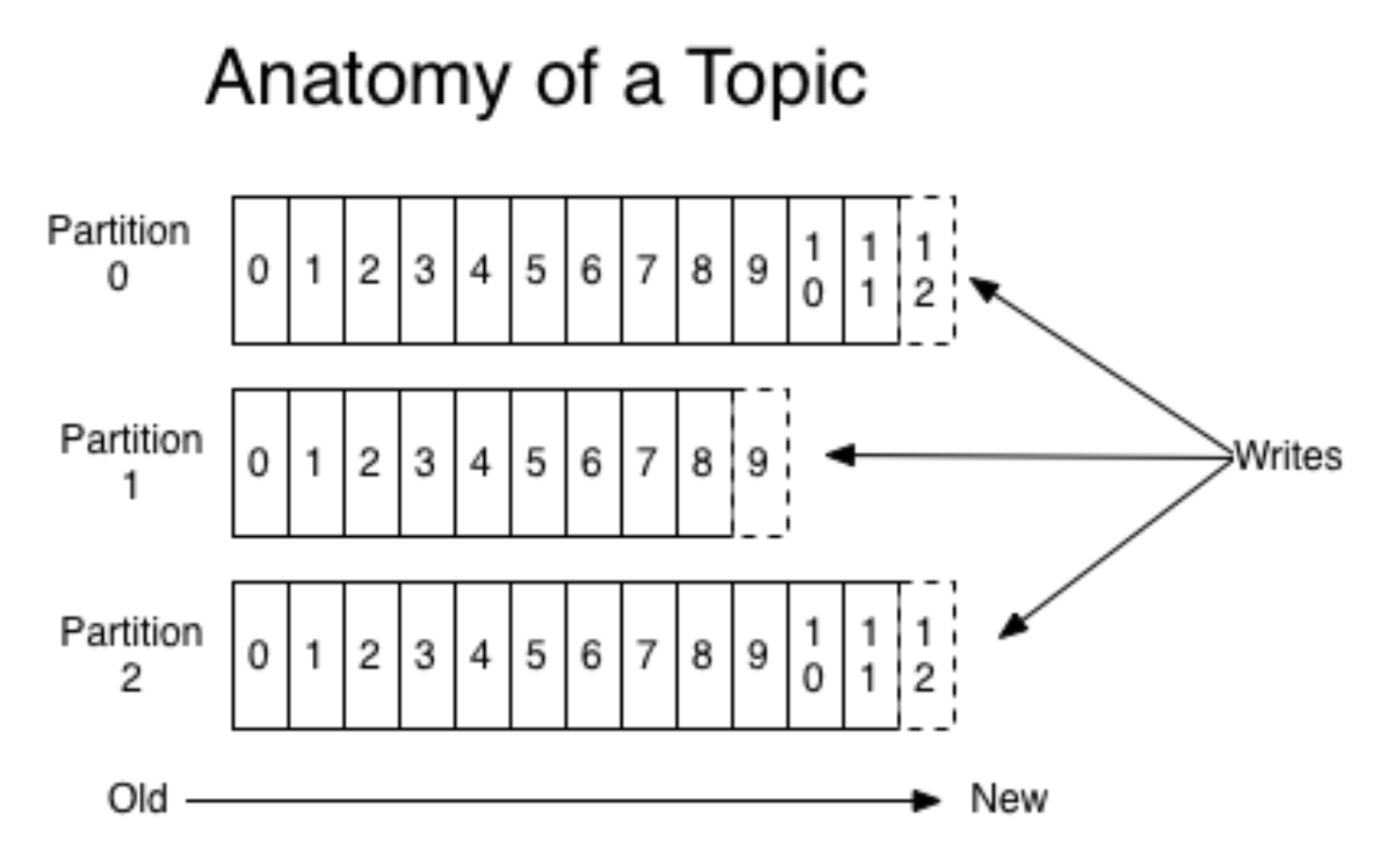

Kafka encapsulates the concepts of queue and partition; more specifically, it is better to read about it elsewhere (for example, in the documentation ). Without going into details, imagine that a mobile application is launched for two different OS. Then each version creates its own separate stream of events. Producers send events to Kafka, they are recorded in a suitable queue.

(picture from here )

At the same time, Kafka allows you to read in pieces and process the flow of events with mini-bats. Kafka is a very convenient tool that scales well with growing needs (for example, by geolocation of events).

Usually one shard is enough, but things become more difficult due to scaling (as always). Probably no one will want to use only one physical shard in production, since the architecture must be fault tolerant. In addition to Kafka, there is another well-known solution - RabbitMQ. We did not use it in production as a queue for event analytics (if you have such an experience, tell us about it in the comments!). However, they used AWS Kinesis.

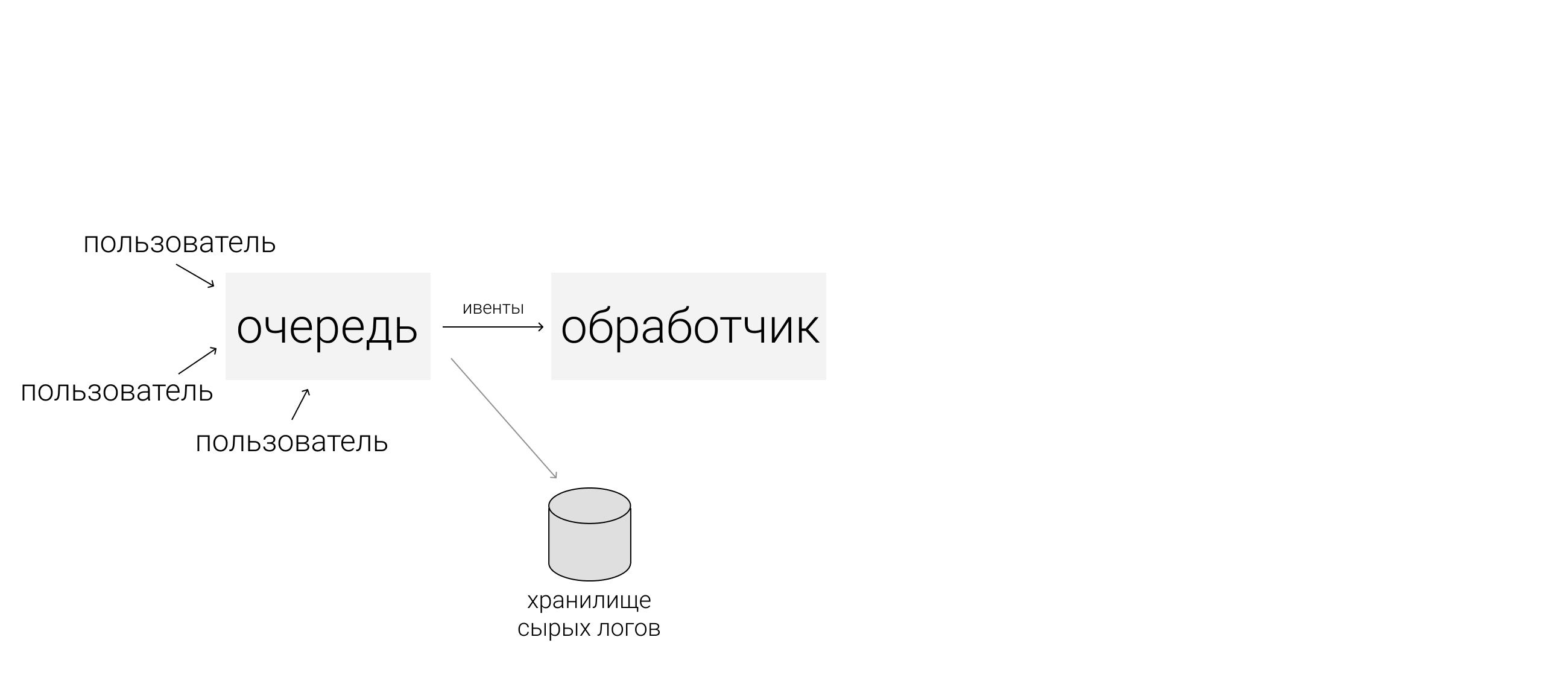

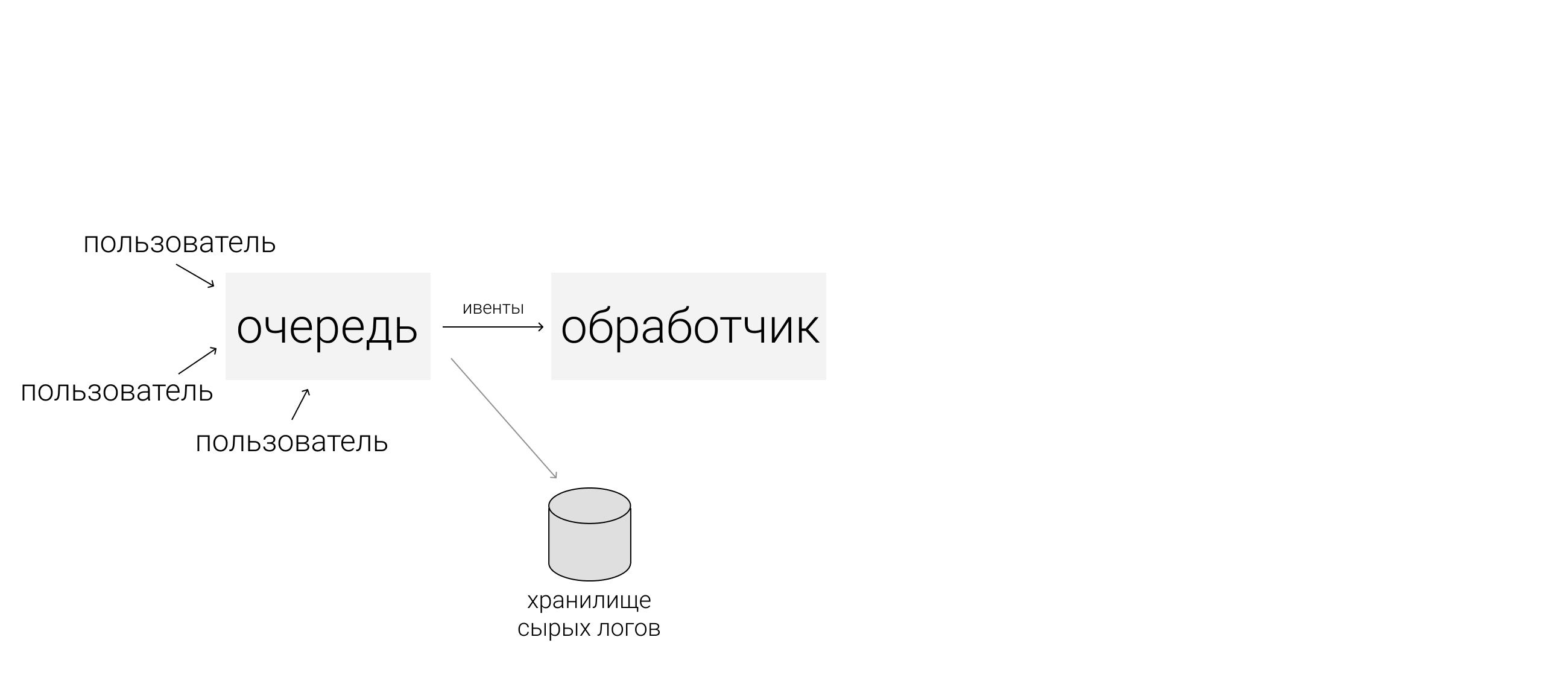

Before proceeding to the next step, we need to mention one more additional layer of the system - the storage of raw logs. This is not a required layer, but it will be useful if something goes wrong and the queues of events in Kafka are reset. Storage of raw logs does not require a complicated and expensive solution; you can simply record them somewhere in the correct order (even on a hard drive).

After we have prepared all the events and placed them in suitable queues, we proceed to the processing step. Here I will talk about the two most common processing options.

The first option is to enable Spark Streaming on the Apache system. All Apache products live in HDFS, a secure file replica file system. Spark Streaming is an easy-to-use tool that processes streaming data and scales well. However, it can be a little difficult to maintain.

Another option is to build your own event handler. To do this, for example, you need to write a Python application, build it in the docker and subscribe to the Kafka queue. When triggers arrive on the handlers in the docker, processing will start. With this method, you need to keep constantly running applications.

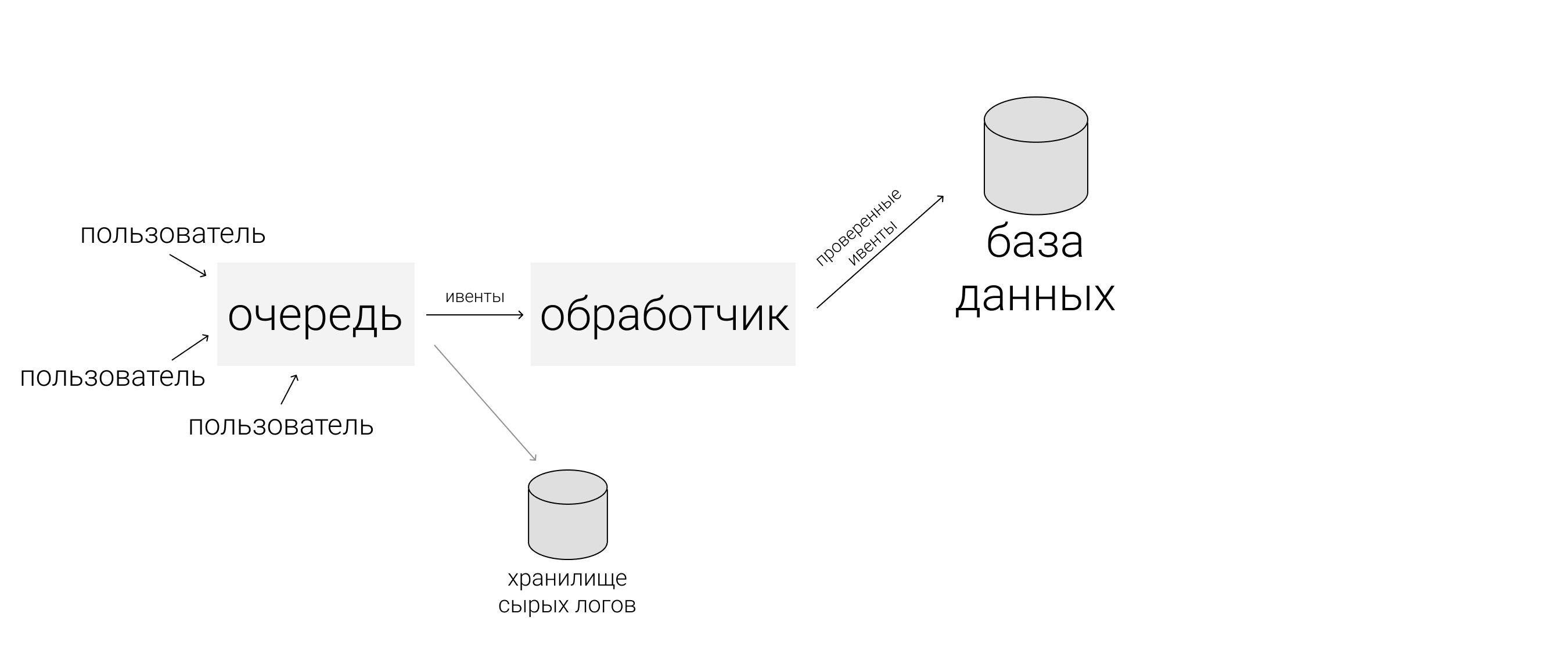

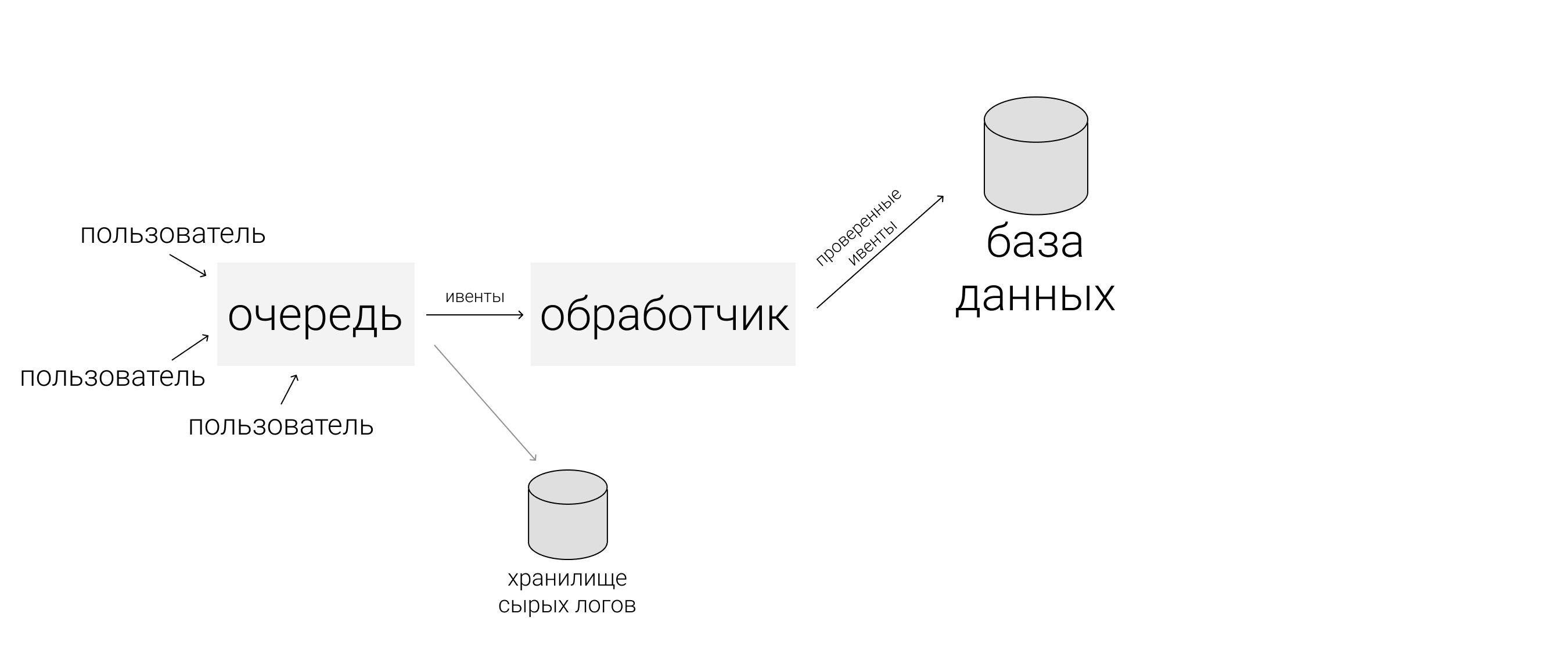

Suppose that we have chosen one of the options described above and proceed to the processing itself. Processors should start by checking the validity of the data, filtering garbage and "broken" events. For validation we usually use Cerberus . After that, you can make data mapping: data from different sources are normalized and standardized to be added to the general label.

The third step is to maintain normalized events. When working with a ready-made analytical system, we will often have to contact them, so it is important to choose a convenient database.

If the data fits well on a fixed schema, you can select Clickhouse or some other column database. So aggregations will work very quickly. The downside is that the scheme is rigidly fixed and therefore folding arbitrary objects without refinement will fail (for example, when a non-standard event occurs). But you can count really fast.

For unstructured data, you can take NoSQL, for example, Apache Cassandra . It works on HDFS, is well replicated, it is possible to raise many instances, it is fault tolerant.

You can pick up something simpler, for example, MongoDB . It is quite slow and for small volumes. But the plus is that it is very simple and therefore suitable for starting.

Having carefully saved all the events, we want to collect all the important information from the batch that came and update the database. Globally, we want to get relevant dashboards and metrics. For example, from events to collect a user profile and somehow measure the behavior. Events are aggregated, collected, and saved again (already in user tables). At the same time, you can build the system so that you also connect a filter to the aggregator-coordinator: collect users only from a certain type of events.

After that, if someone in the team needs only high-level analytics, you can connect external analytics systems. You can take Mixpanel again. but since it is quite expensive, sending not all user events there, but only what is needed. To do this, you need to create a coordinator who will transmit some raw events or something that we ourselves aggregated earlier to external systems, APIs or advertising platforms.

You need to connect the frontend to the created system. A good example is the redash service , a GUI for databases that helps build panels. How the interaction works:

Visualizations in the service are auto-updating, you can configure and track your monitoring. Redash is free, in case of self-hosted, and how SaaS will cost $ 50 per month.

After completing all the steps above, you will create your server analytics. Please note that this is not such an easy way as simply connecting client analytics, because everything needs to be configured independently. Therefore, before creating your own system, it is worth comparing the need for a serious analytics system with the resources that you are ready to devote to it.

If you calculated everything and found that the costs are too high, in the next part I will talk about how to make a cheaper version of server analytics.

Thanks for reading! I will be glad to questions in the comments.

Today there is little doubt that careful data processing and interpretation of the results can help almost any kind of business. As a result, analytics systems carry more and more parameters, and the number of triggers and user events in applications keeps growing.

Because of this, companies hand their analysts more and more “raw” data to analyze and turn into sound decisions. The importance of the analytics system to a company should not be underestimated, and the system itself must be reliable and stable.

Client analytics

Client analytics is a service that a company connects to its website or application through the official SDK: the company integrates the SDK into its own code base and chooses the event triggers. This approach has an obvious drawback: the collected data cannot be processed exactly the way you want, because every service has its own limitations. For example, one system makes it hard to run MapReduce jobs, while another does not let you run your own model. Another drawback is the regular (and substantial) bill for the service.

There are many client analytics solutions on the market, but sooner or later analysts discover that no single service fits every task (while the prices of all these services keep rising). At that point, companies often decide to build their own analytics system with all the custom settings and capabilities they need.

Server analytics

Server analytics is a service that a company deploys internally, on its own servers and (usually) with its own resources. In this model, all user events are stored on internal servers, which lets developers try different databases for storage and choose the most convenient architecture. And if you still want to use third-party client analytics for some tasks, that remains possible.

Server analytics can be deployed in two ways. The first: choose open source tools, deploy them on your own machines, and develop the business logic.

| Pros | Cons |
| --- | --- |
| You can customize anything | It is often very difficult, and dedicated developers are needed |

The second: use SaaS services (Amazon, Google, Azure) instead of deploying everything yourself. We will cover SaaS in more detail in the third part.

| Pros | Cons |
| --- | --- |
| It may be cheaper at medium volumes, but with large growth it will still become too expensive | You cannot control all the parameters |
| Administration is handed over entirely to the service provider | It is not always known what is inside the service (it may not be needed) |

How to build server analytics

If we want to move away from client analytics and assemble our own system, we first need to think through its architecture. Below I go step by step through what to consider, why each step is needed, and which tools can be used.

1. Data acquisition

Just as with client analytics, company analysts first choose the types of events they want to study and collect them into a list. These events usually occur in a certain order, which is called the “event pattern”.

Next, imagine that a mobile application (or website) has regular users (devices) and many servers. To transfer events reliably from devices to servers, an intermediate layer is needed. Depending on the architecture, there may be several different event queues.

Apache Kafka is a pub/sub message queue that is used here to collect events.
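To make the idea of an event list and an event pattern concrete, here is a minimal sketch. The event names, the `Event` fields, and the `follows_pattern` helper are all assumptions made for illustration; a real list would come from the company's analysts.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

# Hypothetical event names, listed in the order analysts expect them to occur.
EVENT_PATTERN: List[str] = ["app_open", "sign_up", "purchase"]


@dataclass
class Event:
    """One user event as a client might report it (field names are assumed)."""
    name: str
    user_id: str
    timestamp: float  # Unix time in seconds
    properties: Dict[str, Any] = field(default_factory=dict)


def follows_pattern(events: List[Event]) -> bool:
    """Check that a user's events occur in the order given by EVENT_PATTERN.

    Events whose names are not in the pattern are ignored.
    """
    ordered = sorted(events, key=lambda e: e.timestamp)
    positions = [EVENT_PATTERN.index(e.name) for e in ordered if e.name in EVENT_PATTERN]
    return positions == sorted(positions)
```

A check like this can help spot users whose events arrive out of the expected order, which often points to a tracking bug on the client.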

According to a 2014 Quora post, the creator of Apache Kafka named the software after Franz Kafka because it is “a system optimized for writing” and because he liked Kafka’s work. - Wikipedia

In our example, there are many data producers and consumers (devices and servers), and Kafka connects them to each other. Consumers are covered in more detail in the following steps, where they are the main actors. For now we consider only the producers of data (events).

Kafka is built around the concepts of a queue (a topic, in Kafka's terms) and a partition; the details are better read elsewhere (for example, in the documentation). Without going into detail, imagine that a mobile application is released for two different operating systems. Each version then produces its own separate stream of events. Producers send events to Kafka, where they are written to the appropriate queue.
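The routing described above (one stream of events per OS, each written to its own queue) can be sketched roughly as follows. The queue names and the `publish` helper are hypothetical, and `send` is a stand-in for a real producer client so that the example runs without a broker.

```python
import json
from typing import Callable, Dict

# Hypothetical queue (topic) names, one per client platform.
TOPIC_BY_PLATFORM: Dict[str, str] = {
    "ios": "events-ios",
    "android": "events-android",
}


def publish(event: dict, send: Callable[[str, bytes], None]) -> str:
    """Serialize an event and pass it to `send` with the queue chosen by platform.

    `send(topic, payload)` stands in for a real producer call.
    """
    topic = TOPIC_BY_PLATFORM[event["platform"]]  # KeyError for unknown platforms
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    send(topic, payload)
    return topic
```

With a real client, `send` would wrap the producer's own send method (for example `KafkaProducer.send` in the kafka-python package, if that is the client in use).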


At the same time, Kafka lets you read the event stream in chunks and process it in mini-batches. Kafka is a very convenient tool that scales well as needs grow (for example, by partitioning events by geolocation).

One shard is usually enough to begin with, but scaling makes things more complicated (as always). Hardly anyone would want to run on a single physical shard in production, since the architecture must be fault tolerant. Besides Kafka, there is another well-known solution: RabbitMQ. We have not used it in production as a queue for event analytics (if you have such experience, tell us about it in the comments!). We have, however, used AWS Kinesis.

Before moving on to the next step, one more layer of the system deserves a mention: raw log storage. This layer is optional, but it will be useful if something goes wrong and the event queues in Kafka are reset. Storing raw logs does not require a complicated or expensive solution; you can simply write them somewhere in the correct order (even to a hard drive).
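Processing in mini-batches simply means cutting the stream into small consecutive groups. A minimal sketch, independent of any queue client (the `mini_batches` name is an assumption):

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def mini_batches(stream: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of up to `size` items from `stream`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(stream)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return  # stream exhausted
        yield batch
```

The last batch may be shorter than `size`, so downstream code should not assume a fixed batch length.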
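As an illustration of how simple raw log storage can be, the sketch below appends each event to a local file as one JSON line and reads them back in the same order. The file format and the helper names are assumptions, not something the original setup prescribes.

```python
import json
from pathlib import Path
from typing import Iterator


def append_raw(log_path: Path, event: dict) -> None:
    """Append one event as a JSON line; the file preserves arrival order."""
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")


def replay(log_path: Path) -> Iterator[dict]:
    """Read events back in the order they were written."""
    with log_path.open("r", encoding="utf-8") as f:
        for line in f:
            if line.strip():
                yield json.loads(line)
```

If the queues are reset, `replay` gives a way to push the stored events through the pipeline again.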

2. Event stream processing

Once all the events are prepared and placed in the appropriate queues, we move on to the processing step. Here I describe the two most common processing options.

The first option is Spark Streaming from the Apache ecosystem. Many Apache big data tools are commonly deployed alongside HDFS, a fault-tolerant distributed file system that replicates files across nodes. Spark Streaming is an easy-to-use tool that processes streaming data and scales well. However, it can be somewhat difficult to maintain.

The other option is to build your own event handler. For example, you can write a Python application, package it in a Docker container, and subscribe it to the Kafka queue. When events arrive at the handlers in the container, processing starts. With this approach, you have to keep the applications running all the time.

Suppose we have chosen one of the options above and move on to the processing itself. Processors should start by checking the validity of the data and filtering out garbage and "broken" events. For validation we usually use Cerberus. After that comes data mapping: data from different sources is normalized and standardized so that it can be added to a common table.
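The core of such a handler is a loop that waits for messages and processes each one. In the sketch below, `messages` is any iterable of raw bytes and stands in for the queue subscription, so the example runs without a broker; `run_handler` and its behavior on malformed input are assumptions.

```python
import json
from typing import Callable, Iterable


def run_handler(messages: Iterable[bytes], handle: Callable[[dict], None]) -> int:
    """Decode each message and pass it to `handle`; return how many were processed.

    Messages that are not valid JSON objects are skipped.
    """
    processed = 0
    for raw in messages:
        try:
            event = json.loads(raw)
        except (ValueError, UnicodeDecodeError):
            continue  # not valid JSON
        if not isinstance(event, dict):
            continue  # valid JSON, but not an event object
        handle(event)
        processed += 1
    return processed
```

In a real deployment the iterable would be a consumer object that blocks while waiting for new messages, which is why the application has to stay running.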
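The two processing stages (validate first, then map to a common shape) might look roughly like this. The sketch uses plain Python checks as a stand-in for a validation library and does not show the Cerberus API; the required fields, the platform aliases, and all function names are hypothetical.

```python
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical required fields and the types accepted for each.
REQUIRED: Dict[str, Tuple[type, ...]] = {
    "name": (str,),
    "user_id": (str,),
    "timestamp": (int, float),
}

# Hypothetical source-specific platform labels mapped to common ones.
PLATFORM_ALIASES: Dict[str, str] = {"iphone": "ios", "ipad": "ios"}


def validation_errors(event: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the event is valid."""
    errors = []
    for field_name, types in REQUIRED.items():
        if field_name not in event:
            errors.append(f"missing field: {field_name}")
        elif not isinstance(event[field_name], types):
            errors.append(f"wrong type for field: {field_name}")
    return errors


def to_common_row(event: Dict[str, Any]) -> Dict[str, Any]:
    """Map a valid event to the common, normalized shape."""
    platform = str(event.get("platform", "unknown")).lower()
    return {
        "name": event["name"].strip().lower(),
        "user_id": event["user_id"],
        "timestamp": float(event["timestamp"]),
        "platform": PLATFORM_ALIASES.get(platform, platform),
    }


def process(raw: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Drop broken events (return None); normalize the rest."""
    if validation_errors(raw):
        return None
    return to_common_row(raw)
```

Keeping validation separate from mapping means the mapping code can assume that the required fields exist and have sensible types.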

3. Database

The third step is to store the normalized events. When working with the finished analytics system we will query them often, so it is important to choose a convenient database.

If the data fits a fixed schema well, you can choose ClickHouse or another columnar database. Aggregations will then run very quickly. The downside is that the schema is rigid, so storing arbitrary objects without rework will not be possible (for example, when a non-standard event occurs). But the calculations really are fast.

For unstructured data, you can use a NoSQL store such as Apache Cassandra. It manages its own distributed storage (it does not run on HDFS), replicates well, lets you bring up many instances, and is fault tolerant.

You can also pick something simpler, such as MongoDB. It is relatively slow and better suited to small volumes. The advantage is that it is very simple and therefore a good fit for getting started.

4. Aggregations

Having carefully saved all the events, we want to extract the important information from each incoming batch and update the database. Overall, the goal is up-to-date dashboards and metrics: for example, building a user profile from events and measuring behavior in some way. Events are aggregated, collected, and saved again (this time in user tables). You can also build the system so that a filter is attached to the aggregator-coordinator, for example to build user profiles only from events of a certain type.

After that, if someone on the team needs only high-level analytics, you can connect external analytics systems. You can use Mixpanel again, but since it is quite expensive, send it only the events you need rather than all user events. To do this, create a coordinator that passes selected raw events, or data we aggregated earlier, to external systems, APIs, or advertising platforms.
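A minimal sketch of such an aggregation step, assuming normalized events with `user_id`, `name`, and `timestamp` fields. The profile layout and the `update_profiles` name are hypothetical; the optional `only_events` argument plays the role of the filter on event type.

```python
from typing import Any, Dict, Iterable, Optional, Set


def update_profiles(
    profiles: Dict[str, Dict[str, Any]],
    batch: Iterable[Dict[str, Any]],
    only_events: Optional[Set[str]] = None,
) -> Dict[str, Dict[str, Any]]:
    """Fold a batch of normalized events into per-user profiles, in place.

    If `only_events` is given, events with other names are skipped.
    """
    for event in batch:
        if only_events is not None and event["name"] not in only_events:
            continue
        profile = profiles.setdefault(
            event["user_id"], {"event_counts": {}, "last_seen": 0.0}
        )
        counts = profile["event_counts"]
        counts[event["name"]] = counts.get(event["name"], 0) + 1
        profile["last_seen"] = max(profile["last_seen"], event["timestamp"])
    return profiles
```

Because the function updates existing profiles rather than rebuilding them, it can be called once per incoming batch.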
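The coordinator can be as small as a function that lets only selected events through to external systems. In this sketch each external system is represented by a plain callable (a "sink"); the names are assumptions, and a real sink would wrap the HTTP client or SDK of the external service.

```python
from typing import Any, Callable, Dict, Iterable, List, Set

Sink = Callable[[Dict[str, Any]], None]


def forward_selected(
    events: Iterable[Dict[str, Any]],
    allowed_names: Set[str],
    sinks: List[Sink],
) -> int:
    """Send events whose name is in `allowed_names` to every sink.

    Returns the number of events forwarded.
    """
    forwarded = 0
    for event in events:
        if event.get("name") in allowed_names:
            for sink in sinks:
                sink(event)
            forwarded += 1
    return forwarded
```

Keeping the allowed event names in one place makes it easy to control how much data, and therefore how much cost, goes to a paid external service.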

5. Frontend

Next, connect a frontend to the system. A good example is Redash, a GUI for databases that helps build dashboards. The interaction works like this:

- The user writes an SQL query.

- In response, the user gets a table.

- For that table, the user creates a 'New Visualization' and gets a nice chart that can be saved.
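To illustrate the first two steps, here is the kind of SQL query a user might write and the table it returns. An in-memory SQLite database stands in for the analytics database, and the table name, columns, and sample rows are made up for the example.

```python
import sqlite3

# In-memory SQLite stands in for the analytics database (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id TEXT, name TEXT, day TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("u1", "purchase", "2020-01-01"),
        ("u2", "purchase", "2020-01-01"),
        ("u1", "purchase", "2020-01-02"),
    ],
)

# The kind of query a user would type; its result is the table behind a chart.
QUERY = """
SELECT day, COUNT(DISTINCT user_id) AS buyers
FROM events
WHERE name = 'purchase'
GROUP BY day
ORDER BY day
"""
rows = conn.execute(QUERY).fetchall()
```

Each row pairs a day with the number of distinct buyers, which maps naturally onto a line or bar chart.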

Visualizations in the service update automatically, and you can set up and track your own monitoring. Redash is free if self-hosted; as SaaS it costs $50 per month.

Conclusion

After completing all the steps above, you will have your own server analytics. Note that this is not as easy as simply connecting client analytics, because everything has to be configured yourself. So before building your own system, weigh the need for a serious analytics system against the resources you are ready to devote to it.

If you have done the math and found that the costs are too high, in the next part I will explain how to build a cheaper version of server analytics.

Thanks for reading! I will be glad to answer questions in the comments.