Simple parser for youtube in google tables

Background

There was a task to collect data on 2000+ youtube videos, from which it was necessary to extract the name of the video, channel and language.

The IMPORTXML function, with which you can perform parsing from sites, did not help, because, as it turned out later, youtube stores data in JSON files. I didn’t want to sort out so many videos with my hands and I began to look for ways to automate or at least simplify this task.

I found an old article of 15 on Habré , which describes how to get the number of video views, but from the moment of its publication youtube updated the API and I needed to get a little different data.

In the process of solving the problem using the youtube API and Google tables, it turned out not only to extract data from a pre-prepared list of video URLs, but also to parse the output of youtube by keyword.

Being in the wildest delight of the result, I decided to share my experience and describe the process in more detail.

The method itself is certainly not ideal. It can be described as a simple solution in a hurry. But until I started to learn Python (I hope this day sooner or later comes) I have to do everything the old fashioned way in tables.

Training

For the parser to work, as in the original article, we need the ImportJSON script and youtube API.

Importjson

Download the script from github and install into the table through the tools >> script editor.

Youtube API

We get our API - the Youtube Data API V3 key in the library and create credentials for it. Previously, of course, we make an account, if you are not already registered with google cloud.

Information about the video is stored in a JSON file, which is available at the link:

www.googleapis.com/youtube/v3/videos?id= {Video_ID} & key = {API_Key} & part = {Part_Parametr}where

Video_ID is the video identifier

API_Key is the API key that you will receive in the

Part_Parametr console - the part of the file in which the information is stored

Parts of the file can be of several types:

snippet - contains almost all basic information about the video:

1. publication date

2. video

title 3. name channel

4. channel identifier

5. description for the video (Description)

6. video category (number)

7.

contentDetails tags - contains the duration and resolution of the video.

topicDetails- contains the video category in the form of a Wikipedia link with the name of the category, however this section is not always filled.

statistics

1. views

2. number of comments

3. likes

4. dislikes

In general, these are the main parts that you may need, but a complete list, if anything, is in the help .

Retrieving URL List Data

Consider the work of the parser using the badcomedian review as an example . In order to get information about a video, we only need its identifier ( Video_ID ), in this case it is EOWa0fmSGs8 .

We get and structure information using the ImportJSON formula and the XPath query language.

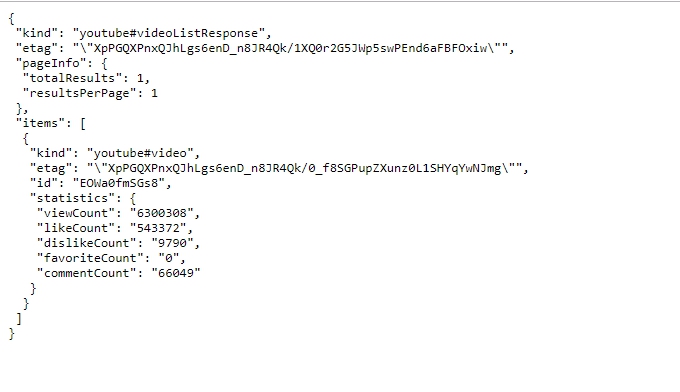

This is how the JSON file on the server looks: The

formula for obtaining the number of views in tables:

= ImportJSON ('' https://www.googleapis.com/youtube/v3/videos?id=EOWa0fmSGs8&key= {API_Key} & part = statistics ''; "/ items / statistics / viewCount"; '' noHeaders '')In principle, XPath may not be used in the request, but then you will get all the contents of the JSON file, without segmentation by parameters. Having created the necessary number of formulas with XPath parameters, you will get only the necessary data, which will be easier to process.

Getting a list of videos by keyword

The principle of parsing the output is the same as in the example above, but the structure of the request changes slightly.

In this case, the JSON file with the results of the video is located at the link

www.googleapis.com/youtube/v3/search?part=snippet&q= {Your_Query} & type = video & key = {API_Key}where the type parameter indicates exactly what we get at the output:

type = video - a list of videos relevant to the request

type = channel - a list of channels

type = playlist - a list of playlists

You can also specify in the request:

1. publication date or date range

2. region and language of search

3. video duration

4. number of results (default 5, maximum 50), etc.

A complete list of parameters and query constructor is available here .

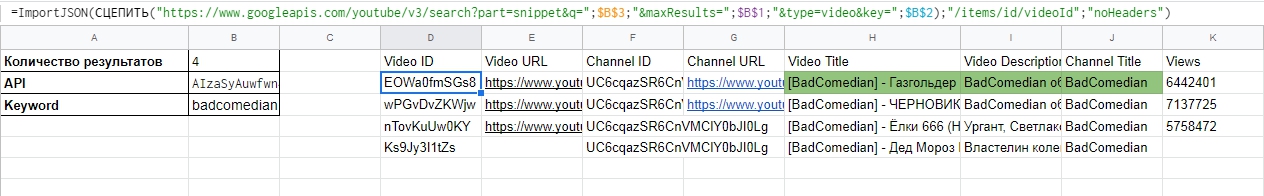

To get a list of IDs for badcomedian, use the formula:

= ImportJSON (" www.googleapis.com/youtube/v3/search?part=snippet&q=badcomedian&type=video&key= {API_Key}"; "/ items / id / videoId"; '' noHeaders '')ImportJSON works well in conjunction with the CONCEPT function, which allows us, by changing various parameters, to perform mass analysis or video parsing.

An example of how the table is organized:

Minus method

A serious minus of this method of parsing information is the quick exhaustion of the daily limit of API requests. I did not find a way to limit the number of requests or create a consistent call to the API. That is, an attempt to parse info on 10+ keys at the same time guaranteed to lead to an error - the end of the daily query limit. Therefore, you have to break down keywords into small groups and save the results as values.