Introduction to Kubernetes Network Policies for Security Professionals


Translator's note: The author of this article, Reuven Harrison, has more than 20 years of experience in software development and is currently CTO and co-founder of Tufin, a company that builds security policy management solutions. While he regards Kubernetes network policies as a reasonably powerful tool for segmenting the network in a cluster, he also believes that they are not easy to apply in practice. This (fairly lengthy) article is intended to deepen specialists' knowledge of the subject and help them create the configurations they need.

Today, more and more companies are choosing Kubernetes to run their applications. Interest in it is so high that some call Kubernetes "the new data center operating system." Kubernetes (or k8s) is gradually coming to be seen as a critical part of the business, which calls for mature business processes, including network security.

For security professionals who find themselves working with Kubernetes, the platform's default policy may come as a real surprise: allow everything.

This guide will help you understand how network policies are structured and how they differ from the rules of conventional firewalls. It also describes some pitfalls and offers recommendations for protecting applications in Kubernetes.

Kubernetes Network Policies

The Kubernetes network policy mechanism lets you control how applications deployed on the platform interact at the network layer (layer 3 of the OSI model). Network policies lack some of the advanced features of modern firewalls, such as OSI layer 7 monitoring and threat detection, but they provide a basic level of network security, which is a good starting point.

Network policies control communications between pods

Kubernetes workloads run in pods, each of which consists of one or more containers deployed together. Kubernetes assigns each pod an IP address that is reachable from other pods. Network policies set access permissions for groups of pods in the same way that security groups in the cloud control access to virtual machine instances.

Defining Network Policies

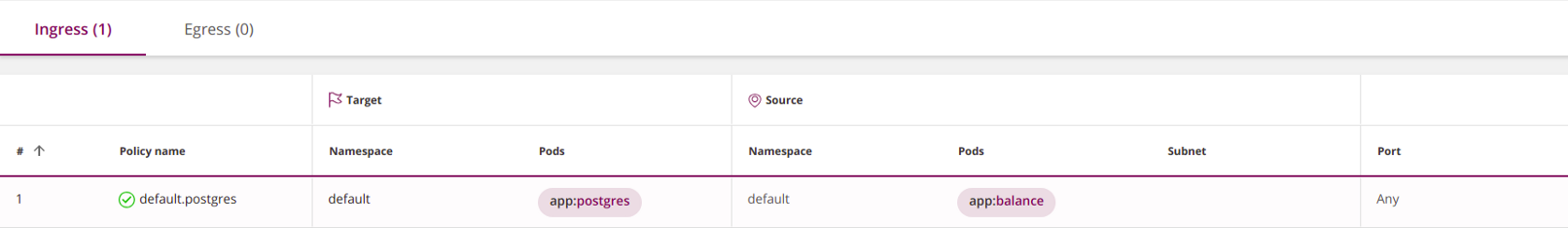

Like other Kubernetes resources, network policies are defined in YAML. In the example below, the application balance is granted access to postgres:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.postgres
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: postgres
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: balance
      policyTypes:
      - Ingress

(Note: this screenshot, like all similar ones that follow, was created not with native Kubernetes tools but with Tufin Orca, a tool developed by the original author's company and mentioned at the end of the article.)

To define your own network policy, you need a basic knowledge of YAML. The language is based on indentation (with spaces, not tabs). An indented element belongs to the nearest less-indented element above it. A new list item begins with a hyphen; all other items are key-value pairs.

Having described the policy in YAML, use kubectl to create it in the cluster:

    kubectl create -f policy.yaml

Network Policy Specification

The Kubernetes Network Policy Specification includes four elements:

- podSelector: defines the pods affected by this policy (the targets); mandatory;
- policyTypes: indicates which types of policy are included: ingress and/or egress; optional, but I recommend specifying it explicitly in all cases;
- ingress: defines the incoming traffic allowed to the target pods; optional;
- egress: defines the outgoing traffic allowed from the target pods; optional.

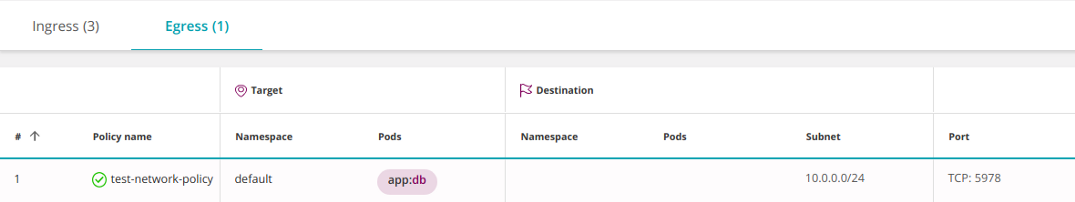

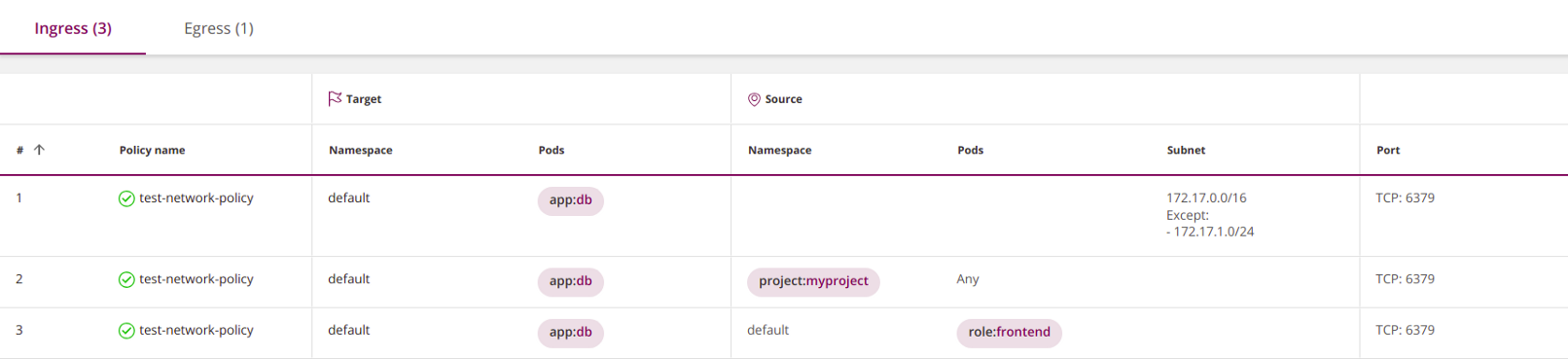

An example borrowed from the Kubernetes website (I replaced it

rolewith app) shows how all four elements are used:apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: test-network-policy

namespace: default

spec:

podSelector: # <<<

matchLabels:

app: db

policyTypes: # <<<

- Ingress

- Egress

ingress: # <<<

- from:

- ipBlock:

cidr: 172.17.0.0/16

except:

- 172.17.1.0/24

- namespaceSelector:

matchLabels:

project: myproject

- podSelector:

matchLabels:

role: frontend

ports:

- protocol: TCP

port: 6379

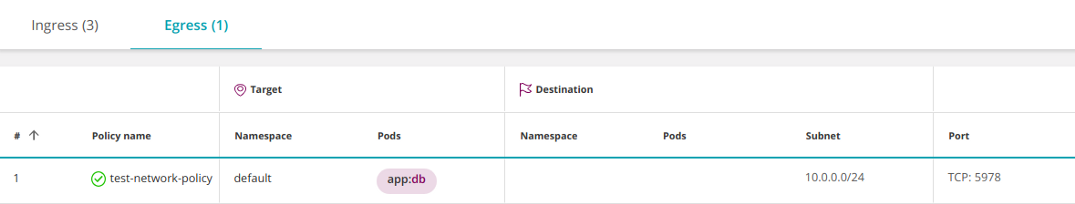

egress: # <<<

- to:

- ipBlock:

cidr: 10.0.0.0/24

ports:

- protocol: TCP

port: 5978

Note that not all four elements are mandatory. Only podSelector is required; the other parameters can be used as needed. If policyTypes is omitted, the policy is interpreted as follows:

- The policy is always assumed to define the ingress side. If it contains no explicit ingress rules, the system treats all incoming traffic as denied.
- The behavior on the egress side is determined by the presence or absence of the egress parameter.
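The two rules above can be illustrated with a minimal sketch. The policy name default.balance-implicit is hypothetical, and the comments describe how the omitted policyTypes field would be inferred.

```yaml
# Hypothetical policy without a policyTypes field.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default.balance-implicit   # illustrative name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: balance
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: postgres
  # policyTypes is omitted, so it is inferred:
  #  - Ingress is always assumed; with no ingress rules present,
  #    all incoming traffic to the balance pods is denied.
  #  - Egress is assumed because an egress section is present.
  # The explicit equivalent would be:
  # policyTypes:
  # - Ingress
  # - Egress
```

If the intent was only to restrict outgoing traffic, the implicit Ingress type is a surprise here, which is one reason to write policyTypes out.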

To avoid mistakes, I recommend always specifying policyTypes explicitly.

In accordance with the logic above, if the ingress and/or egress parameters are omitted, the policy will deny all traffic in the corresponding direction (see the “Stripping rule” below).

The default policy is to allow

If no policies are defined, Kubernetes by default allows all traffic. All pods are free to exchange information with each other. From a security point of view, this may seem counterintuitive, but remember that Kubernetes was originally created by developers in order to ensure application interaction. Network policies were added later.

Namespaces

Namespaces are the Kubernetes mechanism for team collaboration. They are designed to isolate logical environments from one another, although communication between namespaces is allowed by default.

Like most Kubernetes components, network policies live in a specific namespace. In the metadata block you can specify which namespace the policy belongs to:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
      namespace: my-namespace  # <<<
    spec:
    ...

If the namespace is not explicitly specified in the metadata, the system uses the namespace specified in kubectl (by default, namespace=default):

    kubectl apply -n my-namespace -f namespace.yaml

I recommend specifying the namespace explicitly, unless you are writing a policy that is meant to be applied to several namespaces at once.

The main podSelector element of a policy selects pods from the namespace to which the policy belongs (it has no access to pods in other namespaces).

Similarly, podSelectors in ingress and egress blocks can select pods only from their own namespace, unless you combine them with namespaceSelector (this is discussed in the section “Filter by namespaces & pods”).

Policy Naming Rules

Policy names are unique within a namespace. There cannot be two policies with the same name in one namespace, but there can be policies with the same name in different namespaces. This is useful when you want to apply the same policy in several namespaces.

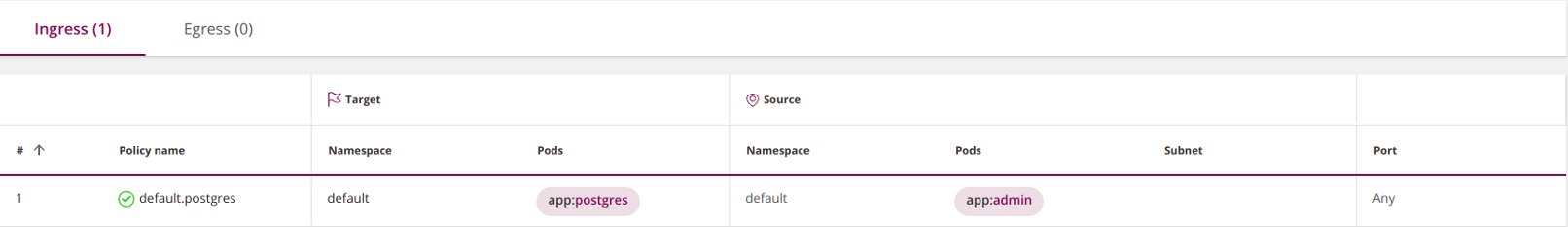

I particularly like one naming convention: combining the namespace name with the target pods. For example:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.postgres  # <<<
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: postgres
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: admin
      policyTypes:
      - Ingress

Labels

Custom labels can be attached to Kubernetes objects such as pods and namespaces. Labels are the equivalent of tags in the cloud. Kubernetes network policies use labels to select the pods to which they apply:

    podSelector:
      matchLabels:
        role: db

... or the namespaces to which they apply. In this example, all pods in the namespaces with the corresponding labels are selected:

    namespaceSelector:
      matchLabels:
        project: myproject

One caveat: when using namespaceSelector, make sure that the namespaces you select actually carry the label you are matching on. Keep in mind that built-in namespaces such as default and kube-system did not carry any labels by default when this article was written (since Kubernetes 1.21, every namespace also receives an automatic kubernetes.io/metadata.name label set to its name). You can add a label to a namespace as follows:

    kubectl label namespace default namespace=default

In this case, the namespace in the metadata section should refer to the actual name of the namespace, not to the label:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
      namespace: default  # <<<
    spec:
    ...

Source and Destination

Firewall policies consist of rules with sources and destinations. Kubernetes network policies are instead defined for a target, the set of pods to which they apply, and then set rules for incoming (ingress) and/or outgoing (egress) traffic. In our example, the policy target is every pod in the default namespace that carries a label with the key app and the value db:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: db  # <<<
      policyTypes:
      - Ingress
      - Egress
      ingress:
      - from:
        - ipBlock:
            cidr: 172.17.0.0/16
            except:
            - 172.17.1.0/24
        - namespaceSelector:
            matchLabels:
              project: myproject
        - podSelector:
            matchLabels:
              role: frontend
        ports:
        - protocol: TCP
          port: 6379
      egress:
      - to:
        - ipBlock:
            cidr: 10.0.0.0/24
        ports:
        - protocol: TCP
          port: 5978

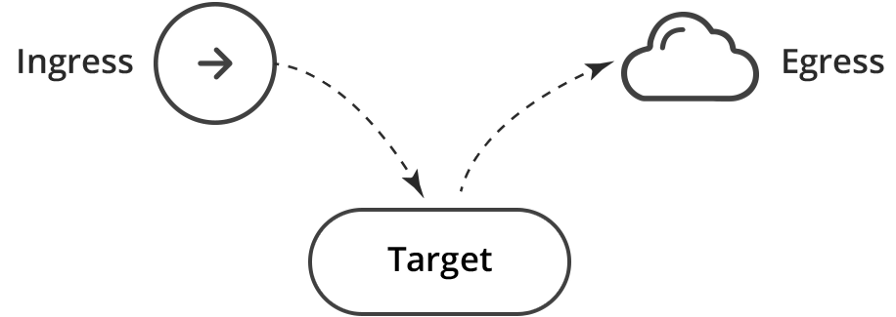

The ingress subsection of this policy opens incoming traffic to the target pods. In other words, ingress is the source and the target is the corresponding destination. Similarly, egress is the destination and the target is its source.

This is equivalent to two firewall rules: Ingress → Target; Target → Egress.

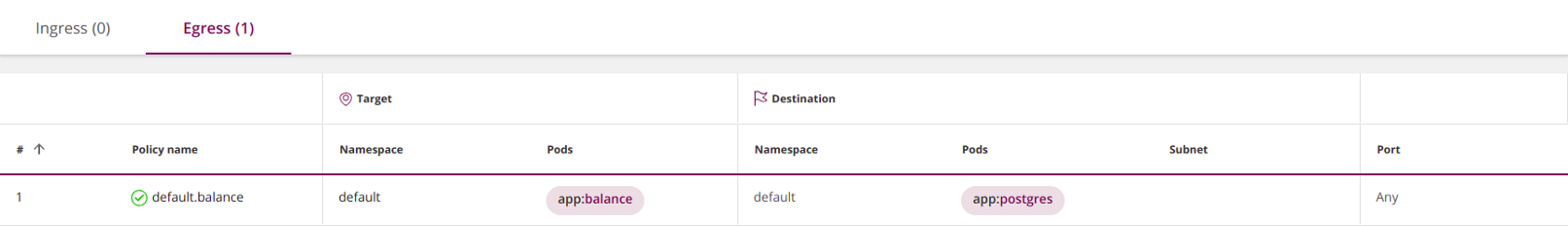

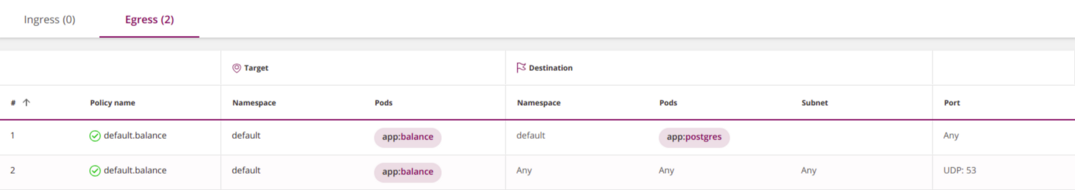

Egress and DNS (important!)

When restricting outgoing traffic, pay special attention to DNS: Kubernetes uses this service to map services to IP addresses. For example, the following policy will not work, because the application balance has not been allowed to access DNS:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.balance
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: balance
      egress:
      - to:
        - podSelector:
            matchLabels:
              app: postgres
      policyTypes:
      - Egress

You can fix it by opening access to the DNS service:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.balance
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: balance
      egress:
      - to:
        - podSelector:
            matchLabels:
              app: postgres
      - to:              # <<<
        ports:           # <<<
        - protocol: UDP  # <<<
          port: 53       # <<<
      policyTypes:
      - Egress

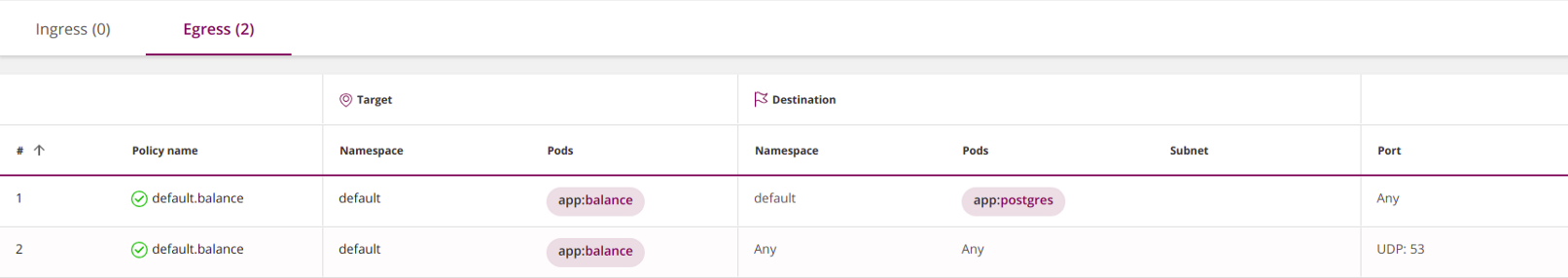

The last to element is empty, and therefore it implicitly selects all pods in all namespaces, allowing balance to send DNS queries to the corresponding Kubernetes service (which usually runs in the kube-system namespace).

This approach works, but it is overly permissive and unsafe, because it also allows DNS queries to be sent outside the cluster.

You can improve it in three consecutive steps.

1. Allow DNS queries only within the cluster by adding namespaceSelector:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.balance
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: balance
      egress:
      - to:
        - podSelector:
            matchLabels:
              app: postgres
      - to:
        - namespaceSelector: {}  # <<<
        ports:
        - protocol: UDP
          port: 53
      policyTypes:
      - Egress

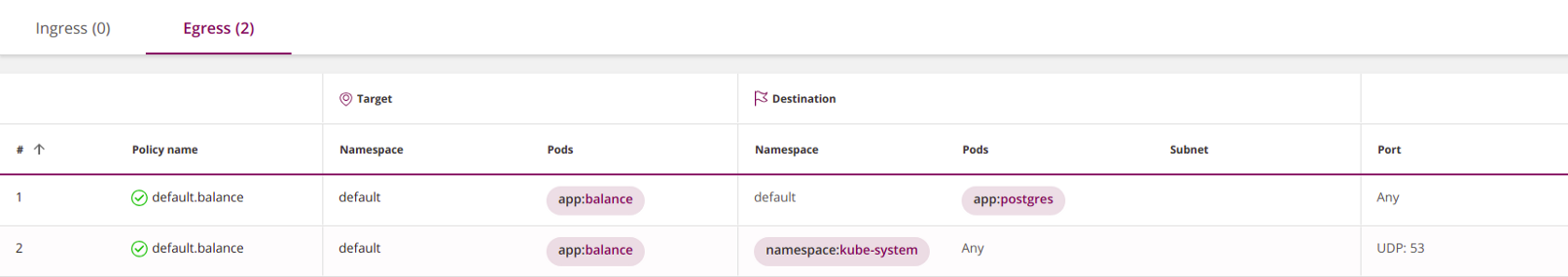

2. Allow DNS queries only to the kube-system namespace. To do this, add a label to the kube-system namespace:

    kubectl label namespace kube-system namespace=kube-system

and reference it in the policy with namespaceSelector:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.balance
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: balance
      egress:
      - to:
        - podSelector:
            matchLabels:
              app: postgres
      - to:
        - namespaceSelector:          # <<<
            matchLabels:              # <<<
              namespace: kube-system  # <<<
        ports:
        - protocol: UDP
          port: 53
      policyTypes:
      - Egress

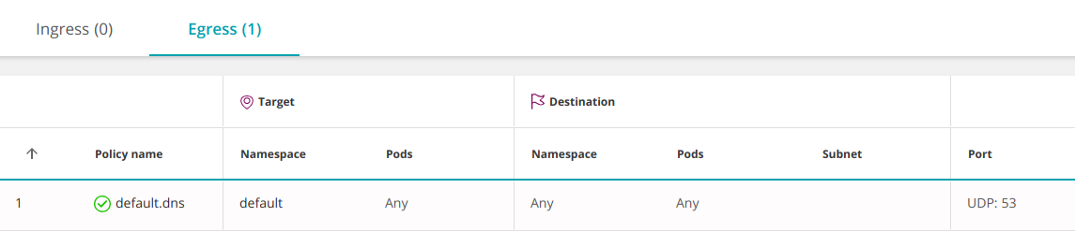

3. The paranoid can go even further and limit DNS queries to a specific DNS service in kube-system. The section “Filter by namespaces & pods” explains how to achieve this.

Another option is to allow DNS at the namespace level. In that case it does not need to be opened for each service:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.dns
      namespace: default
    spec:
      podSelector: {}  # <<<
      egress:
      - to:
        - namespaceSelector: {}
        ports:
        - protocol: UDP
          port: 53
      policyTypes:
      - Egress

An empty podSelector selects all pods in the namespace.
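Step 3 above, restricting DNS to specific pods in kube-system, might look like the following sketch. It assumes that kube-system carries the namespace=kube-system label added in step 2 and that the cluster's DNS pods carry the label k8s-app: kube-dns (common for kube-dns and CoreDNS deployments, but worth verifying with kubectl get pods -n kube-system --show-labels). TCP port 53 is included as well, since DNS can fall back to TCP for large responses.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default.balance
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: balance
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: postgres
  - to:
    - namespaceSelector:
        matchLabels:
          namespace: kube-system   # label added with kubectl in step 2
      podSelector:                 # no hyphen: same list item, combined by AND
        matchLabels:
          k8s-app: kube-dns        # assumption: label of the cluster's DNS pods
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP                # DNS over TCP (large responses)
      port: 53
  policyTypes:
  - Egress
```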

First match and rule order

In ordinary firewalls, the action (“Allow” or “Deny”) for a packet is determined by the first rule that it satisfies. In Kubernetes, the order of policies does not matter.

By default, when no policies are set, communication between pods is allowed and they can exchange information freely. As soon as you start defining policies, each pod selected by at least one of them becomes isolated, and the traffic it may send or receive is determined by the disjunction (logical OR) of all policies that select it. Pods not selected by any policy remain open.

You can change this behavior using the stripping rule.

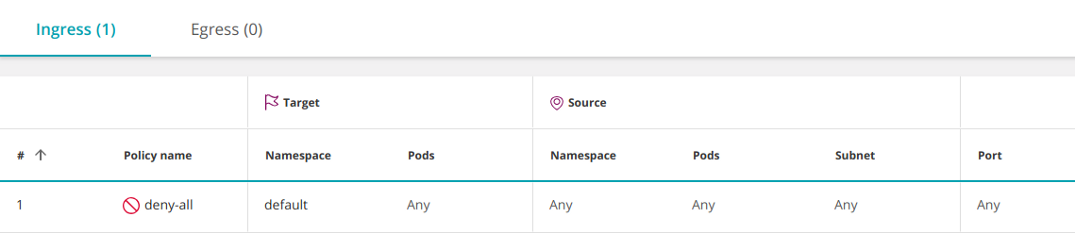

Stripping rule (Deny)

Firewall policies generally deny any traffic that is not explicitly allowed.

Kubernetes has no deny action. However, a similar effect can be achieved with a regular (allow) policy that selects an empty group of source pods (ingress):

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all
      namespace: default
    spec:
      podSelector: {}
      policyTypes:
      - Ingress

This policy selects all pods in the namespace and leaves the ingress undefined, blocking all incoming traffic.

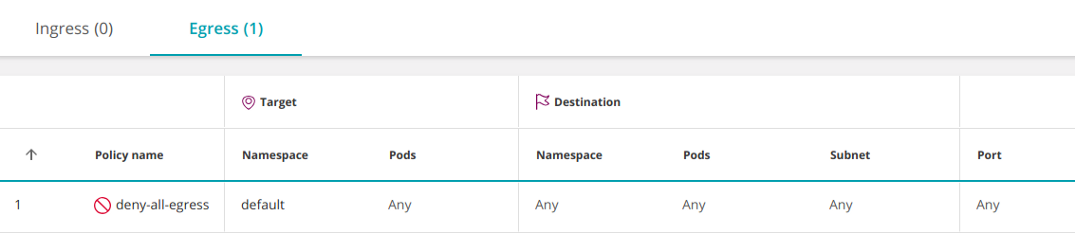

In a similar way, you can limit all outgoing traffic from the namespace:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-egress
      namespace: default
    spec:
      podSelector: {}
      policyTypes:
      - Egress

Note that any additional policies that allow traffic to pods in the namespace will take precedence over this rule (similar to adding an allow rule over a deny rule in the firewall configuration).
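As a sketch of the interplay just described, the first policy below denies all ingress and egress traffic in the namespace with a single object, and the second re-opens one path on top of it. The name deny-all-ingress-egress is hypothetical, and the postgres and balance labels are reused from the earlier examples purely for illustration.

```yaml
# Deny everything, in both directions, for all pods in the namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-all-ingress-egress   # hypothetical name
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
---
# An additional allow policy: because policies are combined by OR,
# postgres pods accept traffic from balance despite the deny policy above.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default.postgres
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: postgres
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: balance
  policyTypes:
  - Ingress
```

Since the first policy also blocks egress, the balance pods would additionally need an egress rule toward postgres before a connection could actually be established.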

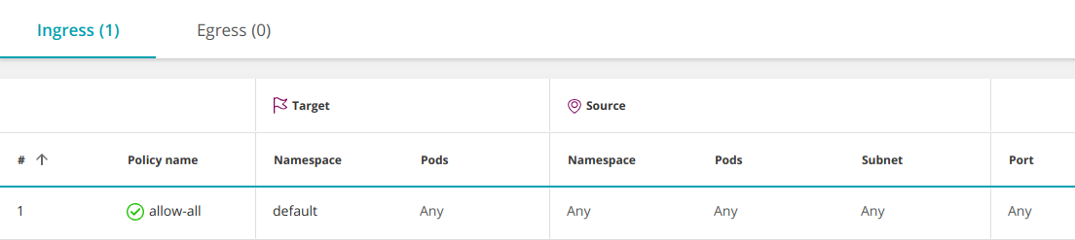

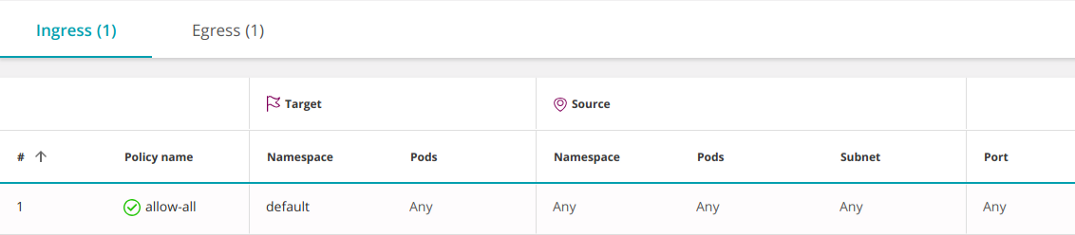

Allow All (Any-Any-Any-Allow)

To create an Allow All policy, you must add the above prohibition policy with an empty element

ingress:apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: allow-all

namespace: default

spec:

podSelector: {}

ingress: # <<<

- {} # <<<

policyTypes:

- Ingress

It allows access from all pods in all namespaces (and all IPs) to any pods in the namespace

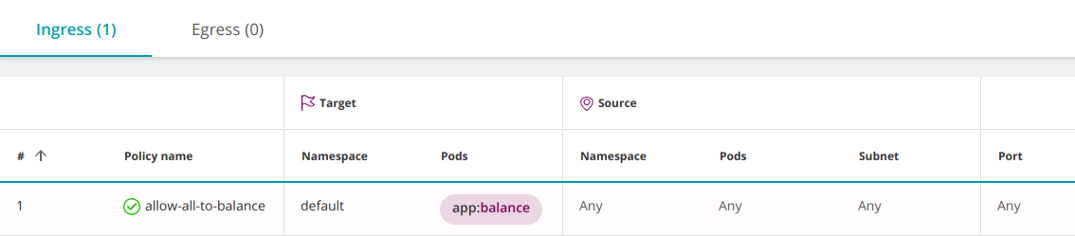

default . This behavior is enabled by default, so usually it does not need to be defined additionally. However, sometimes it may be necessary to temporarily disable some specific permissions to diagnose the problem. A rule can be narrowed and allowed access only to a specific set of pods (

app:balance) in the namespace default:apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: allow-all-to-balance

namespace: default

spec:

podSelector:

matchLabels:

app: balance

ingress:

- {}

policyTypes:

- Ingress

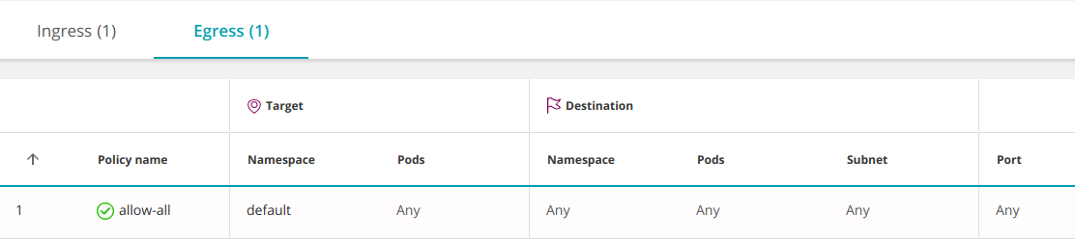

The following policy allows all ingress and egress traffic, including access to any IP outside the cluster:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-all
    spec:
      podSelector: {}
      ingress:
      - {}
      egress:
      - {}
      policyTypes:
      - Ingress
      - Egress

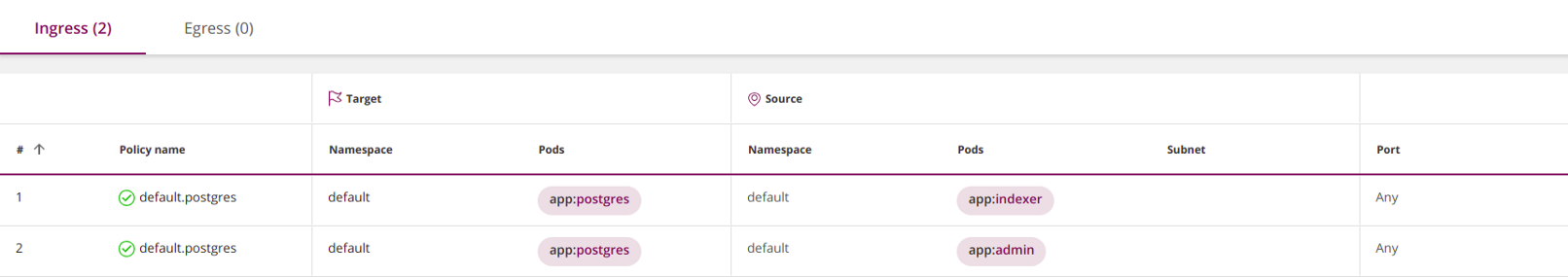

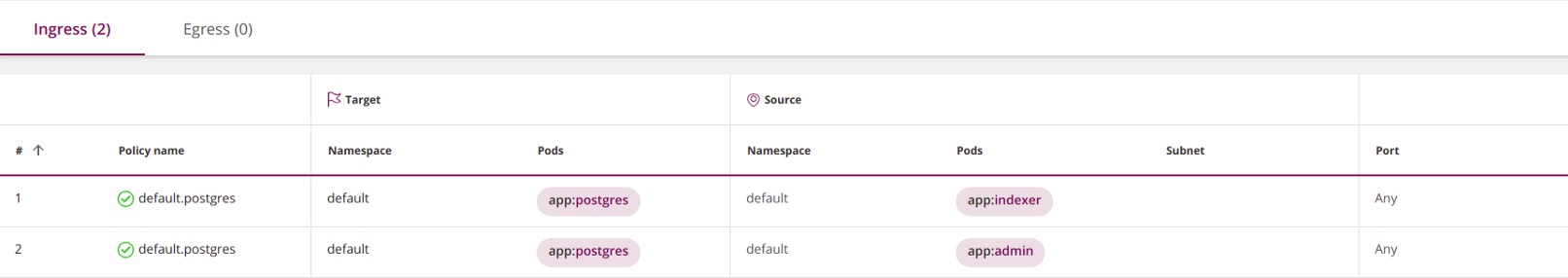

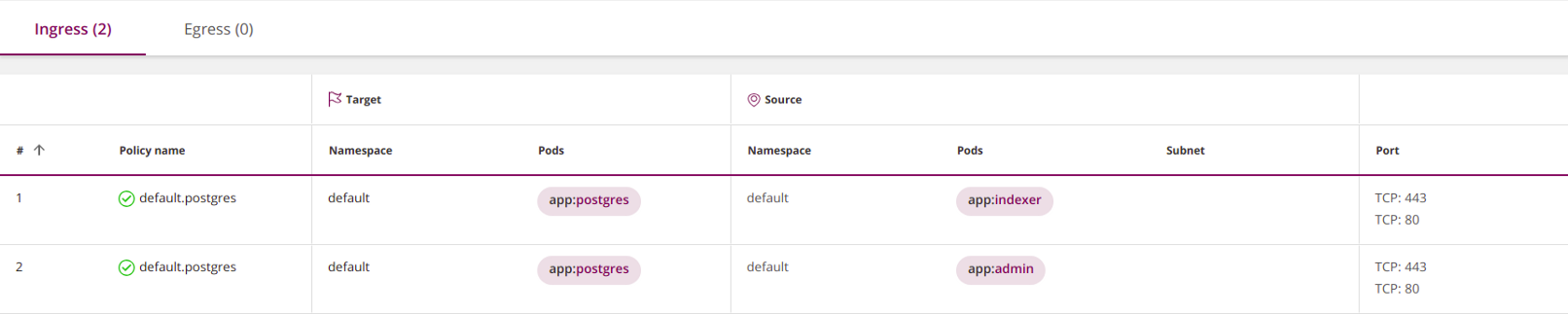

Combining multiple policies

Policies are combined using logical OR at three levels; permissions of each pod are set in accordance with the disjunction of all policies that affect it:

1. In the from and to fields you can define three types of elements (all of them are combined using OR):

- namespaceSelector: selects an entire namespace;
- podSelector: selects pods;
- ipBlock: selects a subnet.

The number of elements (even of the same type) in the from and to subsections is not limited. All of them are combined by logical OR.

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.postgres
      namespace: default
    spec:
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: indexer
        - podSelector:
            matchLabels:
              app: admin
      podSelector:
        matchLabels:
          app: postgres
      policyTypes:
      - Ingress

2. Inside a policy, the ingress section can contain many from elements (combined by logical OR). Similarly, the egress section can contain many to elements (also combined by disjunction):

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.postgres
      namespace: default
    spec:
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: indexer
      - from:
        - podSelector:
            matchLabels:
              app: admin
      podSelector:
        matchLabels:
          app: postgres
      policyTypes:
      - Ingress

3. Different policies are also combined by logical OR.

But when they are combined, there is one limitation that Chris Cooney pointed out : Kubernetes can only combine policies with different ones ( or ). The policies that define ingress (or egress) will overwrite each other.

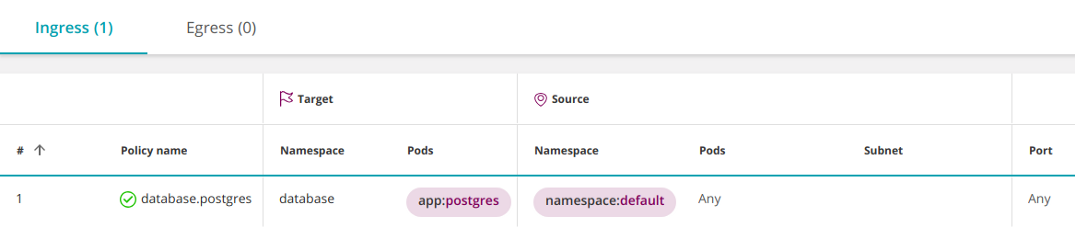

policyTypesIngressEgressThe relationship between namespaces

By default, information exchange between namespaces is allowed. This can be changed using a prohibitive policy that restricts outgoing and / or incoming traffic into the namespace (see "Stripping rule" above).

Having blocked access to a namespace (see "Stripping rule" above), you can make exceptions to the restrictive policy by allowing connections from a specific namespace using namespaceSelector:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: database.postgres
      namespace: database
    spec:
      podSelector:
        matchLabels:
          app: postgres
      ingress:
      - from:
        - namespaceSelector:  # <<<
            matchLabels:
              namespace: default
      policyTypes:
      - Ingress

As a result, all pods in the namespace

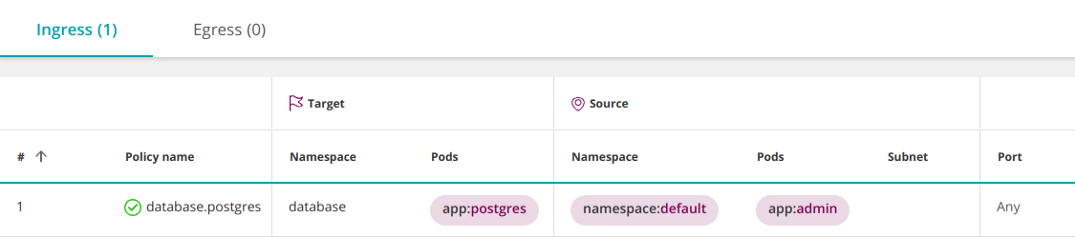

defaultwill gain access to pods postgresin the namespace database. But what if you want to open access postgresonly to specific pods in the namespace default?Filter by namespaces & pods

Kubernetes version 1.11 and higher allows to combine operators

namespaceSelectorand podSelectorby logical I. It looks as follows:apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: database.postgres

namespace: database

spec:

podSelector:

matchLabels:

app: postgres

ingress:

- from:

- namespaceSelector:

matchLabels:

namespace: default

podSelector: # <<<

matchLabels:

app: admin

policyTypes:

- Ingress

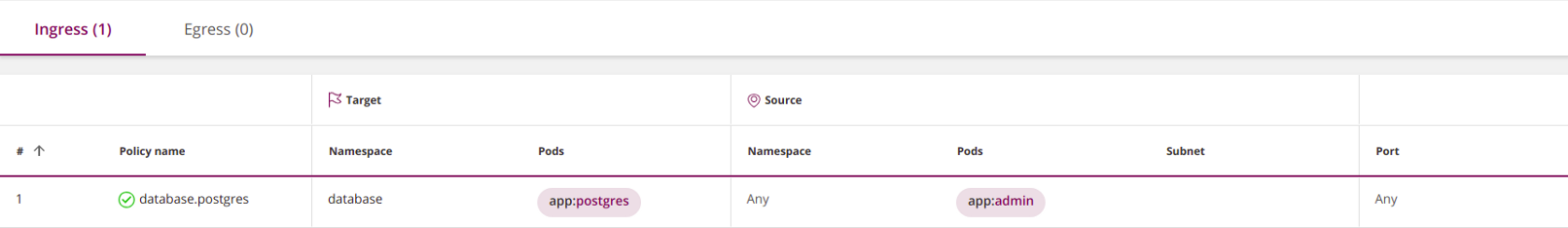

Why is this interpreted as AND instead of the usual OR?

Note that podSelector does not start with a hyphen. In YAML, this means that podSelector and the namespaceSelector before it belong to the same list item. Therefore, they are combined with a logical AND.

Adding a hyphen before podSelector would create a new list item, which would be combined with the preceding namespaceSelector using a logical OR.

To select pods with a specific label in all namespaces, use an empty namespaceSelector:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: database.postgres
      namespace: database
    spec:
      podSelector:
        matchLabels:
          app: postgres
      ingress:
      - from:
        - namespaceSelector: {}
          podSelector:
            matchLabels:
              app: admin
      policyTypes:
      - Ingress
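For contrast, here is a sketch of the earlier database.postgres policy with a hyphen added before podSelector. The two selectors become separate list items combined by OR: traffic is allowed from any pod in namespaces labeled namespace=default, and also from pods labeled app=admin in the policy's own namespace (database).

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: database.postgres
  namespace: database
spec:
  podSelector:
    matchLabels:
      app: postgres
  ingress:
  - from:
    - namespaceSelector:     # item 1: every pod in namespaces labeled namespace=default
        matchLabels:
          namespace: default
    - podSelector:           # item 2 (note the hyphen): app=admin pods in "database"
        matchLabels:
          app: admin
  policyTypes:
  - Ingress
```

A single character separates the two meanings, so it is worth reviewing such policies carefully.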

Multiple labels combine with AND

Firewall rules that list several objects (hosts, networks, groups) combine them with a logical OR. The following rule matches if the source of the packet is Host_1 OR Host_2:

| Source | Destination | Service | Action |
| ------ | ----------- | ------- | ------ |
| Host_1 | Subnet_A    | HTTPS   | Allow  |
| Host_2 |             |         |        |

In Kubernetes, by contrast, multiple labels in a podSelector or namespaceSelector are combined with a logical AND. For example, the following rule selects pods that have both labels, role=db AND version=v2:

    podSelector:
      matchLabels:
        role: db
        version: v2

The same logic applies to all types of operators: policy target selectors, pod selectors, and namespace selectors.

Subnets and IP Addresses (IPBlocks)

Firewalls use VLANs, IP addresses, and subnets to segment a network.

In Kubernetes, IP addresses are assigned to pods automatically and can change frequently, so labels are used to select pods and namespaces in network policies.

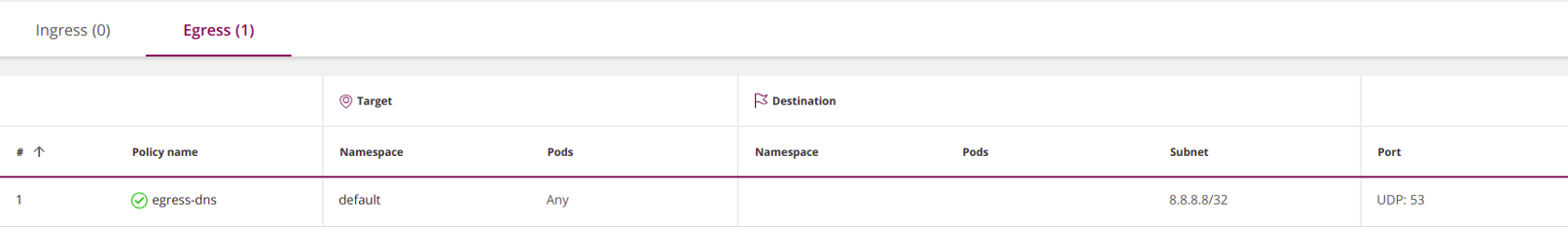

Subnets (ipBlocks) are used to control incoming (ingress) or outgoing (egress) external (North-South) connections. For example, this policy gives all pods in the default namespace access to the Google DNS service:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: egress-dns
      namespace: default
    spec:
      podSelector: {}
      policyTypes:
      - Egress
      egress:
      - to:
        - ipBlock:
            cidr: 8.8.8.8/32
        ports:
        - protocol: UDP
          port: 53

The empty pod selector in this example means "select all pods in the namespace."

This policy provides access only to 8.8.8.8; access to any other IP is denied. Thus, in essence, you blocked access to the Kubernetes internal DNS service. If you still want to open it, specify it explicitly.
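One possible way to keep both, sketched under the assumption that kube-system carries the namespace=kube-system label introduced in the DNS section, is to list the internal DNS namespace next to the ipBlock. The policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-dns-internal-and-google   # hypothetical name
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Egress
  egress:
  - to:
    - ipBlock:                  # external resolver
        cidr: 8.8.8.8/32
    - namespaceSelector:        # OR: cluster DNS in kube-system
        matchLabels:
          namespace: kube-system
    ports:
    - protocol: UDP
      port: 53
```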

Usually

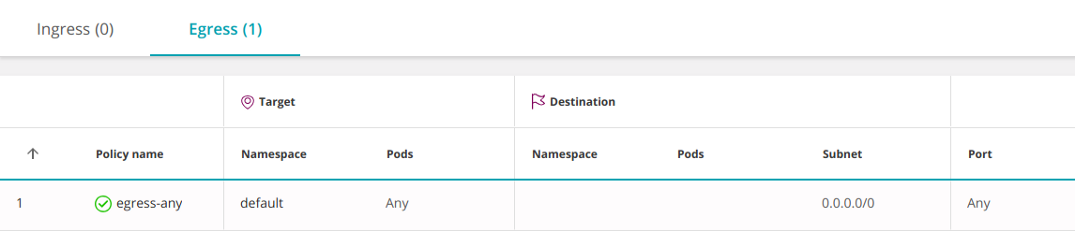

ipBlocksthey podSelectorsare mutually exclusive, since the internal IP addresses of pods are not used in ipBlocks. By specifying internal IP pods , you will actually allow connections to / from pods with these addresses. In practice, you will not know which IP address to use, which is why they should not be used to select pods. As a counter-example, the following policy includes all IPs and, therefore, allows access to all other pods:

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: egress-any

namespace: default

spec:

podSelector: {}

policyTypes:

- Egress

egress:

- to:

- ipBlock:

cidr: 0.0.0.0/0

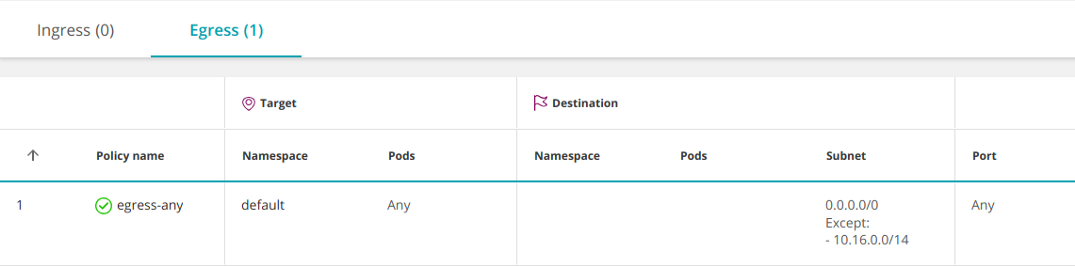

You can open access only to external IPs by excluding the internal IP addresses of pods. For example, if your pod's subnet is 10.16.0.0/14:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: egress-any
      namespace: default
    spec:
      podSelector: {}
      policyTypes:
      - Egress
      egress:
      - to:
        - ipBlock:
            cidr: 0.0.0.0/0
            except:
            - 10.16.0.0/14

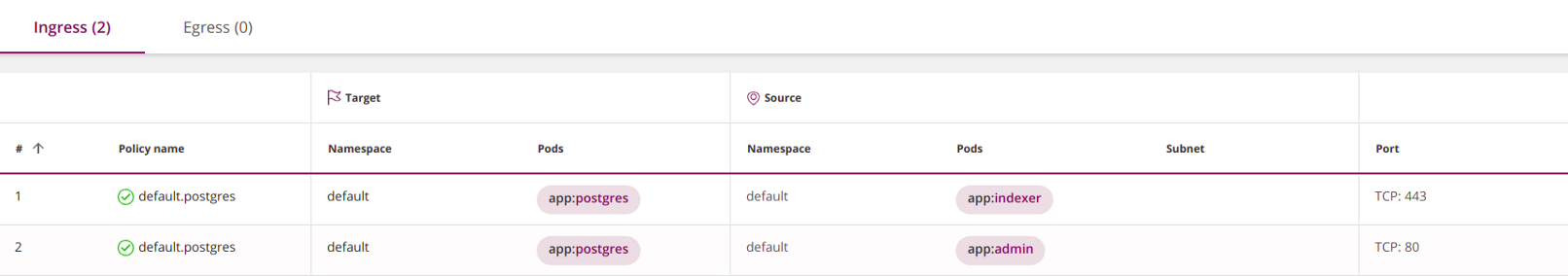

Ports and Protocols

Usually pods listen on one port. This means that you can simply omit port numbers in policies and leave everything as default. However, it is recommended that policies be made as restrictive as possible, so in some cases you can still specify ports:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.postgres
      namespace: default
    spec:
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: indexer
        - podSelector:
            matchLabels:
              app: admin
        ports:             # <<<
        - port: 443        # <<<
          protocol: TCP    # <<<
        - port: 80         # <<<
          protocol: TCP    # <<<
      podSelector:
        matchLabels:
          app: postgres
      policyTypes:
      - Ingress

Note that the ports element applies to all elements of the to or from block it accompanies. To specify different ports for different sets of elements, split ingress or egress into several subsections with to or from, and list the ports in each one:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default.postgres
      namespace: default
    spec:
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: indexer
        ports:             # <<<
        - port: 443        # <<<
          protocol: TCP    # <<<
      - from:
        - podSelector:
            matchLabels:
              app: admin
        ports:             # <<<
        - port: 80         # <<<
          protocol: TCP    # <<<
      podSelector:
        matchLabels:
          app: postgres
      policyTypes:
      - Ingress

How the defaults for ports work:

- If you omit the ports definition (ports) entirely, all protocols and all ports are matched;
- If you omit the protocol definition (protocol), TCP is assumed;
- If you omit the port definition (port), all ports are matched.

Best practice: do not rely on the default values, specify what you need explicitly.

Please note that you must use the ports of pods, not services (more on this in the next paragraph).

Are policies defined for pods or services?

Usually pods in Kubernetes contact each other through a service - a virtual load balancer that redirects traffic to pods that implement the service. You might think that network policies control access to services, but this is not so. Kubernetes network policies work with pod ports, not services.

For example, if a service listens to port 80, but redirects traffic to port 8080 of its pods, it is necessary to specify exactly 8080 in the network policy.
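The port mapping just described can be sketched as follows. The Service, the policy name and the app: frontend client label are hypothetical; the point is only that the policy names the pod port 8080, not the service port 80.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: balance              # hypothetical service
  namespace: default
spec:
  selector:
    app: balance
  ports:
  - protocol: TCP
    port: 80                 # port exposed by the service
    targetPort: 8080         # port the pods actually listen on
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default.balance-ingress   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: balance
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: frontend      # hypothetical client pods
    ports:
    - protocol: TCP
      port: 8080             # the pod port, not the service port 80
  policyTypes:
  - Ingress
```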

This design is suboptimal: if the internals of a service change (the ports its pods listen on), the network policies have to be updated as well.

A newer architectural approach based on a service mesh (see, for example, Istio below; translator's note) addresses this problem.

Is it necessary to prescribe both Ingress and Egress?

The short answer is yes: for pod A to communicate with pod B, pod A must be allowed to create an outgoing connection (which requires an egress policy), and pod B must be allowed to accept an incoming connection (which requires an ingress policy).

However, in practice, you can rely on the default policy to allow connections in one or both directions.

If the source pod is selected by one or more egress policies, the restrictions imposed on it are determined by their disjunction. In that case, you need to explicitly allow the connection to the destination pod. If the pod is not selected by any policy, its egress traffic is allowed by default.

Similarly, the fate of a destination pod selected by one or more ingress policies is determined by their disjunction. In that case, you must explicitly allow it to receive traffic from the source pod. If the pod is not selected by any policy, all ingress traffic to it is allowed by default.

See “Stateful or Stateless” below.
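As a minimal sketch of the two-sided case, assume both pods are already isolated by other policies, and reuse the balance and postgres labels from the earlier examples. One policy is then needed on each side:

```yaml
# Egress side: lets balance pods open connections to postgres pods.
# (DNS access for balance would have to be allowed separately,
# as discussed in the DNS section.)
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default.balance
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: balance
  egress:
  - to:
    - podSelector:
        matchLabels:
          app: postgres
  policyTypes:
  - Egress
---
# Ingress side: lets postgres pods accept connections from balance pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default.postgres
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: postgres
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: balance
  policyTypes:
  - Ingress
```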

Logs

Kubernetes network policies cannot log traffic. This makes it difficult to determine whether a policy is working properly and greatly complicates security analysis.

Traffic control to external services

Kubernetes network policies do not let you specify a fully qualified domain name (FQDN) in egress sections. This causes significant inconvenience when you try to restrict traffic to external destinations that have no fixed IP address (such as aws.com).

Policy check

Firewalls will warn you about an erroneous policy or even refuse to accept it. Kubernetes also performs some validation. When you define a network policy through kubectl, Kubernetes may declare it invalid and refuse to accept it. In other cases, Kubernetes accepts the policy and fills in the missing details. They can be seen with the command:

    kubectl get networkpolicy -o yaml

Keep in mind that the Kubernetes validation is not infallible and may miss some types of errors.

Execution

Kubernetes does not enforce network policies itself; it is merely an API gateway that delegates the burdensome work of enforcement to an underlying system called the Container Networking Interface (CNI). Defining policies in a Kubernetes cluster without a suitable CNI is like creating policies on a firewall management server without ever installing them on the firewalls. It is up to you to make sure that you have a capable CNI or, in the case of Kubernetes platforms hosted in the cloud (see the list of providers here; translator's note), to enable network policies, which will install a CNI for you.

Please note that Kubernetes will not warn you if you set the network policy without the corresponding auxiliary CNI.

Stateful or Stateless?

All the Kubernetes CNIs I have encountered are stateful (for example, Calico uses Linux conntrack). This allows a pod to receive responses on a TCP connection it initiated without having to re-establish it. However, I am not aware of any Kubernetes standard that guarantees statefulness.

Advanced Security Policy Management

Here are some ways you can better enforce security policies in Kubernetes:

- The Service Mesh architectural pattern uses sidecar containers to provide detailed telemetry and control traffic at the service level. An example is Istio .

- Some of the CNI vendors have supplemented their tools to go beyond Kubernetes network policies.

- Tufin Orca provides transparency and automation of Kubernetes network policies.

The Tufin Orca package manages Kubernetes network policies (and serves as the source for the screenshots above).

Additional Information

- Network policy examples prepared by Ahmet Alp Balkan from GKE ;

- Documentation from the official Kubernetes website ;

- Guide Kubernetes network model ;

- A script for checking network policies .

Conclusion

Kubernetes network policies offer a good set of tools for cluster segmentation, but they are not intuitive and have many subtleties. I believe that because of this complexity, the policies of many existing clusters contain errors. Possible solutions to this problem are automating policy definitions or using other segmentation tools.

I hope this guide helps clarify some of these issues and solve the problems you may encounter.
