Future data center infrastructures

General-purpose data center architectures, which are still widely used today, served their purpose well in the past, but most of them have now reached the limits of their scalability, performance, and efficiency. Such data centers typically allocate resources (processors, hard drives, and network bandwidth) in aggregated bundles.

At the same time, the change in the volume of resources used (increase or decrease) occurs in such data centers discretely, with predetermined coefficients. For example, with a factor of 2, we can get such a series of configurations:

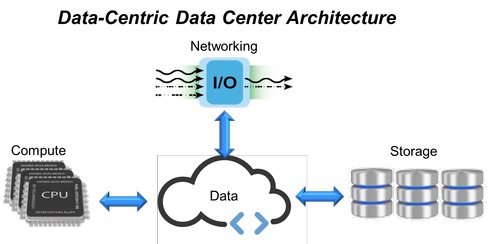

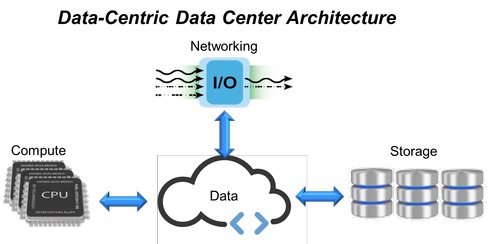

Fig. 1. Data-centered data center architecture.

In response to such requirements, hyperconverged infrastructures (HCI, Hyper-Converged Infrastructure) of the data center came to be combined with all computing resources, data storage systems and network channels into a single virtualized system (Fig. 1). However, this structure also requires the installation of additional servers to expand the scalability limits (adding new storage systems, memory or network channels). This predetermined the emergence of an approach based on the expansion of the HCI infrastructure with fixed modules (each of which contains processors, memory and data storage), which ultimately does not provide the level of flexibility and predictable performance that is so in demand in modern data centers.

HCI is already being replaced by component-disaggregated infrastructures (CDI, Composable-Disaggregated Infrastructures), which are designed to overcome the limitations of convergent or hyper-convergent IT solutions and provide better flexibility for data centers.

Appearance of component-disaggregated infrastructure

To overcome the problems associated with the general-purpose data center architecture (fixed ratio of resources, underutilization and redundant redundancy), a converged infrastructure was first developed, consisting of pre-configured hardware resources within a single system. Computing resources, storage systems and network interactions in them are discrete and the volumes of their consumption are configured programmatically. Then, convergent structures were transformed into hyperconvergent (HCI), where all hardware resources are virtualized, and the allocation of the necessary amounts of computing resources, storage and network channels is automated at the software level.

Despite the fact that HCI combines all resources into a single virtualized system, this approach also has its drawbacks. To add to the client, for example, a significantly larger amount of storage, RAM, or expand the network channel, in the HCI architecture this will require the use of additional processor modules, even if they are not directly used for computational operations. As a result, we have a situation where, while creating a data center that is more flexible in comparison with previous architectures, still uses inflexible building elements.

As shown by IT surveys of medium-sized and large enterprise data centers, approximately 50% of the total available storage capacity is allocated for actual use, while only half of the allocated storage volume is used by applications, CPU time is also used by about 50%. Thus, the approach with the use of fixed structural systems leads to their underutilization and does not provide the necessary flexibility and predictable performance. To solve these problems, a disaggregated model was created, which is easy to assemble from separate functional modules using open API software tools.

Component-Disaggregated Infrastructure (CDI) is a data center architecture in which physical resources — computing power, storage, and network links — are treated as services. Providing user applications with all the necessary resources to perform their current workload occurs in real time, thus achieving optimal performance within the data center.

Component-disaggregated model against hyperconvergence

Virtual servers in the component-disaggregated infrastructure (Fig. 2) are created by assembling resources from independent pools of computing systems, storages and network devices, unlike HCI, where physical resources are tied to HCI servers. In this way, CDI servers can be created and reconfigured as necessary according to the requirements of a specific workload. Using API access to virtualization software, an application can request any necessary resources, getting instant server reconfiguration in real time, without human intervention — a real step towards a self-managed data center.

Fig. 2: Hyper-converged model (HCI) and component-disaggregated (CDI).

An important part of the CDI architecture is an internal communication interface that provides the separation (disaggregation) of the storage devices of a particular server from its computing power and its provision for use by other applications. NVMe-over-Fabrics is used as the main protocol here . It provides the least end-to-end data transfer delays between applications and storage devices directly. As a result, it allows CDI to provide users with all the benefits of a direct-connected storage system (low latency and high performance), providing efficiency and flexibility through sharing resources.

Fig. 3. NVMe-over-Fabrics structure.

NVMe technology itself (Non-Volatile Memory Express) is an optimized, low-latency, high-performance interface that uses an architecture and a set of protocols designed specifically for connecting SSDs in servers via the PCI Express bus. For CDI, this standard has been extended to NVMe-over-Fabrics - beyond local servers.

This specification allows flash devices to communicate over a network (using different network protocols and transmission media — see Figure 3), providing the same high performance and transmission delay as low as local NVMe devices. At the same time, there are practically no restrictions on the number of servers that NVMe-over-Fabrics devices can share or on the number of such storage devices that can be accessible to a single server.

The needs of today's big data-intensive applications exceed the capabilities of traditional data center architectures, especially in terms of scalability, performance, and efficiency. With the advent of CDI (component-disaggregated infrastructure), data center architects, cloud service providers, system integrators, storage software developers, and OEMs can provide storage and computing services with greater efficiency, flexibility, efficiency and ease of scaling, dynamically providing the required SLA for all workloads.

At the same time, the change in the volume of resources used (increase or decrease) occurs in such data centers discretely, with predetermined coefficients. For example, with a factor of 2, we can get such a series of configurations:

However, for a variety of tasks, these configurations prove to be economically inefficient, often customers have some intermediate configuration, for example, 6CPU, 16GB RAM, 100GB storage. Thus, we come to the understanding that the above universal approach to the allocation of data center resources is ineffective, especially during intensive work with big data (for example, fast data, analytics, artificial intelligence, machine learning). In such cases, users want to have more flexible control over the resources they use, they need the ability to independently scale up the processors used, memory and data storages, network channels. The ultimate goal of this idea is to create a flexible component infrastructure.

- 2CPU, 8 GB RAM, 40GB storage;

- 4CPU, 16GB RAM, 80GB storage;

- 8CPU, 32GB RAM, 160GB storage;

- ...

Fig. 1. Data-centric data center architecture.
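The discrete, factor-of-2 scaling described above can be sketched as a series of tiers plus a lookup for the smallest tier that covers a request. This is a minimal illustration that assumes all three resources grow together by the same factor, as in the example list; the `Config` type and the helper functions are hypothetical names, not part of any real provisioning system.

```python
from typing import List, NamedTuple


class Config(NamedTuple):
    cpu: int
    ram_gb: int
    storage_gb: int


def tier_series(base: Config, factor: int, count: int) -> List[Config]:
    """Build `count` configurations, each `factor` times larger than the previous one."""
    series = [base]
    while len(series) < count:
        prev = series[-1]
        series.append(Config(prev.cpu * factor, prev.ram_gb * factor, prev.storage_gb * factor))
    return series


def smallest_fitting(series: List[Config], need: Config) -> Config:
    """Return the first (smallest) tier that covers every resource in the request."""
    for cfg in series:
        if cfg.cpu >= need.cpu and cfg.ram_gb >= need.ram_gb and cfg.storage_gb >= need.storage_gb:
            return cfg
    raise ValueError("request exceeds the largest available configuration")


def overprovisioned(cfg: Config, need: Config) -> Config:
    """Resources allocated by the tier but not asked for by the customer."""
    return Config(cfg.cpu - need.cpu, cfg.ram_gb - need.ram_gb, cfg.storage_gb - need.storage_gb)


tiers = tier_series(Config(2, 8, 40), factor=2, count=4)
need = Config(6, 16, 100)
chosen = smallest_fitting(tiers, need)
print(chosen)                         # Config(cpu=8, ram_gb=32, storage_gb=160)
print(overprovisioned(chosen, need))  # Config(cpu=2, ram_gb=16, storage_gb=60)
```

For the intermediate request, the lookup lands on the 8 CPU tier, and the overprovisioned remainder is exactly what the customer pays for without using.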

In response to these requirements, hyperconverged infrastructure (HCI) emerged, combining all of the data center's computing resources, storage systems, and network channels into a single virtualized system (Fig. 1). However, this structure still requires installing additional servers to push the scalability limits (that is, to add storage, memory, or network channels). This led to an approach in which HCI is expanded with fixed modules, each containing processors, memory, and storage, which ultimately does not deliver the flexibility and predictable performance that modern data centers demand.

HCI is now being displaced by component-disaggregated infrastructure (CDI, Composable Disaggregated Infrastructure), which is designed to overcome the limitations of converged and hyperconverged IT solutions and give data centers greater flexibility.

Emergence of component-disaggregated infrastructure

To overcome the problems of the general-purpose data center architecture (a fixed ratio of resources, underutilization, and excessive redundancy), converged infrastructure was developed first: pre-configured hardware resources packaged within a single system. In it, compute, storage, and networking remain discrete components, and the amount of each that is consumed is configured in software. Converged systems then evolved into hyperconverged ones (HCI), in which all hardware resources are virtualized and the allocation of compute, storage, and network capacity is automated in software.

Although HCI combines all resources into a single virtualized system, this approach has drawbacks of its own. To give a client, for example, significantly more storage or RAM, or a wider network channel, an HCI architecture requires adding processor modules as well, even if they will not be used for computation. The result is a data center that is more flexible than earlier architectures but is still built from inflexible building blocks.
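To make the cost of this coupling concrete, the sketch below assumes a hypothetical HCI node with a fixed bundle of 16 CPUs, 128 GB RAM, and 10 TB of storage; these figures are illustrative, not taken from any vendor. Because resources can only be added a whole node at a time, the scarcest resource dictates the node count and the others are left stranded.

```python
import math
from typing import Dict

# Hypothetical HCI node: every node ships with the same fixed bundle of resources.
NODE: Dict[str, int] = {"cpu": 16, "ram_gb": 128, "storage_tb": 10}


def hci_nodes_needed(demand: Dict[str, int], node: Dict[str, int]) -> int:
    """Resources can only be added a whole node at a time, so the scarcest one sets the count."""
    return max(math.ceil(demand[k] / node[k]) for k in node)


def stranded(demand: Dict[str, int], node: Dict[str, int]) -> Dict[str, int]:
    """Capacity that is deployed (and paid for) but not requested by the workload."""
    n = hci_nodes_needed(demand, node)
    return {k: n * node[k] - demand[k] for k in node}


# A storage-heavy workload: modest compute, a lot of capacity.
demand = {"cpu": 32, "ram_gb": 256, "storage_tb": 80}
print(hci_nodes_needed(demand, NODE))  # 8
print(stranded(demand, NODE))          # {'cpu': 96, 'ram_gb': 768, 'storage_tb': 0}
```

Under these assumptions, 80 TB of storage forces eight nodes, which bring along 96 CPUs that the workload never asked for.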

According to IT surveys of mid-sized and large enterprise data centers, only about 50% of total available storage capacity is allocated for actual use, and applications use only about half of that allocated volume, so roughly a quarter of raw storage capacity actually holds application data. CPU utilization is likewise around 50%. Building from fixed units therefore leads to underutilization and fails to provide the necessary flexibility and predictable performance. The disaggregated model was created to solve these problems: it is easy to assemble from separate functional modules using software tools with open APIs.

Component-disaggregated infrastructure (CDI) is a data center architecture in which physical resources (compute, storage, and network links) are treated as services. User applications are provisioned in real time with all the resources their current workload needs, which lets the data center achieve optimal performance.
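The "resources as services" idea can be sketched as shared pools that hand out exactly the requested quantities and take them back when a workload finishes. This is a minimal illustration, not any product's API: the `ResourcePool` class, the `provision` and `release_all` helpers, and the pool capacities are all hypothetical.

```python
from typing import Dict


class ResourcePool:
    """Hypothetical shared pool of one resource type (CPU cores, GB of RAM, ...)."""

    def __init__(self, name: str, capacity: int) -> None:
        self.name = name
        self.capacity = capacity
        self.free = capacity

    def allocate(self, amount: int) -> int:
        if amount > self.free:
            raise RuntimeError(f"pool {self.name!r} has only {self.free} left")
        self.free -= amount
        return amount

    def release(self, amount: int) -> None:
        # Never report more free capacity than the pool physically has.
        self.free = min(self.capacity, self.free + amount)


# Illustrative capacities for three independent pools.
pools: Dict[str, ResourcePool] = {
    "cpu": ResourcePool("cpu", 64),
    "ram_gb": ResourcePool("ram_gb", 512),
    "storage_gb": ResourcePool("storage_gb", 10_000),
}


def provision(request: Dict[str, int]) -> Dict[str, int]:
    """Grant exactly the requested amounts, or nothing at all."""
    # Check every pool first so a failed request leaves no partial allocation.
    for kind, amount in request.items():
        if amount > pools[kind].free:
            raise RuntimeError(f"not enough {kind}")
    return {kind: pools[kind].allocate(amount) for kind, amount in request.items()}


def release_all(grant: Dict[str, int]) -> None:
    """Return a finished workload's resources to the shared pools."""
    for kind, amount in grant.items():
        pools[kind].release(amount)


grant = provision({"cpu": 6, "ram_gb": 16, "storage_gb": 100})
print({k: p.free for k, p in pools.items()})  # {'cpu': 58, 'ram_gb': 496, 'storage_gb': 9900}
release_all(grant)
print({k: p.free for k, p in pools.items()})  # {'cpu': 64, 'ram_gb': 512, 'storage_gb': 10000}
```

An intermediate request such as 6 CPU, 16 GB RAM, and 100 GB storage is served exactly, with nothing rounded up to a larger fixed configuration.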

Component-disaggregated model versus hyperconvergence

In a component-disaggregated infrastructure (Fig. 2), virtual servers are built by assembling resources from independent pools of compute systems, storage, and network devices, unlike HCI, where physical resources are tied to individual HCI servers. CDI servers can therefore be created and reconfigured as needed to meet the requirements of a specific workload. Through API access to the virtualization software, an application can request whatever resources it needs and have its server reconfigured immediately, in real time and without human intervention: a real step toward a self-managing data center.
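The difference from HCI shows up when a composed server is reconfigured. In the minimal sketch below, the `Fabric` and `ComposedServer` classes and their capacities are hypothetical stand-ins for whatever API the orchestration software actually exposes; a server grows its storage from the shared pool while its CPU and RAM stay exactly as they were.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Fabric:
    """Hypothetical disaggregated pools: free units of each resource type."""
    free: Dict[str, int]

    def take(self, kind: str, amount: int) -> None:
        if amount > self.free[kind]:
            raise RuntimeError(f"not enough {kind} in pool")
        self.free[kind] -= amount

    def give_back(self, kind: str, amount: int) -> None:
        self.free[kind] += amount


@dataclass
class ComposedServer:
    fabric: Fabric
    resources: Dict[str, int] = field(default_factory=dict)

    def resize(self, kind: str, new_amount: int) -> None:
        """Change one resource only; the others stay untouched (unlike adding an HCI node)."""
        delta = new_amount - self.resources.get(kind, 0)
        if delta > 0:
            self.fabric.take(kind, delta)  # raises before any state changes if the pool is short
        elif delta < 0:
            self.fabric.give_back(kind, -delta)
        self.resources[kind] = new_amount


fabric = Fabric({"cpu": 64, "ram_gb": 512, "storage_gb": 10_000})
server = ComposedServer(fabric)
for kind, amount in {"cpu": 6, "ram_gb": 16, "storage_gb": 100}.items():
    server.resize(kind, amount)

# The workload now needs much more storage but no extra CPU or RAM.
server.resize("storage_gb", 2_000)
print(server.resources)  # {'cpu': 6, 'ram_gb': 16, 'storage_gb': 2000}
print(fabric.free)       # {'cpu': 58, 'ram_gb': 496, 'storage_gb': 8000}
```

Shrinking works the same way: resizing storage back down returns the difference to the shared pool for other servers to use.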

Fig. 2. Hyperconverged (HCI) and component-disaggregated (CDI) models.

A key part of the CDI architecture is the internal interconnect that separates (disaggregates) a server's storage devices from its compute resources and makes them available to other applications. The main protocol used here is NVMe-over-Fabrics, which provides minimal end-to-end latency between applications and the storage devices themselves. As a result, CDI can give users all the benefits of direct-attached storage (low latency and high performance) while gaining efficiency and flexibility from shared resources.

Fig. 3. NVMe-over-Fabrics structure.

NVMe (Non-Volatile Memory Express) itself is an optimized, low-latency, high-performance interface whose architecture and protocol set were designed specifically for connecting SSDs inside servers over the PCI Express bus. NVMe-over-Fabrics extends this standard beyond the local server, which is what makes it suitable for CDI.

This specification lets flash devices communicate over a network, using various network protocols and transmission media (for example, RDMA transports such as RoCE and InfiniBand, Fibre Channel, or TCP; see Fig. 3), with performance and latency close to those of local NVMe devices. There are also practically no limits on how many servers can share NVMe-over-Fabrics devices, or on how many such devices a single server can access.
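The many-to-many sharing described above can be modeled as an access map from storage subsystems to the hosts allowed to connect to them. NVMe-over-Fabrics does identify subsystems and hosts by NVMe Qualified Names (NQNs), but the map, the helper functions, and the example NQNs below are a simplified, hypothetical sketch, not a real target's configuration interface.

```python
from typing import Dict, Set

# Hypothetical access map: subsystem NQN -> NQNs of hosts allowed to connect.
access: Dict[str, Set[str]] = {}


def allow(subsystem: str, host: str) -> None:
    """Let one more host reach a subsystem; the same subsystem can serve many hosts."""
    access.setdefault(subsystem, set()).add(host)


def subsystems_for(host: str) -> Set[str]:
    """Every subsystem a given host may attach, i.e. its view of the shared flash."""
    return {sub for sub, hosts in access.items() if host in hosts}


def hosts_for(subsystem: str) -> Set[str]:
    """Every host currently allowed to share a given subsystem."""
    return set(access.get(subsystem, set()))


# Illustrative NQNs in the nqn.<yyyy-mm>.<reverse-domain>:<name> form.
FLASH_A = "nqn.2024-01.com.example:flash-a"
FLASH_B = "nqn.2024-01.com.example:flash-b"
HOST_1 = "nqn.2024-01.com.example:host-1"
HOST_2 = "nqn.2024-01.com.example:host-2"

allow(FLASH_A, HOST_1)
allow(FLASH_A, HOST_2)  # one subsystem shared by two servers
allow(FLASH_B, HOST_2)  # one server attached to two subsystems

print(sorted(hosts_for(FLASH_A)))
print(sorted(subsystems_for(HOST_2)))
```

Nothing in the map limits how many hosts a subsystem lists or how many subsystems a host appears under, mirroring the sharing flexibility described in the text.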

The needs of today's data-intensive applications exceed the capabilities of traditional data center architectures, especially in scalability, performance, and efficiency. With the advent of CDI, data center architects, cloud service providers, system integrators, storage software developers, and OEMs can deliver storage and compute services with greater efficiency and flexibility and easier scaling, dynamically meeting the required SLA for every workload.