How we implemented cache on the Tarantool database

Good day!

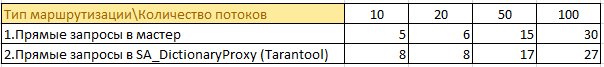

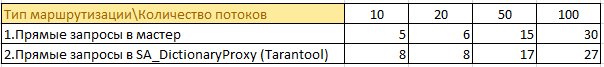


I want to share the story of how we implemented a cache on Tarantool, along with a few specifics of my work.

I work as a Java developer at a telecommunications company. My main task is implementing business logic on a platform the company bought from a vendor. Among its first peculiarities: it works over SOAP, and there is almost no caching apart from the JVM's own memory. That is certainly fine until the number of application instances exceeds two dozen...

As I worked with the platform and came to understand its peculiarities, we attempted to add caching. MongoDB was already running at the time, but caching on it brought no particular improvement, just as in testing.

After searching further for alternatives, and on the advice of my good friend mr_elzor, we decided to try Tarantool.

After a quick look, my only doubt was Lua, since I had never written a line of it before. But I pushed my doubts aside and started installing. Few people are likely interested in closed networks and firewalls, but my advice is to find a way around them and install everything from public sources.

Test servers: 8 CPUs, 16 GB RAM, 100 GB HDD, Debian 9.4.

I installed it following the instructions on the official site and got the example instance running. My next thought was a visual interface that would make support convenient. A quick search turned up tarantool-admin, which I set up. It runs in Docker and, at least for now, covers 100% of our support tasks.

But let's move on to the more interesting part.

My next idea was to run a master-slave configuration on a single server, since the documentation only contains examples with two separate servers.

After spending some time getting to grips with Lua and writing the configuration, I start the master:

```
# systemctl start tarantool@master
Job for tarantool@master.service failed because the control process exited with error code.
See "systemctl status tarantool@master.service" and "journalctl -xe" for details.
```

This left me stumped: I could not understand where the error came from, yet the instance was in the "loading" status:

```
# systemctl status tarantool@master
● tarantool@master.service - Tarantool Database Server
   Loaded: loaded (/lib/systemd/system/tarantool@.service; enabled; vendor preset: enabled)
   Active: activating (start) since Tue 2019-02-19 17:03:24 MSK; 17s ago
     Docs: man:tarantool(1)
  Process: 20111 ExecStop=/usr/bin/tarantoolctl stop master (code=exited, status=0/SUCCESS)
 Main PID: 20120 (tarantool)
   Status: "loading"
    Tasks: 5 (limit: 4915)
   CGroup: /system.slice/system-tarantool.slice/tarantool@master.service
           └─20120 tarantool master.lua <loading>

Feb 19 17:03:24 tarantuldb-tst4 systemd[1]: Starting Tarantool Database Server...
Feb 19 17:03:24 tarantuldb-tst4 tarantoolctl[20120]: Starting instance master...
Feb 19 17:03:24 tarantuldb-tst4 tarantoolctl[20120]: Run console at unix/:/var/run/tarantool/master.control
Feb 19 17:03:24 tarantuldb-tst4 tarantoolctl[20120]: started
```

Then I start the slave:

```
# systemctl start tarantool@slave2
Job for tarantool@slave2.service failed because the control process exited with error code.
See "systemctl status tarantool@slave2.service" and "journalctl -xe" for details.
```

Same error. At this point I was getting tense and had no idea what was happening, since the documentation says nothing at all about it... Checking the status shows that the slave did not start at all, although its status says "running":

```
# systemctl status tarantool@slave2
● tarantool@slave2.service - Tarantool Database Server
   Loaded: loaded (/lib/systemd/system/tarantool@.service; enabled; vendor preset: enabled)
   Active: failed (Result: exit-code) since Tue 2019-02-19 17:04:52 MSK; 27s ago
     Docs: man:tarantool(1)
  Process: 20258 ExecStop=/usr/bin/tarantoolctl stop slave2 (code=exited, status=0/SUCCESS)
  Process: 20247 ExecStart=/usr/bin/tarantoolctl start slave2 (code=exited, status=1/FAILURE)
 Main PID: 20247 (code=exited, status=1/FAILURE)
   Status: "running"

Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: tarantool@slave2.service: Unit entered failed state.
Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: tarantool@slave2.service: Failed with result 'exit-code'.
Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: tarantool@slave2.service: Service hold-off time over, scheduling restart.
Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: Stopped Tarantool Database Server.
Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: tarantool@slave2.service: Start request repeated too quickly.
Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: Failed to start Tarantool Database Server.
Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: tarantool@slave2.service: Unit entered failed state.
Feb 19 17:04:52 tarantuldb-tst4 systemd[1]: tarantool@slave2.service: Failed with result 'exit-code'.
```

Meanwhile, the master did start working:

```
# ps -ef | grep taran
taranto+ 20158     1  0 17:04 ?     00:00:00 tarantool master.lua <running>
root     20268  2921  0 17:06 pts/1 00:00:00 grep taran
```

Restarting the slave does not help. I wonder why?

I stop the master and repeat the steps in reverse order.

The slave is trying to start:

```
# ps -ef | grep taran
taranto+ 20399     1  0 17:09 ?     00:00:00 tarantool slave2.lua <loading>
```

I start the master and see that it has not come up; instead it switched to the orphan status, and the slave crashed altogether:

```
# ps -ef | grep taran
taranto+ 20428     1  0 17:09 ?     00:00:00 tarantool master.lua <orphan>
```

It gets even more interesting.

The slave's log shows that it did find the master and tried to synchronize:

```
2019-02-19 17:13:45.113 [20751] iproto/101/main D> binary: binding to 0.0.0.0:3302...
2019-02-19 17:13:45.113 [20751] iproto/101/main I> binary: bound to 0.0.0.0:3302
2019-02-19 17:13:45.113 [20751] iproto/101/main D> binary: listening on 0.0.0.0:3302...
2019-02-19 17:13:45.113 [20751] iproto D> cpipe_flush_cb: locking &endpoint->mutex
2019-02-19 17:13:45.113 [20751] iproto D> cpipe_flush_cb: unlocking &endpoint->mutex
2019-02-19 17:13:45.113 [20751] main D> cbus_endpoint_fetch: locking &endpoint->mutex
2019-02-19 17:13:45.113 [20751] main D> cbus_endpoint_fetch: unlocking &endpoint->mutex
2019-02-19 17:13:45.113 [20751] main/101/slave2 I> connecting to 1 replicas
2019-02-19 17:13:45.113 [20751] main/106/applier/replicator@tarantuldb-t D> => CONNECT
2019-02-19 17:13:45.114 [20751] main/106/applier/replicator@tarantuldb-t I> remote master 825af7c3-f8df-4db0-8559-a866b8310077 at 10.78.221.74:3301 running Tarantool 1.10.2
2019-02-19 17:13:45.114 [20751] main/106/applier/replicator@tarantuldb-t D> => CONNECTED
2019-02-19 17:13:45.114 [20751] main/101/slave2 I> connected to 1 replicas
2019-02-19 17:13:45.114 [20751] coio V> loading vylog 14
2019-02-19 17:13:45.114 [20751] coio V> done loading vylog
2019-02-19 17:13:45.114 [20751] main/101/slave2 I> recovery start
2019-02-19 17:13:45.114 [20751] main/101/slave2 I> recovering from `/var/lib/tarantool/cache_slave2/00000000000000000014.snap'
2019-02-19 17:13:45.114 [20751] main/101/slave2 D> memtx_tuple_new(47) = 0x7f99a4000080
2019-02-19 17:13:45.114 [20751] main/101/slave2 I> cluster uuid 4035b563-67f8-4e85-95cc-e03429f1fa4d
2019-02-19 17:13:45.114 [20751] main/101/slave2 D> memtx_tuple_new(11) = 0x7f99a4004080
2019-02-19 17:13:45.114 [20751] main/101/slave2 D> memtx_tuple_new(17) = 0x7f99a4008068
```

And the attempt was successful:

```
2019-02-19 17:13:45.118 [20751] main/101/slave2 D> memtx_tuple_new(40) = 0x7f99a40004c0
2019-02-19 17:13:45.118 [20751] main/101/slave2 I> assigned id 1 to replica 825af7c3-f8df-4db0-8559-a866b8310077
2019-02-19 17:13:45.118 [20751] main/101/slave2 D> memtx_tuple_new(40) = 0x7f99a4000500
2019-02-19 17:13:45.118 [20751] main/101/slave2 I> assigned id 2 to replica 403c0323-5a9b-480d-9e71-5ba22d4ccf1b
2019-02-19 17:13:45.118 [20751] main/101/slave2 I> recover from `/var/lib/tarantool/slave2/00000000000000000014.xlog'
2019-02-19 17:13:45.118 [20751] main/101/slave2 I> done `/var/lib/tarantool/slave2/00000000000000000014.xlog'
```

It even started:

```
2019-02-19 17:13:45.119 [20751] main/101/slave2 D> systemd: sending message 'STATUS=running'
```

But then, for reasons unknown, the start failed:

```
2019-02-19 17:13:45.129 [20751] main/101/slave2 D> SystemError at /build/tarantool-1.10.2.146/src/coio_task.c:416
2019-02-19 17:13:45.129 [20751] main/101/slave2 tarantoolctl:532 E> Start failed: /usr/local/share/lua/5.1/http/server.lua:1146: Can't create tcp_server: Input/output error
```

Starting the slave again does not help.

Now I delete the files created by the instances. In my case, that is everything in the /var/lib/tarantool directory.

I start the slave first, and only then the master. And lo and behold...

```
# ps -ef | grep tara
taranto+ 20922     1  0 17:20 ?     00:00:00 tarantool slave2.lua <running>
taranto+ 20933     1  1 17:21 ?     00:00:00 tarantool master.lua <running>
```

I found no explanation for this behavior other than "a feature of this software."

The situation recurs every time the server is fully rebooted.

On closer study of Tarantool's architecture, it turns out that each instance is meant to use only one vCPU, which leaves plenty of resources free.

Following this logic, a server with n vCPUs can run one master and n-2 read-only slaves.

Since the test server has 8 vCPUs, we can run the master and 6 read-only instances.
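The sizing rule is simple arithmetic. The sketch below spells it out as a hypothetical planning helper. It makes three assumptions that the article does not state:

- Instances get consecutive ports starting from the master's 3301. The logs show the master on 3301 and slave2 on 3302.
- Slaves are named to match `slave2`, `slave3`, ...
- The one vCPU not assigned to any instance is left for the OS.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lays out Tarantool instances on one server using the
// "one instance per vCPU, n vCPUs -> 1 master + (n - 2) slaves" rule.
final class InstancePlan {
    final String name;
    final int port;
    final boolean readOnly;

    InstancePlan(String name, int port, boolean readOnly) {
        this.name = name;
        this.port = port;
        this.readOnly = readOnly;
    }

    static List<InstancePlan> forServer(int vcpus, int basePort) {
        if (vcpus < 3) {
            throw new IllegalArgumentException("need at least 3 vCPUs for a master and one slave");
        }
        List<InstancePlan> plan = new ArrayList<>();
        // The master takes one vCPU and the base port.
        plan.add(new InstancePlan("master", basePort, false));
        // One vCPU stays unassigned (assumption: left for the OS and other processes),
        // so the remaining n - 2 vCPUs each get a read-only slave on the next port.
        for (int i = 1; i <= vcpus - 2; i++) {
            plan.add(new InstancePlan("slave" + (i + 1), basePort + i, true));
        }
        return plan;
    }

    public static void main(String[] args) {
        for (InstancePlan p : forServer(8, 3301)) {
            System.out.println(p.name + " listen=" + p.port + " read_only=" + p.readOnly);
        }
    }
}
```

With 8 vCPUs this lists the master on 3301 and `slave2` to `slave7` on 3302-3307. Two such servers give 2 masters and 12 slaves.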

I copy the slave's instance file, change the ports and start it; this is how additional slaves are added.
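Copying a slave file and changing the port amounts to rendering a template. This is a minimal sketch. It assumes a slave's instance file is essentially a `box.cfg` call with the standard Tarantool options `listen`, `read_only` and `replication`. The article does not show its actual files, so everything else they may contain (memory limits, data directories, the http.server setup and its port) is omitted. The `replicator` user and the master address `10.78.221.74:3301` come from the slave's log; the password is a placeholder.

```java
// Hypothetical renderer for a slave's instance file (e.g. slave3.lua).
// Only the iproto port changes between slaves; the replication source stays the same.
final class ReplicaConfig {
    static String render(String masterHost, int masterPort, int slavePort,
                         String replUser, String replPassword) {
        // Replication source URI in the form user:password@host:port
        String source = replUser + ":" + replPassword + "@" + masterHost + ":" + masterPort;
        return "box.cfg{\n"
                + "    listen = " + slavePort + ",\n"
                + "    read_only = true,\n"
                + "    replication = { '" + source + "' },\n"
                + "}\n";
    }

    public static void main(String[] args) {
        // slave3: same master as slave2, next port.
        System.out.print(render("10.78.221.74", 3301, 3303, "replicator", "CHANGE_ME"));
    }
}
```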

Important! When adding another instance, you must register it on the master.

But you must first start a new slave, and only then restart the master.

Example

I already had a configuration running with a master and two slaves.

I decided to add a third slave.

I registered it on the master and restarted the master first, and this is what I saw:

```
# ps -ef | grep tara
taranto+ 20922     1  0 Feb19 ?     00:00:29 tarantool slave2.lua <running>
taranto+ 20965     1  0 Feb19 ?     00:00:29 tarantool slave3.lua <running>
taranto+ 21519     1  0 09:16 ?     00:00:00 tarantool master.lua <orphan>
```

That is, our master ended up orphaned, and replication fell apart.

Starting the new slave no longer helps and results in an error:

```
# systemctl restart tarantool@slave4
Job for tarantool@slave4.service failed because the control process exited with error code.
See "systemctl status tarantool@slave4.service" and "journalctl -xe" for details.
```

And the log contains a rather uninformative entry:

```
2019-02-20 09:20:10.616 [21601] main/101/slave4 I> bootstrapping replica from 3c77eb9d-2fa1-4a27-885f-e72defa5cd96 at 10.78.221.74:3301
2019-02-20 09:20:10.617 [21601] main/106/applier/replicator@tarantuldb-t I> can't join/subscribe
2019-02-20 09:20:10.617 [21601] main/106/applier/replicator@tarantuldb-t xrow.c:896 E> ER_READONLY: Can't modify data because this instance is in read-only mode.
2019-02-20 09:20:10.617 [21601] main/106/applier/replicator@tarantuldb-t D> => STOPPED
2019-02-20 09:20:10.617 [21601] main/101/slave4 xrow.c:896 E> ER_READONLY: Can't modify data because this instance is in read-only mode.
2019-02-20 09:20:10.617 [21601] main/101/slave4 F> can't initialize storage: Can't modify data because this instance is in read-only mode.
```

We stop the master and start the new slave. It reports an error, just as on the first start, but we can see that it is in the "loading" status:

```
# ps -ef | grep tara
taranto+ 20922     1  0 Feb19 ?     00:00:29 tarantool slave2.lua <running>
taranto+ 20965     1  0 Feb19 ?     00:00:30 tarantool slave3.lua <running>
taranto+ 21659     1  0 09:23 ?     00:00:00 tarantool slave4.lua <loading>
```

But as soon as the master starts, the new slave crashes, and the master never reaches the "running" status:

```
# ps -ef | grep tara
taranto+ 20922     1  0 Feb19 ?     00:00:29 tarantool slave2.lua <running>
taranto+ 20965     1  0 Feb19 ?     00:00:30 tarantool slave3.lua <running>
taranto+ 21670     1  0 09:23 ?     00:00:00 tarantool master.lua <orphan>
```

In this situation there is only one way out. As described earlier, I delete the files created by the instances and start the slaves first, then the master.

```
# ps -ef | grep tarantool
taranto+ 21892     1  0 09:30 ?     00:00:00 tarantool slave4.lua <running>
taranto+ 21907     1  0 09:30 ?     00:00:00 tarantool slave3.lua <running>
taranto+ 21922     1  0 09:30 ?     00:00:00 tarantool slave2.lua <running>
taranto+ 21931     1  0 09:30 ?     00:00:00 tarantool master.lua <running>
```

Everything started successfully.

So, by trial and error, I found out how to configure and start replication correctly.
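The procedure found by trial and error can be written down as an ordered list of commands. This sketch only builds the command strings and does not execute them. It assumes systemd units named `tarantool@<instance>` as in the logs, and the `/var/lib/tarantool` directory that was wiped above. The wipe deletes every snapshot and xlog, so it only suits data that can be reloaded, as with a cache.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that builds (but does not run) the systemctl sequences
// found by trial and error: slaves always start before the master.
final class StartupOrder {
    private static final String DATA_DIR = "/var/lib/tarantool";

    // Recovery after a full reboot or a broken replica set.
    static List<String> cleanRestart(String master, List<String> slaves) {
        List<String> cmds = new ArrayList<>();
        cmds.add("systemctl stop tarantool@" + master);
        for (String s : slaves) {
            cmds.add("systemctl stop tarantool@" + s);
        }
        // Removes every snapshot and xlog: all stored data is lost.
        cmds.add("rm -rf " + DATA_DIR + "/*");
        for (String s : slaves) {
            cmds.add("systemctl start tarantool@" + s);
        }
        cmds.add("systemctl start tarantool@" + master); // master always last
        return cmds;
    }

    // Adding a slave that is already registered on the master:
    // start the new slave first, and only then restart the master.
    static List<String> addSlave(String master, String newSlave) {
        return List.of(
                "systemctl start tarantool@" + newSlave,
                "systemctl restart tarantool@" + master);
    }

    public static void main(String[] args) {
        cleanRestart("master", List.of("slave2", "slave3", "slave4")).forEach(System.out::println);
    }
}
```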

As a result, we ended up with the following configuration:

- 2 servers;
- 2 masters in hot standby;
- 12 slaves, all active.

On the Tarantool side we used http.server, so as not to build an extra adapter (remember the vendor, the platform and SOAP) or bolt a client library onto every business process.

To avoid divergence between the masters, we set up a backup rule on the balancer (NetScaler, HAProxy or whichever you prefer): insert, update and delete operations go only to the first active master.

Meanwhile, the second master simply replicates the records from the first. The slaves connect to the first master listed in their configuration, which is exactly what we need here.
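The balancer rule can be modeled in a few lines. This is not NetScaler or HAProxy configuration; in HAProxy the same effect is usually obtained by marking the second master as a `backup` server. It is a sketch with a hypothetical health-check predicate and made-up `host:port` strings. Round-robin reads over the slaves are an assumption; the article only specifies how writes are routed.

```java
import java.util.List;
import java.util.Optional;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Predicate;

// Hypothetical model of the balancer's "reserve" rule.
final class CacheRouter {
    private final List<String> masters;   // in priority order: first = active, second = hot standby
    private final List<String> slaves;
    private final Predicate<String> isHealthy;
    private final AtomicInteger next = new AtomicInteger();

    CacheRouter(List<String> masters, List<String> slaves, Predicate<String> isHealthy) {
        this.masters = List.copyOf(masters);
        this.slaves = List.copyOf(slaves);
        this.isHealthy = isHealthy;
    }

    // insert/update/delete: only the first healthy master, never load-balanced,
    // so the two masters cannot receive conflicting writes.
    Optional<String> writeTarget() {
        return masters.stream().filter(isHealthy).findFirst();
    }

    // Reads (assumption): round-robin over healthy slaves, skipping unhealthy ones.
    Optional<String> readTarget() {
        int n = slaves.size();
        for (int i = 0; i < n; i++) {
            String candidate = slaves.get(Math.floorMod(next.getAndIncrement(), n));
            if (isHealthy.test(candidate)) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty();
    }
}
```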

In Lua, we implemented key-value CRUD operations. For now, that is enough to solve the problem.

Because of the peculiarities of working with SOAP, we implemented a proxy business process that contains the logic for talking to Tarantool over HTTP.
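This is a sketch of what the HTTP side of such a proxy could look like in Java 11+, using `java.net.http.HttpClient`. Several details are hypothetical because the article does not show its http.server routes:

- the `/kv/<key>` routes;
- plain-text bodies;
- the convention that HTTP 404 means a missing key.

The timeouts are illustrative values, not the project's.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

// Hypothetical client for key-value CRUD routes exposed by Tarantool's http.server.
final class TarantoolKvClient {
    private final HttpClient http = HttpClient.newBuilder()
            .connectTimeout(Duration.ofMillis(200))
            .build();
    private final String base;

    TarantoolKvClient(String baseUrl) {
        this.base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
    }

    // Builds the request without sending it, so routing can be checked offline.
    HttpRequest request(String method, String key, String body) {
        // URLEncoder does form encoding; turn '+' back into %20 for a path segment.
        String segment = URLEncoder.encode(key, StandardCharsets.UTF_8).replace("+", "%20");
        HttpRequest.Builder b = HttpRequest.newBuilder(URI.create(base + "kv/" + segment))
                .timeout(Duration.ofMillis(500));
        switch (method) {
            case "GET":
                return b.GET().build();
            case "PUT":
                return b.PUT(HttpRequest.BodyPublishers.ofString(body, StandardCharsets.UTF_8)).build();
            case "DELETE":
                return b.DELETE().build();
            default:
                throw new IllegalArgumentException("unsupported method: " + method);
        }
    }

    // Returns null when the key is absent (assumed to be signalled by HTTP 404).
    String get(String key) throws IOException, InterruptedException {
        HttpResponse<String> resp = http.send(request("GET", key, null), HttpResponse.BodyHandlers.ofString());
        if (resp.statusCode() == 404) {
            return null;
        }
        requireSuccess(resp.statusCode());
        return resp.body();
    }

    void put(String key, String value) throws IOException, InterruptedException {
        requireSuccess(http.send(request("PUT", key, value), HttpResponse.BodyHandlers.discarding()).statusCode());
    }

    void delete(String key) throws IOException, InterruptedException {
        requireSuccess(http.send(request("DELETE", key, null), HttpResponse.BodyHandlers.discarding()).statusCode());
    }

    private static void requireSuccess(int status) throws IOException {
        if (status / 100 != 2) {
            throw new IOException("unexpected HTTP status " + status);
        }
    }
}
```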

If data for the key is present, it is returned immediately. If not, a request is sent to the master system, and the result is saved in Tarantool.
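The lookup described above is the cache-aside pattern. This is a minimal sketch. `KeyValueStore` is a hypothetical abstraction standing in for the HTTP calls to Tarantool, and the `Function` stands in for the SOAP call to the master system. The in-memory store only exists so the logic can be tried without Tarantool.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical abstraction over the cache (Tarantool over HTTP in the article's setup).
interface KeyValueStore {
    Optional<String> get(String key);

    void put(String key, String value);
}

// Cache-aside lookup: hit -> return cached value; miss -> ask the master system, store, return.
final class CacheAside {
    private final KeyValueStore cache;
    private final Function<String, String> masterSystem; // hypothetical stand-in for the SOAP call

    CacheAside(KeyValueStore cache, Function<String, String> masterSystem) {
        this.cache = cache;
        this.masterSystem = masterSystem;
    }

    String lookup(String key) {
        Optional<String> cached = cache.get(key);
        if (cached.isPresent()) {
            return cached.get();
        }
        String value = masterSystem.apply(key);
        if (value != null) {
            cache.put(key, value); // the next lookup for this key is served from the cache
        }
        return value;
    }
}

// In-memory store for trying the logic without a Tarantool instance.
final class InMemoryStore implements KeyValueStore {
    private final Map<String, String> data = new ConcurrentHashMap<>();

    @Override
    public Optional<String> get(String key) {
        return Optional.ofNullable(data.get(key));
    }

    @Override
    public void put(String key, String value) {
        data.put(key, value);
    }
}
```

No expiry or invalidation is shown, since the article does not describe one. Two concurrent misses on the same key will both reach the master system.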

As a result, in tests a single business process handles up to 4k requests. Tarantool's response time is ~1 ms, and the average response time is up to 3 ms.

Here is some information from the tests:

Before: 50 business processes went to 4 master systems and cached the data in their own memory. Every instance held a full duplicate of the information. Given how much Java already loves memory... not the best prospect.

Now: 50 business processes request information through the cache. The information from the 4 master systems is stored in one place instead of being cached in each instance's memory. This significantly reduced the load on the master system, eliminated duplicate data, and lowered memory consumption on the business-logic instances.

An example of how much data is stored in Tarantool's memory:

By the end of the day these numbers can double, but performance does not degrade.

In production, the current version handles 2k-2.5k requests per second of real load. The average response time is the same as in tests: up to 3 ms.

A look at htop on one of the Tarantool servers shows that the instances are mostly idling:

Summary

Despite all the quirks and nuances of Tarantool, it can deliver great performance.

I hope the project keeps developing and these rough edges get resolved.