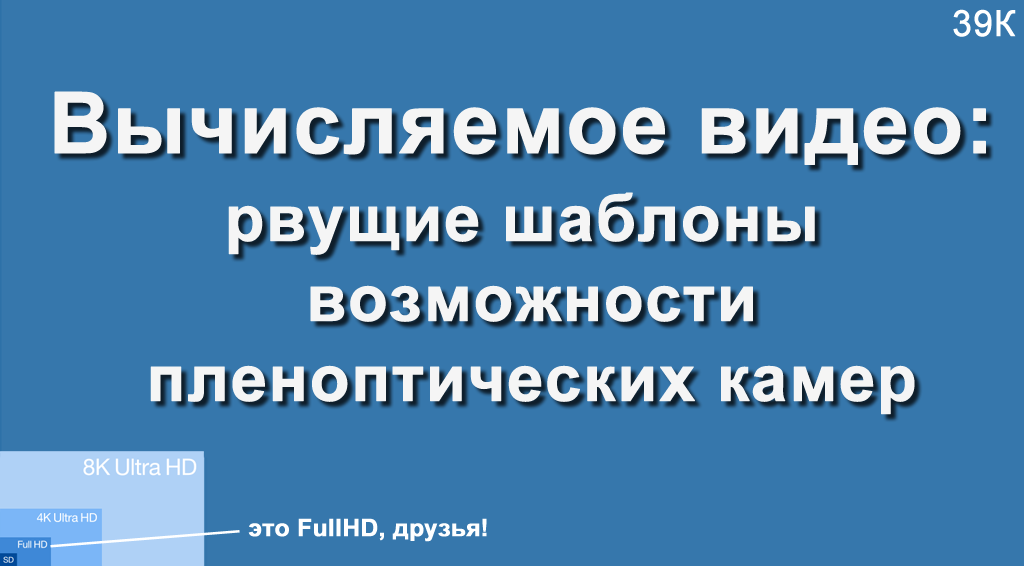

Computed video in 755 megapixels: plenoptics yesterday, today and tomorrow

Some time ago, I had a chance to give a lecture at VGIK, and there were many people from the cinematography department in the audience. I asked them: “What is the highest resolution you have ever shot at?” It turned out that about a third had shot in 4K (8 megapixels) and the rest in no more than 2K (2 megapixels). It was a challenge! I had to tell them about a camera with a resolution of 755 megapixels (raw resolution, to be precise, since its final output is 4K) and about the enchanting possibilities this opens up for professional shooting.

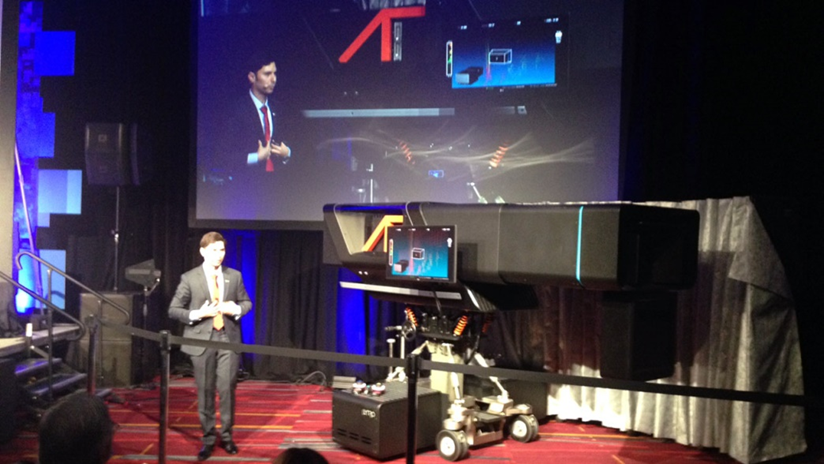

The camera itself looks like this (a kind of little elephant):

Moreover, I'll let you in on a terrible secret: to take this picture, a flattering angle and a bigger person were chosen. I have handled this camera in person, and I can say that it looks much larger. The picture below with Jon Karafin, who is about my height, reflects the scale of the disaster more accurately:

If you are curious about the possibilities of computed video that are rarely written about, the whole truth is below the cut! :)

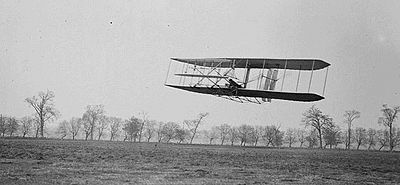

Discussions on the possibilities of plenoptics remind me of discussions about the first aircraft:

What people said:

- It is very expensive ...

- YES!

- It is completely impractical and now does not pay off ...

- YES!

- It is dangerous to use ...

- Damn it, YES!

- It looks wretched!

- Damn, YES, but it FLIES, you see, it F-L-I-E-S!!!

Fifty years later, these experiments gave rise to a fundamentally new, convenient and fairly safe way of crossing oceans (and not only oceans).

The situation here is the same. Absolutely the same! Today it requires unreasonable expense, is damn inconvenient to use and looks wretched, but it really FLIES! And understanding the principles and the emerging prospects is inspiring beyond belief (at least for your humble servant)!

The monster in the photo above is somewhat reminiscent of the first television cameras, which were also large, had a huge lens, and swiveled on a special mount:

Source: Television coverage of the 1936 Olympics at the very dawn of television

Much looks similar here: again a huge lens (because there is again not enough light), a heavy camera on a special suspension, and enormous size:

Source: Lytro poised to forever change filmmaking

A few interesting details: the “case” underneath is a liquid cooling system; if it fails, the camera cannot keep shooting.

The large rectangle under the lens (clearly visible in the first photo above) is not a light but a lidar: a laser rangefinder that captures the three-dimensional scene in front of the camera.

The thick black cable in the lower left of the background is a bundle of fiber-optic cables about 4 centimeters in diameter, through which the camera sends a monstrous stream of data to a dedicated storage system.

Those in the know have already recognized Lytro Cinema, of which three units were built. The first, which is the one that usually appears in pictures, is easy to recognize by the single lidar under the lens. A year and a half later, a second camera was built that fixed the structural problems of the first and had two lidars (and, alas, almost no one writes about it). Progress in lidars, for example, was so great over that year and a half that the two lidars of the second camera produced 10 times more points than the single lidar of the first. The third camera was supposed to have roughly the same resolution (to satisfy filmmakers' requirements) but be half the size (which is critical for practical use). But... more on that later.

In any case, while fully acknowledging the outstanding role Lytro played in popularizing plenoptic shooting, I would like to note that Lytro is not the only game in town. A similar camera was built at the Fraunhofer Institute, Raytrix, a manufacturer of plenoptic cameras, is alive and well, and I was not allowed to photograph the Lytro Cinema because the company was very wary of several Chinese companies actively working in this direction (I could not find recent information on them).

So let's list, point by point, all the advantages that plenoptics offers and that are almost never written about, but which are exactly why it is guaranteed to succeed.

Instead of an introduction

On Habr alone, Lytro alone is mentioned on roughly 300 pages, so we will be brief.

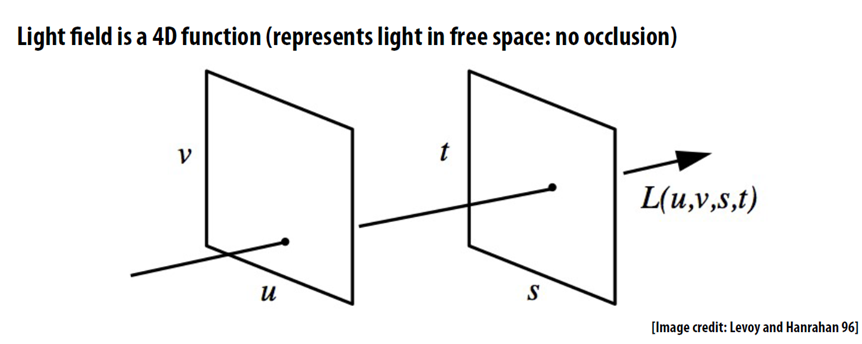

The key concept in plenoptic shooting is the light field: at each point we record not a single pixel color but a two-dimensional matrix of pixels, turning a miserable two-dimensional frame into a proper four-dimensional one (so far usually with very low resolution along t and s):

The English Wikipedia article on the light field, and the Russian one after it, says that “the phrase ‘light field’ was used by A. Gershun in ... 1936.” In fairness, we note that it was not a

even at that time, these works were quite applied in nature, and 6 years later, at the height of the war in 1942, the author received the Stalin Prize of the second degree for a blackout method.

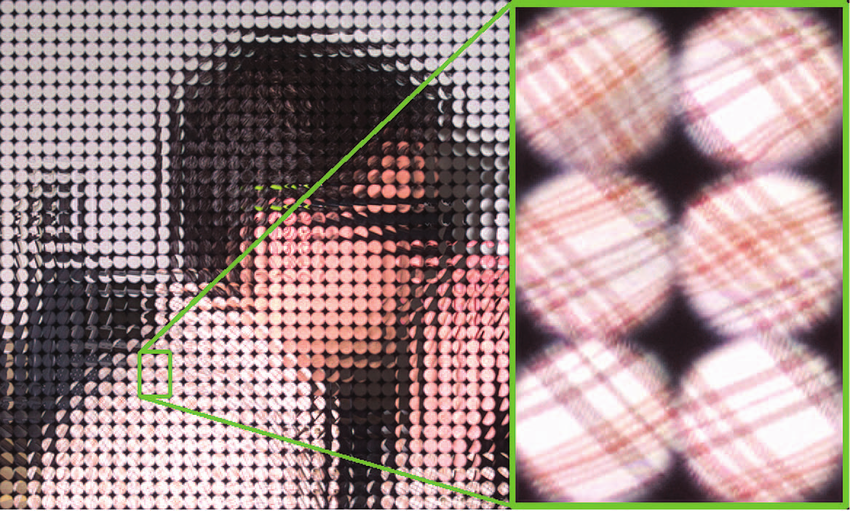

In practice, a four-dimensional light-field frame is captured with an array of microlenses placed in front of the camera's sensor:

Source: plenoptic.info

In fact, it was precisely because we have an array of microlenses with small gaps between them that Lytro Cinema became possible: its sensor was assembled from an array of sensors whose boundaries fall on the boundaries between lenses. Liquid cooling was needed to cool this very hot array.

Source: Using Focused Plenoptic Cameras for Rich Image Capture

As a result, we get the best-known capability of plenoptic cameras, which only the lazy have not written about: changing the focus distance after the shot has been taken (these cute birds would not have waited for you to focus):

Source: the now-defunct Lytro website
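Refocusing after the fact is usually explained as "shift and add": every viewpoint inside the lens sees the scene slightly displaced, so shifting the views by an amount that matches a chosen depth and averaging them brings that depth into focus. Below is a minimal sketch under strong simplifying assumptions: a "flatland" light field with one-dimensional views, integer shifts, and the illustrative names `refocus` and `make_views`, none of which come from Lytro's software.

```python
def refocus(views, slope):
    """Shift-and-add refocusing of a simplified 1D ("flatland") light field.

    views[u][x] is the intensity seen from viewpoint u at pixel x.
    slope is the per-view shift in pixels that selects the focal plane.
    """
    width = len(views[0])
    center = len(views) // 2
    result = []
    for x in range(width):
        total, count = 0.0, 0
        for u, view in enumerate(views):
            src = x + slope * (u - center)
            if 0 <= src < width:  # ignore samples that fall off the frame
                total += view[src]
                count += 1
        result.append(total / count if count else 0.0)
    return result


def make_views(width, n_views, point_x, disparity):
    """Hypothetical test scene: one bright point whose depth gives it
    a displacement of `disparity` pixels between neighboring views."""
    center = n_views // 2
    views = []
    for u in range(n_views):
        row = [0.0] * width
        row[point_x + disparity * (u - center)] = 1.0
        views.append(row)
    return views


views = make_views(width=11, n_views=3, point_x=5, disparity=2)
sharp = refocus(views, slope=2)    # focal plane placed on the point
blurred = refocus(views, slope=0)  # focal plane placed elsewhere
print(max(sharp), max(blurred))
```

With the matching slope the point collapses back into a single bright pixel; with any other slope its energy is spread over several pixels, which is exactly the defocus blur.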

Much less has been written about how plenoptics looks at a resolution suitable for cinema:

Source: Watch Lytro Change Cinematography Forever

I'll let you in on another secret: computed focus is just the tip of the plenoptics iceberg. The 90% of capabilities that stay below journalists' level of interest are, in my opinion, even more interesting. Many of them deserve articles of their own, but let's at least correct the injustice and name them. Let's go!

Computed Aperture Shape

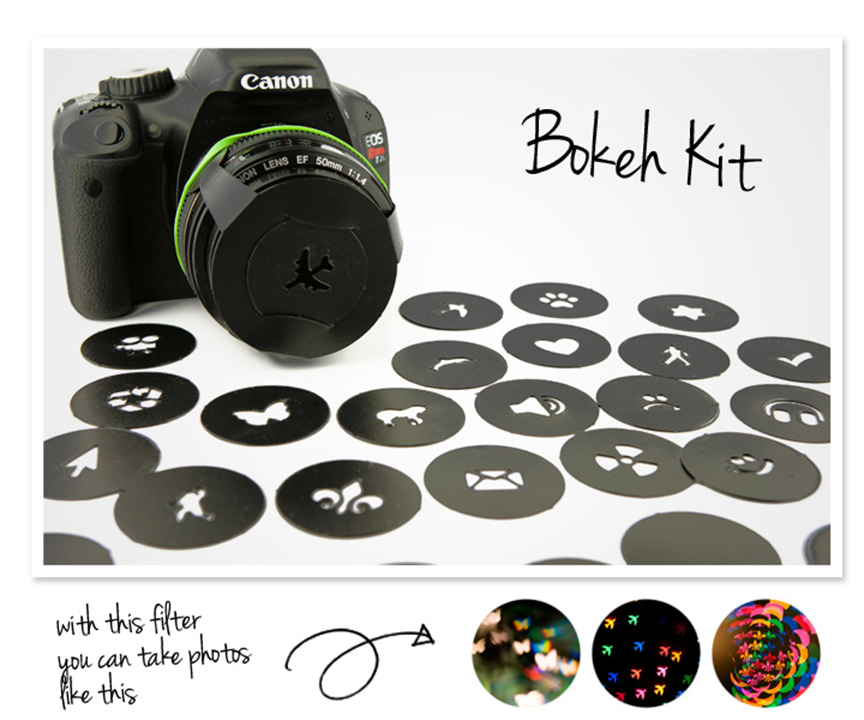

Since we are talking about defocus, it is worth mentioning the so-called bokeh effect, in which out-of-focus highlights are not necessarily round.

For photographers there are so-called bokeh kits, which produce highlights of different shapes:

This gives quite pretty pictures, and the photo below clearly shows that even the stones in the foreground have heart-shaped highlights, only smaller ones. Clearly, blurring an image this naturally in Photoshop is extremely difficult; at the very least you would want a depth map:

For plenoptics, meanwhile, “computing” a different aperture is a relatively easy task:

So! We have computed the focus distance and the aperture shape. Let's move on, it gets more interesting!
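One common way to think about a computed aperture is as a weight mask over the viewpoints inside the lens: views inside the desired shape are summed, the rest are ignored. The sketch below assumes a tiny 3×3 grid of sub-aperture views and a plus-shaped mask; `render_with_aperture` and `defocused_point` are hypothetical helper names used only for this illustration.

```python
def render_with_aperture(views, mask):
    """Sum sub-aperture views weighted by a synthetic aperture mask.

    views[v][u] is the 2D image (list of rows) seen through sub-aperture (u, v).
    mask[v][u] is its weight: 1 inside the desired aperture shape, 0 outside.
    """
    height, width = len(views[0][0]), len(views[0][0][0])
    total = sum(sum(row) for row in mask)
    out = [[0.0] * width for _ in range(height)]
    for v, mask_row in enumerate(mask):
        for u, weight in enumerate(mask_row):
            if weight:
                image = views[v][u]
                for y in range(height):
                    for x in range(width):
                        out[y][x] += weight * image[y][x] / total
    return out


def defocused_point(size, grid, disparity):
    """Hypothetical test scene: a single out-of-focus bright point.

    Each sub-aperture sees the point displaced in proportion to its
    offset from the aperture center.
    """
    c, g = size // 2, grid // 2
    views = []
    for v in range(grid):
        row = []
        for u in range(grid):
            image = [[0.0] * size for _ in range(size)]
            image[c + disparity * (v - g)][c + disparity * (u - g)] = 1.0
            row.append(image)
        views.append(row)
    return views


plus_mask = [[0, 1, 0],
             [1, 1, 1],
             [0, 1, 0]]
bokeh = render_with_aperture(defocused_point(size=5, grid=3, disparity=1), plus_mask)
for row in bokeh:
    print("".join("#" if value > 0 else "." for value in row))
```

The out-of-focus point is rendered in the shape of the mask, which is the bokeh-kit effect obtained purely by computation. A real light field would need many more views to draw something as detailed as a heart.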

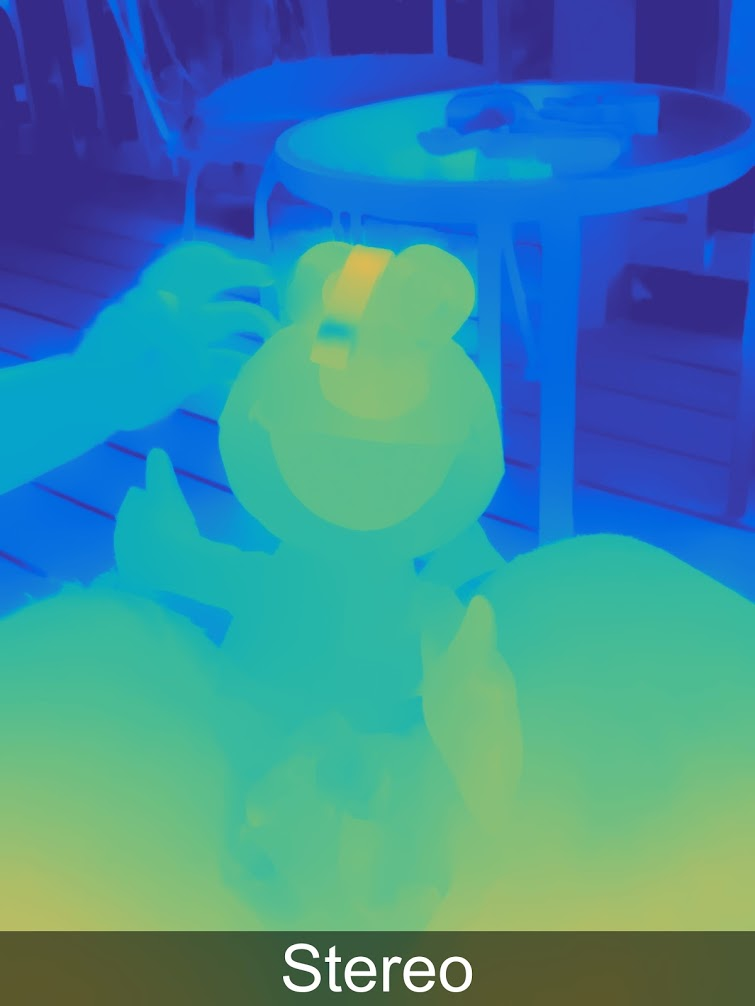

Computed Stereo

A very useful feature of the plenoptic sensor, which almost no one mentions but which is already giving it a second life, is the ability to compute the image from different viewpoints. How far the viewpoint can move is determined by the size of the lens. If the lens is small, the frame can shift only slightly left and right, as in this example (that this is a stereo photo can be seen by looking at the chest of the nearer bird):

Source: the now-defunct Lytro website

If the lens is large, as in Lytro Cinema, the shooting point can be shifted by up to 10 centimeters. Recall that the distance between our eyes is about 6.5 centimeters, so with a maximum baseline of 10 cm you can shoot both wide shots (which will look more “three-dimensional” than they do to the naked eye) and close-ups (with any parallax that causes no discomfort). In effect, this means we can shoot full stereo video with a single lens. Moreover, it will be not just stereo but stereo of perfect quality and, which sounds fantastic today, with the distance between the optical axes of the virtual cameras adjustable AFTER shooting. Both are absolutely unheard-of capabilities that could radically change stereo shooting in the future.

Since the future belongs to three-dimensional shooting one way or another, let us dwell on these two points in more detail. It is no secret that most 3D films today, especially blockbusters, are not shot in 3D but converted to it. This is because a long list of problems arises during stereo shooting (part of the list is below):

- Frames can be rotated, shifted vertically or scaled relative to one another (don't say this is easy to fix; look at how often such frames make it into movie releases - it is a quiet horror... and a headache). Plenoptic stereo, by contrast, is PERFECTLY aligned, even if the operator decides to zoom (because of the mechanics in two separate cameras, making the zoom strictly synchronous is extremely difficult).

- Frames can differ in color, especially if the shot requires a small distance between the cameras' optical axes and you have to use a beam splitter (there was a detailed post about this). So where shooting any object with glare (a car in the background on a sunny day) dooms us to post-production fun with the vast majority of cameras, with plenoptics we get a PERFECT picture with any glare. It's a holiday!

- Frames can differ in sharpness - again a tangible problem with beam splitters, and one that is completely absent in plenoptics. Whatever sharpness is needed is what we compute, absolutely PERFECTLY matched between the views.

- Frames can drift in time. Colleagues who worked with the Stalingrad footage described timestamp discrepancies of 4 frames between the tracks, which is why the clapperboard is still relevant. We found more than 500 scenes with time differences in 105 films. Time differences are unfortunately hard to detect, especially in scenes with slow motion, and at the same time they are the most painful artifact according to our measurements. With plenoptics, we get PERFECT time synchronization.

- Another headache in 3D shooting is excessive parallax, which causes discomfort when viewing on a big screen: your eyes may have to diverge for objects “beyond infinity” or converge too much for some foreground objects. Computing parallax correctly is a separate, difficult subject that camera operators must master, and an object that accidentally enters the frame can ruin a take by making it uncomfortable. With plenoptics, we choose the parallax ourselves AFTER shooting, so any scene can be computed with PERFECT parallax; moreover, the same footage can easily be recomputed for large and small screens. This is so-called parallax adjustment, which is normally extremely difficult and expensive to do without loss of quality, especially if there are translucent objects or edges in the foreground.
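To make the last point concrete, here is a small sketch of how a virtual stereo base could be chosen per screen after shooting. It assumes parallel virtual cameras with a fixed convergence plane, so that background disparity scales linearly with the baseline, and it uses the 6.5 cm and 10 cm figures quoted above; the function names and the example numbers are illustrative only.

```python
EYE_SEPARATION_CM = 6.5  # approximate distance between the eyes, from the text
MAX_BASELINE_CM = 10.0   # approximate maximum viewpoint shift of Lytro Cinema, from the text


def screen_parallax_cm(disparity_fraction, screen_width_cm):
    """On-screen parallax for a disparity given as a fraction of image width."""
    return disparity_fraction * screen_width_cm


def baseline_for_screen(background_disparity, screen_width_cm):
    """Largest virtual baseline that keeps background parallax within the eye separation.

    background_disparity is the disparity of the farthest object relative to
    the convergence plane, as a fraction of image width, at the full baseline.
    Assumes disparity scales linearly with baseline (parallel virtual cameras).
    """
    parallax = screen_parallax_cm(background_disparity, screen_width_cm)
    if parallax <= EYE_SEPARATION_CM:
        return MAX_BASELINE_CM
    return MAX_BASELINE_CM * EYE_SEPARATION_CM / parallax


cinema = baseline_for_screen(background_disparity=0.02, screen_width_cm=1000)
tv = baseline_for_screen(background_disparity=0.02, screen_width_cm=100)
print(cinema, tv)
```

In this made-up example the same take would be rendered with a narrower virtual base for a 10 m cinema screen than for a 1 m television, so that the background never forces the eyes to diverge.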

In general, the ability to COMPUTE perfect stereo is a real foundation for the next wave of 3D popularity; five such waves can be distinguished over the past 100 years. And judging by the progress in laser projection and plenoptics, within 10 years at most (when the main Lytro patents expire, or a little earlier in China) we will have new hardware and a new level of quality in 3D films.

So! We got perfect computed stereo from a single lens (something most people consider impossible, by the way). And not only that: the parallax can be recomputed after the fact, even when there is an out-of-focus object in the foreground. Let's go deeper!

Computed Shooting Point

Changing the shooting point also has applications in ordinary 2D video.

If you have been on a film set, or at least seen photographs of one, you probably noticed the rails the camera rides on. Often they must be laid in several directions for a single scene, which takes considerable time. Rails keep the camera movement smooth. Yes, of course there is the steadicam, but in many cases, alas, there is no alternative to rails.

Lytro used the ability to change the shooting point to demonstrate that ordinary wheels are enough. In effect, as the camera moves, we form a virtual “tube” 10 cm in diameter in space, within which we can change the shooting point. This tube lets us compensate for small vibrations. It is shown well in this video:

Moreover, sometimes the inverse problem has to be solved, that is, vibration has to be added to the movement. For example, the scene lacked dynamics and the director decided to add an explosion. Laying in the sound does not take long, but the camera should shake in sync with it, and simply shifting the flat picture will not do, because it will be noticeable; the shake has to be “honest”. With plenoptics, the shooting point can be shaken completely “honestly”, with changes in viewpoint, angle, motion blur and so on. There will be a complete illusion that the camera shook violently, although it rode along gently and safely on wheels. An amazing capability, you will agree! The next-generation steadicam will work miracles.
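Both stabilization and "honest" shake come down to the same operation: for every frame, pick a virtual viewpoint inside the tube. The sketch below works in one dimension (vertical position in centimeters) and assumes the camera's measured path is known; `virtual_offsets` is a hypothetical helper, and a real system would also have to handle rotation.

```python
TUBE_RADIUS_CM = 5.0  # half of the ~10 cm virtual "tube" mentioned in the text


def virtual_offsets(measured_path, desired_path, radius=TUBE_RADIUS_CM):
    """Per frame, return (viewpoint shift to render, leftover error), in cm.

    measured_path: where the physical camera actually was in each frame.
    desired_path: where we want the virtual camera to be (a smooth path for
    stabilization, or a deliberately jittery one for added shake).
    """
    result = []
    for measured, desired in zip(measured_path, desired_path):
        wanted = desired - measured
        applied = max(-radius, min(radius, wanted))  # stay inside the tube
        result.append((applied, wanted - applied))
    return result


bumpy = [0.0, 1.5, -2.0, 7.0]  # made-up dolly bumps
smooth = [0.0, 0.0, 0.0, 0.0]
for shift, leftover in virtual_offsets(bumpy, smooth):
    print(shift, leftover)
```

Bumps smaller than the tube radius are cancelled completely; the 7 cm bump in the made-up data can only be reduced to 2 cm, which is why the tube handles small vibrations rather than arbitrary motion.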

Of course, for this to become a reality we have to wait until the plenoptic camera shrinks to a reasonable size. But given how much money is being invested in miniaturizing sensors for smartphones and tablets and increasing their resolution, the wait does not look that long. Building a new plenoptic camera gets easier every year.

So! We computed the shooting point and, where needed, moved it, stabilizing or destabilizing the camera. Let's go further!

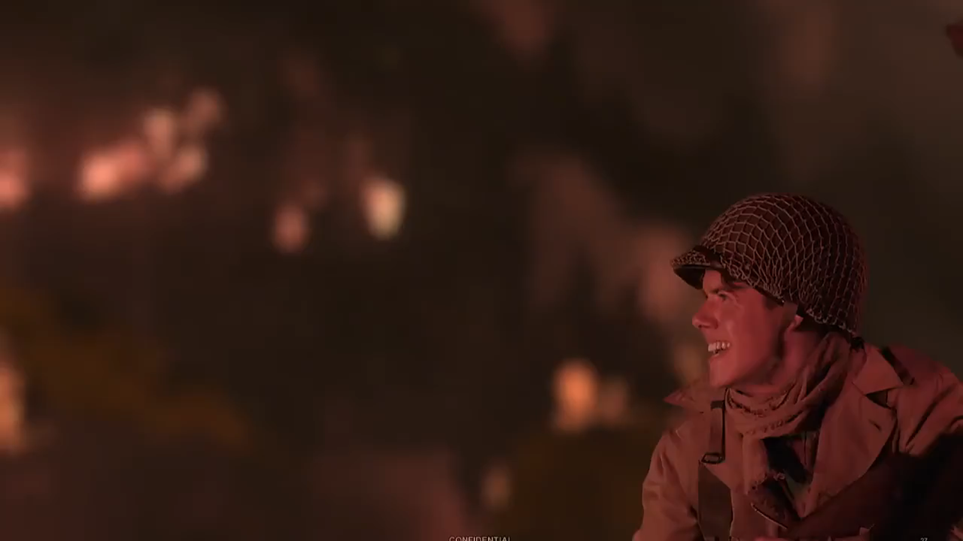

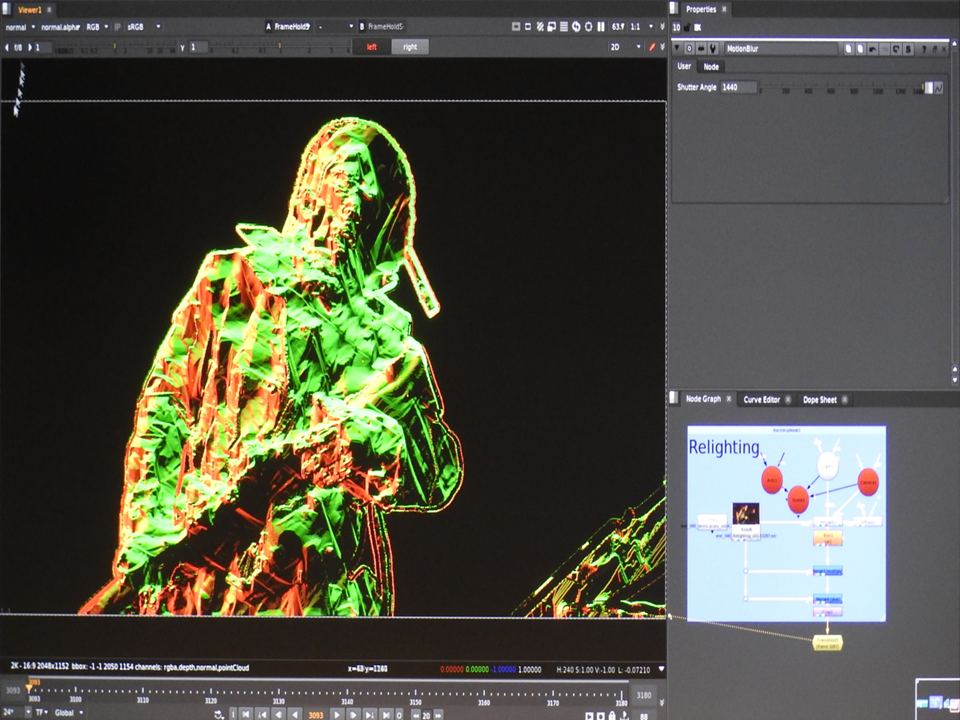

Computed Lighting

Since we started talking about the explosion nearby ...

Sometimes the lighting of the subject has to be changed, for example to add a realistic flash. Post-production studios know well how hard this problem is, even when the character's clothing is relatively simple.

Here is an example of a video with a flash overlay that takes into account the clothing, its shadows, and so on:

Source: interview with Jon Karafin

Under the hood, this is a plug-in for Nuke that works with a full three-dimensional model of the actor and applies new lighting to it, taking into account the normal map, materials, motion blur and so on:

Source: Lytro materials

So! We computed new lighting for an already captured object. Let's go further!

Computed Resolution

Those who experimented with the first Lytro often noted: yes, the toy is cool, but the resolution is completely insufficient for normal photography.

Our previous article on Habr described how small shifts of the shooting point can help to increase resolution significantly. Question: are these algorithms applicable to plenoptics? Answer: yes! Moreover, Super Resolution algorithms have an easier job on plenoptic images, since there is a depth map, all pixels are captured at the same moment, and the shifts are known fairly accurately. That is, the situation in plenoptics is simply magical compared with the conditions under which Super Resolution algorithms work on ordinary 2D video. The result is correspondingly good:

Source: Naive, smart and Super Resolution restoration of a plenoptic frame, from the Adobe Technical Report “Superresolution with Plenoptic Camera 2.0”
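Why known shifts help so much can be shown with the most naive scheme possible: if two low-resolution views are offset by exactly half a pixel, their samples simply interleave on a grid that is twice as fine. This is only an illustration of the principle in one dimension, not the algorithm from the Adobe report; `interleave_views` is a made-up name.

```python
def interleave_views(views, shifts, factor):
    """Place samples of low-resolution views onto a grid `factor` times finer.

    shifts[i] is the known offset of view i in fine-grid pixels (0..factor-1).
    Real data would also need depth-dependent shifts, deblurring and denoising.
    """
    fine = [None] * (len(views[0]) * factor)
    for view, shift in zip(views, shifts):
        for i, value in enumerate(view):
            fine[i * factor + shift] = value
    return fine


signal = [0, 1, 2, 3, 4, 5, 6, 7]  # the "true" high-resolution row
view_a = signal[0::2]              # low-resolution view, no offset
view_b = signal[1::2]              # low-resolution view, half a coarse pixel over
restored = interleave_views([view_a, view_b], shifts=[0, 1], factor=2)
print(restored)
```

In ordinary 2D video the shifts first have to be estimated, with errors; in a plenoptic frame they follow from the known geometry and the depth map, which is the advantage described above.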

The only major problem (and it really is a big one!) is the amount of data and the amount of computation required. Nevertheless, it is already entirely realistic today to load a compute farm overnight and have twice the resolution tomorrow wherever it is needed.

So, we have sorted out computed resolution! Let's go further!

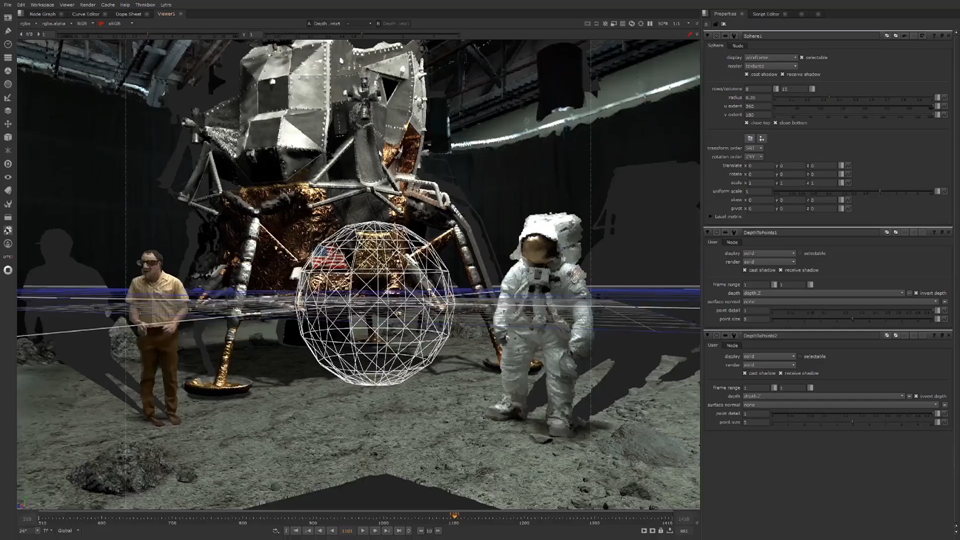

Computed Environment

A separate task that arises in post-production, especially on a science-fiction or fantasy project, is compositing the captured objects neatly into a three-dimensional scene. Fundamentally, there is no big problem. Three-dimensional scanning of the shooting location is the norm today, and all that remains is to align the captured model carefully with the three-dimensional scene, so as to avoid the classic blunder where the actors' feet occasionally sink slightly into the uneven painted floor (the slip is barely visible on a small screen but can be seen clearly in a cinema, especially in IMAX).

Not without humor, a scene of filming the Moon landing was used to demonstrate the camera (“three-dimensional shadows” are clearly visible in places the camera cannot see):

Source: Moon | Lytro | VR Playhouse | Lightfield post-production

Since a plenoptic camera shoots multi-view video by definition, building a depth map is not a big problem. And if the camera is combined with a lidar, we capture a three-dimensional scene straight away, which greatly simplifies combining live footage with graphics at the next stage.

So, we have computed the surrounding three-dimensional scene (combining lidar and plenoptic data). Now, as you guessed, filming Moon landings will go even better! Onward.

Computed Chroma Key

But that is not all!

The standard technology on a modern film set is the green screen: wherever a computer-generated background is to be inserted, green plywood panels are put up or green banners are stretched. This is done to avoid problems at translucent edges when the background is replaced. And since in 4K most edges of moving objects become translucent, green (or blue, depending on the color of the actors' clothes) screens are in very high demand.

This is extremely unusual for filmmakers, but since we can change the sharpness of objects ourselves, we can process frames with different depths of field and, as a result, compute the translucency map of the edges and the exact color of the captured object. This makes it possible to shoot without green screens, even for fairly complex images, and then recover the transparency map:

Source: interview with Jon Karafin

Lytro specially shot a video in which people carry ladders around in the background (because in real shooting something unwanted always ends up in the frame on the best takes - this is an unshakable law of filming!) while blurry confetti falls in the foreground (which immediately turns removing the men and the ladders into a serious challenge). Yet these distant objects are cut out of the frame relatively easily, and we are left with a clean foreground, including a map of the translucent edges (the background was chosen so that the quality of the confetti matte is easy to judge):

A competent reader will ask: what about motion, or hair? And they will be absolutely right. Those difficulties, of course, still remain (and there are plenty of them). The point is that, knowing the background exactly and being able to shift the object slightly against it, we get completely new opportunities to build a better transparency map automatically.

In fact, thanks to the last three points, one can predict the future success of plenoptic cameras on series such as “Game of Thrones”, where a large number of special effects can be put on a production line, which means a significant reduction in their cost. It is not that simple, but the ability to compute transparency maps through refocusing and the ability to shift the shooting point are an order of magnitude beyond what conventional 2D cameras offer. And the tools will catch up.

So, we have sorted out the computed transparency map without green screens. On we go!

Computed Exposure

A separate important topic is computed exposure, or the computed shutter. It is not directly related to plenoptics, but now that screen diagonal, contrast and resolution are all growing rapidly, it is becoming more and more relevant. The problem is the strobing effect that is typical of fast-moving objects in the frame with “normal” shooting.

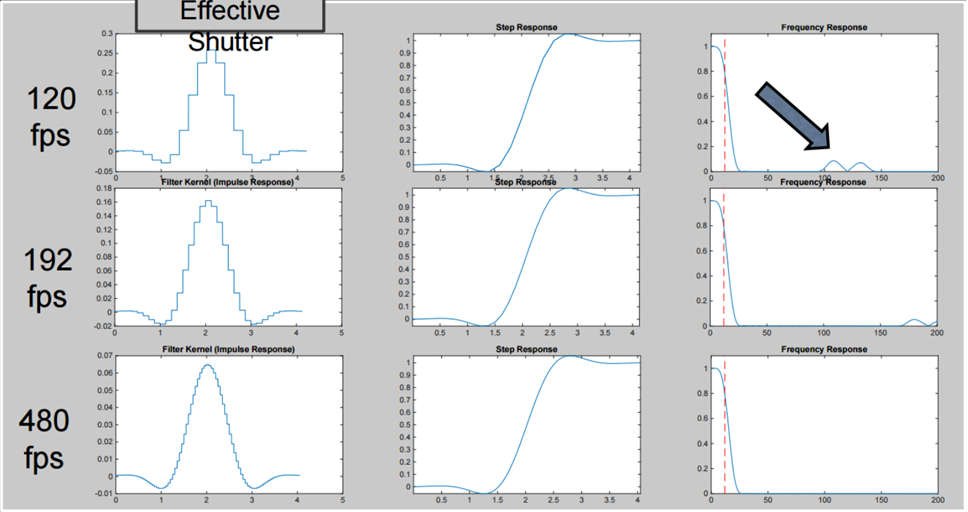

To reduce this effect drastically, making motion “soft” and computing an “ideal” shutter, you can shoot video at a higher frame rate and then convert it to a lower one using various conversion functions that involve not only summing frames but also subtracting them (Lytro Cinema ran at 300 fps, to make it clear how to read these graphs):

This makes it possible to “shoot” video with a shutter that is physically extremely difficult, if not impossible, to build, but which makes moving objects in the scene extremely “soft” and “pleasant”, with no strobing from branches, wheels and other fast-moving objects (pay special attention to the wheel rims, and to how much more visible the car has become behind obstacles):

Source: https://vimeo.com/114743605
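A computed shutter can be sketched as a weighted sum over each group of high-frame-rate frames, where the weight profile plays the role of the shutter function. The example assumes 300 fps input converted to 25 fps (12 input frames per output frame, chosen so the ratio is an integer) and made-up weight profiles; `apply_shutter` is an illustrative name, and the weights may also be negative, as mentioned above.

```python
def apply_shutter(frames, weights):
    """Combine consecutive high-frame-rate frames into lower-rate output frames.

    frames: list of frames, each a list of pixel values.
    weights: one weight per input frame of a group; their sum must be nonzero.
    """
    group = len(weights)
    norm = sum(weights)
    output = []
    for start in range(0, len(frames) - group + 1, group):
        chunk = frames[start:start + group]
        output.append([
            sum(w * frame[i] for w, frame in zip(weights, chunk)) / norm
            for i in range(len(chunk[0]))
        ])
    return output


# Made-up test clip: a bright point moving one pixel per input frame.
clip = [[1.0 if x == t else 0.0 for x in range(12)] for t in range(12)]

box = apply_shutter(clip, [1] * 12)                                # plain frame averaging
soft = apply_shutter(clip, [1, 2, 3, 4, 5, 6, 6, 5, 4, 3, 2, 1])   # tapered "soft" shutter
print([round(v, 3) for v in box[0]])
print([round(v, 3) for v in soft[0]])
```

The box profile gives a uniform smear with hard ends, while the tapered profile fades the trail in and out, which is the kind of "softness" that a mechanical shutter cannot easily provide.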

Or here are two more shots in which the camera movement will be much more pleasant to the eye, especially on a big screen:

Source: https://vimeo.com/114743605

Phew, we have computed the exposure. Let's move on!

Plenoptic cameras in smartphones today

Usually, when the conversation turns to plenoptics today, it goes in the style of “Boss, all is lost: the cast is coming off, the client is leaving!”: Google bought Lytro on the cheap last year, Pelican Imaging's experiments never reached mass production, and on the whole it all remained a beautiful theory...

Source: Pelican Imaging: Smartphone Plenoptic Camera Modules for 20 Dollars

So there you go! Rumors about the death of plenoptics are greatly exaggerated. Very much!

On the contrary, right now plenoptic sensors are being produced and used on a scale never before seen in history.

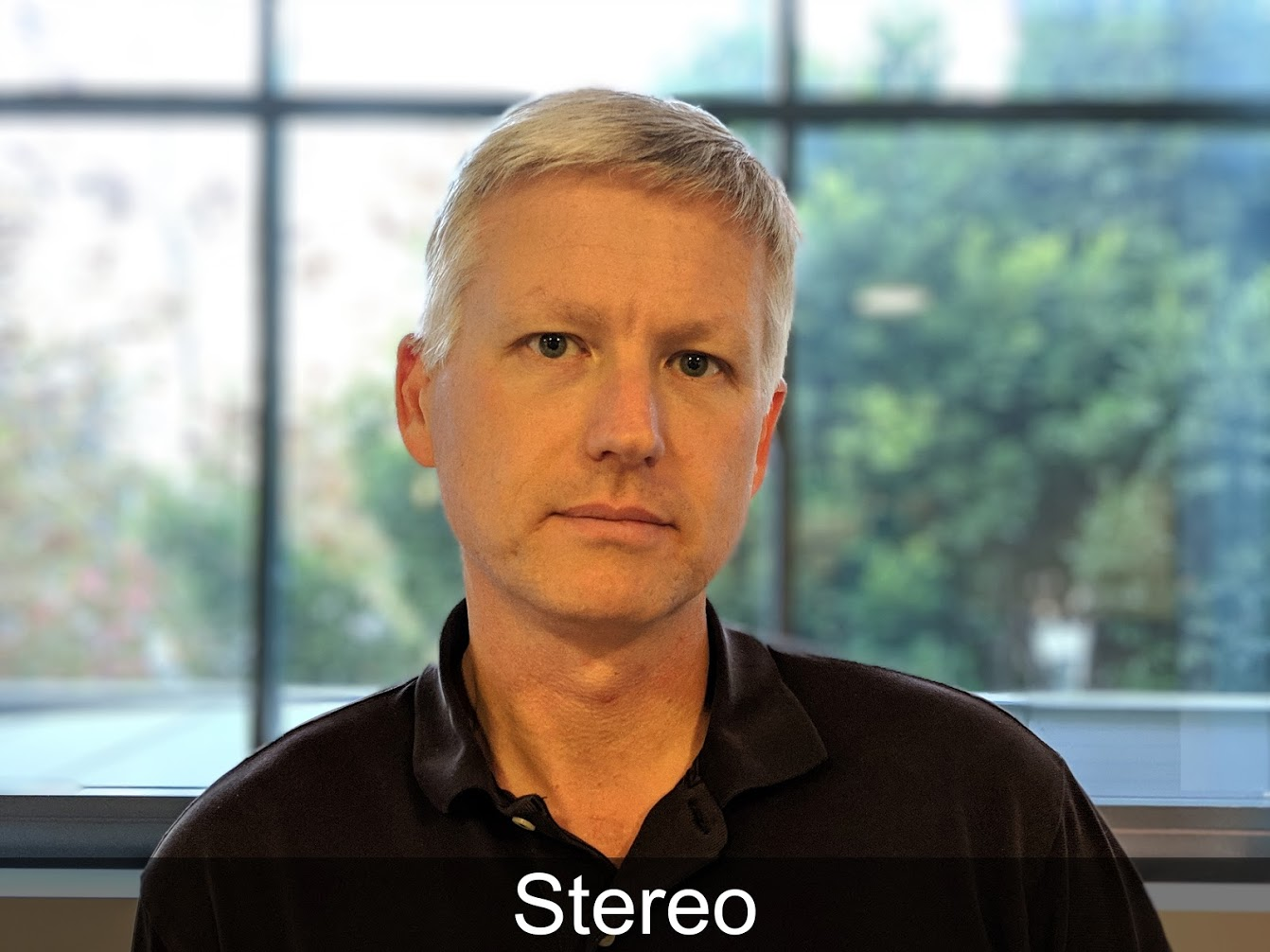

The well-known company Google, without much fanfare, released the Google Pixel 2 and Google Pixel 3 with plenoptic sensors. If you look at the phone, it is plain that it has a single camera:

However, the phone produces a very good background blur, generally no worse than its “two-eyed”, “three-eyed” and “four-eyed” colleagues (you will agree that the following photo especially benefits from this effect):

Source: Google AI Blog

How do they do it?! Is it that source of magical miracles that has become inescapable lately, the far-from-childish magic of neural networks?

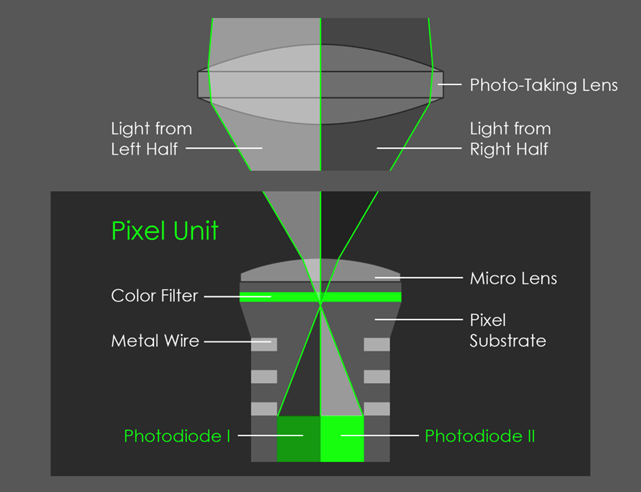

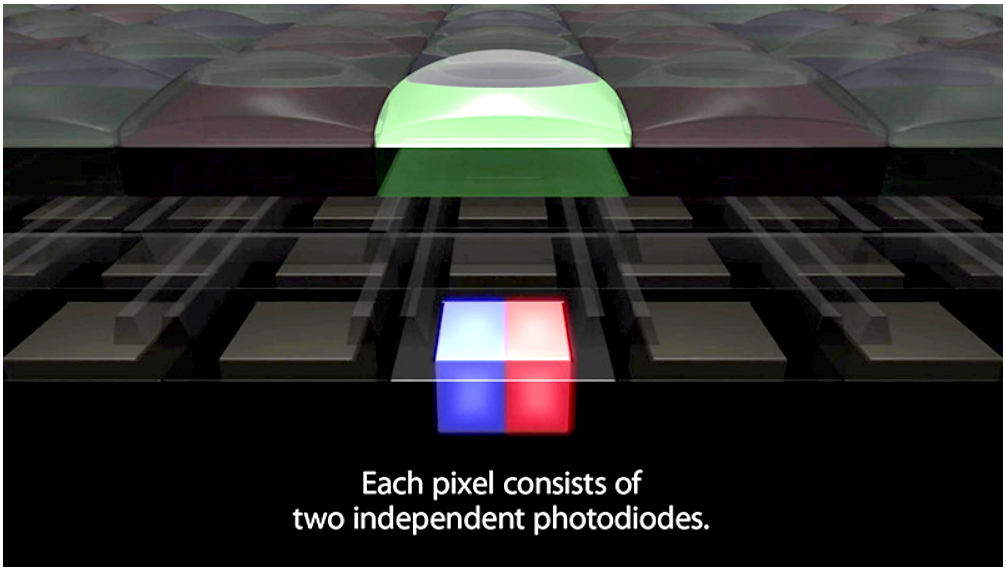

Neural networks, especially in the Pixel 3, are indeed actively used for this task, but more on that later. The secret of the effect's quality is that the smartphone's sensor is plenoptic, although each microlens covers only two pixels, as shown in the picture:

Source: Google AI Blog

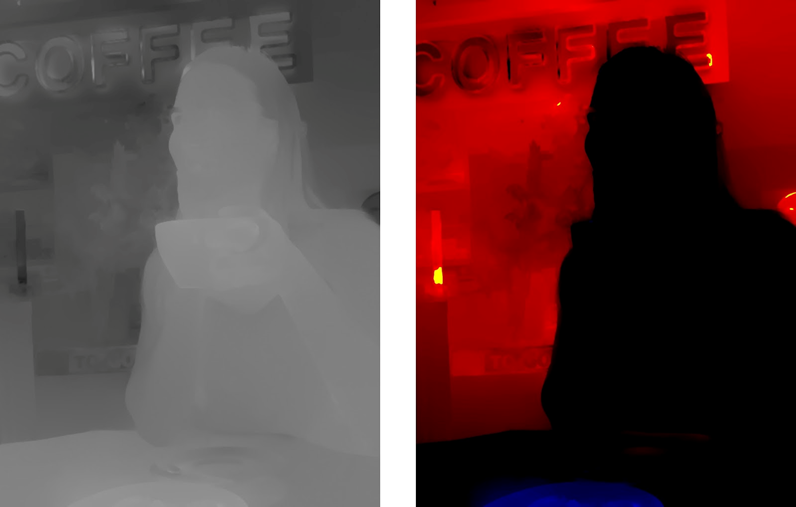

As a result, we get a micro-stereo picture. Here we see a very slight shift (the right picture is animated and moves up and down, because that is how the phone was held when shooting):

But even this extremely small shift is enough to build a depth map for the photo (the use of sub-pixel shifts was covered in the previous article):

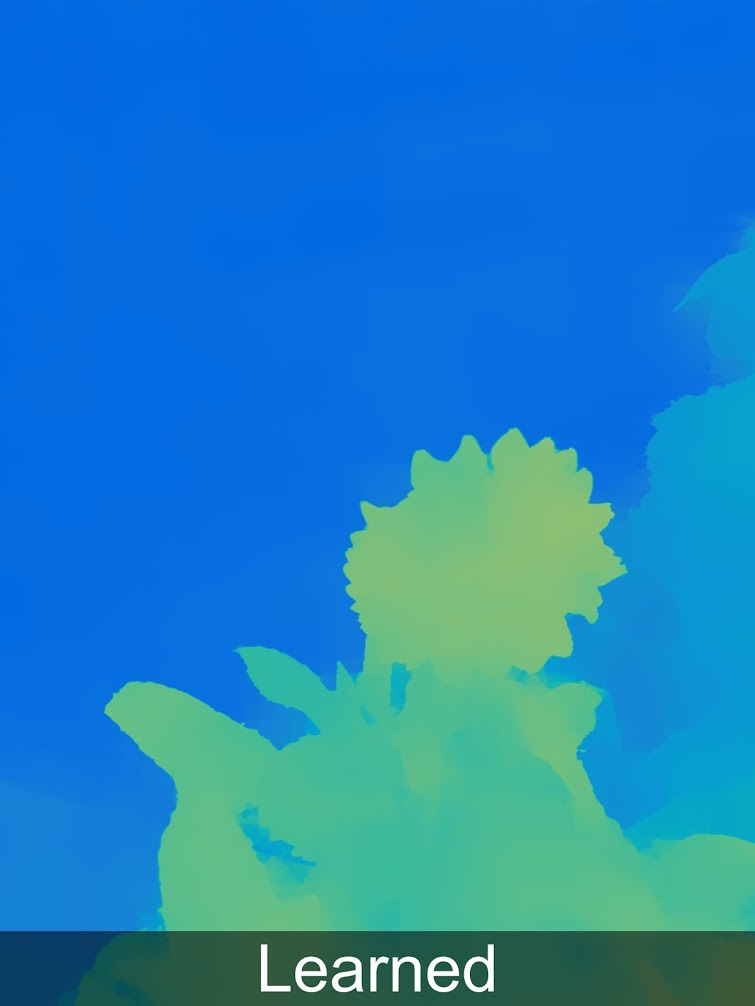

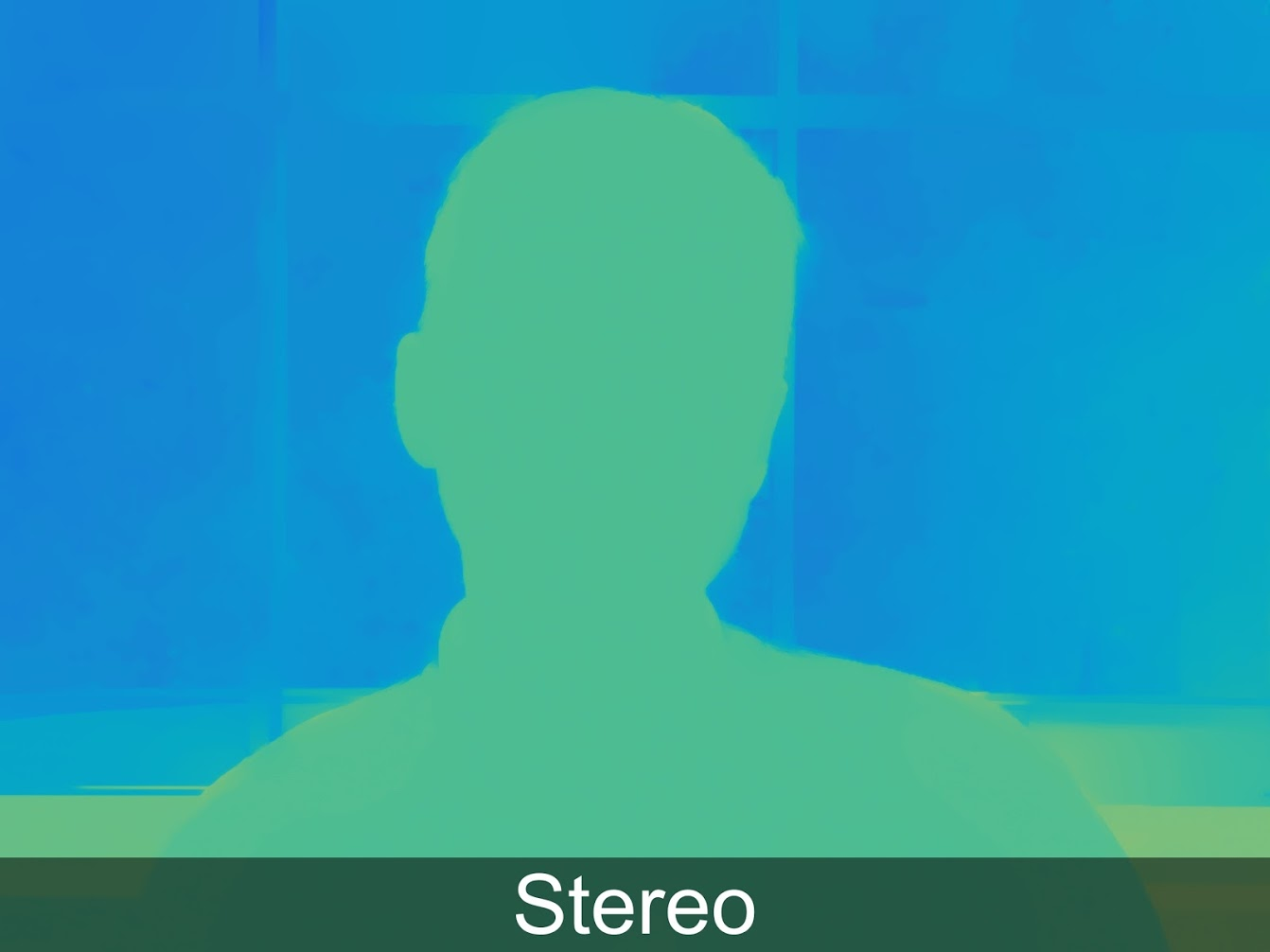

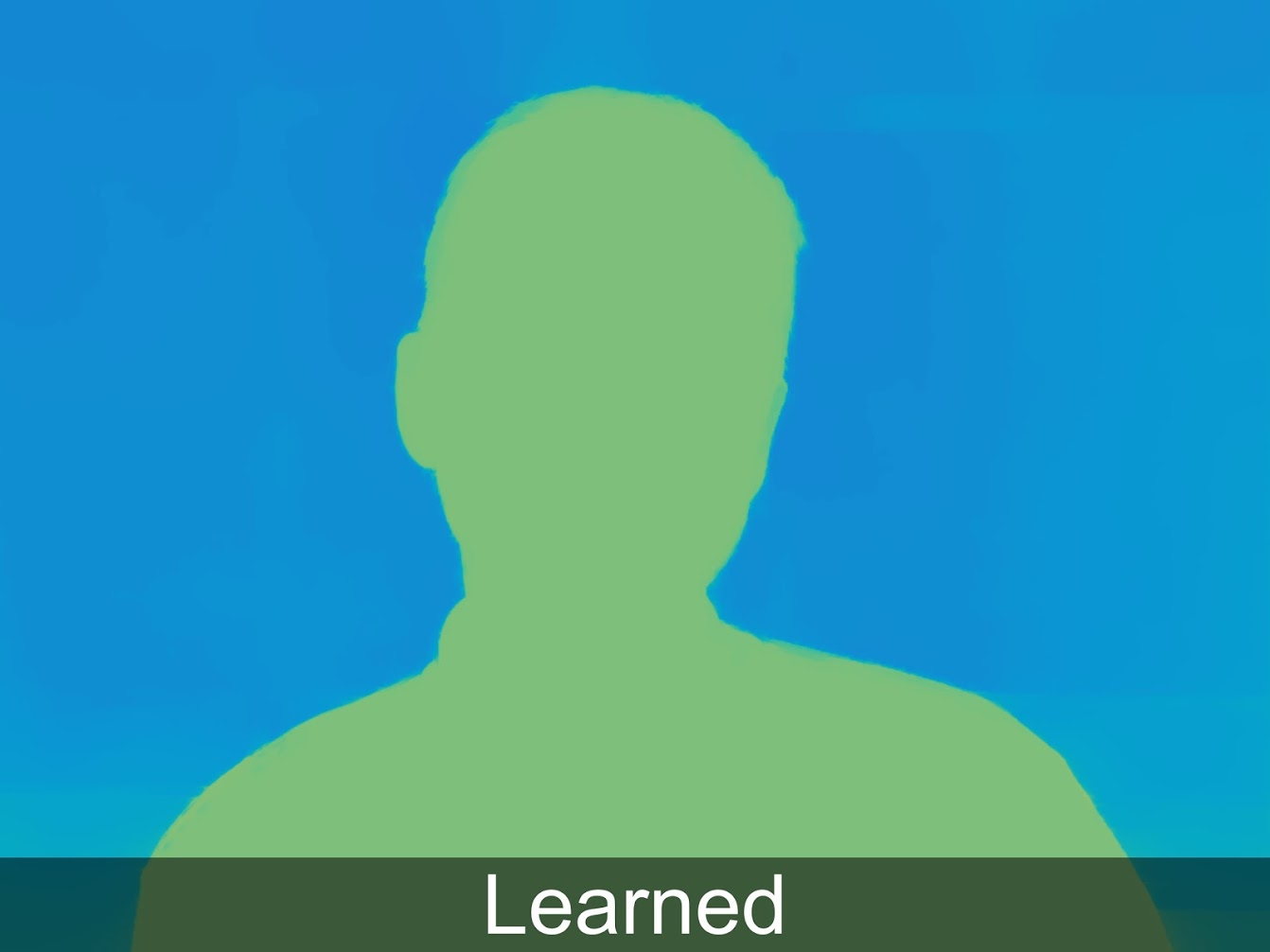

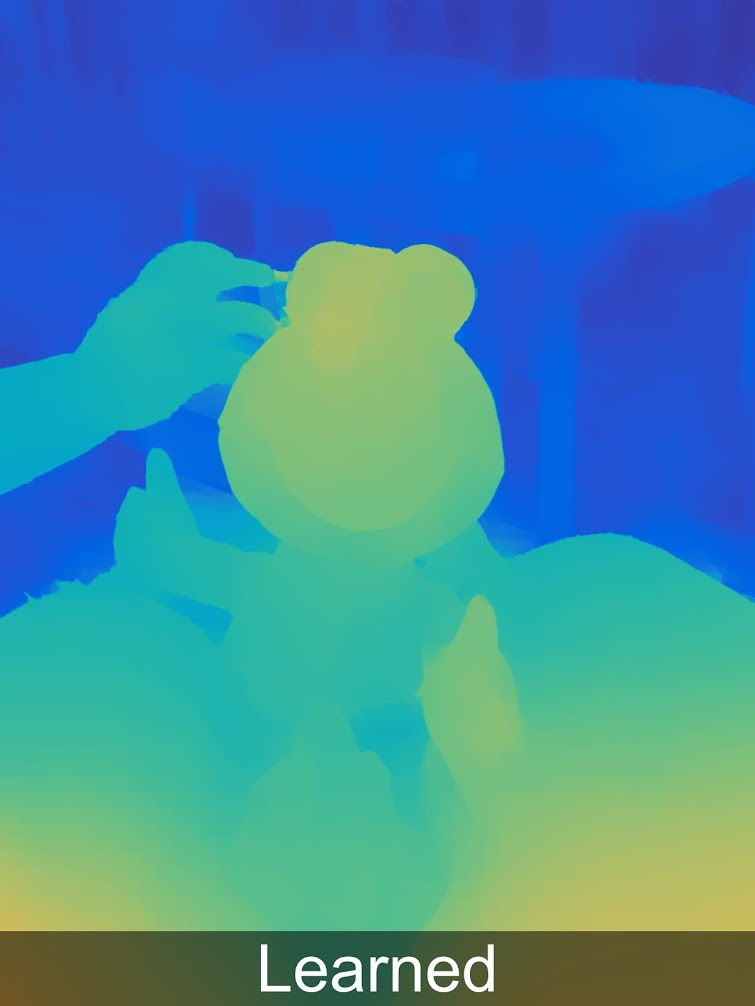
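How a tiny shift between the two half-pixel images turns into a measurement can be sketched with plain block matching: try several shifts, keep the one where the images agree best, and refine it to sub-pixel precision with a parabola fit. This is a one-dimensional toy under stated assumptions (noise-free rows, a made-up signal, the illustrative name `estimate_shift`), not Google's pipeline, which the source describes only in general terms.

```python
def estimate_shift(left, right, max_shift):
    """Estimate how far `right` is displaced relative to `left`, in pixels.

    Compares a fixed interior window at every integer shift using the sum of
    absolute differences, then refines the best shift with a parabola fit.
    """
    window = range(max_shift, len(left) - max_shift)

    def cost(shift):
        return sum(abs(left[i] - right[i + shift]) for i in window)

    shifts = list(range(-max_shift, max_shift + 1))
    costs = [cost(s) for s in shifts]
    best = min(range(len(shifts)), key=costs.__getitem__)
    if 0 < best < len(shifts) - 1:
        c0, c1, c2 = costs[best - 1], costs[best], costs[best + 1]
        denom = c0 - 2 * c1 + c2
        if denom > 0:
            return shifts[best] + 0.5 * (c0 - c2) / denom
    return float(shifts[best])


left_row = [0, 0, 1, 4, 9, 4, 1, 0, 0, 0]
right_row = [0, 0, 0, 1, 4, 9, 4, 1, 0, 0]  # the same row displaced by one pixel
shift = estimate_shift(left_row, right_row, max_shift=2)
print(round(shift, 2))
```

Repeating this for every small patch of the image gives a map of shifts, and larger shifts correspond to points farther from the focal plane, which is the raw material for a depth map.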

With machine learning the results can be improved significantly, and this is already implemented in the Pixel 3. In the example below, Learned means Stereo + Learned; hopefully there will be a separate post about this:

For those who like to look at depth in full resolution, there are more examples in a special gallery.

It is clear that not everything is perfect: we see the artifacts typical of building a depth map from stereo (which, by the way, the Pixel's two-eyed colleagues have as well), and the small parallax takes its toll. But it is already clear that the depth quality is quite sufficient to segment the image confidently by depth and then apply various effects, add objects to the scene more reliably, and so on. The results of MEASURED depth are an order of magnitude better than results based on various assumptions (for example, those of neural networks).

So congratulations to everyone who has read this far! You are living at the time when plenoptic cameras have shipped in a successful mass-market product, even if you did not know it!

The story does not end there, of course:

- First, it is interesting that plenoptics came “almost for free”: in modern smartphone cameras, as sensors shrink and resolution grows, there is a catastrophic shortage of light, so every pixel is covered with a microlens anyway. That is, such a sensor costs no more (this is very important!), although we do sacrifice some of the resolution that we have just gained through another technology. With the two technologies combined, the result gets better in 2D (less noise, higher resolution, HDR) and is also complemented by measured depth, that is, it becomes 3D. The price is a dramatic increase in the amount of computation per frame. But for photos this is already feasible today and already works in real smartphones.

- Second, a Google employee said at a conference that they were considering covering 4 pixels with one lens. The depth map would then be dramatically better, since there would be 2 stereo pairs with a 1.4 times larger stereo base (the two diagonals), which would remove many stereo artifacts at edges, among other things. Competitors can reach this quality only by placing at least 3 cameras in a row. Such a gain in quality matters for AR.

- Third, Google is no longer alone. Here is an example of a description of a similar technology in the Vivo V11 Pro; you will agree that you have just seen a similar picture:

Source: What is Dual Pixel technology?

- Finally, the number of publications on interpolating light-field data has grown like an avalanche in recent years. And this is wonderful, because drastically reducing the computational complexity will take some serious mathematics.
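The factor of 1.4 in the second point is simply the diagonal of a square. As a rough check, assume square pixels of pitch $p$ under one microlens and take the stereo base to be proportional to the distance between pixel centers (both are simplifying assumptions, not figures from the talk):

$$
b_{\text{pair}} = p, \qquad
b_{\text{diag}} = \sqrt{p^2 + p^2} = \sqrt{2}\,p \approx 1.41\,p, \qquad
\frac{b_{\text{diag}}}{b_{\text{pair}}} \approx 1.4 .
$$

Since the shift between two views grows in proportion to the stereo base, the same scene depth would produce a shift about 1.4 times larger on each diagonal, and the two diagonals are perpendicular to each other, so edges of any orientation produce a usable shift on at least one of them.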

Plenoptics is also used in the autofocus of professional cameras, for example Canon's (search for DPAF, Dual Pixel Auto Focus). Who would have thought that a theoretical curiosity of 30 years ago, the ability to shoot stereo with one lens, would become the first mass application of plenoptics...

In short, the technology has made it into products!

It is already flying! You see, it F-L-I-E-S!

To summarize

Plenoptics in cinema

Above, we looked at two uses of plenoptics: in film production and in smartphones. The list is not complete. For example, plenoptics is very relevant in microscopy, where you can compute stereo micrographs with a large “honest” depth of field, and in industrial cameras, especially if you need to photograph translucent multi-layer objects, and so on. But more on that some other time.

To recap, the following are relevant to the film industry:

- Computed Focus Distance

- Computed Bokeh

- Computed Resolution

- Computed Perfect Stereo

- Computed Shooting Point

- Computed Lighting

- Computed Environment

- Computed Green Screen

- Computed Shutter

In the coming years, as sensors get smaller and their resolution grows, the technology may mature into a practical, fundamentally new movie camera that lets you shoot footage for special effects faster (without a green screen) and in fewer takes (more things can be fixed afterwards). The new capabilities are so interesting that, once a plenoptic sensor with cinema-grade resolution can be made compact, such cameras are bound to succeed.

When can such a camera appear?

A cautious answer: within the next 10 years.

What does the timing depend on?

On many factors. A good question is what will happen with licensing of the Lytro patents that Google now owns. This may be critical. Fortunately, the key ones will expire in 10 years (here we politely refrain from mentioning Lytro's Chinese colleagues, who may speed up the process). Working with the colossal volumes of data a plenoptic camera generates also has to become simpler, and with modern clouds it is getting easier all the time. And some good news: thanks to Lytro, support for the plenoptic data format was at one point added to a very popular compositing program used in a huge number of stereo-processing studios. As they say, Lytro died, but the ability to write Nuke plug-ins with plenoptic video support stays with us. This makes it easier to enter this market with a professional product, since it is important that studios can work with the new cameras' format right away, in the same programs and without retraining staff.

Plenoptics in smartphones

When it comes to smartphones, everything looks even better. What matters most for this industry is the plenoptic camera's ability to measure depth with a single sensor (and potentially fast), and this capability will soon be in huge demand.

When can such a camera appear?

It already exists. And tomorrow other manufacturers will replicate the technology. The key driver of the next stage will be augmented reality on smartphones and tablets, which today lacks accuracy and the ability to “see” a three-dimensional scene.

What does the timing depend on?

The ability to measure distance with the main sensor in real time is very likely to appear soon in the Google Pixel, since Google has been working in this direction for a long time (see Project Tango, which has been closed but whose cause lives on). ARCore looks very promising, as does the competing ARKit. And the breakthrough, as you can now guess, is not far off: the cost of a sensor pixel falls exponentially (the average rate is said to be 10 times in 10 years), and we need a 2-fold drop. Do the math yourself. Not tomorrow, but not long either.
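For those who would rather not do the math themselves: if the cost falls exponentially by a factor of 10 every 10 years, the time needed for any other factor follows from a logarithm. The helper name `years_until` is illustrative, and the steady exponential rate is the assumption stated in the text.

```python
import math


def years_until(drop_factor, tenfold_years=10.0):
    """Years for the cost to fall by `drop_factor` if it falls 10x every `tenfold_years`."""
    return tenfold_years * math.log10(drop_factor)


print(round(years_until(2), 1))  # 3.0
```

So a 2-fold drop takes roughly three years at that rate.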

Instead of a conclusion

Remember the VGIK lecture mentioned at the very beginning? I must say I did not expect the reaction it ended up getting. In one word, it was mourning. In two words, universal mourning. At first I did not understand what was happening. A cameraman who came up after the lecture explained the situation to me very well. The point was not even that the cameraman's art was in decline, although there was a great example: a film fragment of about 6 seconds in which a person walks up to an apartment door and knocks, another person opens the door, greets them and moves slightly forward, while the camera focuses first on the corridor, then on the doorframe, then instantly switches focus to the person who opened the door, and then into the room. The cameraman has to control the camera perfectly to work masterfully with zoom at a cinematic shallow depth of field, while keeping in mind the backlight, the composition of the frame, camera shake when shooting handheld, and a thousand other important details. So. The point is not even that this is becoming easier. That is actually good: fewer takes will be ruined because the cameraman was late or missed somewhere. He told me how he had recently shot a series in 4K for a TV channel. The resolution headroom turned out to be large, so the director cropped the frames in post-production, and in places mere fragments of a frame were used as cutaways. As a result, the composition was simply awful, and this cameraman wanted to remove his name from the credits.

The camera capabilities described above mean that, for filmmakers, many effects move from the shooting stage to post-production. And there will be great sadness if those who process the footage are illiterate in matters of composition, focus control and so on. If they are literate, these are fantastic new opportunities.

So, we wish all of us more competence, which is not always easy in this rapidly changing world!


Acknowledgments

I would like to heartily thank:

- the Computer Graphics Laboratory of the CMC faculty at Lomonosov Moscow State University for its contribution to the development of computer graphics in Russia and beyond,

- our colleagues from the video group, thanks to whom you are seeing this article,

- Konstantin Kozhemyakov personally, who did a lot to make this article better and more visual,

- Jon Karafin, in his time as Head of Light Field Video at Lytro, thanks to whom we almost started working on improving their product (and did not, for reasons beyond both our control and theirs),

- Lytro for their contribution to popularizing light fields and their capabilities, Google, which picked up the falling banner, and the other companies making products based on this interesting technology,

- and finally, many thanks to Sergey Lavrushkin, Roman Kazantsev, Ivan Molodetsky, Evgeny Kuptsov, Yegor Sklyarov, Evgeny Lyapustin and Denis Kondranin for a large number of sensible comments and corrections that made this text much better!