Inside Quake: Determining Visible Surfaces

- Transfer

Michael Abrash, a veteran of programming three-dimensional graphics, on the example of developing the first Quake, talks about the need for creative thinking in programming.

Many years ago, I worked for the now-defunct video video card manufacturer Video Seven. There I helped develop the VGA clone. My colleague Tom Wilson, who worked around the clock for a long time to develop the Video Seven VGA chip, tried to make VGA as fast as possible and was confident that its performance was optimized to the maximum. However, when Tom was already putting the final touches to the chip design, we heard rumors that our competitor Paradise had achieved even greater performance in its developed clone, adding to it a FIFO.

This ended the rumors - we did not know what the FIFO was, or how much it helped, nothing else. However, Tom, usually a friendly and relaxed person, turned into an active, obsessive fanatic with too much blood caffeine. Based on these little bits of information, he tried to figure out what Paradise managed to do. In the end, he came to the conclusion that Paradise probably inserted a FIFO-write buffer between the system bus and VGA, so that when the CPU was writing to the video memory, the recorded data would immediately fall into the FIFO, and this would allow the CPU to continue processing, rather than idle each time when he was writing to the display memory.

Tom had neither logical elements nor enough time to implement a full FIFO, but he managed to implement a FIFO with a depth of one operation, which allowed the processor to overtake the VGA chip by one write operation. Tom was not sure that it would give good results, but it was the only thing he could do, so he implemented this system and transferred the chip to production.

It turned out that the FIFO for one operation works incredibly well: at that time, Video Seven VGA chips were the fastest on the market; they became evidence of Tom’s genius and evidence of what the creator is capable of under the pressure of circumstances. However, the most remarkable thing about this story is that Paradise’s FIFO design had nothing to do with Tom’s design and didn’t work at all . Paradise inserted a FIFO read buffer between the display memory and the video output stage of the VGA chip, which made it possible to read the video output in advance so that when the CPU needed to access the display memory, pixels could be taken from the FIFO, and the CPU command was executed instantly. This really improved performance, but not as much as Tom's FIFO buffer.

And this case can be considered a good parable about the very nature of the creative process that a person can achieve. Scraps of news about Paradise's chip contained almost no factual information, but they forced Tom to go beyond the boundaries that he subconsciously set while developing the original chip design. I believe that this is the most important element of any ingenious design, be it from the field of equipment, software or any other creative sphere, and it was him who instilled in Tome news about Paradise: the ability to detect the boundaries that you built while working on a project and overcome these boundaries.

The problem, of course, is how to overcome the boundaries, the existence of which you do not even know. There is no formula for success, but two principles can serve as a good service: firstly, simplify, secondly, constantly try something new.

Usually, when you feel that the code becomes complicated, you begin to make minor changes to the frozen structure, and there is a great likelihood that you can improve performance and reduce the amount of code by inventing a new design. A really good structure should bring a moment of deep satisfaction, when everything falls into place, and you are surprised at how small the amount of code is needed and how well all boundary cases work.

In the process of revising the structure, it is important to study any ideas that come to mind, no matter how insane they may seem. Many of the truly magnificent ideas that I heard at first seemed nonsense because they did not fit into my current picture of the world. Often, such ideas are really insane, but just as the news about the Paradise chip stimulated Tom’s imagination, an aggressive exploration of seemingly delusional ideas can open up new possibilities for you.

Good illustration: the evolution of the three-dimensional graphics engine Quake.

The most difficult task in the world of three-dimensional graphics

I spent the last seven months working on Quake, DOOM's heir from id Software, and I assume that after three more months, by the time you read this article, Quake will already be released in the form of shareware.

In terms of graphics, Quake will be for DOOM what DOOM was for its predecessor, Wolfenstein 3D. Quake adds real arbitrary 3D (the player can look up and down, lean to the sides and even fall to the side), detailed lighting and shadows, 3D monsters and characters instead of DOOM sprites. Soon I will talk about all of this in more detail, but this month I want to talk about the task that I call the most difficult in my book in three-dimensional graphics: determining visible surfaces (drawing the corresponding surface for each pixel), and about a very close task to it - clipping (as fast as possible to drop invisible polygons as a way to accelerate the determination of visible surfaces).

Why do I think VSD is the most difficult task of 3D graphics? Although the tasks of rasterization, for example, the imposition of textures, are just as overwhelming and important, they are quite limited in scope, and after the appearance of 3D accelerators, their solution will be transferred to “iron”; besides, their scale depends only on the screen resolution, that is, they are rather modest.

In contrast to them, VSD is an unlimited task and dozens of approaches are being used to solve it. But more importantly, the performance of VSD in a naive solution scales depending on the complexity of the scene, which usually increases as a quadratic or cubic function, so it quickly becomes a limiting factor in creating realistic worlds. I believe that in the next few years, VSD will become an increasingly dominant problem in real-time 3D graphics for a PC, because the detailing of 3D worlds will constantly increase. Even today, the Quake level of solid dimensions can contain about 10,000 polygons, that is, almost three times more than the DOOM level of comparable size.

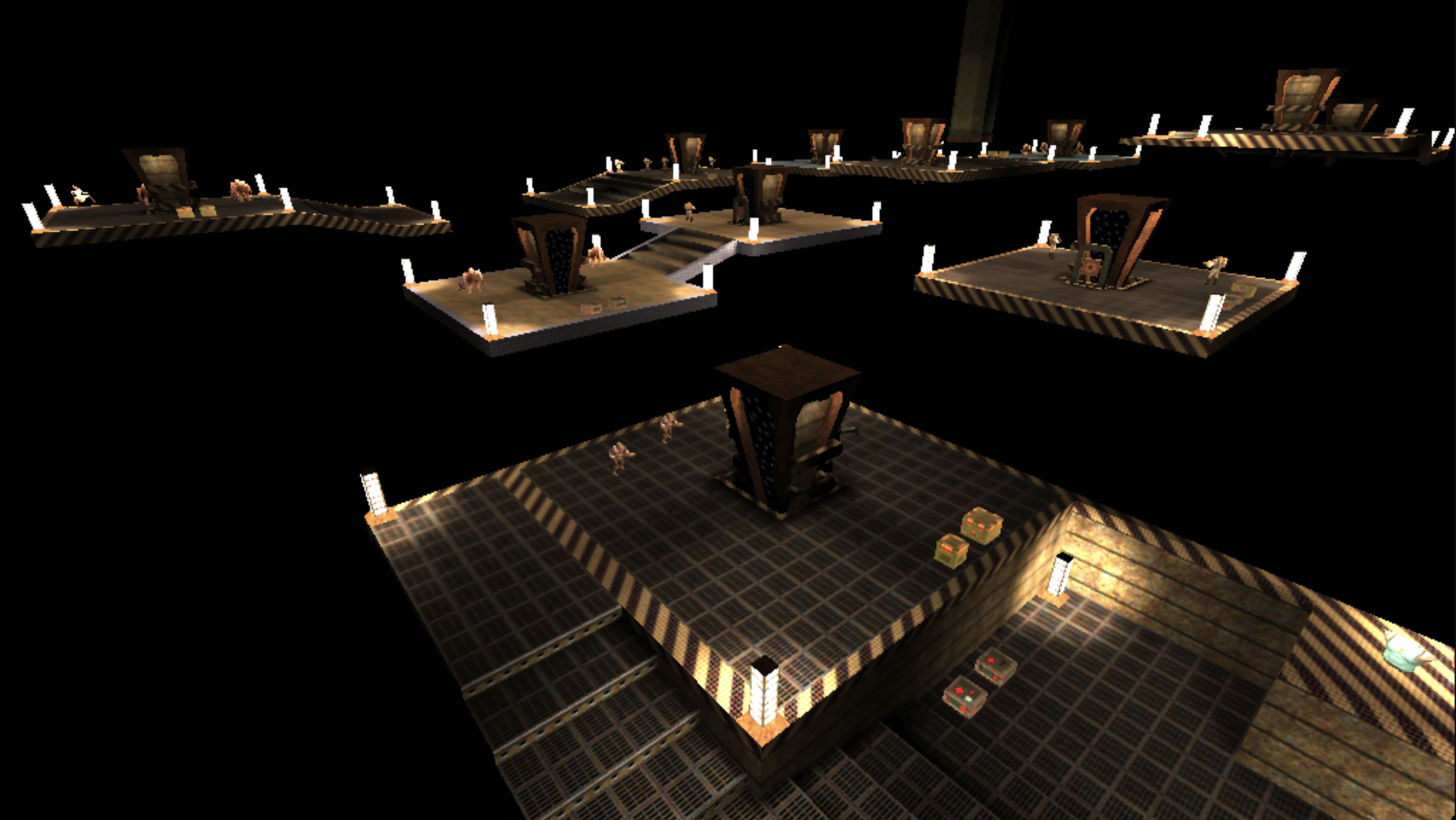

Quake tier structure

Before delving into the VSD, let me mention that each level of Quake is stored as one huge three-dimensional BSP-tree. This BSP-tree, like any other BSP, divides the space, in this case, into the planes of the polygons. However, unlike the BSP-tree, which I demonstrated earlier, the BSP-tree in Quake does not store polygons in tree nodes, as part of the separation planes, but in empty (unfilled) leaves, as shown in Figure 1 in a plan view.

Figure 1. In Quake, polygons are stored in empty leaves. Dark areas are filled leaves (filled volumes, for example, the insides of walls). You

can get the correct drawing order by drawing leaves in the BSP order “front to back” or “back to front”. In addition, since BSP-leaves are always convex, and polygons are the boundaries of BSP-leaves, looking inwards, polygons of any sheet can never overlap each other and can be drawn in any order. (This is a common property of convex polyhedra.)

Clipping and detection of visible surfaces

Ideally, the VSD process should work as follows: first, you need to discard all polygons that are outside the pyramid of visibility, and also cut off all the invisible parts of polygons that are partially visible. Then you need to draw only those pixels of each polygon that are actually visible from the current viewpoint, as shown in Figure 2 in the top view, without wasting time on repeated redrawing of pixels; Notice how small the set of polygons in Figure 2 are to draw. And finally, in the ideal world, visibility of parts of polygons should be inexpensive, and the processing time should be the same for all possible points of view, so the game runs smoothly.

Figure 2. Ideal VSD architecture renders only visible parts of visible polygons.

In the process of performing this task, it is easy to determine which of the polygons are completely outside the limits of the pyramid of visibility or are partially clipped, and it is quite possible to find out exactly which pixels need to be drawn. Alas, the world is far from ideal, and all these checks are costly, so the trick is to speed up or skip different checks, while still creating the necessary result.

As I explained in detail in previous articles, having a BSP, you can easily and cost-effectively bypass the world in the order of "front to back" or "back to front." The simplest VSD solution is to simply walk the tree backwards, truncate each polygon with the pyramid of visibility and draw it if its face is directed into the camera and is not completely cut off (artist's algorithm). But is this an adequate solution?

For relatively simple worlds, it is quite applicable. However, it does not scale well. One of the problems is that when new polygons are added to the world, for cutting off invisible polygons, more and more transformations and checks are required; after a certain threshold, this will start to significantly slow down performance.

Fortunately, this particular problem has a good workaround. As mentioned above, each leaf in a BSP tree describes a convex subspace, and nodes connected to the leaf limit the space. It is less obvious that each node in a BSP tree also describes a subspace — a subspace consisting of all the child elements of the node, as shown in Figure 3. You can think of it this way: each node splits into two parts the subspace created by the nodes located higher in tree, and the child elements of the node further cut this subspace on all the leaves emanating from the node.

Figure 3: Node E describes a shaded subspace that contains leaves 5, 6, 7 and node F.

Since the node subspace is bounded and convex, it is possible to check whether it is completely outside of the visibility pyramid. If this is the case, then all the children of the node will definitely be completely clipped and can be discarded without additional processing. Since the main part of the world is usually located outside the pyramid of visibility, many polygons of the world can be cut off almost without spending huge fragments of subspaces of nodes. Performing an ideal cut check of subspaces is quite expensive, so usually for each node there are bounding parallelograms or spheres used in the cut checks.

That is, clipping on the pyramid of visibility is not a problem, and for drawing back to front, you can use BSP. So what's the problem?

Redrawing

The problem that the main author of DOOM and Quake technologies, John Carmack, encountered while developing Quake, was that in a complex world, many scenes in the pyramid of visibility have a huge amount of polygons. Most of these polygons are partially or completely overlapped by other polygons, but the artist’s algorithm described above requires that each pixel of each polygon be drawn in the visibility pyramid. However, they are often drawn only to be redrawn by others. At a Quake level of 10,000 polygons, it’s easy to get the worst case of a redraw when pixels are drawn 10 or more times; that is, in some frames, each pixel will be drawn on average 10 or more times. No rasterizer is fast enough to compensate for the order of values needed to render the scene; worse

So, John faced the problem of reducing the amount of redrawing to acceptable levels. Ideally, each pixel should be rendered only once, but no more than two or three times in the worst case. As for the cut-off by the pyramid of visibility, ideally, it was required that all the invisible polygons in the pyramid should be cut off almost at no extra cost. The advantage would also be that only the visible parts of the partially visible polygons could be drawn, but the balance must be maintained: the operation should spend less than the redrawing.

When I arrived at id in early March, John already had a prototype of the engine and an outlined plan, so I assumed that our job would be simply to complete and optimize this engine. However, if I knew the history of id, I could figure out what was happening. John created not only DOOM, but also engines for Wolf 3D and several other previous games, and also developed several different versions of each engine during the development process (once he created four engines in four weeks), that is, in four years he wrote about 20 engines . John’s irrepressible pursuit of increasingly new and high-quality Quake engine technologies will end only after the game is released.

Three months after my arrival, only one element of the original VSD structure remained in the engine, and John’s desire to “try new things” has gone so far that I have never seen this before.

Sheaf tree

In the original John Quake project, the rendering was performed from front to back using the second BSP tree, which tracks the already drawn and still empty parts of the screen that had to be painted over with the remaining polygons. Logically, this BSP-tree can be considered a two-dimensional area that describes the filled and empty parts of the screen, as shown in Figure 4, but in fact it is a three-dimensional tree, known as the “beam tree” (beam tree). A bundle tree is a set of 3D sectors (bundles) bounded by planes projected from some central point (in our case, a viewpoint), as shown in Figure 5.

Figure 4. Quake Beaming Tree effectively splits the screen into 2D regions

Figure 5. A Quake bundle tree consists of 3D sectors, or bundles, projected from a viewpoint to the edges of polygons.

In John’s project, a beam tree initially consists of a single beam describing the visibility pyramid; everything outside this bundle is considered to be filled (that is, there is no need to draw anything), and everything inside the bundle is considered empty. When a polygon was reached by the BSP world tree from front to back, this polygon was transformed into a beam by passing planes from its edges through a viewpoint, and all parts of the beam intersecting the empty beams in the beam tree were considered rendered and added to the beam tree as a filled beam. This went on, or until the polygons ended, or until the beam tree was completely filled. After the completion of the beam tree, visible parts of polygons trapped in the beam tree were drawn.

The advantage of working with a three-dimensional beam tree instead of a 2D region was that to determine which side of the beam plane the vertex of the polygon is located, it suffices to check the sign of the vector product of the ray to the top and normal to the plane, because all beam planes pass through the beginning coordinates (viewpoint). In addition, since the beam plane is completely described by a single normal, only the scalar product of the edge and the beam from this edge to the point of view is sufficient to generate a beam from a polygon edge. Finally, for the visibility pyramid group clipping described above, you can use the bounding spheres of BSP nodes.

At first, the sheaf tree property (completion, when the sheaf tree becomes full) seemed attractive because it looked like it would limit the performance in the worst cases. Unfortunately, scenes were still possible in which you could see everything up to the sky or the back wall of the world, so in the worst case, you still had to check all the polygons in the pyramid of visibility relative to the beam tree. Similar problems may also occur with small cracks due to numerical precision limitations. A lot of time is spent on clipping on a bundle tree, and in scenes with a large visual range, for example, when viewed from the top of the level, the total costs of beam processing reduced the Quake frame rate to turtle speeds. That is, in the end it turned out

New 3D engine every day

After the beam tree started working, John worked tirelessly to accelerate the 3D engine, constantly trying to improve its structure, rather than making changes to the implementation. At least once a week, and often every day, he went to my office and said: “I could not sleep last night, so I thought that ...”, and I knew that he would slightly strain my brain again. John tried many ways to improve the beam tree, and achieved some success, but much more interesting was the abundance of completely different approaches that he generated. Some of them were barely mentioned, others were implemented overnight or weekend intense coding. In both cases, they as a result were discarded or evolved, until they began to meet the necessary criteria. Here are some examples of such approaches. I will tell about them in the minimum details

Dividing raycast : in a screen divided into an 8x8 grid, rays are fired; This is a very efficient operation, because the first intersection with the surface can be found simply by limiting the beam to the BSP tree, starting from the point of view until the completed sheet is reached. If neighboring rays do not fall on the same surface, then a beam is emitted in the middle between them, and this continues until all neighboring rays either fall onto one surface or are in neighboring pixels; then a block is drawn around each ray from the polygon to which the ray fell. This approach scales very well, and is limited to the number of pixels, and the redrawing does not occur. His problem is falling out: it is highly likely that small polygons will be between the rays and disappear.

Tipless surfaces: the world is represented as a set of planes of surfaces. Polygons indirectly appear at the intersections of the planes and are extracted from the planes at the last stage before drawing. This provides fast clipping and a very small set of data (compared to polygons, the planes are described much more compactly), but it takes a long time to extract polygons from the planes.

Render buffer: it is similar to the z-buffer, but with only one bit per pixel, indicating whether a pixel has already been drawn. This eliminates redrawing, but at the cost of checking the internal loop in the buffer, additional write operations and cache misses, and worst of all, the complexity of the code increases significantly. Variants of this approach are: checking the rendering buffer one byte at a time and completely skipping fully overlapped bytes, or branching each byte of the rendering buffer into one of 256 internal cycles deployed to draw 0-8 pixels; In this process, one can theoretically take advantage of the ability of x86 processors to perform parallel perspective fractional divisions when processing 8 pixels.

Interval based rendering: polygons are rasterized into intervals that are added to the global list of intervals and are truncated by this list so that only the nearest interval remains in each pixel. A small sort of traversing from front to back is necessary, because if there are intersections, the interval already in the list is closer. This eliminates the need for redrawing, but at the cost of arithmetic for calculating intervals; besides, each polygon still needs to be turned into intervals.

Portals: holes are tracked in which there are no polygons on the surfaces, because the visibility range can be extended only through such portals. Drawing is performed from front to back, and when a portal is found, the polygons and portals behind it are limited to its limits, until there are no visible polygons and portals. When recursively applying this principle, it allows you to draw only the visible parts of visible polygons, but at the cost of a significant amount of clipping on the portals.

Breakthrough

In the end, John decided that a beam tree is a second-order structure that reflects information already indirectly contained in the BSP-tree of the world, so he began to solve the problem of visibility information directly from the BSP-tree of the world. He spent a week on it, in the process of creating the ideal visibility architecture DOOM (2D), in which one linear bypass of the BSP-tree DOOM creates a 2D-visibility with zero redrawing. However, solving the same problem for 3D turned out to be a much more complicated problem, and by the end of the week John had already taken out of himself the increasing complexity and constant glitches in the visibility code. Although the solution with the direct processing of BSP was already close to the working version, it required more and more refinement, and the simple and clear architecture did not take shape at all. When I left work one Friday,

On Monday morning, John looked like a man breaking into another world, and also like a man who didn’t sleep much. Over the weekend, he worked on a solution with direct BSP processing, and achieved satisfactory performance, and also made some notes on how to complete it. At 3:30 am on Monday, when John lay in bed and thought about the portals, he thought that it was possible to pre-calculate and save in each sheet a list of all the leaves visible from this sheet, and then during the program just draw the visible leaves back to front, depending on which sheet was the point of view, completely ignoring all the other leaves.

The difficulty was in size: the initially uncompressed set of potentially visible leaves (potentially visible set, PVS) took several megabytes. However, PVS could be stored as a bit vector with 1 bit per leaf. This structure is very easy to compress with simple compression of zero bytes. John also suggested changing the BSP heuristics so that it generates fewer leaves. This was the opposite of what I proposed several months ago, namely, choosing the polygon as the next separator separating the smallest number of other polygons, and according to the latest data it turned out to be the best heuristics. John’s approach allowed to limit space beyond the level so that the BSP handler could remove external surfaces that the player would never see

In exchange for these 20 kilobytes, the cutting of leaves outside the visibility pyramid is accelerated (because only leaves in PVS are taken into account), and only a small redrawing is required for clipping inside the visibility pyramid (PVS for a sheet contains all the leaves visible from any point on the sheet, therefore slight redrawing, usually about 50%, but capable of reaching up to 150%). Even better, pre-compute PVS results in leveling performance; the worst case is now not much worse than the best, because additional VSD processing is no longer needed, only more polygons and perhaps a small additional redrawing in the case of complex scenes. When John first showed me his working prototype, I specifically launched the most difficult scene - the place where the frame rate was reduced to single-digit numbers, and it worked smoothly,

John said that the preliminary calculation of the PVS was a logical development of the approaches that he considered, and there was no moment of “euryka”. Nevertheless, it was the discovery of a completely new, qualitatively better design, which, along with the rasterizer under development with the sorting of ribs that completely eliminates redrawing, becomes very close to the “ideal” requirements that we chose from the very beginning.

Simplify and try something new

What does this mean? Exactly what I said at the beginning: simplify and try something new. Pre-computed PVS is simpler than any other schemes that we considered earlier (although pre-computation of PVS is an interesting problem that we will discuss another time). In fact, at runtime, pre-computed PVS is just a limited version of the artist's algorithm. Does this mean that this approach is not very deep?

Not at all. All really excellent structures seem simple and even obvious, but after they have been invented. But the process of the invention itself requires incredible perseverance and readiness to try many different ideas until you find the right one, as happened in this case.

My friend Chris Hecker has a theory that all approaches ultimately boil down to one, because they all reflect the same internal state and functionality. From the point of view of the underlying theories, this is true: no matter how you calculate the perspective texture mapping - using division or incremental hyperbolic calculations, the numbers perform the same task. However, when it comes to implementation, a simple difference in time resolution, or improved optimization for hardware or caching, can make a huge difference. My friend Terje Mathisen likes to repeat that “almost all programming can be viewed as caching exercises”; that's exactly what John did. No matter how fast he did his VSD calculations, they would never have become as fast as a preliminary calculation and search for visibility.

The hardest thing in the world is to move away from the usual, fairly good solution to a complex task and try to find another, better solution. It seems to me that for this it is best to try new crazy ideas and always, always try to simplify. One of John’s goals was to have less code in each subsequent 3D game than in the previous one; He believed that if he learned more, he would be able to solve problems more qualitatively in a smaller amount of code.

And while this system works quite well for him.

Study now pay forward

There is one more thing that I would like to mention at the end of the article. How many can remember, Dr. Dobb's Journal has always said that the exchange of information about programming is good. I know a lot of programmers who made the jump in their development thanks to Tiny C Hendrix, or D-Flat Stevens, or simply because of reading the annual DDJ files . (Among them, for example, I myself.) Many companies quite understandably consider the exchange of information quite differently - as a potential loss of profits, and this is what makes the DDJ so valuable for the programmer community.

Thanks to this philosophy, id Software has allowed me to tell in this article how Quake works even before its release. And that is why id put the full Wolfenstein 3D source code on ftp.idsoftware.com/idstuff/source[approx. Lane: now the source is posted on github ] ; you can't just recompile the code and sell it, but you can find out how a full-scale successful game works; Check out the wolfsrc.txt file from the above directory for details on how to use the code.

So remember: when it is legal, in the long run, sharing information benefits all of us. You can pay your debt in advance for information received here and in other places, by sharing everything you can, writing an article or a book, or publishing knowledge on the Web. None of us learn in a vacuum; we all stand on the shoulders of giants - Wirth, Knut and thousands of others. Substitute your shoulders to build the future!

Reference materials

Foley, James D., et al. , Computer Graphics: Principles and Practice , Addison Wesley, 1990, ISBN 0-201-12110-7 (bundles, BSP-trees, VSD).

Teller, Seth, Visibility Computations in Densely Occluded Polyhedral Environments (dissertation), posted on http://theory.lcs.mit.edu/~seth/ along with other articles on the definition of visibility.

Teller, Seth, Visibility Preprocessing for Interactive Walkthroughs , SIGGRAPH 91, pp. 61-69.