Building a Machine Learning Project in Python: Part 2


Translation of "A Complete Machine Learning Walk-Through in Python: Part Two"

Putting together all the parts of a machine learning project can be tricky. In this series of articles, we will go through every stage of the machine learning process using real data and see how the various techniques fit together.

In the first article, we cleaned and structured the data, performed exploratory data analysis, developed a set of features to use in the model, and established a baseline for evaluating results. In this article, we will learn how to implement and compare several machine learning models in Python, perform hyperparameter tuning to optimize the best model, and evaluate the final model's performance on a test set.

All the project code is on GitHub, and here is the second notebook, which accompanies this article. You can use and modify the code however you like!

Model Evaluation and Selection

Reminder: we are working on a supervised regression task, using New York City building energy data to build a model that predicts which Energy Star Score a building will receive. We care about both the accuracy of the predictions and the interpretability of the model.

Today you can choose from many available machine learning models, and this abundance can be intimidating. There are comparative reviews online that can help you choose an algorithm, but I prefer to try a few and see which one works best. Machine learning is driven largely by empirical rather than theoretical results, and in practice it is impossible to know in advance which model will be the most accurate.

It is usually recommended that you start with simple, interpretable models, such as linear regression, and if the results are unsatisfactory, move on to more complex but usually more accurate methods. This (highly unscientific) graph shows the relationship between accuracy and interpretability for some algorithms:

Interpretability and accuracy (Source).

We will evaluate five models of varying degrees of complexity:

- Linear regression.

- K-nearest neighbors regression.

- Random forest.

- Gradient boosting.

- Support vector machine regression.

We will focus on the implementation of these models rather than the theory behind them. If you are interested in the theory, check out An Introduction to Statistical Learning (available for free) or Hands-On Machine Learning with Scikit-Learn and TensorFlow. Both books explain the theory very well and show how to use these methods in R and Python, respectively.

Imputing Missing Values

Although we dropped the columns with more than 50% missing values when we cleaned the data, quite a few missing values remain. Machine learning models cannot work with missing data, so we need to fill them in.

First, we read in the data and recall what it looks like:

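```python
import pandas as pd
import numpy as np

# Read in data into dataframes
train_features = pd.read_csv('data/training_features.csv')
test_features = pd.read_csv('data/testing_features.csv')
train_labels = pd.read_csv('data/training_labels.csv')
test_labels = pd.read_csv('data/testing_labels.csv')

# Display the size of each dataframe
print('Training Feature Size:', train_features.shape)
print('Testing Feature Size:', test_features.shape)
print('Training Labels Size:', train_labels.shape)
print('Testing Labels Size:', test_labels.shape)
```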

```
Training Feature Size: (6622, 64)
Testing Feature Size: (2839, 64)
Training Labels Size: (6622, 1)
Testing Labels Size: (2839, 1)
```

Each NaN value is a missing record in the data. There are several ways to fill them in, and we will use the fairly simple median imputation method, which replaces missing data with the median value of the corresponding column. In the code below, we create a Scikit-Learn Imputer object (called SimpleImputer in recent versions of the library) with a median strategy. We then fit it on the training data (using imputer.fit) and apply it to fill in the missing values in both the training and test sets (using imputer.transform). This means that missing entries in the test data are filled with the corresponding median value from the training data. We fit the imputer on the training data only, rather than on all the data, to avoid test data leakage, where information from the test set spills over into training.

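```python
# Imputer was removed in newer Scikit-Learn versions; SimpleImputer replaces it
from sklearn.impute import SimpleImputer

# Create an imputer object with a median filling strategy
imputer = SimpleImputer(strategy='median')

# Train on the training features
imputer.fit(train_features)

# Transform both training data and testing data
X = imputer.transform(train_features)
X_test = imputer.transform(test_features)

print('Missing values in training features:', np.sum(np.isnan(X)))
print('Missing values in testing features:', np.sum(np.isnan(X_test)))
```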

```
Missing values in training features: 0
Missing values in testing features: 0
```

Now all the values are filled in; there are no gaps.
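To make the leakage point concrete, here is a minimal sketch on a tiny made-up array (not the building data). It uses SimpleImputer, the current name of Scikit-Learn's Imputer class, and shows that a missing test value is filled with the median learned from the training data, not with a median computed from the test data.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Tiny made-up data (assumption): one feature, with a NaN in the test set
train = np.array([[1.0], [2.0], [3.0], [100.0]])  # training median is 2.5
test = np.array([[np.nan], [50.0], [60.0]])        # a test-only median would be 55.0

imputer = SimpleImputer(strategy='median')
imputer.fit(train)                  # the median is learned from training data only
filled_test = imputer.transform(test)

print(imputer.statistics_)          # the per-column medians learned during fit
print(filled_test.ravel())          # the NaN is replaced by 2.5, not 55.0
```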

Feature Scaling

Scaling is the general process of changing the range of a feature. It is a necessary step because features are measured in different units and therefore cover different ranges. This badly distorts the results of algorithms such as support vector machines and k-nearest neighbors, which take the distances between observations into account; scaling avoids this problem. Although methods such as linear regression and random forest do not require feature scaling, it is still best to include this step when comparing several algorithms.

We will scale each feature to a range from 0 to 1: for every value of a feature, we subtract the feature's minimum and divide by its range (the maximum minus the minimum). This scaling method is often called normalization; the other main approach is standardization.
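The formula is x_scaled = (x - min) / (max - min), with min and max taken from the training data. A by-hand sketch in NumPy, checked against Scikit-Learn's MinMaxScaler; the helper name min_max_scale and the toy arrays are hypothetical. Note that test values can land outside [0, 1] because the training min and range are reused.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

def min_max_scale(train, test):
    """Scale each column to [0, 1] using the training set's min and range."""
    col_min = train.min(axis=0)
    col_range = train.max(axis=0) - col_min
    # Guard against constant columns (range 0) to avoid division by zero
    col_range[col_range == 0] = 1.0
    return (train - col_min) / col_range, (test - col_min) / col_range

# Toy data (assumption): two features on very different scales
train = np.array([[1.0, 200.0], [3.0, 400.0], [5.0, 600.0]])
test = np.array([[2.0, 700.0]])

train_scaled, test_scaled = min_max_scale(train, test)
print(train_scaled)
print(test_scaled)  # [[0.25 1.25]]: the second value falls outside [0, 1]

# Cross-check against Scikit-Learn's MinMaxScaler fit on the same training data
scaler = MinMaxScaler().fit(train)
assert np.allclose(scaler.transform(test), test_scaled)
```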

Although this process would be easy to implement by hand, we will use Scikit-Learn's MinMaxScaler object. The code mirrors the imputation code, with a scaler in place of the imputer. Recall that we fit the scaler on the training set only and then transform all the data.

```python
from sklearn.preprocessing import MinMaxScaler

# Create the scaler object with a range of 0-1
scaler = MinMaxScaler(feature_range=(0, 1))

# Fit on the training data
scaler.fit(X)

# Transform both the training and testing data
X = scaler.transform(X)
X_test = scaler.transform(X_test)

# Convert the label dataframes to one-dimensional arrays for Scikit-Learn
y = np.array(train_labels).reshape((-1,))
y_test = np.array(test_labels).reshape((-1,))
```

Now each feature in the training data has a minimum value of 0 and a maximum value of 1. Filling in missing values and scaling features are two steps needed in almost any machine learning pipeline.
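Because these two steps appear in almost every project, one option (not the approach used in this article) is to chain them with the model in a Scikit-Learn Pipeline, which fits each step on the training data only, in order, whenever .fit is called. A minimal sketch on random stand-in data; the data, the missing-value rate, and the choice of KNeighborsRegressor are assumptions for illustration.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

rng = np.random.default_rng(0)
# Random stand-in data (assumption), with roughly 10% of training entries missing
X_train = rng.normal(size=(200, 5))
X_train[rng.random(X_train.shape) < 0.1] = np.nan
y_train = rng.normal(size=200)
X_new = rng.normal(size=(20, 5))
X_new[0, 0] = np.nan

# Impute -> scale -> model; medians and min/max are learned from X_train only
pipeline = make_pipeline(SimpleImputer(strategy='median'),
                         MinMaxScaler(feature_range=(0, 1)),
                         KNeighborsRegressor(n_neighbors=10))
pipeline.fit(X_train, y_train)

# New data passes through the same fitted imputer and scaler before prediction
predictions = pipeline.predict(X_new)
print(predictions.shape)
```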

Implementing Machine Learning Models in Scikit-Learn

After all the preparatory work, creating, training, and running models is relatively simple. We will use the Scikit-Learn library in Python, which has excellent documentation and a consistent syntax for building models. Once you learn how to create one model in Scikit-Learn, you can quickly implement a wide variety of algorithms.

We will illustrate creating, training (.fit), and testing (.predict) a model using gradient boosting:

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

# Create the model
gradient_boosted = GradientBoostingRegressor()

# Fit the model on the training data
gradient_boosted.fit(X, y)

# Make predictions on the test data
predictions = gradient_boosted.predict(X_test)

# Evaluate the model with the mean absolute error
mae = np.mean(abs(predictions - y_test))

print('Gradient Boosted Performance on the test set: MAE = %0.4f' % mae)
```

```
Gradient Boosted Performance on the test set: MAE = 10.0132
```

Creating, training, and testing each take just one line of code. To build other models, we use the same syntax and change only the name of the algorithm.
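Since every model shares the same .fit and .predict interface, all five models can be compared in one loop. This is a sketch on synthetic data from make_regression rather than the building dataset; the helper fit_and_evaluate and the specific settings (n_neighbors=10, SVR C=1000 and gamma=0.1, the random seeds) are illustrative assumptions, so the numbers will not match the article's.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVR

# Synthetic stand-in data (assumption): the building dataset is not reproduced here
X_all, y_all = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=42)
X_tr, X_te, y_tr, y_te = train_test_split(X_all, y_all, test_size=0.3, random_state=42)

# Scale features to [0, 1] using the training set only
scaler = MinMaxScaler().fit(X_tr)
X_tr, X_te = scaler.transform(X_tr), scaler.transform(X_te)

# Same interface for every model; hyperparameter values are illustrative
models = {
    'Linear Regression': LinearRegression(),
    'K-Nearest Neighbors': KNeighborsRegressor(n_neighbors=10),
    'Random Forest': RandomForestRegressor(random_state=60),
    'Gradient Boosting': GradientBoostingRegressor(random_state=60),
    'Support Vector Machine': SVR(C=1000, gamma=0.1),
}

def fit_and_evaluate(model, X_train, y_train, X_test, y_test):
    """Hypothetical helper: fit a model and return its test-set MAE."""
    model.fit(X_train, y_train)
    predictions = model.predict(X_test)
    return np.mean(np.abs(predictions - y_test))

results = {name: fit_and_evaluate(model, X_tr, y_tr, X_te, y_te)
           for name, model in models.items()}

# Print models from lowest to highest error
for name, mae in sorted(results.items(), key=lambda item: item[1]):
    print(f'{name:>22}: MAE = {mae:.4f}')
```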

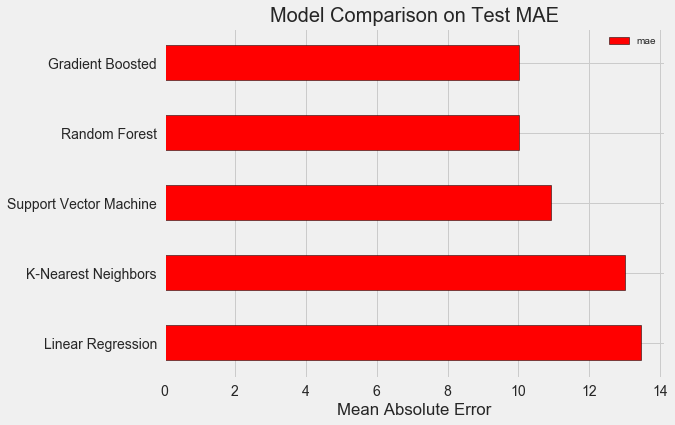

To put these numbers in perspective, the naive baseline we calculated by always guessing the median value of the target was an MAE of 24.5. All the models did much better than that, so machine learning is clearly applicable to our problem.

In our case, gradient boosting (MAE = 10.013) performed slightly better than the random forest (MAE = 10.014). These results are not entirely fair, however, because we mostly used the default hyperparameter values. Model performance depends heavily on these settings, especially for the support vector machine. Nevertheless, based on these results, we will choose gradient boosting and start optimizing it.

Model Hyperparameter Tuning

After choosing a model, we can optimize it for our problem by tuning its hyperparameters.

But first, what are hyperparameters, and how do they differ from ordinary parameters?
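The baseline itself is simple to compute: always predict the median of the training targets and measure the MAE against the test targets. A minimal sketch with made-up numbers; the helper names mae and median_baseline_mae are hypothetical.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    return np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred)))

def median_baseline_mae(y_train, y_test):
    """MAE of always predicting the training-set median."""
    guess = np.median(y_train)
    return mae(y_test, np.full(len(y_test), guess))

# Toy numbers (not the article's data): the median of y_train is 30
y_train = [10, 20, 30, 40, 50]
y_test = [20, 40, 60]
print(median_baseline_mae(y_train, y_test))  # (10 + 10 + 30) / 3, about 16.67
```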

- Hyperparameters can be thought of as the settings of the algorithm, which we choose before training begins. Examples include the number of trees in a random forest and the number of neighbors in k-nearest neighbors.

- Model parameters are what the model learns during training, such as the weights in linear regression.
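In Scikit-Learn the distinction is visible in the code: hyperparameters are passed to the constructor, while learned parameters appear as attributes with a trailing underscore (such as coef_ and intercept_) only after .fit. A small sketch with toy data chosen so that y = 2x + 1 exactly:

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor

# Hyperparameter: set by us in the constructor, before any training happens
knn = KNeighborsRegressor(n_neighbors=3)
print(knn.get_params()['n_neighbors'])  # 3

# Parameters: learned from the data when .fit is called
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = 2 * X.ravel() + 1                     # exactly y = 2x + 1
lin = LinearRegression().fit(X, y)
print(lin.coef_, lin.intercept_)          # approximately [2.] and 1.0
```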

By tuning hyperparameters, we influence the model's results by shifting the balance between underfitting and overfitting. Underfitting occurs when the model is not complex enough (it has too few degrees of freedom) to learn the mapping from features to target. An underfit model has high bias, which can be corrected by making the model more complex.

Overfitting occurs when the model essentially memorizes the training data. An overfit model has high variance, which can be reduced by limiting model complexity through regularization. Neither an underfit nor an overfit model will generalize well to the test data.
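A quick way to see both failure modes is to vary a single complexity hyperparameter. This sketch uses decision trees of increasing max_depth on a noisy synthetic sine curve (the data are an assumption, not from the article). Typically, depth 1 has high error on both sets (underfitting), while an unlimited depth drives training error to zero but leaves a larger test error (overfitting).

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Noisy synthetic data (assumption): a sine curve plus Gaussian noise
rng = np.random.default_rng(42)
X = rng.uniform(0, 10, size=(400, 1))
y = np.sin(X).ravel() + rng.normal(scale=0.3, size=400)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=42)

errors = {}
for depth in [1, 4, None]:  # None lets the tree grow until every leaf is pure
    tree = DecisionTreeRegressor(max_depth=depth, random_state=42).fit(X_tr, y_tr)
    train_mae = np.mean(np.abs(tree.predict(X_tr) - y_tr))
    test_mae = np.mean(np.abs(tree.predict(X_te) - y_te))
    errors[depth] = (train_mae, test_mae)
    print(f'max_depth={depth}: train MAE = {train_mae:.3f}, test MAE = {test_mae:.3f}')
```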

The difficulty is that the optimal set of hyperparameters is different for every problem, so the only way to find the best settings is to try a number of combinations on each new dataset. Fortunately, Scikit-Learn offers several methods for evaluating hyperparameters efficiently. Moreover, projects like TPOT try to optimize the hyperparameter search using approaches such as genetic programming. In this article, we will stick to Scikit-Learn.

Random Search with Cross-Validation

We will implement a hyperparameter tuning method called random search with cross-validation:

- Random search - a technique for selecting hyperparameters. We define a grid, and then randomly select various combinations from it, in contrast to grid search, in which we successively try each combination. By the way, random search works almost as well as grid search , but much faster.

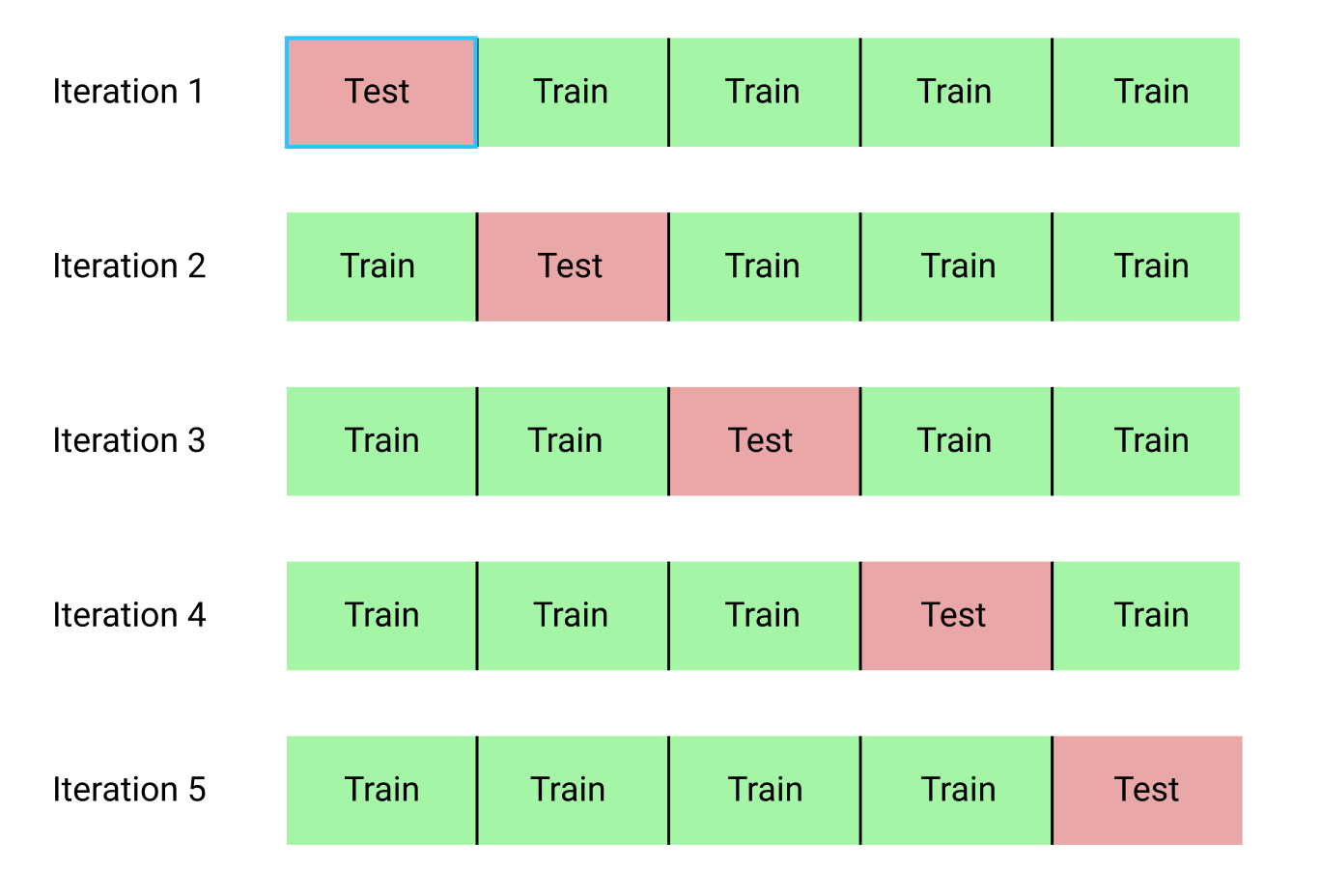

- Cross-checking is a way of evaluating the selected combination of hyperparameters. Instead of dividing the data into training and test sets, which reduces the amount of data available for training, we will use k-block cross validation (K-Fold Cross Validation). To do this, we will divide the training data into k blocks, and then run the iterative process, during which we first train the model on k-1 blocks, and then compare the result when learning on the k-th block. We will repeat the process k times, and in the end we will get the average error value for each iteration. This will be the final assessment.
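The k-fold loop described above can be written out explicitly with Scikit-Learn's KFold splitter and checked against the built-in cross_val_score helper. A sketch on synthetic data with a plain linear regression (both are assumptions for illustration):

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic data (assumption)
X, y = make_regression(n_samples=200, n_features=5, noise=5.0, random_state=0)
kfold = KFold(n_splits=5, shuffle=True, random_state=0)

fold_maes = []
for train_idx, val_idx in kfold.split(X):
    model = LinearRegression().fit(X[train_idx], y[train_idx])  # train on k-1 folds
    preds = model.predict(X[val_idx])                            # evaluate on the held-out fold
    fold_maes.append(np.mean(np.abs(preds - y[val_idx])))

print('Per-fold MAE:', np.round(fold_maes, 3))
print('Mean CV MAE: %0.3f' % np.mean(fold_maes))

# The built-in helper uses the same splits; it reports negated MAE (higher is better)
scores = cross_val_score(LinearRegression(), X, y, cv=kfold,
                         scoring='neg_mean_absolute_error')
print('cross_val_score MAE: %0.3f' % -scores.mean())
```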

Here is an illustration of k-fold cross-validation with k = 5:

The whole process of random search with cross-validation looks like this:

- Define a grid of hyperparameters.

- Randomly select a combination of hyperparameters.

- Create a model using this combination.

- Evaluate the model using k-fold cross-validation.

- Choose the hyperparameters that give the best result.
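Written out by hand, the five steps look roughly like the sketch below. It uses Scikit-Learn's ParameterGrid (to count the full grid) and ParameterSampler (to draw random combinations) on synthetic data; the reduced grid, the 5 iterations, and the data are assumptions chosen to keep it fast, and RandomizedSearchCV automates this same loop.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import ParameterGrid, ParameterSampler, cross_val_score

# Synthetic data (assumption)
X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=42)

# Step 1: define a grid of hyperparameters (a reduced, illustrative grid)
grid = {'n_estimators': [50, 100, 200],
        'max_depth': [2, 3, 5],
        'min_samples_leaf': [1, 4, 8]}
print('Full grid size:', len(ParameterGrid(grid)))  # 27 combinations

best_score, best_params = np.inf, None
# Step 2: randomly sample 5 combinations instead of trying all 27
for params in ParameterSampler(grid, n_iter=5, random_state=42):
    # Step 3: build a model with this combination
    model = GradientBoostingRegressor(random_state=42, **params)
    # Step 4: evaluate it with 4-fold cross-validation (scores are negated MAE)
    cv_mae = -cross_val_score(model, X, y, cv=4,
                              scoring='neg_mean_absolute_error').mean()
    # Step 5: keep the combination with the lowest mean CV error
    if cv_mae < best_score:
        best_score, best_params = cv_mae, params

print('Best parameters:', best_params)
print('Best CV MAE: %0.2f' % best_score)
```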

Of course, we do not have to do all this by hand: Scikit-Learn's RandomizedSearchCV does it for us!

A small digression: gradient boosting methods

We will use a gradient boosting regression model. This is an ensemble method, meaning the model is built from many "weak learners", in this case individual decision trees. In bagging algorithms such as random forest, the learners are trained in parallel and their predictions are combined by voting (or averaging, for regression). In boosting algorithms such as gradient boosting, the learners are trained sequentially, and each one focuses on the mistakes made by its predecessors.

In recent years, boosting algorithms have become popular and frequently win machine learning competitions. Gradient boosting is one implementation of boosting in which gradient descent is used to minimize a cost function. Scikit-Learn's implementation of gradient boosting is generally considered less efficient than those in other libraries such as XGBoost, but it works well on small datasets and produces fairly accurate predictions.
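To show the sequential idea, here is a deliberately simplified gradient boosting sketch for squared-error loss only, where the negative gradient is simply the residual, so each new tree is fit to the errors left by the trees before it. This is an illustration of the technique, not how Scikit-Learn implements GradientBoostingRegressor (which supports other losses, subsampling, and more); the function names and the synthetic parabola data are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

def fit_boosting(X, y, n_trees=50, learning_rate=0.1, max_depth=2):
    """Simplified gradient boosting for squared-error loss (illustration only)."""
    init = y.mean()                       # start from a constant prediction
    pred = np.full(len(y), init)
    trees = []
    for _ in range(n_trees):
        # For the loss 0.5 * (y - pred)**2, the negative gradient is y - pred
        residuals = y - pred
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0).fit(X, residuals)
        # Each weak learner nudges the ensemble toward its predecessors' mistakes
        pred += learning_rate * tree.predict(X)
        trees.append(tree)
    return init, learning_rate, trees

def predict_boosting(model, X):
    init, learning_rate, trees = model
    return init + learning_rate * sum(tree.predict(X) for tree in trees)

# Synthetic 1-D example (assumption): a parabola plus noise
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = X.ravel() ** 2 + rng.normal(scale=0.5, size=300)

model = fit_boosting(X, y)
constant_mse = np.mean((y - y.mean()) ** 2)
boosted_mse = np.mean((y - predict_boosting(model, X)) ** 2)
print(f'Constant prediction MSE: {constant_mse:.3f}, boosted MSE: {boosted_mse:.3f}')
```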

Back to Hyperparameter Tuning

Gradient boosting regression has many hyperparameters to tune; for details, I refer you to the Scikit-Learn documentation. We will optimize:

- loss: the loss function to minimize.

- n_estimators: the number of weak learners (decision trees) to use.

- max_depth: the maximum depth of each decision tree.

- min_samples_leaf: the minimum number of samples required at a leaf node of a decision tree.

- min_samples_split: the minimum number of samples required to split a node of a decision tree.

- max_features: the maximum number of features to consider when splitting a node.

I am not sure anyone truly understands how all of these interact, and the only way to find the best combination is to try them out.

In the code below, we create a grid of hyperparameters, then create a RandomizedSearchCV object and run the search using 4-fold cross-validation over 25 different combinations of hyperparameters:

```python
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import RandomizedSearchCV

# Loss function to be optimized
# (named 'ls' and 'lad' in Scikit-Learn versions before 1.0)
loss = ['squared_error', 'absolute_error', 'huber']

# Number of trees used in the boosting process
n_estimators = [100, 500, 900, 1100, 1500]

# Maximum depth of each tree
max_depth = [2, 3, 5, 10, 15]

# Minimum number of samples per leaf
min_samples_leaf = [1, 2, 4, 6, 8]

# Minimum number of samples to split a node
min_samples_split = [2, 4, 6, 10]

# Maximum number of features to consider for making splits
# ('auto' was removed in newer versions; for regression it meant the same as None)
max_features = ['sqrt', 'log2', None]

# Define the grid of hyperparameters to search
hyperparameter_grid = {'loss': loss,
                       'n_estimators': n_estimators,
                       'max_depth': max_depth,
                       'min_samples_leaf': min_samples_leaf,
                       'min_samples_split': min_samples_split,
                       'max_features': max_features}

# Create the model to use for hyperparameter tuning
model = GradientBoostingRegressor(random_state=42)

# Set up the random search with 4-fold cross validation
random_cv = RandomizedSearchCV(estimator=model,
                               param_distributions=hyperparameter_grid,
                               cv=4, n_iter=25,
                               scoring='neg_mean_absolute_error',
                               n_jobs=-1, verbose=1,
                               return_train_score=True,
                               random_state=42)

# Fit on the training data
random_cv.fit(X, y)
```

After performing the search, we can inspect the RandomizedSearchCV object to find the best model:

```python
# Find the best combination of settings
random_cv.best_estimator_
```

```
GradientBoostingRegressor(loss='lad', max_depth=5,
                          max_features=None,
                          min_samples_leaf=6,
                          min_samples_split=6,
                          n_estimators=500)
```

We could use these results to run a grid search over parameter values close to these optimal ones. However, further tuning is unlikely to improve the model significantly. As a general rule, well-designed feature engineering has a much greater impact on model performance than even the most extensive hyperparameter tuning. This is the law of diminishing returns applied to machine learning: feature engineering gives the highest return, while hyperparameter tuning brings only modest gains.
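Besides best_estimator_, a fitted search object exposes best_params_ and a full cv_results_ table, which helps when choosing values for a follow-up grid search. A sketch on synthetic data with a reduced grid (the data, grid, and n_iter=6 are assumptions chosen so it runs quickly):

```python
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import RandomizedSearchCV

# Synthetic data (assumption)
X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=42)

search = RandomizedSearchCV(GradientBoostingRegressor(random_state=42),
                            param_distributions={'n_estimators': [50, 100, 200],
                                                 'max_depth': [2, 3, 5],
                                                 'min_samples_leaf': [1, 4, 8]},
                            n_iter=6, cv=3, scoring='neg_mean_absolute_error',
                            return_train_score=True, random_state=42)
search.fit(X, y)

# One row per sampled combination, best-ranked first (scores are negated MAE)
results = pd.DataFrame(search.cv_results_).sort_values('rank_test_score')
print(results[['params', 'mean_test_score', 'mean_train_score']].head())
print(search.best_params_)
```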

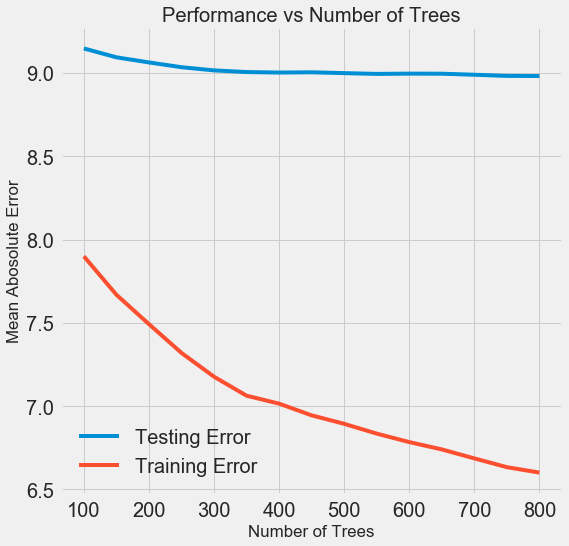

To isolate the effect of a single setting, we can run an experiment that varies the number of estimators (decision trees) while holding the other hyperparameters fixed. The implementation is given here, but here is the result:

As the number of trees in the model increases, both the training and testing errors decrease. However, the training error decreases much faster, and as a result the model overfits: it performs very well on the training data but worse on the test data.

Performance on the test data is always somewhat worse than on the training data (because the model sees the correct answers for the training set), but a large gap is a sign of overfitting. Overfitting can be addressed by getting more training data or by reducing model complexity through hyperparameters. We will not adjust the hyperparameters further here, but I recommend always keeping an eye on overfitting.

For our final model, we will use 800 estimators, because that gave the lowest cross-validation error. Now let's test the model!
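A sketch of this kind of experiment, assuming synthetic data and a short list of n_estimators values (the article's own implementation is in the linked notebook): GridSearchCV over n_estimators alone with return_train_score=True reports training and cross-validation error side by side, so the gap between them can be read directly.

```python
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic data (assumption); other hyperparameters are held fixed
X, y = make_regression(n_samples=300, n_features=8, noise=20.0, random_state=42)
grid = GridSearchCV(GradientBoostingRegressor(max_depth=5, min_samples_leaf=6,
                                              random_state=42),
                    param_grid={'n_estimators': [50, 100, 200, 400]},
                    cv=4, scoring='neg_mean_absolute_error',
                    return_train_score=True)
grid.fit(X, y)

results = pd.DataFrame(grid.cv_results_)
# Scores are negated MAE, so flip the sign to get errors
summary = pd.DataFrame({'n_estimators': results['param_n_estimators'],
                        'train_mae': -results['mean_train_score'],
                        'cv_mae': -results['mean_test_score']})
print(summary)
```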

Assessment Using Test Data

As responsible machine learning engineers, we made sure that our model never saw the test data during training. Therefore, we can use its accuracy on the test data as an indicator of how well it will perform when deployed in the real world.

We feed the model the test data and calculate the error. Here is a comparison of the default gradient boosting model and our tuned model:

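```python
# default_model: GradientBoostingRegressor(random_state=42) fit on (X, y)
# final_model: the tuned model (800 trees), fit on (X, y)
default_pred = default_model.predict(X_test)
final_pred = final_model.predict(X_test)
print('Default model performance on the test set: MAE = %0.4f.' % np.mean(abs(default_pred - y_test)))
print('Final model performance on the test set: MAE = %0.4f.' % np.mean(abs(final_pred - y_test)))
```

```
Default model performance on the test set: MAE = 10.0118.
Final model performance on the test set: MAE = 9.0446.
```

Hyperparameter tuning improved the model's accuracy by about 10%. Depending on the use case, this could be a very significant improvement, but it came at a substantial cost in time.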
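The "about 10%" figure is the relative reduction in test-set MAE from the default model to the tuned model:

$$\frac{10.0118 - 9.0446}{10.0118} = \frac{0.9672}{10.0118} \approx 0.0966$$

That is, roughly a 9.7% improvement.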

You can compare how long each model takes to train using the %%timeit cell magic in Jupyter Notebooks. First, let's time the default model:

```
%%timeit -n 1 -r 5
default_model.fit(X, y)
```

```
1.09 s ± 153 ms per loop (mean ± std. dev. of 5 runs, 1 loop each)
```

One second to train is very reasonable. The tuned model, however, is much slower:

```
%%timeit -n 1 -r 5
final_model.fit(X, y)
```

```
12.1 s ± 1.33 s per loop (mean ± std. dev. of 5 runs, 1 loop each)
```

This illustrates a fundamental aspect of machine learning: it is all about trade-offs. We constantly have to balance accuracy against interpretability, bias against variance, accuracy against run time, and so on. The right balance depends entirely on the problem. In our case, a roughly 11-fold increase in training time (from about 1.1 s to 12.1 s) is large in relative terms but insignificant in absolute terms.
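%%timeit only works inside IPython or Jupyter. In a plain Python script, the standard-library timeit module gives a comparable measurement. A sketch on synthetic data; the dataset and the larger model's settings (n_estimators=400, max_depth=5) are assumptions chosen so it runs in a few seconds, so the slowdown will differ from the article's.

```python
import timeit

from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor

# Synthetic data and model sizes are assumptions chosen to run quickly
X, y = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=42)
default_model = GradientBoostingRegressor(random_state=42)
larger_model = GradientBoostingRegressor(n_estimators=400, max_depth=5, random_state=42)

# Roughly like %%timeit -n 1 -r 3: three repeats of a single fit each
default_times = timeit.repeat(lambda: default_model.fit(X, y), number=1, repeat=3)
larger_times = timeit.repeat(lambda: larger_model.fit(X, y), number=1, repeat=3)

print(f'Default model: best of 3 = {min(default_times):.2f} s')
print(f'Larger model:  best of 3 = {min(larger_times):.2f} s')
print(f'Slowdown: about {min(larger_times) / min(default_times):.1f}x')
```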

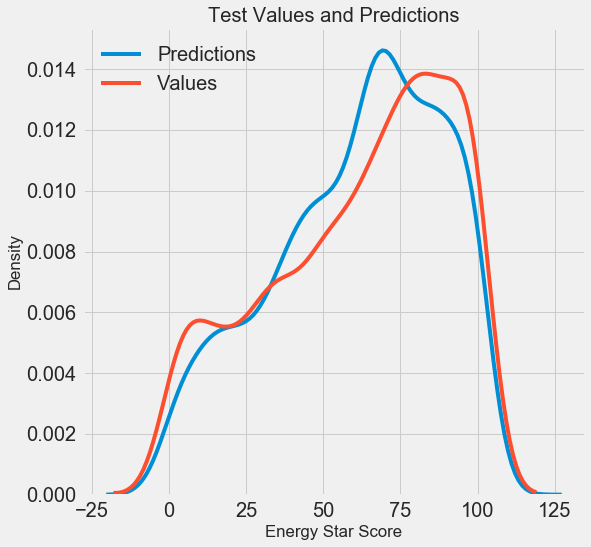

Now that we have the final predictions, let's analyze them to see whether there are any noticeable deviations. On the left is a density plot of the predicted and actual values, and on the right is a histogram of the residuals:

The model's predictions follow the distribution of the actual values fairly well, although the peak of the predicted density lies closer to the median value of the training set (66) than to the true density peak (near 100). The residuals are nearly normally distributed, although there are several large negative values where the model's predictions are far off from the actual values. In the next article, we will take a closer look at interpreting the results.
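The right-hand panel can also be checked numerically. This sketch computes residuals as predicted minus actual (the same sign convention as the MAE code above), bins them with np.histogram, and counts large negative errors. The threshold of 20 points, the helper name residual_summary, and the made-up scores are all hypothetical.

```python
import numpy as np

def residual_summary(predictions, actual, bins=10, large=20.0):
    """Summarize residuals (predicted - actual); 'large' is an arbitrary threshold."""
    residuals = np.asarray(predictions, dtype=float) - np.asarray(actual, dtype=float)
    counts, edges = np.histogram(residuals, bins=bins)
    return {
        'mean': residuals.mean(),
        'std': residuals.std(),
        'n_large_negative': int(np.sum(residuals < -large)),
        'counts': counts,
        'edges': edges,
    }

# Made-up scores (assumption): mostly small errors plus five big misses
rng = np.random.default_rng(1)
actual = rng.uniform(1, 100, size=500)
predicted = actual + rng.normal(scale=8.0, size=500)
predicted[:5] -= 60.0

summary = residual_summary(predicted, actual)
print('Mean residual: %0.2f, std: %0.2f' % (summary['mean'], summary['std']))
print('Large negative residuals:', summary['n_large_negative'])
print('Histogram counts:', summary['counts'])
```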

Conclusion

In this article, we examined several stages of solving the problem of machine learning:

- Filling in missing values and scaling features.

- Evaluation and comparison of the results of several models.

- Hyperparameter tuning using random search and cross-validation.

- Evaluation of the best model using test data.

The results show that we can use machine learning to predict the Energy Star Score from the available data. With gradient boosting, we achieved a mean absolute error of about 9.04 on the test set. Hyperparameter tuning can noticeably improve results, but at the cost of a significant increase in training time. This is one of the many trade-offs to consider in machine learning.

In the next article, we will try to figure out how our model works. We will also look at the main factors that influence the Energy Star Score. Knowing that the model is accurate, we want to understand why it makes the predictions it does and what that tells us about the problem itself.