Create a simple neural network

Translation of the tutorial "Making a Simple Neural Network".

What are we going to do? We will try to build a simple, very small neural network, explain how it works, and teach it to tell things apart. We won't go into the history or the mathematical jungle (that information is easy to find); instead, we will try to explain the problem (with no promise that we'll succeed) to you and to ourselves with drawings and code.

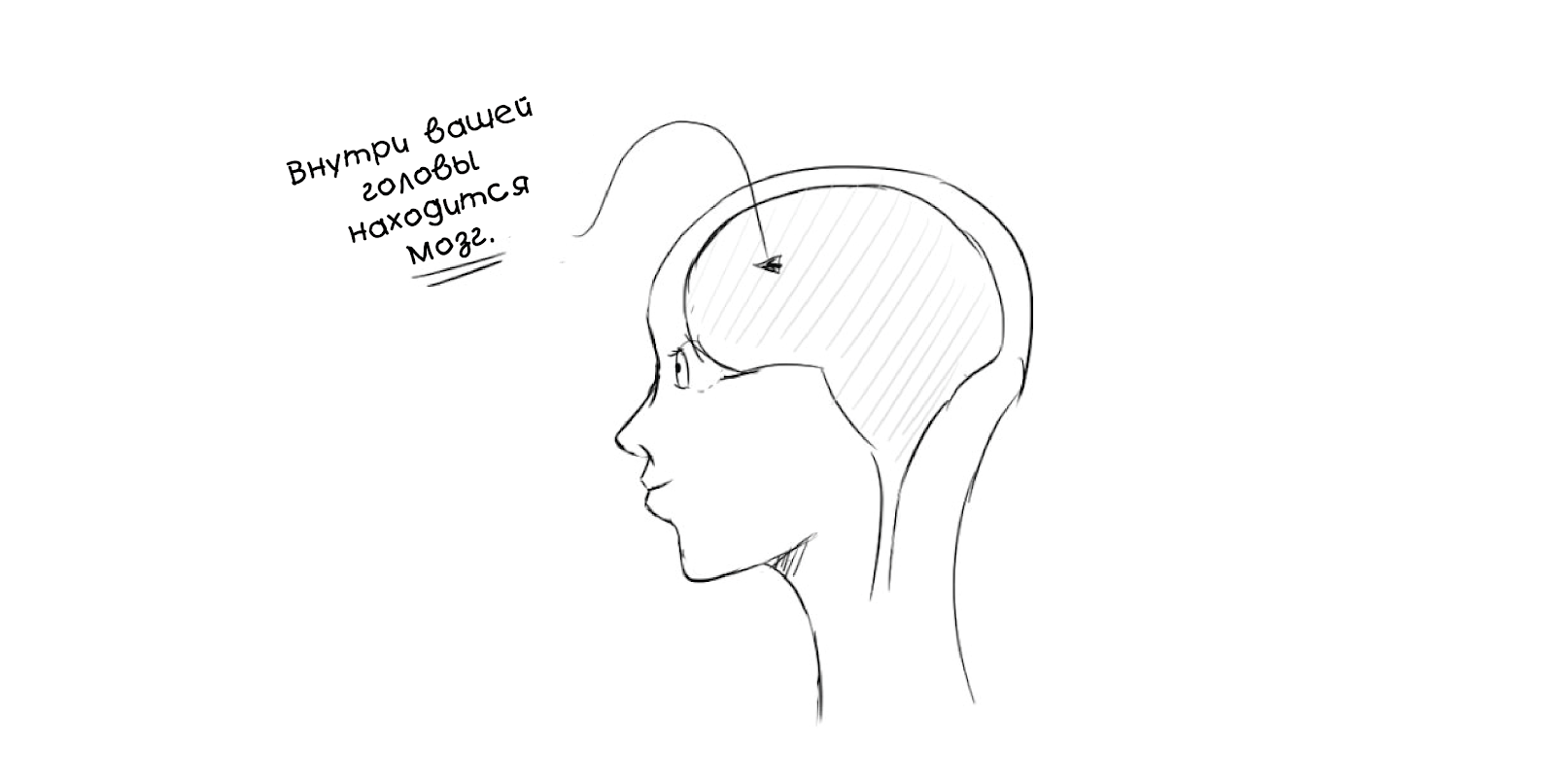

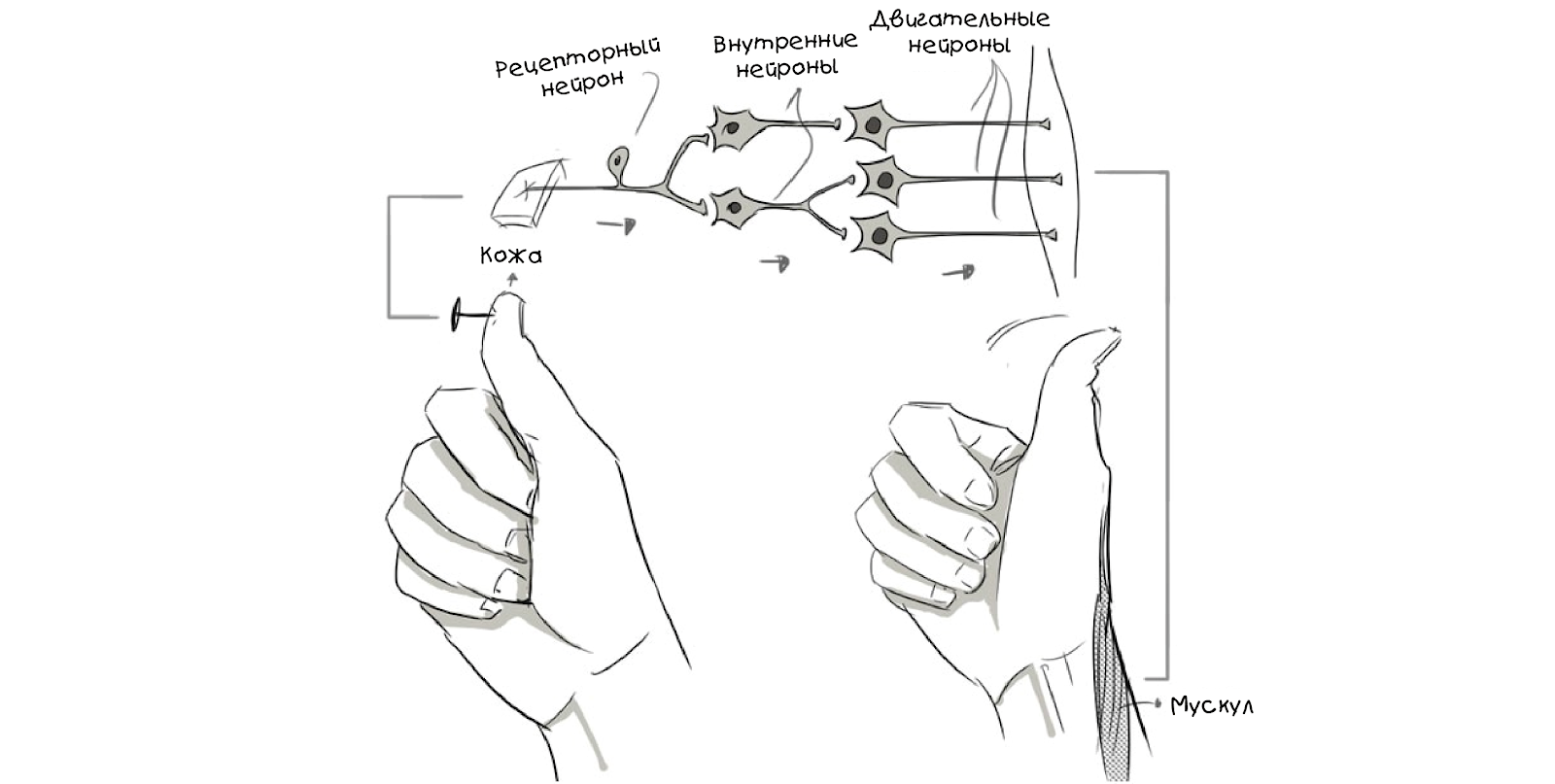

Many of the terms in neural networks are related to biology, so let's start from the very beginning:

The brain is a complicated thing, but it can be broken down into a few main parts and operations:

The stimulus can also be internal (for example, an image or an idea):

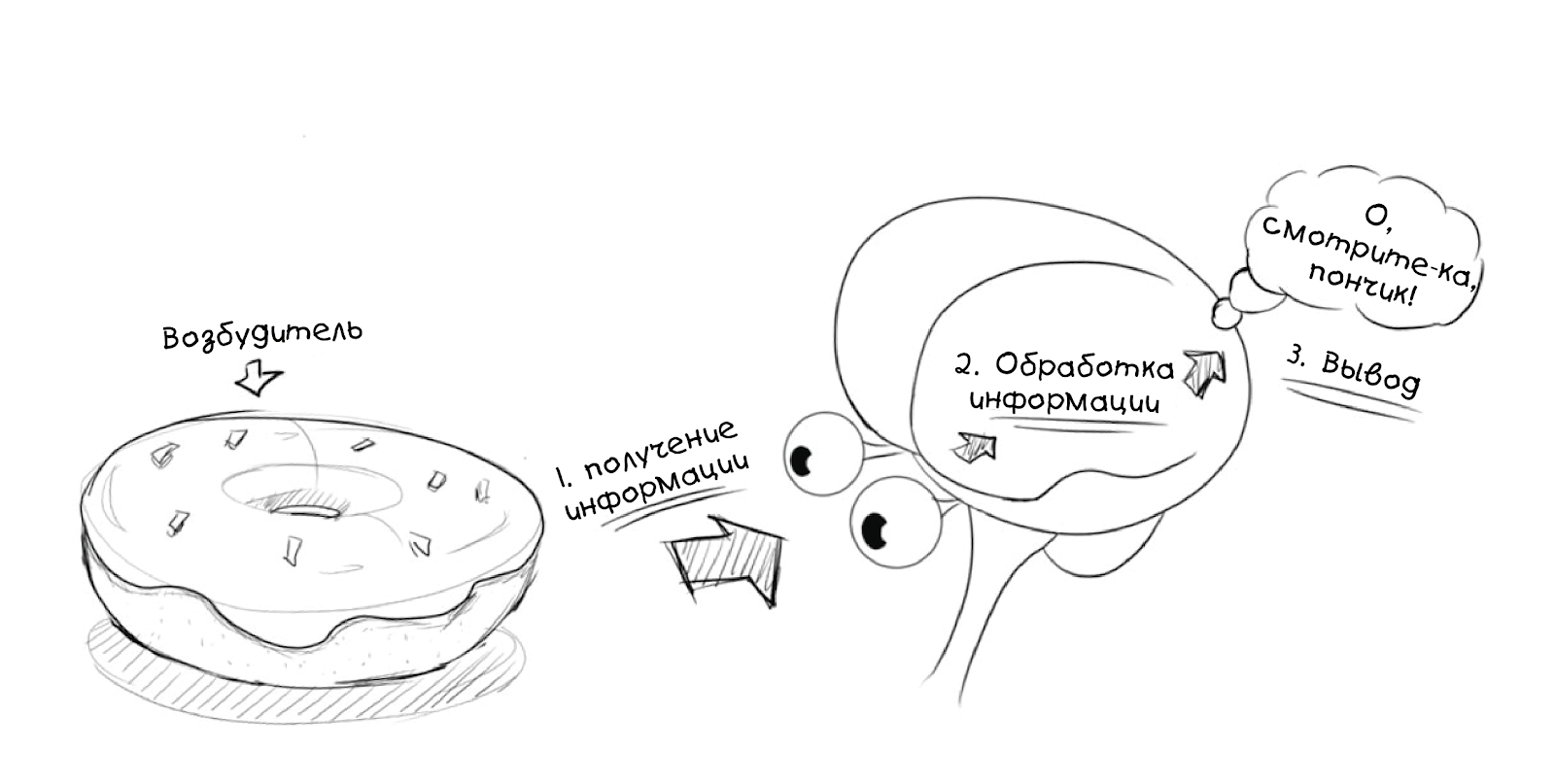

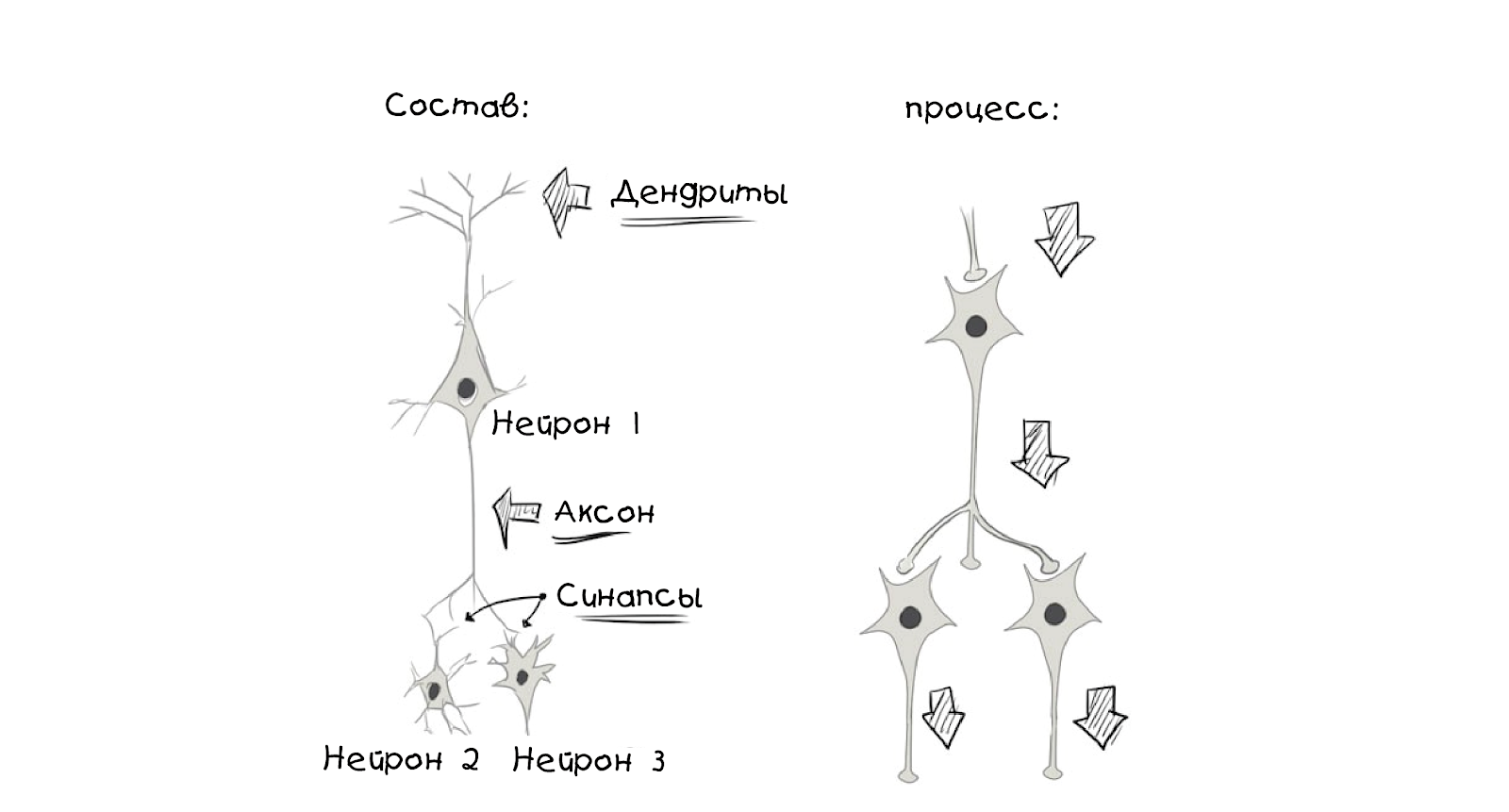

Now let's look at the basic, simplified parts of the brain:

On the whole, the brain looks like a network of cables.

A neuron is the basic unit of computation in the brain. It receives and processes chemical signals from other neurons and, depending on a number of factors, either does nothing or generates an electrical impulse, called an action potential, which then sends signals to neighboring connected neurons through synapses:

Dreams, memories, self-regulating movements, reflexes, and indeed everything you think or do: all of it happens thanks to this process. Millions, or even billions, of neurons work at different levels and form connections that create various parallel subsystems, which together make up a biological neural network.

Of course, these are all simplifications and generalizations, but thanks to them we can describe a simple neural network:

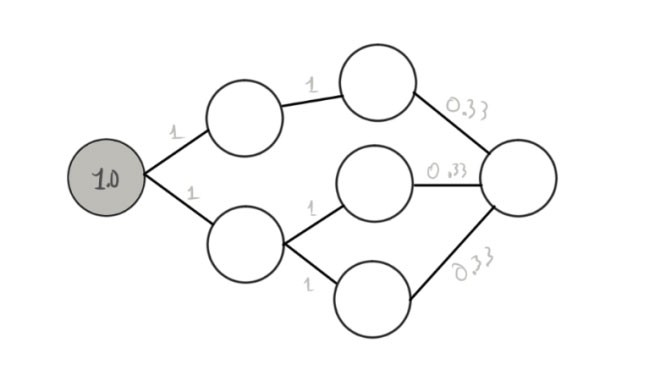

And we can formalize it using a graph:

Some clarification is needed here. The circles are neurons and the lines are the connections between them. To keep things simple at this stage, the connections carry information in one direction only, from left to right. The first neuron is currently active and shaded gray. We also assigned it a number (1 if it fires, 0 if it doesn't). The numbers between neurons indicate the weights of the connections.

The graphs above show the network at a single moment in time. To depict it more accurately, we need to split it into time steps:

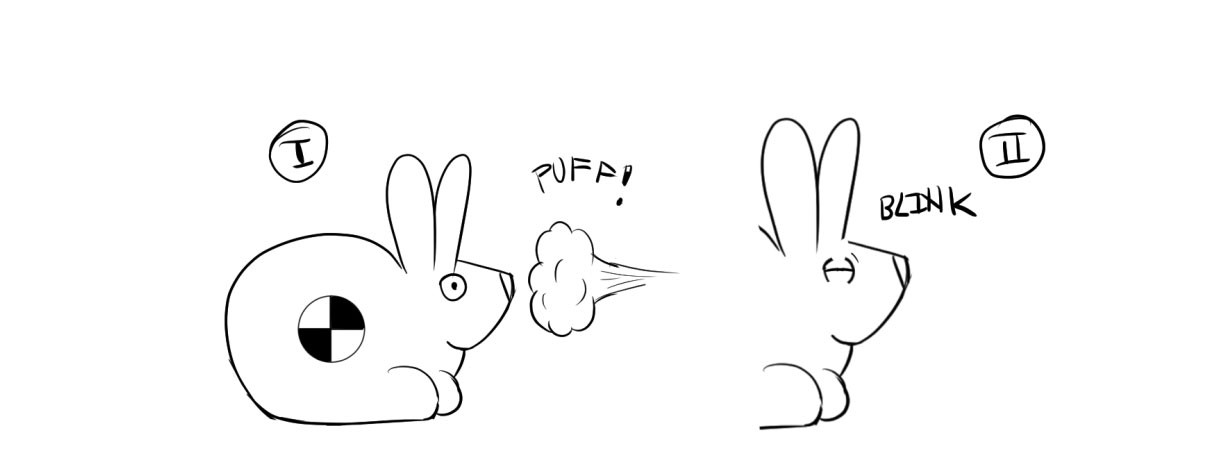

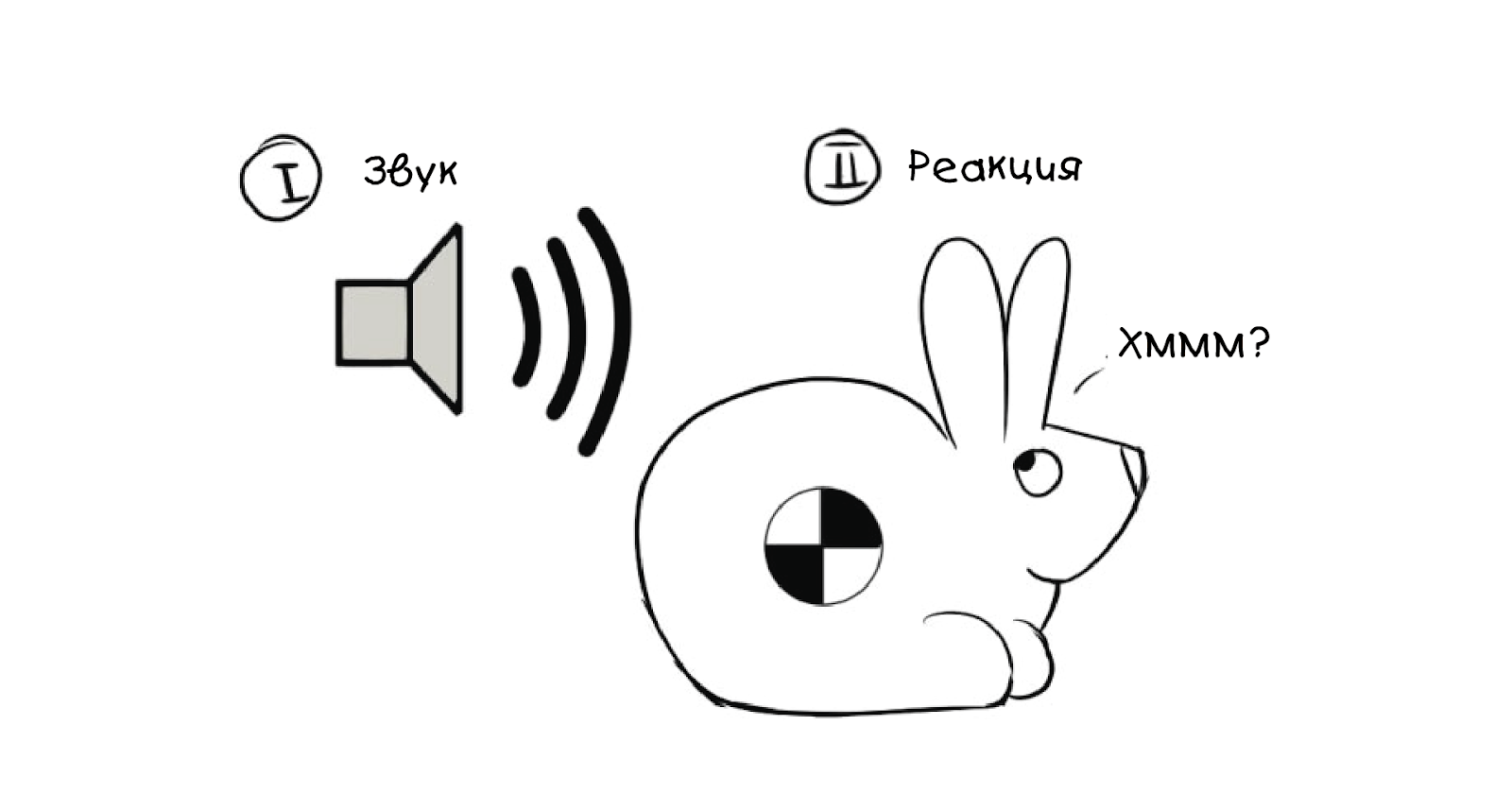
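The time-step view can be sketched in a few lines of Python. This is a minimal sketch under assumptions of our own: a hypothetical chain of three neurons joined by connections of weight 1, a firing threshold of 1, and a signal that crosses exactly one connection per time step; `step` is a hypothetical helper name.

```python
# Hypothetical chain: neuron 0 -> neuron 1 -> neuron 2
weights = [1.0, 1.0]   # weights[i] connects neuron i to neuron i + 1
THRESHOLD = 1.0        # a neuron fires if its weighted input reaches this value

def step(states):
    """Compute the states (1 = firing, 0 = silent) at the next time step."""
    nxt = [0] * len(states)  # neuron 0 has no incoming connection in this sketch
    for i, w in enumerate(weights):
        if states[i] * w >= THRESHOLD:
            nxt[i + 1] = 1
    return nxt

states = [1, 0, 0]          # time step 0: only the first neuron is active
history = [states]
for _ in range(2):
    states = step(states)
    history.append(states)
print(history)  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Each time step is one snapshot like the graphs above; the activity moves one neuron to the right per step.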

To create your own neural network, you need to understand how weights affect neurons and how neurons learn. As an example, let's take a rabbit (a test rabbit) and put it through a classic experiment.

When a safe stream of air is directed at them, rabbits, like people, blink:

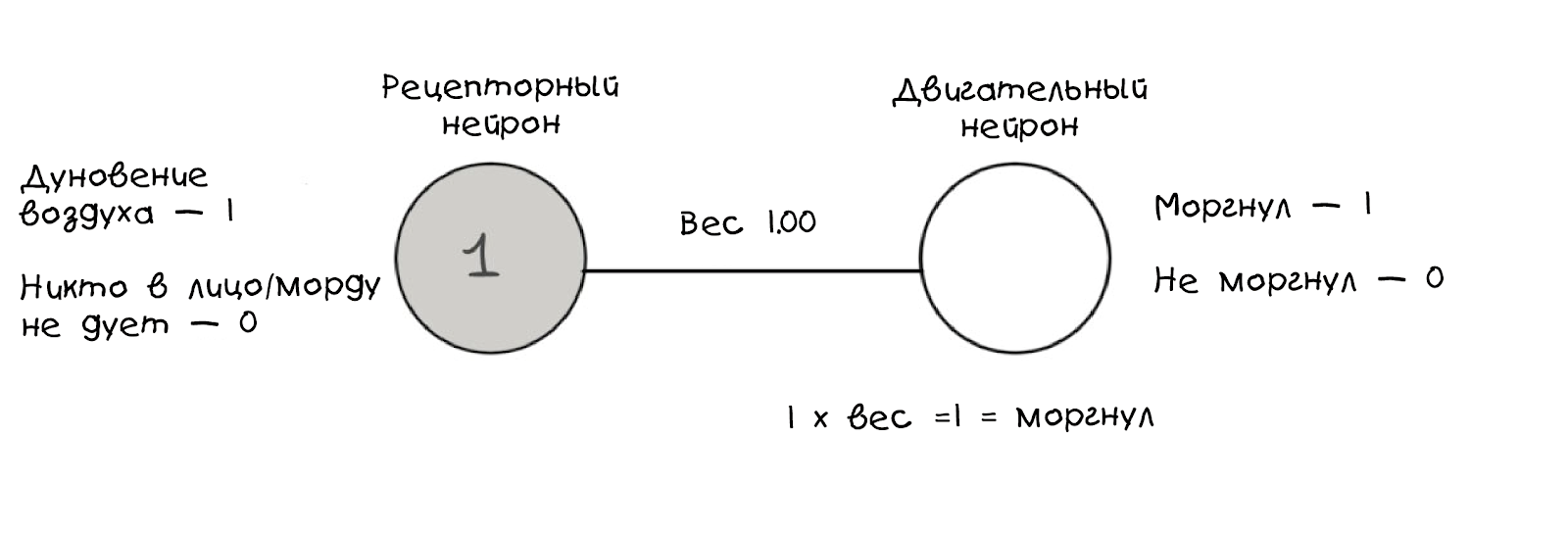

This behavior can be modeled with a graph:

As in the previous diagram, these graphs show only the moment when the rabbit feels the puff of air, and we encode the puff as a Boolean value (1 or 0). We then calculate whether the second neuron fires based on the weight. If the weight is 1, the second neuron fires and the rabbit blinks; if the weight is less than 1, it does not blink: the second neuron has a threshold of 1.
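The rule in this paragraph fits in a few lines. A minimal Python sketch, assuming the puff of air is encoded as 1 (present) or 0 (absent) and the second neuron fires when the weighted input reaches the threshold of 1; the function name `blinks` is hypothetical:

```python
THRESHOLD = 1.0  # the second neuron fires at 1 or above

def blinks(air_puff, air_weight):
    """Return True if the weighted stimulus reaches the threshold."""
    return air_puff * air_weight >= THRESHOLD

print(blinks(1, 1.0))  # True: puff present, weight 1 -> blink
print(blinks(1, 0.5))  # False: weight below 1 -> no blink
print(blinks(0, 1.0))  # False: no puff -> no blink
```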

We introduce another element - a safe sound signal:

We can simulate the interest of a rabbit as follows:

The main difference is that now the weight is zero , so we have not received a blinking rabbit, at least for now. Now we will teach the rabbit to blink on command, mixing

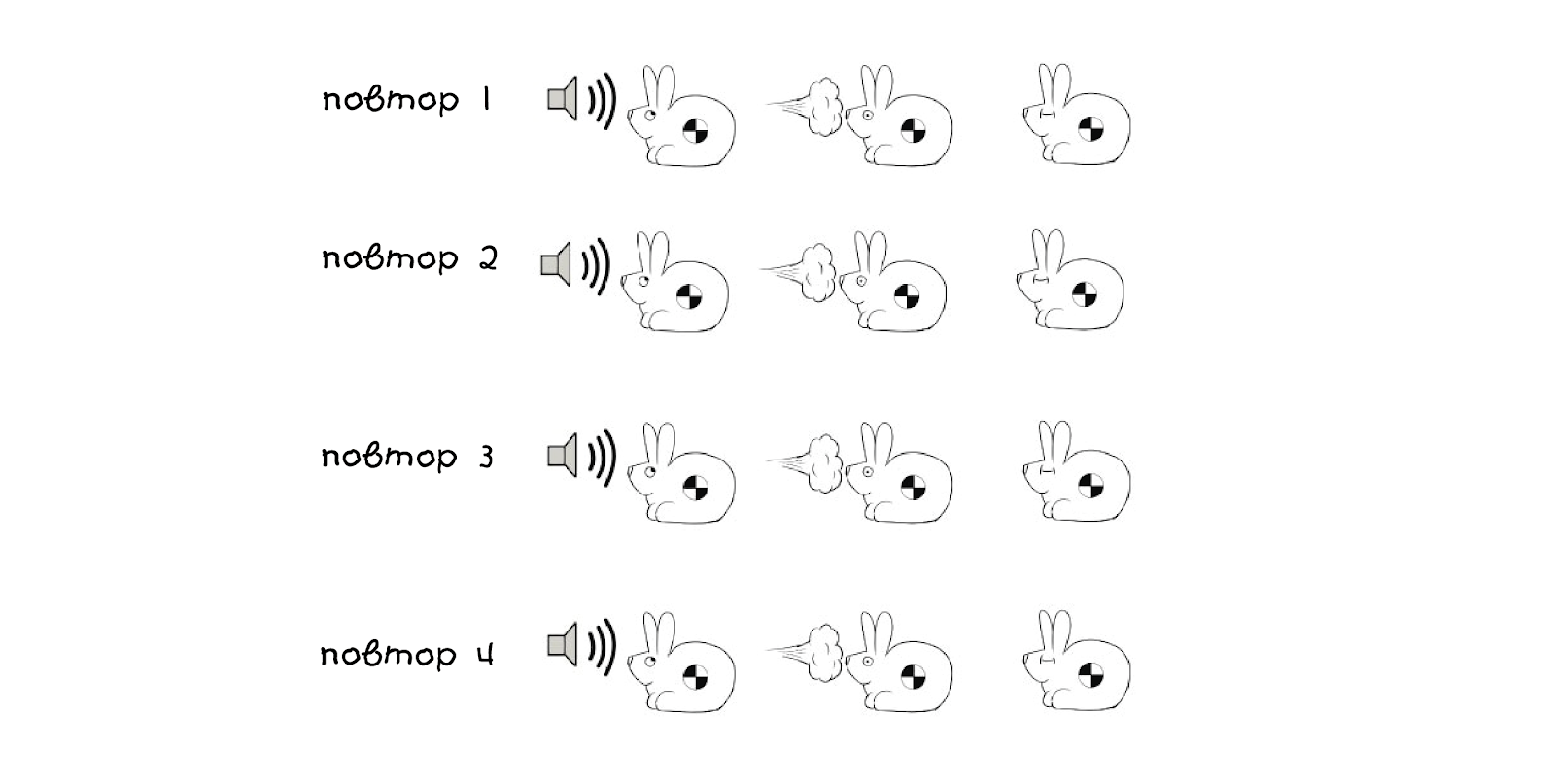

irritants (sound signal and breath):

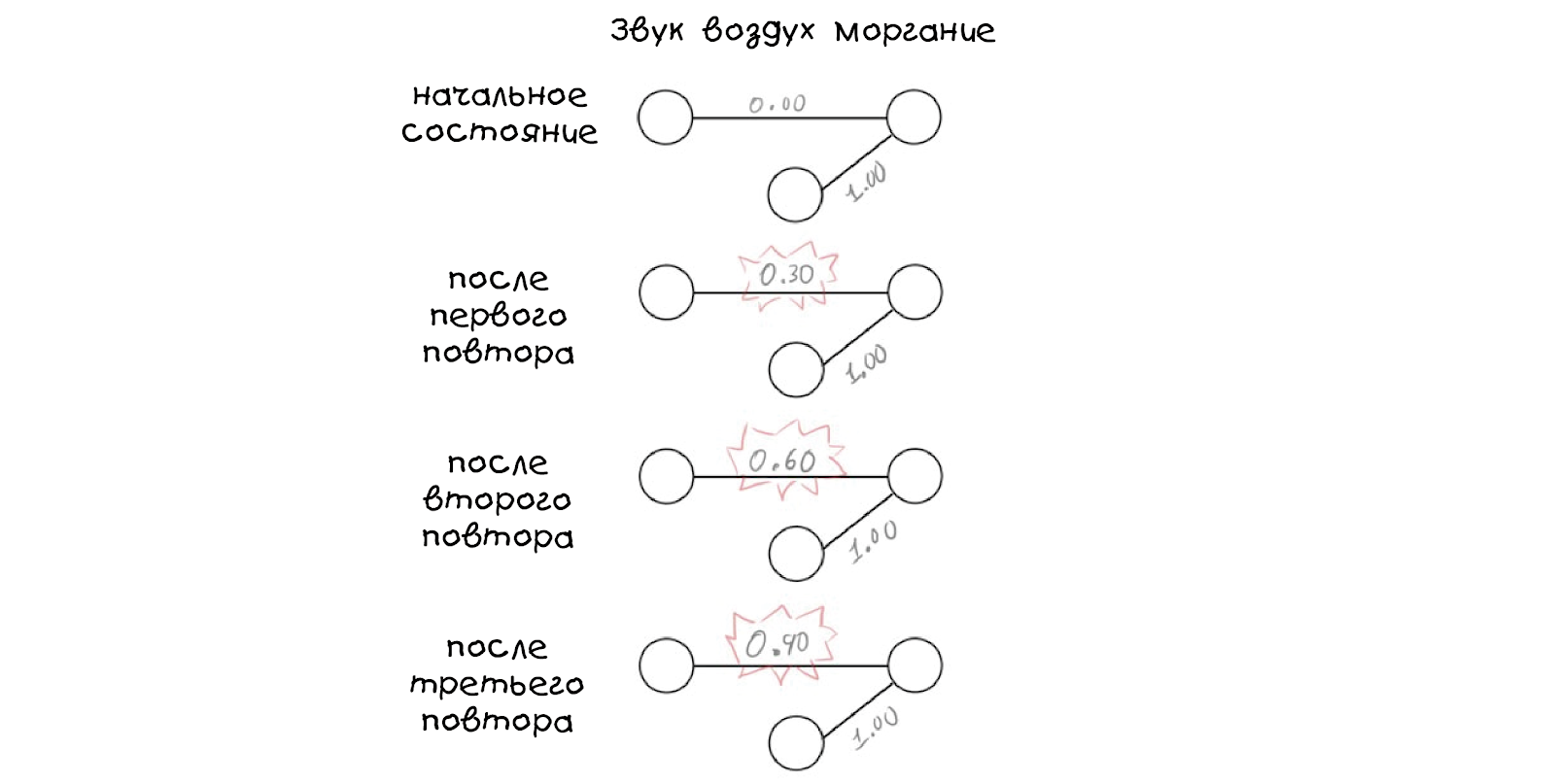

It is important that these events happen in separate time steps; in graph form it looks like this:

The sound by itself does nothing, but the puff of air still makes the rabbit blink; we show this through the weights multiplied by the stimuli (in red).
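With two stimuli, the neuron adds up each stimulus multiplied by its weight. A minimal Python sketch, assuming an air weight of 1, a sound weight of 0 (the untrained rabbit), and the same threshold of 1; `blinks` is a hypothetical name:

```python
THRESHOLD = 1.0

def blinks(stimuli, weights):
    """Sum each stimulus times its weight and compare with the threshold."""
    total = sum(s * w for s, w in zip(stimuli, weights))
    return total >= THRESHOLD

weights = [1.0, 0.0]            # [air puff, sound]: the sound has no effect yet
print(blinks([1, 1], weights))  # True: air and sound together -> blink (the air does the work)
print(blinks([0, 1], weights))  # False: sound alone does nothing
```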

Learning complex behavior can be described, in simplified form, as a gradual change in the weights between connected neurons over time.

To train the rabbit, we repeat the steps:

For the first three attempts, the patterns will look like this:

Note that the weight for the sound stimulus increases after each repetition (highlighted in red). The value is arbitrary: we chose 0.30, but it could be anything, even negative. After the third repetition you won't notice any change in the rabbit's behavior, but after the fourth something surprising happens: the behavior changes.

We removed the puff of air, but the rabbit still blinks when it hears the beep! Our last little diagram explains this behavior:

We trained the rabbit to react to the sound by blinking.

In a real experiment of this kind, more than 60 repetitions may be required to achieve a result.
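The four repetitions can be simulated directly. A minimal Python sketch, assuming the sound's weight grows by 0.30 per paired trial (the arbitrary value chosen above), the air's weight stays at 1, and the threshold is 1; `condition` is a hypothetical helper that pairs the stimuli `trials` times and then plays the sound alone:

```python
THRESHOLD = 1.0
INCREMENT = 0.30  # arbitrary step chosen in the text

def blinks(stimuli, weights):
    """Sum each stimulus times its weight and compare with the threshold."""
    return sum(s * w for s, w in zip(stimuli, weights)) >= THRESHOLD

def condition(trials):
    """Pair sound with air `trials` times, then test the sound alone."""
    weights = [1.0, 0.0]            # [air puff, sound]
    for _ in range(trials):
        weights[1] += INCREMENT     # the sound's weight grows with each pairing
    return blinks([0, 1], weights)  # sound only, no air

for n in range(1, 5):
    print(n, condition(n))
# 1 False
# 2 False
# 3 False
# 4 True
```

After three pairings the sound's weight is still just under 0.9, below the threshold; the fourth pushes it to 1.2 and the sound alone triggers a blink.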

Now let's leave the biological world of brains and rabbits and try to apply everything we have learned to building an artificial neural network. First, let's take on a simple task.

Suppose we have a machine with four buttons that dispenses food when you press the right button (or energy, if you're a robot). The task is to find out which button gives the reward:

We can depict (schematically) what the button does when pressed as follows:

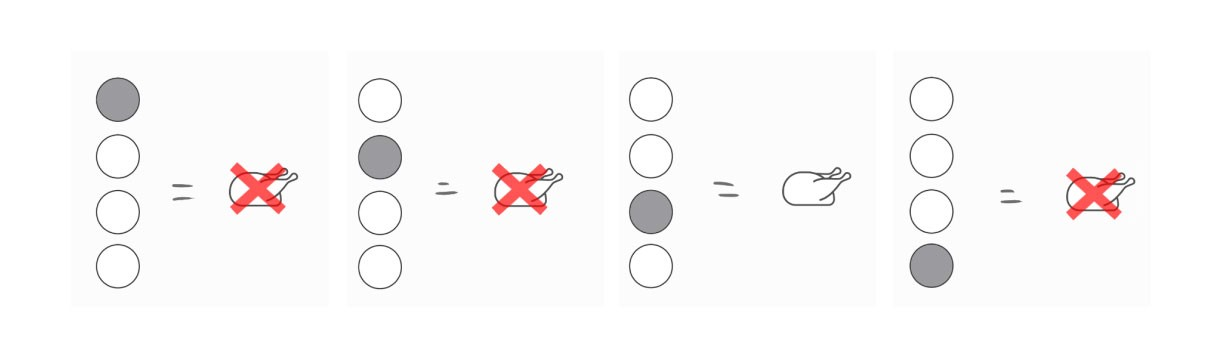

This problem is best solved in its entirety, so let's look at all the possible results, including the correct one:

Press the 3rd button to get your dinner.

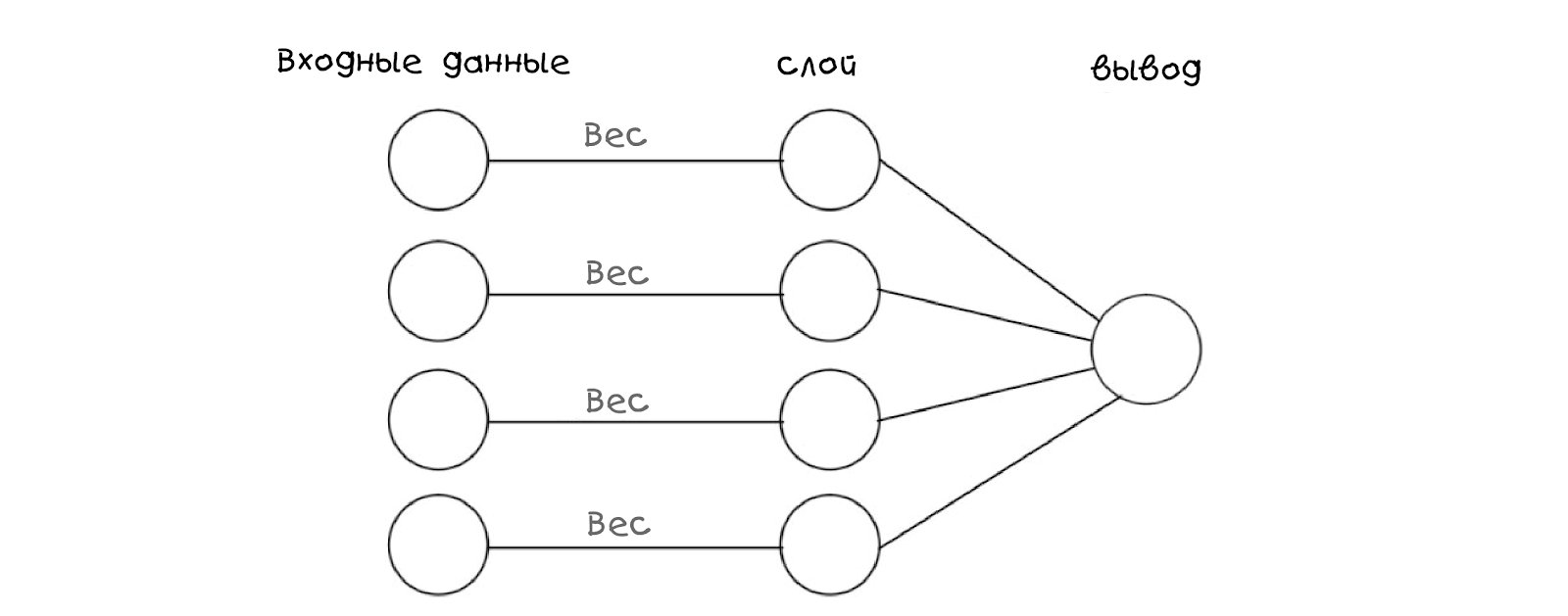
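The table of outcomes can also be listed in code. A minimal Python sketch, assuming each press is encoded as a one-hot vector (1 for the pressed button, 0 for the others) and that only the third button pays out; `REWARD` and `press` are hypothetical names:

```python
REWARD = [0, 0, 1, 0]  # reality: only the 3rd button gives food

def press(button):
    """One-hot input vector for pressing `button` (numbered 1 to 4)."""
    return [1 if i == button - 1 else 0 for i in range(4)]

for button in range(1, 5):
    vector = press(button)
    got_food = sum(v * r for v, r in zip(vector, REWARD))
    print(button, vector, got_food)
# 1 [1, 0, 0, 0] 0
# 2 [0, 1, 0, 0] 0
# 3 [0, 0, 1, 0] 1
# 4 [0, 0, 0, 1] 0
```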

To reproduce a neural network in code, we first need a model or graph that we can map the network onto. Here is one graph that suits the task and also mirrors its biological counterpart well:

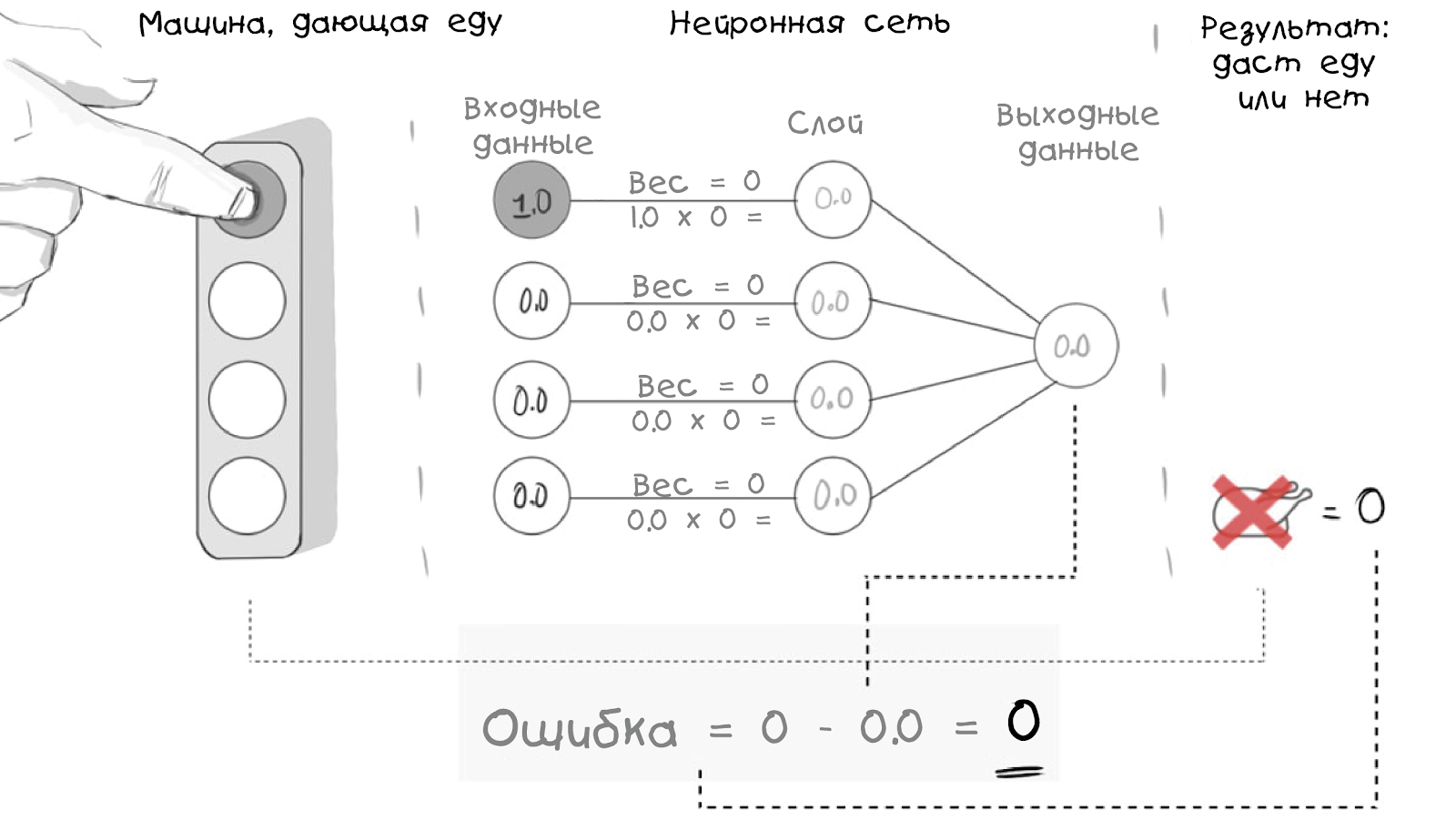

This neural network simply takes in input, in this case the perception of which button was pressed. The network then multiplies the inputs by the weights and produces an output by adding up the layer. It sounds a bit confusing, so let's see how a button press is represented in our model:

Note that all weights are 0, so the neural network, like a baby, is completely empty, but completely interconnected.

Thus, we map the external event onto the input layer of the neural network and compute the value at its output. It may or may not match reality, but we will ignore that for now and start describing the task in a computer-friendly way. Let's start by defining the inputs and weights (we will use JavaScript):

```javascript
var inputs = [0,1,0,0];
var weights = [0,0,0,0];
// For convenience, these arrays can be called vectors
```

The next step is to create a function that takes the input values and weights and calculates the output value:

```javascript
function evaluateNeuralNetwork(inputVector, weightVector) {
  var result = 0;
  inputVector.forEach(function(inputValue, weightIndex) {
    // Multiply each input by the weight of its connection...
    var layerValue = inputValue * weightVector[weightIndex];
    // ...and accumulate the sum
    result += layerValue;
  });
  // Returned as a string with two decimals, e.g. "0.00"
  return result.toFixed(2);
}
// It may look complicated, but all it does is match up weight/input pairs and add up the results
```

As expected, if we run this code, we get the same result as in our model or graph...

```javascript
evaluateNeuralNetwork(inputs, weights); // 0.00
```

Live example: Neural Net 001.

The next step in improving our neural network is a way to check its output, or resulting value, against the real situation. First, let's encode that reality in a variable:

To detect mismatches (and how large they are), we add an error function:

Error = Reality - Neural Net Output

With it, we can evaluate the performance of our neural network:

But more importantly, what about situations where reality gives a positive result?

Now we know that our neural network model doesn't work (and by how much). Great! It's great because we can now use the error function to guide our learning. But all this only makes sense if we redefine the error function as follows:

Error = Desired Output - Neural Net Output

A subtle but important difference: it implicitly says that we will use previously obtained results to compare against future actions (and, as we will see later, for training). Real life is full of repeating patterns, so this can become an evolutionary strategy (well, in most cases).
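The redefined error can be tried on every button at once. A minimal Python sketch, assuming the untrained all-zero weights and that the desired output for each button is whether it really gives food (1 only for the third); all names are hypothetical:

```python
REWARD = [0, 0, 1, 0]   # desired output for pressing buttons 1..4
weights = [0, 0, 0, 0]  # untrained network

def evaluate(inputs, weights):
    """Weighted sum of the inputs (the network's output)."""
    return sum(i * w for i, w in zip(inputs, weights))

def error(desired, actual):
    """Error = Desired Output - Neural Net Output."""
    return desired - actual

for button in range(4):
    inputs = [1 if i == button else 0 for i in range(4)]
    output = evaluate(inputs, weights)
    print(button + 1, output, error(REWARD[button], output))
# 1 0 0
# 2 0 0
# 3 0 1
# 4 0 0
```

Only the third button shows an error: the untrained network is wrong exactly in the case where reality gives a positive result.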

Next, we add a new variable to our sample code:

```javascript
var input = [0,0,1,0];
var weights = [0,0,0,0];
var desiredResult = 1;
```

And a new function:

```javascript
function evaluateNeuralNetError(desired, actual) {
  // Error = Desired Output - Neural Net Output
  return desired - actual;
}

// After evaluating both the Network and the Error we would get:
// "Neural Net output: 0.00 Error: 1"
```

Live example: Neural Net 002.

To sum up so far: we started with a task, built a simple model of it in the form of a biological neural network, and found a way to measure its performance against reality, or the desired result. Now we need a way to correct the discrepancy, a process that can be regarded as training for computers and people alike.

How do we train a neural network?

The basis of training both biological and artificial neural networks is repetition and learning algorithms, so we will look at each separately, starting with learning algorithms.

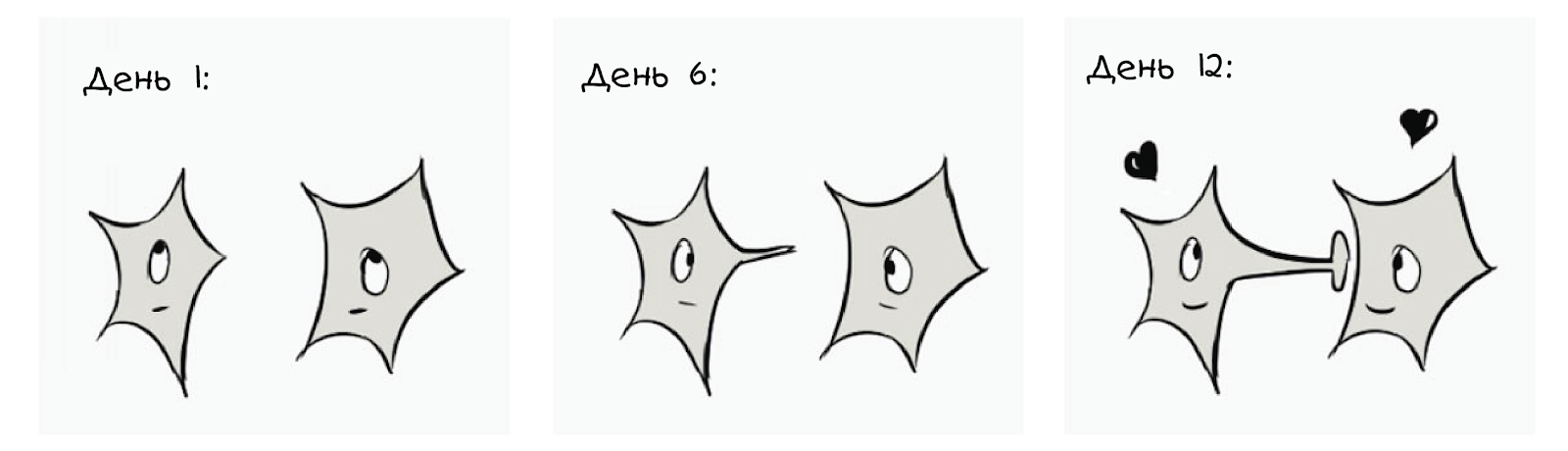

In nature, a learning algorithm means changes in the physical or chemical properties of neurons after an experience:

A dramatic illustration of how two neurons change over time.

In code and in our model, a "learning algorithm" simply means that we change something over time to make our lives easier. So let's add a variable that sets how much easier, that is, how fast learning happens:

```javascript
var learningRate = 0.20;
// The larger the value, the faster the learning :)
```

And what will it change?

It will change the weights (just like with the rabbit!), in particular the weight that leads to the output we want.

How you code such an algorithm is up to you. For simplicity, I add the learning rate to the weight; here it is as a function:

```javascript
function learn(inputVector, weightVector) {
  weightVector.forEach(function(weight, index, weights) {
    // Only the weights of active inputs (the pressed button) are changed
    if (inputVector[index] > 0) {
      weights[index] = weight + learningRate;
    }
  });
}
```

This training function simply adds the learning rate to the weight of each active input. Before and after one training round (or repetition), the results look like this:

```javascript
// Original weight vector: [0,0,0,0]
// Neural Net output: 0.00 Error: 1
learn(input, weights);
// New Weight vector: [0,0,0.20,0]
// Neural Net output: 0.20 Error: 0.8
// In case it isn't obvious: the network's output is getting closer to 1 (a chicken dinner),
// which is what we wanted, so we can conclude that we are moving in the right direction
```

Live example: Neural Net 003.

Okay, now that we are moving in the right direction, the last piece of the puzzle is repetition.

It's not that hard: in nature we simply do the same thing over and over, and in code we just specify the number of repetitions:

```javascript
var trials = 6;
```

Adding the repetitions to our training code looks like this:

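```javascript
function train(trials) {
  for (var i = 0; i < trials; i++) {
    // Evaluate first, then adjust the weights for the next repetition
    var neuralNetResult = evaluateNeuralNetwork(input, weights);
    learn(input, weights);
  }
}
```

And here is our final report: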

```
Neural Net output: 0.00 Error: 1.00 Weight Vector: [0,0,0,0]
Neural Net output: 0.20 Error: 0.80 Weight Vector: [0,0,0.2,0]
Neural Net output: 0.40 Error: 0.60 Weight Vector: [0,0,0.4,0]
Neural Net output: 0.60 Error: 0.40 Weight Vector: [0,0,0.6,0]
Neural Net output: 0.80 Error: 0.20 Weight Vector: [0,0,0.8,0]
Neural Net output: 1.00 Error: 0.00 Weight Vector: [0,0,1,0]
// Chicken Dinner!
```

Live example: Neural Net 004.
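How many repetitions are needed depends on the learning rate, as the comment next to `learningRate` hints. A minimal Python sketch, assuming the same add-the-learning-rate rule and counting learning steps until the third button's output reaches 1 (a small tolerance absorbs floating-point rounding); `trials_needed` is a hypothetical helper:

```python
def trials_needed(learning_rate, target=1.0, tolerance=1e-9):
    """Count learning steps until the third button's output reaches the target."""
    weight = 0.0  # weight of the third button; the other inputs are 0
    steps = 0
    while weight < target - tolerance:
        weight += learning_rate  # same rule as learn(): add the rate to the active weight
        steps += 1
    return steps

for rate in (0.2, 0.5, 1.0):
    print(rate, trials_needed(rate))
# 0.2 5
# 0.5 2
# 1.0 1
```

At 0.20 it takes five learning steps, which is why the sixth line of the report (evaluated after five updates) is the first to show 1.00.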

Now we have a weight vector that produces the desired result (chicken for dinner) only when the input vector matches reality (the third button is pressed).
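This is easy to check by pressing every button against the trained weights. A minimal Python sketch, assuming the weight vector from the last line of the report, [0, 0, 1, 0], and the same multiply-and-add evaluation as `evaluateNeuralNetwork` (without the string formatting):

```python
# Weight vector from the last line of the training report
weights = [0, 0, 1, 0]

def evaluate(inputs, weights):
    """Weighted sum of the inputs (the network's output)."""
    return sum(i * w for i, w in zip(inputs, weights))

for button in range(4):
    inputs = [1 if i == button else 0 for i in range(4)]
    print(button + 1, evaluate(inputs, weights))
# 1 0
# 2 0
# 3 1
# 4 0
```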

So what's so cool about what we just did?

In this particular case, our neural network (after training) can recognize the input and tell which one leads to the desired result (we would still need to program specific situations):

On top of that, it is a scalable model, a toy, and a learning tool for us. We were able to learn something new about machine learning, neural networks, and artificial intelligence.

Caveats:

- There is no mechanism for storing the learned weights, so this neural network will forget everything it knows. After a refresh or a rerun of the code, it needs at least six successful repetitions to be fully trained, and if you assume that a person or a machine will press the buttons at random... that will take a while.

- For learning important things, biological networks have a learning rate of 1, so only one successful repetition is needed.

- There is a learning algorithm that closely resembles biological neurons, and it has a catchy name: the Widrow-Hoff rule, or Widrow-Hoff learning.

- Neuron thresholds (1 in our example) and overtraining effects (with many repetitions the output will exceed 1) are not taken into account, yet they are very important in nature and are responsible for large, complex blocks of behavioral responses. The same goes for negative weights.
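Two of these caveats, overtraining and a learning rate of 1, can be seen in code alongside the Widrow-Hoff rule. The Widrow-Hoff rule (also known as the delta or LMS rule) scales each weight change by the error: w_i += rate * (desired - output) * x_i. A minimal Python sketch, assuming the third-button input, ten repetitions (more than the six the tutorial needs), and hypothetical function names:

```python
INPUTS = [0, 0, 1, 0]  # the third button is pressed
DESIRED = 1.0

def evaluate(inputs, weights):
    """Weighted sum of the inputs (the network's output)."""
    return sum(x * w for x, w in zip(inputs, weights))

def learn_fixed(inputs, weights, rate):
    """Tutorial rule: add the learning rate to every active weight."""
    for idx, x in enumerate(inputs):
        if x > 0:
            weights[idx] += rate

def learn_widrow_hoff(inputs, weights, rate):
    """Widrow-Hoff (delta) rule: change each weight in proportion to the error."""
    err = DESIRED - evaluate(inputs, weights)
    for idx, x in enumerate(inputs):
        weights[idx] += rate * err * x

def train(rule, rate, trials):
    """Apply `rule` for `trials` repetitions and return the final output."""
    weights = [0.0, 0.0, 0.0, 0.0]
    for _ in range(trials):
        rule(INPUTS, weights, rate)
    return evaluate(INPUTS, weights)

print(round(train(learn_fixed, 0.2, 10), 2))        # 2.0: overshoots the target of 1
print(round(train(learn_widrow_hoff, 0.2, 10), 2))  # 0.89: creeps toward 1, never past it
print(train(learn_widrow_hoff, 1.0, 1))             # 1.0: learning rate 1, one repetition
```

The error-driven rule slows down as the output nears the target, so extra repetitions do no harm; the price is that at a rate of 0.2 it only approaches 1 instead of reaching it exactly.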

Notes and further reading

I tried to avoid math and strict terminology, but if you are curious: we built a perceptron, which is defined as an algorithm for supervised learning (learning with a teacher) of binary classifiers. Heavy stuff.
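To connect this to the textbook definition, here is a minimal Python sketch of a classic perceptron on the same four-button task: a weighted sum plus a bias, passed through a step function, and trained with the perceptron rule w_i += rate * (desired - output) * x_i. Unlike the tutorial's `learn`, it trains on all four buttons and can lower weights as well as raise them. The learning rate of 0.2, the bias term, and all names are our own assumptions:

```python
LEARNING_RATE = 0.2
EXAMPLES = [([1, 0, 0, 0], 0), ([0, 1, 0, 0], 0),
            ([0, 0, 1, 0], 1), ([0, 0, 0, 1], 0)]  # (button pressed, food?)

def predict(x, weights, bias):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(xi * wi for xi, wi in zip(x, weights)) + bias > 0 else 0

def train_perceptron(examples, max_epochs=100):
    weights, bias = [0.0] * 4, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, desired in examples:
            err = desired - predict(x, weights, bias)
            if err != 0:
                mistakes += 1
                # Perceptron rule: nudge weights and bias toward the desired answer
                weights = [wi + LEARNING_RATE * err * xi for wi, xi in zip(weights, x)]
                bias += LEARNING_RATE * err
        if mistakes == 0:  # every example classified correctly
            break
    return weights, bias

weights, bias = train_perceptron(EXAMPLES)
print([predict(x, weights, bias) for x, _ in EXAMPLES])  # [0, 0, 1, 0]
```

In this run the fourth button ends up with a negative weight, which is how the perceptron cancels out the bias it picked up while learning the third button.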

The biology of the brain is not a simple topic, partly because of imprecision and partly because of its complexity. It's best to start with Neuroscience (Purves) and Cognitive Neuroscience (Gazzaniga). I modified and adapted the rabbit example from Gateway to Memory (Gluck), which is also a great guide to the world of graphs.

Another gorgeous resource, An Introduction to Neural Networks (Gurney), is suitable for all your AI-related needs.

And now in Python! Thanks to Ilya Andschmidt for the provided Python version:

```python
inputs = [0, 0, 1, 0]  # the third button is pressed, as in the JavaScript version
weights = [0, 0, 0, 0]
desired_result = 1
learning_rate = 0.2
trials = 6


def evaluate_neural_network(input_array, weight_array):
    # Multiply each input by its weight and add up the products
    result = 0
    for i in range(len(input_array)):
        layer_value = input_array[i] * weight_array[i]
        result += layer_value
    print("evaluate_neural_network: " + str(result))
    print("weights: " + str(weight_array))
    return result


def evaluate_error(desired, actual):
    error = desired - actual
    print("evaluate_error: " + str(error))
    return error


def learn(input_array, weight_array):
    # Add the learning rate to the weight of every active input
    print("learning...")
    for i in range(len(input_array)):
        if input_array[i] > 0:
            weight_array[i] += learning_rate


def train(trials):
    for i in range(trials):
        neural_net_result = evaluate_neural_network(inputs, weights)
        evaluate_error(desired_result, neural_net_result)
        learn(inputs, weights)


train(trials)
```

And now in Go! Thanks to Kieran Maher for this version:

```go
package main

import "fmt"

func main() {
	fmt.Println("Creating inputs and weights ...")
	inputs := []float64{0.00, 0.00, 1.00, 0.00}
	weights := []float64{0.00, 0.00, 0.00, 0.00}
	desired := 1.00
	learningRate := 0.20
	trials := 6
	train(trials, inputs, weights, desired, learningRate)
}

func train(trials int, inputs []float64, weights []float64, desired float64, learningRate float64) {
	// Evaluate, then learn: same order and number of trials as the JavaScript version
	for i := 0; i < trials; i++ {
		output := evaluate(inputs, weights)
		errorResult := evaluateError(desired, output)
		fmt.Printf("Output: %.2f\nError: %.2f\n\n", output, errorResult)
		weights = learn(inputs, weights, learningRate)
	}
}

// learn adds the learning rate to the weight of every active input.
func learn(inputVector []float64, weightVector []float64, learningRate float64) []float64 {
	for index, inputValue := range inputVector {
		if inputValue > 0.00 {
			weightVector[index] = weightVector[index] + learningRate
		}
	}
	return weightVector
}

// evaluate multiplies each input by its weight and sums the products.
func evaluate(inputVector []float64, weightVector []float64) float64 {
	result := 0.00
	for index, inputValue := range inputVector {
		layerValue := inputValue * weightVector[index]
		result = result + layerValue
	}
	return result
}

func evaluateError(desired float64, actual float64) float64 {
	return desired - actual
}
```