The development of graphics cards in the 2000s

This article continues the history of graphics card development begun in the previous one, covering the video adapters of the 2000s.

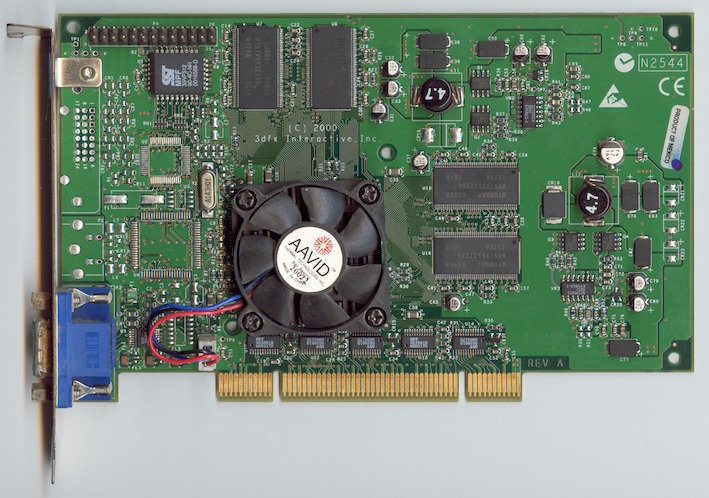

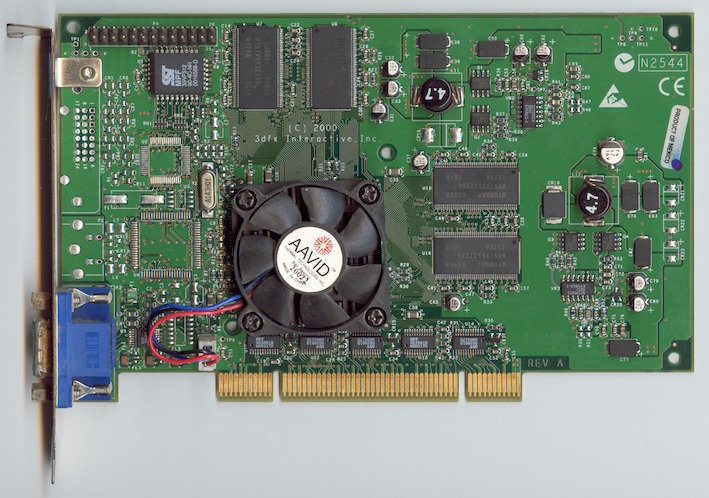

The VSA-100 chipset (Voodoo Scalable Architecture - Voodoo Scalable Architecture) was released by 3dfx in June 2000. The first video card to use this chip (VSA-100x2) was Voodoo5 5500. Manufactured using a 250-nm process technology with 14 million transistors. The SDRAM memory reached 64 MB, with a 128-bit bus. The frequency of the GPU and memory was 166 MHz. For the first time, Voodoo graphics cards supported 32-bit color in 3D, as well as textures with a high resolution of 2048x2048 pixels. For compression, the algorithms FXT1 and DXTC were used. A feature of the Voodoo5 5500 was its high performance when using anti-aliasing.

The video card was produced with different interfaces, such as AGP, PCI, etc. Also available was a version for the Macintosh, which has two connectors (DVI and VGA).

In the fall of the same year, 3dfx released the Voodoo4 4500 with 32 MB of memory using one VSA-100 chip. The model turned out to be rather slow and significantly inferior to the GeForce 2 MX and Radeon SDR.

3Dfx company announced the release of a productive Voodoo5 6000 video card on 4 VSA-100 chips and with 128 MB of memory. But it was not possible to finally implement the project - serious financial difficulties bankrupted 3Dfx.

In 2000-2001, NVIDIA released a series of GeForce 2 graphics cards (GTS, Ultra, Pro, MX, etc.). These video adapters had a 256-bit core - one of the most productive cores of the time.

The base model is the GeForce 2 GTS (GigaTexel Shading), codenamed NV15. This video card was manufactured according to the 180-nm process technology and contained 25 million transistors. The DDR SGRAM memory was 32 MB or 64 MB with a frequency of 200 MHz and a 128-bit bus. The adapter had 4 pixel pipelines. NV15 included full support for DirectX 7, OpenGL 1.2, as well as hardware processing of geometry and lighting (T&L).

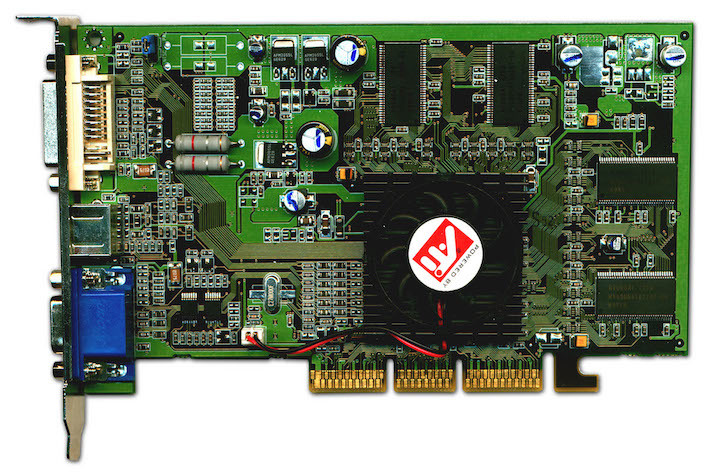

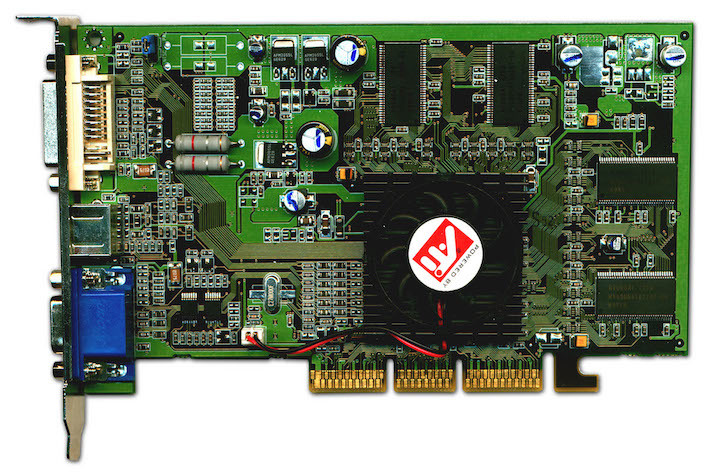

ATI kept pace with progress and in 2000 released the Radeon R100 processor (originally called Rage 6). It was manufactured using a 180-nm process technology and supported ATI HyperZ technology.

Based on the R100, Radeon DDR and SDR video cards were released.

Radeon DDR came with 32 MB or 64 MB of video memory. The core and memory frequencies were 183 MHz, a 128-bit bus was used. The interface was AGP 4x. The video card had 2 pixel pipelines.

A simplified version of SDR differed from Radeon DDR in the type of memory used and lower frequencies (166 MHz). The memory capacity of the Radeon SDR was provided only at 32 MB.

In 2001, two Radeon 8500 and Radeon 7500 chips were launched on the basis of the RV200.

The Radeon 8500 collected the latest ATI developments, and it turned out to be very fast. It was manufactured using a 150-nm process technology and contained 60 million transistors. The core and memory frequencies were 275 MHz. A 128-bit bus was used. The DDR SDRAM memory was offered in two versions: 64 MB and 128 MB. There were 4 pixel pipelines. The

Radeon 7500 was manufactured using the same 150-nm process technology, but with 30 million transistors. The core worked at a frequency of 290 MHz, and the memory at 230 MHz. There were 2 pixel conveyors.

In 2001, GeForce 3 GPUs codenamed NV20 came out. The processor was produced according to the 150-nm process technology. The amount of memory offered on 64 MB and 128 MB. The bus was 128-bit and consisted of four 32-bit controllers. The core worked at a frequency of 200 MHz, and the memory at a frequency of 230 MHz. There were 4 pixel conveyors. Productivity was 800 billion operations / sec. The memory bandwidth was 7.36 Gb / s.

The device supported nFinite FX Engine, allowing you to create a huge number of different special effects. There was an improved LMA (Lightspeed Memory Architecture) architecture.

The line of video cards consisted of modifications of the GeForce 3, GeForce 3 Ti 200 and Ti 500. They differed in clock speed, performance and memory bandwidth.

GeForce 3 Ti 200: 175 MHz core, 200 MHz memory; 700 billion operations / sec; 6.4 Gb / s bandwidth.

GeForce 3 Ti 500: 240 MHz core and 250 MHz memory; 960 billion operations / sec; 8.0 GB / s bandwidth.

The next NVIDIA graphics card was GeForce 4, which was released in 2002. Two types of graphic cards were issued with this name: high-performance Ti (Titanium) and budget MX.

The GeForce 4 Ti line was presented by the Ti 4400, Ti 4600, and Ti 4200 models. The video cards differed in the core and memory clock speeds. The amount of video memory was 128 MB (the Ti 4200 also offered an option for 64 MB). Titanium used a 128-bit 4-channel memory controller with LightSpeed Memory Architecture II, there were 4 rendering units, 8 texture units, 2 T&L, there was an Accuview anti-aliasing subsystem and the nFiniteFX II shader engine, which provides full support for DirectX 8.1 and OpenGL 1.3. The GeForce 4 Ti 4200 model was the most common due to its high performance at an affordable price.

The GeForce 4 MX inherited the GeForce 2 architecture (with increased speed). They were based on the NV17 chip, manufactured by the 150-nm process technology and consisting of 27 million transistors. The amount of video memory was 64 MB. The GPU had 2 rendering units, 4 texture units, 1 T&L unit, a 128-bit 2-channel memory controller with LightSpeed Memory Architecture II. The chip also had an Accuview anti-aliasing subsystem.

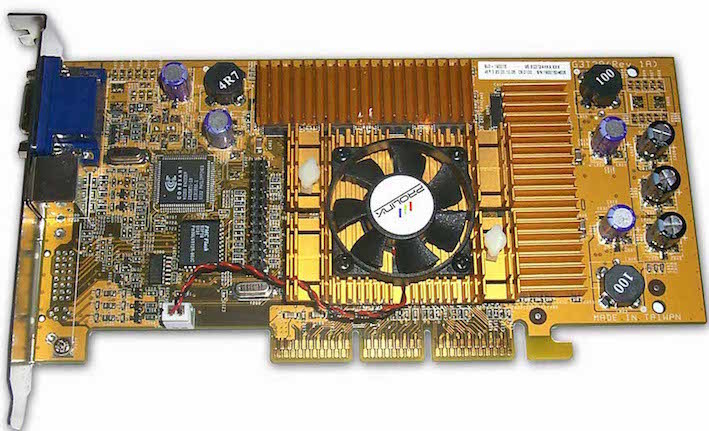

In the summer of 2002, ATI released the R300 chip, which was manufactured using the 150-nm process technology and contained about 110 million transistors. He had 8 pixel pipelines. The chip also supported improved anti-aliasing techniques.

Based on the R300, a Radeon 9700 graphics card with clock speeds of the 325 MHz core and 310 MHz memory was released. The memory capacity was 128 MB. The memory bus was a 256-bit DDR.

In early 2003, the Radeon 9700 was replaced by the Radeon 9800 graphics card. New solutions were built on the R350 chip, with an increase in clock speeds and the completion of shader units, a memory controller.

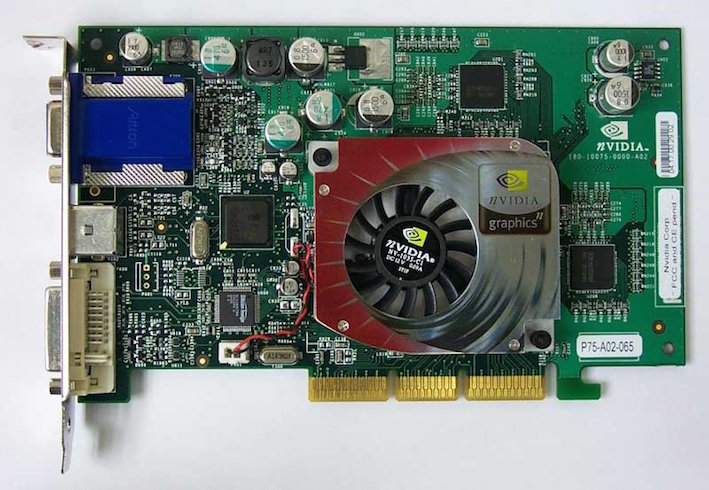

GeForce FX is the fifth generation of GPUs developed and released by NVIDIA from the end of 2002 to 2004. One of the first video cards in the GeForce FX series had advanced anti-aliasing and anisotropic filtering methods. It supported vertex and pixel shaders version 2.0. Thanks to 64-bit and 128-bit color representation, the quality of vivid images has improved. The NV30 chip was manufactured using a 130-nm process technology and worked with a 128-bit AGP 8x bus, supporting DDR2 memory.

GeForce FX was presented in various modifications: entry-level (5200, 5300, 5500), mid-range (5600, 5700, 5750), high-end (5800, 5900, 5950), enthusiast (5800 Ultra, 5900 Ultra, 5950 Ultra). The bus used was 126-bit and 256-bit.

On the basis of NV30, a new generation top-end device was created - the GeForce FX 5800 graphics card. The video memory reached 256 MB, the core frequency - 400 MHz, and the memory - 800 MHz. In the 5800 Ultra, the core frequency increased to 500 MHz, and the memory - up to 1000 MHz. The first NV30-based cards featured an innovative cooling system.

The development of video cards continued actively and in 2004 the next product of the company was released - GeForce 6 Series (code name NV40).

The NV40 chip was also produced according to the 130-nm process technology, which did not prevent it from becoming more economical. Modification of pixel pipelines made it possible to process up to 16 pixels per cycle. There were a total of 16 pixel pipelines. Video cards supported pixel and vertex shaders version 3.0, UltraShadow technology (rendering of shadows). In addition, the GeForce 6 Series decoded H.264, VC-1, and MPEG-2 video formats using PureVideo technology. The NV40 worked through a 256-bit bus, while using very fast memory modules such as GDDR3.

One of the first models, the GeForce 6800 graphics card was very productive and pulled the latest games of the time. It worked both through the AGP interface and through the PCI Express bus. The core frequency was 325 MHz, and the memory frequency was 700 MHz. The amount of memory reached 256 MB or 512 MB.

ATI was in a better position. In 2004, the company introduced the 130nm R420 chip (an advanced version of the R300). Pixel pipelines were divided into four blocks of four pipelines in each (a total of 16 pixel pipelines). The number of vertex pipelines increased to 6. Since the R420 did not support the work of third-generation shaders, it worked with the updated HyperZ HD technology.

The most powerful and productive video card in the new Radeon line was the X800 XT. The card was equipped with 256 MB GDDR3 memory and 256-bit bus capacity. The operating frequency reached 520 MHz in the core and 560 MHz in memory. The Radeon X800 XT was sold in two versions: AGP and PCI Express. In addition to the regular version, there was a Radeon X800 XT Platinum Edition with higher chip and memory frequencies.

In 2005, the G70 chip was released, which formed the basis of the GeForce 7800 series video cards. The number of transistors increased to 302 million.

The number of pixel pipelines doubled - up to 24 pieces. Additional ALUs have been added to each pipeline, responsible for processing the most popular pixel shaders. Thus, increased chip performance in games that focus on the performance of pixel processors.

The GeForce 7800 GTX was the first G70-based graphics card. The core frequency was 430 MHz, memory - 600 MHz. Fast GDDR3 was used, as well as a 256-bit bus. The memory capacity was 256 MB or 512 MB. The GeForce 7800 GTX worked exclusively through the PCI Express x16 interface, which finally began to displace the aging AGP.

The 2006 event for NVIDIA was the release of the first dual-chip GeForce 7950 graphics card, created using the 90-nm process technology. The NVidia 7950 GX2 had one G71 chip on each of the boards. The core of the video card worked at a frequency of 500 MHz, the memory at a frequency of 600 MHz. The amount of video memory type GDDR3 was 1 GB (512 MB per chip), a 256-bit bus.

The new card has optimized power consumption and improved the cooling system. The 7950 GX2 launched the development of Quad SLI technology, which allows the simultaneous use of the power of multiple graphics cards for processing three-dimensional images.

Based on the R520, the Radeon X1800 XT graphics card was developed. The card was equipped with a 256 MB or 512 MB GDDR3 memory operating at a frequency of 750 MHz. A 256-bit bus was used.

The Radeon X1800 XT graphics cards have not been on the market for long. Soon they were replaced by adapters of the Radeon X1900 XTX series based on the R580 chip. The processor fully supported at the hardware level specifications SM 3.0 (DirectX 9.0c) and HDR blending in FP16 format with the ability to share MSAA. The number of pixel pipelines was increased in the new chip - up to 48. The core frequency was 650 MHz, and the memory - 775 MHz.

Six months later, the R580 + chip came out with a new memory controller that works with the GDDR4 standard. The memory frequency was increased to 2000 MHz, while the bus remained 256-bit. The main characteristics of the chip remained the same: 48 pixel pipelines, 16 texture pipelines and 8 vertex pipelines. The core frequency was 625 MHz, the memory was more - 900 MHz.

In 2006, several video cards were released based on the G80 processor, the most powerful of which was the GeForce 8800 GTX. The G80 was one of the most complex existing chips of the time. It was produced using the 90-nm process technology and contained 681 million transistors. The core worked at a frequency of 575 MHz, the memory at a frequency of 900 MHz. The frequency of unified shader units was 1350 MHz. The GeForce 8800 GTX had 768 MB of GDDR3 video memory, and the bus width was 384-bit. New anti-aliasing methods were supported, which allowed the ROP units to work with HDR light in MSAA (Multisample anti-aliasing) mode. PureVideo technology has been developed.

The GeForce 8800 GTX architecture proved to be particularly efficient and for several years it has been one of the fastest graphics cards.

In 2007, the flagship Radeon HD2900 XT graphics card based on the R600 chip was introduced. The core frequency of the video card was 740 MHz, GDDR4 memory - 825 MHz. A 512-bit memory bus was used. The amount of video memory reached 512 MB and 1 GB.

A more successful development was the RV670 processor, released in the same year. It almost did not differ in architecture from its predecessor, but it was manufactured according to the 55-nm process technology and with a 256-bit memory bus. Support for DirectX 10.1 and Shader Model 4.1 has appeared. Based on the processor, video cards Radeon HD 3870 (core frequency 775 MHz, memory 1125 MHz) and Radeon HD 3850 (core frequency 670 MHz, memory 828 MHz) with a video memory capacity of 256 MB and 512 MB and a 256-bit bus were produced.

The G92 chip formed the basis of the GeForce 9800 GTX - one of the fastest and most affordable graphics cards. It was manufactured according to the 65-nm process technology. The core frequency was 675 MHz, the memory frequency was 1100 MHz, and the bus was 256-bit. The memory was offered in two versions: 512 MB and 1 GB. A bit later, the GTX + model appeared, which was distinguished by a 55-nm process technology and a core frequency of 738 MHz.

Another two-chip video card GeForce 9800 GX2 also appeared in this line. Each of the processors had specifications, like the GeForce 8800 GTS 512 MB, only with different frequencies.

In 2008, NVIDIA released the GT200 chip, which was used in the GeForce GTX 280 and GTX 260 graphics cards. The chip was manufactured using the 65-nm process technology and contained 1.4 billion transistors, had 32 ROPs and 80 texture units. The memory bus has increased to 512-bit. Support has also been added for the PhysX physics engine and the CUDA platform. The core frequency of the video card was 602 MHz, and the memory type GDDR3 - 1107 MHz.

The GeForce GTX 260 used the 448-bit GDDR3 bus. The core frequency reached 576 MHz, and the memory - 999 MHz.

The senior video card of the new line was called Radeon HD 4870. The core frequency was 750 MHz, and the memory worked at an effective frequency of 3600 MHz. With the new line of video cards, the company continued its new policy for the release of devices that could successfully compete in the Middle-End segment. So, the Radeon HD 4870 became a worthy competitor to the GeForce GTX 260 graphics card. And the leader of the HD 4000 line was soon taken by the next dual-chip solution Radeon HD 4870X2. The architecture of the video card itself corresponded to that of the Radeon HD 3870X2, not counting the presence of the Sideport interface, directly linking the two cores for the most rapid exchange of information.

In 2010, NVIDIA introduced the GF100 with the Fermi architecture, which formed the basis of the GeForce GTX 480 graphics card. The GF100 was manufactured using the 40nm process technology and received 512 stream processors. The core frequency was 700 MHz, and the memory - 1848 MHz. The bus width was 384-bit. The amount of video memory GDDR5 reached 1.5 GB.

The GF100 chip supported DirectX 11 and Shader Model 5.0, as well as the new NVIDIA Surround technology that allows you to deploy applications on three screens, thereby creating the effect of complete immersion.

AMD has released a 40nm Cypress chip. The developers of the company decided to change the approach and not use exclusively alphanumeric values. A generation of chips began to be assigned their own names. The very principle of Cypress architecture continued the ideas of the RV770, but the design was redesigned. The number of stream processors, texture units, and ROPs doubled. Support for DirectX 11 and Shader Model 5.0 has appeared. Cypress introduced new texture compression methods that allowed developers to use large textures. AMD also introduced the new Eyefinity technology, later NVIDIA Surround technology became its full counterpart.

The Cypress chip was implemented in a series of Radeon HD 5000 video cards. Soon, AMD also released a dual-chip solution Radeon HD 5970. In general, Cypress was very successful.

The Radeon HD 6000 series of video cards, released at the end of 2010, was designed to compete with the GeForce GTX 500 accelerators. The graphics cards were based on the Cayman chip. It used a slightly different VLIW4 architecture. The number of stream processors was 1536 pieces. The number of texture modules increased - there were 96 of them. Cayman was also able to work with the new Enhanced Quality AA smoothing algorithm. The memory bus width of the chip was 256-bit. Video cards used GDDR5 memory.

Since 2011, NVIDIA has released a generation of graphics accelerators. One of the notable models was the GeForce GTX 680 graphics card, based on the GK104 chip, which was produced using the 28-nm process technology. The core frequency is 1006 MHz, the memory frequency is 6008 MHz, the 256-bit GDDR5 bus.

In 2013, the company introduced the GK110 chip, which is based on the flagship GeForce GTX 780 and GeForce GTX Titan. The 384-bit GDDR5 bus was used, and the amount of memory increased to 6 GB.

VSA-100 and the new generation of Voodoo

The VSA-100 chipset (Voodoo Scalable Architecture) was released by 3dfx in June 2000. The first card to use it was the Voodoo5 5500, which carried two VSA-100 chips. The chip was manufactured on a 250-nm process and contained 14 million transistors. The card had up to 64 MB of SDRAM on a 128-bit bus, and both the GPU and the memory ran at 166 MHz. It was the first Voodoo card to support 32-bit color in 3D, as well as high-resolution textures of up to 2048x2048 pixels. Texture compression used the FXT1 and DXTC algorithms. A distinctive feature of the Voodoo5 5500 was its high performance with anti-aliasing enabled.

The card was produced with several interfaces, including AGP and PCI. There was also a Macintosh version with two connectors (DVI and VGA).

In the fall of the same year, 3dfx released the Voodoo4 4500, a single-VSA-100 card with 32 MB of memory. It turned out to be rather slow and was clearly outperformed by the GeForce 2 MX and the Radeon SDR.

3dfx also announced the high-performance Voodoo5 6000, with four VSA-100 chips and 128 MB of memory, but the project was never completed: serious financial difficulties drove 3dfx into bankruptcy.

GeForce 2

In 2000-2001, NVIDIA released the GeForce 2 series of graphics cards (GTS, Ultra, Pro, MX and others). These adapters had a 256-bit core, one of the most powerful of the time.

The base model was the GeForce 2 GTS (GigaTexel Shader), codenamed NV15. It was manufactured on a 180-nm process and contained 25 million transistors. The core ran at 200 MHz, and the 32 MB or 64 MB of DDR SGRAM ran at 166 MHz (333 MHz effective) on a 128-bit bus. The adapter had 4 pixel pipelines. NV15 fully supported DirectX 7 and OpenGL 1.2, as well as hardware transform and lighting (T&L).
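The "GigaTexel" in the GTS name can be illustrated with a peak texel fill-rate calculation. The peak rate is the number of texture units working per clock multiplied by the core clock. This is a minimal sketch. It assumes that each of the 4 pixel pipelines carried two texture units, which the text above does not state, and it uses 200 MHz as the core clock. The function name is illustrative.

```python
# Peak texel fill rate sketch for the GeForce 2 GTS (NV15).
# Assumptions (not from the article): 2 texture units per pipeline,
# 200 MHz core clock. Memory bandwidth limits are ignored.

def texel_fill_rate_gtexels(pipelines: int, tmus_per_pipeline: int, core_mhz: float) -> float:
    """Return the theoretical peak texel rate in gigatexels per second."""
    texels_per_clock = pipelines * tmus_per_pipeline  # texels produced each clock
    return texels_per_clock * core_mhz * 1e6 / 1e9    # MHz -> Hz, then -> giga

gts = texel_fill_rate_gtexels(pipelines=4, tmus_per_pipeline=2, core_mhz=200)
print(f"GeForce 2 GTS peak: {gts:.1f} Gtexel/s")  # prints "GeForce 2 GTS peak: 1.6 Gtexel/s"
```

Under these assumptions, the peak rate exceeds one billion texels per second, which is consistent with the "GigaTexel" naming. Real-world throughput would be lower whenever memory bandwidth became the bottleneck.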

Radeon DDR and SDR

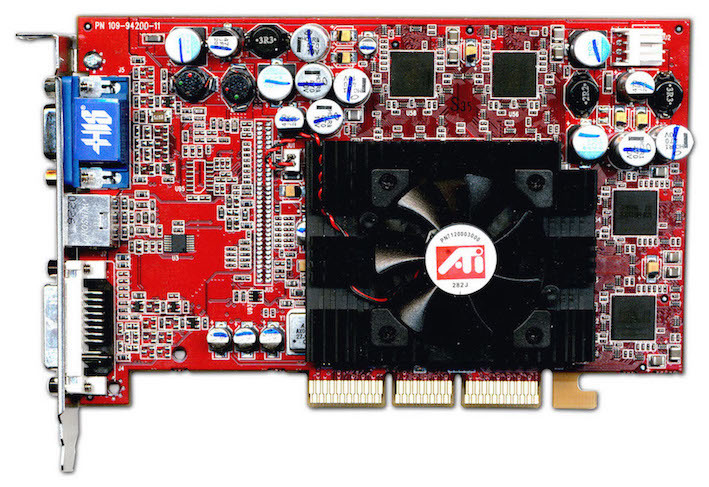

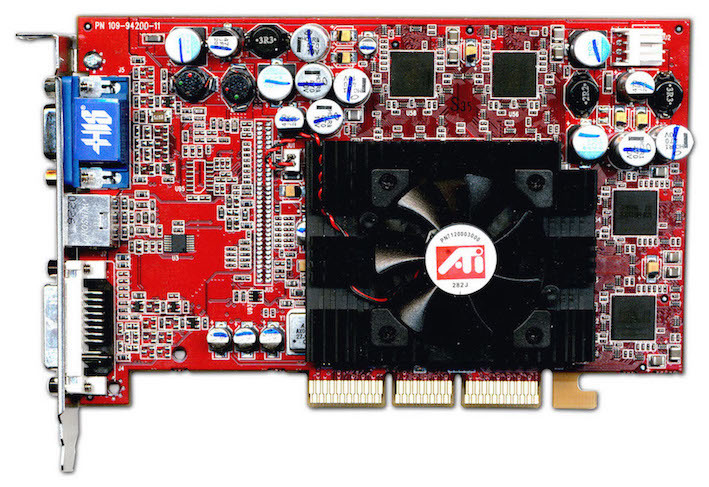

ATI kept pace, and in 2000 it released the Radeon R100 processor (originally called Rage 6). It was manufactured on a 180-nm process and supported ATI's HyperZ technology.

Two cards were based on the R100: the Radeon DDR and the Radeon SDR.

The Radeon DDR came with 32 MB or 64 MB of video memory. The core and memory both ran at 183 MHz on a 128-bit bus, and the interface was AGP 4x. The card had 2 pixel pipelines.

The simplified Radeon SDR differed from the Radeon DDR in its memory type and its lower frequencies (166 MHz). It was offered only with 32 MB of memory.

Radeon 8500 and Radeon 7500

In 2001, ATI launched two new cards: the Radeon 8500, based on the R200 chip, and the Radeon 7500, based on the RV200.

The Radeon 8500 combined ATI's latest developments and proved very fast. It was manufactured on a 150-nm process and contained 60 million transistors. The core and memory ran at 275 MHz on a 128-bit bus. The DDR SDRAM memory was offered in two sizes: 64 MB and 128 MB. There were 4 pixel pipelines.

The Radeon 7500 was manufactured on the same 150-nm process but had 30 million transistors. The core ran at 290 MHz and the memory at 230 MHz. There were 2 pixel pipelines.

GeForce 3

In 2001, the GeForce 3 GPU, codenamed NV20, came out. It was produced on a 150-nm process. Memory was offered in 64 MB and 128 MB sizes. The 128-bit bus consisted of four 32-bit controllers. The core ran at 200 MHz and the memory at 230 MHz. There were 4 pixel pipelines. Performance was rated at 800 billion operations per second, and memory bandwidth was 7.36 GB/s.

The chip featured the nFiniteFX engine, whose programmable vertex and pixel shaders let developers create a wide range of special effects. It also used an improved memory architecture called LMA (Lightspeed Memory Architecture).

The line consisted of the GeForce 3, GeForce 3 Ti 200 and GeForce 3 Ti 500. These models differed in clock speed, performance and memory bandwidth.

GeForce 3 Ti 200: 175 MHz core, 200 MHz memory; 700 billion operations/s; 6.4 GB/s bandwidth.

GeForce 3 Ti 500: 240 MHz core, 250 MHz memory; 960 billion operations/s; 8.0 GB/s bandwidth.
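The GeForce 3 figures above are consistent with two simple relations. First, memory bandwidth equals the bus width in bytes multiplied by the memory data rate. Second, the quoted operations figure scales linearly with core clock: 800 billion divided by 200 MHz gives 4000 operations per clock. The sketch below checks both relations across the three models. It assumes that the quoted memory clocks are real DDR clocks, with two transfers per clock, and it expresses bandwidth in decimal gigabytes (10^9 bytes). The constant and function names are illustrative.

```python
# Check the GeForce 3 family figures against simple arithmetic.
# Assumptions: 128-bit bus, DDR memory (2 transfers per clock),
# decimal GB/s, and a constant 4000 "operations" per core clock
# inferred from 800 billion ops / 200 MHz.

BUS_WIDTH_BITS = 128
DDR_TRANSFERS_PER_CLOCK = 2
OPS_PER_CORE_CLOCK = 4000

# name: (core clock MHz, memory clock MHz), as listed in the article
CARDS = {
    "GeForce 3": (200, 230),
    "GeForce 3 Ti 200": (175, 200),
    "GeForce 3 Ti 500": (240, 250),
}

def bandwidth_gb_s(mem_mhz: float, bus_bits: int = BUS_WIDTH_BITS,
                   transfers: int = DDR_TRANSFERS_PER_CLOCK) -> float:
    """Bytes per transfer times transfers per second, in GB/s."""
    bytes_per_transfer = bus_bits / 8
    return bytes_per_transfer * mem_mhz * transfers * 1e6 / 1e9

def ops_billions(core_mhz: float) -> float:
    """Rated operations per second, in billions, assuming linear clock scaling."""
    return OPS_PER_CORE_CLOCK * core_mhz * 1e6 / 1e9

for name, (core, mem) in CARDS.items():
    print(f"{name}: {ops_billions(core):.0f} billion ops/s, {bandwidth_gb_s(mem):.2f} GB/s")
```

Running the sketch reproduces the article's numbers: 800, 700 and 960 billion operations per second, and 7.36, 6.40 and 8.00 GB/s. This shows that the three models differed only in clock speeds, not in bus width.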

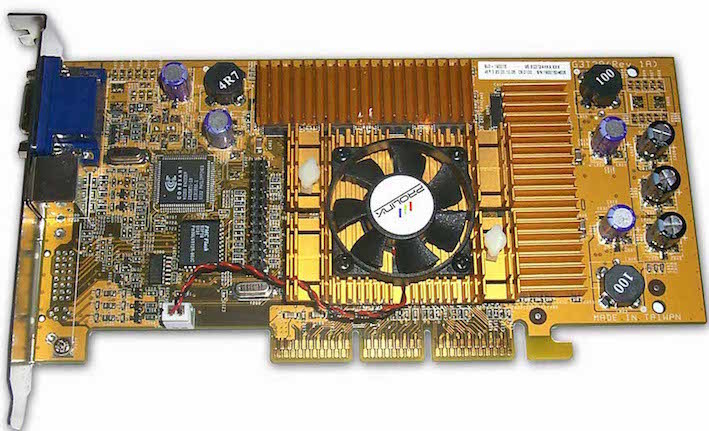

GeForce 4

NVIDIA's next graphics card was the GeForce 4, released in 2002. Two families were issued under this name: the high-performance Ti (Titanium) and the budget MX.

The GeForce 4 Ti line comprised the Ti 4400, Ti 4600 and Ti 4200, which differed in core and memory clock speeds. They carried 128 MB of video memory (the Ti 4200 was also available with 64 MB). The Titanium chips used a 128-bit, 4-channel memory controller with Lightspeed Memory Architecture II. They also had 4 rendering units, 8 texture units and 2 T&L (vertex) units, the Accuview anti-aliasing subsystem, and the nFiniteFX II shader engine, which provided full support for DirectX 8.1 and OpenGL 1.3. The Ti 4200 was the most popular model thanks to its high performance at an affordable price.

The GeForce 4 MX inherited the GeForce 2 architecture, running at higher speeds. These cards were based on the NV17 chip, manufactured on a 150-nm process with 27 million transistors. They had 64 MB of video memory. The GPU had 2 rendering units, 4 texture units, 1 T&L unit, and a 128-bit, 2-channel memory controller with Lightspeed Memory Architecture II. The chip also included the Accuview anti-aliasing subsystem.

Radeon 9700 Pro

In the summer of 2002, ATI released the R300 chip, manufactured on a 150-nm process with about 110 million transistors. It had 8 pixel pipelines and supported improved anti-aliasing techniques.

The R300 powered the Radeon 9700 Pro, with a 325 MHz core and 310 MHz memory. It had 128 MB of memory on a 256-bit DDR bus.

In early 2003, the Radeon 9700 was succeeded by the Radeon 9800. The new cards were built on the R350 chip, with higher clock speeds and refined shader units and memory controller.

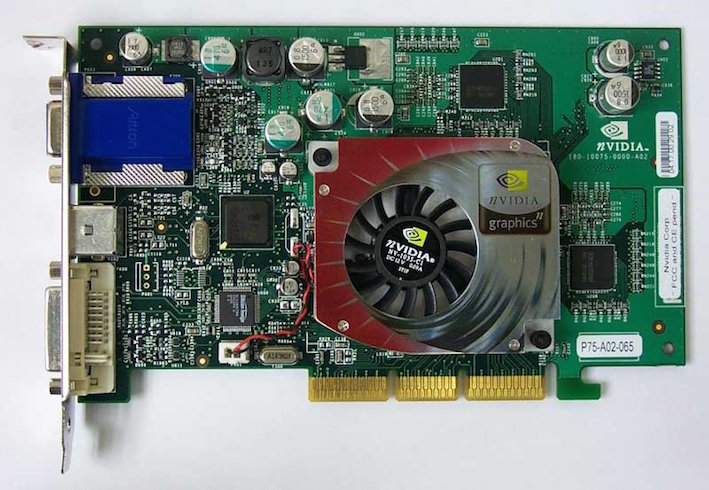

GeForce FX

GeForce FX is the fifth generation of GPUs developed and released by NVIDIA, from late 2002 to 2004. The first cards in the series brought advanced anti-aliasing and anisotropic filtering methods and supported version 2.0 vertex and pixel shaders. Support for 64-bit and 128-bit color precision improved image quality. The NV30 chip was manufactured on a 130-nm process. It used a 128-bit memory bus with DDR2 memory and connected to the system through AGP 8x.

The GeForce FX came in several tiers: entry-level (5200, 5300, 5500), mid-range (5600, 5700, 5750), high-end (5800, 5900, 5950) and enthusiast (5800 Ultra, 5900 Ultra, 5950 Ultra). Depending on the model, the memory bus was 128-bit or 256-bit.

The NV30 was the basis of a new top-end card, the GeForce FX 5800. It had up to 256 MB of video memory, a 400 MHz core and 800 MHz (effective) memory. The 5800 Ultra raised the core to 500 MHz and the memory to 1000 MHz. The first NV30-based cards featured an innovative cooling system.

GeForce 6 Series

Development continued apace, and in 2004 NVIDIA released its next product, the GeForce 6 Series (codenamed NV40).

The NV40 chip was also produced on a 130-nm process, but this did not stop it from being more economical. It had 16 reworked pixel pipelines, which allowed it to process up to 16 pixels per clock. The cards supported version 3.0 pixel and vertex shaders and UltraShadow technology for shadow rendering. In addition, the GeForce 6 Series decoded H.264, VC-1 and MPEG-2 video using PureVideo technology. The NV40 used a 256-bit memory bus and supported very fast memory such as GDDR3.

One of the first models, the GeForce 6800, was very fast and could handle the latest games of its time. It was available for both AGP and PCI Express. The core ran at 325 MHz and the memory at 700 MHz, and the card carried 256 MB or 512 MB of memory.

Radeon X800 XT

ATI was in a stronger position. In 2004, the company introduced the 130-nm R420 chip, an advanced version of the R300. Its pixel pipelines were divided into four blocks of four (16 in total), and the number of vertex pipelines increased to 6. The R420 did not support third-generation shaders (Shader Model 3.0), but it did gain the updated HyperZ HD technology.

The most powerful card in the new Radeon line was the X800 XT, equipped with 256 MB of GDDR3 memory on a 256-bit bus. It was sold in both AGP and PCI Express versions. Alongside the regular model there was the Radeon X800 XT Platinum Edition, with higher chip and memory frequencies that reached 520 MHz on the core and 560 MHz on the memory.

GeForce 7800 GTX

In 2005, NVIDIA released the G70 chip, which formed the basis of the GeForce 7800 series. Its transistor count rose to 302 million.

The number of pixel pipelines grew by half, from 16 to 24. Each pipeline gained additional ALUs for processing the most common pixel shader operations. This raised performance in games that depend heavily on pixel shading.

The GeForce 7800 GTX was the first G70-based card. The core ran at 430 MHz and the memory at 600 MHz. It used fast GDDR3 memory on a 256-bit bus, with 256 MB or 512 MB of capacity. The GeForce 7800 GTX worked exclusively through the PCI Express x16 interface, which was finally beginning to displace the aging AGP.

GeForce 7950 GX2

The key NVIDIA event of 2006 was the release of the dual-chip GeForce 7950 GX2, built on a 90-nm process. The 7950 GX2 carried one G71 chip on each of its two boards. The core ran at 500 MHz and the memory at 600 MHz. It had 1 GB of GDDR3 video memory (512 MB per chip), with a 256-bit bus per chip.

The card offered optimized power consumption and an improved cooling system. The 7950 GX2 also drove the development of Quad SLI, in which two such cards combine their four GPUs to render 3D images together.

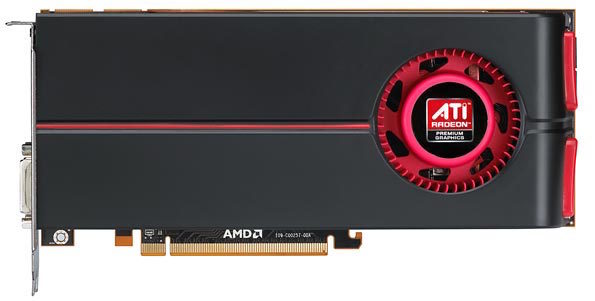

Radeon X1800 XT, X1900

The Radeon X1800 XT was built on the R520 chip. It was equipped with 256 MB or 512 MB of GDDR3 memory running at 750 MHz on a 256-bit bus.

The Radeon X1800 XT did not stay on the market long: it was soon replaced by the Radeon X1900 XTX series, based on the R580 chip. The R580 fully supported Shader Model 3.0 (DirectX 9.0c) in hardware, as well as FP16 HDR blending combined with MSAA. The new chip increased the number of pixel pipelines (pixel shader processors) to 48. The core ran at 650 MHz and the memory at 775 MHz.

Six months later came the R580+ chip, with a new memory controller supporting the GDDR4 standard. The effective memory frequency rose to 2000 MHz, while the bus remained 256-bit. The chip's main characteristics were unchanged: 48 pixel pipelines, 16 texture pipelines and 8 vertex pipelines. On some R580+ cards, the core ran at 625 MHz and the memory at 900 MHz.

GeForce 8800 GTX

In 2006, NVIDIA released several cards based on the G80 processor, the most powerful being the GeForce 8800 GTX. The G80 was one of the most complex chips of its time: it was produced on a 90-nm process and contained 681 million transistors. The core ran at 575 MHz, the memory at 900 MHz and the unified shader units at 1350 MHz. The GeForce 8800 GTX had 768 MB of GDDR3 video memory on a 384-bit bus. New anti-aliasing methods allowed the ROP units to apply MSAA (multisample anti-aliasing) to HDR rendering. PureVideo technology was also developed further.

The GeForce 8800 GTX architecture proved particularly efficient, and the card remained one of the fastest available for several years.
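The unusual 768 MB capacity follows from the 384-bit bus width, because each memory chip occupies a fixed slice of the bus. This sketch assumes 32-bit-wide GDDR3 chips of 512 Mbit (64 MB) each. That configuration is typical for the period, but the article does not state it. The constant and function names are illustrative.

```python
# Sketch: how bus width determines chip count and total memory.
# Assumption (not from the article): each GDDR3 chip is 32 bits wide
# and stores 512 Mbit (64 MB).

CHIP_WIDTH_BITS = 32
CHIP_CAPACITY_MB = 64

def chips_needed(bus_width_bits: int, chip_width_bits: int = CHIP_WIDTH_BITS) -> int:
    """Number of chips required to populate the full bus width."""
    if bus_width_bits % chip_width_bits:
        raise ValueError("bus width must be a multiple of the chip width")
    return bus_width_bits // chip_width_bits

def total_memory_mb(bus_width_bits: int, chip_capacity_mb: int = CHIP_CAPACITY_MB) -> int:
    """Total capacity when every chip on the bus has the same density."""
    return chips_needed(bus_width_bits) * chip_capacity_mb

print(chips_needed(384), total_memory_mb(384))  # prints "12 768"
print(chips_needed(256), total_memory_mb(256))  # prints "8 512"
```

On these assumptions, a 384-bit bus takes twelve chips rather than eight, so capacity lands on 768 MB instead of a power of two. Denser chips would scale the total without changing the bus.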

Radeon HD 2900 XT, HD 3870 and HD 3850

In 2007, the flagship Radeon HD 2900 XT, based on the R600 chip, was introduced. Its core ran at 740 MHz and its GDDR4 memory at 825 MHz on a 512-bit memory bus. Video memory was 512 MB or 1 GB.

A more successful design was the RV670 processor, released the same year. Architecturally it was almost identical to its predecessor, but it was manufactured on a 55-nm process and had a 256-bit memory bus. It added support for DirectX 10.1 and Shader Model 4.1. The RV670 powered the Radeon HD 3870 (775 MHz core, 1125 MHz memory) and the Radeon HD 3850 (670 MHz core, 828 MHz memory), both offered with 256 MB or 512 MB of video memory.

GeForce 9800

The G92 chip formed the basis of the GeForce 9800 GTX, one of the fastest and most affordable cards of its time. It was manufactured on a 65-nm process, with a 675 MHz core, 1100 MHz memory and a 256-bit bus. Memory came in two versions: 512 MB and 1 GB. A little later the GTX+ model appeared, built on a 55-nm process with a 738 MHz core.

The line also included another dual-chip card, the GeForce 9800 GX2. Each of its processors had the same specifications as the GeForce 8800 GTS 512 MB, but ran at different frequencies.

GeForce GTX 280 and GTX 260

In 2008, NVIDIA released the GT200 chip, used in the GeForce GTX 280 and GTX 260. It was manufactured on a 65-nm process, contained 1.4 billion transistors, and had 32 ROPs and 80 texture units. The memory bus widened to 512 bits. The cards also supported the PhysX physics engine and the CUDA platform. The GTX 280's core ran at 602 MHz and its GDDR3 memory at 1107 MHz.

The GeForce GTX 260 used a 448-bit GDDR3 bus, with its core at 576 MHz and its memory at 999 MHz.

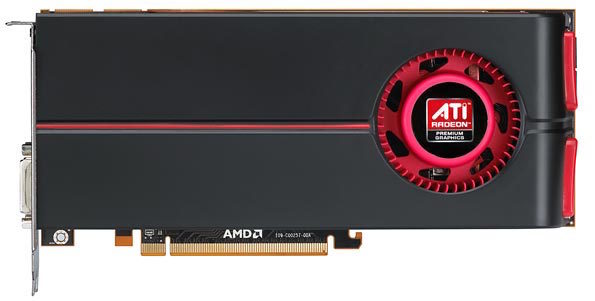

Radeon HD 4870

The senior card of AMD's new Radeon HD 4000 line was the Radeon HD 4870. Its core ran at 750 MHz, and its memory worked at an effective 3600 MHz. With this line, the company continued its new strategy of releasing cards that could compete successfully in the mid-range segment. As a result, the Radeon HD 4870 became a worthy rival to the GeForce GTX 260. The top spot in the HD 4000 line was soon taken by the dual-chip Radeon HD 4870 X2. Its architecture matched that of the Radeon HD 3870 X2, except for the Sideport interface, which linked the two cores directly for faster data exchange.
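The HD 4870 could rival the GTX 260 despite a much narrower bus, and the reason is memory bandwidth: GDDR5 moves more data per clock. This sketch makes two assumptions. First, the HD 4870's 3600 MHz effective rate corresponds to a 900 MHz memory clock with four transfers per clock. Second, the GTX 260's 999 MHz GDDR3 transfers twice per clock. The `Card` class is illustrative.

```python
# Sketch: comparing a 256-bit GDDR5 card with a 448-bit GDDR3 card.
# Assumptions: GDDR3 = 2 transfers per clock; GDDR5 treated as
# 4 transfers per quoted clock (900 MHz -> 3600 MHz effective).
from dataclasses import dataclass

@dataclass
class Card:
    name: str
    bus_width_bits: int
    memory_clock_mhz: float
    transfers_per_clock: int

    def effective_mhz(self) -> float:
        """Data rate per pin, in millions of transfers per second."""
        return self.memory_clock_mhz * self.transfers_per_clock

    def bandwidth_gb_s(self) -> float:
        """Bytes per transfer times transfers per second, in decimal GB/s."""
        return self.bus_width_bits / 8 * self.effective_mhz() * 1e6 / 1e9

hd4870 = Card("Radeon HD 4870", 256, 900, 4)
gtx260 = Card("GeForce GTX 260", 448, 999, 2)

for card in (hd4870, gtx260):
    print(f"{card.name}: {card.effective_mhz():.0f} MHz effective, "
          f"{card.bandwidth_gb_s():.1f} GB/s")
```

On these assumptions, the two cards land within a few percent of each other (about 115 GB/s versus 112 GB/s). The faster memory type compensates for a bus little more than half as wide.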

GeForce GTX 480

In 2010, NVIDIA introduced the GF100 chip, based on the Fermi architecture, which formed the basis of the GeForce GTX 480. The GF100 was manufactured on a 40-nm process and had 512 stream processors in its full configuration, of which 480 were enabled on the GTX 480. The core ran at 700 MHz and the memory at 1848 MHz on a 384-bit bus, with 1.5 GB of GDDR5 video memory.

The GF100 supported DirectX 11 and Shader Model 5.0. It also supported the new NVIDIA Surround technology, which spreads applications across three screens for a more immersive experience.

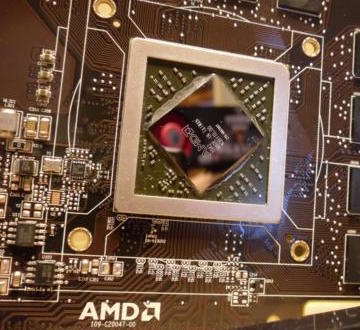

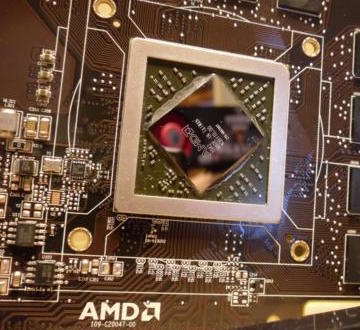

Cypress and Cayman chips

In 2009, AMD released the 40-nm Cypress chip. The company also changed its naming approach: instead of purely alphanumeric designations, each generation of chips was given its own names. The Cypress architecture continued the ideas of the RV770, but the design was reworked, and the number of stream processors, texture units and ROPs doubled. Support for DirectX 11 and Shader Model 5.0 appeared. Cypress also introduced new texture compression methods that let developers use large textures. In addition, AMD introduced its new Eyefinity multi-monitor technology, and NVIDIA Surround later became its direct counterpart.

Cypress powered the top cards of the Radeon HD 5000 series, and AMD soon also released the dual-chip Radeon HD 5970. Overall, Cypress was very successful.

The Radeon HD 6000 series, released at the end of 2010, was designed to compete with the GeForce GTX 500 accelerators. Its flagship cards were based on the Cayman chip, which used a slightly different VLIW4 architecture. Cayman had 1536 stream processors, and its texture unit count rose to 96. It also supported the new Enhanced Quality AA (EQAA) anti-aliasing algorithm. The chip had a 256-bit memory bus, and the cards used GDDR5 memory.

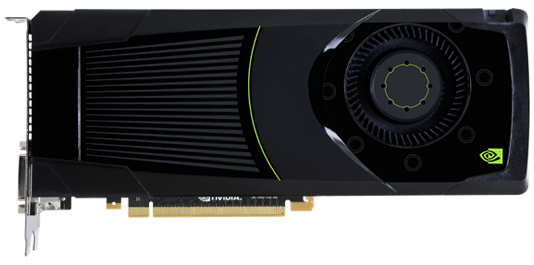

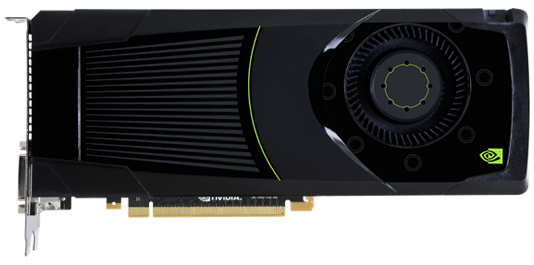

GeForce GTX 680

In 2012, NVIDIA released its Kepler generation of graphics accelerators. One notable model was the GeForce GTX 680, based on the GK104 chip and produced on a 28-nm process. Its core ran at 1006 MHz and its memory at an effective 6008 MHz on a 256-bit GDDR5 bus.

In 2013, the company introduced consumer cards based on the GK110 chip: the flagship GeForce GTX 780 and GeForce GTX Titan. They used a 384-bit GDDR5 bus, and memory grew to as much as 6 GB on the GTX Titan.