A tour of the Swedish Facebook data center near the Arctic Circle

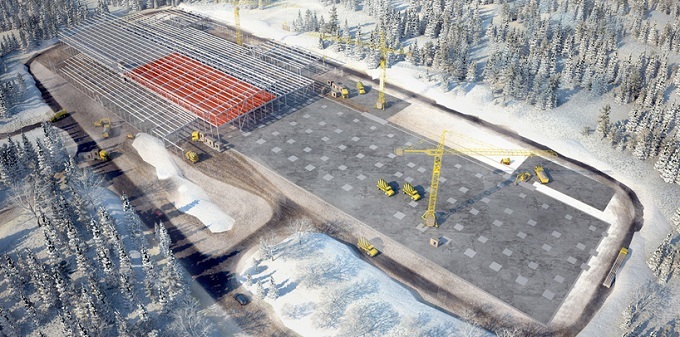

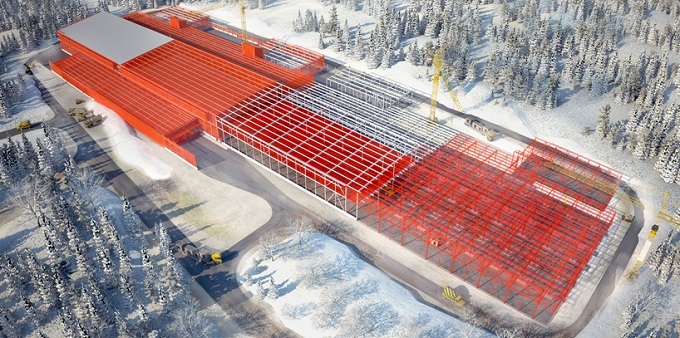

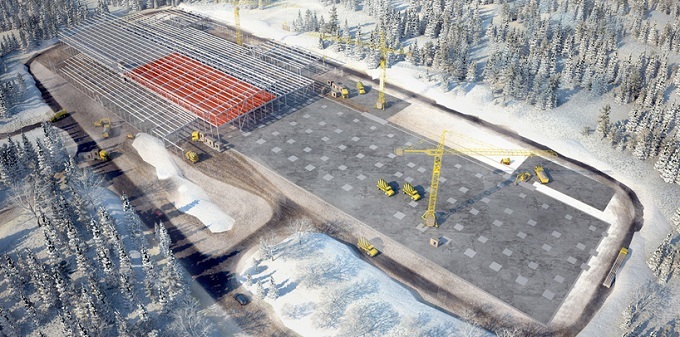

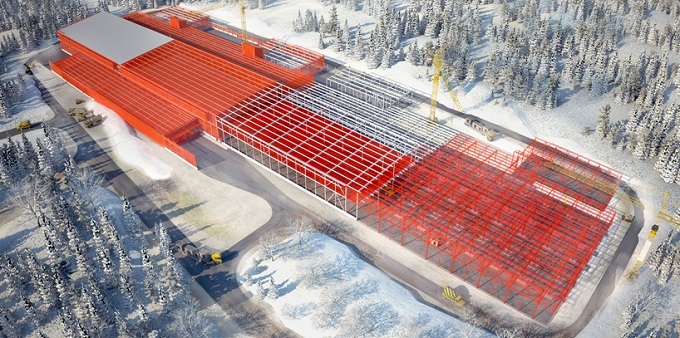

A little south of the Arctic Circle, in the middle of a forest on the outskirts of the Swedish town of Luleå, stands a server farm: a Facebook hyperscale data center. The facade of the gigantic building is clad in thousands of rectangular metal panels, and the building itself looks like a spaceship. The company's server farm in Luleå is huge: 300 meters wide and 100 meters long, roughly the size of four football fields.

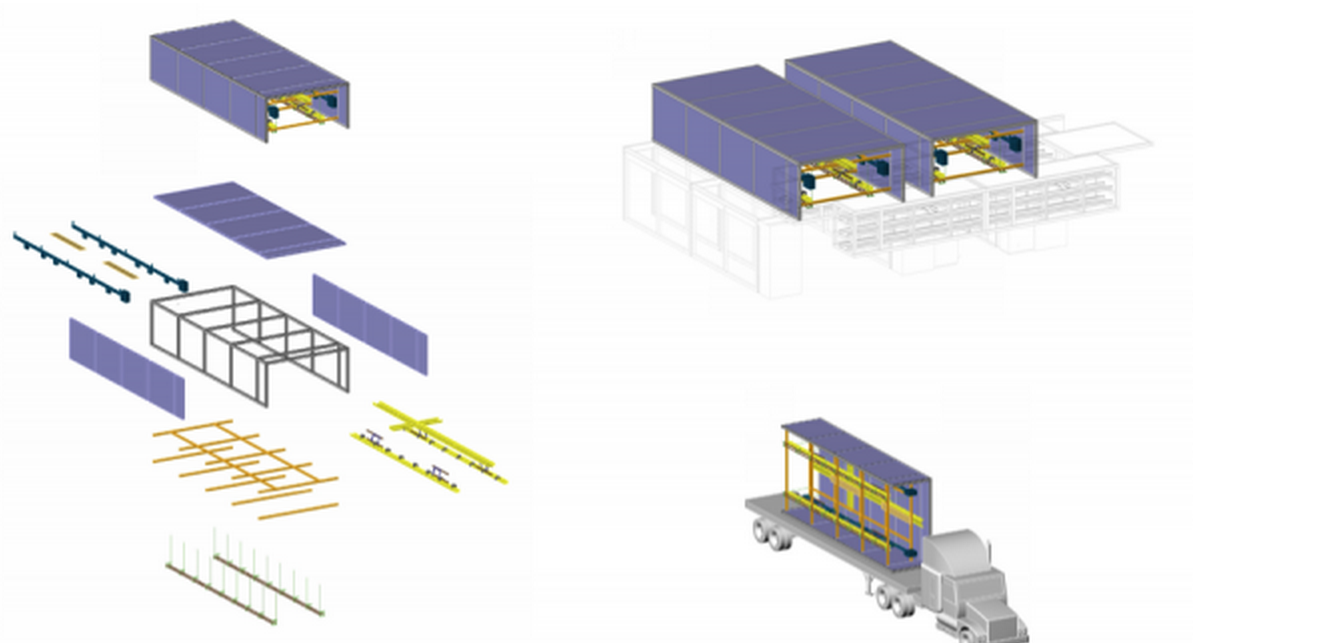

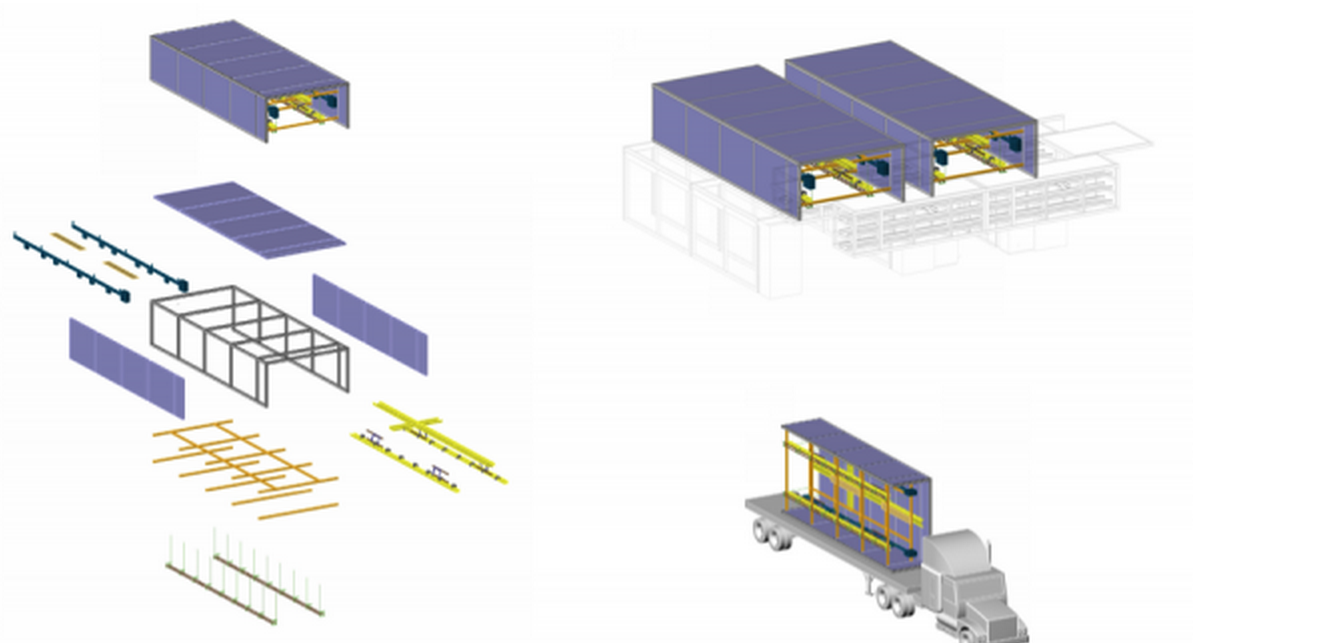

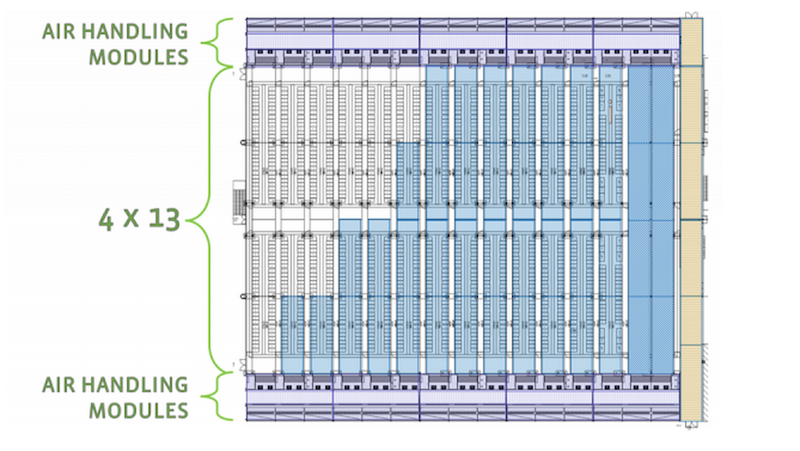

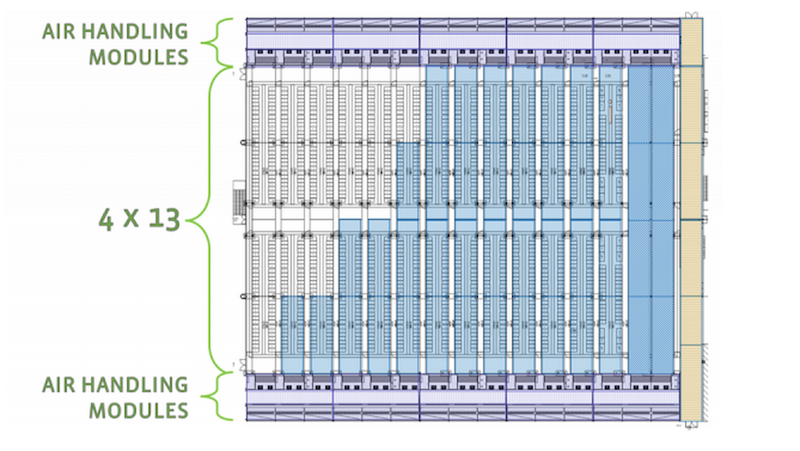

Interestingly, Facebook's data center project in Sweden came very close to failing at one point. A local ecologist argued that the new data center could harm birds, including the rare three-toed woodpecker that nests near the campus. Officials from the municipality of Luleå rejected the objection, however: the project was too attractive, bringing hundreds of millions of dollars of construction investment and many new jobs.

Facebook's server farm is one of the most energy-efficient computing facilities ever built. About a century ago, Sweden began building numerous hydropower plants to supply its steel and pulp-and-paper industries, and some of these hydroelectric stations were built in Luleå. They now power the Facebook data center, which, incidentally, consumes about as much electricity as a steel mill. According to Facebook engineers, the company aims to draw 50% of the power for its data centers from clean and renewable sources by 2018, and the Swedish campus has brought Facebook significantly closer to that goal. The campus also houses three powerful square-shaped diesel generators.

“So many hydropower plants are connected to the regional grid that generators are simply not needed,” said Jay Park, Facebook’s director of infrastructure development. According to Park, when connecting the substations to the new data center, Facebook engineers used a 2N redundancy scheme: electricity arrives from independent grids over different routes. One feed uses underground cables, the other overhead power lines.
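The 2N idea can be illustrated with a small check. This is a minimal sketch, not Facebook's actual design: it assumes "2N" means two independent feeds, each sized to carry the full load alone, and the `Feed` class, the function names, and the 100 MW load are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feed:
    name: str           # label only (hypothetical)
    capacity_mw: float  # power this feed can deliver on its own


def is_2n(feeds, load_mw):
    """Assumed definition: two independent feeds, each able to carry the full load."""
    return len(feeds) == 2 and all(f.capacity_mw >= load_mw for f in feeds)


def survives_single_failure(feeds, load_mw):
    """Return True if the load is still covered after losing any one feed."""
    if len(feeds) < 2:
        return False
    for failed in feeds:
        remaining = sum(f.capacity_mw for f in feeds if f is not failed)
        if remaining < load_mw:
            return False
    return True


load_mw = 100.0  # assumed load, not a figure from the article
feeds = [Feed("underground cable", 100.0), Feed("overhead line", 100.0)]
undersized = [Feed("feed A", 60.0), Feed("feed B", 60.0)]

print(is_2n(feeds, load_mw), survives_single_failure(feeds, load_mw))
print(is_2n(undersized, load_mw), survives_single_failure(undersized, load_mw))
```

The second pair of feeds covers the load only when both are healthy, which is exactly the situation a 2N layout is meant to rule out.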

The server farm helps Facebook process about 350 million photos, 4.5 billion likes and 10 billion messages per day. The company built the Swedish data center to improve service for European users, who generate more and more data. If you upload a selfie in London or post a status update in Paris, there is a high probability that your data will be stored in Luleå. In a conventional data center, one and a half to two watts go to electromechanical equipment and cooling systems for every watt of IT load. The Luleå data center is many times more efficient: its PUE (power usage effectiveness) is 1.04.
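To put the PUE figure in perspective, here is a small worked sketch. It uses the standard definition of PUE (total facility power divided by IT power). Reading the "one and a half to two watts" above as overhead on top of each IT watt is an assumption about the text, and the function names are illustrative.

```python
def pue(it_power_w: float, overhead_power_w: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_power_w <= 0:
        raise ValueError("IT power must be positive")
    return (it_power_w + overhead_power_w) / it_power_w


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts spent on cooling and power distribution per watt of IT load."""
    return pue_value - 1.0


# Conventional facility, assuming 1.5 to 2 W of overhead per IT watt.
conventional_low = pue(1.0, 1.5)
conventional_high = pue(1.0, 2.0)

# Luleå figure quoted in the article.
lulea_overhead = overhead_per_it_watt(1.04)

print(f"conventional PUE range: {conventional_low:.2f} to {conventional_high:.2f}")
print(f"Luleå overhead per IT watt: {lulea_overhead:.2f} W")
```

Under this reading, the overhead falls from 1.5 to 2 W per IT watt in a conventional facility to about 0.04 W in Luleå.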

Chassis concept

Facebook built the server farm around the "chassis" concept. Drawing on lessons from industries where automation and standardized production are widely used, the company's engineers arrived at the chassis: a base (in this case, a steel frame measuring roughly 3.6 m by 12.2 m) combined with an assembly line for the components that are delivered to the installation site.

Marco Magarelli, a data center design engineer at Facebook, explains:

“Building a data center also involves all the infrastructure that accompanies the server racks: cable trays, power lines, control and measurement units, and lighting. Our chassis already carries all of that infrastructure. With container solutions, you have to transport not only the equipment but also the ‘air’ inside the container. With this approach, only the basic structure is transported; it sits above the server racks and arrives ready to be connected.”

After assembly, the chassis is loaded onto a truck and transported directly to the server farm. Inside the building, the delivered assembly is mounted on prepared concrete posts.

Two connected chassis form an 18-meter aisle. A typical data hall accommodates 52 chassis, forming 13 aisles, including those used to cool the server assemblies.

The data center owes its high efficiency and environmental friendliness to its location. Swedish operators have cheap electricity from reliable hydroelectric stations at their disposal, as well as a cold climate that IT specialists can turn to their advantage: in winter, the temperature in the region averages around -20 °C. Instead of spending money on huge, energy-hungry air conditioners to cool tens of thousands of servers in Luleå, Facebook engineers use outside air. It is drawn into the data halls after being filtered and having its temperature and humidity adjusted, and this air cools the server systems. The new data center functions as one large mechanism.
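The article does not describe how the air temperature is adjusted. One common approach in free-cooling designs, assumed here purely for illustration, is to blend cold outside air with warm server exhaust until the mix reaches a target supply temperature. The sketch below uses a simple linear mixing model and ignores humidity; the function names and the temperatures (other than the -20 °C winter figure) are hypothetical.

```python
def outside_air_fraction(target_c: float, outside_c: float, return_c: float) -> float:
    """Share of outside air in the mix needed to hit the target temperature.

    The result is clamped to the range 0..1, so the target is only reached
    when it lies between the outside and return temperatures.
    """
    if return_c == outside_c:
        return 1.0  # both streams are at the same temperature; mixing changes nothing
    fraction = (return_c - target_c) / (return_c - outside_c)
    return max(0.0, min(1.0, fraction))


def mixed_temperature(fraction: float, outside_c: float, return_c: float) -> float:
    """Temperature of the blend, assuming equal heat capacity for both streams."""
    return fraction * outside_c + (1.0 - fraction) * return_c


# Winter case: -20 °C outside (from the article), 35 °C exhaust and 20 °C target (assumed).
winter_fraction = outside_air_fraction(20.0, -20.0, 35.0)
winter_supply = mixed_temperature(winter_fraction, -20.0, 35.0)

print(f"outside air share: {winter_fraction:.0%}, supply temperature: {winter_supply:.1f} °C")
```

In the winter case, roughly a quarter of the supply air needs to come from outside; the rest is recirculated exhaust, which keeps the servers from being hit with air that is far too cold.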

Inside are long corridors, numerous servers with flashing lights and the buzz of giant fans.

Thanks to its location, this northern data center can be cooled with outside air alone for eight months of the year, which makes it on average 40% cheaper to run than a comparable data center in the USA. Another important factor in Facebook’s decision to invest was that the American company received European Union subsidies of 10 million pounds for the construction. In addition, the region offered the lowest electricity prices in Europe.

Facebook engineers have simplified the server design by dropping a number of standard components, such as extra memory slots, cables and protective plastic cases. These servers, with their exposed motherboards, are housed in a standard rack the size of a refrigerator.

Experts say this design increases the air flow passing through each server. Such systems need less cooling capacity because, with fewer components, they give off less heat than standard servers. In addition, Facebook's machines can operate at elevated temperatures.

When Facebook engineers first took their ideas public, most data center experts were skeptical, especially about running servers at elevated temperatures. But, as they say, time has put everything in its place. Further improvement of infrastructure systems by the participants in the Open Compute Project initiative (besides Facebook, the project is being developed by many hardware and chip manufacturers, hosting and colocation providers, and banks far removed from the IT sector) has made it possible to use them far from the Arctic. Facebook decided to build its next major data center in Iowa (USA), where the climate is temperate. As in Sweden, there is more than enough cheap electricity from renewable sources there: in Iowa, wind farms play the role of the hydroelectric plants.

HP quickly responded to the social network's initiative by launching sales of a microserver called Moonshot, which is stripped of everything superfluous, is equipped with low-power chips and is highly energy efficient. The Moonshot release was the most radical change to the US company's server product line in recent years. HP is also working on ways to improve server energy efficiency through water cooling.

According to Cisco spokesman David McCulloch, the networking giant does not see the new trend as a threat to its business. In the view of the company's leadership, only a few companies will want to deal with such specialized systems, which were designed primarily to meet the needs of large Internet companies that own hyperscale data centers. Six years ago, another major US vendor, Dell, created a special team of engineers to design no-frills computer systems, which Internet companies went on to buy. Since then, Dell's revenue has grown significantly, which suggests that the vendor chose the right direction for its business.

Specialized hardware developed inside the web giants Google and Amazon is available only to those companies' own data center operators. Facebook's openness and its willingness to share best practices, by contrast, have fueled growing interest in its data centers, and not only from other Internet companies: even small and medium-sized businesses are actively following the social network's work on data center efficiency. Facebook has created step-by-step instructions for building a hyperscale server farm, which any company with enough man-hours and money can use.

Facebook top manager Frank Frankovsky

The board of directors of the non-profit Open Compute Project Foundation, led by Facebook top manager Frank Frankovsky, includes executives from giants of high technology and finance such as Intel and Goldman Sachs, respectively. Meanwhile, many large Asian hardware manufacturers, such as Quanta Computer and Tyan Computer, have already begun selling server systems based on specifications created by members of the Open Compute Project. The social network's initiative has proved genuinely popular.

Server farm campus

A long fence runs around the perimeter of the data center campus. It was erected to keep local moose out.

Inside the building, Swedish culture mixes with American. At the entrance you can see colorful pictures of deer next to the Facebook logo, along with clocks showing the time at the company's other campuses in the US states of North Carolina, Iowa and Oregon.

Most of the 150 staff on the Swedish campus are locals. Employees get to work on snowmobiles and on bicycles decorated with the Facebook logo. They also often spend time together, for example by going ice fishing.