Heterogeneous System Architecture, or where the CPU meets the GPU

For a long time, microelectronics developed under the motto "smaller and faster." Process nodes shrank, new instruction set extensions were added to the x86 architecture, and core clock frequencies climbed. When the growth of raw performance ran into economic and physical limits, various ways of parallelizing computation became popular. Alongside CPUs, which perform well in single-threaded and complex calculations, GPUs were evolving too: they can quickly execute a large number of similar, simple tasks that are a poor fit for conventional processors.

Today we are entering a new era in the development of the chips that do the computing at the heart of desktops, servers, mobile devices and wearable electronics. By combining the CPU and GPU approaches to information processing, we have developed a new, open architecture, without which keeping up with Moore's law looks difficult. Meet HSA - Heterogeneous System Architecture.

A minute of history

To fully understand in what ways HSA resembles the architecture of modern solutions and in what ways it surpasses them, let's look at the history. Even if we skip the decades of vacuum-tube technology and start from the 1950s, when transistors arrived in microelectronics, the story deserves an article of its own. We will go very briefly through the main milestones of the processor industry and touch only lightly on the history of video cards.

At the dawn of computer engineering, CPUs were fairly simple. In essence, every operation came down to adding binary numbers. When subtraction was required, so-called ones' complement codes were used: they were simple and mapped easily onto hardware, without the elaborate circuitry needed to implement "honest" subtraction. Programs were written separately for each computer model, until 1964 came.
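As a rough illustration of the complement trick described above, here is a minimal Python sketch of subtraction performed with nothing but bit inversion and addition. The function names, the 8-bit word width and the restriction to non-negative operands with `a >= b` are assumptions made for the example; real machines of the era differed in word size and in details.

```python
def ones_complement(x: int, bits: int) -> int:
    """Invert every bit of x within a fixed word width."""
    mask = (1 << bits) - 1
    return ~x & mask


def subtract_via_addition(a: int, b: int, bits: int = 8) -> int:
    """Compute a - b using only inversion and addition.

    Assumes 0 <= b <= a and that both values fit below the sign bit,
    as positive numbers do in a ones' complement word.
    """
    if not 0 <= b <= a < (1 << (bits - 1)):
        raise ValueError("operands must satisfy 0 <= b <= a < 2**(bits-1)")
    mask = (1 << bits) - 1
    total = a + ones_complement(b, bits)
    # End-around carry: a carry out of the top bit is added back into bit 0.
    if total > mask:
        total = (total & mask) + 1
    # All ones is the "negative zero" of ones' complement; report it as 0.
    return 0 if total == mask else total
```

For example, `subtract_via_addition(13, 5)` inverts 5 to get 250, adds 13 to reach 263, and folds the carry back in, leaving 8.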

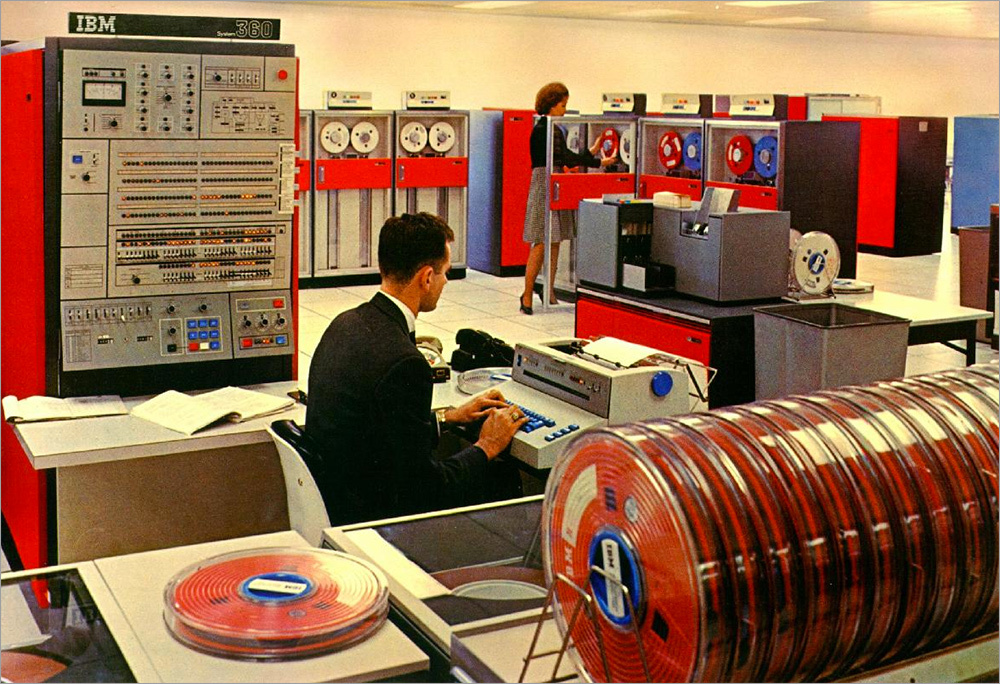

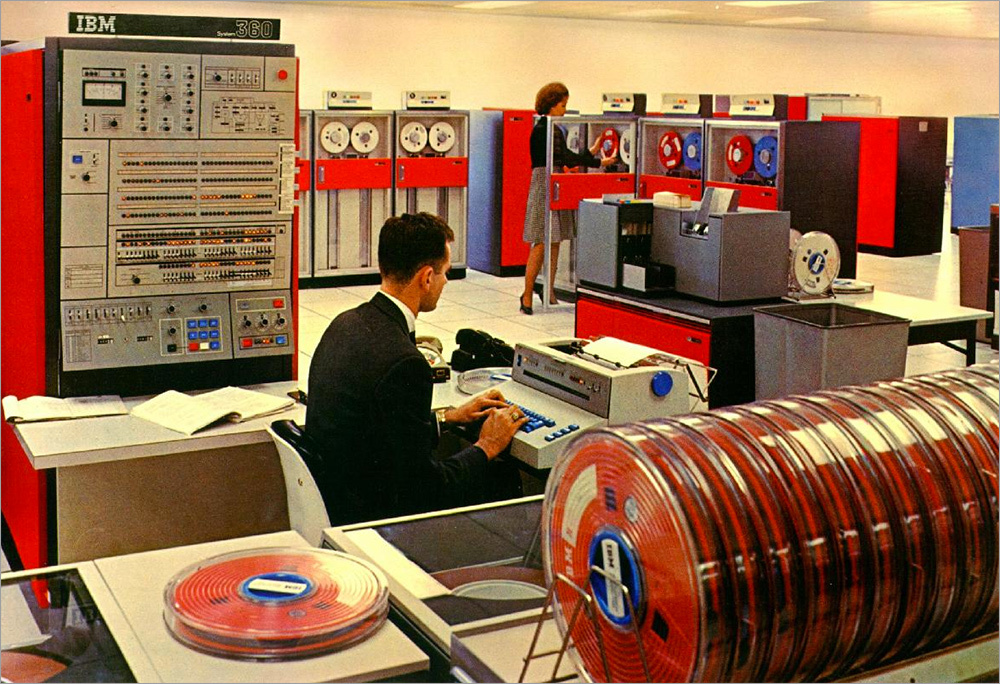

IBM System/360

In 1964, IBM released System/360, a computer that changed the way processors are designed. Most likely it was the machine that introduced the very concept of a system architecture, simply because before it there was no "system". Before System/360, every computer of the 50s and 60s ran only code written specifically for it. IBM, in contrast, developed the first instruction set that was supported across a whole range of configurations (and performance levels) sharing the same System/360 architecture. Incidentally, this was also the computer in which the byte first became 8-bit; before that, in almost all popular computers it consisted of six bits.

The second major innovation of the 60s came from DEC. Their PDP-8 computer used an architecture that was extremely simple for its time, with only four 12-bit registers and a little over 500 CPU blocks. These were the same "transistors" that would be counted in the thousands by the 1970s and in the billions by the 2000s. This simplicity, together with IBM's concept of an "instruction set", determined the direction in which computer technology would develop.

The microelectronics boom began in the 70s. It started with the production of the first single-chip processors: many companies first manufactured chips under license and then improved them, adding new instructions and expanding their capabilities.

By the mid-70s the 8-bit processor market was saturated, and towards the end of the decade affordable 16-bit solutions appeared, bringing with them the x86 architecture, which (albeit with significant improvements) is still alive and well. The main obstacle holding back 16-bit processors at the time was that manufacturers were locked into producing the so-called support chips for 8-bit architectures. Together, these chips made up what later came to be called the "north" and "south" bridges.

In the early 80s, tired of fighting market inertia, many manufacturers moved part of the "support chip" logic inside the processor itself. Later on, some functions would migrate from the processor to the motherboard chipset and back again, but even then such an architecture already somewhat resembled a modern SoC.

The middle and end of the 80s were marked by the transition to 32-bit memory addressing and 32-bit processor cores. Moore's law kept working as before: transistor counts grew, and clock frequencies and raw processor performance rose with them.

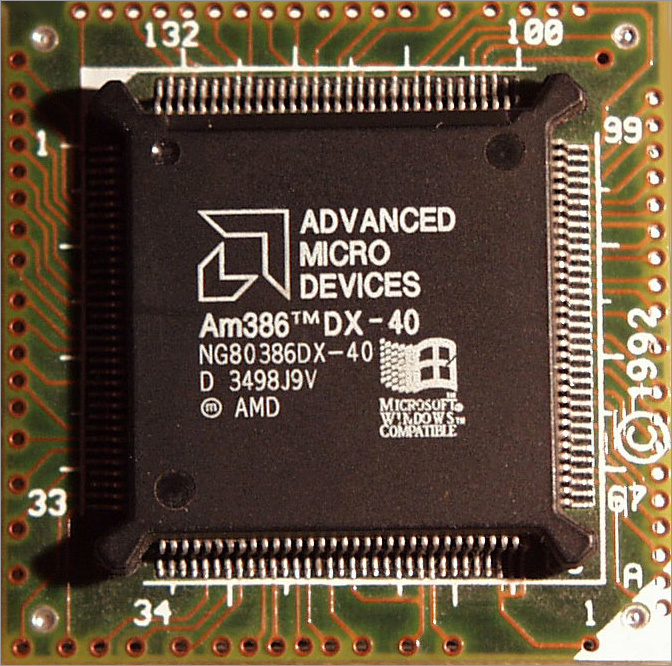

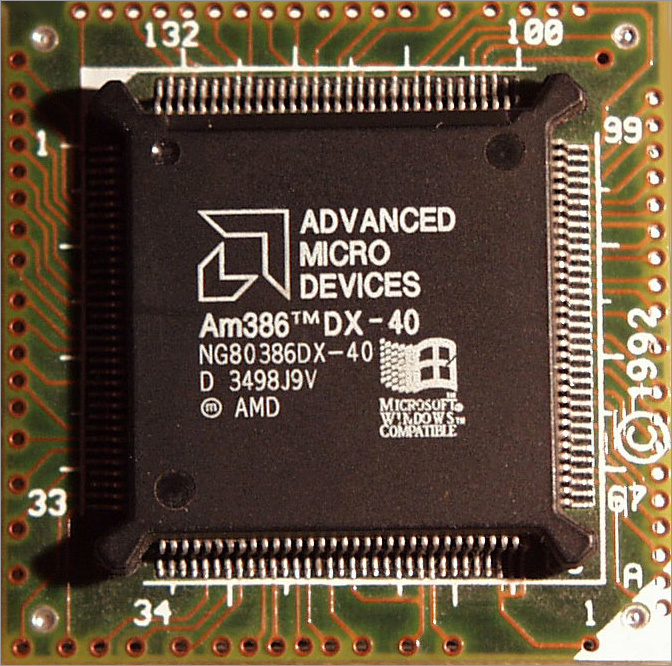

In 1991, the AMD Am386DX and Am386SX processors were released, with performance comparable to the next generation of systems (486). Many consider the Am386SX the company's first independent design and the starting point of almost 15 years of dominance in the market for high-performance home PCs and workstations. Yes, architecturally it was a clone of the i386SX chip, but it was built on a finer process, offered 35% better energy efficiency, ran at a higher clock frequency than its progenitor, and still cost less.

The nineties were rich both in microelectronics events and in rapid market growth. It was in the 90s that AMD came to be seen as one of the serious players, since in price-to-performance terms our processors often left the competing Intel Pentium behind, especially in the home segment. Extended instruction sets (MMX / 3DNow!), the arrival of a second-level cache, aggressive process shrinks, rising clock speeds... and suddenly the new millennium was at the door.

In 2000, AMD processors were the first in the world to cross the 1 GHz mark, and a little later the same K7 architecture reached a new height: 1.4 GHz.

At the end of 2003 we released new processors based on the K8 architecture, which brought three important innovations: 64-bit memory addressing, an integrated memory controller, and the HyperTransport bus, which provided amazing bandwidth for its time (up to 3.2 GB/s).

In 2005 the first dual-core processors appeared (Intel placed two separate dies on one substrate; AMD put two cores on a single die, each with its own cache).

After a couple of years of natural development, the K8 architecture was replaced by a new one, K10. The maximum number of cores per chip grew to six, and a shared third-level cache appeared. Further development was evolutionary rather than revolutionary: more megahertz, more cores, better optimization, lower power consumption, a finer manufacturing process, and improved internal units such as the branch predictor, memory controller and instruction decoder.

What matters for this article in the evolution of the GPU can be described very briefly. As computers spread as universal machines for work and entertainment at home, computer games grew in popularity. The capabilities of 3D graphics grew with them, demanding ever more muscle from video accelerators. Special microprograms, shaders, made it possible to implement realistic lighting in 3D graphics at relatively low cost. At first, shader processors were divided into vertex and pixel units (the former handled geometry, the latter textures); later came the unified shader architecture and, with it, universal shader processors able to execute both vertex and pixel shader code.

GPGPU, general-purpose computing on a graphics accelerator, became possible through the open OpenCL standard, which is based on a somewhat simplified dialect of C.

Since then, the only key GPU innovations worth mentioning are the low-level Mantle API (which gives access to AMD graphics cards at roughly the same level as access to the graphics accelerators inside the PS4 and Xbox One consoles) and the explosive growth of GPU memory capacity over the last two years.

That concludes the history; it is time to move on to the most interesting part: HSA.

What is HSA?

First of all, HSA is an open platform on which microelectronics manufacturers can build products (regardless of the instruction set they use) that comply with a set of shared principles and rules.

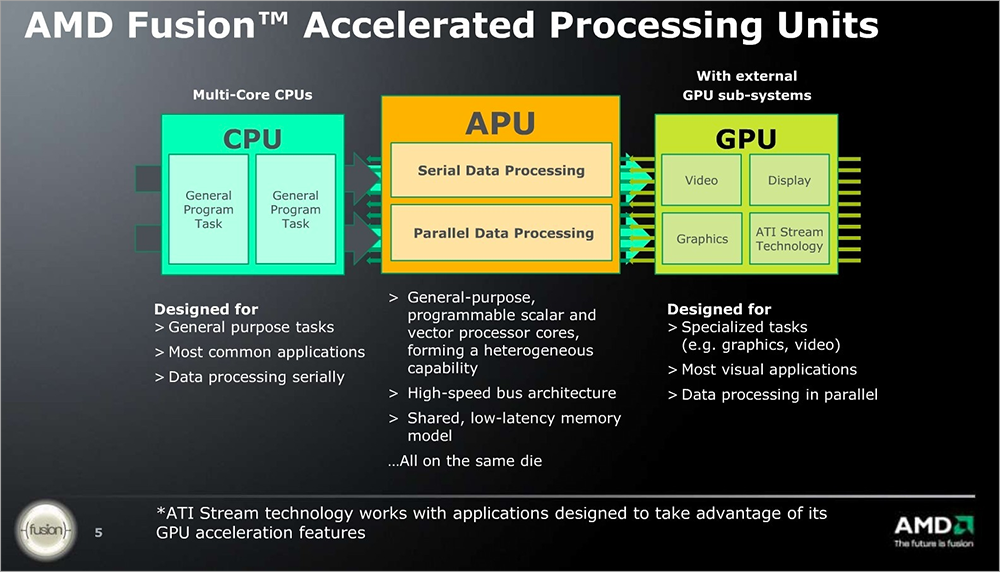

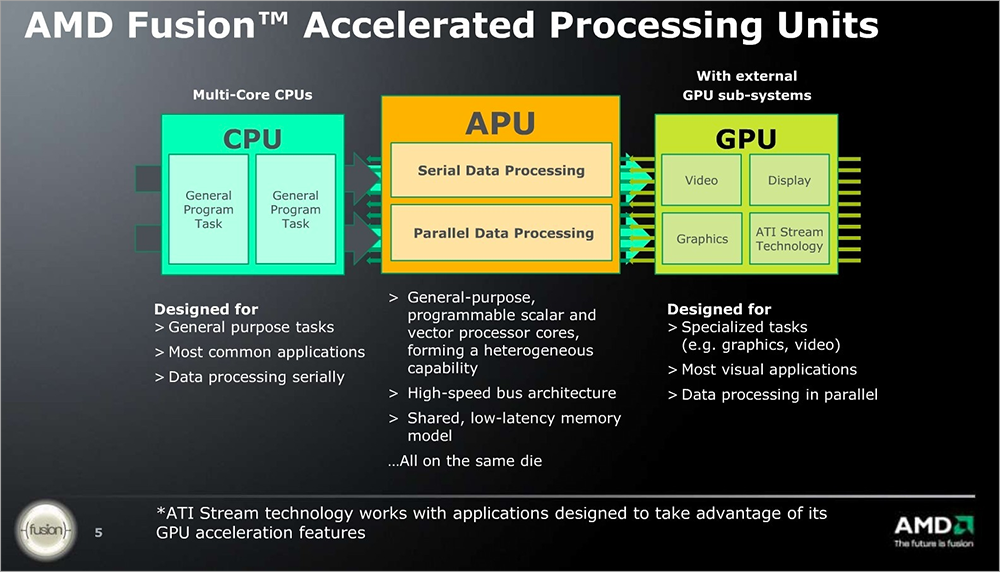

At the same time, HSA is a processor architecture that combines scalar computation on classic CPU cores, massively parallel computation on GPUs and signal processing on DSP modules, and ties them together through coherent access to RAM. In other words, the whole history of processor and video card design converges in HSA: almost 50 years of progress in microelectronics have led to a logical combination of the best aspects of different systems.

The development of the x86 architecture and of processors in general produced highly efficient processor modules that handle general-purpose tasks at low power.

The unification of shader processors inside the GPU core, and the general simplification of programming for systems with a huge number of units executing in parallel, opened the way to GPGPU and to using the processing power of video cards in areas that previously relied on dedicated hardware accelerators, which never won enough market share to stay afloat.

The integrated memory controller, PCIe bus and I/O system provide transparent memory access for the various HSA modules.

Finally, the built-in DSP takes load off the CPU and GPU when working with video and audio content, since it is designed in hardware specifically for encoding and decoding such signals.

Together these modules cover the whole spectrum of modern tasks, and HSA lets you teach programs, transparently and easily, to use the full range of available hardware through familiar tools such as Java and C++.

Reasons for creating HSA

Modern realities (the spread of wearable and mobile electronics, economic and environmental concerns) have shaped clear trends in microelectronics: lower energy consumption for every device, from smartphones to servers; higher performance; and better pattern recognition.

The first needs little explanation. Everyone wants a gadget that runs longer, is not as thick as a Big Mac and does not heat up like a frying pan on a stove. Data center owners already have plenty of trouble removing heat and providing uninterrupted power, and burdening them with extra watts of thermal output raises the cost of their services. More expensive hosting means more advertising on your favorite sites, which means more CPU load on your devices, higher power consumption and shorter battery life.

Higher performance is simply taken for granted today. People are used to processors breaking performance records every year since the 70s, to games becoming more beautiful and to systems and software growing more complex (without feeling any slower), while modern programming tools pile up more and more layers of hardware abstraction, each of which eats a share of real performance.

Finally, current work on intelligent and virtual assistants, and the prospects for artificial intelligence, simply demand decent recognition of human speech, facial expressions and gestures. That, in turn, requires both more raw performance and optimized decoding of audio and video streams.

All these problems could be solved by a universal hardware architecture implemented as an SoC that combines the strengths of classic CPUs and GPUs and intelligently distributes serial and parallel operations among the modules that execute them most efficiently.

But how do you teach software to work with all this computing splendor?

Key features of a heterogeneous system

Programmers should have no trouble accessing the computing capabilities of such a system. To that end, HSA has a number of key features that simplify software development and bring HSA closer to classic systems from the developer's point of view:

- Unified addressing for all processors;

- Full memory coherence;

- Operation in pageable memory;

- User-mode dispatch;

- Queue management at the architecture level;

- High-level language support for GPU compute processors;

- Context switching and preemptive multitasking.

The application developer does not need to know low-level programming languages: standard components, a simple intermediate language, interfaces and hardware are all available to the developer, while coherent memory and the way tasks are distributed among computing modules stay hidden "under the hood".

The role of unified memory addressing can hardly be overestimated. Strictly speaking, without it there would be no HSA. It does not matter where the data sits in memory or how many cores, modules and compute units you have. You pass a pointer and perform the computation instead of "shipping" bytes from one device to another. Cache load is reduced, and control of the processor itself is simplified. Abstracting memory at the platform level makes it possible to use the same code on different platforms, making life easier for software developers.
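The difference between "shipping" bytes and passing a pointer can be modelled in a few lines. The sketch below is only a conceptual model in plain Python, not HSA code: `scale_in_place` is a hypothetical stand-in for a GPU kernel, and `run_with_copies` and `run_with_shared_pointer` are illustrative names for the discrete-memory and unified-addressing cases. Each helper returns the number of bytes it had to move.

```python
from array import array


def scale_in_place(view, factor):
    """Stand-in for a GPU kernel: multiply every element by factor."""
    for i in range(len(view)):
        view[i] *= factor


def run_with_copies(host_data: array, factor: float) -> int:
    """Discrete-memory model: copy in, compute, copy the result back."""
    device_data = array(host_data.typecode, host_data)  # host -> device copy
    scale_in_place(device_data, factor)
    host_data[:] = device_data                          # device -> host copy
    return 2 * len(host_data) * host_data.itemsize


def run_with_shared_pointer(host_data: array, factor: float) -> int:
    """Unified-addressing model: the kernel works on the caller's buffer."""
    view = memoryview(host_data)  # a reference to the same memory, no copy
    scale_in_place(view, factor)
    view.release()
    return 0
```

Both helpers leave the same result in `host_data`; the only difference in this model is the traffic. For three 8-byte values the first moves 48 bytes and the second moves none.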

Difficulties of OpenCL and C++ AMP

Programming for parallel systems is not easy. To make life easier for developers, we created the Bolt library, which provides efficient implementations of the most commonly used parallel patterns: sorting, reduction, scanning and transforming data.

To speed up Java code without rewriting it in OpenCL, there is the open source Aparapi library, which converts Java bytecode to OpenCL and supports parallel execution on CPU and GPU cores.

In the future, Aparapi is to be developed further: first it will be tied to HSAIL, then a dedicated optimizer will be added. Ultimately, HSA should provide heterogeneous acceleration directly through the Java virtual machine, transparently to both the user and the programmer.
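For readers unfamiliar with the four patterns named above, the following sketch shows what each one computes. It is not Bolt code (Bolt is a C++ template library); it uses only Python standard-library calls that run sequentially, and the function name `four_patterns` is made up for this example.

```python
from functools import reduce
from itertools import accumulate
import operator


def four_patterns(data):
    """Return the result of each pattern applied to a list of numbers."""
    return {
        # Sort: reorder the elements into ascending order.
        "sort": sorted(data),
        # Reduce: collapse all elements into one value (here, their sum).
        "reduce": reduce(operator.add, data, 0),
        # Scan: running totals, i.e. an inclusive prefix sum.
        "scan": list(accumulate(data)),
        # Transform: apply the same function to every element independently.
        "transform": [x * x for x in data],
    }
```

Calling `four_patterns([5, 3, 8, 1])` gives `[1, 3, 5, 8]` for the sort, `17` for the reduction, `[5, 8, 16, 17]` for the scan and `[25, 9, 64, 1]` for the transform. A parallel library's job is to produce the same results while spreading the work across many compute units.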

Open standard and hardware independence

As already mentioned, HSA is an open platform. APIs and specifications are available free of charge, and HSA itself does not depend on the instruction set of the CPU or GPU.

To ensure compatibility between hardware from different vendors, we created our own virtual instruction set, HSAIL (HSA Intermediate Language), which lets software run regardless of what is inside a particular HSA solution. HSAIL supports exceptions, virtual functions and the memory models of modern languages, so no problems are expected with C++, Java and .NET. Developers can either access the hardware directly or use ready-made HSA optimization libraries, which distribute tasks and handle communication with the hardware on their own, simplifying the programmer's work.

Hardware component

HSA does not live by AMD alone. What matters to developers is that code written once works equally well on different devices. Some rely on high-level languages such as C++ or Java for this, but we suggest working at a lower level. On the one hand, classic applications can run on HSA devices as if nothing had changed: operating systems will give old applications clear and simple access to the processor, memory and video core. On the other hand, HSAIL lets you extract the full power of the new SoCs, so developers can create high-performance, resource-efficient applications as easily as for classic OS and hardware combinations.

The HSA Foundation currently has seven founding members: AMD, ARM, Imagination Technologies, MediaTek, Texas Instruments, Samsung Electronics and Qualcomm®.

Standardizing, through HSAIL, how tasks are distributed across compute cores, how data and memory pointers are passed, and how the main platform elements are used lets vendors apply their hardware expertise, while developers need not worry about what is under the hood. Applications built for HSAIL will run on any HSA-compliant platform.

A heterogeneous architecture lets you combine the capabilities of the CPU and GPU, and that is its main advantage. At the same time, HSA is careful with energy: it does not load the processor with calculations that run far more efficiently on the GPU.

Rigid-body physics simulation is used everywhere today, from computer games and 3D packages to CAD and simulators for doctors, the military and athletes. Running such a system on the CPU is often suboptimal, because it requires numerous but simple calculations, and once the number of interacting objects reaches the hundreds or thousands, classic CPUs simply cannot deliver the required volume of computation. The GPU architecture, by contrast, suits such calculations perfectly. Unified addressing, pageable memory and full coherence let you move the computation to the appropriate hardware with minimal overhead and developer effort.
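To make "numerous but simple calculations" concrete, here is a deliberately tiny sketch of one simulation step. It is an assumption-laden toy, not a real physics engine: bodies move only vertically, the only collision is with a floor at `y = 0`, and the names `Body`, `step_body` and `step_world` are invented for the example. The point is that each body's update depends only on that body, which is exactly the shape of work that maps well onto many GPU lanes.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, acting along the y axis


@dataclass
class Body:
    y: float   # height above the floor, in metres
    vy: float  # vertical velocity, in m/s


def step_body(body: Body, dt: float) -> Body:
    """Advance one body by dt seconds (semi-implicit Euler)."""
    vy = body.vy + GRAVITY * dt
    y = body.y + vy * dt
    if y < 0.0:
        # Perfectly elastic bounce: reflect position and velocity at the floor.
        y, vy = -y, -vy
    return Body(y, vy)


def step_world(bodies, dt):
    """Update every body; no update reads another body's state."""
    return [step_body(b, dt) for b in bodies]
```

On a CPU the list comprehension in `step_world` runs one body at a time. Because the iterations are independent, a data-parallel device could in principle process all of them at once.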

On general tasks, HSA often outperforms both classic CPUs and the CPU + GPU combination, because no matter how good the drivers are, copying data from shared memory to GPU memory and sending the results back can take longer than the computation itself.

HSA is already faster than classic systems, but it can do even better. In fact, the system's only current drawback is its novelty. New hardware reaches the market slowly in unfavorable economic conditions, so the popularity of HSA is only beginning to grow, and not as fast as we would all like. As soon as developers get a taste for it, appreciate the advantages of HSA and how simple it is to develop for, and begin to support the heterogeneous architecture natively in their applications, we will see a rise of new high-performance applications for servers, classic computers and mobile devices.
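The claim that copying can cost more than computing is easy to check with back-of-the-envelope arithmetic. The model below is a simplification, and every number in it is hypothetical: the function name `offload_seconds`, the 8 GB/s bus, the 64 MiB buffers and the 5 ms of GPU work are all assumptions chosen only to show the shape of the calculation.

```python
def offload_seconds(bytes_in, bytes_out, bus_bytes_per_s, compute_s, shared_memory):
    """Estimate wall time for one offloaded task under a very simple model."""
    if shared_memory:
        copy_s = 0.0  # the GPU reads the data where it already is
    else:
        copy_s = (bytes_in + bytes_out) / bus_bytes_per_s
    return copy_s + compute_s


# Hypothetical workload: 64 MiB in, 64 MiB out, 8 GB/s bus, 5 ms of GPU work.
MIB = 1024 * 1024
discrete = offload_seconds(64 * MIB, 64 * MIB, 8e9, 0.005, shared_memory=False)
shared = offload_seconds(64 * MIB, 64 * MIB, 8e9, 0.005, shared_memory=True)
```

With these made-up figures the two copies alone take roughly 17 ms, more than three times the 5 ms of actual computation, so the shared-memory case finishes in about a quarter of the time. Real systems overlap transfers with computation and have other overheads, so treat this only as an intuition aid.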

Today we are entering a new era in the development of chips that are responsible for computing in the hearts of desktops, servers, mobile devices and wearable electronics. By combining the approaches to information processing on the CPU and GPU, we developed a new, open architecture, without which the further implementation of the same Moore’s law seems difficult. Meet HSA - Heterogeneous System Architecture.

Minute of history

In order to fully understand how HSA, on the one hand, is close, and on the other hand, it surpasses the architecture of modern solutions, let's look at the history. Even if we discard decades of lamp technology, and start from the 1950s, from the moment transistors appeared in microelectronics, the story is such that you can write a separate article. Let's go through very briefly the main "milestones" of the processor industry, and very little on the history of video cards.

At the dawn of computer engineering, CPUs were fairly simple. In fact, all operations came down to adding numbers in a binary system. When it was required to subtract, the so-called " Reverse codes": They were simple and easily fit" iron ", without requiring the most complicated architectural delights that implement the" honest "subtraction of numbers. For each computer model, programs were written separately until 1964 came.

Ibm system / 360

In 1964, IBM released System / 360, a computer that changed the way we create processors. Most likely, it was he who had the honor of introducing such a concept as system architecture. Just because before him there was no "system". The fact is that before System / 360 all computers of the 50s and 60s worked only with the program code that was written specifically for them. IBM, on the other hand, developed the first set of instructions, which was supported in a variety of configurations (and performance), but the same system / 360 architecture. By the way, in the same computer, the byte for the first time became 8-bit. Before that, in almost all popular computers, it consisted of six bits.

The second major innovation in the 60s was the development of DEC. In their PDP-8 computer, they used an extremely simple architecture for that time, containing only four registers of 12 bits each and a little more than 500 CPU blocks. Those “transistors" that were already measured in the 1970s by the thousands, and by the billions in the 2000s. This simplicity and the creation by IBM of such a concept as a "set of instructions" and determined the further direction of development of computer technology.

The boom of microelectronics began in the 70s. It all started with the production of the first single-chip processors: many companies then produced chips under license, and then improved them, adding new instructions and expanding capabilities.

Since the mid-70s, the 8-bit processor market has been full and, towards the end of the decade, affordable 16-bit solutions appeared in computers, bringing with them the x86 architecture, which (albeit with significant improvements) is still alive and well. The main scourge that stopped the development of 16-bit processors at that time was the “confinement” of manufacturers in the production of the so-called support chips for 8-bit architectures. Together they made up what was later called the “north” and “south” bridges.

In the early 80s, tired of struggling with the inertia of the market, many manufacturers moved part of the control "support chips" inside the processor itself. Subsequently, something from the processor will go to the chipsets of the motherboard and then return back, but even then, such an architecture was somewhat similar to modern SoCs.

The middle and end of the 80s passed under the banner of transition to 32-bit memory addressing and 32-bit processor cores. Moore's law worked as before: the number of transistors grew, the clock frequency and the “rough” processor performance increased.

In 1991, AMD Am386DX and Am386SX processors saw the light, the performance of which was comparable to the next generation of systems (486). Am386SX is considered by many to be the first independent development of the company and the starting point for almost 15 years of dominance in the market of high-performance home PCs and workstations. Yes, it was architecturally a clone of the i368SX chip, but it had a lower technical process, 35% better energy efficiency and at the same time it worked at a higher clock frequency than its progenitor, but it was cheaper.

The nineties themselves were quite rich both in events in the field of microelectronics and in the rapid growth of the market. It was in the 90s that AMD began to be considered as one of the serious players in the market, since our processors in terms of price and performance ratio often left their competitors out of the Intel Pentium, especially in the home segment. An expanded set of instructions (MMX / 3DNow!), The appearance of a second-level cache, an aggressive decrease in the process technology, an increase in clock speeds ... and now the new millennium is already in the yard.

In 2000, AMD processors for the first time in the world crossed the line at 1 GHz, and a little later, the same K7 architecture also took on a new height - 1.4 GHz.

At the end of 2003, we released new processors based on the K8 architecture, which contained three important innovations: 64-bit memory addressing, an integrated memory controller, and the HyperTransport bus, which provided amazing bandwidth at that time (up to 3.2 GB / s.).

In 2005, the first dual-core processors appeared (Intel has two separate crystal cores on one substrate, AMD has two cores inside one crystal, but with a separate cache memory).

After a couple of years of natural development, the K8 architecture was replaced by a new one (K10). The number of cores on one chip in the maximum configuration increased to six, a general cache of the third level appeared. And further development was more quantitative and qualitative than revolutionary. More megahertz, more cores, better optimization, lower power consumption, finer manufacturing process, improved internal units like a branch predictor, memory controller and instruction decoder.

What interests us in the evolution of the GPU (as part of the HSA article) can be described very briefly. With the proliferation of computers as universal home-based work and entertainment solutions, computer games have grown in popularity. Together with it, the possibilities of 3D graphics grew, requiring more and more “infusion” of muscles in the face of video accelerators. The use of special microprograms and shaders made it possible to implement realistic lighting with relatively little blood in 3D graphics. Initially, shader processors were divided into vertex and pixel ones (the former were responsible for working with geometry, the latter for textures), later a unified shader architecture and, accordingly, universal shader processors that could execute code for both vertex and pixel shaders appeared.

GPGPU, general-purpose computing on a graphics accelerator could be implemented using open standard and OpenCL, or a somewhat simplified dialect of C.

Since then, of the key innovations in the GPU, we can only mention the appearance of the low-level Mantle API, which allows you to access AMD graphics cards on about that at the same level as access to graphics accelerators inside the PS4 and Xbox One consoles) and the explosive increase in memory capacity of the GPU in the last two years.

This is the end of the story, it is time to move on to the most interesting: HSA.

What is HSA?

I want to start with the fact that HSA, first of all, is an open platform on the basis of which microelectronics manufacturers can build their products (regardless of the set of instructions used) that comply with certain principles and general rules.

At the same time, HSA is a processor architecture that combines scalar computations on classic CPU cores, mass parallel computations on GPUs and work with signal processing on DSP modules, and connects them using coherent access to RAM. That is, the whole history of processor and video production in HSA converges at one point: almost 50 years of progress in the field of microelectronics have led to the creation of a logical combination of the best aspects of various systems.

The development of x86 architecture and processors made it possible to create highly efficient processor modules that provide common tasks and low power consumption.

The unification of shader processors inside the GPU core and the general simplification of programming for a system with a huge number of parallel executing modules have given way to GPGPU and the application of the processing power of video cards in those areas where previously used individual hardware accelerators, which have not won any tangible market share, so that stay afloat.

An integrated memory controller, PCIe bus and I / O system provided transparent memory access for various HSA modules.

Finally, the built-in DSP allows you to remove the load from the CPU and GPU when working with video and audio content, as it is hardware-based on the work of encoding and decoding the corresponding signals.

All these modules together cover the whole spectrum of modern tasks, and HSA allows you to transparently and easily teach programs to work with the full range of available hardware capabilities using classic tools such as languages like Java and C ++.

Reasons for creating HSA

Modern realities (the spread of wearable and mobile electronics, economic and environmental issues) have created certain trends in the development of microelectronics: reducing the energy consumption of all devices, whether smartphones or servers, increasing productivity, improving the work with pattern recognition.

The first, in principle, is understandable. Everyone wants the gadget to work longer, the case was not thick with Big Mac, and it was not heated like a frying pan on a stove. Owners of data centers have a lot of trouble to remove heat and ensure uninterrupted power, to load them with extra watts of heat packet means to increase the cost of their services. More expensive hosting - more advertising on your favorite resources, more CPU load on devices, higher power consumption, less battery life.

Increased productivity today is simply taken for granted. People are used to the fact that since the 70s processors have been breaking records for performance every year, games are becoming more beautiful, systems and software are more complicated (and at the same time do not lose their visual speed), and modern programmer tools contain more and more layers of iron abstraction , each of which takes a piece of real performance.

Well, modern developments in the field of intelligent assistants, virtual assistants, and artificial intelligence development prospects simply require normal recognition of human speech, facial expressions, gestures, which, in turn, requires an increase in both direct performance and optimization of audio and audio decoding operations video stream.

All these problems would be solved by a universal hardware architecture implemented in the form of SoC, and combining the charms of classic CPUs and GPUs, competently distributing serial and parallel operations between those modules that are capable of performing the corresponding tasks most effectively.

But how to teach software to work with all this magnificence of computing capabilities?

Key features of a heterogeneous system

Programmers should not have problems accessing the computing capabilities of such a system. To do this, the HSA has a number of key features that simplify the work of software developers with it and bring HSA closer to classical systems in terms of development:

- Unified addressing for all processors;

- Full memory coherence;

- Operations in the page memory system;

- Custom send mode;

- Queue management at the architecture level;

- High-level language support for computing processors - GPU;

- Change of context and preemptive multitasking.

The application developer does not need to understand low-level programming languages: standard components, a simple intermediate language, interaction interfaces and hardware are available to the developer, and coherent memory and how tasks will be distributed between computing modules are easily hidden “under the hood”.

The role of unified memory addressing can hardly be overestimated. Formally, without it, there would be no HSA. It does not matter where the data is located in the memory, how many cores, modules, computing units you have. You move the pointer and perform calculations, and do not "transfer" bytes from one actuator to another. The cache load is reduced, and the control of the processor itself is simplified. Abstraction of memory at the platform level will allow the use of the same code for different platforms, simplifying the life of software developers.

Difficulties of OpenCL and C++ AMP

Programming for parallel systems is not an easy task. To make life easier for developers, we have developed the Bolt library, which provides efficient implementations of the most commonly used patterns: sorting, reducing, scanning, and transforming data using parallel computing.

To speed up Java code without rewriting it in OpenCL, there is a special open source library, Aparapi, which converts Java bytecode to OpenCL with support for parallel computing on CPU and GPU cores.

In the future, we plan to develop Aparapi further, first tying it to HSAIL and then adding a special optimizer. Ultimately, HSA should provide heterogeneous acceleration directly through the Java virtual machine, transparently to both the user and the programmer.

Open standard and hardware independence

We have already said that HSA is an open platform. APIs and specifications are provided free of charge by the developer, and the HSA itself is independent of the instruction set of the CPU or GPU.

To ensure compatibility between hardware solutions from different vendors, we created our own virtual instruction set: HSAIL (HSA Intermediate Language), which lets software run regardless of what is inside a particular HSA solution. HSAIL itself supports exceptions, virtual functions and the memory models of modern languages, so no problems are expected with support for C++, Java and .NET. Developers can either access the hardware directly or use ready-made HSA optimization libraries, which distribute tasks and handle communication with the hardware on their own, simplifying the programmer's work.

Hardware component

HSA does not live by AMD alone. It is important for developers that code written once works equally well on different devices. Some rely on high-level programming languages such as C++ or Java for this, but we suggest working at a lower level. On the one hand, classic applications can run on HSA devices as if nothing had changed: operating systems will give old applications clear and easy access to the processor, memory, and graphics core. On the other hand, HSAIL lets you extract all the power of the new SoCs, and developers can create high-performance, resource-efficient applications as easily as for classic combinations of OS and hardware.

The HSA Foundation now has seven founding companies: AMD, ARM, Imagination Technologies, MediaTek, Texas Instruments, Samsung Electronics and Qualcomm®.

Standardizing how tasks are distributed across compute cores, how data and memory pointers are passed, and how the main elements of the platform are accessed through HSAIL lets vendors apply their own hardware expertise, while developers do not have to worry about what is under the hood. Applications built for HSAIL will work on any platform.

Pros and cons of HSA-based hardware solutions

The heterogeneous architecture allows you to combine the capabilities of the CPU and GPU, and this is its main advantage. At the same time, HSA saves energy by not loading the processor with calculations that run much more efficiently on the GPU.

Rigid-body physics simulation is used everywhere today: from computer games and 3D packages to CAD and simulators for doctors, the military and athletes. Running such a system on the CPU is often suboptimal because it requires numerous but simple calculations. When the number of interacting objects reaches hundreds or even thousands, classical CPUs simply cannot deliver the required volume of calculations. The GPU architecture, by contrast, suits such calculations perfectly. Unified addressing, pageable memory and full coherence let you move the calculations to the appropriate hardware with minimal overhead and developer effort.

HSA performance on general tasks is often higher than that of classic CPUs and higher than that of a CPU paired with a separate GPU, because no matter how good the drivers are, copying data from system memory to GPU memory and sending the results back can take more time than the calculations themselves.
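The point about copy overhead can be checked with simple arithmetic. The sketch below is a deliberately crude model, not a benchmark: it assumes a fixed link bandwidth and ignores latency and overlap of transfers with computation. `OffloadCost`, `discrete_gpu_cost` and `copy_dominates` are hypothetical names.

```cpp
#include <cassert>

// Time spent moving data versus time spent computing, in seconds.
struct OffloadCost {
    double copy_seconds;
    double compute_seconds;
};

// Cost of offloading to a GPU with separate memory: the input is sent over
// the link, the kernel runs, and the output is sent back.
OffloadCost discrete_gpu_cost(double bytes_in, double bytes_out,
                              double link_bytes_per_second,
                              double compute_seconds) {
    assert(link_bytes_per_second > 0.0);
    return {(bytes_in + bytes_out) / link_bytes_per_second, compute_seconds};
}

// True when the transfers take longer than the calculation itself.
bool copy_dominates(const OffloadCost& cost) {
    return cost.copy_seconds > cost.compute_seconds;
}
```

With purely illustrative numbers, 4 GB in and 4 GB out over an 8 GB/s link costs one second of copying; if the kernel itself finishes in half a second, the transfers dominate. With shared, coherent memory the copy term disappears from the model.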

HSA is already faster than classic systems, but it can work even better. In fact, the only current drawback of the system is that it is new. New hardware models are reaching the market more slowly in today's unfavorable economic conditions, so the popularity of HSA, while beginning to grow, is not growing as fast as we would all like. As soon as developers get a taste for it, understand the advantages of HSA and how simple it is to develop for, and begin to support the heterogeneous architecture natively in their applications, we will see the rise of new, high-performance applications for servers, classic computers and mobile devices.