Forward to the past. GeForce FX. The dawn of war

Since its founding, Microsoft has been good at two of the most important things in life: spotting someone else's good idea in time and making money on it. Largely thanks to Microsoft, the main generator of the most maximalist ideas, the entire IT industry has followed (and still follows) development paths that benefit Microsoft first of all. The implementation of many such ideas led not only to the bankruptcy of many IT giants, but also to rapid, universal unification. Every component of the PC, from hardware to software, became more and more generic and alike, losing the ability to stand out. And so, in 2002, when Microsoft once again laid its playful monopolistic little hands on the 3D industry, the DirectX 9 specification rolled over the 3D chip manufacturers in a thunderous wave ...

And, as we all remember well, the following year, 2003, marked the arrival of cinematic graphics on the PC. Well, yes, that's how it was: WinXP, games on DVD that require DirectX 9 to be installed, and ... identical video cards with some kind of shaders. Loosely speaking, the DX9 specification was supposed to put an end to differences in how cards from different manufacturers render the same image. However, even this specification could not fully rein in NVIDIA. And rightly so: otherwise, why would NVIDIA invest in anything promising?

And here we come to what prompted me to poke the corpse with a stick once again and write this review after all.

Warning: lots of BIG pictures inside. The first one alone is TWO megabytes.

Perhaps you did not know either:

By the time of this statement, the GeForce FX was already a notorious long-running project and very late to the market. You can say that NVIDIA once managed to avoid that very day

Indeed ... The first thing that caught the corner of our eye at the sight of the legendary Dustbuster (GeForce FX 5800) was its size.

Here is the two-slot monster next to a Radeon 9800:

To understand the reason for such an unusual design, we will have to take a closer look ...

The GeForce FX, although not the first superscalar GPU in the world, was without a doubt the most complex superscalar GPU of its time (and remained so for a long time). So complex that accurate information about seemingly the simplest components of its internal architecture is still missing. As a result, its market launch was postponed ... and postponed ... And finally it arrived, the long-awaited one.

To be precise, this most complex architectural part of the NV30 was called the CineFX (1.0) Engine. NVIDIA's marketers decided that this would sound more attractive than nfiniteFX III Engine. Debatable, but a fact.

CineFX (for those who did not get it: Cinematic Effects) is in many respects the work of 3dfx engineers (remember the sensational T-Buffer). It contains everything you need to render cinematic special effects at home.

You may ask: what does a beginner cinema need? It's simple. A beginner cinema needs only the essentials: 128-bit floating-point data precision and a fully programmable graphics pipeline with Shader Model 2.0 support, which allows up to 16 textures to be applied. Intrigued? Well, here it is:

Most peaceful discussions about the performance of one device or another sooner or later descend into a holy war on the topic "What matters more: hardware or software?" In fact, however much people would like to escape reality, hardware without software is just hardware :)

By the time the GeForce FX 5800 (NV30) was released, its competitor, the Radeon 9700 Pro (ATI R300), had been having fun on the market for more than a quarter. It was February 2003. Today such a delay would be fatal, which is exactly why we can safely call 2003 nothing less than the year of NVIDIA.

It went like this: the NV30 comes to the labor exchange, and not a single vacancy on the list requires DirectX 9 knowledge. It turns out the NV30 wasn't really late for anything ... Indeed, the first well-known heavyweight DX9 games (such as DooM3, HL2, NFS Underground 2) appeared only in 2004. The long-awaited Stalker arrived as late as 2007.

My point is that in 2003 reviewers simply had nothing to compare the cards' performance with. To be honest, I studied the picture for a long time while writing this article, but I never came across a single NV30 review with real DX9 game tests. Mostly, DX9 capabilities were tested in 3DMark ...

As a result, in those years very often one could hear something like:

And although the same John Carmack could easily argue with this statement, it is in any case clear confirmation that hardware is nothing without software. Moreover, it turned out that the shader performance of the NV30 depended disastrously on how the shader code was written (even the order of instructions mattered). It was then that NVIDIA began pushing NV30-specific shader optimization in every way possible.

Why was this needed? Because shaders on the NV30 were not implemented so simply:

That is, the NV30 architecture could not show its full potential running standard SM2.0 code. But if you shuffle the instructions a little before execution, and maybe even replace one with another (hi, 3DMark 2003) ... Still, NVIDIA understood that it is far more effective to deal with the cause than with the consequences.

This was not a new architectural solution, but a very competent improvement. NVIDIA engineers corrected their mistakes so fanatically that they even left a mark on the history of shaders: the officially documented Shader Model 2.0a (SM2.0a), described as the NVIDIA GeForce FX / PCX-optimized model, DirectX 9.0a. Of course, it did not become a panacea for the NV35, but it was remembered:

However, because of the extreme complexity of the NV30 microarchitecture, some very interesting nuances remained unchanged in the NV35. That is why reviewers often wrote something like this in its specifications:

These are the very "simplest components" that I mentioned at the beginning of the article. Yes, the terminology is somewhat strange, but that is not the worst of it. In this way the marketers were simply trying to convey the simplest thing: how many vertex and pixel pipelines (shader units, if you like) the chip has.

Like any normal person who has read such a specification, my natural reaction is: well, so how many are there? The whole point is that nobody knows :) And yet these would seem to be the most ordinary characteristics of a graphics chip, the kind from which you could immediately draw some conclusions about performance.

With the ATI R300, by contrast, everything is transparent: 8 pixel and 4 vertex shaders ... OK, you could run GPU-Z, and, following the established terminology, it would tell you that, against this, the NV35 has only 4 pixel and 3 vertex shaders. But that would not be entirely accurate, because at the dawn of movie games, NVIDIA had its

Be that as it may, if the main goal of NVIDIA engineers in those days was to leave a vivid mark on history, they no doubt succeeded! Moreover, the NV35 gained another interesting feature that its competitors had no analogue of: UltraShadow Technology, which will be discussed later :) But let's stop talking about the beautiful and come back

Fortunately, I don't have a Dustbuster ... (but you can always look at the museum exhibit). Fortunately, because my ears are worth more. Besides, both it and its more sophisticated brother, the GeForce FX 5900, were out of proportion to the wallet of a simple person with simple needs. For such people NVIDIA offered GeForce FX 5600 variants on the NV31 chip, but I didn't get one of those either.

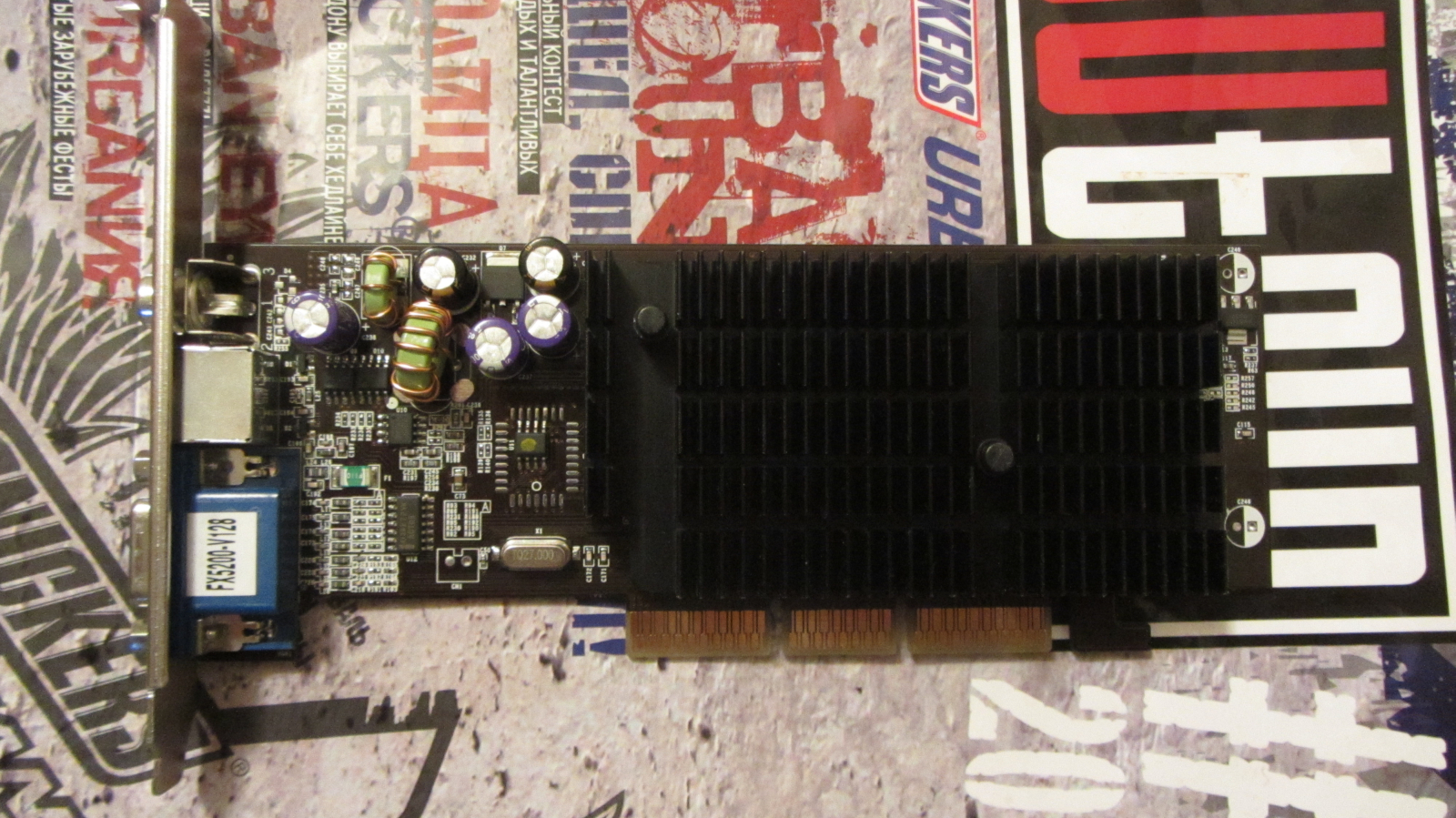

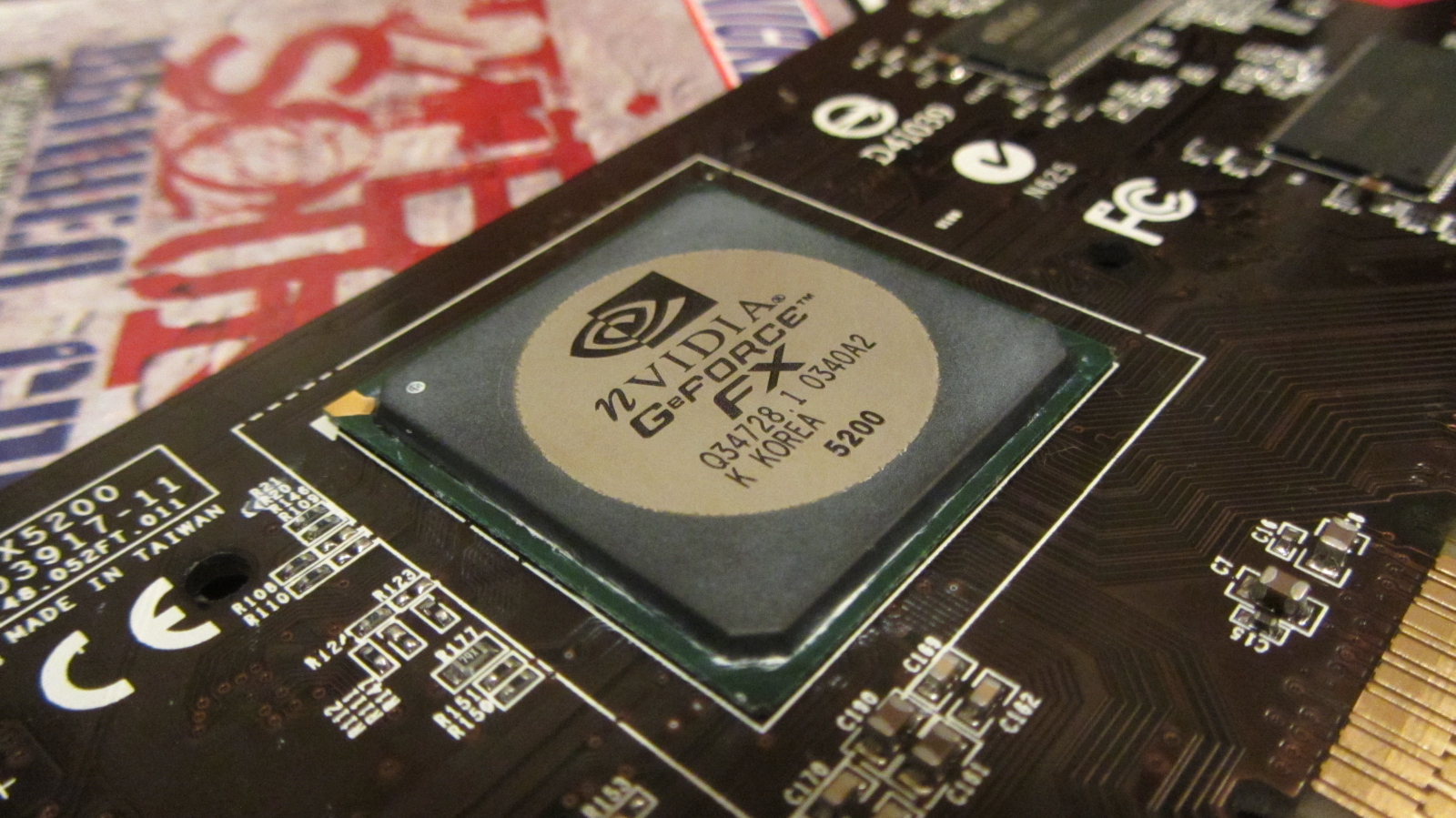

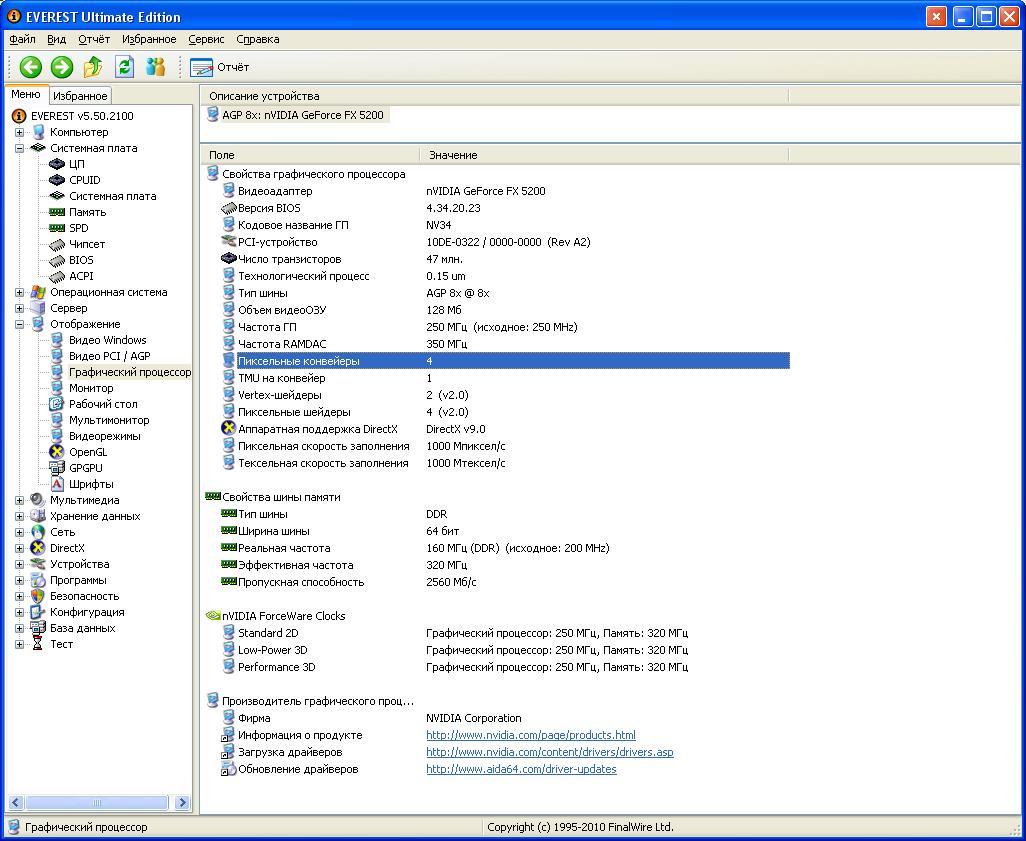

And now, by chance, 12 years later, I was, so to speak, palmed off with this office stub:

But at least it doesn't buzz! That is, besides the cut-down NV31, the NV34 was also released, which did not shine in performance and was more like a reject NV31.

Now, briefly, about the interesting things that did not fit into the NV34 :(

Apparently, almost nothing fit :) But even that was not the worst part. Everyone knows this word.

Right about now, many of you must be smiling. This was probably the most epic implementation since the TNT2.

If you don’t even remember this:

... then you should definitely remember this:

Needless to say, with the advent of DX9, vendors started churning out drivers at incredible speed and remembered WHQL only as a last resort. The performance of one and the same DX9 card could dance around so wildly that a DX8 card could sometimes become its dance partner. That is what happened, for example, with the GeForce MX 440 (NV18), which from a certain driver version onward got SM2.0 emulation that was later cut back out. Otherwise it looks somewhat indecent: the NV18 beats the NV34 at shaders it does not even have ...

Here, for example, are good statistics on popular troubles with GeForce FX .

Nevertheless, there was much to be proud of. For instance:

This time I will not measure performance or show you boring screenshots with an fps counter. That is not what interests me in this generation of video cards. By and large, my interest in video cards ends with this generation, because the GeForce FX were the last cards to have something unique that their competitors lacked. That is what this is about in the first place.

At the same time, I decided to mix things up: let's look at different features in different games :) In case you are interested, the graphics settings in the games are "maximum rich colors"! It's cinema after all, isn't it? True, cinema resolutions were not yet available in games back then ...

But before we rush into the shaders, a warning: the pictures are actually much larger than they appear on the page. Where I thought it necessary, I made the picture clickable so you can open the original; don't pass it up if you are interested! There will also be a few GIFs below. GIFs being GIFs, the conversion inevitably loses quality. That has to be accepted, because their main job is animation on the web, not photo exhibition in a museum. But you can click them too!

So the games ...

UltraShadow ... Sounds good, huh? This is where we'll talk about it. There is a legend:

Like all serious people on this planet, John Carmack also had a very interesting .plan. Understandably so: how else would you get any work done? ;)

First, thanks to this .plan, John Carmack acted a little more honestly than Gabe Newell. He simply refused to use any proprietary OpenGL extensions when programming the DooM3 engine, focusing on the publicly available ARB_*** extensions. Although dedicated code paths were written so that the engine would work on old non-DX9 cards, the new cards were treated fairly: they had to fight on equal terms in his engine. Now many of you will object: but what about this:

Let's look at this point in more detail. In fact, the experiments with the NV30 went no further. Perhaps John's eardrums simply couldn't take it:

Be that as it may, only 6 "working" code paths (and 1 experimental one :) made it into the release.

NV10, NV20, R200. As the names imply, these three are specific to particular chips.

Cg, ARB, ARB2. These three are universal. And even though ATI hardware can handle Cg, that code path was still disabled (for the fairness of the experiment?). So ARB2 is the most sophisticated renderer here.

As for the NV30 renderer, it remained in alpha.

So overall everything was fair. On the NV30 / R300, you saw DooM3 like this:

Whereas on some GeForce MX 440 (NV18) , for example, DooM3 was like this:

But back to what the .plan did not affect ...

The famous " Carmack's Reverse algorithm " and support for NVIDIA UltraShadow .

This is where it gets interesting. You can read about the algorithm at the link, but in general "Carmack's Reverse" is nothing more than a way of computing shadows "from the opposite side". This is not surprising, by the way, considering how the DooM3 engine (id Tech 4) is designed: it is essentially one solid shadow :) And the NV35 has hardware support for exactly such tricks, as do all subsequent NVIDIA cards. For example, my GTS 450 (highlighted):

But what kind of technology is this?

And it seems the UltraShadow II feature descriptions even showed DooM3 screenshots and assured us that the technology was being used. In fact, when DooM3 was released, it turned out that it was not used fully:

Nevertheless, the missing part can still be forced on today (and in the latest patch it is even enabled by default). In practice you get only a performance boost and no visual difference. Well, thanks for that ...
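For the curious, the idea behind Carmack's Reverse can be sketched in a few lines. This is only a minimal Python illustration of depth-pass versus depth-fail stencil counting along a single view ray, not code from the DooM3 engine; the function names and the (depth, facing) representation of shadow-volume faces are assumptions made for the example, and the shadow volume is assumed to be closed at the far end.

```python
def depth_pass(faces, surface_depth):
    """Classic counting: use volume faces IN FRONT of the visible surface."""
    stencil = 0
    for depth, facing in faces:
        if depth < surface_depth:  # the face passes the depth test
            stencil += 1 if facing == "front" else -1
    return stencil != 0  # True means "in shadow"


def depth_fail(faces, surface_depth):
    """Reversed counting: use volume faces BEHIND the visible surface."""
    stencil = 0
    for depth, facing in faces:
        if depth >= surface_depth:  # the face fails the depth test
            stencil += 1 if facing == "back" else -1
    return stencil != 0  # True means "in shadow"


# Camera outside the shadow volume: the ray enters at depth 2 and leaves at 8.
outside = [(2.0, "front"), (8.0, "back")]
# Camera inside the volume: the front face is behind the eye and never drawn.
inside = [(8.0, "back")]

for name, faces in (("outside", outside), ("inside", inside)):
    for surface in (5.0, 10.0):
        print(name, surface,
              "depth-pass:", depth_pass(faces, surface),
              "depth-fail:", depth_fail(faces, surface))
```

With the camera outside the volume both methods agree. With the camera inside, the depth-pass count is thrown off by the missing front face, while the depth-fail count still marks the surface at depth 5 as shadowed and the one at depth 10 as lit, which is the whole point of counting "from the opposite side".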

Since we are talking about shadows, how can we not recall the much-hyped NFS: MW? I know it seems a strange choice, but only at first glance ...

... In those days it was very popular to say that NFS: MW is a good example of how to emulate HDR on the cheap.

It would seem true: what do we see throughout the game?

Overbright (Bloom):

Motion Blur:

and maybe sometimes we notice World Reflections:

If you really wanted to, you could easily turn the movie outfit (Visual treatment = high):

into elegant shorts (Visual treatment = low):

Still, the accusations of emulation are undeserved here, because Bloom itself (undoubtedly taken as the basis here) is still an HDR effect too. It is just that many other things in this game, for example the rain effect or the shadow detail setting, are available only to owners of video cards more powerful than the NV34.

Well, here we have come back to where we started. The particularly attentive may also have noticed the rather offensive implementation of shadow maps. It is believed that the game simply uses low-resolution shadow maps. Perhaps this was a compromise, perhaps there was not enough time to implement finer shadow settings, or maybe it is about the code paths again ... Who knows? Who would know better than EA's compatibility check utility:

In total, on the NVIDIA GTS 450 the scene looks like this:

Well, at first glance everything is logical: on the NV34 there are clearly fewer shadows (look at the shadow under the car):

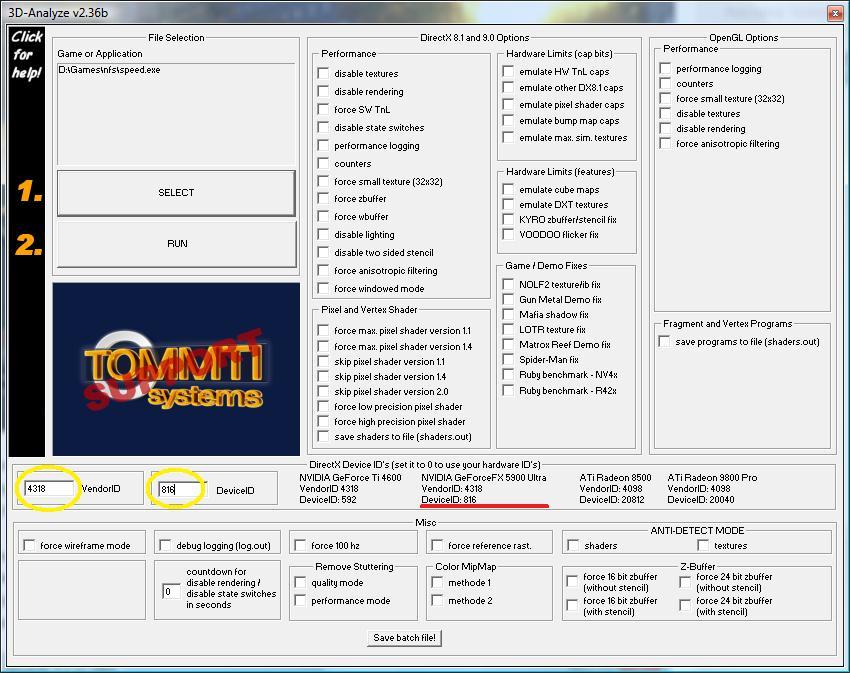

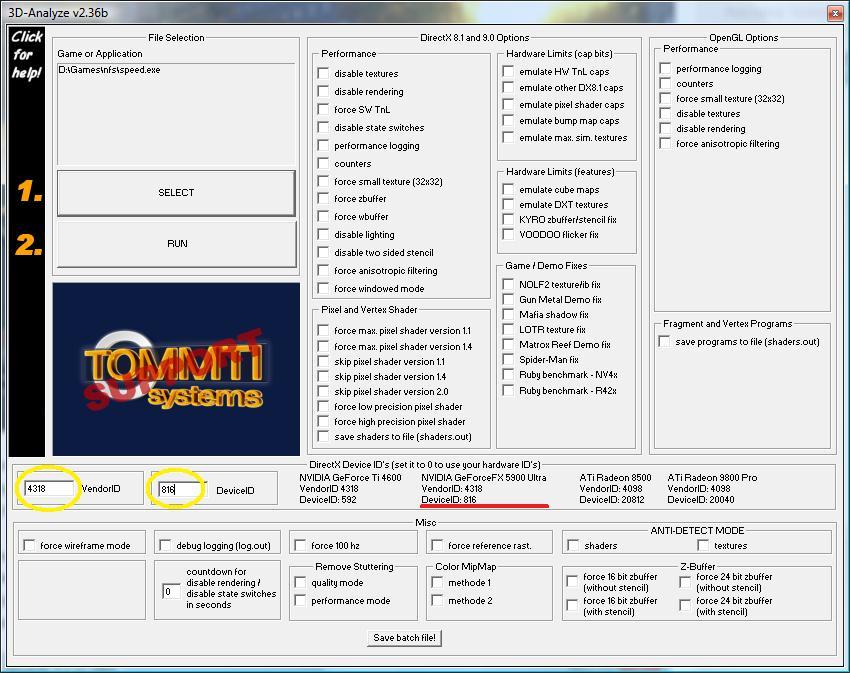

Now let's check the code paths. For this we need 3D-Analyze:

We replace the NV34 (FX 5200) with the NV35 (FX 5900) and launch:

As you can see, there is now not much difference from the GTS 450. So there are artificial limitations in place to ensure better performance. True, the quality of the shadows can hardly be called good either way :)

By the way, on the Radeon 9600 ( RV350 ) it looks like this:

It is believed that Far Cry was the first full-fledged game with true HDR rendering support (unlike Half-Life 2: Lost Coast). At least in its latest official version (1.4). Just see for yourself how gorgeous the famous Far Cry water looks with HDR:

In general, after patch 1.4 Far Cry had a complete set of all the fashionable effects, for example Heat Haze (look at the inscription on the wall or the lamp on the ceiling):

By the way, Ubisoft was actively promoting the new-fangled AMD64 at the time, so Far Cry was released in two versions: x86 and x64 (by the way, it was exactly the same with Half-Life 2). Exclusive Content was also released for the x64 version. As a result, an unofficial 64-to-32 bit Convertor Mod appeared immediately, letting you see this Exclusive Content on a 32-bit system. Much later, an unofficial patch to version 1.6 appeared, in which this Exclusive Content has perhaps finally migrated to the x86 version of Far Cry. I suggest you check that yourself.

And if that is not enough for you, you can play around with the experimental OpenGL renderer included in the package. How to do it is described here (read from the words System.cfg).

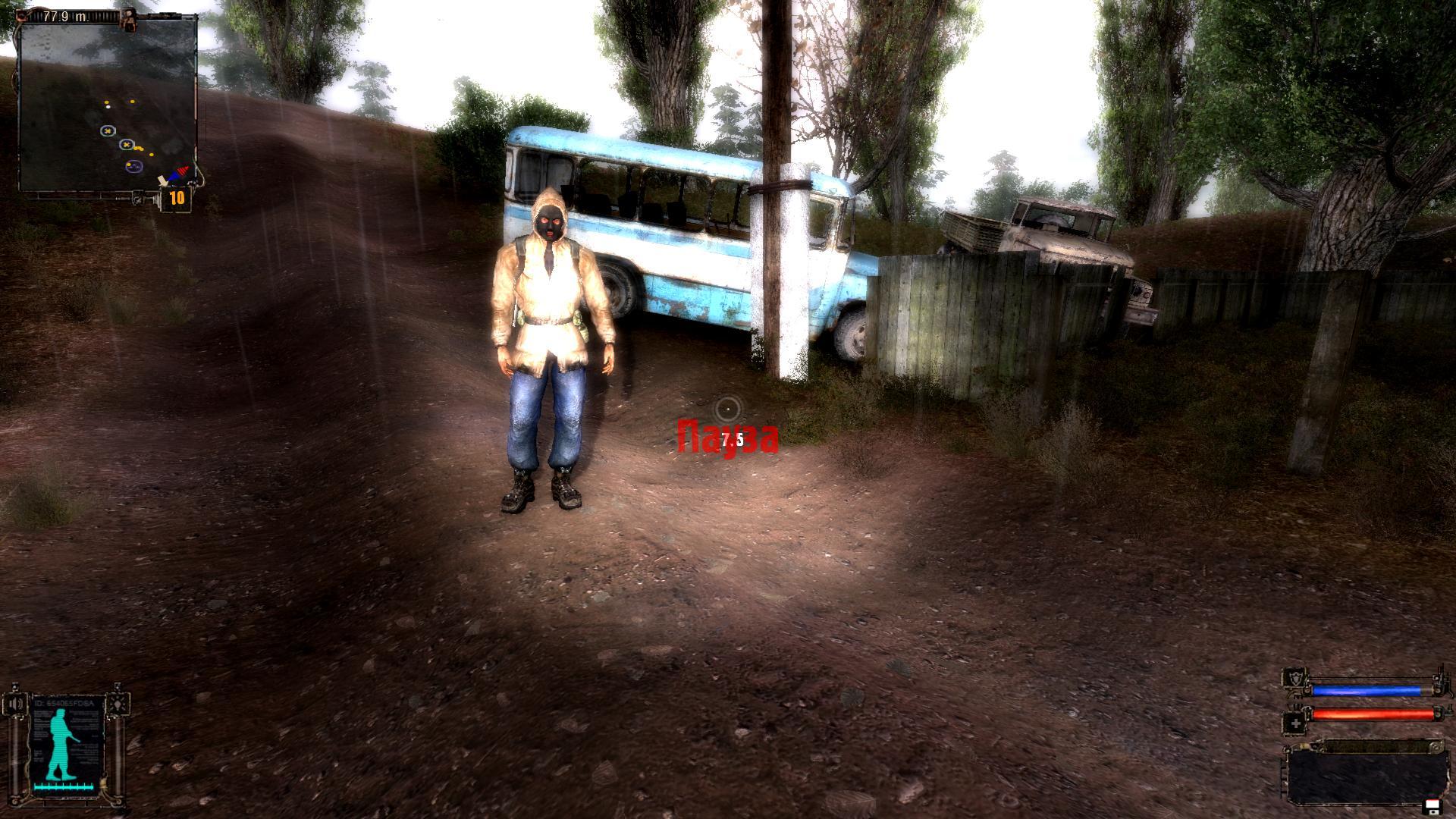

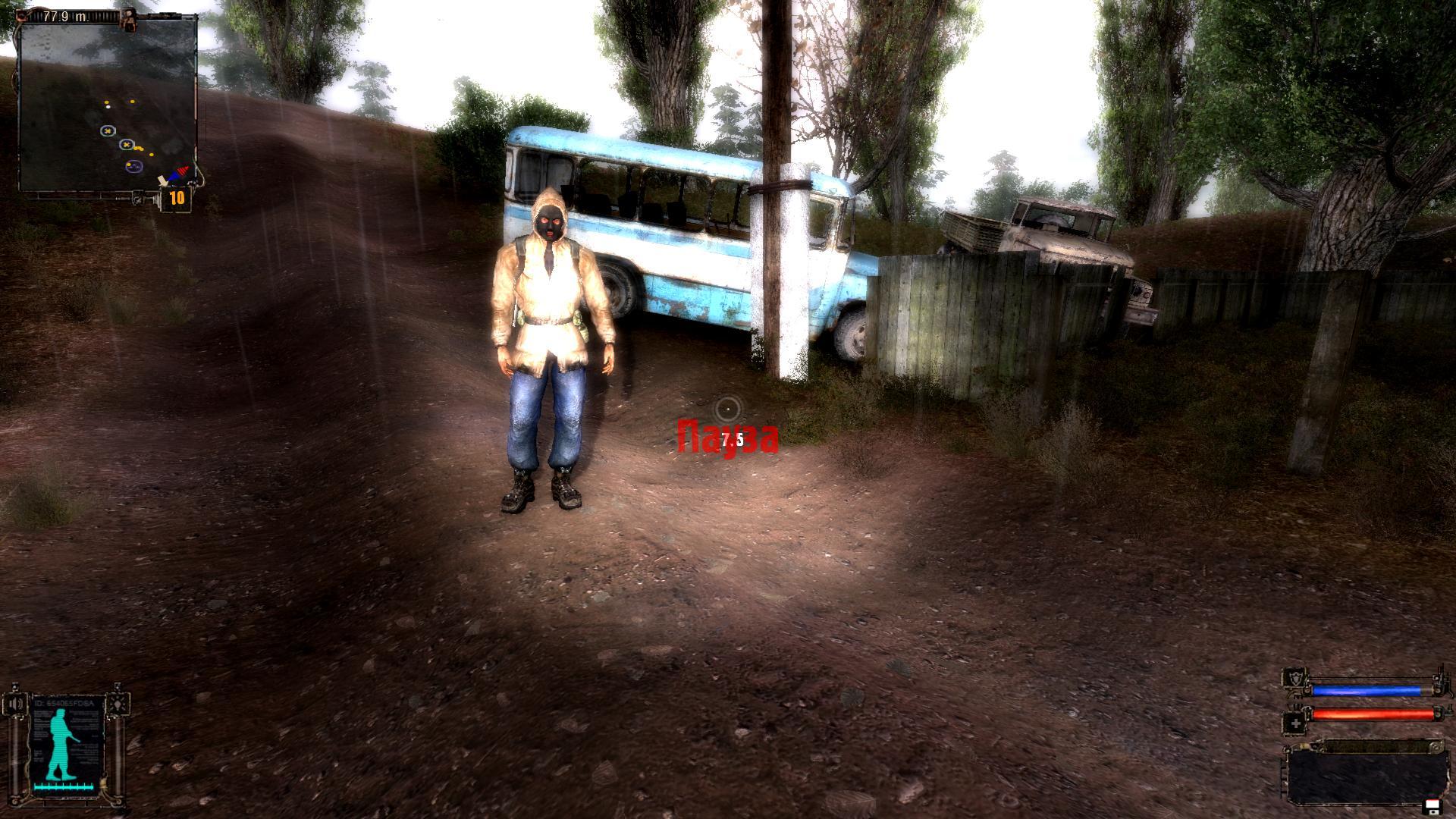

That's really a "SHOCK", given that at first one game was being developed, then another, and in the end they got Shadow of Chernobyl. But 7 years of development bore fruit one way or another: the X-Ray Engine was the first engine to use the revolutionary Deferred Shading rendering concept. It is commonly believed that STALKER is more of a DX8 game with some DirectX 9 elements. This is fundamentally wrong. To be precise, exactly the opposite is true. The X-Ray engine contains three complete renderers: r1 (Static Lighting - DX8), r2 (Objects Dynamic Lighting - DirectX 9) and r2a (R2 + Full Dynamic Lighting). Just open the console and type "help" to see the scale of the options for each renderer.

You could talk about this game, amazing from every point of view, for a long time, but let's limit ourselves to what has been said above ... and add that STALKER is one of the first games to use Parallax Mapping, a technique for rendering textures with apparent volume. However, there is a nuance. In the original game (with the latest patch, 1.0006), this technique cannot be controlled via the console (or I did not notice any changes), and there is an opinion that the shader code responsible for it does not work as it should. That is why many fans rewrote this and many other STALKER shader effects so that the end user could see that their system can be seriously loaded with useless calculations ... and, of course, have something to compare with. For example, you can compare the original with the custom STALKER Shaders MAX 1.05 code:

It is debatable, of course. But since we are talking about custom shaders, there is one more thing I would like to mention: the effect of blurring the background when focusing on a subject, Depth of Field. 3dfx started talking about it back in the days of DirectX 6, but it never reached a full implementation then. The original game did not have it either, but it finally appeared in STALKER Shaders MAX 1.05. The shot came out poorly, and I am too lazy to redo it, so just look at how the background floats away when you look straight at the stalker:

Perhaps it is not the most beautiful implementation either, but it is an amateur one, so that's alright :)

Now, what I wanted to tell you: STALKER has two great implementations of the Bloom HDR effect: the default one and the so-called Fast-Bloom, which is enabled separately. Here they are in action:

Bloom

Fast-Bloom

Since Fast-Bloom is enabled separately, it is possible that the default one is some kind of complex implementation rather than an approximation with a simple Gaussian blur. Or these are leftovers from testing.
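To make "an approximation by simple Gaussian blur" a bit more concrete, here is a minimal Python sketch of the textbook bloom recipe on a single scanline: keep only what is brighter than a threshold, blur it, and add the glow back. It is an illustration only and says nothing about what the X-Ray shaders actually do; the threshold, the 5-tap kernel and all names are assumptions made for the example.

```python
# 5-tap binomial kernel, a common cheap approximation of a Gaussian
KERNEL = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]


def bright_pass(row, threshold):
    """Keep only the part of each pixel that exceeds the threshold."""
    return [max(p - threshold, 0.0) for p in row]


def blur(row):
    """Blur one scanline with KERNEL, clamping reads at the edges."""
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(KERNEL):
            j = min(max(i + k - 2, 0), n - 1)
            acc += weight * row[j]
        out.append(acc)
    return out


def bloom(row, threshold=1.0, strength=1.0):
    """Add the blurred bright areas back on top of the original scanline."""
    glow = blur(bright_pass(row, threshold))
    return [p + strength * g for p, g in zip(row, glow)]


# A dark HDR scanline with one very bright pixel (values above 1.0 are allowed)
scanline = [0.1, 0.1, 0.1, 4.0, 0.1, 0.1, 0.1]
result = bloom(scanline)
print(result)
```

The neighbours of the bright pixel pick up some of its light while pixels far away stay untouched, which is the characteristic glow around lamps and windows. A real implementation would do the same in two dimensions on a downsampled render target.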

STALKER also had Motion Blur! To enable it, you need to start the game with the -mblur switch and also enable the effect in the console. Then you get something like:

But stopping here would be completely unfair to an engine like X-Ray ... The thing is that, although development dragged on and it was never fully debugged, STALKER even has Global Illumination. That is, if it seems to you that the room clearly lacks light bounces:

... then you can type r2_gi 1 and it will look like this:

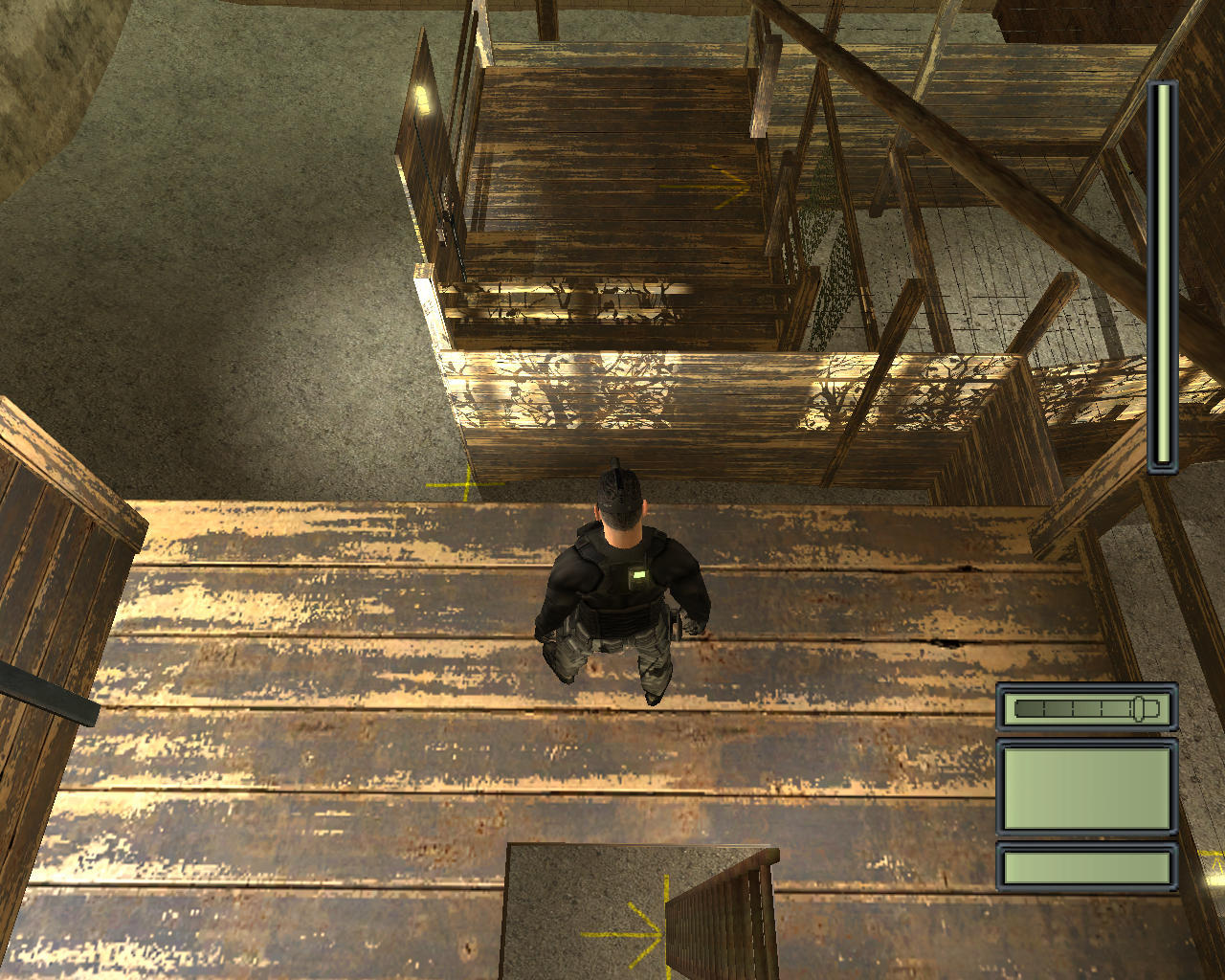

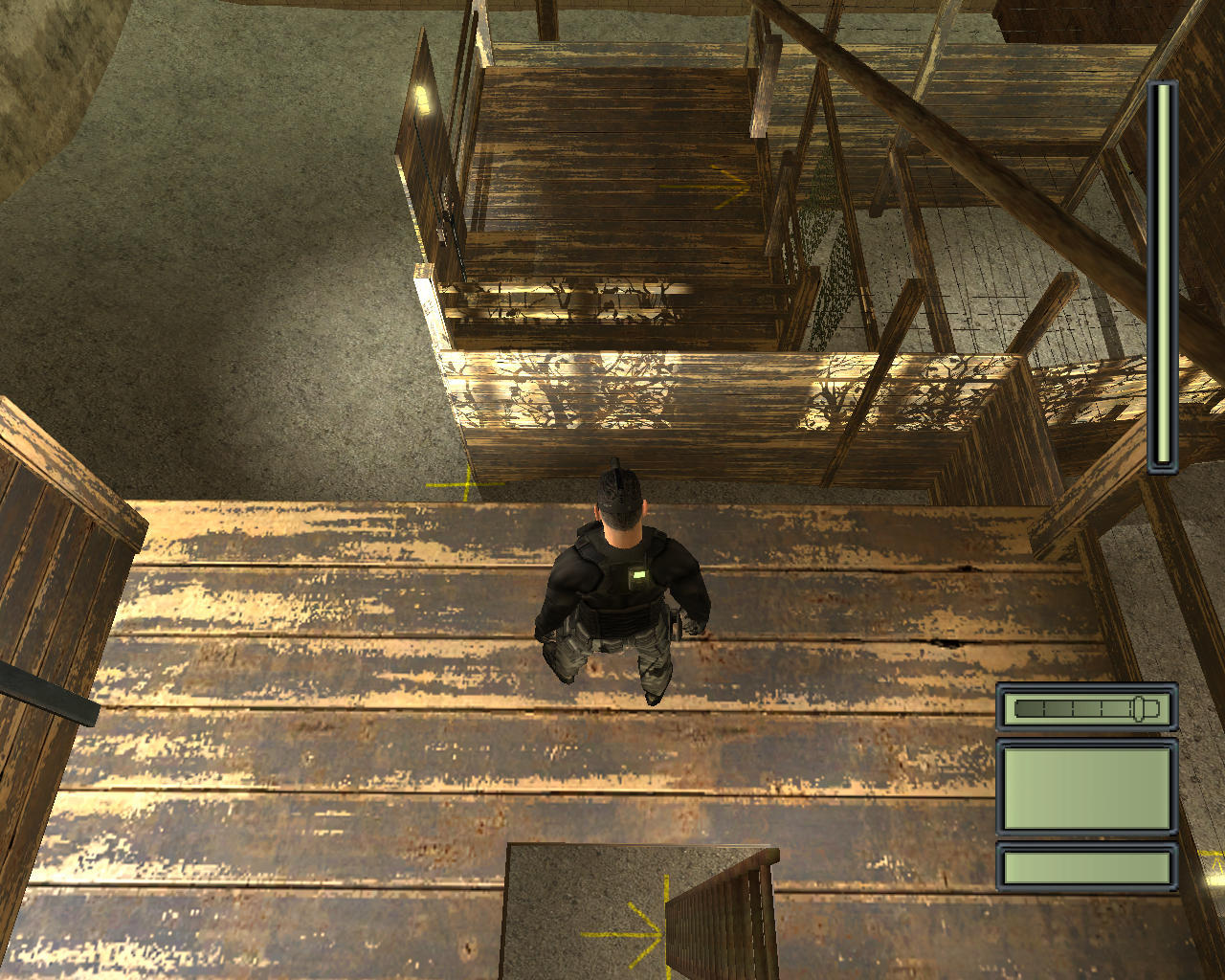

Ever since the GeForce4 Ti, NVIDIA cards have had the inconspicuous Shadow Buffers feature. I could not find a complete list of games using this technology (probably because there isn't one), but any lucky owner of the then much-acclaimed Xbox knew exactly what next-generation shadows looked like. Well, now let's look at the signature feature in action, only on the PC ... but first a little theory:

I think the principle of casting shadows has been described quite clearly. From the above it follows:

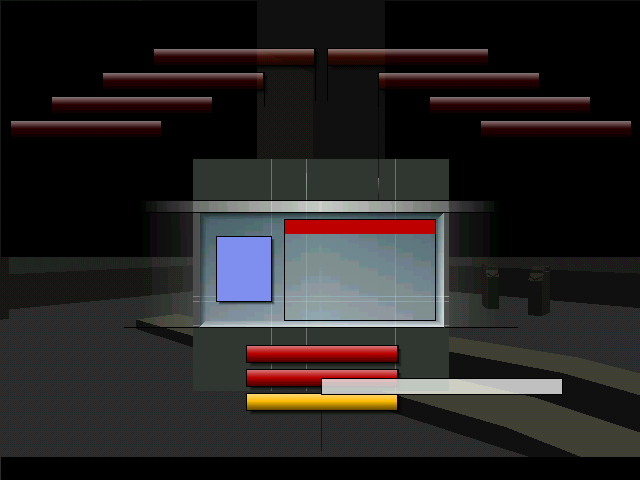

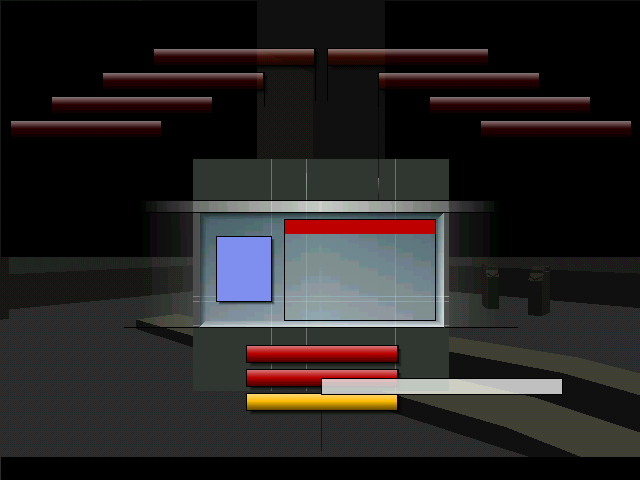

Well, here's how it finally looks on the NVIDIA GeForce FX 5200 ( NV34 ):

Projection Shadows:

Shadow Buffers:

To be sure, I also tried it on the ATI Radeon 9600 (RV350). Indeed, Shadow Buffers will not turn on there, and 3D-Analyze does not help. Projection Shadows mode looks identical to the NV34:

Interestingly, the game will still start in Shadow Buffers mode on the GeForce GTS 450, but it will look like this:

Which of these is correct (the GeForce FX 5200 or the GTS 450) is still unknown, since there is an opinion that the game itself has an unfixed bug with shadows. But there is also an opinion that the NVIDIA drivers are to blame, so for full immersion you need to use the old cards (after all, new cards simply do not work with old drivers).

Fortunately, the same shadow problems in the next Splinter Cell installment (Pandora Tomorrow) are now partially solved by a fan-made wrapper.

We could start wrapping up here, but it seems to me the story would be incomplete without mentioning another remarkable technique ...

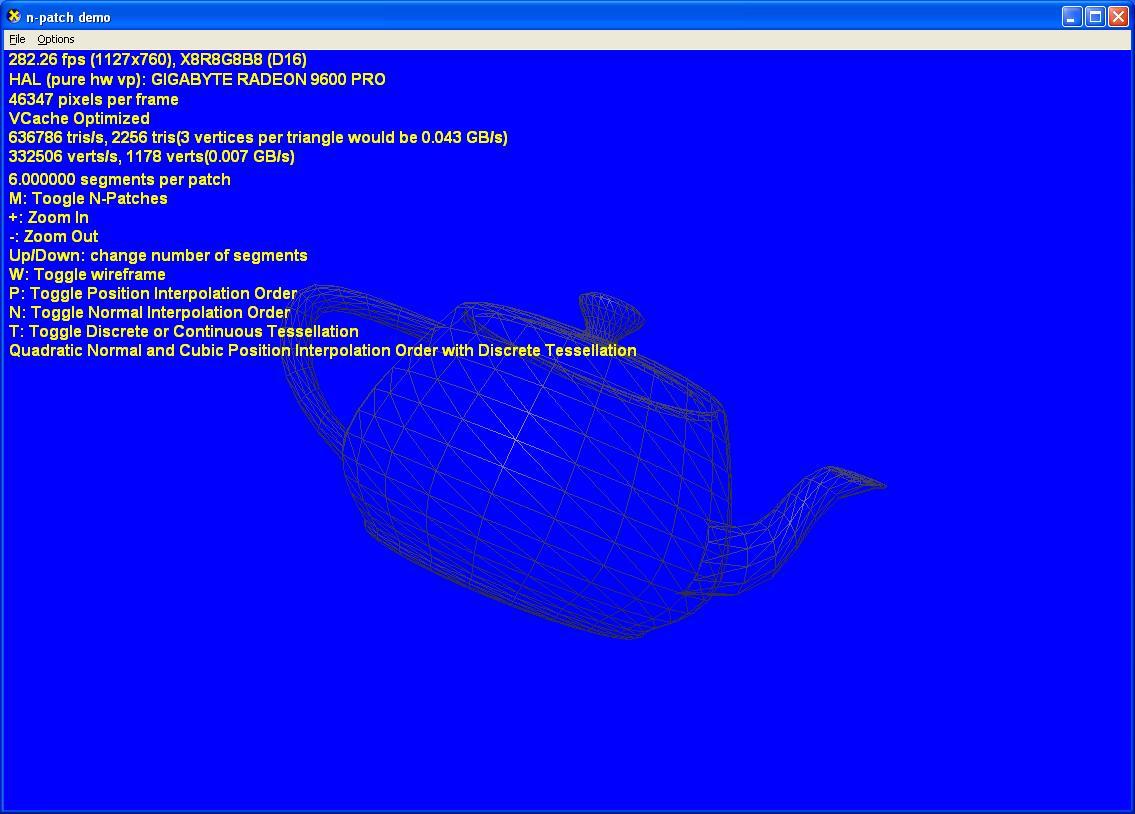

Let's start with how ATI left its mark on history with its TruForm hardware technology. In scientific terms, this is called N-Patches (or, equivalently, PN Triangles). It appeared in the Radeon 8xxx, but the peak of its popularity came, perhaps, in the days of the Radeon 9xxx (R300). I can understand if you were too lazy to follow the link, so here is the essence of TruForm in two pictures:

from a teapot like this, TruForm, increasing the number of primitives on its own, will make you one like this:

This was called adaptive tessellation: using the video chip to increase the number of primitives based on the initial ones passed in by the application (game). It was promised that the effect would be visible in all games right away, with no support required from the game. Hoping for this, I spent a long time running around the levels of games I love and games I don't, trying to see it in practice. It turned out that in the last drivers for the RV350 (Catalyst 10.2) TruForm had simply been disabled. You see, there is an opinion that the clever TruForm technique rounded off absolutely everything square in the scene, so that wooden supply crates became round. The technique was reworked at first, but then simply thrown out of the drivers.

But our interest here is historical, so this is how it actually looked with the Catalyst 5.7 drivers:

Thus, contrary to our expectations, TruForm could be seen only if the game really supported it. Oh, those marketers! Well, since you cannot force it on every game, where can you see it? Wikipedia offers a short list consisting mostly of games with third-party TruForm patches, which is somewhat inauthentic. As for games with native TruForm support, most people no doubt first saw true forms in Serious Sam. Since I personally have no sympathy for his forms, I venture to suggest you look at the true forms of Neverwinter Nights:

And here is how it really was with the crates:

And everything would have been fine, but already in Catalyst 5.8 one could observe their majesties the artifacts:

And then the feature was finally buried.

So what am I getting at ... You see, at about the same time NVIDIA also left a similar technology in history. They began using a technique called Rectangular and Triangular Patches (RT-Patches) back in the GeForce 3 Ti, and in hardware at that. It's funny, but the problem with RT-Patches lay, in fact, in the implementation itself. If we wanted to build a game engine around this technique, all the surface formulas for adaptive tessellation had to be built into the engine at the development stage. Accordingly, if we wanted the engine to work on any other card without RT-Patches support, we would have to redo all the polygons the classic way. Double the work in total.

This is probably why RT-Patches found no support among game developers, although it is claimed that they could be seen in Aquanox.

True, there is an opinion that:

A couple of people on OG honestly tried to find drivers that supported RT-Patches (and found them!), but somehow got no further.

Interested in the question:

In general, everything, but ...

The GeForce FX. How much there is in this name :)

It is known that GeForce is short for Geometric Force. The NVIDIA GeForce256 was given this name because it carried the revolutionary GPU (Graphics Processing Unit), which was then often called Hw T'n'L.

And here comes the FX :

Ah, Sure not! :)

Compatibility with Glide

While NVIDIA engineers doggedly filed their brainchild (NV30) into working shape, the marketers sold pre-orders as in Vaporone advertising. To attract more potential buyers, a new NvBlur API was even developed, one of whose main features was backward compatibility with the Glide API. Of course, NvBlur existed no more than the 3dfx Voodoo 590 or 3dfx Voodoo Reloaded did.

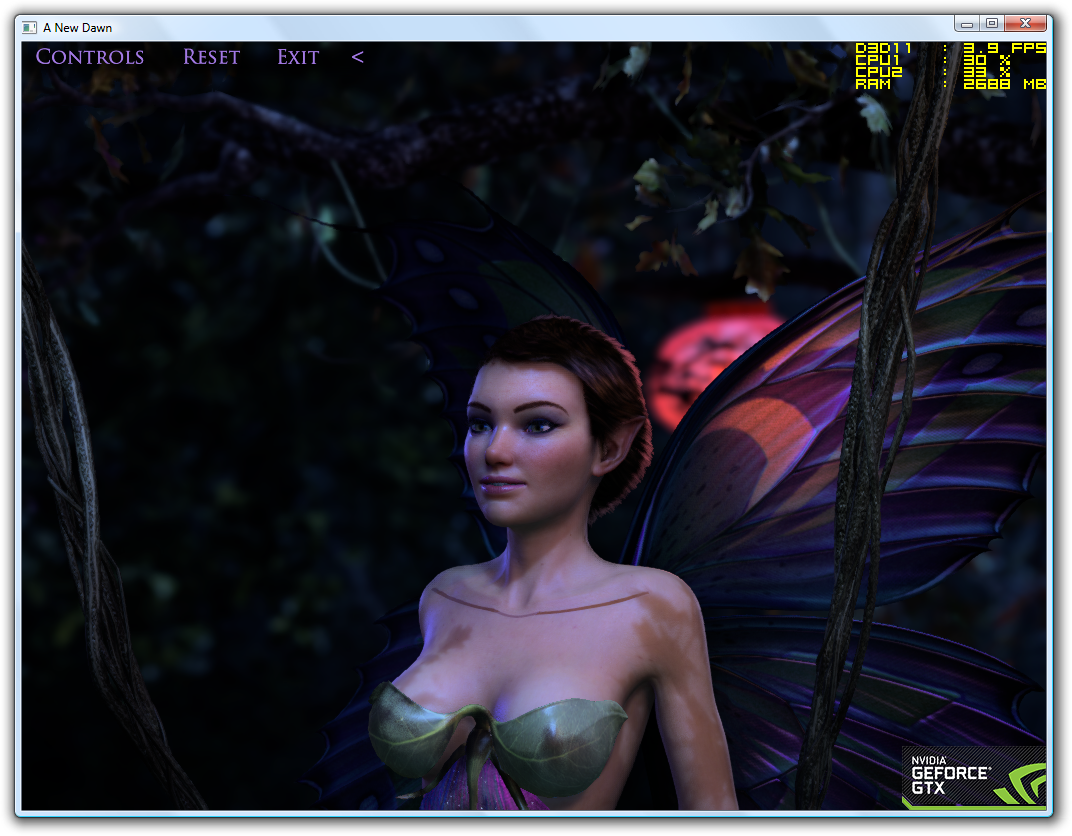

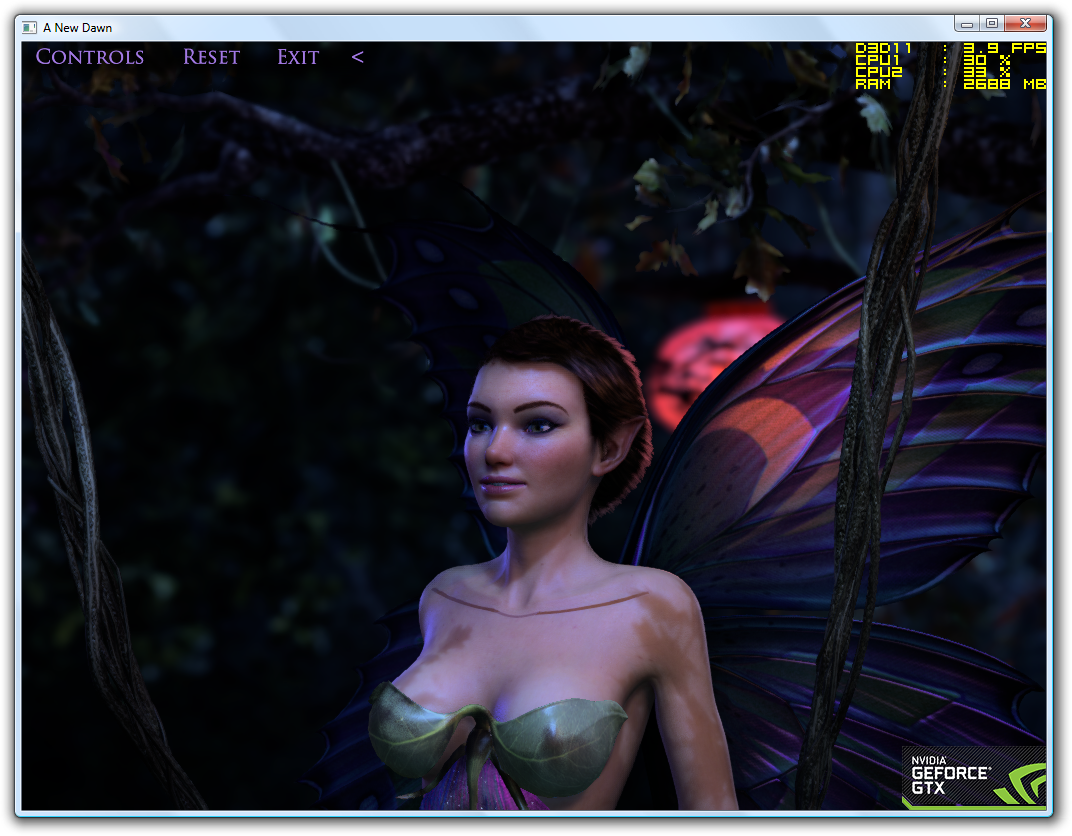

A new Dawn

Well, yes, if anyone remembers, in 2012 NVIDIA resurrected the demo. When you look at things like this, it sometimes seems like real filmed footage. That was DirectX 11. But even this is no longer where the gaming industry is now ...

In general, of course, this whole epic with a sunrise in floating colors inspired something like the following:

At the next GDC, a game developer looks with disgust at a DooM3 demonstration on a 1024x768 screen. They don't even show the FPS.

Developer : - Well, tell me why, after so many efforts, I again see thin on the floor of the screen and monsters the size of a minimap?

NVIDIA : - It was because of ATI that we did not manage to do anything!

ATI : - Yes, Microsoft set the tasks again ...

Microsoft : - By the way, beautiful slides are also movies!

In general, the sunrise did turn out to be controversial, but the sunset was still what was needed:

At that very sunset, NVIDIA managed to regain its status with its next chip, the NV40, which arrived on time and fought heroically against the ATI R420 in the Half-Life 2 biathlon. But we all know it was all thanks to the fact that the NV40, although considered a new architecture, still consisted of at least 70% NV30.

To the administration of old-games.ru, for the ability to download 20 games in bulk, quickly and for free, just to check out the file structure and run each one once.

To a man who, in a fit of intrigue with my upcoming reviews, has thrust me this invaluable in all senses unengineered example of past excitement and greatness :)

Perhaps you did not know either:

...

David Kirk adds a bit of back-story as to how this technology was brought into GeForce FX:

When we did the acquisition of 3dfx, NV30 was underway, and there was a project [code-named Fusion ] at 3dfx underway. The teams merged, and the projects were merged. We had each team switch to understand and present the other's architecture and then advocate it. We then picked and chose the best parts from both.

...

www.extremetech.com/computing/52560-inside-the-geforcefx-architecture/6

By the time of this statement, the GeForce FX was already a notorious long-running project and very late to the market. You can say that NVIDIA once managed to avoid that very day

Out of the corner of my eye

Indeed ... The first thing that caught the corner of our eye at the sight of the legendary Dustbuster (GeForce FX 5800) was its size.

Here is the two-slot monster next to a Radeon 9800:

To understand the reason for such an unusual design, we will have to take a closer look ...

Closer to heart

The GeForce FX, although not the first superscalar GPU in the world, was without a doubt the most complex superscalar GPU of its time (and remained so for a long time). So complex that accurate information about seemingly the simplest components of its internal architecture is still missing. As a result, its market launch was postponed ... and postponed ... And finally it arrived, the long-awaited one.

NV30: The Dawn of CineFX

To be precise, this most complex architectural part of the NV30 was called the CineFX (1.0) Engine. NVIDIA's marketers decided that this would sound more attractive than nfiniteFX III Engine. Debatable, but a fact.

CineFX (for those who did not get it: Cinematic Effects) is in many respects the work of 3dfx engineers (remember the sensational T-Buffer). It contains everything you need to render cinematic special effects at home.

You may ask: what does a beginner cinema need? It's simple. A beginner cinema needs only the essentials: 128-bit floating-point data precision and a fully programmable graphics pipeline with Shader Model 2.0 support, which allows up to 16 textures to be applied. Intrigued? Well, here it is:

- 64/128 bit depth rendering - the Dawn of Cinematic Computing

In the world of TrueColor, such a marketing statement would sound a little strange. Let me just inform the unaware that from this moment the dull world of TrueColor is a thing of the past. And if you still think that all new games render the image in 32-bit color ... you are, of course, right ... but only in part. Because, in fact, many (if not all) of the "parts" of the image in games are now calculated on the way to your monitor with much greater precision, known as floating-point color precision.
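Why intermediate precision matters even when the final picture is still 32-bit can be shown with a toy calculation. The sketch below is an assumption-laden illustration, not a model of any real chip: it darkens a grey ramp 16 times and then brightens it back, once with the intermediate result stored in 8 bits per channel and once kept in floating point (Python's 64-bit floats stand in for FP16/FP32 here).

```python
def darken_then_brighten(level, quantize):
    """Darken an 8-bit grey level 16x, then brighten it back 16x."""
    value = level / 255.0
    value = value / 16.0
    if quantize:
        # An 8-bit-per-channel buffer keeps only 256 levels, so the
        # intermediate result is rounded before the next pass reads it.
        value = round(value * 255) / 255
    value = min(value * 16.0, 1.0)
    return round(value * 255)  # what finally reaches the 8-bit display


# Run the whole 0..255 ramp through both variants and count surviving shades
int_levels = {darken_then_brighten(i, quantize=True) for i in range(256)}
float_levels = {darken_then_brighten(i, quantize=False) for i in range(256)}

print("distinct shades with 8-bit intermediates:", len(int_levels))
print("distinct shades with float intermediates:", len(float_levels))
```

The float path returns every shade of the ramp, while the 8-bit path collapses it into a handful of bands. That banding is exactly what a higher-precision pipeline removes, even though the monitor still receives 8 bits per channel at the very end.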

Of course, I did not believe it. And, as it turned out, I was not the only one, because the web even had a handy little program, ShaderMark 2.1, which, as the name implies, was created for exactly this: testing the performance of GPU shader units. And just imagine, there really is a difference with different precision presets, not only in speed but also in color! Converting the screenshots to GIFs reduced the quality, of course, but the point still comes across:

But most importantly, thanks to such a bit depth of the graphics pipeline, we were finally able to see movie graphics in games. The essence of HDR rendering is not just in dumbly computing pixels accurate to thousandths. HDR rendering is first of all adaptive lighting. Too lazy to read? I will show you:

In the animation above, you can observe an effect called tone mapping. Everything is just like in life: when the esteemed Mr. Freeman looks directly at the light source (the sun), it seems very bright to him, and everything around seems much darker than the sun. When the esteemed Mr. Freeman deigns to turn his head leisurely (to the side), his eyes gradually get used to the new balance of brightness: now the sun is off to the side and does not seem so bright, and everything else becomes brighter.
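The same behaviour can be sketched in a few lines of Python. This is a generic illustration of tone mapping with gradual eye adaptation, not the code of any particular game: the Reinhard-style operator, the 0.18 "key" value, the adaptation speed and all names are assumptions chosen for the example.

```python
import math

KEY = 0.18  # mid-grey exposure target; a conventional but arbitrary choice


def tone_map(luminance, exposure):
    """Reinhard-style operator: squeeze any HDR luminance into [0, 1)."""
    scaled = luminance * exposure
    return scaled / (1.0 + scaled)


def adapt(adapted, scene_average, dt, speed=1.5):
    """Move the 'eye' adaptation level smoothly towards the scene average."""
    blend = 1.0 - math.exp(-dt * speed)
    return adapted + (scene_average - adapted) * blend


def look_away(frames=120, dt=1.0 / 60.0):
    """The eye was used to the sun (average 50), then turns to a dim scene."""
    adapted = 50.0
    wall = 0.5  # HDR luminance of a wall that stays in view
    shown = []
    for _ in range(frames):
        adapted = adapt(adapted, scene_average=1.0, dt=dt)
        shown.append(tone_map(wall, KEY / adapted))
    return shown


wall_on_screen = look_away()
print("first frame:", wall_on_screen[0], "last frame:", wall_on_screen[-1])
```

The wall itself never changes, yet its on-screen brightness rises frame by frame as the adaptation level drops, which is the "eyes getting used to it" effect from the animation.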

By the way, one more important thing to note: since the entire graphics pipeline in the GeForce FX now works with at least FP16 precision, even old games (which made heavy use of multitexturing and other techniques that mix pixel colors in the frame) will look better.

- Shader Model 2.0 +

Yes, these are those notorious “shaders” because of which you probably had to go back to the store to exchange your video card, or the game you had just bought. To begin with, let's decide: these shaders ... who needs them at all?

The answer to this question generally depends on the context in which it is asked, since the term “shaders” is ambiguous. As usual, let us start by determining what they are needed for. That is, if the statement “shaders are shading programs” fully describes shaders for you, I strongly recommend that you read at least this:

Very simplified view

Before we move along the pipeline, here is its algorithm for you:

Even if you do not understand a thing about any of this, I suppose you have already guessed that there are two ways to process the scene geometry and render it: with shaders and without. Okay, and now a little history.

What was there before shaders?

At the dawn of 3D rendering, it was decided to strictly standardize the 3D pipeline (aka the “graphics pipeline”). Video cards could thus be regarded as “pixel blasters”, because all they did back then was eat driver commands and spit pixels onto your screen. As a result, game developers were strictly limited to the rendering capabilities that a given video chip provided.

You could see this for yourself in Unreal or Quake II, each of which offers several APIs to choose from (an API being the programming interface for “drawing” with a video card).

So, for example, when you chose the OpenGL API in Quake II, the hardware 3D capabilities of the video chip were used. In this case the video chip itself applied textures to objects and computed the special effects. Everything in the game was beautiful, only the water looked like glass:

John Carmack offered you an alternative: the Software API, which used the central processing unit (CPU) instead of the video card for computing effects and texture mapping. In this case the game could implement absolutely arbitrary special effects, and the video card only displayed ready-made pixels. You did not get to see many colors (it is expensive, after all!), but here the water moved (imitating waves). Note the distortion of the bottom or the wall on the horizon:

Why? It was all about the standardization of the video cards' graphics pipeline. A specific set of features, special effects for example, was hard-wired into each video chip. Exactly this moving-wave effect was impossible to obtain with hardware 3D rendering (you could perhaps get something similar, but not the same).
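To make "arbitrary effect on the CPU" a bit more tangible, here is a toy sketch of a wave distortion. It is not Quake II's actual code; the function name and the amplitude and frequency values are invented. The idea is only that software is free to shift the texture lookup of every pixel by any formula it likes, here a sine wave that changes over time.

```python
import math


def warp(u, v, time, amplitude=0.02, frequency=8.0):
    """Return texture coordinates (u, v) displaced by a travelling sine wave."""
    du = amplitude * math.sin(frequency * v + time)
    dv = amplitude * math.sin(frequency * u + time)
    return u + du, v + dv


# The same pixel fetches its texel from a slightly different place on every frame.
for frame in range(3):
    print(warp(0.5, 0.5, time=frame * 0.1))
```

A fixed-function chip of that era offered no place to plug in such a formula, which is exactly the limitation described above.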

What was missing before shaders?

Absolute power (over the pipeline)! In those years you could use, say, the chip's bump-mapping effect (relief texturing), but the chip would apply it only at the vertices of the model. And this algorithm could not be changed! That is, you get only what the chip can do.

Consider the example of our favorite donut and a DX6-class Voodoo video card that cannot do shaders:

Here is a donut consisting of 20,000 polygons:

Here the Voodoo has put a texture on it:

And here is what happens when the Voodoo applies the relief texturing effect to it:

Beautiful? That is because our donut is made of a great many polygons. But this greatly reduces the rendering speed (FPS), and raising it is what we want first of all. Well, we will have to reduce the number of polygons to 1352:

Great, here it is, our freshly baked donut:

Now the Voodoo lays the relief on it:

See how the relief “drifted” along the edge nearest to you? That is, as the number of polygons decreases, FPS grows, but relief texturing on the Voodoo starts to look worse, because it is applied at the polygon vertices, of which there are now far fewer.

So how do we get a more acceptable result without increasing the number of vertices? To do this, we need to apply bump mapping pixel by pixel. That is impossible on this video card, since it cannot use shaders. Of course, you are still free to do per-pixel bump mapping in software on the CPU (without using the hardware capabilities of the video chip). And that will be oh so slow, you know ...
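The difference between the two approaches can be sketched on a single edge between two vertices. This is a deliberately simplified 1D model with a made-up relief map (`bump_angle`) and plain diffuse lighting, not a description of what any real chip does: per-vertex shading lights only the two end points and interpolates, while per-pixel shading evaluates the relief for every pixel.

```python
import math


def lambert(normal_angle, light_angle):
    """Diffuse brightness for a normal and a light direction given as angles (radians)."""
    return max(0.0, math.cos(normal_angle - light_angle))


def bump_angle(x):
    """Hypothetical relief map: tilt of the surface normal at position x in [0, 1].
    Four ripples fit between the two vertices at x = 0 and x = 1."""
    return 0.6 * math.sin(2.0 * math.pi * 4.0 * x)


def shade_per_vertex(pixels, light_angle):
    """Light only the two vertices and interpolate linearly in between."""
    left = lambert(bump_angle(0.0), light_angle)
    right = lambert(bump_angle(1.0), light_angle)
    return [left + (right - left) * i / (pixels - 1) for i in range(pixels)]


def shade_per_pixel(pixels, light_angle):
    """Evaluate the relief map and the lighting separately for every pixel."""
    return [lambert(bump_angle(i / (pixels - 1)), light_angle) for i in range(pixels)]


per_vertex = shade_per_vertex(33, light_angle=0.5)
per_pixel = shade_per_pixel(33, light_angle=0.5)
print("per-vertex contrast:", max(per_vertex) - min(per_vertex))
print("per-pixel contrast: ", max(per_pixel) - min(per_pixel))
```

With only two vertices the ripples between them are simply never sampled, so the per-vertex result is flat; adding vertices helps, but that is exactly the polygon count we were trying to reduce.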

All this led to shaders being invented in the 3D industry and gradually introduced into the graphics pipeline.

What exactly do shaders give us?

As you have probably guessed, shaders give us a degree of control over exactly how the video chip applies this or that special effect. To be precise, it became possible to apply virtually any special effect using the video chip rather than the CPU. Thus shaders potentially let us render exactly the effects we want. And a lot more besides, but we will not go into that here.

What kind of shaders are they?

There are many versions and types of shaders. Versions are usually denoted as Shader Model x.x (for example, SM 2.0), and the most common shader types are pixel, vertex and geometry. Obviously, the higher the SM version a chip supports, the more absolute the power it gives us. And that power is wielded through programming.

That is, from the game developer's point of view, a shader is a piece of program code written by the developer that tells the video chip what to do with, say, a specific polygon in the scene. In addition, shader programs can evaluate (and in games very often do) arbitrarily complex mathematical expressions whose results are used later. So a shader program is by no means obliged to “shade” anything at all.

Be that as it may, shaders firmly entered our lives and kept developing. In particular, the NV30's support for Shader Model 2.0 (hereinafter SM2.0) is a generic term meaning that the NV30 supports the Pixel and Vertex Shader 2.0 specifications. But NVIDIA did not stop there: the “plus” in the title was meant to give game developers more freedom by extending the DX9 specification into a proving ground.

“All this is very interesting,” you say, “but how are these shaders actually used?” Well, this is where it gets even more interesting :)

With the advent of SM2.0, it became possible to write fairly complex programs with conditions and loops for both shader types (pixel and vertex). The only question was which language you preferred to do it in. In the SM1.x era the choice was small: shaders were written mainly in ARB assembly or DirectX ASM, which, as the names make clear, are low-level and rather hard to learn and use. With the advent of SM2.0, at least three high-level C-like languages appeared in which writing shader programs is much simpler:

- The OpenGL Architecture Review Board developed GLSL;

- NVIDIA developed C for Graphics (and not only for its own hardware);

- Microsoft developed HLSL.

But which shader language should you prefer, and what depends on the choice? Alas, I will not go into such nuances. Let's instead recall how these shaders were implemented in the NV30.

Implementation

Most peaceful discussions about the performance of one device or another sooner or later descend into a holy war on the topic “What is more important: hardware or software?” In fact, however much some people would like to escape reality, hardware without software is just iron :)

By the time the GeForce FX 5800 (NV30) was released, its competitor, the Radeon 9700 Pro (ATI R300), had already been having fun on the market for more than a quarter. It was February 2003. Today such a delay would be akin to death, and that is why we can safely call 2003 none other than the year of NVIDIA.

It went like this: the NV30 comes to the labor exchange, and not a single vacancy on the list requires knowledge of DirectX9. It turns out that the NV30 was not really late for anything ... Indeed, the first well-known heavyweight DX9 games (such as Doom 3, HL2, NFS Underground 2) appeared only in 2004. The long-awaited Stalker came as late as 2007.

My point is that in 2003 reviewers simply had nothing with which to compare the cards' performance. To be honest, while writing this article I studied the picture for a long time, but I never came across a single NV30 review with live DX9 game tests. Mostly, DX9 capabilities were tested in 3DMark ...

And there is one cool story connected with this

If anyone remembers, it started back in the days of the GeForce 4:

...

NVIDIA debuted a new campaign to motivate developers to optimize their titles for NVIDIA hardware at the Game Developers Conference (GDC) in 2002. In exchange for prominently displaying the NVIDIA logo on the outside of the game packaging, NVIDIA offered free access to a state-of-the-art test lab in Eastern Europe, that tested against 500 different PC configurations for compatibility. Developers also had extensive access to NVIDIA engineers, who helped produce code optimized for NVIDIA products

...

en.wikipedia.org/wiki/GeForce_FX_series

The bottom line: all this, like any other charity, gave rise to a fascinating drama ... In short, if you took the camera off the rails in 3DMark 2003 on the NV30 (this requires the developer version), you could easily see the results of such optimizations:

We can assume that the optimizations amounted to “do not draw what cannot be seen from the rails”. Or we can assume that the whole point is poorly debugged optimization of shader code execution in the drivers, which we will also discuss below.

... By the way, ATI was also caught making similar optimizations at the same time. Nevertheless, all of the above could still be called optimizations when compared with how it all began:

ATI Quake III optimizations

As a result, in those years very often one could hear something like:

...

NV30's apparent advantages in pixel processing power and precision might make it better suited for a render farm, which is great for Quantum3D, but these abilities may mean next to nothing to gamers. Developers tend to target their games for entire generations of hardware, and it's hard to imagine many next-gen games working well on the NV30 but failing on R300 for want of more operations per pass or 128-bit pixel shaders.

...

techreport.com/review/3930/radeon-9700-and-nv30-technology-explored/6

And although the same John Carmack could easily argue with this statement, in any case it clearly confirms that hardware is nothing without software. Moreover, it turned out that the NV30's shader performance depended disastrously on how the shader code was written (even the order of instructions matters). It was then that NVIDIA began promoting shader code optimization for the NV30 in every way possible.

Why was this needed? Because the NV30's shader implementation is not that simple:

...

Its weak performance in processing Shader Model 2 programs is caused by several factors. The NV3x design has less overall parallelism and calculation throughput than its competitors. It is more difficult, compared to GeForce 6 and ATI Radeon R3x0, to achieve high efficiency with the architecture due to architectural weaknesses and a resulting heavy reliance on optimized pixel shader code. While the architecture was compliant overall with the DirectX 9 specification, it was optimized for performance with 16-bit shader code, which is less than the 24-bit minimum that the standard requires. When 32-bit shader code is used, the architecture's performance is severely hampered. Proper instruction ordering and instruction composition of shader code is critical for making the most of the available computational resources.

...

en.wikipedia.org/wiki/GeForce_FX_series

That is, the NV30 architecture could not show its full potential when running standard SM2.0 code. But if you shuffle the instructions a little before execution, maybe even replace one with another (hi, 3DMark 2003) ... Still, at NVIDIA they understood: it is much more effective to fight the cause than the consequences.

Take Two. NV35: The Dusk

This was not a new architectural solution, but a very competent improvement. NVIDIA engineers corrected their mistakes so fanatically that they even left their mark on shader history with the officially documented Shader Model 2.0a (SM2.0a), described as the “NVIDIA GeForce FX / PCX-optimized model, DirectX 9.0a”. Of course, it did not become a panacea for the NV35, but it was remembered:

However, because of the extreme complexity of the NV30 microarchitecture, some very interesting nuances remained unchanged in the NV35. Therefore reviewers often listed something like this in its specifications:

Vertex pipeline design: FP Array

Pixel pipeline design: 4x2

These are those very “simplest components” that I mentioned at the beginning of the article. Yes, the terminology is somewhat strange, but that is not the worst of it. In this way marketers were simply trying to tell us the simplest things: how many vertex and pixel pipelines (shader units, if you like) the chip has.

Like any normal person who has read such a specification, my reaction is: well, so how many are there? The thing is that no one knows :) And these would seem to be the most ordinary characteristics of a graphics chip, from which you could immediately draw some conclusions about performance.

Well, yes, with the ATI R300 everything is transparent: 8 pixel and 4 vertex shaders ... OK, you could run GPU-Z and, following the established terminology, it would tell you that, against this, the NV35 has only 4 pixel and 3 vertex shaders. But that would not be entirely accurate, because at the dawn of movie-quality games NVIDIA had its own

alternative look at shaders

When I decided to try to figure it out, simply mind-blowing things began to surface. But since none of this is officially confirmed anywhere, I will state right away that everything below is just a plausible hypothesis.


Let's start with the vertex pipeline, that is, the pipeline with the vertex units.

The specification above apparently mentions the term “design” for a reason:

...

Whereas the GeForce4 had two parallel vertex shader units, the GeForce FX has a single vertex shader pipeline that has a massively parallel array of floating point processors

...

www.anandtech.com/show/1034/3

Yes, it seems that the chip (NV35) was designed to cope with tasks as efficiently as possible, allocating free transistors according to demand. Thus, if the NV35 has three large compartments of vertex units, each compartment can be reconfigured at will: you can combine all forces and use them “as one powerful vertex processor”, or you can parallelize tasks by splitting a compartment into several units. So exactly how many vertex units are in play depends on the situation :) Their minimum number in the NV35 is 3. By the way, even Wikipedia hints at this!

...

The GeForce FX Series runs vertex shaders in an array

...

en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#GeForce_FX_.285xxx.29_Series

Pixel pipeline.

If the information about the vertex pipeline was at least announced by NVIDIA itself, here everything is somewhat more interesting. Initially, NVIDIA claimed that the released NV30 had 8 pixel pipelines, each with one TMU (texture unit). This is called the “8x1 design”. However, almost immediately after the release, intriguing independent investigations were carried out, noting a clear discrepancy between the actual pixel fill rate and the one declared in the specification. I was somewhat surprised to learn about such investigations, but people can be understood: after all, there was nothing else to do with DX9 cards ...

So, after the intrigues and investigations, it turned out that the “design” of the NV30 / NV35 pixel pipelines is actually 4x2 (4 pixel pipelines with 2 TMUs each). NVIDIA itself indirectly confirmed all this with the following statement:

...

"Geforce FX 5800 and 5800 Ultra run at 8 Pixels per clock for all of the following:

a) z-rendering

b) Stencil operations

c) Texture operations

d) shader operations

For most advanced applications (such as Doom3) most of the time is spent in these modes because of the advanced shadowing techniques that use shadow buffers, stencil testing and next generation shaders that are longer and therefore make apps “shading bound” rather than “color fillrate bound”. Only Z + color rendering is calculated at 4 pixels per clock, all other modes (z, stencil, texture, shading) run at 8 pixels per clock. The more advanced the application the less percentage of the total rendering is color, because more time is spent texturing, shading and doing advanced shadowing / lighting"

...

www.beyond3d.com/content/reviews/10/5

But even this confession was not enough for the fanatics: scandals followed and, as a result, shocking interviews with sincere confessions:

...

Beyond3D:

We've seen the official response concerning the pipeline arrangement, and to some extent it would seem that you are attempting to redefine how 'fill-rate' is classified. For instance, you are saying that Z and Stencils operate at 8 per cycle, however both of these are not colour values rendered to the frame buffer (which is how we would normally calculate fill-rate), but are off screen samples that merely contribute to the generation of the final image — if we are to start calculating these as 'pixels' it potentially opens the floodgates to all kinds of samples that could be classed as pure 'fill-rate', such as FSAA samples, which will end up in a whole confusing mess of numbers. Even though we are moving into a more programmable age, don't we still need to stick to some basic fundamental specifications?

Tony Tamasi:

No, we need to make sure that the definitions/specifications that we do use to describe these architectures reflect the capabilities of the architecture as accurately as possible.

Using antiquated definitions to describe modern architectures results in inaccuracies and causes people to make bad conclusions. This issue is amplified for you as a journalist, because you will communicate your conclusion to your readership. This is an opportunity for you to educate your readers on the new metrics for evaluating the latest technologies.

Let's step through some math. At 1600x1200 resolution, there are 2 million pixels on the screen. If we have a 4ppc GPU running at 500MHz, our «fill rate» is 2.0Gp/sec. So, our GPU could draw the screen 1000 times per second if depth complexity is zero (2.0G divided by 2.0M). That is clearly absurd. Nobody wants a simple application that runs at 1000 frames per second (fps.) What they do want is fancier programs that run at 30-100 fps.

So, modern applications render the Z buffer first. Then they render the scene to various 'textures' such as depth maps, shadow maps, stencil buffers, and more. These various maps are heavily biased toward Z and stencil rendering. Then the application does the final rendering pass on the visible pixels only. In fact, these pixels are rendered at a rate that is well below the 'peak' fill rate of the GPU because lots of textures and shading programs are used. In many cases, the final rendering is performed at an average throughput of 1 pixel per clock or less because sophisticated shading algorithms are used. One great example is the paint shader for NVIDIA's Time Machine demo. That shader uses up to 14 textures per pixel.

And, I want to emphasize that what end users care most about is not pixels per clock, but actual game performance. The NV30 GPU is the world's fastest GPU. It delivers better game performance across the board than any other GPU. Tom's Hardware declared «NVDIA takes the crown» and HardOCP observed that NV30 outpaces the competition across a variety of applications and display modes.

…

www.beyond3d.com/content/reviews/10/24
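The fill-rate arithmetic from the answer above is easy to reproduce. The sketch below is just that calculation wrapped in a function; the function name and the `overdraw` parameter (how many times each screen pixel is drawn on average) are mine, and the exact pixel count of 1600x1200 is used instead of the rounded "2 million".

```python
def peak_fps(pixels_per_clock, clock_hz, width, height, overdraw=1.0):
    """Frames per second if the GPU did nothing but write color pixels.
    `overdraw` is how many times each screen pixel is drawn on average."""
    fill_rate = pixels_per_clock * clock_hz  # pixels per second
    return fill_rate / (width * height * overdraw)


print(round(peak_fps(4, 500e6, 1600, 1200)))        # every pixel drawn once
print(round(peak_fps(4, 500e6, 1600, 1200, 10.0)))  # every pixel drawn ten times
```

Even at 4 pixels per clock the pure color fill rate is roughly a thousand frames per second at this resolution, which is the basis of the argument that raw fill rate was not the bottleneck.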

So how did it happen?

Here I can even offer you my vision of the issue ...

Having once again defeated all its competitors in the market, NVIDIA decided to approach the development of its DX9 chip on a grand scale, since it could afford to. The NV3x architecture was to be the most revolutionary and innovative in history:

- NV3x must definitely exceed all the requirements of the DX9 specification, even if it requires a lot of transistors ... even if it is too much!

- NV3x must definitely raise the record bar of the operating frequency to 500 MHz!

- Cards on NV3x must be equipped with DDR2 memory!

In fact, the architectural solution, which exceeded all DX9 requirements and raised the operating frequency bar to 500 MHz, packed as many as 125 million transistors (a record at the time) into its first incarnation. Something had to be done with this army of parts: it ran excessively hot. They decided to use the 0.13 micron process technology (innovative at the time), which would reduce heat output. However, this was not enough, and a vacuum cleaner had to be used for cooling.

Okay, that was fixed, but what to do about memory? There was no official DDR2 specification yet, but Samsung volunteered to help: any whim for your money! As a result, expensive DDR2 chips were manufactured, but here is the trouble: the controller's memory bus turned out to be 128 bits wide. Why is this bad?

This is not very good, because it was originally planned that the NV3x would have 8 honest pixel pipelines with one TMU each (8x1). In that case, however, a problem would arise. As you know, the frame buffer, which stores all displayed pixels, resides in the video card's own memory. We also know that a typical pixel displayed on the screen is 32 bits in size.

If we have 8 pixel pipelines, then we can send up to 8 32-bit pixels (= 256 bits) at a time to our frame buffer. The trouble is that for this we need a memory bus at least 256 bits wide. But we have only a 128-bit memory bus at our disposal, which means that transferring 8 32-bit pixels over this bus takes two clock cycles. In other words, the bus is overloaded, and that means a loss of (imaginary) fps.
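The bus arithmetic from this paragraph, as a tiny sketch. It follows the same simplified model as the text (the bus moves exactly its width in bits per clock, and nothing else competes for it); the function name is made up.

```python
import math


def clocks_per_write(pixels, bits_per_pixel, bus_width_bits):
    """Bus clocks needed to push one batch of finished pixels to the frame buffer,
    in the simplified model where the bus moves `bus_width_bits` per clock."""
    return math.ceil(pixels * bits_per_pixel / bus_width_bits)


print(clocks_per_write(8, 32, 256))  # 8 pipelines on a 256-bit bus
print(clocks_per_write(8, 32, 128))  # 8 pipelines on a 128-bit bus
print(clocks_per_write(4, 32, 128))  # 4 pipelines on a 128-bit bus
```

In this model only the combination of 8 pipelines and a 128-bit bus needs a second clock, which is the mismatch the text describes.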

How to get out of this situation? Very simple: keep only 4 pixel pipelines, but give each one 2 TMUs (4x2). Of course, you are not the only one who now suspects being deceived somewhere:

...

Beyond3D:

Our testing concludes that the pipeline arrangement of NV30, certainly for texturing operations, is similar to that of NV25, with two texture units per pipeline — this can even be shown when calculating odd numbers of textures in that they have the same performance drop as even numbers of textures. I also attended the 'Dawn-Till-Dusk' developer even in London and sat in on a number of the presentations in which developers were informed that the second texture comes for free (again, indicating a 2 texture units) and that ddx, ddy works by just looking at the values in there neighbours pixels shader as this is a 2x2 pipeline configuration, which it is unlikely to be if it was a true 8 pipe design (unless it operated as two 2x2 pipelines!!) In what circumstances, if any, can it operate beyond a 4 pipe x 2 textures configuration, bearing in mind that Z and stencils do not require texture sampling (on this instance its 8x0!).

Tony Tamasi:

Not all pixels are textured, so it is inaccurate to say that fill rate requires texturing.

For Z+stencil rendering, NV30 is 8 pixels per clock. This is in fact performed as two 2x2 areas as you mention above.

For texturing, NV30 can have 16 active textures and apply 8 textures per clock to the active pixels. If an object has 4 textures applied to it, then NV30 will render it at 4 pixels per 2 clocks because it takes 2 clock cycles to apply 4 textures to a single pixel.

…

www.beyond3d.com/content/reviews/10/24
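The texturing numbers from this exchange can be compared for the two "designs" with a small model. It is my own simplification for illustration, not NVIDIA's description of the chip: every pipeline applies up to `tmus_per_pipeline` textures to its pixel per clock, and only textured pixels are modelled (Z and stencil throughput is left out).

```python
import math


def pixels_per_clock(pipelines, tmus_per_pipeline, textures_per_pixel):
    """Textured pixels finished per clock in a simplified model: every pipeline
    applies up to `tmus_per_pipeline` textures to its pixel in one clock."""
    if textures_per_pixel < 1:
        raise ValueError("the model covers textured pixels only")
    clocks = math.ceil(textures_per_pixel / tmus_per_pipeline)
    return pipelines / clocks


# textures per pixel, then throughput of a 4x2 and of an 8x1 arrangement
for textures in range(1, 5):
    print(textures, pixels_per_clock(4, 2, textures), pixels_per_clock(8, 1, textures))
```

In this model the 4x2 arrangement gives "4 pixels per 2 clocks" for four textures, as in the quote, and three textures cost exactly as much as four, which matches the odd-versus-even observation in the question. The two arrangements differ most for single-textured pixels.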

And here I agree with NVIDIA, damn it! In the era of shaders you cannot go by the plain coloring of pixels, because it is not a measure of performance. What matters much more is how many texture operations the chip can perform per unit of time.

And now those who wish can pick up their FX Giraffe (if they have one), look carefully at the chip and say “Everything ingenious is simple!” This whole story reminds me of the logical Happy Gilmore, who was “... the only one who took off his skates to fight!” :)

Be that as it may, if the main goal of NVIDIA's engineers in those days was to leave a vivid mark on history, they undoubtedly succeeded! Moreover, the NV35 gained another interesting feature that competitors had no analogue of: UltraShadow Technology, which, however, will be discussed later :) But let's stop talking about the sublime and come back

From heaven to earth

Fortunately, I don’t have a Dustbuster ... (but you can always look at a museum exhibit). Fortunately, because my ears are worth more. Besides, both it and its more refined brother, the GeForce FX 5900, were out of proportion to the wallet of a simple person with simple needs. For such people NVIDIA offered the GeForce FX 5600 on the NV31 chip, but I did not get one of those either.

And now, 12 years later and quite by chance, I have had, you could say, this office cut-down “foisted” on me:

But at least it doesn't buzz! That is, in addition to the cut-down NV31, the NV34 was also released, which did not stand out in performance and was rather a reject version of the NV31.

Now briefly about the interesting things that did not fit into the NV34 :(

- The NV30 / NV35 had a 4x2 pixel pipeline design; here only 4x1 remained

- Interestingly, Everest and GPU-Z will tell you that it has 2 vertex shaders, while Wikipedia seems to think it has 1. That is the closed FP Array architecture for you ;)

- The NV30 / NV35 introduced paranoid compression of everything that so much as entered the graphics pipeline. This included not only textures, but even the z-buffer and the color values of each pixel in the frame buffer! Well, this complicated 4:1 lossless compression algorithm did not fit into the NV34 :)

- UltraShadow Technology was not included either (GeForce FX 5900 and 5700 models only). But do not be upset, I'll show you everything ;)

- This particular card has a memory bus as wide as 64 bits (yes, like old 2D video cards)

Apparently, almost nothing fit :) But even that was not the worst part. The worst part is a word everyone knows.

Drivers

Right about now, many of you must be smiling. This was probably the most epic implementation since the TNT2.

If you don’t even remember this:

... then you should definitely remember this:

Needless to say, with the advent of DX9, vendors began to punch drivers with incredible speed, and they remembered WHQL only as a last resort. The performance of the same DX9 card could dance so dynamically that sometimes a DX8 card could become its partner. So it was, for example, with the GeForce MX 440 (NV18) , which from a certain version of firewood was emulated with SM2.0 emulation, but later stabbed back. For as something indecent turns: NV18 wins NV34 in shaders, which she does not even have ...

Here, for example, are some good statistics on the most common GeForce FX troubles.

Nevertheless, there was much to be proud of. For instance:

Adaptive texture filtering

By the way, out of the huge number of NV30 reviews, 90% tested with anisotropic filtering combined with trilinear! At first this looked to me like some kind of mass epidemic. I mean, I understand that after such a long wait people wanted to squeeze the card to the last drop, but shouldn't someone have thought about practical sense as well?

However, it all turned out to be much more interesting. Digging deeper, you could learn two things: there are generally accepted standards of texture filtering, and there are “adaptive” things such as Intellisample. Since in this case the algorithm behind a given filtering mode is set by the driver, NVIDIA had yet another playground of possibilities here. Texture filtering performance and quality differed with practically every new driver version...

More interestingly, the reviews of those days often compared how anisotropic filtering, so greedy for fps, worked at ATI and at NVIDIA. And ATI succeeded even more in this field: not only did they really use trilinear filtering together with anisotropic (believe it or not, to save resources!), they even managed to coin a hybrid term: brilinear filtering.
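To make the "hybrid" idea a bit more tangible, here is a minimal sketch of how such a filter is commonly described: full trilinear blends two mip levels across the whole interval between them, while a "brilinear" filter blends only inside a narrow band around the mip transition and falls back to plain bilinear elsewhere. This is an illustration under that assumption, not the actual algorithm of any ATI or NVIDIA driver. The `band` parameter is hypothetical.

```python
import math


def trilinear_weight(lod: float) -> float:
    """Blend factor between mip floor(lod) and floor(lod) + 1 for full trilinear."""
    return lod - math.floor(lod)


def brilinear_weight(lod: float, band: float = 0.25) -> float:
    """Blend factor for a 'brilinear' style filter.

    Blending happens only inside a band of width `band` (as a fraction of a
    mip level, hypothetical default) centered on the mip transition. Outside
    the band the weight is exactly 0 or 1, so a single mip level is enough.
    """
    if not 0.0 < band <= 1.0:
        raise ValueError("band must be in (0, 1]")
    frac = lod - math.floor(lod)
    band_start = 0.5 - band / 2.0
    t = (frac - band_start) / band
    return min(1.0, max(0.0, t))


for lod in (2.1, 2.4, 2.5, 2.6, 2.9):
    print(lod, round(trilinear_weight(lod), 3), round(brilinear_weight(lod), 3))
```

Whenever the weight is exactly 0 or 1, only one mip level has to be sampled, which is where the savings would come from. With `band=1.0` the sketch degenerates into ordinary trilinear filtering.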

And on the whole, ATI was right: if you can't see the difference, why pay more? Sure, if I have a budget card, I will be glad to get an extra 10 frames with no visible loss of quality. Yet for some reason this wonderful principle was also applied to the top chips (NV30 / R300), and that is exactly where we would have liked to get the best picture in the world. “Yes, all these optimizations must be switchable,” reviewers kept repeating at the time. The vendors, however, were adamant, and with each version the algorithms became ever cheaper and more optimized. In response to this couldn't-care-less attitude toward buyers of expensive cards, the following banner was raised:

"As long as this is achieved, there is no «right» or «wrong» way to implement the filtering..."

Of course, I did not test every driver, as I find that an absolutely pointless exercise. If only because in 175.19, the last driver ever released for the GeForce FX, everything is honest:

Supported APIs (and a little about new ways of optimizing for specific video chips)

Ever since all the proprietary closed APIs (with the exception of Direct3D) gave up the ghost in favor of open APIs, video cards were supposed to gradually become more universal. Video chip manufacturers committed from then on to support the well-known APIs first and foremost and to work with them fully. Microsoft was one of the first to take a serious step toward standardization. I repeat: the DX9 specification was supposed to put an end to differences in the results of rendering the same image on cards from different manufacturers.

Great, but in reality surprises were still waiting for us. They could be divided into several types:

- In-house OpenGL extensions.

For example (highlighted):

Of course, this can only half be considered an optimization for one's own products. After all, although an extension often works only on that manufacturer's cards, it is part of the specification. Nothing stops competitors from implementing the same thing in their next series :)

- Game Codepaths. A codepath in a game, as applied to a video chip, is separate code that supports that particular chip in order to get maximum performance out of it in that game. In essence this has always existed in one form or another, but it came into its own only now.

…

Some companies, such as Valve, abandoned Shader Model 2.x on the NV30 and used Shader Model 1.x for these chips in Half-Life 2.

…

ru.wikipedia.org/wiki/GeForce_FX

This is perhaps the most striking example, but there were others. Even today the numerous disputes about Half-Life 2 performance on different video cards have not died down. So if you too are sure that on an FX 5600 (or even an MX 440) you played the very same Half-Life 2 that I played on an RV350, I strongly recommend reading up on it.

- A consequence of porting from a console.

A great example is Tom Clancy’s Splinter Cell:

…

Q: Why does Splinter Cell have a special mode for NV2x/NV3x graphic chips?

A: Splinter Cell was originally developed on XBOX™. Features only available on NV2x chips were used and it was decided to port them to the PC version even if these chips would be the only one able to support them. Considering the lighting system of XBOX™ was well validated, it was easy to keep that system intact.

…

Patchinfo.rtf (from patch 1.3)


Application level

This time I will not measure performance or show you boring screenshots with fps counters. That is not at all what interests me in this generation of video cards. By and large, my interest in video cards ends with this generation, because the GeForce FX were the last cards that had something unique that their competitors lacked. That is what this is about in the first place.

At the same time, I decided to mix things up: let's look at different features in different games :) In case you are wondering, the graphics settings in the games are: “maximum rich colors”! It is cinema after all, isn't it? True, cinema resolutions were not yet available in games back then...

But before we rush off into the shaders, a warning: the pictures are actually much larger than they appear on the page. Where it seemed necessary, I made the picture clickable so that you can open the original, so do not neglect that if you are interested! There will also be a few gifs below. Gifs being gifs, the conversion inevitably loses quality. That has to be accepted, because their main purpose is animation on the web, not a photo exhibit in a museum. But you can click them too!

So the games ...

Doom3

- Renderers: OpenGL 1.5

- Shadowtech: Stenciled Shadow Volumes (via Carmack's Reverse) + UltraShadow Technology

- Shading Language: ARB ASM

UltraShadow... Sounds good, huh? That is what we will talk about here. There is a legend:

…

In fact, some features of the GeForce FX line were designed specifically to speed up Doom3, although other games can use them just as well. One example is the UltraShadow feature implemented in the GeForce FX 5900. But perhaps it is better simply to quote John from a recent interview at QuakeCon 2003:

“The GeForce FX is currently the fastest card we have tested the Doom technology on, and in no small part that is due to close cooperation with nVidia during the development of the algorithms used in Doom. They know that the shadow technique we use will be very important for many games, so nVidia decided to build the corresponding optimizations into its hardware so that it could support the technique properly.”

…

www.thg.ru/graphic/20030924/nvidia_interview-01.html

Although people do like to start claiming things like that.

Firstly, judging by this .plan, John Carmack acted a little more honestly than Gabe Newell. He simply refused to use any proprietary OpenGL extensions when programming the DooM3 engine, focusing on the publicly available ARB_* extensions. Although separate codepaths were written so that the engine would work on old non-DX9 cards, with the new cards everything was fair: they had to fight on equal terms in his engine. Now many of you will protest: but what about this:

…

At the moment, the NV30 is slightly faster on most scenes in Doom than the R300, but I can still find some scenes where the R300 pulls a little bit ahead. The issue is complicated because of the different ways the cards can choose to run the game.

The R300 can run Doom in three different modes: ARB (minimum extensions, no specular highlights, no vertex programs), R200 (full featured, almost always single pass interaction rendering), ARB2 (floating point fragment shaders, minor quality improvements, always single pass).

The NV30 can run DOOM in five different modes: ARB, NV10 (full featured, five rendering passes, no vertex programs), NV20 (full featured, two or three rendering passes), NV30 (full featured, single pass), and ARB2.

...

John Carmack's .plan_2003

content.atrophied.co.uk/id/johnc-plan_2003.pdf

Let's look at this point in more detail. In fact, the experiments with the NV30 went no further. Perhaps John’s eardrums simply could not take it:

...

The current NV30 cards do have some other disadvantages: They take up two slots, and when the cooling fan fires up they are VERY LOUD. I'm not usually one to care about fan noise, but the NV30 does annoy me.

...

John Carmack's .plan_2003

content.atrophied.co.uk/id/johnc-plan_2003.pdf

Be that as it may, only 6 “working” codepaths (and 1 experimental :) made it into the release.

NV10, NV20, R200. As the names imply, these three are specific to particular chips.

Cg, ARB, ARB2. These three are universal. And even though ATI hardware can also handle Cg, this codepath was still disabled (for the fairness of the experiment?). So ARB2 is the most sophisticated renderer here.

As for the NV30 renderer, it remained in the Alpha.
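As a rough illustration of how an engine might choose among such codepaths at startup, here is a small sketch. The codepath names come from the list above. The preference order, the function name `pick_codepath`, and the idea of passing in a set of supported paths are assumptions made for the example, not id Software's actual code.

```python
from typing import Iterable, Optional

# Most capable first. This ordering is an assumption made for the sketch.
PREFERENCE = ("ARB2", "R200", "NV20", "NV10", "ARB")


def pick_codepath(supported: Iterable[str], forced: Optional[str] = None) -> str:
    """Return the codepath to use for a card.

    `supported` is the set of codepaths the card can run (hypothetical input;
    a real engine would derive it from the OpenGL extension list).
    `forced` mimics a user override and is honored only if the card supports it.
    """
    available = set(supported)
    if forced is not None and forced in available:
        return forced
    for name in PREFERENCE:
        if name in available:
            return name
    raise RuntimeError("no usable codepath for this card")


# A DX7-class card versus a DX9-class card, with made-up capability sets.
print(pick_codepath({"ARB", "NV10"}))
print(pick_codepath({"ARB", "NV10", "NV20", "ARB2"}))
print(pick_codepath({"ARB", "NV10", "NV20", "ARB2"}, forced="NV20"))
```

The point of the design is that the universal paths sit in the same list as the chip-specific ones, so a new card with full ARB2 support never needs a path of its own.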

So on the whole everything was fair. On the NV30 / R300, you saw DooM3 like this:

Whereas on something like a GeForce MX 440 (NV18), for example, DooM3 looked like this:

But back to what the .plan did not touch on...

The famous “Carmack's Reverse” algorithm and support for NVIDIA UltraShadow.

This is where it gets interesting. You could read about the algorithm by following the link, but in general “Carmack's Reverse” is nothing more than a way of computing shadows “from the opposite direction”. This is not surprising, by the way, considering how the DooM3 engine itself (id Tech 4) is designed: it is essentially one solid shadow :) And the NV35 has hardware support for exactly such tricks, as do all subsequent NVIDIA cards, by the way. For example, my GTS 450 (highlighted):

But what kind of technology is this?

In simple terms, the UltraShadow technique allows programmers to set lighting boundaries in a 3D scene. This allows you to limit the calculation of effects from each of the light sources. As a result, the amount of computation spent on calculating the effects of lighting and shading is sharply reduced.
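Both ideas, shadows counted “from the opposite direction” and lighting boundaries, can be sketched for a single pixel. The stencil rule below is the commonly described depth-fail scheme: back faces of a shadow volume that fail the depth test increment the stencil, front faces that fail it decrement it, and a non-zero result means the pixel is in shadow. The boundary check follows the idea of a depth bounds test, where the depth already stored for the pixel is compared against a range. This is a CPU toy under those assumptions, not the engine's or the driver's code, and all names in it are made up for the example.

```python
from typing import Iterable, Optional, Tuple

# One shadow-volume face crossing the pixel: (depth along the view ray, is_front_facing)
Face = Tuple[float, bool]


def depth_bounds_pass(stored_depth: float,
                      bounds: Optional[Tuple[float, float]]) -> bool:
    """True if the depth already in the depth buffer lies inside the light's range."""
    if bounds is None:
        return True
    z_min, z_max = bounds
    return z_min <= stored_depth <= z_max


def stencil_depth_fail(stored_depth: float, faces: Iterable[Face],
                       bounds: Optional[Tuple[float, float]] = None) -> Tuple[int, int]:
    """Return (stencil value, number of faces actually processed) for one pixel."""
    if not depth_bounds_pass(stored_depth, bounds):
        return 0, 0  # the light cannot reach this surface: skip all stencil work
    stencil = 0
    processed = 0
    for face_depth, front_facing in faces:
        processed += 1
        depth_test_fails = face_depth > stored_depth  # face lies behind the surface
        if depth_test_fails:
            stencil += -1 if front_facing else 1
    return stencil, processed


volume = [(0.3, True), (0.7, False)]  # one shadow volume spanning depths 0.3..0.7
light_range = (0.25, 0.75)            # hypothetical depth range lit by the light

for surface in (0.2, 0.5, 0.9):
    stencil, work = stencil_depth_fail(surface, volume, light_range)
    print(surface, "shadowed" if stencil != 0 else "lit", "faces processed:", work)
```

For the surface at depth 0.9 the result is the same with or without the bounds, only the work disappears, which matches the idea of a pure performance optimization.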

The UltraShadow II feature pages even showed DooM3 screenshots and assured readers that the technology was being used. In fact, when DooM3 was released, it turned out that this was not quite the case:

...

Anthony 'Reverend' Tan

What's the situation with regards to depth bounds test implementation in the game? Doesn't appear to have any effect on a NV35 or NV40 using the cvar.

John Carmack

Nvidia claims some improvement, but it might require unreleased drivers. It's not a big deal one way or another.

...

web.archive.org/web/20040813015408/http://www.beyond3d.com/interviews/carmack04/

Nevertheless, the missing feature can still be forced on today (and in the latest patch it is even enabled by default). In practice you get only a performance boost and no visual differences. Well, thanks for that at least...

For anyone who wants to see it with their own eyes (r_showshadows 1):

Here's how shadows are calculated without Ultrashadow:

If you enable Ultrashadow:


Need for Speed: Most Wanted

- Renderers: Direct3D9

- Shadowtech: Shadow Mapping

- Shading Language: HLSL

Since we are talking about shadows, how can we not recall the much-hyped NFS: MW? I realize it is a strange choice, but only at first glance...

... In those days it was very popular to say that NFS: MW is a good example of how to emulate HDR on the cheap.

…

Unfortunately, the game's graphics engine does not support “real” HDR rendering with higher-precision formats, as some might think when looking at the bloom and something resembling dynamic tone mapping during gameplay. These are just simple LDR post-processing effects: bloom (overbright), which increases the brightness of light areas, and a pseudo-HDR (fake dynamic tone mapping) effect that dynamically changes the bloom filter parameters so that the result resembles an imitation of how human vision perceives lighting and adapts to different lighting conditions.

The effect is most noticeable when driving out of tunnels or from under bridges. At first the glow of bright surfaces is intense, but the brightness gradually decreases. This resembles HDR rendering and the work of a tone mapping operator, but without a format of at least 16 bits per color you cannot achieve “real” HDR, and some call even the 16-bit formats only medium dynamic range. So it can be said that Need For Speed: Most Wanted uses a clever emulation that, in fact, even reduces the number of simultaneously visible colors.

…

www.ixbt.com/video2/tech_nfsmw.shtml
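The trick described in the quote can be sketched in a few lines: everything stays in the ordinary 0..1 LDR range, and only the strength of the bloom changes over time as a simulated “eye” adapts to the average brightness of the frame. This is a toy model under that reading of the quote, not the game's actual shader. The blur step of a real bloom is left out, and every name and constant here is an assumption made for the example.

```python
from typing import List, Tuple


def fake_hdr_frame(pixels: List[float], adapted: float, rate: float = 0.5,
                   threshold: float = 0.6) -> Tuple[List[float], float, float]:
    """Process one frame of LDR luminance values in the 0..1 range.

    Returns (output pixels, new adapted luminance, bloom gain used).
    """
    average = sum(pixels) / len(pixels)
    # The simulated eye moves part of the way toward the current scene brightness.
    adapted = adapted + (average - adapted) * rate
    # An eye still adapted to darkness means strong glow on bright areas.
    gain = min(1.0, max(0.0, 1.0 - adapted))
    # Bright pass plus additive glow, clamped back into the LDR range.
    out = [min(1.0, p + gain * max(0.0, p - threshold)) for p in pixels]
    return out, adapted, gain


# Driving out of a tunnel: the eye starts adapted to darkness, the scene is bright.
adapted = 0.1
scene = [0.9, 0.8, 0.7]
for frame in range(4):
    out, adapted, gain = fake_hdr_frame(scene, adapted)
    print(frame, round(gain, 3), [round(v, 3) for v in out])
```

The glow is strongest on the first frame and fades as the adapted value catches up, yet no value ever leaves the 0..1 range, which is exactly why the quote calls it an emulation.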

And it would seem to be true. What do we see throughout the game?

Overbright (Bloom):

Motion Blur:

and maybe sometimes we notice World Reflections:

If you really wanted to, you could easily turn the movie outfit (Visual treatment = high):

into elegant shorts (Visual treatment = low):

Still, the accusations of emulation are undeserved here, because that same Bloom (undoubtedly taken as the basis here) is, after all, also an HDR effect. It is just that many other things in this game, such as the rain effect or the shadow detail setting, are available only to owners of video cards more powerful than the NV34.

Well, here we have come back to where we started. The particularly attentive may have noticed a rather disappointing implementation of shadow maps. It is believed that the game simply uses low-resolution shadow maps. Perhaps this was a compromise, perhaps there was not enough time to implement finer shadow settings, or maybe once again it is all about codepaths... Who knows? Who would know better than EA's compatibility check utility:

In total, on the NVIDIA GTS 450 the scene looks like this: