Music and neural interfaces: Encephalophone - neurology of creativity

Not long ago, the media reported the invention of a new musical instrument, the encephalophone, which lets a performer play music using the electrical activity of the brain. In my opinion, it is perhaps the newest and most promising innovation in the field of experimental musical instruments.

Although the news is relatively stale (most media publications date back to July 2017), I am convinced that the topic deserves attention and discussion. Unfortunately, beyond the fact of the invention, the names of the authors, and vague descriptions of how the device works, the short news reports contain little useful information.

I think the appearance of a working encephalophone prototype is comparable in importance to the invention of, say, the theremin. It should also be noted, however, that experiments on this topic and attempts to use electroencephalography as an interface for sound production have been carried out over the past 60 years.

According to the developers, the new instrument can not only change the usual notions of sound production and neural interfaces, but also help people with disabilities. Below the cut is an account of the history of the invention, related developments, the operating principles of the encephalophone, the research results, and the prospects for development.

The first steps of encephalophony: the 1930s and 1940s

The first application of EEG in music dates back to 1934, when Adrian and Matthews noticed the “musicality” of a transformed electroencephalography signal. The name “encephalophone” was first used in 1943 for a device developed by neurophysiologist E. Bevers and physicist and mathematician R. Furth. The scientists' invention was intended for diagnosing neurological diseases, in particular for localizing damaged neurons.

Like the modern musical instrument, the 1943 encephalophone produced particular sounds depending on the electroencephalogram readings, which indicated damage to areas of the cortex, the reticular formation, and other parts of the brain. According to The New York Times of March 2, 1943, Bevers and Furth's invention combined electroencephalography with active sonar technology. Unlike the modern device, the first encephalophone did not allow arbitrary sounds to be produced by “effort of thought.”

Erkki Kurenniemi's DIMI-T electroencephalophone

Another breakthrough that brought a “mental sound production” interface closer was the electroencephalophone created by Erkki Kurenniemi of the Department of Theoretical Physics at the University of Helsinki. Kurenniemi is known mainly for creating a variety of musical devices and synthesizers that were advanced for their time, and as the creator of the first Finnish microprocessor.

In 1970, the talented scientist became managing and technical director of Digelius Electronics Finland, where he initiated the creation of a number of electronic musical instruments with unusual interfaces. The result was a series of bold experiments called DIMI (short for “digital music instrument”). DIMI-O had an optical interface that responded to movement and recognized graphic images of notes. DIMI-S, or the Sexophone, used the electrical conductivity of the skin as a modulator. DIMI-T was controlled by the electrical activity of the brain.

The DIMI-T electroencephalophone, like other devices of this type, was built around an EEG apparatus. The experiment can be considered relatively successful: after long training, the frequencies of the signal that the instrument reproduced could be tuned into harmonious combinations with the notes of the main composition. According to some reports, Kurenniemi tried to use the beta rhythm, that is, the activation response, to control the instrument.

Other studies and experiments

In 1965, composer and experimental musician Alvin Lucier, together with composer John Cage, created a device that made it possible to control percussion instruments using EEG. Information useful for creating the modern instrument also came from the experiments of David Rosenboom, who in the 1970s used an encephalograph as a source of musical and modulating signals.

In the early 2000s, neuropsychologist and futurist Ariel Garten, in collaboration with inventor Steve Mann, developed a neural interface for controlling musical variables under water. The instrument had an original sound but did not allow precise sound production.

An important contribution was made by H. Yuan and B. He, who in 2014 published the review “Brain-computer interfaces using sensorimotor rhythms: current state and future perspectives,” which, among other works, formed the basis of the modern encephalophone.

Contribution of Eduardo Reck Miranda

Brazilian composer and scientist Eduardo Reck Miranda creates neural interfaces and biofeedback systems. Some of his studies aim at devices that turn the electrical activity of the brain into a means of sound production. He also experiments with neural networks that generate music.

Fresh material for continuing this work came in 1999 from the paper by Roberts, Penny, and Rezek on computer control through an EEG-based interface (“Temporal and spatial complexity measures for electroencephalogram based brain-computer interfacing”). Important information was also contained in a related 2000 paper by Pfurtscheller, Neuper, Guger et al. (“Current trends in Graz brain-computer interface (BCI) research”). These publications noted the effectiveness of using changes in the alpha rhythm (PDR, the posterior dominant rhythm of the visual cortex) and the mu rhythm (the motor cortex rhythm) to implement EEG-based computer control interfaces.

Using these developments, his own research on the statistical analysis of the influence of subjective factors on EEG results, and research on the accurate recording of changes in the alpha, beta, and mu rhythms, Miranda managed to create sensors that subsequently formed the basis of the modern encephalophone.

Encephalophone 2017

As I have already noted, the most successful experimental encephalophone is the instrument presented in 2017 by Thomas A. Deuel (Department of Neurology, Swedish Neuroscience Institute, Seattle, Washington, USA), Juan Pampin (Center for Digital Arts and Experimental Media (DXARTS), University of Washington), Jacob Sundstrom (School of Music, University of Washington), and Felix Darvas (Department of Neurosurgery, University of Washington). The results of developing and testing the new biofeedback device were published in Frontiers in Human Neuroscience. Before this device, experiments on using neural interfaces to play music with precise sound production had been unsuccessful.

The researchers used a neural interface controlled by alpha (PDR) rhythms (visual cortex, occipital lobes; characteristic of a wakeful state without physical exertion) and mu rhythms (motor cortex; they accompany a state of complete rest). Reliance on the mu rhythm opens the instrument to patients with conditions such as amyotrophic lateral sclerosis, severe spinal cord injuries, traumatic amputations, various forms of paralysis, and other conditions that limit natural abilities and motor activity.

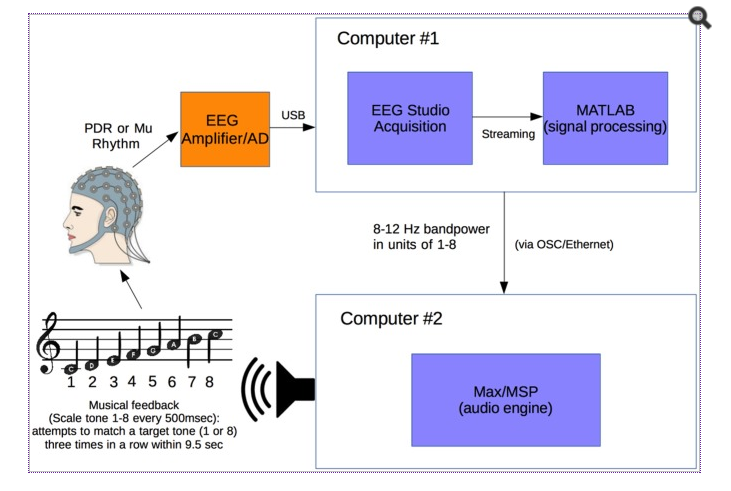

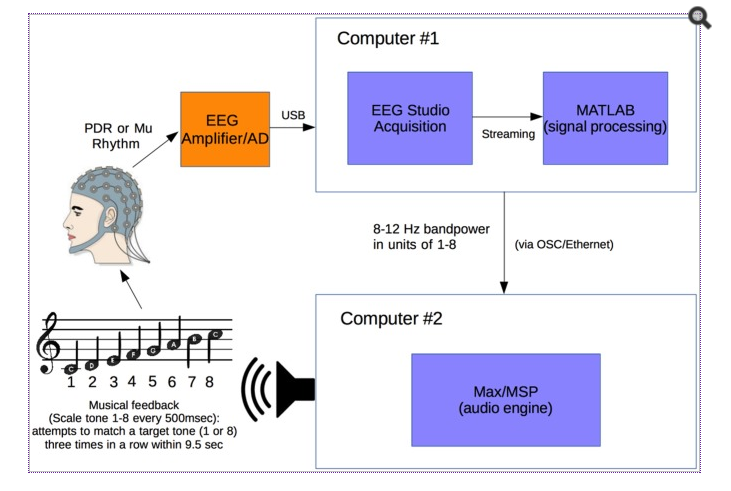

Technically, the device is a 19-channel electroencephalograph with electrodes mounted on a special cap, plus an amplifier. This unit sends the EEG data over USB to a computer that analyzes the frequency and amplitude of the signals and assigns them values from 1 to 8. A second computer converts these values into notes in real time by synthesizing piano sounds.

The principle of the system / Thomas A. Deuel et al., Frontiers in Human Neuroscience, 2017
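The processing chain described above (band power, a value from 1 to 8, then a note) can be sketched in a few lines of Python. This is only an illustration, not the authors' implementation: the 8-12 Hz alpha band is the conventional range, while the linear binning against a calibration range, the C major scale, and the names `band_power`, `power_to_degree`, and `degree_to_midi` are assumptions made for the example.

```python
import cmath
import math

# Assumption: the eight values are mapped to one octave of C major (MIDI note numbers).
C_MAJOR_MIDI = [60, 62, 64, 65, 67, 69, 71, 72]


def band_power(samples, fs, lo_hz, hi_hz):
    """Mean spectral power of `samples` between lo_hz and hi_hz (naive DFT)."""
    n = len(samples)
    total, count = 0.0, 0
    for k in range(1, n // 2):
        freq = k * fs / n
        if lo_hz <= freq <= hi_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(samples))
            total += abs(coeff) ** 2 / n
            count += 1
    return total / count if count else 0.0


def power_to_degree(power, p_min, p_max, levels=8):
    """Linearly bin a power value into an integer 1..levels.

    p_min and p_max are hypothetical calibration bounds; values outside
    the range are clamped to the lowest or highest level.
    """
    if p_max <= p_min:
        raise ValueError("calibration range must be non-empty")
    frac = (power - p_min) / (p_max - p_min)
    frac = min(max(frac, 0.0), 1.0)
    return min(int(frac * levels) + 1, levels)


def degree_to_midi(degree):
    """Translate a value 1..8 into a MIDI note of the assumed scale."""
    return C_MAJOR_MIDI[degree - 1]
```

With a calibration range of 0 to 8, a power of 4 falls into the fifth of eight bins, which this sketch maps to MIDI note 67 (the G above middle C).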

Interestingly, almost no special training is needed to use the instrument. The study involved 15 healthy subjects aged 25 to 60 who had no experience with other musical instruments. They showed very impressive results in controlled, precise sound production.

In the experiment, the subjects, practically without preparation (apart from a short 5-minute calibration), tried to hit 27 target notes accurately within 5 minutes using intuitive “conscious efforts (modulations).” For example, they were asked to produce the notes “do” and “re” of the third and fourth octaves. When a task was completed successfully three times, the program synthesized a major chord and presented the next task; after an incorrect attempt, it played an unpleasant dissonant combination of sounds.

In control mode via the PDR rhythm, the accuracy of sound production was 67.1%, and with the mu rhythm it was 57.1%. These results are significantly higher than random note generation, which would give an accuracy of no more than 19.03%. A study of trained people with musical experience showed a significant increase in accuracy with alpha rhythm control, while with mu rhythm control accuracy remained at the level of untrained subjects.

Percent accuracy for control via PDR and Mu
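To get a feel for how far the reported accuracies lie from chance, the figures above can be plugged into a simple binomial calculation. The sketch below is a hedged illustration only: treating a session as 27 independent attempts by a single performer is an assumption made here for the example (the article gives only overall accuracy figures), and `binom_tail` is a hypothetical helper name.

```python
from math import comb

CHANCE = 0.1903   # upper bound on random-note accuracy quoted above
PDR_ACC = 0.671   # reported accuracy with PDR (alpha) control
MU_ACC = 0.571    # reported accuracy with mu control


def binom_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


n_attempts = 27  # assumption: one session treated as 27 independent attempts
for label, acc in (("PDR", PDR_ACC), ("mu", MU_ACC)):
    hits = round(acc * n_attempts)
    tail = binom_tail(hits, n_attempts, CHANCE)
    print(f"{label}: {acc / CHANCE:.2f}x chance, "
          f"P(>= {hits} hits by guessing) = {tail:.2e}")
```

Both accuracies come out at roughly three to three and a half times the chance level, which is why even a short session would be very unlikely to reach them by random note generation under these assumptions.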

The scientists actively promote the development through live performances with the jazz project Dr. Gyrus and The Electric Sulci Featuring the Encephalophone. The encephalophone part is played by the project lead, Thomas Deuel, himself. The first performance with an experimental prototype, strikingly decorated with fake wires, dates back to 2015 (that is, before the official release of the device).

In 2017, another jazz performance was held with the same lineup.

Summary

Research on and refinement of the instrument are ongoing. The developers are convinced that the capabilities of the encephalophone can be expanded significantly. The most promising direction for further development is the use of the mu rhythm, because of its high social significance for paralyzed patients.

The most detailed account of the features and results of the study is available here.

The traditional promotional paragraph

Until serial production begins, encephalophones will unfortunately not appear in our catalog, but in the meantime it offers a wide range of other devices for playing and creating music.

Photo content used in the post:

www.ncbi.nlm.nih.gov

neuromusic.soc.plymouth.ac.uk

Frontiers in Human Neuroscience
