A neural network animates video game characters in real time

Building a real-time controller for virtual characters is a difficult task, even when a large amount of high-quality motion capture data is available.

This is partly because a character controller must meet many requirements, and it is useful only if it meets all of them. The controller must be able to learn from large amounts of data without requiring much manual preprocessing, and it must run as fast as possible with a small memory footprint.

Although some progress has been made in this area, almost all existing approaches meet one or more of these requirements but not all of them. Matters become even more complicated if the environment contains terrain with many obstacles: the character has to change pace, jump, dodge or climb, following the user's commands.

In such a scenario, we need a system that can learn from a very large amount of motion data, since there are so many different combinations of motion paths and corresponding geometries.

Advances in deep learning could potentially solve this problem: neural networks can learn from large data sets and, once trained, take up little memory and run quickly. The question remains how best to apply neural networks to motion data so as to obtain high-quality results in real time with minimal data processing.

Researchers at the University of Edinburgh have developed a new learning system called the Phase Functional Neural Network (PFNN), which uses machine learning to animate characters in video games and other applications.

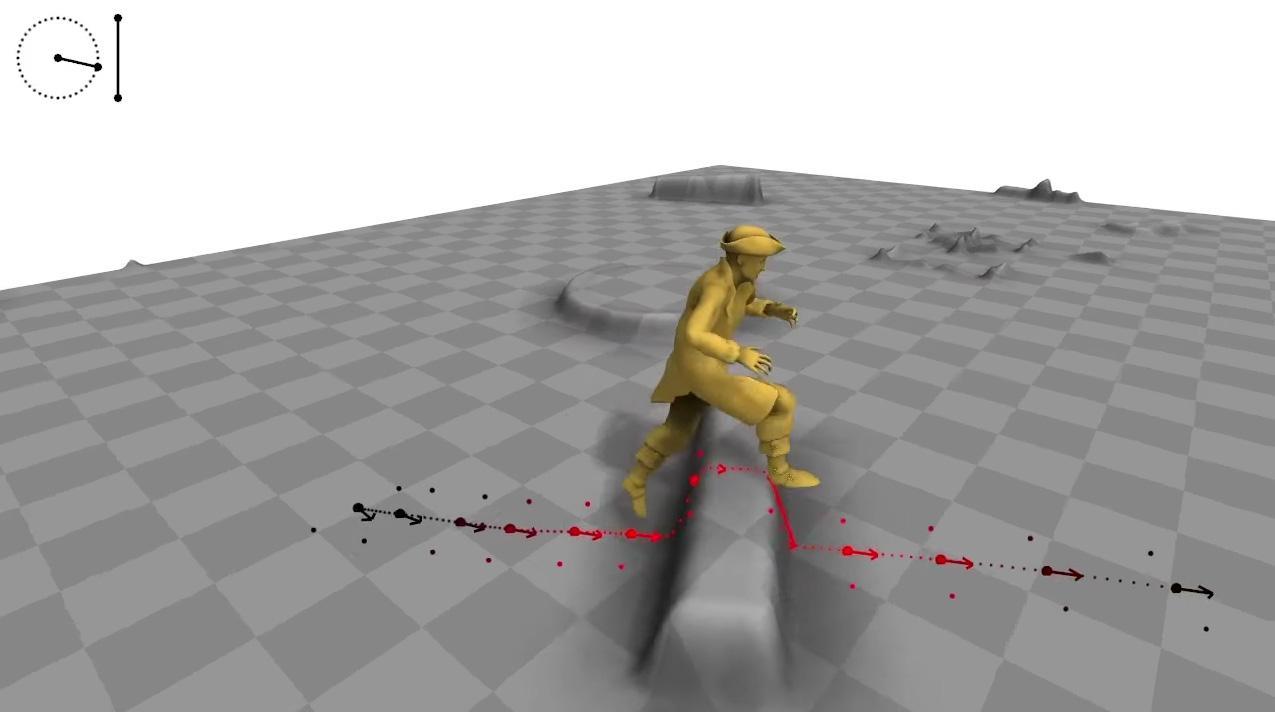

A selection of results using PFNN to traverse rough terrain: the character moves automatically in real time, following the user's control and the geometry of the environment.

Daniel Holden, a researcher at Ubisoft Montreal and the project's lead researcher, described PFNN as a learning framework suited to producing cyclic behavior such as human locomotion. He and his team also designed the network's input and output parameters for real-time character control in complex environments with detailed user interaction.

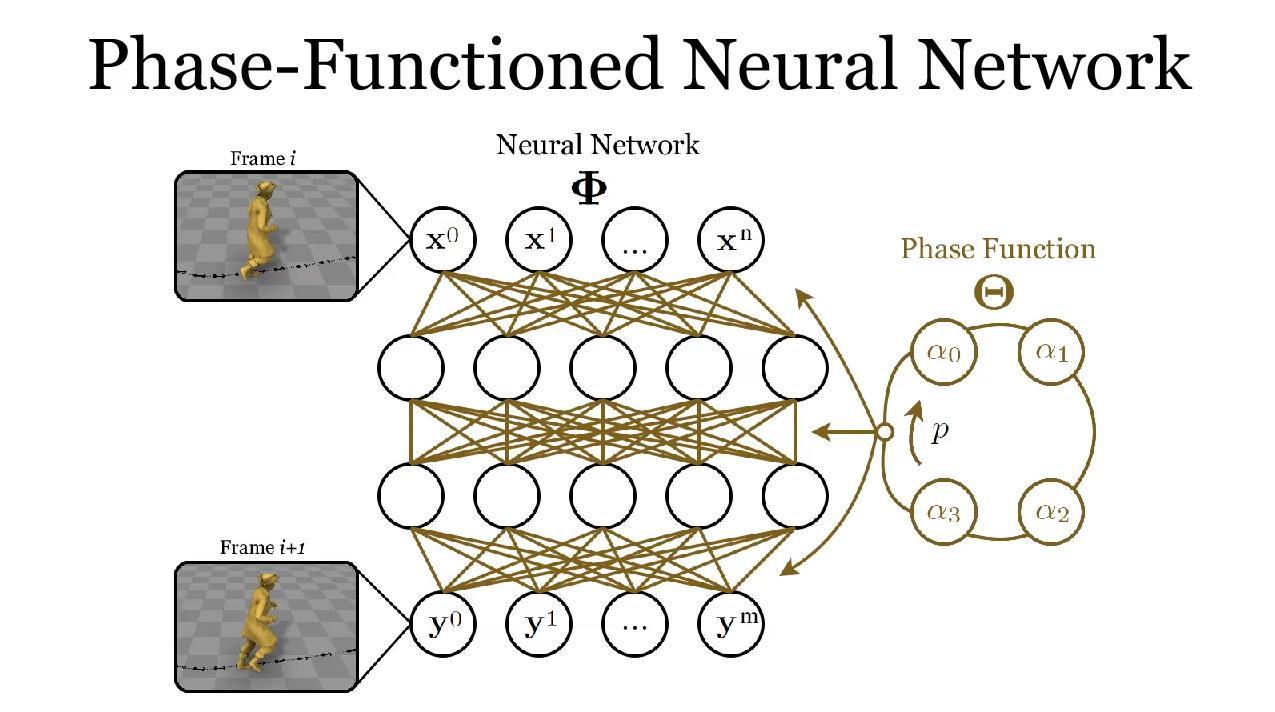

Visual diagram of the PFNN. Shown in yellow is the cyclic phase function, which generates the weights of the regression network that performs the control task.

Despite its compact structure, the network can learn from a large, high-dimensional data set thanks to a phase function that changes smoothly over time and so produces a wide variety of network configurations.
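To make the idea of a phase function concrete, here is a minimal Python sketch of one way such a function could generate network weights. It assumes a small number of control weight sets that are blended by a cyclic cubic Catmull-Rom spline. The names `catmull_rom` and `phase_weights`, the flat-list weight layout and the choice of four control sets are illustrative assumptions, not details stated in this article.

```python
import math


def catmull_rom(y0, y1, y2, y3, mu):
    """Cubic Catmull-Rom interpolation between y1 (mu=0) and y2 (mu=1)."""
    return (
        (-0.5 * y0 + 1.5 * y1 - 1.5 * y2 + 0.5 * y3) * mu ** 3
        + (y0 - 2.5 * y1 + 2.0 * y2 - 0.5 * y3) * mu ** 2
        + (-0.5 * y0 + 0.5 * y2) * mu
        + y1
    )


def phase_weights(control_weights, phase):
    """Blend K weight sets (flat lists of floats) cyclically by phase.

    `control_weights` is a hypothetical list of K equally long lists,
    spaced evenly around the phase circle [0, 2*pi).
    """
    k = len(control_weights)
    # Map the phase onto the K control points.
    t = (phase % (2.0 * math.pi)) / (2.0 * math.pi) * k
    i1 = int(t) % k          # control point just before the phase
    mu = t - int(t)          # fractional position between i1 and i2
    i0, i2, i3 = (i1 - 1) % k, (i1 + 1) % k, (i1 + 2) % k  # wrap around
    return [
        catmull_rom(a, b, c, d, mu)
        for a, b, c, d in zip(
            control_weights[i0],
            control_weights[i1],
            control_weights[i2],
            control_weights[i3],
        )
    ]


# Four toy control sets with one weight each (illustrative values only).
control = [[0.0], [1.0], [2.0], [3.0]]
blended = phase_weights(control, math.pi / 2)
```

Because the indices wrap around, the blended weights vary smoothly and repeat after a full cycle, which is what lets one compact set of control weights stand in for many network configurations.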

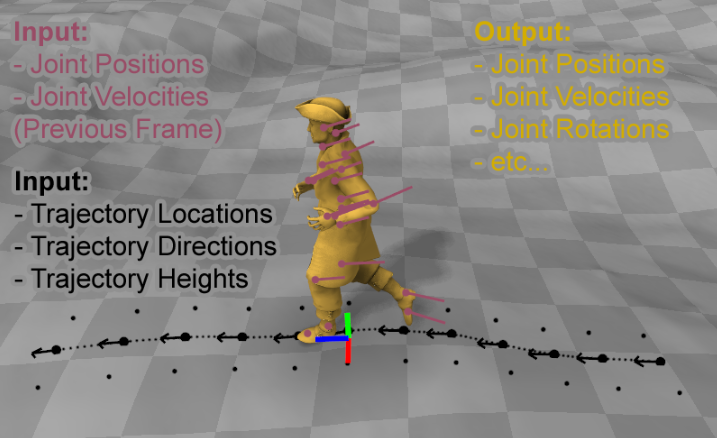

Visualization of the system's input parameterization. Pink shows the positions and velocities of the character's joints from the previous frame. Black shows the subsampled trajectory positions, directions and heights. Yellow shows the character's mesh, deformed using the joint positions and rotations output by the PFNN system.
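As a rough illustration of the parameterization described in the caption, the sketch below flattens the trajectory samples and the previous frame's joint state into a single input vector. The function name `build_input`, the ordering of the fields, the 2D ground-plane trajectory representation and the sample and joint counts in the usage lines are all assumptions made for the example.

```python
from itertools import chain


def build_input(traj_positions, traj_directions, traj_heights,
                joint_positions, joint_velocities):
    """Flatten trajectory samples and previous-frame joint state into one list.

    Assumed layout (hypothetical): trajectory positions and directions are
    (x, z) pairs on the ground plane, heights are scalars, and joint
    positions and velocities are (x, y, z) triples.
    """
    if not (len(traj_positions) == len(traj_directions) == len(traj_heights)):
        raise ValueError("trajectory samples must have matching lengths")
    if len(joint_positions) != len(joint_velocities):
        raise ValueError("joint positions and velocities must match")

    x = []
    x.extend(chain.from_iterable(traj_positions))
    x.extend(chain.from_iterable(traj_directions))
    x.extend(traj_heights)
    x.extend(chain.from_iterable(joint_positions))
    x.extend(chain.from_iterable(joint_velocities))
    return [float(v) for v in x]


# Illustrative sizes only: 12 trajectory samples and 31 joints.
n_samples, n_joints = 12, 31
x = build_input(
    [(0.0, 0.0)] * n_samples,
    [(0.0, 1.0)] * n_samples,
    [0.0] * n_samples,
    [(0.0, 0.0, 0.0)] * n_joints,
    [(0.0, 0.0, 0.0)] * n_joints,
)
```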

The researchers also propose a framework for producing additional PFNN training data in which human motion and environmental geometry are coupled. They claim that, once trained, the system is fast and compact: it needs only a few milliseconds of execution time and megabytes of memory, even when trained on gigabytes of motion data. In addition, PFNN produces high-quality motion without the artifacts found in existing methods.

PFNN is trained end-to-end on a large data set of walking, running, jumping and climbing motions fitted into virtual environments. The system can automatically generate motions in which the character adapts to different geometric conditions, such as walking and running over rough terrain, jumping over obstacles and crouching under low ceilings.

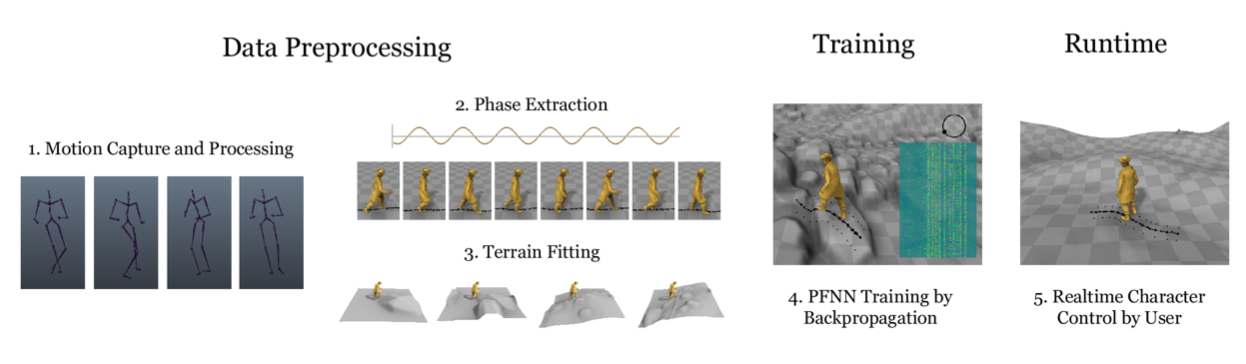

The PFNN system has three consecutive stages: preprocessing, training and execution. In the preprocessing stage, the training data is prepared so that the control parameters the user will later supply can be extracted from it automatically. This process includes fitting terrain height data to the captured motion using a separate database of heightmaps.

In the training stage, PFNN learns from this data to produce the character's motion in each frame, given the control parameters. In the execution stage, the network's input parameters are collected from user input and from the environment and then fed into the system to determine the character's motion.
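The execution stage can be pictured as a per-frame loop. The sketch below is a simplified illustration, not the researchers' implementation: `step` and `dummy_network` are hypothetical names, the network is a stand-in rather than a trained model, and the assumption that the network also returns a phase increment each frame is ours.

```python
import math


def step(network, phase, user_input, terrain_heights, prev_pose):
    """Run one execution-stage frame.

    Collects input from the user and the environment, evaluates the
    network at the current phase, and advances the phase cyclically.
    """
    x = list(user_input) + list(terrain_heights) + list(prev_pose)
    pose, phase_delta = network(x, phase)
    next_phase = (phase + phase_delta) % (2.0 * math.pi)
    return pose, next_phase


def dummy_network(x, phase):
    """Stand-in for a trained model (assumption for this example).

    Echoes the last three input values as the 'pose' and advances the
    phase at a fixed rate of 0.1 radians per frame.
    """
    return x[-3:], 0.1


# Simulate a few frames with constant user input and flat terrain.
pose, phase = [0.1, 0.2, 0.3], 0.0
for _ in range(5):
    pose, phase = step(dummy_network, phase, [1.0, 0.0], [0.0], pose)
```

Keeping the phase in the range from 0 to 2π with the modulo is what makes the control cyclic: after one full locomotion cycle the same network configuration comes around again.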

This control mechanism is ideal for working with characters in interactive scenes in video games and virtual reality systems. The researchers said that, if trained with a non-cyclic phase function, PFNN could easily be used for other tasks, such as modeling punches and kicks.

A research team led by Holden plans to present the new neural network at the SIGGRAPH conference in August.

→ Project page