Adventures with a home Kubernetes cluster

- Translation

Translator's note: The author of this article, Marshall Brekka, is director of systems design at Fair.com, a company that offers an app for leasing cars. In his spare time he likes to apply his extensive experience to “domestic” tasks that are unlikely to surprise any geek (so the question “Why?”, as applied to everything described below, is omitted a priori). In this post, Marshall shares the results of his recent deployment of Kubernetes on... ARM boards.

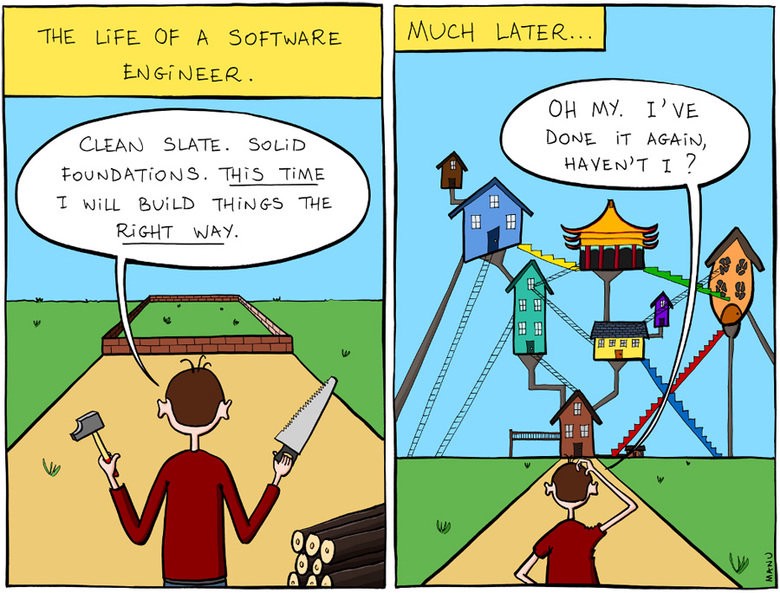

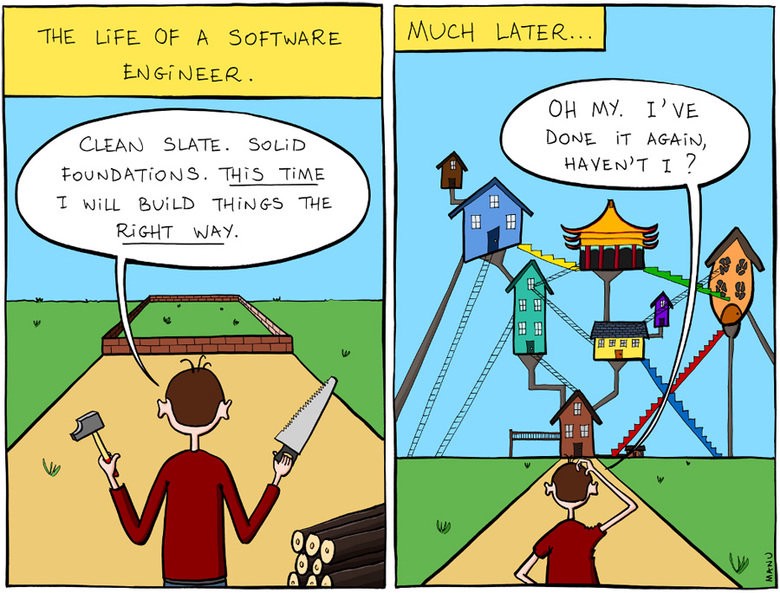

Like many other geeks, over the years I have accumulated a variety of development boards like the Raspberry Pi. And, as with many geeks, they sat gathering dust on a shelf in the belief that they would come in handy someday. For me, that day has finally come!

Over the winter holidays I had several weeks off work, which gave me enough time to take stock of all the hardware I had accumulated and decide what to do with it. Here is what I had:

- 5-disk RAID enclosure with USB3 connection;

- Raspberry Pi Model B (OG model);

- CubbieBoard 1;

- Banana Pi M1;

- HP netbook (2012?).

Of these five items, the only ones I was actually using were the RAID enclosure and the netbook, as a makeshift NAS. However, since the netbook lacked USB3 support, the RAID could not reach its full speed.

Life goals

Since the netbook was far from an ideal host for the RAID, I set myself the following goals for a better setup:

- a NAS with USB3 and gigabit Ethernet;

- a better way to manage the software on the device;

- (bonus) the ability to stream media from the RAID to a Fire TV.

Since none of my devices supported both USB3 and gigabit Ethernet, I unfortunately had to buy something new. I chose the ROC-RK3328-CC board: it had all the necessary specs and reasonable operating system support.

With the hardware question settled (and the board on its way), I moved on to the second goal.

Software management on the device

Some of my past development-board projects failed because I paid too little attention to reproducibility and documentation. When putting together a setup for my needs at the time, I never bothered to write down the steps I took or the links to the blog posts I followed. As a result, when something went wrong months or years later and I tried to fix it, I had no idea how everything had originally been set up.

So I told myself that this time would be different!

And I turned to something I know quite well: Kubernetes.

Although K8s is overkill for such a simple problem, after almost three years of managing clusters with various tools (in-house ones, kops, etc.) at my day job, I know the system very well. Besides, deploying K8s outside a cloud environment, and on ARM devices at that, seemed like an interesting challenge.

I also figured that, since my existing hardware did not meet the NAS requirements, I could at least build a cluster out of it, and perhaps some less resource-hungry software could run on the older devices.

Kubernetes on ARM

At work, I never had the chance to use the kubeadm utility to deploy clusters, so I decided now was the time to try it out. I chose Raspbian as the operating system because it is known for having the best support for the boards I have.

I found a good article on setting up Kubernetes on a Raspberry Pi with HypriotOS. Since I was not sure HypriotOS was available for all of my boards, I adapted those instructions for Debian/Raspbian.

Required components

To begin with, I needed to install the following tools:

- Docker;

- kubelet;

- kubeadm;

- kubectl.

Docker has to be installed with the convenience script (as the Docker documentation specifies for Raspbian):

curl -fsSL https://get.docker.com -o get-docker.sh
sudo sh get-docker.sh

After that, I installed the Kubernetes components following the instructions from the Hypriot blog, adapting them to pin specific versions of all the packages:

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list
apt-get update
apt-get install -y kubelet=1.13.1-00 kubectl=1.13.1-00 kubeadm=1.13.1-00

Raspberry Pi B

The first snag came when I tried to bootstrap a cluster on the Raspberry Pi B:

$ kubeadm init
Illegal instruction

It turned out that ARMv6 support had been dropped from Kubernetes. Oh well, I still had the CubbieBoard and the Banana Pi.
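Because the failure comes down to the CPU architecture, a quick check before running kubeadm init can save some head-scratching. A minimal sketch, assuming that uname -m reports armv6l on ARMv6 boards such as the original Raspberry Pi, and treating only ARMv7, 64-bit ARM and x86-64 as usable; the function name k8s_arch_supported is hypothetical:

```shell
#!/bin/sh
# Hypothetical pre-flight check: succeed only if the given `uname -m` string
# names an architecture the Kubernetes 1.13 binaries are assumed to run on.
k8s_arch_supported() {
  case "$1" in
    armv6l)
      # ARMv6 (e.g. the original Raspberry Pi): kubeadm dies with
      # "Illegal instruction", as seen above.
      return 1 ;;
    armv7l|aarch64|x86_64)
      return 0 ;;
    *)
      # Anything else is unknown here, so err on the side of "no".
      return 1 ;;
  esac
}
```

Usage: k8s_arch_supported "$(uname -m)" || echo "this board cannot run the Kubernetes binaries". Rejecting unknown architectures is a deliberate choice: a false "no" costs a manual check, while a false "yes" costs a confusing crash.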

Banana Pi

At first it looked as if the same steps would go better on the Banana Pi, but the kubeadm init command timed out while waiting for the control plane to come up:

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

When I ran docker ps to see what was happening with the containers, I saw that kube-controller-manager and kube-scheduler had both been running for at least 4-5 minutes, while kube-apiserver had come up only 1-2 minutes earlier:

$ docker ps
CONTAINER ID   COMMAND                  CREATED              STATUS
de22427ad594   "kube-apiserver --au…"   About a minute ago   Up About a minute
dc2b70dd803e   "kube-scheduler --ad…"   5 minutes ago        Up 5 minutes
60b6cc418a66   "kube-controller-man…"   5 minutes ago        Up 5 minutes
1e1362a9787c   "etcd --advertise-cl…"   5 minutes ago        Up 5 minutes

Evidently, the api-server was either dying or being killed and restarted by some other process. Its logs showed a fairly standard startup sequence: a record that it had started listening on the secure port, followed by a long pause and then a flood of TLS handshake errors:

20:06:48.604881 naming_controller.go:284] Starting NamingConditionController
20:06:48.605031 establishing_controller.go:73] Starting EstablishingController
20:06:50.791098 log.go:172] http: TLS handshake error from 192.168.1.155:50280: EOF
20:06:51.797710 log.go:172] http: TLS handshake error from 192.168.1.155:50286: EOF
20:06:51.971690 log.go:172] http: TLS handshake error from 192.168.1.155:50288: EOF
20:06:51.990556 log.go:172] http: TLS handshake error from 192.168.1.155:50284: EOF
20:06:52.374947 log.go:172] http: TLS handshake error from 192.168.1.155:50486: EOF
20:06:52.612617 log.go:172] http: TLS handshake error from 192.168.1.155:50298: EOF
20:06:52.748668 log.go:172] http: TLS handshake error from 192.168.1.155:50290: EOF

Soon after that, the server shut down. Googling led me to this issue, which points to slow cryptographic operations on some ARM devices as a possible cause.
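Every handshake error in the excerpt comes from 192.168.1.155, the same address the api-server itself listens on, which hints that the failing clients are components running on the node itself. A small helper for tallying these errors by source address, assuming the api-server log is piped in on stdin (the function name tls_errors_by_source is hypothetical):

```shell
#!/bin/sh
# Count "TLS handshake error from IP:PORT" lines per source IP.
# Reads log text on stdin and prints "<count> <ip>" lines, most frequent first.
tls_errors_by_source() {
  # Keep only "TLS handshake error from <ip>"; the port is dropped because
  # ':' is not matched by [0-9.].
  grep -o 'TLS handshake error from [0-9.]*' |
    awk '{ print $NF }' |
    sort | uniq -c | sort -rn
}
```

For example: docker logs de22427ad594 2>&1 | tls_errors_by_source, where the container ID is whatever docker ps currently shows for kube-apiserver (it changes on every restart).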

I went further and wondered whether the api-server might be getting too many repeated requests from the scheduler and controller-manager. Moving their YAML files out of the manifests directory tells the kubelet to stop running the corresponding pods:

mkdir /etc/kubernetes/manifests.bak
mv /etc/kubernetes/manifests/kube-scheduler.yaml /etc/kubernetes/manifests.bak/
mv /etc/kubernetes/manifests/kube-controller-manager.yaml /etc/kubernetes/manifests.bak/

The latest api-server logs showed that the process now got further, but it still died after about 2 minutes. Then I remembered that the manifest might contain a liveness probe with timeouts that are too short for such a slow device. So I checked /etc/kubernetes/manifests/kube-apiserver.yaml, and sure enough:

livenessProbe:
  failureThreshold: 8
  httpGet:
    host: 192.168.1.155
    path: /healthz
    port: 6443
    scheme: HTTPS
  initialDelaySeconds: 15
  timeoutSeconds: 15

The pod was killed after 135 seconds (initialDelaySeconds + timeoutSeconds × failureThreshold = 15 + 15 × 8). I increased initialDelaySeconds to 120 and... success! Handshake errors still occurred (presumably from the kubelet), but the server did start:

20:06:54.957236 log.go:172] http: TLS handshake error from 192.168.1.155:50538: EOF
20:06:55.004865 log.go:172] http: TLS handshake error from 192.168.1.155:50384: EOF
20:06:55.118343 log.go:172] http: TLS handshake error from 192.168.1.155:50292: EOF
20:06:55.252586 cache.go:39] Caches are synced for autoregister controller
20:06:55.253907 cache.go:39] Caches are synced for APIServiceRegistrationController controller
20:06:55.545881 controller_utils.go:1034] Caches are synced for crd-autoregister controller
...
20:06:58.921689 storage_rbac.go:187] created clusterrole.rbac.authorization.k8s.io/cluster-admin
20:06:59.049373 storage_rbac.go:187] created clusterrole.rbac.authorization.k8s.io/system:discovery
20:06:59.214321 storage_rbac.go:187] created clusterrole.rbac.authorization.k8s.io/system:basic-user

Once the api-server was up, I moved the YAML files for the controller-manager and scheduler back into the manifests directory, and they started normally too. Now it was time to check whether startup would succeed with all the files left in their original directory: is raising the livenessProbe initial delay enough on its own?

20:29:33.306983 reflector.go:134] k8s.io/client-go/informers/factory.go:132: Failed to list *v1.Service: Get https://192.168.1.155:6443/api/v1/services?limit=500&resourceVersion=0: dial tcp 192.168.1.155:6443: i/o timeout
20:29:33.434541 reflector.go:134] k8s.io/client-go/informers/factory.go:132: Failed to list *v1.ReplicationController: Get https://192.168.1.155:6443/api/v1/replicationcontrollers?limit=500&resourceVersion=0: dial tcp 192.168.1.155:6443: i/o timeout
20:29:33.435799 reflector.go:134] k8s.io/client-go/informers/factory.go:132: Failed to list *v1.PersistentVolume: Get https://192.168.1.155:6443/api/v1/persistentvolumes?limit=500&resourceVersion=0: dial tcp 192.168.1.155:6443: i/o timeout
20:29:33.477405 reflector.go:134] k8s.io/client-go/informers/factory.go:132: Failed to list *v1beta1.PodDisruptionBudget: Get https://192.168.1.155:6443/apis/policy/v1beta1/poddisruptionbudgets?limit=500&resourceVersion=0: dial tcp 192.168.1.155:6443: i/o timeout
20:29:33.493660 reflector.go:134] k8s.io/client-go/informers/factory.go:132: Failed to list *v1.PersistentVolumeClaim: Get https://192.168.1.155:6443/api/v1/persistentvolumeclaims?limit=500&resourceVersion=0: dial tcp 192.168.1.155:6443: i/o timeout
20:29:37.974938 controller_utils.go:1027] Waiting for caches to sync for scheduler controller
20:29:38.078558 controller_utils.go:1034] Caches are synced for scheduler controller
20:29:38.078867 leaderelection.go:205] attempting to acquire leader lease kube-system/kube-scheduler
20:29:38.291875 leaderelection.go:214] successfully acquired lease kube-system/kube-scheduler

Yes, everything works, although devices this old were apparently never meant to run a control plane: the repeated TLS connections slow things down considerably. Either way, I now had a working K8s installation on ARM! Let's move on...
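The 135-second figure follows from the probe settings: the first check happens after initialDelaySeconds, and when the hung api-server makes every check run into its timeout, the kubelet needs failureThreshold consecutive failures before restarting the container. (periodSeconds, 10 by default, drops out here because each timed-out check already takes longer than that.) A small sketch that reproduces this estimate and applies the fix; the function names are hypothetical, and the sed expression assumes GNU sed and a manifest with a single initialDelaySeconds line, as in the livenessProbe excerpt shown earlier:

```shell
#!/bin/sh
# Rough time until the kubelet restarts a container whose liveness probe
# never succeeds: initialDelaySeconds + timeoutSeconds * failureThreshold.
# Assumes every probe runs into its timeout, as with the hung api-server.
probe_kill_seconds() {
  echo $(( $1 + $2 * $3 ))
}

# Rewrite the initialDelaySeconds value in a static pod manifest in place.
# $1 = manifest path, $2 = new delay in seconds.
# The kubelet watches the manifests directory and recreates the pod on change.
set_initial_delay() {
  sed -i "s/^\([[:space:]]*initialDelaySeconds:\)[[:space:]]*[0-9]*/\1 $2/" "$1"
}
```

With the original values, probe_kill_seconds 15 15 8 prints 135; after set_initial_delay /etc/kubernetes/manifests/kube-apiserver.yaml 120, probe_kill_seconds 120 15 8 prints 240, so the api-server gets four minutes instead of just over two.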

RAID mounting

Since SD cards do not hold up well to sustained writes, I decided to put the most write-heavy parts of the file system on more reliable storage, in this case the RAID. I split it into 4 partitions:

- 50 GB;

- 2 × 20 GB;

- 3.9 TB.

I haven't yet settled on a specific purpose for the 20 GB partitions, but I wanted to leave room for future options.
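The bind-mount lines in /etc/fstab only work if their source directories exist on the 50 GB partition. A minimal sketch for creating them and printing matching fstab entries, assuming the partition is already mounted (the function name prepare_bind_mounts is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: for each directory given after the partition mount
# point, create its mirror under that mount point and print an fstab line
# that bind-mounts the mirror over the original path.
prepare_bind_mounts() {
  root=$1
  shift
  for dir in "$@"; do
    mkdir -p "$root$dir"   # a bind mount fails if its source directory is missing
    echo "$root$dir $dir none defaults,bind 0 0"
  done
}
```

For example, prepare_bind_mounts /mnt/root /var/lib/etcd /var/lib/docker prints lines in the same format as the fstab entries in this article, which can be reviewed and then added to /etc/fstab. If Docker is already installed, anything in /var/lib/docker would be hidden once the bind mount is active, so that data may need to be copied over (with Docker stopped) first.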

In /etc/fstab, I set the mount point for the 50 GB partition to /mnt/root and for the 3.9 TB partition to /mnt/raid. Then I bind-mounted the etcd and docker directories onto the 50 GB partition:

UUID=655a39e8-9a5d-45f3-ae14-73b4c5ed50c3 /mnt/root ext4 defaults,rw,user,auto,exec 0 0
UUID=0633df91-017c-4b98-9b2e-4a0d27989a5c /mnt/raid ext4 defaults,rw,user,auto 0 0
/mnt/root/var/lib/etcd /var/lib/etcd none defaults,bind 0 0
/mnt/root/var/lib/docker /var/lib/docker none defaults,bind 0 0

Arrival of the ROC-RK3328-CC

When the new board arrived, I installed the K8s components on it (see the beginning of the article) and ran kubeadm init. A few minutes of waiting, then success, along with the join command to run on the other nodes. Great! No messing around with timeouts.

Since this board would also use the RAID, the mounts had to be set up again. To sum up all the steps:

1. Mounting the disks in /etc/fstab

UUID=655a39e8-9a5d-45f3-ae14-73b4c5ed50c3 /mnt/root ext4 defaults,rw,user,auto,exec 0 0
UUID=0633df91-017c-4b98-9b2e-4a0d27989a5c /mnt/raid ext4 defaults,rw,user,auto 0 0
/mnt/root/var/lib/etcd /var/lib/etcd none defaults,bind 0 0
/mnt/root/var/lib/docker /var/lib/docker none defaults,bind 0 0

2. Installing Docker and the K8s binaries

curl -fsSL https://get.docker.com -o get-docker.sh
sudo sh get-docker.sh
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list
apt-get update
apt-get install -y kubelet=1.13.1-00 kubectl=1.13.1-00 kubeadm=1.13.1-00

3. Setting a unique hostname (important, since several nodes will be added)

hostnamectl set-hostname k8s-master-1

4. Initializing Kubernetes

I skip the mark-control-plane phase because I want to be able to schedule regular pods on this node:

kubeadm init --skip-phases mark-control-plane

5. Installing the network plugin

The Hypriot article is slightly outdated on this point: the Weave network plugin now supports ARM as well:

export KUBECONFIG=/etc/kubernetes/admin.conf
kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

6. Adding node labels

This node is going to run the NAS server, so I label it for the scheduler to use later:

kubectl label nodes k8s-master-1 marshallbrekka.raid=true
kubectl label nodes k8s-master-1 marshallbrekka.network=gigabit

Connect other nodes to the cluster

Setting up the other devices (Banana Pi, CubbieBoard) was just as easy: repeat the first 3 steps (adjusting the disk/flash mount settings to whatever each board has) and run kubeadm join instead of kubeadm init.

Finding Docker containers for ARM

I normally build most of the Docker images I need on a Mac, but for ARM things are somewhat more complicated. I found plenty of articles on using QEMU for this, but in the end concluded that most of the applications I need are already built, and many of them are available from linuxserver.
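The node labels from step 6 are what the scheduler can match on. As a sketch, a pod for a media or NAS application could be pinned to the RAID node with a nodeSelector and given the RAID partition through a hostPath volume. The pod name, the /data mount path and the linuxserver/plex image are illustrative assumptions; whichever image you choose, check that it is published for your board's architecture:

```shell
#!/bin/sh
# Print a minimal Pod manifest that only schedules onto nodes carrying the
# labels from step 6. Pod name, mount path and image are placeholders.
nas_pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: media-server            # hypothetical name
spec:
  nodeSelector:
    marshallbrekka.raid: "true" # label values must be strings, hence the quotes
    marshallbrekka.network: gigabit
  containers:
    - name: media-server
      image: linuxserver/plex   # example image; verify ARM support first
      volumeMounts:
        - name: raid
          mountPath: /data
  volumes:
    - name: raid
      hostPath:
        path: /mnt/raid         # the 3.9 TB partition from the fstab entries
EOF
}
```

Usage: nas_pod_manifest | kubectl apply -f -. Because kubeadm init ran with --skip-phases mark-control-plane, the master is not tainted, so a pod like this can land on it.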

Next steps

Although I have not yet got the initial device setup into the automated/scripted form I would like, I have at least put together a set of basic commands (mounts, docker and kubeadm calls) and documented them in a Git repository. The other applications I use also have their K8s YAML configurations stored in the same repository, so rebuilding the setup from scratch is now very simple. Going forward, I would like to:

- make the master nodes highly available;

- add monitoring/alerting so I know when any component fails;

- change the router's DHCP settings to use the DNS server from the cluster, to make applications easier to discover (who wants to remember internal IP addresses?);

- run MetalLB to expose cluster services (DNS, etc.) to the private network.

P.S. from the translator

Read also on our blog:

- “Kubernetes tips & tricks: about allocating nodes and the load on the web application”;

- “Kubernetes tips & tricks: access to dev sites”;

- “Kubernetes tips & tricks: speeding up the bootstrap of large databases”;

- “11 ways (not) to become a victim of hacking in Kubernetes”;

- “Play with Kubernetes: a service for getting to know K8s in practice”.