How to Solve 90% of NLP Tasks: A Walkthrough on Natural Language Processing


Whoever you are, an established company or a team about to launch its first service, you can always use text data to validate your product, improve it, and expand its functionality.

Natural Language Processing (NLP) is an actively developing scientific discipline engaged in the search for meaning and learning based on textual data.

Over the past year, the Insight team has participated in several hundred projects, combining the knowledge and experience of leading companies in the United States. They summarized the results of this work in an article, the translation of which is now in front of you, and deduced approaches to solving the most common applied problems of machine learning .

We'll start with the simplest method that can work - and gradually move on to more subtle approaches like feature engineering , word vectors, and deep learning.

After reading the article, you will know how to:

The post is written in a walkthrough format; it can also be seen as a review of high-performance standard approaches.

An original Jupyter notebook is attached to the original post , demonstrating the use of all the techniques mentioned. We encourage you to use it as you read the article.

Natural language processing provides exciting new results and is a very broad field. However, Insight identified the following key aspects of practical application that are much more common than others:

Despite the large number of scientific publications and training manuals on the topic of NLP on the Internet, today there are practically no full-fledged recommendations and tips on how to effectively deal with NLP tasks, while considering solutions to these problems from the very basics.

Any machine learning task starts with data — whether it's a list of email addresses, posts, or tweets. Common sources of textual information are:

To illustrate the approaches described, we will use the Disasters in Social Media dataset , kindly provided by CrowdFlower .

We set ourselves the task of determining which of the tweets are related to the disaster event, as opposed to those tweets that relate to irrelevant topics (for example, films). Why do we have to do this? A potential use would be to give officials emergency notification of urgent attention, and the reviews of Adam Sandler’s latest film would be ignored. The particular difficulty of this task is that both of these classes contain the same search criteria, so we will have to use more subtle differences to separate them.

Next, we will refer to the disaster tweets as “disaster,” and the tweets about everything else as “irrelevant . ”

Our data is tagged, so we know which categories tweets belong to. As Richard Socher emphasizes, it is usually faster, easier, and cheaper to find and mark up enough data to model on, rather than trying to optimize a complex teaching method without a teacher.

Instead of spending a month formulating a machine learning task without a teacher, just spend a week marking up the data and train the classifier.

One of the key skills of a professional Data Scientist is knowing what should be the next step - working on a model or data. As practice shows, first it is better to look at the data itself, and only then clean it up.

A clean dataset will allow the model to learn significant attributes and not retrain on irrelevant noise.

The following is a checklist that is used to clear our data (details can be found in the code ).

After we go through these steps and check for additional errors, we can begin to use clean, tagged data to train the models.

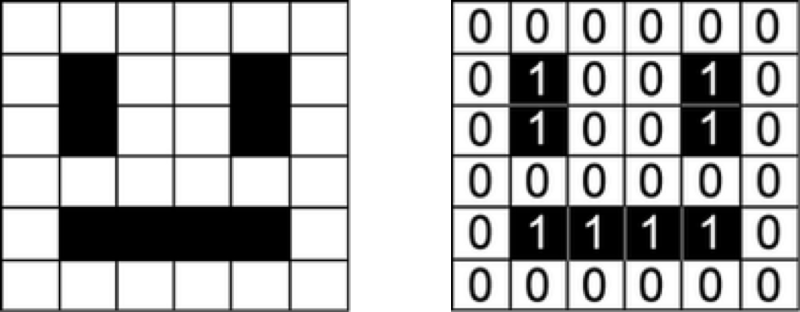

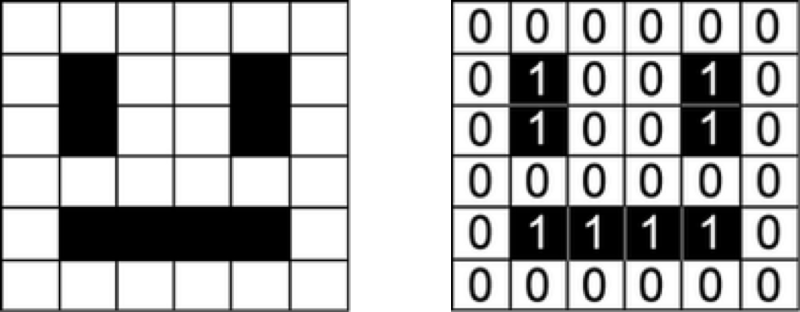

As input, machine learning models accept numerical values. For example, models that work with images take a matrix that displays the intensity of each pixel in each color channel.

A smiling face, represented as an array of numbers

Our dataset is a list of sentences, so in order for our algorithm to extract patterns from data, we must first find a way to present it in such a way that our algorithm can understand it.

The natural way to display text in computers is to encode each character individually as a number (an example of this approach is ASCII encoding ). If we “feed” such a simple representation to the classifier, he will have to study the structure of words from scratch, based only on our data, which is impossible on most datasets. Therefore, we must use a higher level approach.

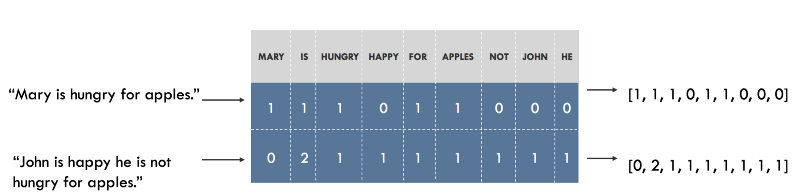

For example, we can build a dictionary of all unique words in our dataset, and associate a unique index with each word in the dictionary. Each sentence can then be displayed in a list, the length of which is equal to the number of unique words in our dictionary, and in each index in this list it will be stored how many times this word appears in the sentence. This model is called the “Bag of words” (Bag of Words ), since it is a mapping completely ignoring the word order of a sentence. Below is an illustration of this approach.

Presentation of sentences in the form of a “Bag of words”. The original sentences are indicated on the left, their presentation is on the right. Each index in vectors represents one specific word.

The Social Media Disasters dictionary contains about 20,000 words. This means that each sentence will be reflected by a vector of length 20,000. This vector will contain mainly zeros , since each sentence contains only a small subset of our dictionary.

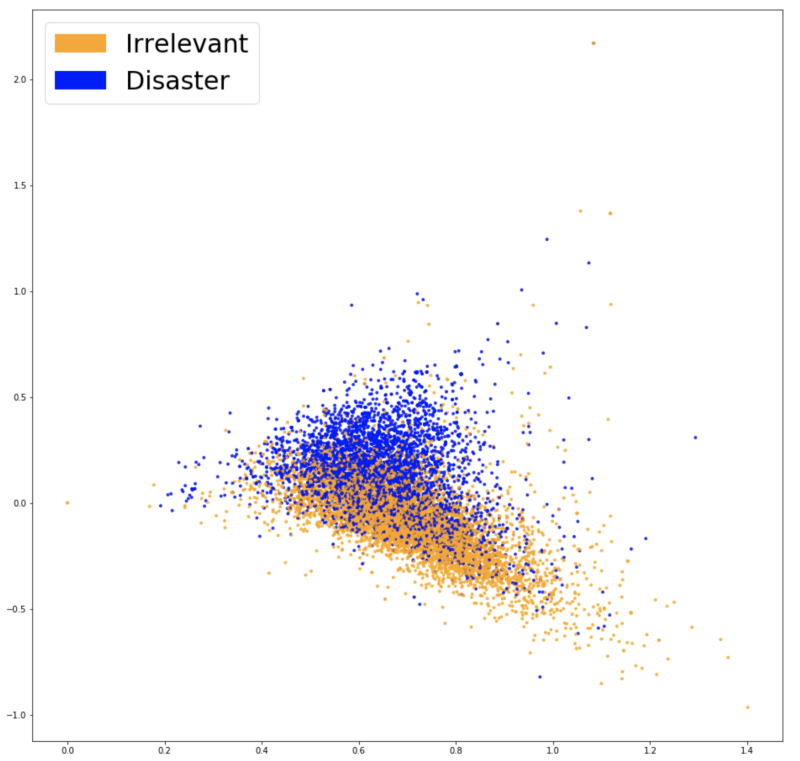

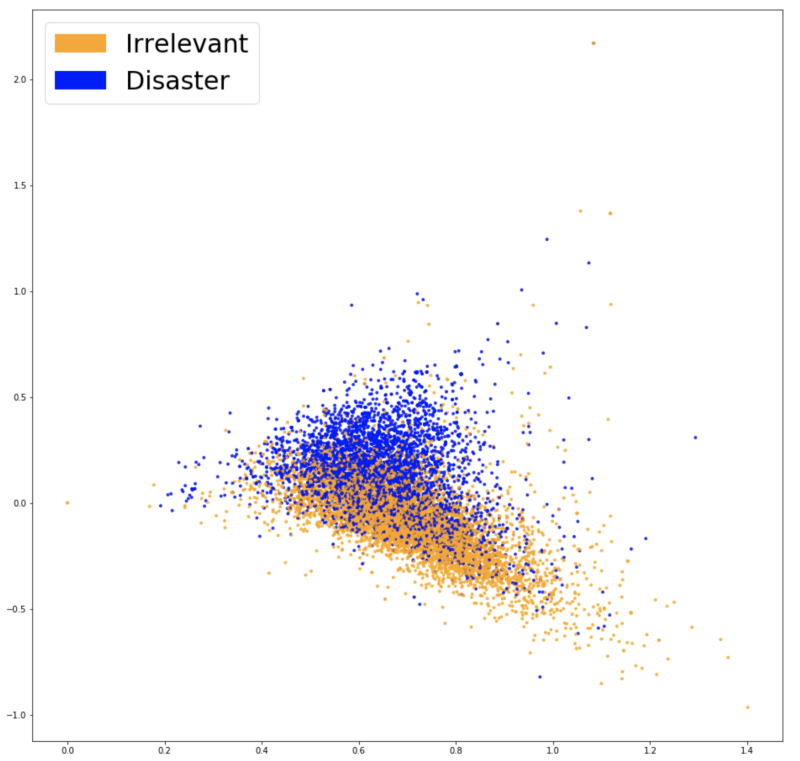

In order to find out whether our vector representations ( embeddings ) capture information relevant to our task (for example, whether tweets are related to disasters or not), you should try to visualize them and see how well these classes are separated. Since dictionaries are usually very large and data visualization of 20,000 measurements is not possible, approaches like the principal component method(PCA) help project data into two dimensions.

Visualization of vector representations for the “bag of words”

Judging by the resulting graph, it does not seem that the two classes are separated as it should — this may be a feature of our representation or simply the effect of reducing the dimension. In order to find out whether the capabilities of the “bag of words” are useful to us, we can train a classifier based on them.

When you are starting a task for the first time, it is common practice to start with the simplest method or tool that can solve this problem. When it comes to data classification, the most common way is logistic regression because of its versatility and ease of interpretation. It is very simple to train, and its results can be interpreted, since you can easily extract all the most important coefficients from the model.

We will divide our data into a training sample, which we will use to train our model, and a test one, in order to see how well our model generalizes to data that I have not seen before. After training, we get an accuracy of 75.4%. Not so bad! Guessing the most frequent class (“irrelevant”) would give us only 57%.

However, even if a result with 75% accuracy would be enough for our needs, we should never use the model in production without trying to understand it.

The first step is to understand what types of errors our model makes and what types of errors we would like to encounter less often in the future. In the case of our example, false positive results classify an irrelevant tweet as a catastrophe, false negative ones classify a catastrophe as an irrelevant tweet. If our priority is the reaction to each potential event, then we will want to reduce our false-negative responses. However, if we are limited in resources, then we can prioritize a lower false-negative rate to reduce the likelihood of a false alarm. A good way to visualize this information is to use an error matrix., which compares the predictions made by our model with real marks. Ideally, this matrix will be a diagonal line going from the upper left to the lower right corner (this will mean that our predictions coincided perfectly with the truth).

Our classifier creates more false negative than false positive results (proportionally). In other words, the most common mistake in our model is the inaccurate classification of catastrophes as irrelevant. If false positives reflect a high cost for law enforcement, then this may be a good option for our classifier.

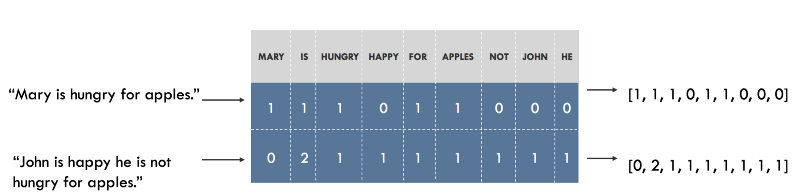

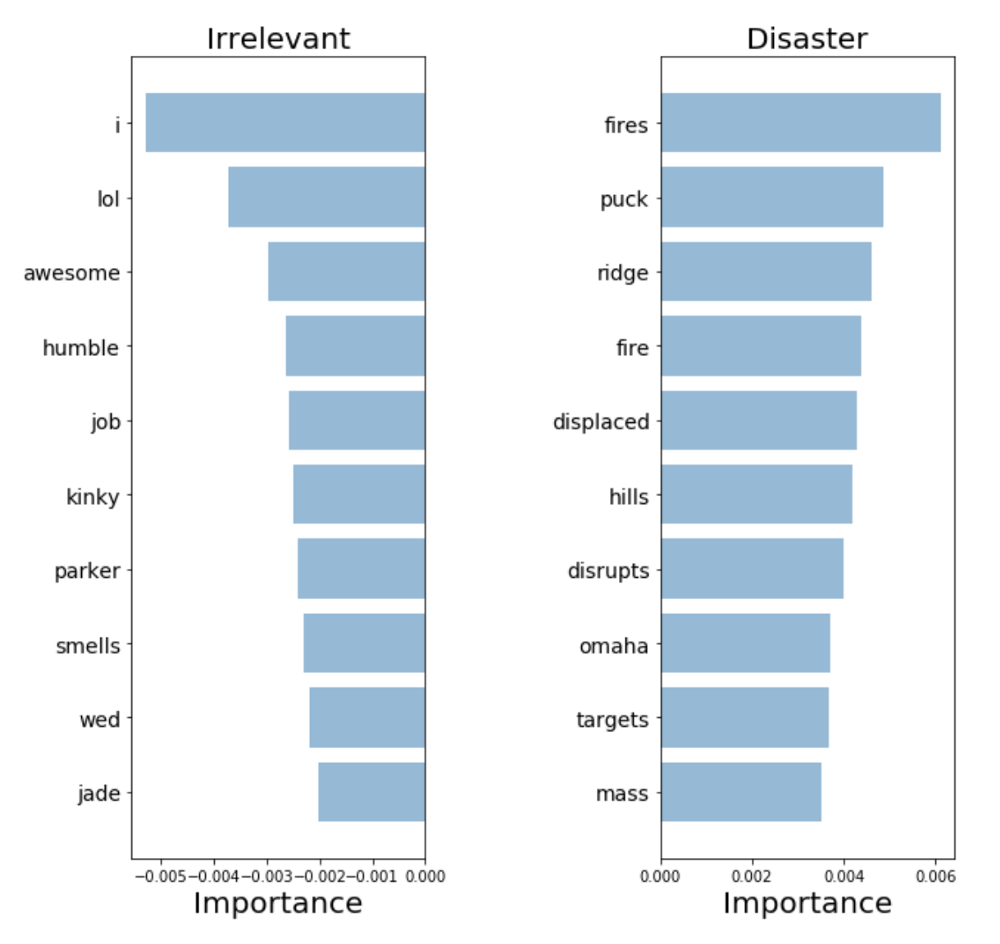

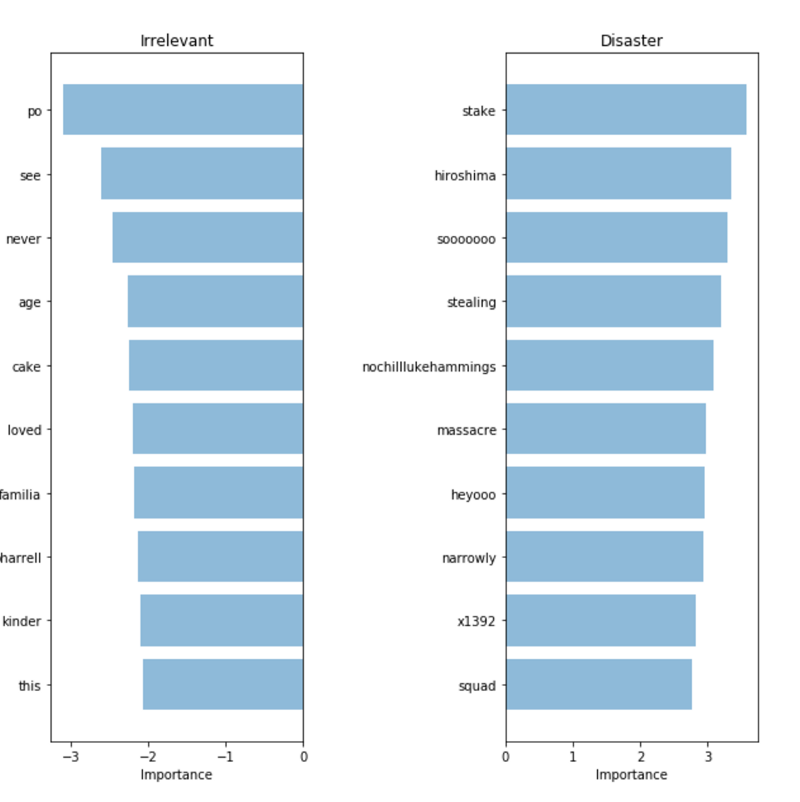

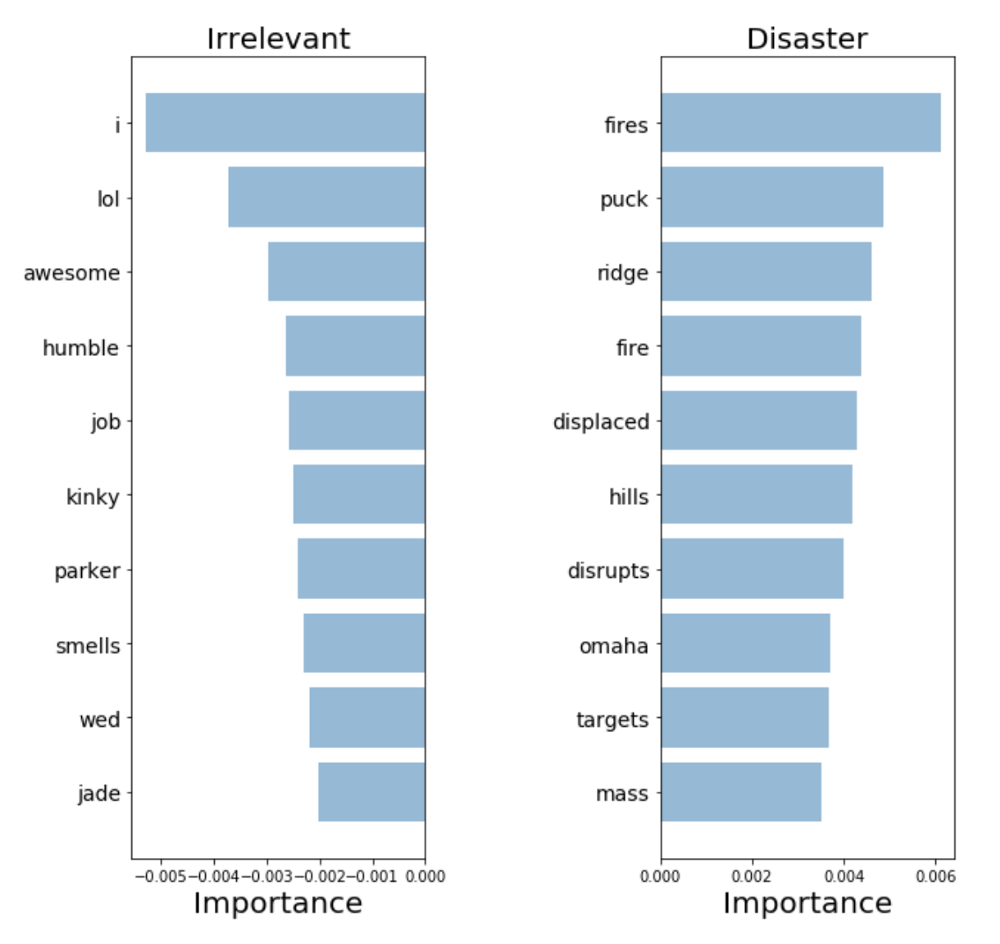

In order to validate our model and interpret its predictions, it is important to look at what words it uses to make decisions. If our data is biased, our classifier will make accurate predictions on the sample data, but the model will not be able to generalize them well enough in the real world. The diagram below shows the most significant words for catastrophe classes and irrelevant tweets. Drawing up charts reflecting the meaning of words is not difficult in the case of using a “bag of words” and logistic regression, since we simply extract and rank the coefficients that the model uses for its predictions.

“Bag of words”: the meaning of words

Our classifier correctly found several patterns ( hiroshima - “Hiroshima”, massacre - “massacre”), but it is clear that he retrained on some meaningless terms (“heyoo”, “x1392”). So, now our “bag of words” deals with a huge dictionary of various words and all these words are equivalent for him. However, some of these words are very common, and only add noise to our predictions. Therefore, we will try to find a way to present sentences in such a way that they can take into account the frequency of words, and see if we can get more useful information from our data.

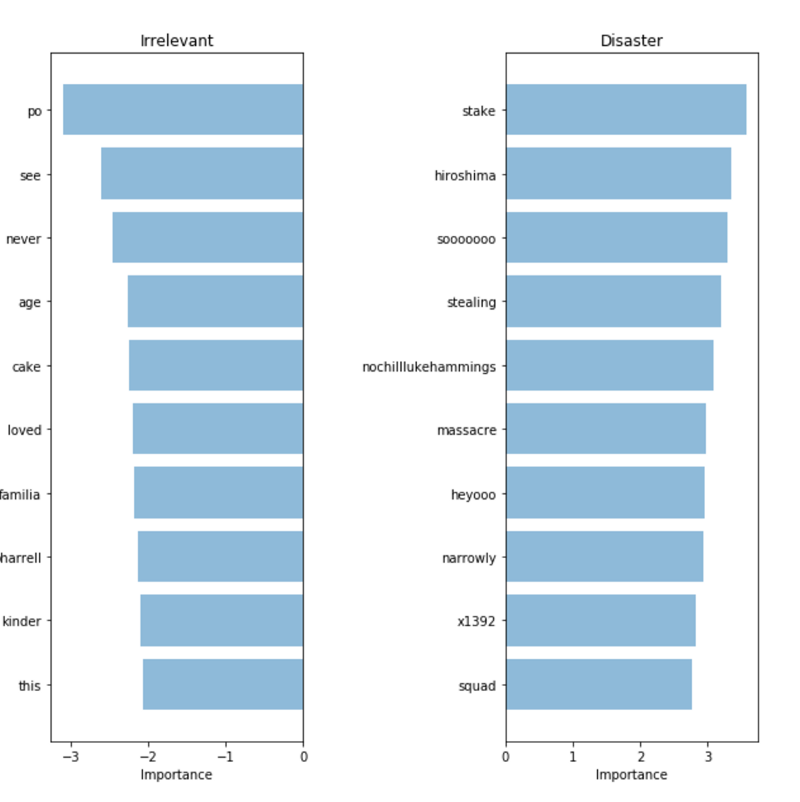

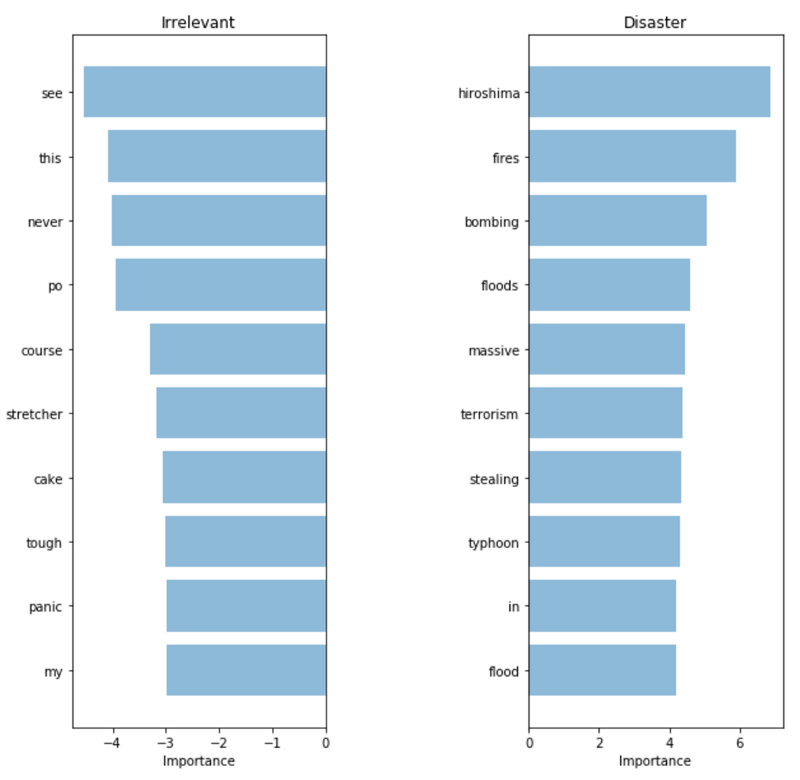

To help our model focus on meaningful words, we can use TF-IDF ( Term Frequency, Inverse Document Frequency ) scoring on top of our “word bag” model. TF-IDF weighs based on how rare they are in our dataset, lowering in priority words that are too common and just add noise. The following is a projection of the principal component method to evaluate our new view.

Visualization of vector representation using TF-IDF.

We can observe a clearer separation between the two colors. This indicates that it should become easier for our classifier to separate both groups. Let's see how our results improve. Having trained another logistic regression in our new vector representations, we get an accuracy of 76.2% .

Very slight improvement. Maybe our model even began to choose more important words? If the result obtained in this part has become better, and we do not allow the model to “cheat”, then this approach can be considered an improvement.

TF-IDF: Significance of Words The words

chosen by the model really look much more relevant. Despite the fact that the metrics on our test set have increased very slightly, we now havemuch more confidence in using the model in a real system that will interact with customers.

Our latest model was able to “grab” the words that carry the greatest meaning. However, most likely, when we release her in production, she will encounter words that were not found in the training sample - and will not be able to accurately classify these tweets, even if she saw very similar words during the training .

To solve this problem, we need to capture the semantic (semantic) meaning of words - this means that it is important for us to understand that the words “good” and “positive” are closer to each other than the words “apricot” and “continent”. We will use the Word2Vec tool to help us match word meanings.

Word2Vec is a technique for finding continuous mappings for words. Word2Vec learns by reading a huge amount of text, and then remembering which word appears in similar contexts. After training on a sufficient amount of data, Word2Vec generates a vector of 300 dimensions for each word in the dictionary, in which words with a similar meaning are located closer to each other.

The authors of the publication on the topic of continuous vector representations of words laid out in open access a model that was previously trained on a very large amount of information, and we can use it in our model to bring knowledge about the semantic meaning of words. Pre-trained vectors can be taken from the repository mentioned in the article by reference .

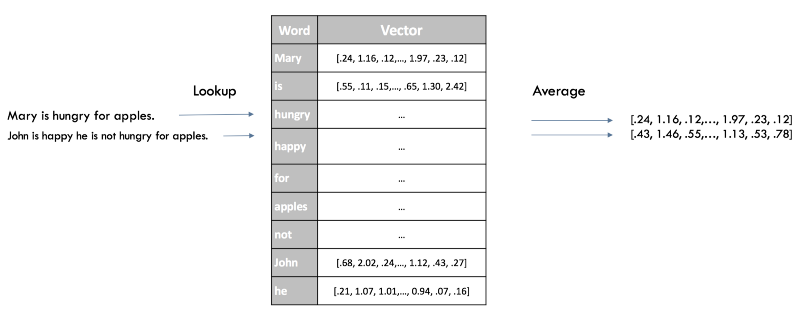

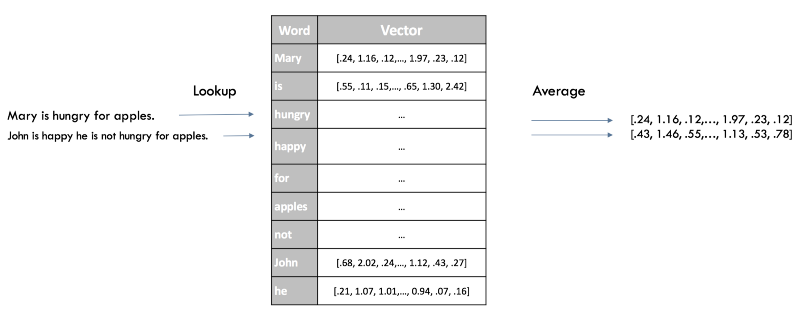

A quick way to get sentence attachments for our classifier is to average Word2Vec ratings for all words in our sentence. This is the same approach as with the “bag of words” earlier, but this time we only lose the syntax of our sentence, while preserving the semantic (semantic) information.

Vector representations of sentences in Word2Vec

Here is a visualization of our new vector representations after using the listed techniques:

Visualization of vector representations of Word2Vec.

Now the two groups of colors look even more separated, and this should help our classifier to find the difference between the two classes. After training the same model for the third time (logistic regression), we get an accuracy of 77.7% - and this is our best result at the moment! It is time to study our model.

Since our vector representations are no longer represented as a vector with one dimension per word, as in previous models, it is now harder to understand which words are most relevant to our classification. Despite the fact that we still have access to the coefficients of our logistic regression, they relate to 300 dimensions of our investments, and not to word indices.

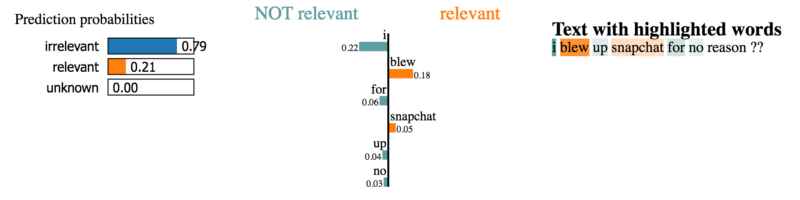

For such a small increase in accuracy, a complete loss of the ability to explain the operation of the model is too much a compromise. Fortunately, when working with more complex models, we can use interpreters like LIME , which are used to get some idea of how the classifier works.

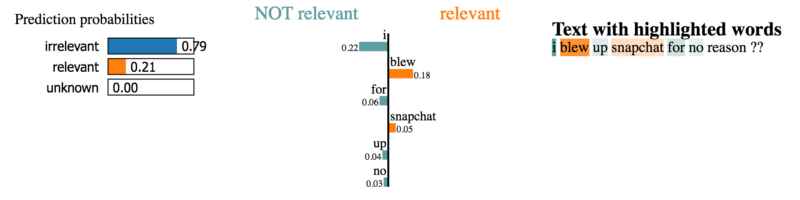

LIME is available on Github as an open package. This black box-based interpreter allows users to explain the decisions of any classifier using one specific example by changing the input (in our case, removing a word from a sentence) and observing how the prediction changes.

Let's take a look at a couple of explanations for the suggestions from our dataset.

The correct words for disasters are selected for classification as “relevant”.

Here, the contribution of words to the classification seems less obvious.

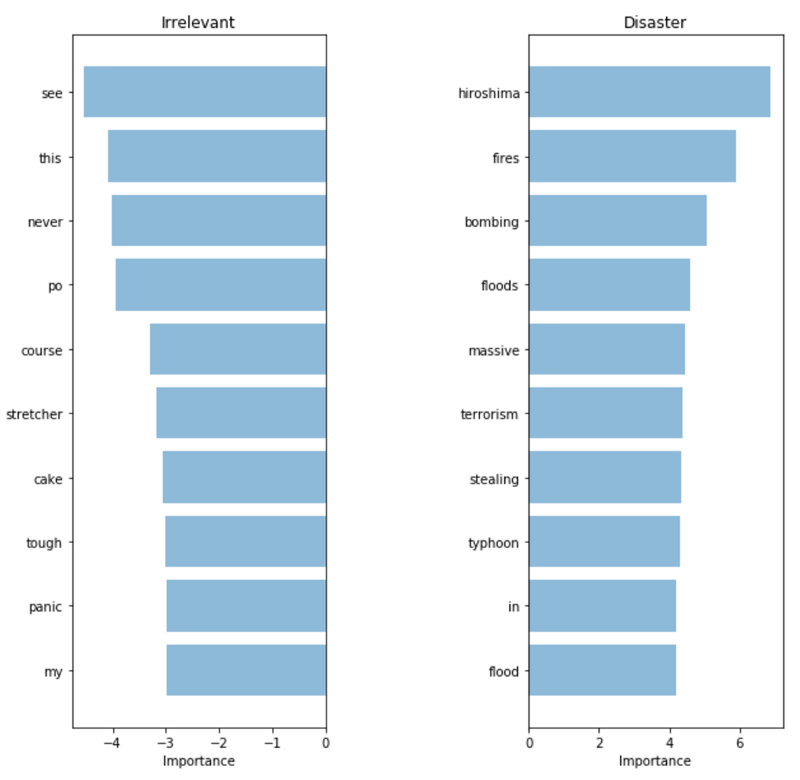

However, we don’t have enough time to explore thousands of examples from our dataset. Instead, let's run LIME on a representative sample of test data, and see which words are found regularly and contribute most to the end result. Using this approach, we can obtain estimates of the significance of words in the same way as we did for previous models, and validate the predictions of our model.

It seems that the model picks up highly relevant words and makes understandable decisions accordingly. Of all the models so far, it selects the most relevant words, so it is the one we would be most comfortable deploying to production.

We have covered fast and efficient approaches for generating compact sentence embeddings. However, by discarding word order we throw away all the syntactic information in our sentences. If these methods do not give sufficient results, you can use a more complex model that takes whole sentences as input and predicts labels without the need for an intermediate representation. A common way to do this is to treat a sentence as a sequence of individual word vectors, using either Word2Vec or more recent approaches such as GloVe or CoVe. That is what we will do next.

A highly efficient end-to-end architecture, with no additional pre-processing or post-processing (source)

Convolutional neural networks for sentence classification (CNNs for Sentence Classification) train very quickly and work well as an entry-level deep learning architecture. Although convolutional neural networks (CNNs) are mostly known for their performance on image data, they show excellent results on text-related tasks and are usually much faster to train than most complex NLP approaches (e.g. LSTM networks and encoder/decoder architectures). This model preserves word order and learns valuable information about which word sequences are predictive of our target classes. Unlike the previous models, it can tell the difference between “Alex eats plants” and “Plants eat Alex.”
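To make the word-order point concrete, here is a minimal NumPy sketch of the core CNN operation on text: a filter slides over windows of consecutive word vectors, followed by ReLU and max-over-time pooling. It is not the architecture from the notebook. The function name `conv_max_pool`, the three-dimensional toy embeddings, and the single hand-written filter are all assumptions made for illustration; in a real model the filters are learned.

```python
import numpy as np

def conv_max_pool(embedded, filters):
    """Slide each filter over windows of consecutive word vectors.

    embedded: array of shape (seq_len, dim), one row per word.
    filters:  array of shape (n_filters, width, dim).
    Returns one max-pooled feature per filter, shape (n_filters,).
    Assumes seq_len >= width.
    """
    n_filters, width, dim = filters.shape
    seq_len = embedded.shape[0]
    # Stack every window of `width` consecutive words: (n_windows, width, dim)
    windows = np.stack([embedded[i:i + width] for i in range(seq_len - width + 1)])
    # Dot product of every window with every filter: (n_windows, n_filters)
    activations = np.einsum("nwd,fwd->nf", windows, filters)
    activations = np.maximum(activations, 0.0)  # ReLU
    return activations.max(axis=0)              # max-over-time pooling

# Hypothetical toy embeddings (real word vectors have hundreds of dimensions)
toy_vectors = {
    "alex":   np.array([1.0, 0.0, 0.0]),
    "eats":   np.array([0.0, 1.0, 0.0]),
    "plants": np.array([0.0, 0.0, 1.0]),
}
# One hand-written filter of width 2 that responds to "alex" followed by "eats"
filters = np.array([[[1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0]]])

sentence_a = np.stack([toy_vectors[w] for w in ["alex", "eats", "plants"]])
sentence_b = np.stack([toy_vectors[w] for w in ["plants", "eats", "alex"]])

feature_a = conv_max_pool(sentence_a, filters)
feature_b = conv_max_pool(sentence_b, filters)
```

Both toy sentences contain the same words, so averaging their vectors gives identical results, while the convolutional feature differs because the filter sees words in context.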

Training this model does not require much more effort than the previous approaches (see the code), and the resulting model performs better than the previous ones, reaching an accuracy of 79.5%. As with the models we reviewed earlier, the next step is to explore and explain its predictions using the methods described above, to make sure it really is the best model to offer to users. At this point, you should feel confident enough to handle the next steps yourself.

So, a summary of the approach that we have successfully put into practice:

We applied these approaches to a specific example, using models tailored to understanding and using short texts such as tweets; however, the same ideas are widely applicable to many different tasks.

Natural Language Processing (NLP) is an actively developing scientific discipline concerned with extracting meaning from text data and learning from it.

How can this article help you?

Over the past year, the Insight team has taken part in several hundred projects, drawing on the knowledge and experience of leading companies in the United States. They summarized the results of this work in an article, the translation of which is now in front of you, and derived approaches to solving the most common applied machine learning problems.

We will start with the simplest method that could work and gradually move on to more nuanced approaches such as feature engineering, word vectors, and deep learning.

After reading the article, you will know how to:

- collect, prepare, and inspect data;

- build simple models, and, if necessary, make the transition to deep learning;

- interpret and understand your models to make sure they are capturing information, not noise.

The post is written in a walkthrough format; it can also be seen as a review of high-performance standard approaches.

The original post is accompanied by a Jupyter notebook demonstrating all the techniques mentioned. We encourage you to run it as you read the article.

Using machine learning to understand and use text

Natural language processing provides exciting new results and is a very broad field. However, Insight identified the following key aspects of practical application that are much more common than others:

- Identifying different cohorts of users or customers (for example, predicting churn, customer lifetime value, product preferences).

- Accurate detection and extraction of various categories of reviews (positive and negative opinions, references to individual attributes like clothing size, etc.)

- Classification of the text in accordance with its meaning (request for basic help, urgent problem).

Despite the large number of NLP papers and tutorials available online, there are practically no comprehensive guidelines and tips on how to tackle NLP tasks effectively, starting from the very basics.

Step 1: Collect Your Data

Sample data sources

Any machine learning task starts with data — whether it's a list of email addresses, posts, or tweets. Common sources of textual information are:

- Product reviews (Amazon, Yelp and various app stores).

- Content created by users (tweets, Facebook posts, questions on StackOverflow).

- Diagnostic information (user requests, support tickets, chat logs).

Social Media Disasters dataset

To illustrate the approaches described, we will use the Disasters in Social Media dataset, kindly provided by CrowdFlower.

The authors examined over 10,000 tweets that were selected using various search queries such as “on fire”, “quarantine” and “pandemonium”. They then noted whether the tweet was related to a disaster event (as opposed to jokes using these words, movie reviews, or anything not related to disasters).

Our task is to determine which tweets are about a disaster event, as opposed to tweets about irrelevant topics (for example, films). Why would we want to do this? One potential application would be to notify officials exclusively about emergencies that need urgent attention, while ignoring reviews of the latest Adam Sandler film. A particular challenge of this task is that both classes contain the same search terms that were used to find the tweets, so we will have to rely on subtler differences to tell them apart.

From here on, we will refer to tweets about disasters as “disaster” and to tweets about anything else as “irrelevant”.

Labels

Our data is labeled, so we know which category each tweet belongs to. As Richard Socher emphasizes, it is usually faster, simpler, and cheaper to find and label enough data to train a model on than to try to optimize a complex unsupervised method.

Instead of spending a month formulating an unsupervised machine learning problem, just spend a week labeling data and train a classifier.

Step 2. Clean your data

Rule number one: “Your model can only become as good as your data.”

One of the key skills of a professional Data Scientist is knowing what should be the next step - working on a model or data. As practice shows, first it is better to look at the data itself, and only then clean it up.

A clean dataset will allow the model to learn meaningful features and not overfit to irrelevant noise.

The following is a checklist used to clean our data (details can be found in the code).

- Delete all irrelevant characters (for example, any non-alphanumeric characters).

- Tokenize the text by dividing it into individual words.

- Remove irrelevant words - for example, Twitter mentions or URLs.

- Convert all characters to lower case so that the words "hello", "Hello" and "HELLO" are treated as the same word.

- Consider merging misspelled or alternatively spelled words into a single form (for example, “cool” / “kewl” / “cooool”).

- Consider lemmatization, that is, reducing the various forms of a word to its dictionary form (for example, “be” instead of “am”, “are”, “is”).

After we go through these steps and check for additional errors, we can begin to use clean, tagged data to train the models.
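The core of the checklist above can be sketched in a few lines of standard-library Python. This is a minimal illustration rather than the code from the notebook: the function name `clean_and_tokenize` and the regular expressions are assumptions made for this example, and the optional steps (merging misspellings, lemmatization) are left out.

```python
import re

# Hypothetical patterns for this sketch; a real pipeline may need more cases.
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
NON_ALNUM_RE = re.compile(r"[^a-z0-9\s]")

def clean_and_tokenize(text):
    """Lowercase, drop URLs and @mentions, strip other symbols, split into words."""
    text = text.lower()
    # URLs and mentions are removed first, while their punctuation is still intact.
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    # Everything that is not a lowercase letter, digit, or whitespace becomes a space.
    text = NON_ALNUM_RE.sub(" ", text)
    # Whitespace tokenization: split on runs of spaces.
    return text.split()

tokens = clean_and_tokenize(
    "Forest FIRE near La Ronge, Sask. @user http://t.co/abc123 #wildfire"
)
print(tokens)
```

Note that the order of operations matters: stripping punctuation before removing URLs would leave fragments such as "http" and "t" behind as tokens.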

Step 3. Find a good data representation

As input, machine learning models accept numerical values. For example, models that work with images take a matrix that displays the intensity of each pixel in each color channel.

A smiling face, represented as an array of numbers

Our dataset is a list of sentences, so for our algorithm to extract patterns from the data, we first need to represent it in a form the algorithm can understand.

One-hot encoding ("Bag of words")

The natural way to represent text in computers is to encode each character individually as a number (ASCII encoding is an example of this approach). If we fed such a simple representation to a classifier, it would have to learn the structure of words from scratch, based only on our data, which is impossible for most datasets. Therefore, we must use a higher-level approach.

For example, we can build a vocabulary of all the unique words in our dataset and associate a unique index with each word in it. Each sentence is then represented as a list as long as the number of distinct words in the vocabulary, and each index in this list stores how many times the corresponding word appears in the sentence. This model is called a “bag of words”, since it is a representation that completely ignores the order of words in a sentence. Below is an illustration of this approach.

Representing sentences as a “bag of words”. The original sentences are on the left, their representation on the right. Each index in the vectors corresponds to one specific word.
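The bag-of-words construction can be written out directly. The sketch below uses only the standard library; the helper names `build_vocabulary` and `bag_of_words` and the two toy sentences are assumptions for illustration, and in practice a library vectorizer producing sparse matrices would normally be used instead.

```python
from collections import Counter

def build_vocabulary(tokenized_sentences):
    """Assign a unique index to each distinct word, in order of first appearance."""
    vocab = {}
    for tokens in tokenized_sentences:
        for token in tokens:
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def bag_of_words(tokens, vocab):
    """Return a count vector with one position per vocabulary word."""
    vector = [0] * len(vocab)
    for token, count in Counter(tokens).items():
        if token in vocab:  # words outside the vocabulary are ignored
            vector[vocab[token]] = count
    return vector

sentences = [
    ["mary", "is", "hungry"],
    ["mary", "likes", "pie", "pie"],
]
vocab = build_vocabulary(sentences)
vectors = [bag_of_words(tokens, vocab) for tokens in sentences]
```

Because only counts are stored, any reordering of the words in a sentence produces exactly the same vector.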

Visualize vector representations.

The vocabulary of the Disasters in Social Media dataset contains about 20,000 words. This means that each sentence is represented as a vector of length 20,000. The vector will contain mostly zeros, since each sentence contains only a small subset of our vocabulary.

To find out whether our vector representations (embeddings) capture information relevant to our task (for example, whether tweets are related to disasters or not), it is a good idea to visualize them and see how well the classes are separated. Since vocabularies are usually very large and data cannot be visualized in 20,000 dimensions, techniques such as principal component analysis (PCA) help project the data down to two dimensions.
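As a rough sketch of what the projection does, PCA can be computed with a singular value decomposition of the centered data. The example assumes NumPy is available and uses a small random count matrix as a stand-in for the real bag-of-words vectors; the function name `pca_project` is hypothetical. For a sparse matrix with 20,000 columns, a truncated or sparse decomposition from a library would be a more practical choice than the dense SVD shown here.

```python
import numpy as np

def pca_project(X, n_components=2):
    """Project the rows of X onto the top principal components."""
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)  # PCA requires centered features
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T

# Toy stand-in for bag-of-words counts: 6 "sentences", 5 "words"
rng = np.random.default_rng(0)
counts = rng.integers(0, 3, size=(6, 5))
points = pca_project(counts)  # one 2-D point per sentence, ready to scatter-plot
```

Each row of `points` can then be drawn on a scatter plot and colored by its label to see whether the classes separate.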

Visualization of vector representations for the “bag of words”

Judging by the resulting plot, the two classes do not look well separated, which may be a property of our embeddings or simply an effect of the dimensionality reduction. To find out whether the bag-of-words features are of any use, we can train a classifier on them.

Step 4. Classification

When you are starting a task for the first time, it is common practice to start with the simplest method or tool that can solve this problem. When it comes to data classification, the most common way is logistic regression because of its versatility and ease of interpretation. It is very simple to train, and its results can be interpreted, since you can easily extract all the most important coefficients from the model.

We will split our data into a training set, which we use to fit our model, and a test set, which shows how well the model generalizes to data it has not seen before. After training, we get an accuracy of 75.4%. Not bad! Guessing the most frequent class (“irrelevant”) would give us only 57%.
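To show what training such a classifier involves, here is a from-scratch logistic regression fitted by gradient descent on toy bag-of-words vectors. It is a sketch under stated assumptions, not the notebook's code: the function names, learning rate, epoch count, and the tiny four-word dataset are all made up for illustration, and a real project would typically rely on a library implementation with regularization.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic_regression(X, y, lr=0.5, epochs=500):
    """Fit weights and bias by batch gradient descent on the log loss."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)          # predicted probability of class 1
        w -= lr * (X.T @ (p - y)) / len(y)
        b -= lr * np.mean(p - y)
    return w, b

def predict(X, w, b):
    """Label a row 1 ("disaster") when its predicted probability is at least 0.5."""
    return (sigmoid(np.asarray(X, dtype=float) @ w + b) >= 0.5).astype(int)

# Toy counts over the hypothetical vocabulary ["fire", "flood", "movie", "love"]
X_train = [[1, 0, 0, 0], [0, 1, 0, 0], [1, 1, 0, 0],
           [0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 1, 1]]
y_train = [1, 1, 1, 0, 0, 0]            # 1 = disaster, 0 = irrelevant
X_test = [[2, 1, 0, 0], [0, 0, 2, 1]]   # held-out examples
y_test = [1, 0]

w, b = train_logistic_regression(X_train, y_train)
accuracy = float(np.mean(predict(X_test, w, b) == np.array(y_test)))
```

The learned vector `w` has one coefficient per vocabulary word, which is what makes this model easy to inspect later.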

However, even if a result with 75% accuracy would be enough for our needs, we should never use the model in production without trying to understand it.

Step 5. Inspection

Confusion matrix

The first step is to understand what types of errors our model makes and which of them we would most like to reduce. In our example, a false positive classifies an irrelevant tweet as a disaster, and a false negative classifies a disaster as an irrelevant tweet. If our priority is to react to every potential event, we will want to lower our false negatives. However, if we are limited in resources, we may prioritize a lower false-positive rate to reduce false alarms. A good way to visualize this information is a confusion matrix, which compares the predictions made by our model with the true labels. Ideally, this matrix would be a diagonal line going from the upper left to the lower right corner (meaning that our predictions match the truth perfectly).

Our classifier creates more false negative than false positive results (proportionally). In other words, the most common mistake in our model is the inaccurate classification of catastrophes as irrelevant. If false positives reflect a high cost for law enforcement, then this may be a good option for our classifier.
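For binary labels the confusion matrix is just four counts, as the following standard-library sketch shows. The function name, the label convention (1 = disaster, 0 = irrelevant), and the toy label lists are assumptions for illustration and are unrelated to the results reported in the article.

```python
def confusion_matrix(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]] for binary labels, where 1 means "disaster"."""
    tn = fp = fn = tp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 1 and pred == 0:
            fn += 1  # a disaster tweet missed by the model
        elif truth == 0 and pred == 1:
            fp += 1  # a false alarm
        else:
            tn += 1
    return [[tn, fp], [fn, tp]]

# Toy labels, made up for this example
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 0]

(tn, fp), (fn, tp) = confusion_matrix(y_true, y_pred)
false_negative_rate = fn / (fn + tp)  # share of disasters classified as irrelevant
false_positive_rate = fp / (fp + tn)  # share of irrelevant tweets raised as disasters
```

Comparing the two rates, rather than the raw counts, is what the word "proportionally" refers to when the classes are of different sizes.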

Explanation and interpretation of our model

To validate our model and interpret its predictions, it is important to look at which words it uses to make decisions. If our data is biased, our classifier will make accurate predictions on the sample data, but the model will not generalize well in the real world. The chart below shows the most important words for the disaster and irrelevant classes. Plotting word importance is simple with a bag of words and logistic regression, since we can just extract and rank the coefficients the model uses for its predictions.

“Bag of words”: word importance

Our classifier correctly picked up several patterns (hiroshima, massacre), but it has clearly overfit to some meaningless terms (“heyoo”, “x1392”). Right now, our bag of words deals with a huge vocabulary of different words and treats all of them equally. However, some of these words are very frequent and only add noise to our predictions. Next, we will try to represent sentences in a way that accounts for word frequency and see whether we can extract more signal from our data.
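Extracting and ranking coefficients amounts to sorting the weight vector and mapping indices back to words. In this sketch the function name `top_words` is hypothetical and the weights are invented numbers, not values from the trained model; positive weights are assumed to push a prediction towards the disaster class.

```python
def top_words(vocab, weights, k=2):
    """Return the k most positive and k most negative (word, weight) pairs."""
    index_to_word = {index: word for word, index in vocab.items()}
    ranked = sorted(range(len(weights)), key=lambda i: weights[i])  # ascending
    most_irrelevant = [(index_to_word[i], weights[i]) for i in ranked[:k]]
    most_disaster = [(index_to_word[i], weights[i]) for i in ranked[::-1][:k]]
    return most_disaster, most_irrelevant

# Hypothetical vocabulary and coefficients, for illustration only
vocab = {"hiroshima": 0, "massacre": 1, "heyoo": 2, "movie": 3, "love": 4}
weights = [2.1, 1.7, 0.9, -1.5, -0.8]

most_disaster, most_irrelevant = top_words(vocab, weights)
```

A ranking like this makes suspicious features easy to spot: a nonsense token with a large weight is a sign of overfitting.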

Step 6. Account for vocabulary structure

TF-IDF

To help our model focus on meaningful words, we can apply TF-IDF (Term Frequency, Inverse Document Frequency) scoring on top of our bag-of-words model. TF-IDF weights words by how rare they are in our dataset, discounting words that are too frequent and just add noise. Below is the PCA projection of our new embeddings.
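The weighting can be illustrated with the textbook formulation, where a word's weight in a document is its relative frequency there multiplied by log(N / df), with N the number of documents and df the number of documents containing the word. This is only one of several variants, and library implementations usually add smoothing and normalization, so their numbers will differ. The function name and toy documents are assumptions for this sketch, which also assumes every document is non-empty.

```python
import math
from collections import Counter

def tf_idf(tokenized_docs):
    """Return one {word: weight} dict per document (unsmoothed textbook TF-IDF)."""
    n_docs = len(tokenized_docs)
    doc_freq = Counter()
    for tokens in tokenized_docs:
        doc_freq.update(set(tokens))  # count each word once per document
    idf = {word: math.log(n_docs / df) for word, df in doc_freq.items()}
    weighted = []
    for tokens in tokenized_docs:
        counts = Counter(tokens)
        weighted.append(
            {word: (count / len(tokens)) * idf[word] for word, count in counts.items()}
        )
    return weighted

docs = [
    ["the", "forest", "fire"],
    ["the", "movie", "was", "fire"],
    ["the", "flood"],
]
weights = tf_idf(docs)
```

In this toy corpus "the" appears in every document, so its weight drops to zero, while the rarer "forest" outweighs the more common "fire".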

Visualization of vector representation using TF-IDF.

We can see a clearer separation between the two colors. This suggests that it should be easier for our classifier to separate the two groups. Let's see whether our results improve. Training another logistic regression on our new embeddings, we get an accuracy of 76.2%.

A very slight improvement. Has our model started picking up on more important words? If it achieves a better result while not being allowed to “cheat”, we can truly consider this model an upgrade.

TF-IDF: word importance

The words chosen by the model really do look much more relevant. Although the metrics on our test set have increased only slightly, we now have much more confidence in using the model in a real system that will interact with customers.

Step 7. Leveraging semantics

Word2vec

Our latest model managed to pick up on the words that carry the most signal. However, once we deploy it to production, it will very likely encounter words that it did not see in the training set, and it will not be able to classify those tweets accurately even if it saw very similar words during training.

To solve this problem, we need to capture the semantic meaning of words: we need to understand that the words “good” and “positive” are closer to each other than “apricot” and “continent”. The tool we will use to help us capture meaning is called Word2Vec.

Using the results of pre-training

Word2Vec is a technique for finding continuous embeddings for words. It learns by reading a huge amount of text and memorizing which words tend to appear in similar contexts. After training on enough data, it generates a 300-dimensional vector for each word in the vocabulary, with words of similar meaning located closer to each other.

The authors of the paper on continuous word embeddings open-sourced a model that was pre-trained on a very large corpus, and we can use it to bring some knowledge of semantic meaning into our model. The pre-trained vectors can be found in the repository linked from the original article.

Sentence-level representation

A quick way to get a sentence embedding for our classifier is to average the Word2Vec vectors of all the words in the sentence. This is the same approach as the bag of words earlier, but this time we lose only the syntax of the sentence while keeping some of the semantic information.
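Averaging can be sketched without any external library. The toy three-dimensional vectors below are invented stand-ins for the 300-dimensional pre-trained ones, and the function name is hypothetical. Skipping words with no vector and returning zeros when none are known is one possible convention, chosen here as an assumption.

```python
def average_word_vectors(tokens, word_vectors, dim):
    """Average the vectors of known words; return a zero vector if none are known."""
    known = [word_vectors[token] for token in tokens if token in word_vectors]
    if not known:
        return [0.0] * dim
    return [sum(vector[i] for vector in known) / len(known) for i in range(dim)]

# Hypothetical 3-dimensional vectors standing in for real pre-trained embeddings
toy_vectors = {
    "good":     [1.0, 0.0, 0.0],
    "positive": [0.8, 0.2, 0.0],
    "news":     [0.0, 1.0, 1.0],
}

sentence_vector = average_word_vectors(["good", "news", "zzz"], toy_vectors, dim=3)
```

The result has a fixed length regardless of sentence length, which is why it can be fed to the same logistic regression as before, and reordering the words leaves it unchanged.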

Vector representations of sentences in Word2Vec

Here is a visualization of our new vector representations after using the listed techniques:

Visualization of vector representations of Word2Vec.

Now the two groups of colors look even more separated, which should help our classifier tell the two classes apart. After training the same model (logistic regression) for the third time, we get an accuracy of 77.7%, our best result so far! It is time to inspect our model.

The trade-off between complexity and explainability

Since our embeddings are no longer represented as a vector with one dimension per word, as in the previous models, it is now harder to see which words are most relevant to our classification. We still have access to the coefficients of our logistic regression, but they relate to the 300 dimensions of our embeddings rather than to word indices.

For such a small gain in accuracy, completely losing the ability to explain how the model works is too harsh a trade-off. Fortunately, when working with more complex models, we can use explainers such as LIME to get some insight into how the classifier works.

LIME

LIME is available on GitHub as an open-source package. This black-box explainer lets users explain the decisions of any classifier on one specific example by perturbing the input (in our case, removing words from the sentence) and observing how the prediction changes.

Let's take a look at a couple of explanations for sentences from our dataset.
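The perturbation idea can be sketched with a simple leave-one-word-out loop. This is not the LIME library and not its algorithm (LIME samples many random perturbations and fits a local linear model); it is a simplified illustration of "remove a word and watch the prediction". The classifier `toy_predict_proba` and its keyword list are hypothetical.

```python
def toy_predict_proba(tokens):
    """Hypothetical stand-in classifier: probability that a tweet is 'relevant'.

    It scores a tweet by how many invented 'disaster' keywords it contains.
    """
    disaster_words = {"fire", "flood", "earthquake", "evacuation"}
    hits = sum(1 for t in tokens if t in disaster_words)
    return min(1.0, 0.1 + 0.4 * hits)

def word_contributions(tokens, predict_proba):
    """Drop each word in turn and record how much the prediction falls."""
    baseline = predict_proba(tokens)
    contributions = []
    for i, word in enumerate(tokens):
        perturbed = tokens[:i] + tokens[i + 1:]
        contributions.append((word, baseline - predict_proba(perturbed)))
    # Largest drop first: these words mattered most for this prediction.
    return sorted(contributions, key=lambda pair: pair[1], reverse=True)

tweet = ["forest", "fire", "forces", "evacuation"]
result = word_contributions(tweet, toy_predict_proba)
for word, delta in result:
    print(f"{word:12s} {delta:+.2f}")
```

Because the function only needs a `predict_proba`-style callable, the same loop works for any classifier, which is what makes black-box explainers attractive.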

Words that genuinely relate to disasters are picked up to classify the tweet as “relevant”.

Here, the contribution of words to the classification seems less obvious.

However, we don’t have enough time to explore thousands of examples from our dataset. Instead, let's run LIME on a representative sample of test data, and see which words are found regularly and contribute most to the end result. Using this approach, we can obtain estimates of the significance of words in the same way as we did for previous models, and validate the predictions of our model.
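Aggregating per-example explanations into a global ranking could look like the sketch below. The `explanations` list is invented data standing in for what an explainer might return on a sample of tweets, and averaging per word is one reasonable aggregation choice, not necessarily the one used in the original notebook.

```python
from collections import defaultdict

# Hypothetical per-tweet explanations: (word, contribution) pairs such as
# an explainer might return. The numbers are invented for illustration.
explanations = [
    [("fire", 0.40), ("forest", 0.05), ("the", -0.01)],
    [("flood", 0.33), ("the", 0.00), ("street", 0.02)],
    [("fire", 0.30), ("concert", -0.20), ("the", 0.01)],
]

def aggregate_importance(explanations):
    """Average each word's contribution over all tweets in which it appears."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for explanation in explanations:
        for word, weight in explanation:
            totals[word] += weight
            counts[word] += 1
    return {word: totals[word] / counts[word] for word in totals}

importance = aggregate_importance(explanations)
ranked = sorted(importance.items(), key=lambda pair: pair[1], reverse=True)
for word, score in ranked:
    print(f"{word:10s} {score:+.3f}")
```

Words at the top of the ranking push predictions toward “relevant” most consistently, and words at the bottom push them away.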

It looks like the model picks up highly relevant words and makes understandable decisions accordingly. Of all the models so far, it selects the most relevant words, so it is the one we would feel most comfortable deploying to production.

Step 8. Using syntax when applying end-to-end approaches

We have looked at fast and efficient approaches for generating compact sentence embeddings. However, by omitting word order, we discard all the syntactic information in our sentences. If these methods do not give good enough results, you can use a more complex model that takes whole sentences as input and predicts labels without needing an intermediate representation. A common way to do this is to treat a sentence as a sequence of individual word vectors, using either Word2Vec or more recent approaches such as GloVe or CoVe. That is what we will do next.

A highly effective end-to-end architecture that needs no additional pre-processing or post-processing (source)

Convolutional neural networks for sentence classification (CNNs for Sentence Classification) train very quickly and work well as an entry-level deep learning architecture. Although convolutional neural networks (CNNs) are mostly known for their performance on image data, they show excellent results on text data and are usually much faster to train than most complex NLP approaches (e.g., LSTM networks and Encoder/Decoder architectures). This model preserves word order and learns valuable information about which word sequences predict our target classes. Unlike the previous models, it can tell the difference between the phrases "Lesha eats plants" and "Plants eat Lesha."
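The following sketch illustrates why a convolution over word vectors is sensitive to word order while averaging is not. It is a toy under stated assumptions, not the CNN from the original notebook: the one-hot `vectors` and the hand-set `conv_filter` are hypothetical (in a real network the filter weights are learned), and both sentences reuse the same three tokens so that only the order differs.

```python
import numpy as np

# Hypothetical one-hot "embeddings" so the arithmetic is easy to follow.
vectors = {
    "lesha":  np.array([1.0, 0.0, 0.0]),
    "eats":   np.array([0.0, 1.0, 0.0]),
    "plants": np.array([0.0, 0.0, 1.0]),
}

# One width-2 filter, set by hand to respond to the bigram "lesha eats":
# row 0 matches "lesha" in the first slot, row 1 matches "eats" in the second.
conv_filter = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
])

def average_features(tokens):
    """Order-insensitive: the mean of the word vectors."""
    return np.mean([vectors[t] for t in tokens], axis=0)

def conv_features(tokens):
    """Order-sensitive: slide the filter over word pairs, then max-pool."""
    matrix = np.stack([vectors[t] for t in tokens])  # shape (n_words, dim)
    activations = [
        float(np.sum(matrix[i:i + 2] * conv_filter))
        for i in range(len(tokens) - 1)
    ]
    return max(activations)

s1 = ["lesha", "eats", "plants"]
s2 = ["plants", "eats", "lesha"]

print(np.allclose(average_features(s1), average_features(s2)))
print(conv_features(s1), conv_features(s2))
```

The averaged features are identical for both orderings, whereas the convolutional feature fires more strongly when "lesha" directly precedes "eats".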

Training this model does not require much more effort than previous approaches (see code ), and, as a result, we get a model that works much better than the previous one, allowing us to obtain an accuracy of 79.5%. As with the models we reviewed earlier, the next step is to research and explain the predictions using the methods we described above to make sure that the model is the best option we can offer users. At this point, you should already feel confident enough to handle the next steps yourself.

Final notes

So, a summary of the approach that we have successfully put into practice:

- start with a quick and simple model;

- explain its predictions;

- understand what kinds of mistakes it makes;

- use that knowledge to decide on the next step, whether that means working on the data or moving to a more complex model.

We examined these approaches on a specific example, with models tailored to recognizing, understanding, and using short texts such as tweets; however, the same ideas apply to many different tasks.

As already noted in the article, anyone can benefit from applying machine learning methods, especially on the Internet, with all its variety of analytical data. That is why artificial intelligence and machine learning are always on the agenda at our RIT++ and Highload++ conferences, and from a thoroughly practical point of view, as in this article. Here, for example, are videos of several of last year's talks:

- Detecting signs of fraud in health insurance claims / Vasily Ryazanov (Allianz)

- Ranking job applicants' responses with machine learning / Sergey Saigushkin (Superjob)

- Machine learning in e-commerce / Alexander Serbul (1C-Bitrix)

- Applying machine learning to generate structured snippets / Nikita Spirin (Datastars)

The programs for the May RIT++ festival and the June Highload++ Siberia are already taking shape. You can follow their current state on the conference websites or subscribe to the newsletter, and we will periodically send announcements of accepted talks so that you don't miss anything.