Comparison of Open Big Data OLAP Systems: ClickHouse, Druid, and Pinot

- Transfer

ClickHouse , Druid and Pinot are three open data warehouses that allow you to perform analytical queries on large volumes of data with interactive delays. This article is a translation of a detailed comparison made by Roman Leventov.

And under the cut - a detailed story about how Roman came to this.

Details of ClickHouse implementation became known to me from Alexei Zatelepin , one of the key developers of the project . The documentation available in English is scarce - the last four sections of this documentation page are the best source of information .

I myself am involved in the development of Druid , but I have no personal interest in this system - in truth, most likely in the near future I will cease to develop it. Therefore, readers can count on the absence of any bias.

All that I will continue to write about Pinot is based on the Architecture page on the Pinot wiki, as well as on other wiki pages in the “Project Documentation” section. The last time they were updated in June 2017 is more than half a year ago.

The reviewers of the original article were Alexey Zatelepin and Vitaliy Ludvichenko (developers of ClickHouse), Jean Merlino (the most active developer of Druid), Kishore Gopalakrishna (architect of Pinot) and Jean-Francois Im (developer of Pinot). We join in the gratitude of the author and believe that this greatly increases the credibility of the article.

Warning : the article is large enough, so you may want to limit yourself to reading the “Conclusion” section at the end.

At a fundamental level, ClickHouse, Druid, and Pinot are similar because they store data and process requests on the same nodes, moving away from the "disconnected" BigQuery architecture. Recently, I have already described several inherited problems with related architecture in the case of Druid [ 1 , 2 ]. There is no open equivalent for BigQuery at the moment (with the possible exception of Drill ?). Possible approaches to building such open systems are discussed in another article on my blog .

The systems discussed in this article execute queries faster than Big Data systems from the SQL-on-Hadoop family of families: Hive, Impala, Presto, and Spark, even when the latter access data stored in a column format - for example, Parquet or Kudu. This is because in ClickHouse, Druid, and Pinot:

On the other side of the database spectrum, ClickHouse, Druid, and Pinot do not support point updates and deletes , as opposed to column systems like Kudu, InfluxDB, and Vertica (?). This gives ClickHouse, Druid, and Pinot the ability to produce more efficient column compression and more aggressive indexes, which means greater resource utilization and faster query execution.

Yandex ClickHouse developers plan to start supporting updates and deletions in the future , but I'm not sure if these will be “real” point queries or updates / deletes of data ranges.

All three systems support streaming data absorption from Kafka. Druid and Pinot support lambda-style streaming data and batch absorption of the same data. ClickHouse supports batch inserts directly, so it doesn’t need a separate batch absorption system like the one used by Druid and Pinot. If you are interested in the details, then you can find them further.

All three systems have been tested for operability on a large scale: Yandex.Metrica has the ClickHouse cluster , which consists of about ten thousand CPU cores. Metamarkets uses a similar-sized Druid cluster . One Linkedot Pinot cluster includes thousands of machines .

All systems discussed in this article are immature by the standards of open Big Data enterprise systems . However, most likely they are immature no more than the average open Big Data system - but this is a completely different story. ClickHouse, Druid and Pinot lack some obvious optimizations and functionality, and they are teeming with bugs (I'm not 100% sure about ClickHouse and Pinot, but I see no reason why they would be better than Druid in this regard).

This smoothly leads us to the next important section.

I regularly see on the net how some people make comparisons of big data systems: they take a set of their data, somehow “feed” it to the system being evaluated, and then immediately try to measure performance - how much memory or disk space was used, and how fast they were running inquiries. Moreover, they do not have an understanding of how the systems they test are arranged from the inside. Then, using only such specific performance data - sometimes together with lists of the functionality that they need and which is currently in the system - they eventually make their choice or, even worse, choose to write their own, “best” system with zero.

This approach seems to me wrong, at least it does not apply to open OLAP systems for Big Data. The task of creating a Bid Data OLAP system that could work efficiently in most use cases and contain all the necessary functions is so great that I evaluate its implementation at least 100 man-years .

Today, ClickHouse, Druid, and Pinot are optimized only for the specific use cases that are required by their developer - and for the most part contain only those functions that the developers themselves need. I can guarantee that your case will surely “run into” those bottlenecks that the developers of the OLAP systems under consideration have not yet encountered, or those places that they are not interested in.

Not to mention that the above-mentioned approach of “throwing data into a system that you don’t know anything about and then measuring its effectiveness” is very likely to give a distorted result due to serious “bottlenecks” that could actually be fixed by a simple configuration change , data schema, or other query construction.

One such example that illustrates well the problem described above is Marek Wawrush's post on choosing between ClickHouse and Druid in Cloudflare . They needed 4 ClickHouse servers (which eventually turned into 9), and they estimated that they would need “hundreds of nodes” to deploy a similar Druid installation. Although Marek admits that the comparison is unfair , since Druid lacks “sorting by primary key,” he may not even realize that it is possible to achieve approximately the same effect in Druid by simply setting the correct measurement order in the “ ingestion spec ” and making simple preparations data: trim column value

However, I will not argue with their final decision to choose ClickHouse, since on a scale of about 10 nodes and for their needs, ClickHouse also seems to me a better choice than Druid. But their conclusion that ClickHouse is at least an order of magnitude more efficient (by the standards of infrastructure costs) than Druid is a serious misconception. In fact, of the systems we are considering today,Druid offers the best opportunity for really cheap installations (see the section “Druid Request Processing Node Levels” below).

By virtue of their fundamental architectural similarity, ClickHouse, Druid and Pinot share approximately the same “limit” of efficiency and performance optimization. There is no "magic pill" that would allow any of these systems to be faster than the rest. Do not let yourself be confused by the fact that in their current state, systems show themselves very differently in different benchmarks.

Suppose Druid does not support “sorting by primary key” as well as ClickHouse can do - and ClickHouse, in turn, does not support “inverted indexes” as well as Druid, which gives these systems advantages with one load or another. Lost optimizations can be implemented in the selected system using the wrongthey are already a lot of their efforts , if you have the intention and the opportunity to decide on such a step.

Other system design considerations you should consider:

The Druid and Pinot architectures are almost identical to each other, while ClickHouse stands slightly apart. Therefore, we first compare ClickHouse with the "generalized" Druid / Pinot architecture, and then discuss the minor differences between Druid and Pinot.

In Druid and Pinot, all the data in each “table” (whatever it is called in the terminology of these systems) is divided into a specified number of parts. On the time axis, data is usually divided at a given interval. Then these pieces of data are “sealed” individually into independent autonomous entities called “segments”. Each segment includes table metadata, compressed column data, and indexes.

Segments are stored in the file system of the “deep storage” storage (for example, HDFS) and can be uploaded to request processing nodes, but the latter are not responsible for the stability of segments, therefore, request processing nodes can be replaced relatively freely. Segments are not tied to specific nodes.and can be downloaded to these or other nodes. A dedicated server (called a “coordinator” in Druid and a “controller” in Pinot, but I refer to it as a “wizard” below) is responsible for assigning segments to nodes, and moving segments between nodes, if necessary.

This does not contradict what I noted above, all three systems have a static distribution of data between nodes, since loading segments and moving them in Druid - and as I understand it in Pinot - are expensive operations and therefore are not performed for each individual queue, but rather occur usually every few minutes / hours / days.

Segment metadata is stored in ZooKeeper - directly in the case of Druid, and using the Helix frameworkin pinot. In Druid, metadata is also stored in the SQL database, more on this in the section “Differences between Druid and Pinot”.

ClickHouse has no "segments" containing data falling within specific time ranges. There is no "deep storage" for data in it, the nodes in the ClickHouse cluster are also responsible for processing requests and the persistence / stability of the data stored on them. So you don’t need HDFS or cloud storage like Amazon S3.

ClickHouse has partitioned tables consisting of a specified set of nodes. There is no “central authority" or metadata server. All nodes between which this or that table is divided contain complete, identical copies of metadata, including the addresses of all other nodes on which sections of this table are stored.

The metadata of a partitioned table includes “weights” of nodes for distributing freshly recorded data - for example, 40% of the data should go to node A, 30% to node B and 30% to C. Normally, the distribution should occur evenly, “skew”, as in this As an example, it is required only when a new node is added to the partitioned table and it is necessary to quickly fill it with some data. Updates to these weights must be done manually by administrators of the ClickHouse cluster, or by an automated system built on top of ClickHouse.

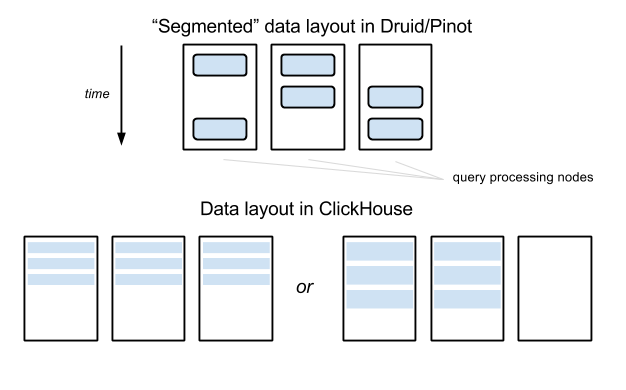

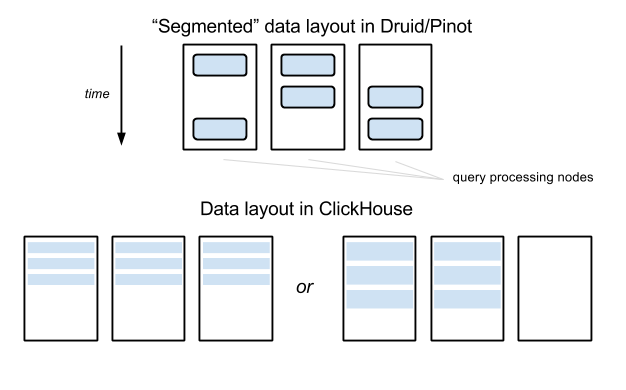

The approach to data management in ClickHouse is simpler than in Druid and Pinot: no “deep storage” is required, only one type of nodes, no dedicated server for data management is required. But the ClickHouse approach leads to some difficulties when any data table grows so large that it needs to be split between ten or more nodes: the query gain becomes as large as the partitioning factor - even for queries that cover a small data interval:

ClickHouse data distribution tradeoff

In the example shown in the image above, the table data is distributed between three nodes in Druid / Pinot, but a query for a small data interval usually affects only two of them (until the interval crosses the segment boundary interval). In ClickHouse, any queries will be forced to affect three nodes — if the table is segmented between three nodes. In this example, the difference does not look so significant, but imagine what would happen if the number of nodes reaches 100 - while the segmentation factor can still be equal, for example, to 10 in Druid / Pinot.

To mitigate this problem, the largest ClickHouse cluster in Yandex, consisting of hundreds of nodes, is actually divided into many “sub-clusters” with several dozen nodes in each. The ClickHouse cluster is used in website analytics, and each data point has a “Website ID” dimension. There is a rigid binding of each site ID to a specific sub-cluster, where all the data for this site ID goes. On top of the ClickHouse cluster, there is a business logic layer that manages this data sharing when absorbing data and executing queries. Fortunately, in their usage scenarios, very few requests affect several site identifiers, and such requests do not come from users of the service, so they do not have a hard link to real time according to the service level agreement.

Another drawback of the ClickHouse approach is that when the cluster grows very fast, the data cannot be rebalanced automatically without the participation of a person who manually changes the “weights” of the nodes in the split table.

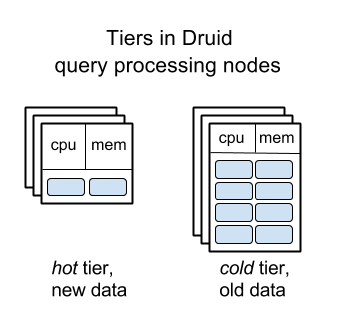

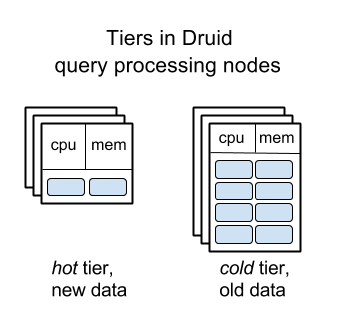

Managing data with “easy to imagine” segments - this concept fits well with our cognitive abilities. Segments themselves can be relocated between nodes relatively easily. These two reasons allowed Druid to implement “ leveling ” the nodes involved in processing requests: old data is automatically moved to servers with relatively large disks, but less memory and CPU, which can significantly reduce the cost of a large Druid working cluster by slowing down requests to more old data.

This feature allows Metamarkets to save hundreds of thousands of dollars in Druid infrastructure costs every month — as opposed to using a “flat” cluster.

Levels of request processing nodes in Druid

As far as I know, ClickHouse and Pinot do not yet have similar functionality - it is assumed that all nodes in their clusters are the same.

Due to the fact that the architecture of Pinot is very similar to that of Druid, it seems to me that it will not be too difficult to add a similar function to Pinot. It will be harder in the case of ClickHouse, since the concept of “segments” is extremely useful for implementing this function, but it is still possible.

The replication unit in Druid and Pinot is a single segment. Segments are replicated at the “deep storage” level (for example, to three replicas on HDFS, or using BLOB storage in Amazon S3), and at the request processing level: usually in Druid and Pinot, each segment is loaded onto two different nodes . A “master” server monitors replication levels for each segment and uploads the segment to a server if the replication factor falls below a specified level (for example, if any of the nodes stops responding).

The replication unit in ClickHouse is a section of a table on a server (for example, all data from a table stored on a server). Like partitioning, ClickHouse replication is more “static and specific” than “cloud-style”: several servers know that they are replicas of each other (for some specific table; in the case of another table, the replication configuration may differ). Replication provides both resilience and query availability. When a disk is damaged on one node, data is not lost, since it is also stored on another node. When a node is temporarily unavailable, requests can be redirected to the replica.

In the largest ClickHouse cluster in Yandex, there are two identical sets of nodes in different data centers, and they are paired. In each pair, nodes are replicas of each other (a replication factor of two is used), and they are located in different data centers.

ClickHouse relies on ZooKeeper to manage replication - so if you don't need replication, then you don't need ZooKeeper either. This means that ZooKeeper is not required for ClickHouse deployed on a single node.

In Druid and Pinot, query processing nodes specialize in loading segments and serving data requests in segments; they do not accumulate new data and produce new segments.

When a table can be updated with a delay of one hour or more, segments are created using batch processing engines - for example, Hadoop or Spark. Both Druid and Pinot have first-class Hadoop support out of the box. There is a third-party plugin to support Druid indexing in Spark, but at the moment it is not officially supported. As far as I know, there is no such level of Spark support in Pinot, that is, you should be prepared to deal with Pinot interfaces and code, and then write Java / Scala code yourself, even if it should not be too difficult. (However, since the publication of the original article, Spark support for Pinot has been contributed by the contributor ).

When a table needs to be updated in real time, the idea of “real-time” nodes that do three things comes to the rescue: accepts new data from Kafka (Druid also supports other sources), serves requests with recent data, creates segments in the background and then writes them in the "deep vault".

The fact that ClickHouse does not need to prepare “segments” that contain all the data and fall within the specified time intervals allows us to build a simpler data absorption architecture. ClickHouse does not require a batch processing engine like Hadoop or real-time nodes. Regular ClickHouse nodes - the same ones that store data and serve requests to them - directly accept batch data records.

If the table is segmented, then the node that receives the packet record (for example, 10k rows) distributes the data according to the "weights" (see the section below). Lines are written in one packet, which forms a small "set". Many immediately converted to column format. Each ClickHouse host runs a background process that combines row sets into even larger sets. The ClickHouse documentation is strongly tied to the principle known as "MergeTree" and emphasizes the similarity of its work with the LSM tree , although it bothers me a bit, because the data is not organized into trees - they are in a flat columnar format.

Druid and Pinot data absorption is “difficult”: it consists of several different services, and managing them is hard work.

The absorption of data in ClickHouse is much simpler (which is offset by the complexity of managing "historical" data - that is, data not in real time), but there is one point here: you must be able to collect data in packages until ClickHouse itself. Automatic absorption and batch data collection from Kafka is available “out of the box”but if you use a different source of real-time data (anything is meant here, in the range between the request infrastructure, alternative to Kafka, and streaming processing engines, up to various HTTP endpoints), then you will have to create an intermediate collection service packages, or add code directly to ClickHouse.

В Druid и Pinot имеется отдельный слой узлов, называемых «брокерами&кaquo;, которые принимают все запросы к системе. Они определяют, к каким «историческим» ( содержащим данные не в реальном времени) узлам обработки запросов должны быть отправлены подзапросы, основываясь на отображении сегментов в узлы, в которых сегменты загружаются. Брокеры хранят информацию об отображении в памяти. Брокер-узлы отправляют дальше подзапросы к узлам обработки запросов, и когда результаты этих подзапросов возвращаются, брокер объединяет их и возвращает финальный комбинированный результат пользователю.

I can’t presume why during the design of Druid and Pinot it was decided to introduce another type of nodes. However, now they seem to be an integral part of them, because when the total number of segments in a cluster begins to exceed ten million, information about mapping segments to nodes begins to occupy gigabytes of memory. It is very wasteful to allocate so much memory on each node to process requests. Here is another drawback that overlaps Druid and Pinot with their “segmented” data management architecture.

In ClickHouse , it is usually not necessary to select a separate set of nodes under the “request broker”. There is a special, ephemeral "distributed" type of tablein ClickHouse, which can be installed on any node, and queries to this table will do the same, for which the broker nodes in Druid and Pinot are responsible. Typically, such ephemeral tables are located on each node that participates in a partitioned table, so in practice each node can be an “entry point” for a request to the ClickHouse cluster. This node can issue the necessary subqueries to other sections, process its part of the request independently and then combine it with partial results from other sections.

When a node (or one of the processing nodes in ClickHouse, or a broker node in Druid and Pinot) issues subqueries to others, and one or more subqueries for some reason fail, ClickHouse and Pinot handle this situation correctly: they combine the results successfully completed subqueries together, and still return a partial result to the user. The Druid of this function is now very lacking : if in it the execution of the subquery fails, then the entire request will also fail.

The “segmented” approach to data management in Druid and Pinot versus simpler data management in ClickHouse defines many aspects of systems. However, it is important to note that this difference has little (or no effect) on the potential compression efficiency (however, the history of compression for all three systems has a sad end to the current state of affairs), or on the speed of request processing.

Clickhousesimilar to traditional RDMBS, for example, PostgreSQL. In particular, ClickHouse can be deployed to just one server. If the planned size is small - say, no more than about 100 CPU cores for processing requests and 1 TB of data, I would say that ClickHouse has significant advantages over Druid and Pinot due to its simplicity and the absence of the need for additional types of nodes, such as a “master” "," Nodes of absorption in real time "," brokers ". In this field, ClickHouse competes more with InfluxDB than with Druid or Pinot.

Druid and Pinotsimilar to Big Data systems like HBase. Here, we do not mean performance characteristics, but dependence on ZooKeper, dependence on persistent replicated storage (for example, HDFS), focusing on fault tolerance of individual nodes, as well as autonomous operation and data management that do not require constant human attention. For a wide range of applications, neither ClickHouse, nor Druid or Pinot are clear winners. First of all, you should take into account your ability to deal with the source code of the system, fix bugs, add new features, etc. This is discussed in more detail in the section “About performance comparison and system selection."

Secondly, you should take a look at the table below. Each cell in this table describes an application property that allows you to determine your preferred system. Lines are sorted out of order of importance. The importance of various properties may vary from application to application, but in general, the following approach can be applied: if your application matches the vast majority of lines with properties in one of the columns, then the system related to it is the preferred choice in your case.

Note: none of the properties above means that you should use the appropriate system (s), or avoid another. For example, if it is planned that your cluster will be large, this does not mean that you should definitely consider only Druid or Pinot, excluding ClickHouse. Most likely, in this situation, Druid or Pinot may be the best choice, but other useful properties can outweigh the scales in the direction of ClickHouse, which for some applications is the best choice even for large clusters.

As noted more than once in this article, Druid and Pinot have very similar architectures. There are several fairly noticeable features that exist in one system and are absent in another, and areas in which each of the systems is developed much more strongly than the other. Nevertheless, everything that I am going to mention below can be reproduced in another system with a reasonable amount of effort.

There is only one significant difference between Druid and Pinot , which is too big to get rid of in the foreseeable future - this is the implementation of segment management in the master node. By the way, the developers of both systems probably would not want to do this anyway, since both approaches have their pros and cons - there is no one among them that would be better.

The master node in Druid (and none of the nodes in Pinot) are responsible for the safety of metadata in the data segments in the cluster, and the current display between the segments and data processing nodes on which the segments are loaded. This information is stored in ZooKeeper. However, Druid in addition stores this information in the SQL database, which is necessary for deploying a Druid cluster. I can’t say for what purpose such a decision was made, but now it gives the following advantages:

What we have to have in the dependencies of the SQL database leads to a greater workload, especially if the company has not yet used any SQL database. Druid supports MySQL and PostgreSQL, and there is an extension for Microsoft SQL Server. In addition, when Druid is deployed in the cloud, you can use standard services to manage RDBMS - for example, Amazon RDS.

Unlike Druid, which implements all the segment control logic independently and relies only on Curator to interact with ZooKeeper, Pinot delegates most of the segment and cluster management logic to the Helix framework .

On the one hand, I can understand that this gives Pinot developers the opportunity to focus on other parts of their system. In Helix, fewer bugs are possible than in the logic inside Druid itself, since it is tested in other conditions and since it was supposedly invested a lot more working time.

Helix, on the other hand, may limit Pinot to its “framework framework." Helix, and therefore Pinot, will most likely depend on ZooKeeper forever .

Next, I’m going to list less important differences between Druid and Pinot - in the sense that if you have a serious desire to repeat one of these functions on your system, then this will be entirely feasible.

If during the acquisition data is partitioned in Kafka according to some dimension keys, Pinot creates segments that contain information about this partition and then, when a query is performed with a predicate on this dimension, the broker node filters the segments so that as few segments as possible and the request processing nodes were affected.

This concept is originally called “ predicate pushdown ” and is important for maintaining high performance in some applications.

Druid currently supports key splitting if segments were created in Hadoop, but does not yet support segments created during real-time takeover. Druid does not currently implement the function of “pushing predicates” to brokers.

Since Druid is used by various organizations and several companies take part in its development, he acquired support for several interchangeable options for almost any highlighted part or “service”:

At the same time, Pinot was almost entirely developed exclusively within the walls of LinkedIn and had to meet the current needs of the company, so the choice that you are offered is not so great. It is necessary to use HDFS or Amazon S3 as a “deep storage”, and only Kafka is suitable for real-time data absorption. But if someone really needs it, it seems to me not difficult to add support for any other service in Pinot. In addition, positive developments can be expected in this direction, as Uber and Slack begin to use Pinot.

In particular, the following Pinot segment format features are currently missing in Druid:

However, all this can be implemented in Druid. And despite the fact that the Pinot format is optimized much better than the Druid format, it is still quite far from being optimal. One example: Pinot (like Druid) uses only general-purpose compression (like Zstd) and even implemented the idea of compression from Gorilla .

Unfortunately, Uber for the most part used count (*) queries to compare the performance of Druid and Pinot regarding query execution [ 1 , 2 ], which now in Druid is a dumb linear scan, although it is not difficult to replace it with a correct O (1) implementation . This is another example of meaningless black-box comparisons that we talked about earlier.

In my opinion, the reasons for the strong difference in query performance

The Pinot algorithm is to assign a segment to request processing nodes that have the fewest segments currently loaded. The Druid algorithm is much more complex; it takes into account the table of each segment and time, and uses complex formulas for calculating the final coefficient , according to which the query processing nodes will be ranked to select the best one, which will be assigned a new segment. This algorithm showed an acceleration in query execution speed in Metamarkets production by 30–40% . Although, even despite such a result, we are still not too happy with them - details can be found in a separate article .

I don’t know how LinkedIn manages everything with such a simple Pinot segment balancing algorithm, but it is quite possible that they will expect significant improvements in performance if they decide to spend time improving their algorithm.

As already mentioned in the section “Query Execution”, when a broker node creates subqueries to other nodes, some subqueries fail, but Pinot combines the results of all successfully executed subqueries and still returns a partial result to the user.

Druid has such a feature at the moment.

See a similar section above. Druid allows you to enter levels of query processing nodes for old and new data, and for nodes with "old" data, the ratio of "CPU resources, RAM / number of loaded segments" is much lower, which allows you to win on infrastructure costs in exchange for poor query performance when accessing to old data.

As far as I know, there is no similar functionality in Pinot at the moment.

ClickHouse, Druid and Pinot have a fundamentally similar architecture , and occupy their own niche between general-purpose Big Data frameworks like Impala, Presto, Spark, and column databases with the correct support for primary keys, point updates and deletes, like InfluxDB.

Due to the similarity of architectures, ClickHouse, Druid and Pinot have approximately the same “optimization limit”. But in their current state, all three systems are still immature and very far from this limit. Significant improvements in the performance of these systems (in relation to specific usage scenarios) can be achieved by several man-months of work of experienced engineers.

Of these three systems, ClickHouse stands slightly apart from Druid and Pinot - while Druid and Pinot are almost identical, and they can be considered two independently developed implementations of the same system.

ClickHouse is more like "traditional" databases like PostgreSQL. ClickHouse can be installed on one node. With small scales (less than 1 TB of memory, less than 100 CPU cores), ClickHouse looks much more interesting than Druid or Pinot - if you still want to compare them - due to the fact that ClickHouse is simpler and has fewer moving parts and services. I would even say that on such a scale he is more likely to become a competitor for InfluxDB or Prometheus, and not for Druid or Pinot.

Druid and Pinot are more reminiscent of other Big Data systems from the Hadoop ecosystem. They retain their “self-managing” properties even on a very large scale (more than 500 nodes), while ClickHouse will require a lot of professional SRE for this. In addition, Druid and Pinot have a winning position in terms of optimizing the infrastructure cost of large clusters, and are better suited for cloud environments than ClickHouse.

The only long-term difference between Druid and Pinot is that Pinot is dependent on the Helix framework and will continue to be dependent on ZooKeeper, while Druid can get away from dependence on ZooKeeper. On the other hand, the installation of Druid will continue to depend on the availability of any SQL database. At the moment, Pinot is better optimized than Druid.

Spoiler

| Clickhouse | Druid or Pinot |

|---|---|

| The organization has C ++ experts | The organization has Java experts |

| Small cluster | Large cluster |

| Some tables | Many tables |

| One dataset | Multiple Unrelated Datasets |

| Tables and data are permanently in the cluster | Tables and data sets periodically appear in the cluster and are removed from it. |

| The size of the tables (and the intensity of queries to them) remains stable over time | Tables grow and shrink significantly |

| Homogeneous requests (their type, size, distribution by time of day, etc.) | Heterogeneous requests |

| There is a dimension in the data by which it can be segmented, and there are almost no queries that affect data located in several segments | There is no such dimension, and queries often affect data located throughout the cluster. |

| The cloud is not used, the cluster must be deployed to a specific configuration of physical servers | Cluster deployed in the cloud |

| No existing Hadoop or Spark clusters | Hadoop or Spark clusters already exist and can be used |

Sources of information

Details of ClickHouse implementation became known to me from Alexei Zatelepin , one of the key developers of the project . The documentation available in English is scarce - the last four sections of this documentation page are the best source of information .

I myself am involved in the development of Druid , but I have no personal interest in this system - in truth, most likely in the near future I will cease to develop it. Therefore, readers can count on the absence of any bias.

All that I will continue to write about Pinot is based on the Architecture page on the Pinot wiki, as well as on other wiki pages in the “Project Documentation” section. The last time they were updated in June 2017 is more than half a year ago.

The reviewers of the original article were Alexey Zatelepin and Vitaliy Ludvichenko (developers of ClickHouse), Jean Merlino (the most active developer of Druid), Kishore Gopalakrishna (architect of Pinot) and Jean-Francois Im (developer of Pinot). We join in the gratitude of the author and believe that this greatly increases the credibility of the article.

Warning : the article is large enough, so you may want to limit yourself to reading the “Conclusion” section at the end.

Similarities between systems

Related Data and Computing

At a fundamental level, ClickHouse, Druid, and Pinot are similar because they store data and process requests on the same nodes, moving away from the "disconnected" BigQuery architecture. Recently, I have already described several inherited problems with related architecture in the case of Druid [ 1 , 2 ]. There is no open equivalent for BigQuery at the moment (with the possible exception of Drill ?). Possible approaches to building such open systems are discussed in another article on my blog .

Differences from Big Data SQL Systems: Indexes and Static Data Distribution

The systems discussed in this article execute queries faster than Big Data systems from the SQL-on-Hadoop family of families: Hive, Impala, Presto, and Spark, even when the latter access data stored in a column format - for example, Parquet or Kudu. This is because in ClickHouse, Druid, and Pinot:

- It has its own format for storing data with indexes , and they are closely integrated with query processing engines. Systems of the SQL-on-Hadoop class can usually be called agnostics regarding data formats and therefore they are less “intrusive” in Big Data backends.

- Data is distributed relatively “statically” between nodes, and this can be used for distributed query execution. The flip side of the coin is that ClickHouse, Druid and Pinot do not support queries that require moving a large amount of data between nodes - for example, join between two large tables.

Lack of point updates and deletions

On the other side of the database spectrum, ClickHouse, Druid, and Pinot do not support point updates and deletes , as opposed to column systems like Kudu, InfluxDB, and Vertica (?). This gives ClickHouse, Druid, and Pinot the ability to produce more efficient column compression and more aggressive indexes, which means greater resource utilization and faster query execution.

Yandex ClickHouse developers plan to start supporting updates and deletions in the future , but I'm not sure if these will be “real” point queries or updates / deletes of data ranges.

Big Data Absorption

All three systems support streaming data absorption from Kafka. Druid and Pinot support lambda-style streaming data and batch absorption of the same data. ClickHouse supports batch inserts directly, so it doesn’t need a separate batch absorption system like the one used by Druid and Pinot. If you are interested in the details, then you can find them further.

Tested on a large scale

All three systems have been tested for operability on a large scale: Yandex.Metrica has the ClickHouse cluster , which consists of about ten thousand CPU cores. Metamarkets uses a similar-sized Druid cluster . One Linkedot Pinot cluster includes thousands of machines .

Immaturity

All systems discussed in this article are immature by the standards of open Big Data enterprise systems . However, most likely they are immature no more than the average open Big Data system - but this is a completely different story. ClickHouse, Druid and Pinot lack some obvious optimizations and functionality, and they are teeming with bugs (I'm not 100% sure about ClickHouse and Pinot, but I see no reason why they would be better than Druid in this regard).

This smoothly leads us to the next important section.

About performance comparison and system selection

I regularly see on the net how some people make comparisons of big data systems: they take a set of their data, somehow “feed” it to the system being evaluated, and then immediately try to measure performance - how much memory or disk space was used, and how fast they were running inquiries. Moreover, they do not have an understanding of how the systems they test are arranged from the inside. Then, using only such specific performance data - sometimes together with lists of the functionality that they need and which is currently in the system - they eventually make their choice or, even worse, choose to write their own, “best” system with zero.

This approach seems to me wrong, at least it does not apply to open OLAP systems for Big Data. The task of creating a Bid Data OLAP system that could work efficiently in most use cases and contain all the necessary functions is so great that I evaluate its implementation at least 100 man-years .

Today, ClickHouse, Druid, and Pinot are optimized only for the specific use cases that are required by their developer - and for the most part contain only those functions that the developers themselves need. I can guarantee that your case will surely “run into” those bottlenecks that the developers of the OLAP systems under consideration have not yet encountered, or those places that they are not interested in.

Not to mention that the above-mentioned approach of “throwing data into a system that you don’t know anything about and then measuring its effectiveness” is very likely to give a distorted result due to serious “bottlenecks” that could actually be fixed by a simple configuration change , data schema, or other query construction.

CloudFlare: ClickHouse vs. Druid

One such example that illustrates well the problem described above is Marek Wawrush's post on choosing between ClickHouse and Druid in Cloudflare . They needed 4 ClickHouse servers (which eventually turned into 9), and they estimated that they would need “hundreds of nodes” to deploy a similar Druid installation. Although Marek admits that the comparison is unfair , since Druid lacks “sorting by primary key,” he may not even realize that it is possible to achieve approximately the same effect in Druid by simply setting the correct measurement order in the “ ingestion spec ” and making simple preparations data: trim column value

__timein Druid to some coarse detail (for example, one hour) and optionally add another "long-type" column "precise_time" if some queries require a more accurate time frame. Yes, this is a hack, but, as we just found out, in Druid you can sort data by some dimension before __time, and it is quite simple to implement. However, I will not argue with their final decision to choose ClickHouse, since on a scale of about 10 nodes and for their needs, ClickHouse also seems to me a better choice than Druid. But their conclusion that ClickHouse is at least an order of magnitude more efficient (by the standards of infrastructure costs) than Druid is a serious misconception. In fact, of the systems we are considering today,Druid offers the best opportunity for really cheap installations (see the section “Druid Request Processing Node Levels” below).

When you choose the OLAP Big Data system, do not compare how well they are now suitable for your case. Now they are all suboptimal. Instead, compare how fast your company is able to get these systems moving in the direction that is right for you.

By virtue of their fundamental architectural similarity, ClickHouse, Druid and Pinot share approximately the same “limit” of efficiency and performance optimization. There is no "magic pill" that would allow any of these systems to be faster than the rest. Do not let yourself be confused by the fact that in their current state, systems show themselves very differently in different benchmarks.

Suppose Druid does not support “sorting by primary key” as well as ClickHouse can do - and ClickHouse, in turn, does not support “inverted indexes” as well as Druid, which gives these systems advantages with one load or another. Lost optimizations can be implemented in the selected system using the wrongthey are already a lot of their efforts , if you have the intention and the opportunity to decide on such a step.

- Your organization must have engineers who can read, understand and modify the source code of the selected system, and they must also have time for this. Note that ClickHouse is written in C ++, while Druid and Pinot are written in Java.

- Or your organization must sign a contract with a company that provides support for the selected system. These will be Altinity for ClickHouse, Imply and Hortonworks for Druid. There are currently no such companies for Pinot.

Other system design considerations you should consider:

- The authors of ClickHouse, who work at Yandex, claim that they spend 50% of their time creating the functionality that they need within the company, and another 50% go to the functions that most “community voices” gain. However, in order for you to gain an advantage from this fact, it is required that the functions you need are most demanded by the ClickHouse community .

- Imply's Druid developers are motivated to work on commonly used features, as this will allow them to maximize the reach of their business in the future.

- The development process of Druid strongly resembles the Apache model , when the software has been developed by several companies for several years, each of which has rather peculiar and different priorities, and there is no leading company among them. ClickHouse and Pinot are still far from a similar stage, since only Yandex and Linkedin respectively are engaged in them. A third-party contribution to the development of Druid has a minimal chance of being rejected due to the fact that it differs from the vision of the main developer - after all , Druid does not have a “main” developer company .

- Druid supports the "Developer API", which allows you to bring your own column types, aggregation mechanisms, possible options for "deep storage", etc., all of which you can keep in a code base separate from the Druid core itself. This API is documented by Druid developers, and they monitor its compatibility with previous versions. However, it is not "mature" enough, and breaks with almost every new release of Druid. As far as I know, similar APIs are not supported in ClickHouse and Pinot.

- According to Github, the largest number of people are working on Pinot - apparently, last year at least 10 person-years were invested in Pinot . For ClickHouse, this figure is about 6 person-years, and for Druid - 7. In theory, this should mean that Pinot improves faster than all the other systems that we consider.

The Druid and Pinot architectures are almost identical to each other, while ClickHouse stands slightly apart. Therefore, we first compare ClickHouse with the "generalized" Druid / Pinot architecture, and then discuss the minor differences between Druid and Pinot.

Differences between ClickHouse and Druid / Pinot

Data Management: Druid and Pinot

In Druid and Pinot, all the data in each “table” (whatever it is called in the terminology of these systems) is divided into a specified number of parts. On the time axis, data is usually divided at a given interval. Then these pieces of data are “sealed” individually into independent autonomous entities called “segments”. Each segment includes table metadata, compressed column data, and indexes.

Segments are stored in the file system of the “deep storage” storage (for example, HDFS) and can be uploaded to request processing nodes, but the latter are not responsible for the stability of segments, therefore, request processing nodes can be replaced relatively freely. Segments are not tied to specific nodes.and can be downloaded to these or other nodes. A dedicated server (called a “coordinator” in Druid and a “controller” in Pinot, but I refer to it as a “wizard” below) is responsible for assigning segments to nodes, and moving segments between nodes, if necessary.

This does not contradict what I noted above, all three systems have a static distribution of data between nodes, since loading segments and moving them in Druid - and as I understand it in Pinot - are expensive operations and therefore are not performed for each individual queue, but rather occur usually every few minutes / hours / days.

Segment metadata is stored in ZooKeeper - directly in the case of Druid, and using the Helix frameworkin pinot. In Druid, metadata is also stored in the SQL database, more on this in the section “Differences between Druid and Pinot”.

Data Management: ClickHouse

ClickHouse has no "segments" containing data falling within specific time ranges. There is no "deep storage" for data in it, the nodes in the ClickHouse cluster are also responsible for processing requests and the persistence / stability of the data stored on them. So you don’t need HDFS or cloud storage like Amazon S3.

ClickHouse has partitioned tables consisting of a specified set of nodes. There is no “central authority" or metadata server. All nodes between which this or that table is divided contain complete, identical copies of metadata, including the addresses of all other nodes on which sections of this table are stored.

The metadata of a partitioned table includes “weights” of nodes for distributing freshly recorded data - for example, 40% of the data should go to node A, 30% to node B and 30% to C. Normally, the distribution should occur evenly, “skew”, as in this As an example, it is required only when a new node is added to the partitioned table and it is necessary to quickly fill it with some data. Updates to these weights must be done manually by administrators of the ClickHouse cluster, or by an automated system built on top of ClickHouse.

Data Management: Comparison

The approach to data management in ClickHouse is simpler than in Druid and Pinot: no “deep storage” is required, only one type of nodes, no dedicated server for data management is required. But the ClickHouse approach leads to some difficulties when any data table grows so large that it needs to be split between ten or more nodes: the query gain becomes as large as the partitioning factor - even for queries that cover a small data interval:

ClickHouse data distribution tradeoff

In the example shown in the image above, the table data is distributed between three nodes in Druid / Pinot, but a query for a small data interval usually affects only two of them (until the interval crosses the segment boundary interval). In ClickHouse, any queries will be forced to affect three nodes — if the table is segmented between three nodes. In this example, the difference does not look so significant, but imagine what would happen if the number of nodes reaches 100 - while the segmentation factor can still be equal, for example, to 10 in Druid / Pinot.

To mitigate this problem, the largest ClickHouse cluster in Yandex, consisting of hundreds of nodes, is actually divided into many “sub-clusters” with several dozen nodes in each. The ClickHouse cluster is used in website analytics, and each data point has a “Website ID” dimension. There is a rigid binding of each site ID to a specific sub-cluster, where all the data for this site ID goes. On top of the ClickHouse cluster, there is a business logic layer that manages this data sharing when absorbing data and executing queries. Fortunately, in their usage scenarios, very few requests affect several site identifiers, and such requests do not come from users of the service, so they do not have a hard link to real time according to the service level agreement.

Another drawback of the ClickHouse approach is that when the cluster grows very fast, the data cannot be rebalanced automatically without the participation of a person who manually changes the “weights” of the nodes in the split table.

Druid Query Node Levels

Managing data with “easy to imagine” segments - this concept fits well with our cognitive abilities. Segments themselves can be relocated between nodes relatively easily. These two reasons allowed Druid to implement “ leveling ” the nodes involved in processing requests: old data is automatically moved to servers with relatively large disks, but less memory and CPU, which can significantly reduce the cost of a large Druid working cluster by slowing down requests to more old data.

This feature allows Metamarkets to save hundreds of thousands of dollars in Druid infrastructure costs every month — as opposed to using a “flat” cluster.

Levels of request processing nodes in Druid

As far as I know, ClickHouse and Pinot do not yet have similar functionality - it is assumed that all nodes in their clusters are the same.

Due to the fact that the architecture of Pinot is very similar to that of Druid, it seems to me that it will not be too difficult to add a similar function to Pinot. It will be harder in the case of ClickHouse, since the concept of “segments” is extremely useful for implementing this function, but it is still possible.

Data Replication: Druid and Pinot

The replication unit in Druid and Pinot is a single segment. Segments are replicated at the “deep storage” level (for example, to three replicas on HDFS, or using BLOB storage in Amazon S3), and at the request processing level: usually in Druid and Pinot, each segment is loaded onto two different nodes . A “master” server monitors replication levels for each segment and uploads the segment to a server if the replication factor falls below a specified level (for example, if any of the nodes stops responding).

Data Replication: ClickHouse

The replication unit in ClickHouse is a section of a table on a server (for example, all data from a table stored on a server). Like partitioning, ClickHouse replication is more “static and specific” than “cloud-style”: several servers know that they are replicas of each other (for some specific table; in the case of another table, the replication configuration may differ). Replication provides both resilience and query availability. When a disk is damaged on one node, data is not lost, since it is also stored on another node. When a node is temporarily unavailable, requests can be redirected to the replica.

In the largest ClickHouse cluster in Yandex, there are two identical sets of nodes in different data centers, and they are paired. In each pair, nodes are replicas of each other (a replication factor of two is used), and they are located in different data centers.

ClickHouse relies on ZooKeeper to manage replication - so if you don't need replication, then you don't need ZooKeeper either. This means that ZooKeeper is not required for ClickHouse deployed on a single node.

Data Absorption: Druid and Pinot

In Druid and Pinot, query processing nodes specialize in loading segments and serving data requests in segments; they do not accumulate new data and produce new segments.

When a table can be updated with a delay of one hour or more, segments are created using batch processing engines - for example, Hadoop or Spark. Both Druid and Pinot have first-class Hadoop support out of the box. There is a third-party plugin to support Druid indexing in Spark, but at the moment it is not officially supported. As far as I know, there is no such level of Spark support in Pinot, that is, you should be prepared to deal with Pinot interfaces and code, and then write Java / Scala code yourself, even if it should not be too difficult. (However, since the publication of the original article, Spark support for Pinot has been contributed by the contributor ).

When a table needs to be updated in real time, the idea of “real-time” nodes that do three things comes to the rescue: accepts new data from Kafka (Druid also supports other sources), serves requests with recent data, creates segments in the background and then writes them in the "deep vault".

Data Absorption: ClickHouse

The fact that ClickHouse does not need to prepare “segments” that contain all the data and fall within the specified time intervals allows us to build a simpler data absorption architecture. ClickHouse does not require a batch processing engine like Hadoop or real-time nodes. Regular ClickHouse nodes - the same ones that store data and serve requests to them - directly accept batch data records.

If the table is segmented, then the node that receives the packet record (for example, 10k rows) distributes the data according to the "weights" (see the section below). Lines are written in one packet, which forms a small "set". Many immediately converted to column format. Each ClickHouse host runs a background process that combines row sets into even larger sets. The ClickHouse documentation is strongly tied to the principle known as "MergeTree" and emphasizes the similarity of its work with the LSM tree , although it bothers me a bit, because the data is not organized into trees - they are in a flat columnar format.

Data Absorption: Comparison

Druid and Pinot data absorption is “difficult”: it consists of several different services, and managing them is hard work.

The absorption of data in ClickHouse is much simpler (which is offset by the complexity of managing "historical" data - that is, data not in real time), but there is one point here: you must be able to collect data in packages until ClickHouse itself. Automatic absorption and batch data collection from Kafka is available “out of the box”but if you use a different source of real-time data (anything is meant here, in the range between the request infrastructure, alternative to Kafka, and streaming processing engines, up to various HTTP endpoints), then you will have to create an intermediate collection service packages, or add code directly to ClickHouse.

Выполнение запроса

В Druid и Pinot имеется отдельный слой узлов, называемых «брокерами&кaquo;, которые принимают все запросы к системе. Они определяют, к каким «историческим» ( содержащим данные не в реальном времени) узлам обработки запросов должны быть отправлены подзапросы, основываясь на отображении сегментов в узлы, в которых сегменты загружаются. Брокеры хранят информацию об отображении в памяти. Брокер-узлы отправляют дальше подзапросы к узлам обработки запросов, и когда результаты этих подзапросов возвращаются, брокер объединяет их и возвращает финальный комбинированный результат пользователю.

I can’t presume why during the design of Druid and Pinot it was decided to introduce another type of nodes. However, now they seem to be an integral part of them, because when the total number of segments in a cluster begins to exceed ten million, information about mapping segments to nodes begins to occupy gigabytes of memory. It is very wasteful to allocate so much memory on each node to process requests. Here is another drawback that overlaps Druid and Pinot with their “segmented” data management architecture.

In ClickHouse , it is usually not necessary to select a separate set of nodes under the “request broker”. There is a special, ephemeral "distributed" type of tablein ClickHouse, which can be installed on any node, and queries to this table will do the same, for which the broker nodes in Druid and Pinot are responsible. Typically, such ephemeral tables are located on each node that participates in a partitioned table, so in practice each node can be an “entry point” for a request to the ClickHouse cluster. This node can issue the necessary subqueries to other sections, process its part of the request independently and then combine it with partial results from other sections.

When a node (or one of the processing nodes in ClickHouse, or a broker node in Druid and Pinot) issues subqueries to others, and one or more subqueries for some reason fail, ClickHouse and Pinot handle this situation correctly: they combine the results successfully completed subqueries together, and still return a partial result to the user. The Druid of this function is now very lacking : if in it the execution of the subquery fails, then the entire request will also fail.

ClickHouse vs. Druid or Pinot: Conclusions

The “segmented” approach to data management in Druid and Pinot versus simpler data management in ClickHouse defines many aspects of systems. However, it is important to note that this difference has little (or no effect) on the potential compression efficiency (however, the history of compression for all three systems has a sad end to the current state of affairs), or on the speed of request processing.

Clickhousesimilar to traditional RDMBS, for example, PostgreSQL. In particular, ClickHouse can be deployed to just one server. If the planned size is small - say, no more than about 100 CPU cores for processing requests and 1 TB of data, I would say that ClickHouse has significant advantages over Druid and Pinot due to its simplicity and the absence of the need for additional types of nodes, such as a “master” "," Nodes of absorption in real time "," brokers ". In this field, ClickHouse competes more with InfluxDB than with Druid or Pinot.

Druid and Pinotsimilar to Big Data systems like HBase. Here, we do not mean performance characteristics, but dependence on ZooKeper, dependence on persistent replicated storage (for example, HDFS), focusing on fault tolerance of individual nodes, as well as autonomous operation and data management that do not require constant human attention. For a wide range of applications, neither ClickHouse, nor Druid or Pinot are clear winners. First of all, you should take into account your ability to deal with the source code of the system, fix bugs, add new features, etc. This is discussed in more detail in the section “About performance comparison and system selection."

Secondly, you should take a look at the table below. Each cell in this table describes an application property that allows you to determine your preferred system. Lines are sorted out of order of importance. The importance of various properties may vary from application to application, but in general, the following approach can be applied: if your application matches the vast majority of lines with properties in one of the columns, then the system related to it is the preferred choice in your case.

| Clickhouse | Druid or Pinot |

|---|---|

| The organization has C ++ experts | The organization has Java experts |

| Small cluster | Large cluster |

| Some tables | Many tables |

| One dataset | Multiple Unrelated Datasets |

| Tables and data are permanently in the cluster | Таблицы и наборы данных периодически появляются в кластере и удаляются из него |

| Размер таблиц (и интенсивность запросов к ним) остается стабильным во времени | Таблицы значительно растут и сжимаются |

| Однородные запросы (их тип, размер, распределение по времени суток и т.д.) | Разнородные запросы |

| В данных есть измерение, по которому они могут быть сегментированы, и почти не выполняется запросов, которые затрагивают данные, расположенные в нескольких сегментах | Подобного измерения нет, и запросы часто затрагивают данные, расположенные во всем кластере |

| Облако не используется, кластер должен быть развернут на специфическую конфигурацию физических серверов | Кластер развернут в облаке |

| Нет существующих кластеров Hadoop или Spark | Кластеры Hadoop или Spark уже существуют и могут быть использованы |

Note: none of the properties above means that you should use the appropriate system (s), or avoid another. For example, if it is planned that your cluster will be large, this does not mean that you should definitely consider only Druid or Pinot, excluding ClickHouse. Most likely, in this situation, Druid or Pinot may be the best choice, but other useful properties can outweigh the scales in the direction of ClickHouse, which for some applications is the best choice even for large clusters.

Differences between Druid and Pinot

As noted more than once in this article, Druid and Pinot have very similar architectures. There are several fairly noticeable features that exist in one system and are absent in another, and areas in which each of the systems is developed much more strongly than the other. Nevertheless, everything that I am going to mention below can be reproduced in another system with a reasonable amount of effort.

There is only one significant difference between Druid and Pinot , which is too big to get rid of in the foreseeable future - this is the implementation of segment management in the master node. By the way, the developers of both systems probably would not want to do this anyway, since both approaches have their pros and cons - there is no one among them that would be better.

Segment Management in Druid

The master node in Druid (and none of the nodes in Pinot) are responsible for the safety of metadata in the data segments in the cluster, and the current display between the segments and data processing nodes on which the segments are loaded. This information is stored in ZooKeeper. However, Druid in addition stores this information in the SQL database, which is necessary for deploying a Druid cluster. I can’t say for what purpose such a decision was made, but now it gives the following advantages:

- В ZooKeeper хранится меньше данных. Только минимум информации об отображении идентификатора сегмента на список узлов, занимающихся обработкой запросов, куда загружен сегмент, сохраняется в ZooKeeper. Оставшиеся метаданные, к примеру, размер сегмента, список измерений и метрики, и т.д. — хранятся только в SQL базе данных.

- Когда сегменты данных вытесняются из кластера, поскольку они становятся слишком старыми (это общая функция всех баз данных временных рядов — она есть и в ClickHouse, и в Druid, и в Pinot), они выгружаются из узлов обработки запросов и их метаданные удаляются из ZooKeeper, но не из «глубокого хранилища» и не из базы данных SQL. Пока они не будут удалены из этих мест вручную, остается возможность «оживить» действительно старые данные быстро, если он потребуются для построения отчетов или исследований.

- Вряд ли это планировалось с самого начала, но теперь есть планы сделать зависимость Druid от ZooKeeper опциональной. Сейчас ZooKeeper используется для трех различных функций: управления сегментами, обнаружения сервисов и хранения свойств (например, для управления поглощением данных в реальном времени). Обнаружение сервисов может быть предоставлено Consul. Управление сегментами может быть реализовано при помощи HTTP-команд, и оно доступно нам благодаря тому, что функции хранения в ZooKeeper «бекапится» в базе SQL.

What we have to have in the dependencies of the SQL database leads to a greater workload, especially if the company has not yet used any SQL database. Druid supports MySQL and PostgreSQL, and there is an extension for Microsoft SQL Server. In addition, when Druid is deployed in the cloud, you can use standard services to manage RDBMS - for example, Amazon RDS.

Pinot Segment Management

Unlike Druid, which implements all the segment control logic independently and relies only on Curator to interact with ZooKeeper, Pinot delegates most of the segment and cluster management logic to the Helix framework .

On the one hand, I can understand that this gives Pinot developers the opportunity to focus on other parts of their system. In Helix, fewer bugs are possible than in the logic inside Druid itself, since it is tested in other conditions and since it was supposedly invested a lot more working time.

Helix, on the other hand, may limit Pinot to its “framework framework." Helix, and therefore Pinot, will most likely depend on ZooKeeper forever .

Next, I’m going to list less important differences between Druid and Pinot - in the sense that if you have a serious desire to repeat one of these functions on your system, then this will be entirely feasible.

Push Predicate in Pinot

If during the acquisition data is partitioned in Kafka according to some dimension keys, Pinot creates segments that contain information about this partition and then, when a query is performed with a predicate on this dimension, the broker node filters the segments so that as few segments as possible and the request processing nodes were affected.

This concept is originally called “ predicate pushdown ” and is important for maintaining high performance in some applications.

Druid currently supports key splitting if segments were created in Hadoop, but does not yet support segments created during real-time takeover. Druid does not currently implement the function of “pushing predicates” to brokers.

"Replaceable" Druid and masterful Pinot

Since Druid is used by various organizations and several companies take part in its development, he acquired support for several interchangeable options for almost any highlighted part or “service”:

- HDFS, Cassandra, Amazon S3, Google Cloud Storage or Azure Blob Storage, etc. as a "deep repository";

- Kafka, orr RabbitMQ, Samza, or Flink, or Spark, Storm, etc. (via Tranquility ) as a source of real-time data absorption;

- Druid itself, or Graphite, or Ambari, or StatsD, or Kafka as a "drain" for telemetry of the Druid cluster (metrics).

At the same time, Pinot was almost entirely developed exclusively within the walls of LinkedIn and had to meet the current needs of the company, so the choice that you are offered is not so great. It is necessary to use HDFS or Amazon S3 as a “deep storage”, and only Kafka is suitable for real-time data absorption. But if someone really needs it, it seems to me not difficult to add support for any other service in Pinot. In addition, positive developments can be expected in this direction, as Uber and Slack begin to use Pinot.

The data format and query engine are better optimized in Pinot

In particular, the following Pinot segment format features are currently missing in Druid:

- Сжатие проиндексированных столбцов с битовой гранулярностью, но байтовой гранулярностью в Druid.

- Инвертированный индекс опционален для каждого столбца. В Druid он является обязательным, иногда этого не требуется, но все равно занимает много места. Различие в потреблении места между Druid и Pinot, на которое указывает Uber в своих тестах, вполне возможно вызвано именно этим.

- Минимальные и максимальные значения в числовых столбцах записываются посегментно.

- Поддержка сортировки данных из коробки. В Druid этого можно достичь только вручную и слегка специфическим способом (как было описано в разделе «CloudFlare: ClickHouse против Druid»). Сортировка данных означает лучшее сжатие, и эта функция в Pinot — еще одна причина различия между Druid и Pinot в потреблении пространства (и производительности запросов!), на которую указывает Uber.

- Формат данных, используемый для многозначных столбцов, на данный момент лучше оптимизирован в Pinot, чем в Druid.

However, all this can be implemented in Druid. And despite the fact that the Pinot format is optimized much better than the Druid format, it is still quite far from being optimal. One example: Pinot (like Druid) uses only general-purpose compression (like Zstd) and even implemented the idea of compression from Gorilla .

Unfortunately, Uber for the most part used count (*) queries to compare the performance of Druid and Pinot regarding query execution [ 1 , 2 ], which now in Druid is a dumb linear scan, although it is not difficult to replace it with a correct O (1) implementation . This is another example of meaningless black-box comparisons that we talked about earlier.

In my opinion, the reasons for the strong difference in query performance

GROUP BY,observed in Uber are worth looking for in the lack of data sorting in Druid segments, as noted above in this section.Druid has a smarter segment assignment (balancing) algorithm

The Pinot algorithm is to assign a segment to request processing nodes that have the fewest segments currently loaded. The Druid algorithm is much more complex; it takes into account the table of each segment and time, and uses complex formulas for calculating the final coefficient , according to which the query processing nodes will be ranked to select the best one, which will be assigned a new segment. This algorithm showed an acceleration in query execution speed in Metamarkets production by 30–40% . Although, even despite such a result, we are still not too happy with them - details can be found in a separate article .

I don’t know how LinkedIn manages everything with such a simple Pinot segment balancing algorithm, but it is quite possible that they will expect significant improvements in performance if they decide to spend time improving their algorithm.

Pinot is more resistant to failures when performing complex queries

As already mentioned in the section “Query Execution”, when a broker node creates subqueries to other nodes, some subqueries fail, but Pinot combines the results of all successfully executed subqueries and still returns a partial result to the user.

Druid has such a feature at the moment.

Hierarchy of request processing nodes in Druid

See a similar section above. Druid allows you to enter levels of query processing nodes for old and new data, and for nodes with "old" data, the ratio of "CPU resources, RAM / number of loaded segments" is much lower, which allows you to win on infrastructure costs in exchange for poor query performance when accessing to old data.

As far as I know, there is no similar functionality in Pinot at the moment.

Conclusion

ClickHouse, Druid and Pinot have a fundamentally similar architecture , and occupy their own niche between general-purpose Big Data frameworks like Impala, Presto, Spark, and column databases with the correct support for primary keys, point updates and deletes, like InfluxDB.

Due to the similarity of architectures, ClickHouse, Druid and Pinot have approximately the same “optimization limit”. But in their current state, all three systems are still immature and very far from this limit. Significant improvements in the performance of these systems (in relation to specific usage scenarios) can be achieved by several man-months of work of experienced engineers.

Я бы не рекомендовал вам сравнивать производительность данных систем между собой — выберите для себя ту, чей исходный код вы способны понять и модифицировать, или ту, в которую вы хотите инвестировать свои ресурсы.

Of these three systems, ClickHouse stands slightly apart from Druid and Pinot - while Druid and Pinot are almost identical, and they can be considered two independently developed implementations of the same system.

ClickHouse is more like "traditional" databases like PostgreSQL. ClickHouse can be installed on one node. With small scales (less than 1 TB of memory, less than 100 CPU cores), ClickHouse looks much more interesting than Druid or Pinot - if you still want to compare them - due to the fact that ClickHouse is simpler and has fewer moving parts and services. I would even say that on such a scale he is more likely to become a competitor for InfluxDB or Prometheus, and not for Druid or Pinot.

Druid and Pinot are more reminiscent of other Big Data systems from the Hadoop ecosystem. They retain their “self-managing” properties even on a very large scale (more than 500 nodes), while ClickHouse will require a lot of professional SRE for this. In addition, Druid and Pinot have a winning position in terms of optimizing the infrastructure cost of large clusters, and are better suited for cloud environments than ClickHouse.

The only long-term difference between Druid and Pinot is that Pinot is dependent on the Helix framework and will continue to be dependent on ZooKeeper, while Druid can get away from dependence on ZooKeeper. On the other hand, the installation of Druid will continue to depend on the availability of any SQL database. At the moment, Pinot is better optimized than Druid.

If you have already encountered the need to compare these systems and made your choice, then come to one of our conferences and tell us about your case: about exactly what tasks and what rake (and probably they were) you met. Although, of course, databases are far from the only topic. The closest RIT ++ festival at the end of the application deadline ( until April 9 ) includes the following areas: frontend , backend , operation and management . Participants are usually most interested in learning about specific examples, but speeches in the form of reviews and studies are also possible - the main thing is that the topic is of interest to you personally.