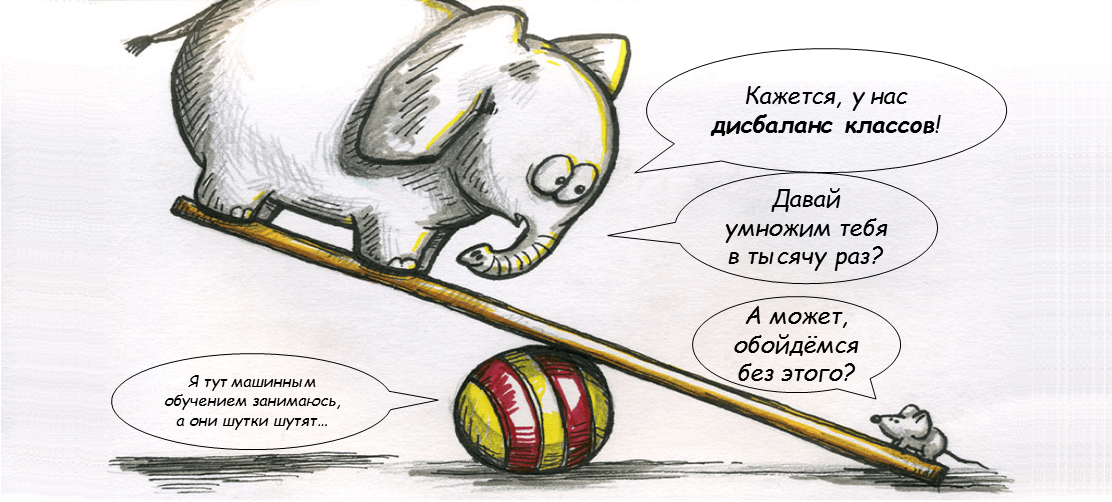

Do I need to be afraid of class imbalances?

There are many posts and resources online that teach us how to deal with class imbalance in classification tasks. They usually offer sampling methods: artificially duplicate observations from a rare class (oversampling), or throw out some of the observations from a common class (undersampling). In this post, I want to make it clear that the “curse” of class imbalance is largely a myth, and that it matters only for certain types of tasks.

To begin with, not all machine learning models work poorly with unbalanced classes. Most probabilistic models depend only weakly on class balance. Problems usually arise when we move to non-probabilistic models or to multiclass classification.

In logistic regression (and its generalization, neural networks), the class balance strongly affects the intercept, but only very weakly affects the slope coefficients. Indeed, when the class balance changes, the odds predicted by a binary logistic regression are multiplied by a constant, and this effect is absorbed by the intercept.
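
The argument can be written out in a few lines. This is a sketch under two assumptions of mine: the class-conditional densities $p(x \mid y)$ stay the same when the balance changes, and the logistic model is correctly specified. The symbols $\pi$ and $\pi'$ (share of the positive class before and after the change) and $\beta_0$, $\beta$ (intercept and slopes) are my notation.

```latex
\begin{aligned}
\frac{P'(y=1 \mid x)}{P'(y=0 \mid x)}
  &= \frac{p(x \mid y=1)\,\pi'}{p(x \mid y=0)\,(1-\pi')}
   = \frac{P(y=1 \mid x)}{P(y=0 \mid x)}
     \cdot \frac{\pi'/(1-\pi')}{\pi/(1-\pi)}, \\[4pt]
\log \frac{P'(y=1 \mid x)}{P'(y=0 \mid x)}
  &= \underbrace{\beta_0 + \log\frac{\pi'}{1-\pi'} - \log\frac{\pi}{1-\pi}}_{\beta_0'}
     \;+\; \beta^\top x .
\end{aligned}
```

Only the intercept $\beta_0'$ moves; the slope vector $\beta$ is the same in both lines. On real data the fitted slopes will differ a little, because the model is never exactly right and the sample is finite.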

In decision trees (and their generalizations, random forests and gradient boosting), class imbalance affects the impurity measures of the leaves, but this effect is roughly proportional across all candidates for the next split, and therefore usually has little influence on which splits are chosen.

On the other hand, class imbalance can seriously affect non-probabilistic models such as SVMs. An SVM places the separating hyperplane so that approximately the same number of positive and negative examples lie inside the margin or on its wrong side. A change in the class balance can therefore change this number, and with it the position of the decision boundary.

When we use probabilistic models for binary classification, everything is OK: during training, the models are not very dependent on the balance of classes, and in testing we can use metrics that are insensitive to the balance of classes. Such metrics (for example, ROC AUC) depend on the predicted class probabilities, and not on the “hard” discrete classification.
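
Here is a minimal sketch of why ROC AUC does not care about class balance. It computes AUC directly from its definition (the probability that a random positive gets a higher score than a random negative), using scores I made up for illustration. Copying every negative 50 times is an idealized way to change the balance without changing the score distributions.

```python
from itertools import product


def roc_auc(scores_pos, scores_neg):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair.
    """
    wins = 0.0
    for sp, sn in product(scores_pos, scores_neg):
        if sp > sn:
            wins += 1.0
        elif sp == sn:
            wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical model scores for 3 positives and 4 negatives.
pos = [0.9, 0.7, 0.4]
neg = [0.8, 0.3, 0.2, 0.1]

balanced_auc = roc_auc(pos, neg)
# Same negatives, repeated 50 times: 3 positives versus 200 negatives.
imbalanced_auc = roc_auc(pos, neg * 50)
```

Both values are the same, because every pairwise comparison is simply repeated 50 times. With a real resample the two estimates would agree only approximately.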

However, metrics such as ROC AUC do not generalize well to multiclass classification, and we usually use plain accuracy to evaluate multiclass models. Accuracy has well-known problems with class imbalance: it is based on a “hard” classification, and it can completely ignore rare classes. This is where many practitioners turn to sampling. However, if you stay true to probabilistic predictions and use the likelihood (aka cross-entropy) to evaluate the quality of the model, class imbalance can be handled without sampling.
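
A small sketch in plain Python shows the difference. The class counts and both sets of predicted probabilities are invented for illustration. Model A outputs the base rates for every example; model B shifts some probability toward the true rare class but still ranks the majority class first everywhere.

```python
import math


def accuracy(y_true, probs):
    """Share of examples whose most probable class is the true one."""
    hits = 0
    for y, p in zip(y_true, probs):
        predicted = max(range(len(p)), key=p.__getitem__)
        hits += predicted == y
    return hits / len(y_true)


def log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-likelihood (cross-entropy) of the true classes."""
    total = sum(math.log(max(p[y], eps)) for y, p in zip(y_true, probs))
    return -total / len(y_true)


# Hypothetical 3-class problem: 90 / 8 / 2 examples.
y_true = [0] * 90 + [1] * 8 + [2] * 2

# Model A: ignores the features, always predicts the base rates.
model_a = [[0.90, 0.08, 0.02]] * 100

# Model B: has learned something about the rare classes,
# but class 0 is still the most probable class for every example.
model_b = (
    [[0.94, 0.05, 0.01]] * 90
    + [[0.55, 0.40, 0.05]] * 8
    + [[0.55, 0.05, 0.40]] * 2
)

acc_a, acc_b = accuracy(y_true, model_a), accuracy(y_true, model_b)
ll_a, ll_b = log_loss(y_true, model_a), log_loss(y_true, model_b)
```

Accuracy is identical for the two models, since both always pick class 0. The log-loss is clearly lower for model B, so the likelihood rewards what it has learned about the rare classes even though the “hard” predictions never change.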

Sampling makes sense if you don't need probabilistic classification. In that case, the true class distribution is simply irrelevant to you, and you can distort it however you like. Imagine a task where you do not need to know the probability that the picture in front of you shows a cat, but only whether it looks more like a picture of a cat than a picture of a dog. In this setting, you may want cats and dogs to have the same number of “votes”, even if cats make up the vast majority of the training sample.

In other tasks (such as fraud detection, click prediction, or my favorite, credit scoring), what you actually need is not a “hard” classification but a ranking: which customers are more prone to fraud, clicks, or default than others? In this case, the class balance is not important at all, because the decision thresholds are usually chosen manually anyway, based on economic considerations such as expected losses.
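
One common way to derive such a threshold from expected losses can be sketched as follows. The two cost figures are hypothetical, and the rule assumes the predicted probabilities are reasonably well calibrated. Rejecting is cheaper in expectation when `p * cost_false_negative > (1 - p) * cost_false_positive`, which rearranges to the threshold below.

```python
def decision_threshold(cost_false_positive, cost_false_negative):
    """Probability above which acting (e.g. rejecting) has lower expected cost."""
    return cost_false_positive / (cost_false_positive + cost_false_negative)


# Hypothetical economics of a loan:
# rejecting a good customer loses 100 of profit,
# approving a customer who defaults loses 900.
threshold = decision_threshold(cost_false_positive=100, cost_false_negative=900)

# Hypothetical predicted default probabilities for four applicants.
scores = [0.02, 0.08, 0.15, 0.40]
rejected = [p > threshold for p in scores]
```

Note that the class balance of the training data appears nowhere in this rule; only the costs and the predicted probabilities do.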

However, in such tasks it is often useful to predict the “true” probability of fraud (or of a click, or of default), in which case sampling that distorts these probabilities is undesirable. This is how, for example, default probability models for credit scoring are built: a gradient boosting model or a neural network is trained on the unbalanced data, and then it is checked at length that the predicted default probabilities match the actual default rates on average across different segments.
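
A minimal sketch of such a check, assuming you have a segment label, a predicted probability, and an observed 0/1 outcome for each customer. The function name, the segment names, and the numbers are all invented for illustration.

```python
from collections import defaultdict


def calibration_by_segment(segments, predicted, observed):
    """Return {segment: (mean predicted probability, observed event rate)}."""
    # Per segment: [sum of predictions, number of events, number of rows].
    totals = defaultdict(lambda: [0.0, 0, 0])
    for seg, p, y in zip(segments, predicted, observed):
        entry = totals[seg]
        entry[0] += p
        entry[1] += y
        entry[2] += 1
    return {seg: (s / n, e / n) for seg, (s, e, n) in totals.items()}


# Hypothetical data: two customer segments with four customers each.
segments = ["new"] * 4 + ["existing"] * 4
predicted = [0.1, 0.2, 0.3, 0.2, 0.05, 0.05, 0.1, 0.2]
observed = [0, 0, 1, 0, 0, 0, 0, 0]

report = calibration_by_segment(segments, predicted, observed)
```

Each entry of `report` pairs the average predicted probability with the actual rate in that segment. With four rows per segment the comparison is meaningless, of course; a real check needs enough observations per segment for the observed rates to be stable.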

Therefore, think three times before worrying about class imbalances and trying to “fix” them - maybe it’s better to spend valuable time on feature engineering, parameter selection, and other equally important stages of your data analysis.