The future of Kubernetes is virtual machines


Reading the tea leaves.


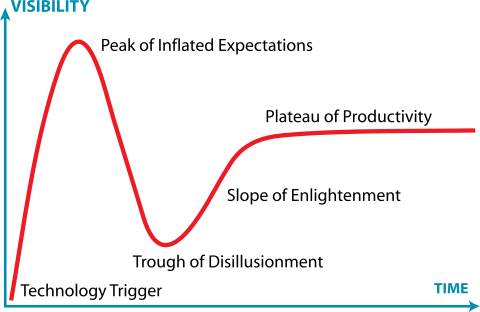

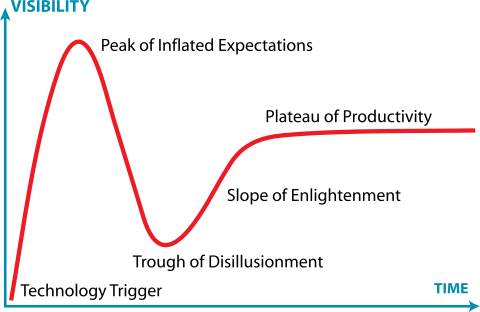


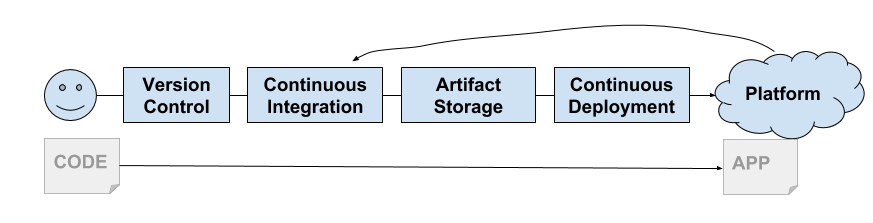

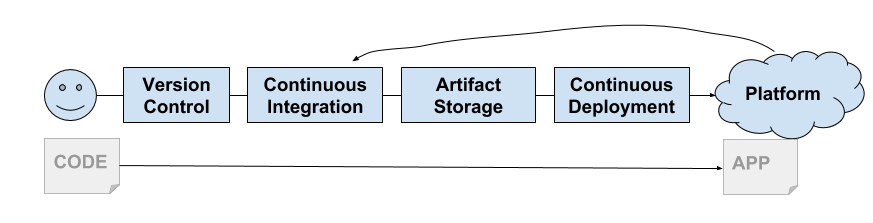


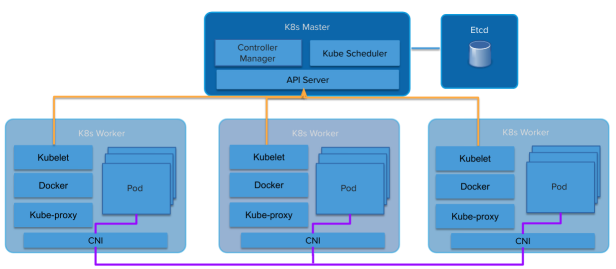

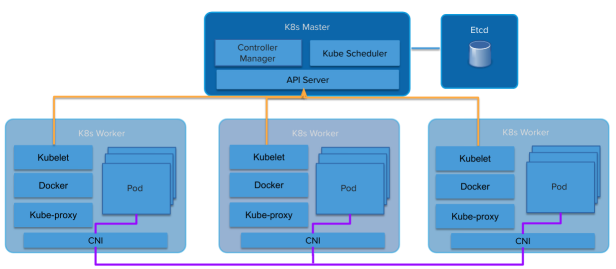


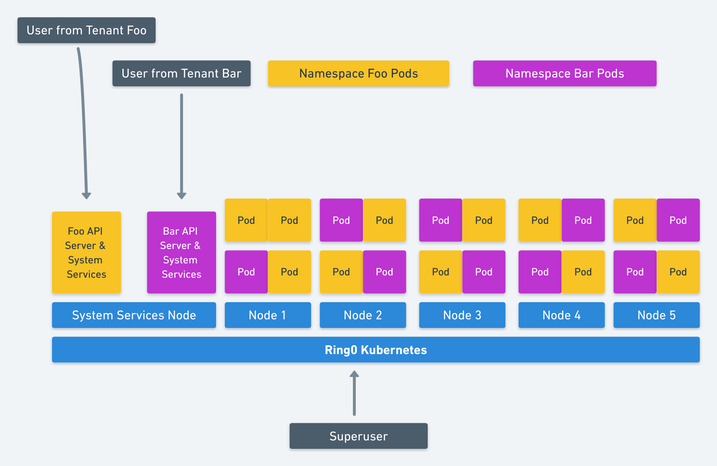

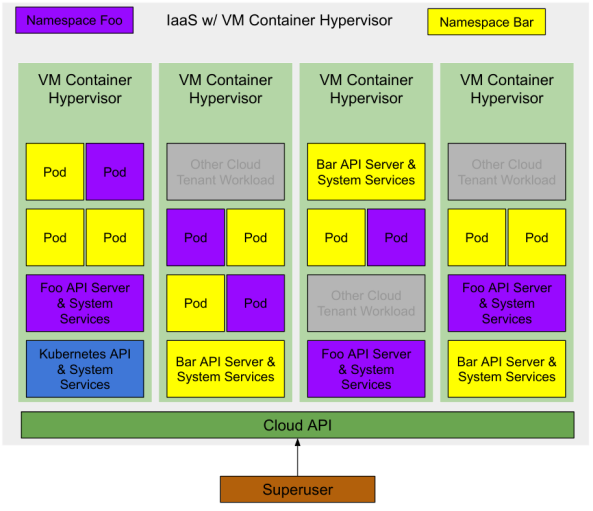

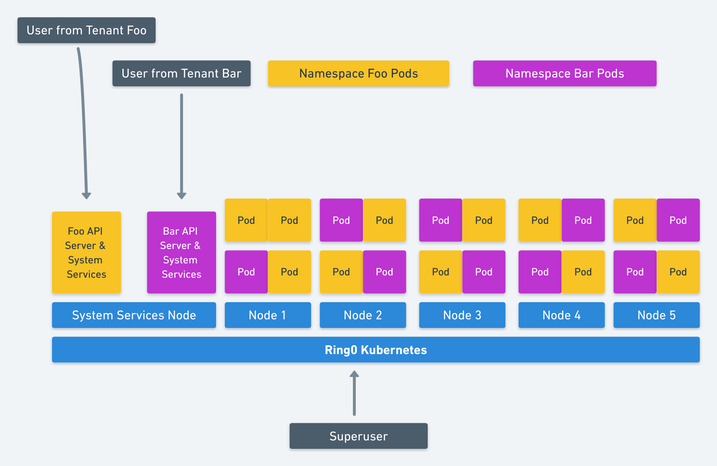


In my work, Kubernetes has already played an important role, and in the future it will become even more important. But 2018 is coming to an end, so let's forget about modesty and make a bold prediction:

The future of Kubernetes is virtual machines, not containers.

According to the Chinese zodiac, 2018 was the year of the dog, but in technology it was the year of Kubernetes. Many people are only now learning about this revolutionary technology, and IT departments everywhere are trying to develop a “Kubernetes strategy” [1]. Some organizations have already moved large workloads to Kubernetes.

[1] If you are trying to develop a Kubernetes strategy, you have already failed, but this is a topic for another article.

In other words, there are plenty of people at every stage of the “Gartner hype cycle” for Kubernetes. Some are stuck in the trough of disillusionment or drowning in the pit of despair.

Jeremykemp, Wikipedia. Creative Commons CC BY-SA 3.0

I am a big fan of containers, and I am not going to claim that containers are dead. In 2013 Docker gave us a wrapper around Linux containers: an amazing new way to build, package, share, and deploy applications. It arrived at just the right time, when we were starting to take continuous delivery seriously. Its model was ideal for the delivery pipeline and contributed to the rise of PaaS and then CaaS platforms.

Engineers at Google saw that the IT community was finally ready for containers. Google has been using containers for a very long time and can, in a sense, be considered the inventor of containerization. They started developing Kubernetes which, as is now well known, is an open source reincarnation of Google's own Borg platform.

Soon all the big clouds offered Kubernetes support (GKE, AKS, EKS). On-premises vendors were also quick to build Kubernetes-based platforms (Pivotal Container Service, OpenShift, etc.).

Soft multi-tenancy

One annoying problem remained, and it can be considered a shortcoming of containers: multi-tenancy.

Linux containers were not designed as secure isolated sandboxes (like Solaris Zones or FreeBSD Jails). Instead, they are built on a shared kernel model that uses kernel features to provide basic process isolation. As Jessie Frazelle would say, “containers are not a real thing.”

This is made worse by the fact that most Kubernetes components are not tenant-aware. Sure, you have namespaces and Pod security policies, but the API itself has no notion of tenants. Neither do internal components such as kubelet or kube-proxy. As a result, Kubernetes implements a soft tenancy model.

Kubernetes architecture

The abstractions leak further. Platforms built on top of containers inherit many aspects of this soft tenancy. Platforms built on top of multi-tenant virtual machines (VMware, Amazon Web Services, OpenStack, etc.) inherit their hard tenancy.

Kubernetes' soft tenancy model puts service providers and distributions in an odd position. The Kubernetes cluster itself becomes the line of “hard tenancy”. Even within a single organization there are many reasons to require hard tenancy between users (or applications). Since public clouds offer fully managed Kubernetes as a service, it is fairly easy for customers to get a cluster of their own and use the cluster boundary as the isolation point.

Some Kubernetes distributions, such as Pivotal Container Service (PKS), are well aware of this tenancy problem and use a similar model, providing the same Kubernetes-as-a-service experience you get from a public cloud, but in your own data center.

This has led to a “many clusters” model in place of “one big shared cluster”. It is not uncommon for customers of Google's GKE to have dozens of Kubernetes clusters deployed for several teams. Often each developer gets their own cluster. This behavior produces a shocking number of instances (Kubesprawl).

Alternatively, operators run Kubernetes in their own data centers and either take on the extra work of managing many clusters or accept the soft tenancy of one or two large clusters.

Usually the smallest cluster is four machines (physical or virtual): one for the Kubernetes master (three for HA) and three for Kubernetes workers. A lot of money is spent on systems that sit mostly idle.
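To make the cost of the many-clusters model concrete, here is a minimal sketch of the arithmetic. It uses only the figures from the paragraph above (one master, or three for HA, plus three workers per cluster). The function name and the team count of 12 are hypothetical, chosen purely for illustration.

```python
def machines_for_clusters(clusters: int, ha: bool = False, workers: int = 3) -> int:
    """Machines needed when every tenant gets a dedicated cluster.

    Assumes one master (three for HA) plus three workers per cluster,
    as in the text. The function name is illustrative only.
    """
    masters = 3 if ha else 1
    return clusters * (masters + workers)


# Hypothetical example: 12 teams, one cluster each.
print(machines_for_clusters(12))           # non-HA: 12 * (1 + 3) = 48
print(machines_for_clusters(12, ha=True))  # HA:     12 * (3 + 3) = 72
```

Every one of those machines is paid for whether or not its tenant is running anything, which is the idle spend the text refers to.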

So Kubernetes needs to move toward hard multi-tenancy somehow. The Kubernetes community is well aware of this need. A multi-tenancy working group has already been established. It is working hard on the problem and has several models, with proposals for how to address each one.

Jessie Frazelle wrote a blog post on this topic, which is great because she is much smarter than I am, so I can link to her and save myself ten years of hard work trying to reach her level. If you have not read that post, go read it right now.

Just very small VMs optimized for speed ...

Kata Containers is an open source project and community working to build a standard implementation of lightweight virtual machines that look and feel like containers, but provide the workload isolation and security advantages of VMs.

Jessie suggests using VM container technology such as Kata Containers. These offer VM-level isolation but behave like containers. This lets Kubernetes give each tenant (we assume one tenant per namespace) its own set of Kubernetes system services running in nested VM containers (a VM container inside a virtual machine provided by the IaaS infrastructure).

Image by Jessie Frazelle: hard multi-tenancy in Kubernetes

This is an elegant solution to Kubernetes multi-tenancy. Her proposal goes even further and has Kubernetes use nested VM containers for the workloads (Pods) running on Kubernetes, which significantly increases resource utilization.

There is at least one more optimization to make: build a suitable hypervisor for the underlying infrastructure or cloud provider. If the VM container is a first-class abstraction provided by the IaaS, resource utilization increases even further. The minimum number of VMs needed to start a Kubernetes cluster drops to one machine (or three for HA), which hosts the Kubernetes control plane exposed to the “superuser”.

Resource (cost) optimized multi-tenancy

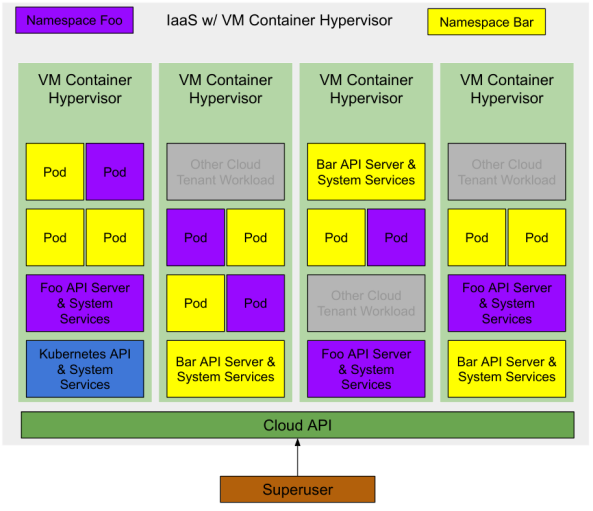

A Kubernetes deployment with two namespaces and several running applications would look something like this:

Note: There are other workloads on the same IaaS infrastructure. Since these are VM containers, they have the same level of isolation as ordinary cloud VMs, so they can run on the same hypervisor with minimal risk.

Initially, no infrastructure is deployed in the cloud, so the cost to the superuser is zero.

The superuser requests a Kubernetes cluster from the cloud. The cloud provider creates a single VM container (or three for HA) that runs the main Kubernetes API and system services. The superuser can deploy Pods in the system namespace or create new namespaces to delegate access to other users.

The superuser creates two namespaces, foo and bar. Kubernetes requests two VM containers from the cloud for each namespace's control plane (Kubernetes API and system services). The superuser delegates access to these namespaces to some users, each of whom starts some workloads (Pods), and the respective control planes request VM containers for those workloads.

At every stage, the superuser pays only for the resources actually consumed. The cloud provider manages this capacity, which is available to any user of the cloud.
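The walkthrough above can be sketched as a simple count of billed VM containers after each step. This is a toy model, not a real API. It assumes one control-plane VM container per namespace (two in total, though the wording could also be read as two each), one VM container per Pod, and a hypothetical two Pods per namespace.

```python
def walkthrough(ha: bool = False, pods_per_namespace: int = 2) -> list:
    """Return the number of billed VM containers after each step."""
    billed = 0
    history = [billed]              # nothing deployed yet: cost is zero

    billed += 3 if ha else 1        # cluster control plane (three for HA)
    history.append(billed)

    namespaces = ["foo", "bar"]
    billed += len(namespaces)       # assumption: one control-plane VM container per namespace
    history.append(billed)

    # Assumption: every Pod runs in its own VM container.
    billed += len(namespaces) * pods_per_namespace
    history.append(billed)
    return history


print(walkthrough())         # [0, 1, 3, 7]
print(walkthrough(ha=True))  # [0, 3, 5, 9]
```

The count starts at zero and grows only as control planes and workloads are actually requested, in contrast to a conventional cluster that needs its full set of machines up front.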

I'm not really saying anything new ...

Cloud providers are already working in this direction. You can see it by watching what is happening in the open source communities (perhaps Amazon has already quietly done it with Fargate).

The first clue is Virtual Kubelet, an open source tool designed to masquerade as a kubelet. It connects Kubernetes to other APIs, which would allow Kubernetes to request VM containers from a cloud's native scheduler.
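The idea of a component that masquerades as a node can be illustrated with a toy model. The sketch below is not the Virtual Kubelet API (the real project is written in Go). Both class names and all method names are hypothetical, and the "cloud" is just an in-memory dictionary.

```python
import itertools


class ToyCloudAPI:
    """Hypothetical cloud API that starts one VM container per request."""

    def __init__(self):
        self.running = {}                 # VM container id -> (pod name, image)
        self._ids = itertools.count(1)    # never reuse an id

    def start_vm_container(self, name: str, image: str) -> str:
        vm_id = f"vm-{next(self._ids)}"
        self.running[vm_id] = (name, image)
        return vm_id

    def stop_vm_container(self, vm_id: str) -> None:
        self.running.pop(vm_id, None)


class ToyVirtualNode:
    """Looks like a single node to the scheduler, but owns no machines.

    Every Pod assigned to it is forwarded to the cloud API instead of
    being started on a local container runtime.
    """

    def __init__(self, cloud: ToyCloudAPI):
        self.cloud = cloud
        self.pods = {}                    # pod name -> VM container id

    def create_pod(self, name: str, image: str) -> None:
        self.pods[name] = self.cloud.start_vm_container(name, image)

    def delete_pod(self, name: str) -> None:
        vm_id = self.pods.pop(name, None)
        if vm_id is not None:
            self.cloud.stop_vm_container(vm_id)


cloud = ToyCloudAPI()
node = ToyVirtualNode(cloud)
node.create_pod("web", "nginx")
node.create_pod("api", "example/api")
node.delete_pod("web")
print(node.pods)           # {'api': 'vm-2'}
print(len(cloud.running))  # 1
```

The point of the design is that capacity lives on the cloud side: the "node" holds no machines of its own, so nothing is running (or billed) unless a Pod exists.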

Other clues are the many emerging VM container technologies, such as the already mentioned Kata Containers, as well as Firecracker from Amazon and gVisor from Google.

Conclusion

If the improvements to the hard tenancy model are done right, we get the Holy Grail of Kubernetes virtualization: complete isolation of workloads and no wasted cost.

If you are not in the public cloud, you still get the benefit of higher resource utilization, which pays off in lower hardware requirements.

Hopefully VMware and OpenStack are paying attention and will release hypervisors based on lightweight VM containers, along with matching Virtual Kubelet implementations.