All the pain of p2p development

Good afternoon, Habr community! Today I would like to talk about a magical and wonderful Tensor project: Remote Assistant. It is a remote access system that connects millions of clients and operators within the common SBIS client base. Remote Assistant is now closely integrated with online.sbis.ru. Every day we register more than ten thousand connections and tens of hours of session time. In this article we will talk about how we establish p2p connections and what to do if that fails.

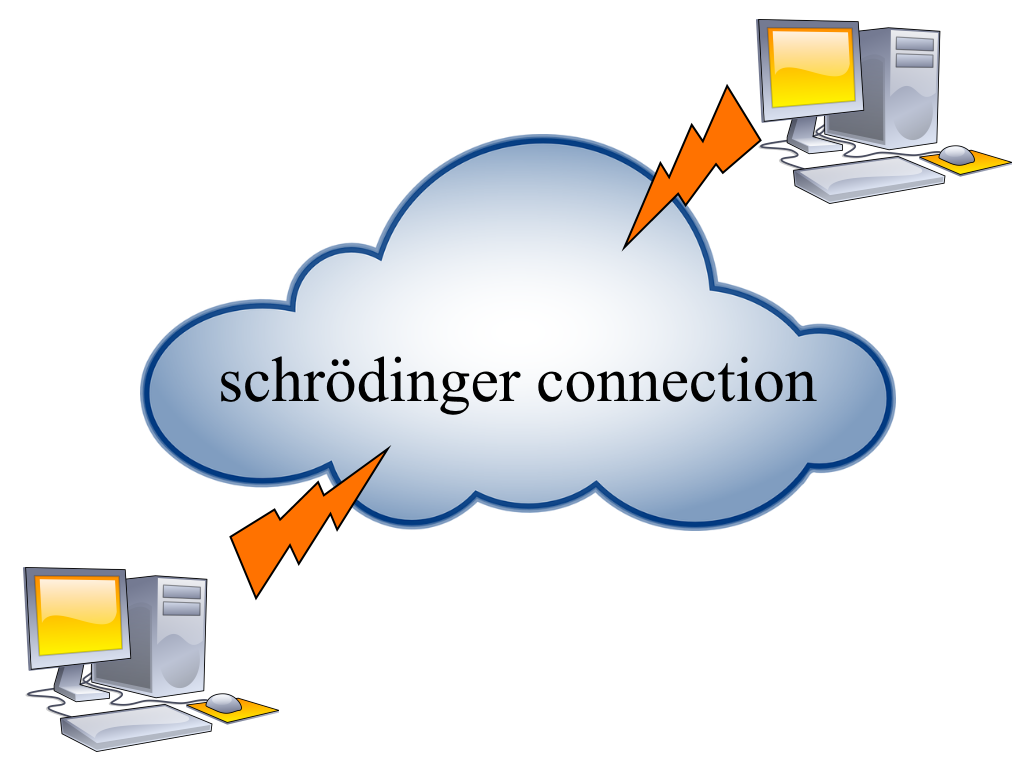

Experience is the son of difficult mistakes

There are plenty of remote access systems: all kinds of free VNC variations as well as quite powerful paid solutions offering a wide range of functionality. Initially, our company used an adaptation of one such solution, UltraVNC. It is a great free system that lets you connect to another PC if you know its IP. What to do if the PC has no direct access to the Internet has already been covered on Habr, and we will not touch on that topic. Such a solution is sufficient only up to a relatively small number of simultaneous connections. A step to the left, a step to the right, and the trouble begins: scaling, usability, integration into the system, and the complexity of the improvements that inevitably appear over the software life cycle. We ran into all of it.

So, it was decided to reinvent the wheel and create our own remote desktop management system that could be integrated into the overall SBIS ecosystem. Of course, the easiest way to connect 2 PCs, used by everyone who is not too lazy, is by a numerical identifier. In our implementation, we use random 6-digit numbers that are not tied to a specific client.

One very famous person once said:

Theory is when everything is known, but nothing works.

Practice is when everything works, but no one knows why.

We combine theory and practice: nothing works ...

and no one knows why!

At the very beginning of our journey, this quote was very close to the truth: in theory we understood how to “introduce” the client and the operator to each other, but in practice everything turned out to be far from trivial.

Introduction to p2p

To connect 2 devices, we use a signaling server - an intermediary that both parties can reach. Its role is to register participants and exchange information between them in real time. Through it, without any hassle, we exchange endpoints (an IP address and port pair, i.e. an access point) in order to establish a connection.

This signaling server, which we call the remote helper manager (RHM), is a pool of systems written in nodejs that ensure the fault-tolerant operation of the entire service. Well, "fault-tolerant" ... or so we hope :). A connection is assigned to one of the servers on the round-robin principle. Thus, the client and the operator can end up on different servers, and all the mechanics of their synchronization and coordination are kept entirely out of the desktop application.

All the work comes down to exchanging service packets, with which the parties can uniquely identify each other and perform actions relatively synchronously, for example, start collecting connection candidates or start the connection attempt itself.

By the way, do not do as we did and do not shoot yourself in the foot: if you use TCP port 443, use TLS rather than plaintext traffic. More and more firewalls block such traffic and drop the connection, often on the provider's side.

The most common communication protocols on the Internet are UDP and TCP. UDP is quick and lightweight, but has no native way to guarantee packet delivery and ordering. TCP is free of these drawbacks, but establishing a p2p connection over it is somewhat more complicated. And given the latest trends, it seems to me that direct TCP connections may sink into oblivion altogether.

Whether a p2p connection can be set up far from always depends on your ability to work with network protocols. For the most part it depends on the specific network configuration, most often on NAT (network address translation) and / or firewall settings.

It is customary to divide NAT into 4 types, which differ in the rules for passing packets from the external network to the end user:

- Symmetric NAT

- Cone / Full Cone NAT

- Address restricted cone NAT

- Port restricted cone NAT

By the way, some advanced devices let you select the mode directly from the configuration panel, but that is beside the point here.

In most cases, NAT can be broken through by initiating data transfer to the host from which you expect to receive a response. To do this, the remote side has to learn its external endpoint and tell it to us. We, in turn, need to do the same.

To find out our IP address and port on the external device (let's call it a router for simplicity), we use STUN (Session Traversal Utilities for NAT) and TURN (Traversal Using Relays around NAT) servers: STUN to determine the external IP:port (endpoint) for the UDP protocol, TURN for TCP.

Why this way, when it would be much easier to get the external IP from our own signaling server?

There are at least 4 arguments for this:

- The ability to transparently extend the list of servers (both our own and publicly available ones) used for collecting endpoints, thereby increasing the resilience of the system.

- The STUN and TURN protocols complement each other and are widely used, so collecting endpoints and relaying traffic require a minimum of attention.

- The STUN and TURN protocols are very similar. Once you have figured out the structure of STUN packets, TURN comes easily. And TURN gives us the opportunity to relay traffic when an attempt to establish a direct connection fails.

- We already used the STUN / TURN server “coturn” in our video call project, which meant we could reuse its capacity with minimal investment in hardware.

Coturn is an open-source implementation of a TURN and STUN server. Its use, as practice has shown, is not at all limited to WebRTC. In my opinion, it is a fairly flexible and undemanding tool. Yes, it has no built-in horizontal scaling, but that can be solved, for example, with the help of the signaling server.

How is communication with the server built using the STUN / TURN protocol?

The steps for obtaining endpoints are documented in RFC 3489, 5389, 5766 and 6062.

All messages of the STUN or TURN protocol are structured as follows:

Accordingly:

- 2 bytes for the message type

- 2 bytes for its length (the size of all subsequent attributes)

- 12 bytes for a random identifier in TURN packets and 16 bytes in STUN packets. The sizes differ by 4 bytes: in a TURN packet these bytes are reserved for the MagicCookie constant.

In general, overhead information is enclosed in the first 20 bytes of the packet.

Attributes also consist of:

- 2 bytes per attribute type

- 2 bytes for its length

- attribute value itself

It is important that the total length of an attribute is a multiple of 4 bytes. If, say, the attribute length value is 7, then the value must be padded at the end with (4 - 7 % 4) % 4 = 1 byte of empty data, so that the whole attribute takes 2 + 2 + 7 + 1 = 12 bytes.
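The header and attribute layout described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the helper names (`pad_len`, `pack_attribute`, `pack_message`) are made up for the example, the header follows the RFC 5389 layout with the MagicCookie, and the SOFTWARE attribute is used only because it is a convenient attribute with a free-form value.

```python
import os
import struct

MAGIC_COOKIE = 0x2112A442      # fixed constant from RFC 5389
BINDING_REQUEST = 0x0001       # STUN Binding request message type
ATTR_SOFTWARE = 0x8022         # SOFTWARE attribute, used here only as an example


def pad_len(value_len: int) -> int:
    """Number of padding bytes needed to reach the next 4-byte boundary."""
    return (4 - value_len % 4) % 4


def pack_attribute(attr_type: int, value: bytes) -> bytes:
    """2 bytes of type + 2 bytes of (unpadded) length + value + zero padding."""
    head = struct.pack("!HH", attr_type, len(value))
    return head + value + b"\x00" * pad_len(len(value))


def pack_message(msg_type: int, attributes: bytes = b"") -> bytes:
    """20-byte header (type, length, cookie, random id) followed by attributes."""
    header = struct.pack("!HHI", msg_type, len(attributes), MAGIC_COOKIE)
    return header + os.urandom(12) + attributes


software = pack_attribute(ATTR_SOFTWARE, b"example")   # 7-byte value
request = pack_message(BINDING_REQUEST, software)
print(len(software), len(request))
```

Note that the length field inside the attribute keeps the unpadded value (7), while the message length in the header counts the padded attribute.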

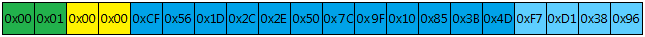

This is what collecting an endpoint for the UDP protocol looks like:

- Connect to the server.

- Send a packet containing a Binding request.

- Receive the packet containing the Binding response.

- Parse the packet and extract MAPPED-ADDRESS:

0x00 0x01 - Attribute type corresponding to MAPPED-ADDRESS

0x00 0x08 - Length of the attribute value

0x00 0x01 - Address family corresponding to IPv4

0x30 0x39 - Port, with value 12345

After that, each byte corresponds to one octet of the IPv4 address: 123.123.123.123

Collecting an endpoint for TCP is somewhat different, because we obtain it via the TURN protocol. Why so? The reason is to minimize the number of sockets connected to the TURN server, so that potentially more people can "hang" on the same traffic relay server.

To collect a candidate via the TURN protocol, you must:

- Connect to the server.

- Send a packet containing an Allocate request.

- If the TURN server requires authorization, we will receive an Allocate failure response with a 401 error. In this case, the Allocate request must be repeated with the username and a Message Integrity attribute generated from the message itself, the username, the password and the realm attribute taken from the server's response.

- Then, if registration succeeds, the server sends an Allocate success response with an attribute holding the port allocated on the TURN server, as well as XOR-MAPPED-ADDRESS, which is our public endpoint for the TCP protocol. To work with the IP further, each octet must be XORed (XOR is the exclusive OR operation) with the corresponding byte of the MagicCookie constant: 0x21 0x12 0xA4 0x42

- If this TURN connection is to be used further, the registration must be renewed periodically by sending a Refresh request. This is how "dead" connections get discarded.
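Both address attributes mentioned above, MAPPED-ADDRESS from the Binding response and XOR-MAPPED-ADDRESS from the Allocate response, can be decoded roughly as follows. This is a minimal sketch for IPv4 only, and the function names are made up for the example. One detail comes from RFC 5389 rather than from this article: the port is also XORed, with the first two bytes of the MagicCookie.

```python
import socket
import struct

MAGIC_COOKIE = bytes([0x21, 0x12, 0xA4, 0x42])


def parse_mapped_address(value: bytes) -> tuple[str, int]:
    """Decode the value of a MAPPED-ADDRESS attribute (IPv4 only)."""
    _reserved, family, port = struct.unpack("!BBH", value[:4])
    if family != 0x01:
        raise ValueError("only IPv4 is handled in this sketch")
    return socket.inet_ntoa(value[4:8]), port


def parse_xor_mapped_address(value: bytes) -> tuple[str, int]:
    """Decode XOR-MAPPED-ADDRESS: undo the XOR, then parse as a plain address."""
    port = bytes(a ^ b for a, b in zip(value[2:4], MAGIC_COOKIE[:2]))
    addr = bytes(a ^ b for a, b in zip(value[4:8], MAGIC_COOKIE))
    return parse_mapped_address(value[:2] + port + addr)


# The attribute value from the UDP walkthrough: family IPv4, port 12345.
plain = bytes([0x00, 0x01, 0x30, 0x39, 123, 123, 123, 123])
print(parse_mapped_address(plain))
```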

So, we have a server through which we exchanged collected endpoints with the remote side.

Of course, all of this seems simple and straightforward now. But looking back, when you open the RFCs and realize that without Wireshark's hints things will not get off the ground, you brace yourself for a deep dive ... In general, it reminds me of one old joke:

Study, kid, or you will be handing over the wrenches your whole life ...

How to establish a connection?

The simplest case is a UDP hole punch.

To do this, you need to artificially create routing rules on your NAT.

Simply send a series of packets to the remote endpoint and wait for a response from it. Several packets are needed to create the appropriate rule on the NAT and to get rid of the “race” over whose packet reaches the other side first. Besides, UDP packet loss has not gone anywhere.

Then we exchange control phrases, after which the connection can be considered established.
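The punching loop described above might look something like this. It is a sketch under assumptions rather than our production code: the `PUNCH` / `ACK` control phrases, the number of attempts and the interval are invented for the example, and both sides are expected to run the same function at roughly the same time after exchanging endpoints through the signaling server.

```python
import socket


def udp_hole_punch(sock: socket.socket, remote: tuple[str, int],
                   attempts: int = 10, interval: float = 0.2) -> bool:
    """Send probes to `remote` until a datagram from it arrives, then acknowledge."""
    sock.settimeout(interval)
    for _ in range(attempts):
        # Each outgoing probe creates or refreshes the mapping on our NAT.
        sock.sendto(b"PUNCH", remote)
        try:
            data, addr = sock.recvfrom(64)
        except (socket.timeout, ConnectionResetError):
            continue  # probe lost or the peer's NAT is not open yet, try again
        if addr == remote and data in (b"PUNCH", b"ACK"):
            # Control phrase: let the peer know its packets reach us.
            sock.sendto(b"ACK", remote)
            return True
    return False
```

Sending several probes instead of one covers both the race between the two sides and ordinary UDP loss.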

A TCP hole punch is a little more complicated, although the general idea remains exactly the same.

The difficulty is that by default only 1 socket can occupy a given local endpoint, and trying to connect to another address automatically drops the first connection. However, there are socket options that remove this limitation: SO_REUSEADDR and SO_EXCLUSIVEADDRUSE. After setting the first and clearing the second on a socket, other sockets can occupy the same local endpoint.

Then there remains a mere trifle: bind to the local endpoint opened by the socket when it connected to TURN, and try to connect to the endpoint of the remote side.
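Here is a rough sketch of preparing sockets that share one local endpoint. The helper name is made up, and the SO_REUSEPORT part is an addition for Unix-like systems that the article itself does not mention. SO_EXCLUSIVEADDRUSE exists only on Windows, so it is guarded with `hasattr`.

```python
import socket


def make_shared_tcp_socket(local: tuple[str, int]) -> socket.socket:
    """Create a TCP socket that may share its local endpoint with other sockets."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    if hasattr(socket, "SO_EXCLUSIVEADDRUSE"):
        # Windows only: make sure exclusive use of the address is switched off.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_EXCLUSIVEADDRUSE, 0)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    if hasattr(socket, "SO_REUSEPORT"):
        # Assumption: on Unix-like systems this is typically what permits
        # binding a second socket to an identical address and port.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
    sock.bind(local)
    return sock
```

In the hole punch itself, the first such socket would be the one connected to TURN, and the second would be bound to the same local endpoint (taken from `getsockname()` of the first) before calling `connect()` to the remote side.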

A bit more complicated still, but no less important for setting up a connection reliably, is traffic relaying.

- Since we already have a TURN registration, all we need to do is add the remote side's registration to the TURN permissions. To do this, send a CreatePermission packet indicating the remote registration.

- The initiator of the connection sends a ConnectRequest packet indicating the XORed endpoint of the remote registration and signs the packet with MessageIntegrity.

- If everything is fine and the remote side has sent CreatePermission with your registration, the initiator receives a connect success response and the other side receives a connection attempt. In both cases, the incoming packet carries the connection-id attribute.

- Next, within a short period of time, you need to open a new socket to the same TURN server IP and port as the original socket (in the classic TURN setup the server may listen on TCP ports 3478 and 443) and send from the new socket a ConnectionBind packet with the connection-id received earlier.

- Wait for the packet containing the connection bind success response, and voila - the connection is established. In this case, yes, 2 sockets are used: the control one, which is responsible for maintaining the connection, and the transport one, which can be used just like a direct connection - everything sent or received is processed as is.
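To illustrate the last two steps, here is a minimal sketch that pulls connection-id out of an incoming message and builds the ConnectionBind packet for the new socket. The method and attribute codes are taken from RFC 6062, while the function names are invented for the example. A real request to a server with authorization would also need the username, realm, nonce and MessageIntegrity attributes, which are left out here.

```python
import os
import struct
from typing import Optional

MAGIC_COOKIE = 0x2112A442
CONNECTION_BIND_REQUEST = 0x000B   # ConnectionBind request (RFC 6062)
ATTR_CONNECTION_ID = 0x002A        # CONNECTION-ID attribute (RFC 6062)


def build_connection_bind(connection_id: int) -> bytes:
    """20-byte header followed by a single CONNECTION-ID attribute."""
    attr = struct.pack("!HHI", ATTR_CONNECTION_ID, 4, connection_id)
    header = struct.pack("!HHI", CONNECTION_BIND_REQUEST, len(attr), MAGIC_COOKIE)
    return header + os.urandom(12) + attr


def read_connection_id(message: bytes) -> Optional[int]:
    """Walk the attributes after the header and return CONNECTION-ID if present."""
    offset = 20
    while offset + 4 <= len(message):
        attr_type, length = struct.unpack_from("!HH", message, offset)
        if attr_type == ATTR_CONNECTION_ID and length == 4:
            return struct.unpack_from("!I", message, offset + 4)[0]
        # Skip the value together with its padding to the 4-byte boundary.
        offset += 4 + length + (4 - length % 4) % 4
    return None
```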

In order of priority, we have arranged the following hierarchy: direct TCP > direct UDP > relay

Why did we put direct UDP in second place?

Well, UDP, for all its lightness and speed, has a significant drawback: no guarantee of delivery or ordering. And while for a video stream you could somehow come to terms with that (graphic artifacts appear), with file transfer things are rather more serious.

To guarantee delivery and ordering, we implemented a mechanism similar to reliable UDP, which, yes, consumes somewhat more resources, but gives the desired result.

How did we get out of the situation? First you need to find out the MTU (maximum transmission unit), that is, the largest UDP packet size that can be sent without fragmentation on intermediate nodes.

To do this, we take 512 bytes as the maximum packet size and set the IP_DONTFRAGMENT option on the socket. We send a packet and wait for its confirmation. If the answer arrives within a fixed time, we increase the maximum size and repeat the iteration. If at some point the confirmation does not arrive, we start refining the MTU size: we lower the maximum block size slightly and expect stable confirmation 10 times in a row. If a confirmation is missing, we reduce the MTU and start the cycle again.

The optimum MTU size is found.
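The search procedure can be sketched as a small function. Everything network-related is hidden behind a hypothetical `probe(size)` callback that sends one datagram of the given size with the don't-fragment option set and reports whether it was confirmed in time. The step sizes and the ceiling are assumptions for the example, not our real values.

```python
from typing import Callable


def find_mtu(probe: Callable[[int], bool], start: int = 512, grow: int = 128,
             shrink: int = 16, ceiling: int = 1500, confirmations: int = 10) -> int:
    """Return the largest packet size that `probe` confirms reliably."""
    size = start
    # Growth phase: keep enlarging the packet while it is confirmed.
    while size < ceiling and probe(size):
        size = min(size + grow, ceiling)
    # Refinement phase: `size` was not confirmed, so lower it slightly and
    # require several confirmations in a row before trusting the value.
    while size > 0:
        size -= shrink
        if size > 0 and all(probe(size) for _ in range(confirmations)):
            return size
    raise RuntimeError("no packet size was confirmed")
```

For example, with a path that passes at most 1400 bytes, `find_mtu(lambda s: s <= 1400)` grows up to 1408, fails there, and settles on 1392.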

Next, we segment: we cut the whole large block into many small ones, marking each with the starting segment number and the ending segment number of the packet it belongs to. After splitting, we add the segments to the send queue. A segment is resent until the remote party notifies us that it has received it. The retry interval is 1.2 * the maximum ping time measured while finding the MTU.

On the receiving side, we look at the received segment, add it to the incoming queue and try to assemble the nearest packet. If that works, we clear it from the queue and try to assemble the next one.
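Segmentation and reassembly can be illustrated roughly like this. It is a simplified sketch, not our actual wire format: the `Segment` fields, the class and function names are invented for the example, and acknowledgements and the resend loop itself are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    first: int       # number of the first segment of the packet
    last: int        # number of the last segment of the packet
    seq: int         # this segment's own number
    payload: bytes


def retry_interval(max_ping: float) -> float:
    """Resend interval: 1.2 * the maximum ping seen while finding the MTU."""
    return 1.2 * max_ping


def split(block: bytes, segment_size: int, next_seq: int) -> list[Segment]:
    """Cut one large block into numbered segments of at most `segment_size` bytes."""
    chunks = [block[i:i + segment_size]
              for i in range(0, len(block), segment_size)] or [b""]
    last = next_seq + len(chunks) - 1
    return [Segment(next_seq, last, next_seq + i, c) for i, c in enumerate(chunks)]


class Reassembler:
    """Collects segments in any order and releases complete packets in order."""

    def __init__(self, first_seq: int = 0) -> None:
        self.pending: dict[int, Segment] = {}
        self.expected = first_seq   # first segment of the nearest packet

    def receive(self, seg: Segment) -> list[bytes]:
        if seg.seq >= self.expected:
            # Duplicates caused by resends are simply ignored.
            self.pending.setdefault(seg.seq, seg)
        ready = []
        while self.expected in self.pending:
            head = self.pending[self.expected]
            numbers = range(head.first, head.last + 1)
            if not all(n in self.pending for n in numbers):
                break               # the nearest packet is still incomplete
            ready.append(b"".join(self.pending.pop(n).payload for n in numbers))
            self.expected = head.last + 1
        return ready
```

Because each segment carries the first and last numbers of its packet, the receiver can tell when a packet is complete without any separate length message.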

Here, of course, the most attentive of you who have "survived" to this paragraph may rightly ask: why not use the x264 or x265 codec? And you will be partially right. Honestly, we are inclined to adopt it too, and then this homegrown wheel on top of UDP could be dropped. But what about, say, transferring binary files? In that case we are back to needing guaranteed delivery and ordering of packets.

In conclusion, I want to note that with this organization of connections we have no more than 2-3% of failed connections per day, most of which are caused by incorrect proxy or firewall settings; once those are corrected, the connection is established without problems.

If this topic turns out to be interesting to you (and it seems to us that it will), then in the following articles we will talk about launching the application with the highest privileges in the system, the problems that arise because of this and how we deal with them, as well as about compression algorithms, virtual desktops and much more.

Author: Vladislav Yakovlev asmsa