The birth of a supernova: how new features are born, using 3D visitor counting as an example

In a previous article, we talked about upgrading one of the most popular features of Macroscop video analytics: visitor counting.

We wanted to make it better, more accurate and more convenient for users. That left one small question: how? In our case, the process went like this:

1. Read scientific articles and publications;

2. Discuss, analyze and choose ideas;

3. Prototype and test;

4. Pick one option and turn it into a product.

After completing the first two steps, we decided to build a new visitor counter based on depth information. Depth is the vertical distance from the camera to the objects in its field of view. It tells us the height of whoever crosses the entry/exit line and therefore lets us distinguish people from other objects.
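To make the depth-to-height idea concrete, here is a minimal sketch assuming a sensor mounted at a known height and looking straight down. The function names, the 3 m mounting height and the 1.0 to 2.2 m "person" range are hypothetical illustration values, not Macroscop's actual parameters or logic.

```python
# Hypothetical sketch: convert the depth of an object's top point, as seen by
# a ceiling-mounted sensor, into a height above the floor, then apply a crude
# height rule to separate people from other objects.

def object_height(mount_height_m: float, depth_m: float) -> float:
    """Height of the object's top above the floor = sensor height - depth."""
    return mount_height_m - depth_m


def is_person(height_m: float, min_h: float = 1.0, max_h: float = 2.2) -> bool:
    """Illustrative rule only: the thresholds are assumptions, not tuned values."""
    return min_h <= height_m <= max_h


if __name__ == "__main__":
    mount = 3.0  # assumed sensor height above the floor, metres
    # Closest depth reading over each detected blob (made-up values)
    blobs = {"adult": 1.25, "child": 1.8, "shopping cart": 2.1}
    for name, depth in blobs.items():
        h = object_height(mount, depth)
        print(f"{name}: {h:.2f} m -> person={is_person(h)}")
```

A single height threshold is, of course, only a starting point: it shows why depth is useful, not how a production counter should classify objects.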

Depth can be obtained in several ways, and we had to choose which one to use for the new counter. We identified four priority approaches for further study.

1. Use a stereo attachment for the camera.

One way to obtain depth data is to fit a stereo attachment onto the camera lens that splits the image into two views. Then you write an algorithm that processes the result, matches corresponding reference points in the two views, and produces a stereo pair from which distances can be computed and a depth map built.
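The matching step and the depth calculation can be sketched on a single image row. This is a textbook illustration, not the algorithm we wrote: it assumes rectified views, uses a sum-of-absolute-differences (SAD) window search to find the disparity, and applies the pinhole stereo relation Z = f·B/d. The focal length, baseline and synthetic rows are hypothetical values.

```python
import numpy as np


def match_disparity(left_row, right_row, x, half_win=2, max_disp=16):
    """Return the shift (pixels) that best aligns a small window around
    column x of the left row with the right row, using SAD as the cost."""
    patch = left_row[x - half_win : x + half_win + 1].astype(float)
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        xr = x - d  # a scene point appears further left in the right view
        if xr - half_win < 0:
            break  # the window would fall off the image
        cand = right_row[xr - half_win : xr + half_win + 1].astype(float)
        cost = np.abs(patch - cand).sum()
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo model: Z = f * B / d (f in pixels, B in metres)."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity corresponds to a point at infinity
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    left = rng.integers(0, 256, size=64)
    right = np.roll(left, -5)  # synthetic right view: everything shifted 5 px
    d = match_disparity(left, right, x=20)
    print(d, depth_from_disparity(d, focal_px=500, baseline_m=0.06))
```

With an attachment, both views share one sensor and the baseline B is small, so each pixel of disparity covers a large range of depths; that is one reason accuracy is harder to achieve than with two separate cameras.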

Looking ahead, options that involved building hardware (whether an attachment or a whole device) appealed to us less. We are developers: our expertise is in algorithms and software, and we did not really want to take on work outside our specialty.

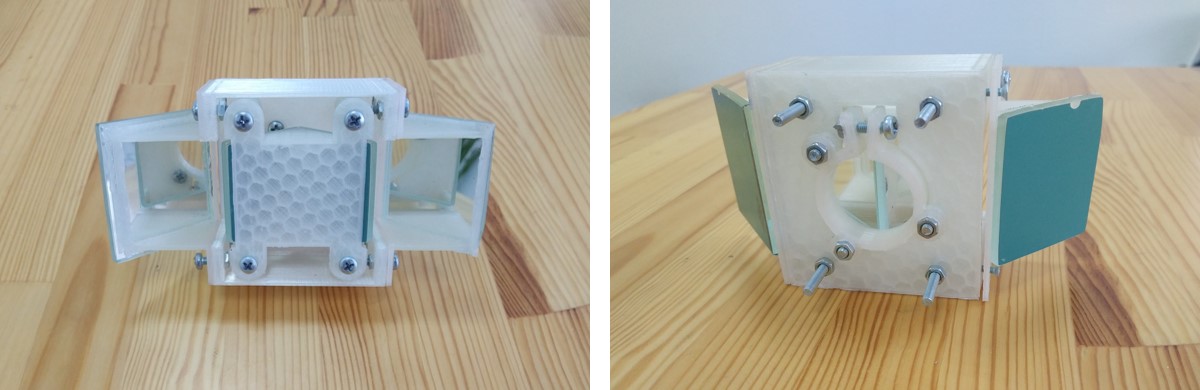

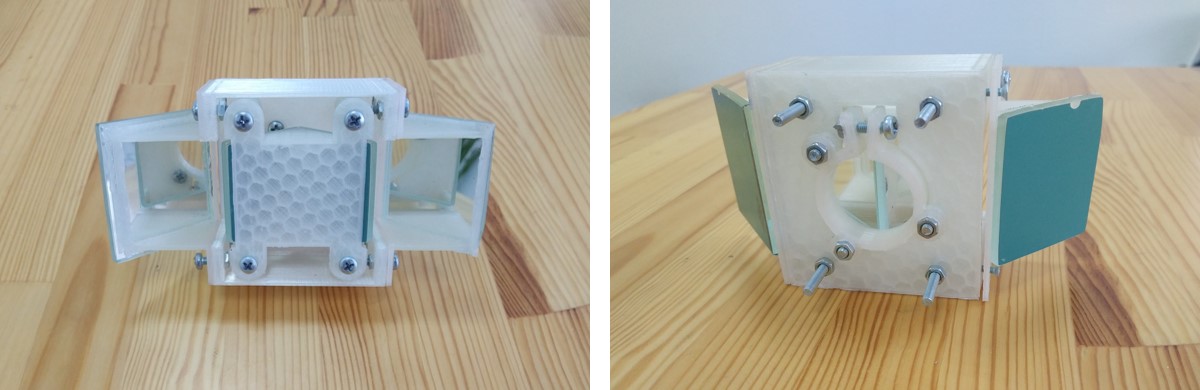

First, we looked for ready-made attachments to test our reasoning in practice, but we could not find one that suited us in every respect. Then we 3D-printed our own prototype attachment, but it was not successful either.

The process also showed that a stereo attachment makes the solution less versatile, since it cannot be mounted on every camera. We would have had either to produce a line of different attachments or to restrict users' choice of cameras.

Another limitation was that a stereo attachment halves the camera's field of view. Some entrances and exits are so wide that several ordinary cameras already have to be installed to cover them. Halving each camera's field of view would complicate installers' work and raise the cost of the system. In addition, some users want not only to count visitors but also to get an overview picture from the same camera.
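A quick back-of-the-envelope check shows how halving the field of view affects the number of cameras. The sketch assumes a rectilinear lens looking straight down, cameras placed side by side with no overlap, and that the attachment splits the sensor in half at the same focal length, so each view covers half the floor width. The 3 m height, 90° angle and 8 m entrance are hypothetical.

```python
import math


def floor_coverage_m(mount_height_m: float, hfov_deg: float) -> float:
    """Floor width seen by a downward-looking camera: W = 2 * h * tan(FOV / 2)."""
    return 2 * mount_height_m * math.tan(math.radians(hfov_deg) / 2)


def cameras_needed(entrance_width_m: float, coverage_m: float) -> int:
    """Cameras side by side, ignoring overlap. The small epsilon stops
    floating-point noise (5.999999999999999 instead of 6) adding a camera."""
    return math.ceil(entrance_width_m / coverage_m - 1e-9)


if __name__ == "__main__":
    h, fov, entrance = 3.0, 90.0, 8.0  # illustrative values, not from the article
    full = floor_coverage_m(h, fov)
    half = full / 2  # each half of a split sensor sees half the width
    print(f"plain camera: {full:.1f} m wide, {cameras_needed(entrance, full)} camera(s)")
    print(f"with attachment: {half:.1f} m wide, {cameras_needed(entrance, half)} camera(s)")
```

In this example the same 8 m entrance goes from two cameras to three; real installations also need overlap between neighbouring views, which makes the difference larger.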

2. Synchronize images from two cameras.

In fact, we rejected this option almost immediately. First, it would significantly increase the cost of the solution for the user. Second, synchronizing frames from two separate cameras seemed impractical to us. It would have required assembling a device with two identical cameras mounted in fixed positions at a fixed distance from each other. Most importantly, we needed to receive frames captured at the same moment from both cameras. That was harder: a camera can introduce internal delays, and if frames are matched in software, how do you tell at which millisecond a given frame arrived relative to the frame from the other camera?
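The difficulty is easy to demonstrate with the most obvious software approach: pair each frame from camera A with the camera B frame whose arrival timestamp is nearest. This is a hypothetical sketch, not something we shipped; it assumes both streams are stamped on arrival at the server, in milliseconds.

```python
from bisect import bisect_left


def pair_frames(ts_a, ts_b, tolerance_ms):
    """For every frame of camera A, pick the camera B frame with the nearest
    timestamp, keeping the pair only if the gap is within tolerance.
    Both timestamp lists are in milliseconds and sorted ascending."""
    pairs = []
    for i, t in enumerate(ts_a):
        j = bisect_left(ts_b, t)  # index of the first B timestamp >= t
        best = None
        for k in (j - 1, j):  # the nearest neighbour is on one side or the other
            if 0 <= k < len(ts_b):
                if best is None or abs(ts_b[k] - t) < abs(ts_b[best] - t):
                    best = k
        if best is not None and abs(ts_b[best] - t) <= tolerance_ms:
            pairs.append((i, best))
    return pairs


if __name__ == "__main__":
    a = [0, 40, 80, 120]  # arrival times of A's frames at 25 fps
    # B arrives with 1 ms jitter: frames pair correctly
    print(pair_frames(a, [1, 41, 79, 121], tolerance_ms=5))
    # -> [(0, 0), (1, 1), (2, 2), (3, 3)]
    # B arrives with an unknown 25 ms internal delay: the pairing silently slips
    print(pair_frames(a, [25, 65, 105, 145], tolerance_ms=30))
    # -> [(0, 0), (1, 0), (2, 1), (3, 2)]
```

In the second case the true pairing is (i, i), but nothing in the arrival timestamps reveals that. Fixing it requires knowing each camera's internal delay or synchronizing capture in hardware, which is exactly the complexity we wanted to avoid.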

We decided to explore other options.

3. Build on Microsoft's research.

While studying image depth, we came across a Microsoft study describing a method that uses a camera's IR illumination to determine distance. The option looked very attractive: take any camera with IR illumination, light up the scene, measure the brightness and get the depth data we need. The more brightly lit an area is, the closer it is to the camera. But it turned out that this method works well only for narrow tasks such as gesture recognition, because different materials reflect light to different degrees.

As a result, objects that are actually at the same distance can appear to be at different distances, because their materials absorb and reflect light differently. We checked this experimentally in the office by building a depth map of objects made of different materials laid out at the same level:

In short, this algorithm works well only with a single material, whereas we are dealing with a completely heterogeneous environment.
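The effect of material can be reproduced with a simplified brightness model. This is an illustrative sketch, not the method from the Microsoft study: it assumes reflected IR brightness is proportional to surface reflectivity and falls off with the square of distance, and that depth is recovered by assuming one common reflectivity for every surface. The reflectivity values are guesses.

```python
import math


def measured_intensity(distance_m, reflectivity, emitter_power=1.0):
    """Simplified model: brightness ~ reflectivity / distance**2."""
    return emitter_power * reflectivity / distance_m ** 2


def naive_depth(intensity, assumed_reflectivity=0.5, emitter_power=1.0):
    """Invert the model as if every surface had the same reflectivity."""
    return math.sqrt(emitter_power * assumed_reflectivity / intensity)


if __name__ == "__main__":
    # Three objects at the same true distance, made of different materials.
    # The reflectivity values are illustrative, not measurements.
    for name, rho in [("white paper", 0.8), ("grey fabric", 0.5), ("black plastic", 0.1)]:
        estimate = naive_depth(measured_intensity(2.0, rho))
        print(f"{name}: true 2.00 m, estimated {estimate:.2f} m")
```

Under this model the estimate equals the true distance multiplied by the square root of (assumed reflectivity / actual reflectivity), so all three objects at 2 m come out at roughly 1.58 m, 2.00 m and 4.47 m. The error comes from the material alone, which is why the approach suits homogeneous scenes such as a hand in front of the camera, but not a shop entrance.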

4. Use structured light.

Structured light also relies on infrared. In the previous option, rays are emitted and the brightness of the surfaces they hit is measured; here, a pattern (for example, circles) is projected onto the surfaces instead. The reflected pattern is captured, and from the size and distortion of its elements you can tell how far each object is from the emitter. In option 3 the map is built from the intensity of the reflected rays (which depends directly on the reflectivity of the surfaces); in this option it is built from the structure of the reflected pattern, and brightness is not taken into account.
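One common way to turn a projected pattern into depth is reference-plane triangulation: the device stores where each pattern element appears on a plane at a known distance Z0, and the sideways shift d of that element in the live image gives 1/Z = 1/Z0 + d/(f·b), where f is the camera focal length in pixels and b the projector-to-camera baseline. The sketch below uses this shift-based relation rather than the element size and distortion mentioned above; the parameter values and the sign convention (positive shift means closer than the reference plane) are assumptions, and the article does not say which method the devices we used implement.

```python
def depth_from_shift(shift_px, ref_depth_m, focal_px, baseline_m):
    """Reference-plane triangulation: 1/Z = 1/Z0 + d / (f * b).
    Assumed sign convention: shift_px > 0 means the object is closer
    than the reference plane."""
    return 1.0 / (1.0 / ref_depth_m + shift_px / (focal_px * baseline_m))


def shift_for_depth(depth_m, ref_depth_m, focal_px, baseline_m):
    """Inverse relation: d = f * b * (1/Z - 1/Z0)."""
    return focal_px * baseline_m * (1.0 / depth_m - 1.0 / ref_depth_m)


if __name__ == "__main__":
    # Assumed focal length (px), baseline (m) and reference-plane distance (m)
    f, b, z0 = 580.0, 0.075, 3.0
    for z in (3.0, 2.0, 1.5):
        d = shift_for_depth(z, z0, f, b)
        print(f"object at {z} m: pattern shifted {d:.1f} px -> {depth_from_shift(d, z0, f, b):.2f} m")
```

Note that only the position of each pattern element enters the formula; its brightness does not, which is exactly why this option is insensitive to surface material.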

This option seemed the most promising to us. Moreover, we were able to find a suitable off-the-shelf device with structured light. That meant we did not have to work outside our specialty (designing the device itself) and could get on with our own job: writing the processing algorithm.

We built the first prototype on Kinect (Microsoft's motion-sensing game controller for gesture recognition). Our expectations were confirmed: the approach proved workable, and the device produced a depth map with acceptable range and accuracy. However, it later turned out that Kinect did not suit our needs in every respect. First, it is a USB device, which does not fit into our users' infrastructure (IP video systems). We would have had to build something on top of it or ship a USB-to-network adapter in the kit. The second significant limitation was that Kinect has no onboard processing power. Since a raw depth map is quite large, transmitting it over the network without processing and compression on the device itself was problematic.
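A rough estimate shows how large a raw depth stream is. The sketch assumes a first-generation Kinect style stream of 640×480 depth frames at 30 fps, with values stored either in 16-bit containers or tightly packed at 11 bits; these stream parameters are assumptions for illustration, not figures from our tests.

```python
def raw_bitrate_mbps(width, height, bits_per_pixel, fps):
    """Uncompressed stream bitrate in megabits per second."""
    return width * height * bits_per_pixel * fps / 1e6


if __name__ == "__main__":
    # Assumed stream: 640x480 depth frames at 30 fps
    print(raw_bitrate_mbps(640, 480, 16, 30))  # 16-bit containers -> 147.456
    print(raw_bitrate_mbps(640, 480, 11, 30))  # packed 11-bit values -> 101.376
```

Even tightly packed, a single uncompressed stream would exceed a 100 Mbit/s Fast Ethernet link, before counting any other cameras on the same network.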

The final implementation of 3D counting uses a different structured-light device. It has its own computing power, which the current implementation uses to compress the depth map and so reduce the load on the network. We have already described in detail how the software processing and counting work in the article “Deep calculation. How 3D technologies help count people and make life easier?”.
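The article does not say which compression the device uses. As a generic stand-in, here is a lossless sketch that exploits the fact that neighbouring depth pixels usually have similar values: horizontal delta encoding followed by DEFLATE via Python's `zlib`. The function names and the 8-byte shape header are hypothetical; real devices may use entirely different (including lossy) schemes.

```python
import zlib

import numpy as np


def compress_depth(depth: np.ndarray) -> bytes:
    """Lossless sketch: row-wise delta encoding, then DEFLATE.
    depth is a 2-D array of 16-bit depth values (e.g. millimetres)."""
    d = np.ascontiguousarray(depth, dtype="<u2")
    deltas = d.copy()
    # Neighbouring pixels usually differ little, so deltas are mostly small.
    # uint16 subtraction wraps modulo 2**16, which the decoder undoes exactly.
    deltas[:, 1:] = d[:, 1:] - d[:, :-1]
    header = np.array(d.shape, dtype="<u4").tobytes()  # height, width
    return header + zlib.compress(deltas.tobytes(), 6)


def decompress_depth(blob: bytes) -> np.ndarray:
    h, w = np.frombuffer(blob[:8], dtype="<u4")
    deltas = np.frombuffer(zlib.decompress(blob[8:]), dtype="<u2").reshape(int(h), int(w))
    # A running sum along each row restores the original values (mod 2**16).
    return np.cumsum(deltas, axis=1, dtype=np.uint16)


if __name__ == "__main__":
    yy, xx = np.mgrid[0:240, 0:320]
    depth = np.full((240, 320), 3000, dtype=np.uint16)  # floor 3 m below the sensor
    depth[(yy - 120) ** 2 + (xx - 160) ** 2 < 40 ** 2] = 1300  # a head-sized blob
    blob = compress_depth(depth)
    assert np.array_equal(decompress_depth(blob), depth)
    print(depth.nbytes, "->", len(blob), "bytes")
```

This synthetic map compresses far better than a real one would, because real depth maps contain sensor noise; the point is only to show why doing this on the device, rather than shipping raw frames, unloads the network.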

PS:

Developing a new solution is not always nonstop coding. Searching, reading, trying things out, 3D printing, bringing something from home to test a theory: that is what the real development process looks like. And often, to create something new and groundbreaking, you need to step away from your usual ways of working.
