Veritas Access 7.3: Pros, Cons, Pitfalls

Software Defined Storage Approach

In this article, we review and test Veritas Access 7.3, the new version of the Veritas Software-Defined Storage (SDS) product: a multi-purpose, scalable storage system that runs on ordinary x86 servers and supports file, block and object access. Our main goal is to get acquainted with the product, its functionality and its capabilities.

Veritas is synonymous with reliability and experience in information management. The company has a long tradition of leadership in the backup market and produces solutions for analyzing information and ensuring its high availability. We see the future in Veritas Access Software-Defined Storage and are confident that, in time, the product will gain popularity and take one of the key positions in the SDS market.

Access is built on InfoScale (Veritas Storage Foundation), a product with a long history that, before virtualization became widespread, was at the peak of its popularity in Highly Available (HA) solutions. From Veritas Access, its younger sibling, we expect that HA success story to continue in the form of Software-Defined Storage.

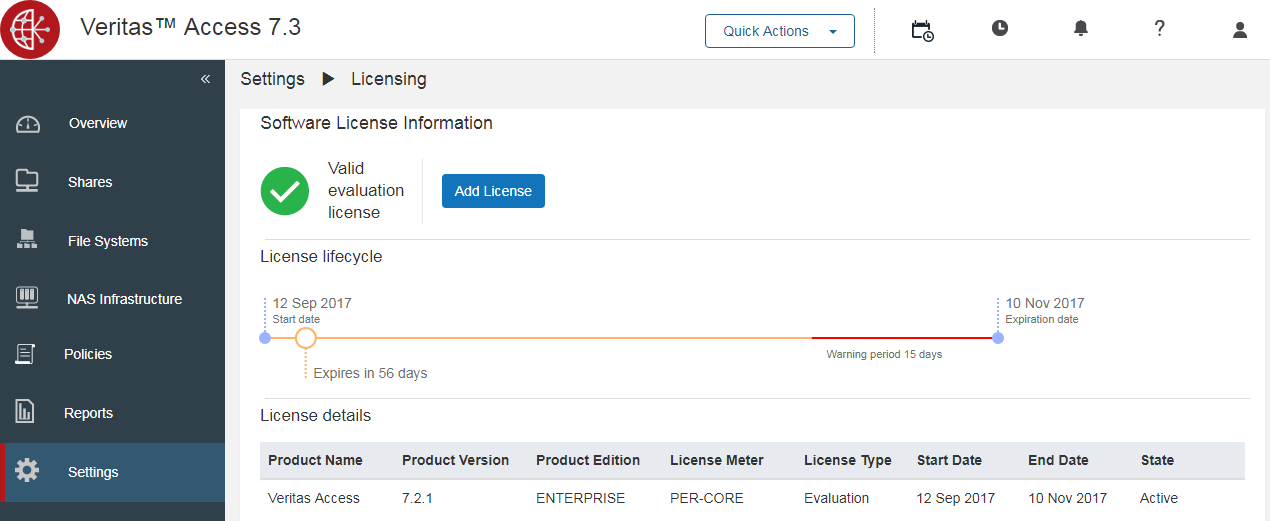

One of the advantages of Veritas Access is its price. The product is licensed by the number of active cores (approximately $1,000 per core in the GPL, the global price list), and the cost is completely independent of the number of disks and the total capacity of the storage system. For nodes, it therefore makes sense to use single-processor servers with few, fast cores. Roughly speaking, an Access license for a 4-node cluster will cost $20,000–$30,000, which looks very attractive next to other SDS solutions, where GPL prices start at $1,500 per terabyte of raw capacity.
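To make the pricing comparison concrete, here is a minimal sketch of the arithmetic. The two prices come from the paragraph above; the cluster shape (4 nodes with 6 cores each) and the function names are our own assumptions for illustration, not Veritas figures.

```python
# List prices quoted in the text (approximate, GPL).
PRICE_PER_CORE_USD = 1_000     # Veritas Access, per active core
PRICE_PER_RAW_TB_USD = 1_500   # capacity-licensed SDS, per raw terabyte


def per_core_license_cost(nodes: int, cores_per_node: int) -> int:
    """License cost when pricing depends only on the number of active cores."""
    return nodes * cores_per_node * PRICE_PER_CORE_USD


def per_tb_license_cost(raw_tb: int) -> int:
    """License cost when pricing depends only on raw capacity."""
    return raw_tb * PRICE_PER_RAW_TB_USD


def break_even_raw_tb(nodes: int, cores_per_node: int) -> float:
    """Raw capacity above which per-core licensing becomes the cheaper model."""
    return per_core_license_cost(nodes, cores_per_node) / PRICE_PER_RAW_TB_USD


# Hypothetical cluster: 4 single-socket nodes with 6 cores each.
print(per_core_license_cost(4, 6))   # 24000
print(per_tb_license_cost(100))      # 150000
print(break_even_raw_tb(4, 6))       # 16.0
```

Under these assumptions, the per-core model wins as soon as the cluster holds more than 16 TB of raw capacity.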

The main advantage that distinguishes Veritas Access from Ceph and similar SDS solutions is that it comes "boxed": Veritas Access is a full-fledged enterprise-level product. Implementing and supporting Access does not involve the kind of difficulties that only specialists with deep knowledge of *nix SDS systems can handle. In general, implementing any SDS solution is not particularly hard; the main difficulties arise during operation: replacing failed nodes, migrating data, upgrading and so on. With Access, these problems are well understood, since the solutions are already described for Storage Foundation. With Ceph, you need qualified staff who can provide specialized support. Some are comfortable working with a ready-made solution such as Access; others prefer the Ceph construction kit, which is more flexible but demands more knowledge. We will not compare Access with other SDS solutions here, but will try to give a short overview of the new product. Perhaps it will help you decide which SDS solution you would be more comfortable working with.

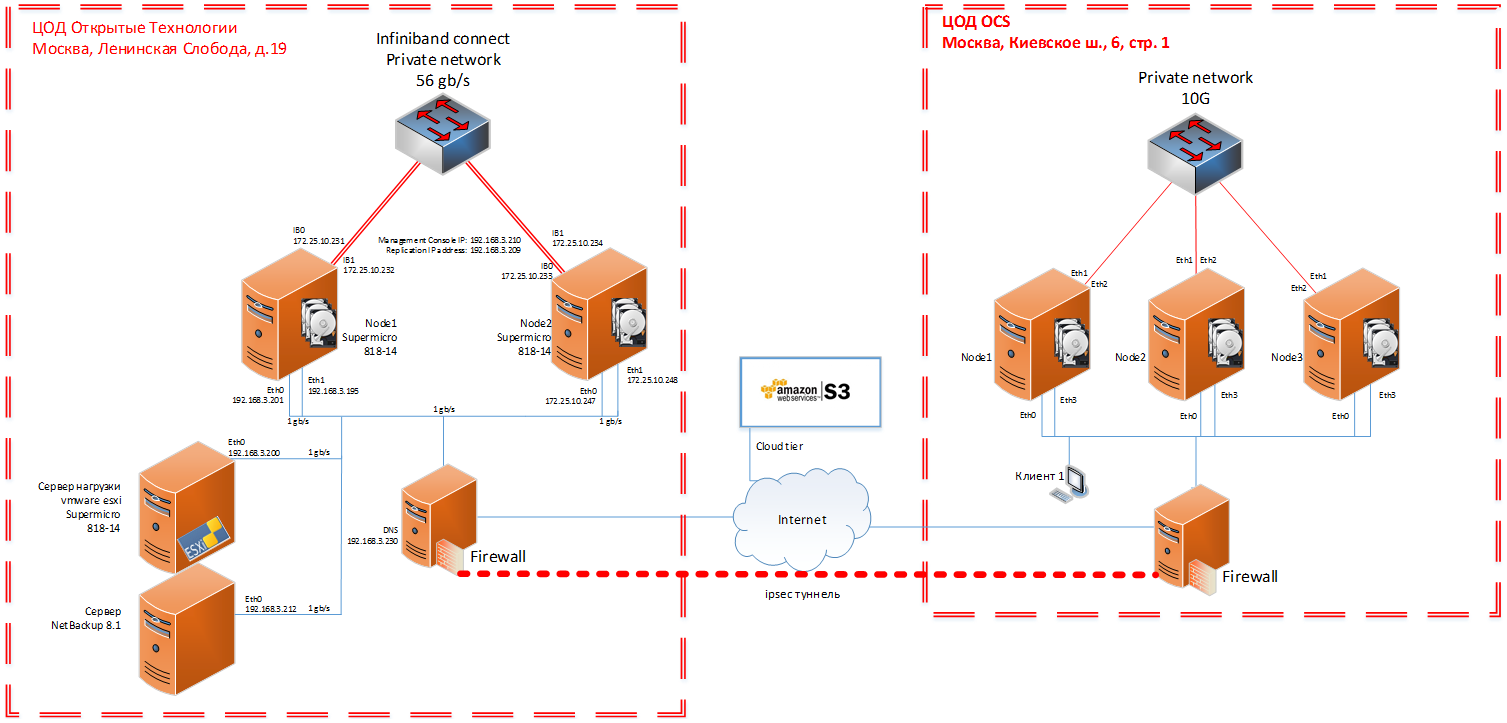

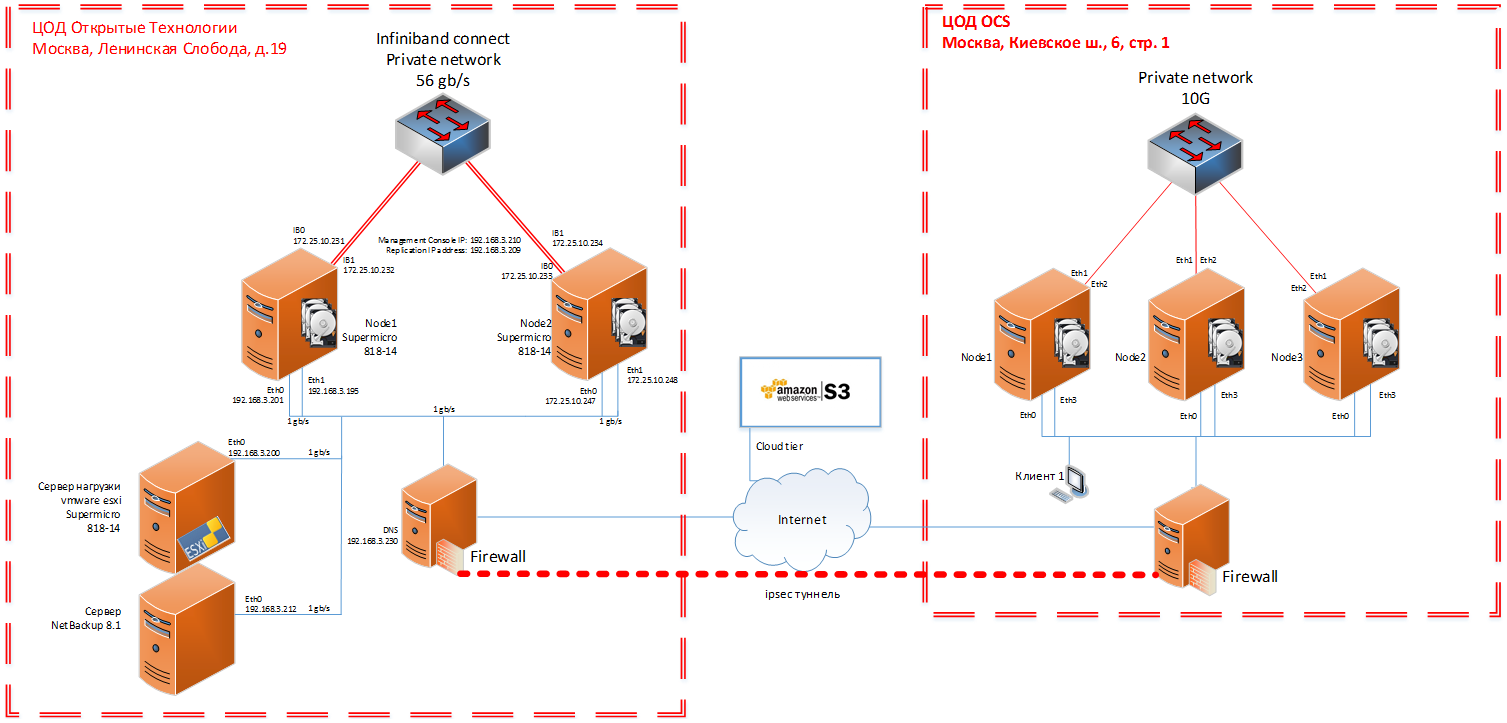

To keep the testing transparent and as close to production as possible, we assembled a five-node test bed on physical servers together with colleagues from OCS, one of the Veritas distributors: a two-node cluster in the Open Technologies data center and a three-node cluster in the OCS data center. Photos and diagrams are shown below.

File vs Block vs Object Storage

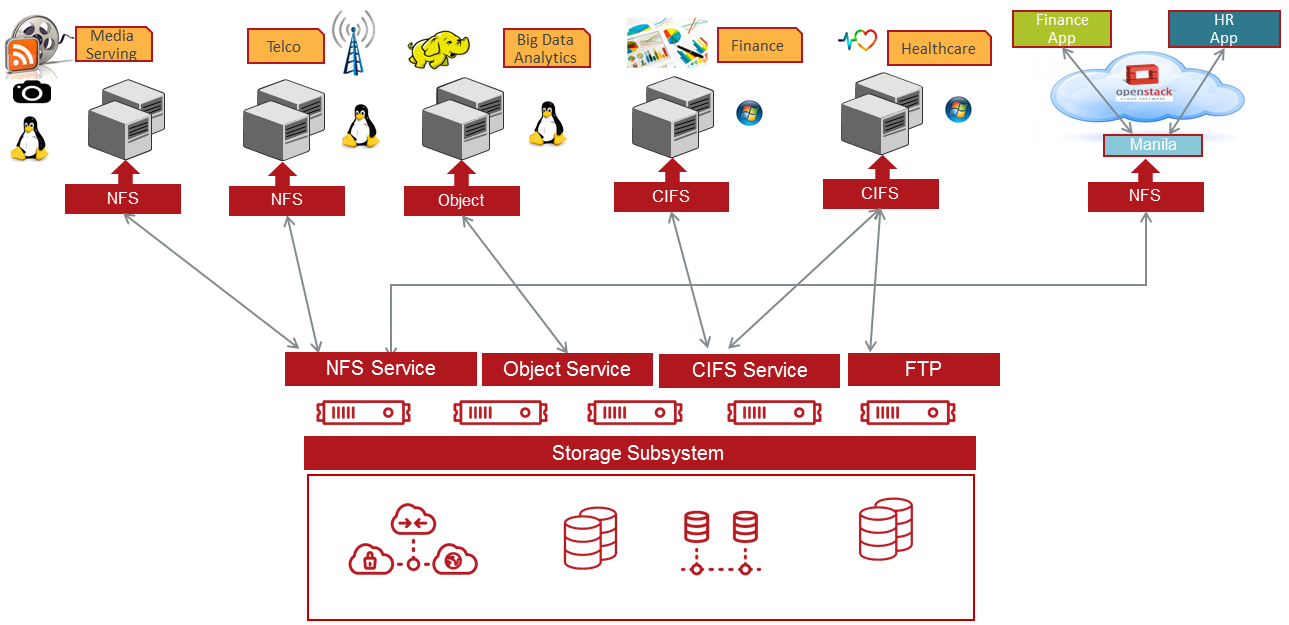

Veritas Access can serve data over all of these protocols, providing file, block and object access. The only difference is that file access is very easy to configure through the web interface, while the other two are currently poorly documented and complicated to set up.

NFS and S3 work on the nodes in active-active mode. In the current release 7.3, iSCSI and CIFS work in active-passive mode. Services connected via NFS or S3 will continue to work if any node fails, whereas services connected via CIFS or iSCSI may not survive the loss of the node on which CIFS (Samba) is active. It takes some time for the cluster to realize that the node is down and to start the CIFS service (Samba) on another node; the same applies to iSCSI. Active-active for iSCSI and CIFS is promised in future releases.

iSCSI in 7.3 is a tech preview. Veritas Access 7.3.1, whose release is promised for December 17, 2017, is expected to bring a full-fledged active-active implementation of iSCSI.

More about modes:

- Active-active: two (or more) cluster nodes serve the application, so the application "stands on the storage system with two legs". The failure of one cluster node serving the application does not lead to loss of access or loss of data.

- Active-passive: the application works with only one cluster node, so "the application stands on the storage system with one leg". Losing that node is critical: data being written may be lost (a write error) and access is briefly interrupted. For a file dump or a video archive recorded from cameras, this is not critical. But if you back up a database over CIFS and the node carrying the load stops working in the middle of the backup, an "oops" happens and the backup has to start again. Conversely, if the node that fails is not the one carrying the load, or is the node to which the data mirror is written, nothing bad happens; performance merely drops slightly.
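The two modes can be summarized in a small model. This is only an illustrative sketch: the protocol-to-mode table follows the description of release 7.3 above, while the function and the node names are our own invention and not part of any Veritas API.

```python
# Protocol modes in release 7.3, as described in the text.
PROTOCOL_MODE = {
    "NFS": "active-active",
    "S3": "active-active",
    "CIFS": "active-passive",
    "iSCSI": "active-passive",
}


def client_impact(protocol: str, failed_node: str, serving_node: str) -> str:
    """Describe what a client sees when `failed_node` goes down.

    `serving_node` is the node currently running the service for an
    active-passive protocol; it is ignored for active-active protocols.
    """
    if PROTOCOL_MODE[protocol] == "active-active":
        return "continues on surviving nodes"
    if failed_node == serving_node:
        return "interrupted until the service restarts on another node"
    return "unaffected (a non-serving node failed)"


for proto in PROTOCOL_MODE:
    # Hypothetical scenario: node1 serves the passive protocols and fails.
    print(proto, "->", client_impact(proto, "node1", "node1"))
```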

Why SDS?

A bit of theory.

Traditional storage systems do an excellent job on current tasks, providing the necessary performance and data availability. But the cost of storage and the management of different generations of storage systems are definitely not the strongest points of traditional solutions. The scale-up architecture, where performance is increased by replacing individual system components, cannot keep pace with the growth of unstructured data in the modern IT world.

Alongside the vertical scale-up architecture, scale-out solutions have appeared, where scaling is done by adding new nodes and distributing the load among them. This approach solves the problem of unstructured data growth quickly and easily.

Cons of traditional storage systems:

• High cost

• Short service life of storage systems

• Complex management of storage systems of various generations and manufacturers

• Scale-up scaling architecture

Advantages of Software-Defined Storage with horizontal Scale-out scaling:

• No dependence on the hardware of the system, any x86 server can be used for nodes

• Flexible and reliable solution with simple scaling and redundancy support

• Predictable level of service for applications based on policies

• Low cost

• Performance

Cons of software-defined storage systems:

• Lack of a single point of technical support

• More qualified engineers required

• The need for N times the resources (redundant capacity)
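The last point is simple arithmetic. As a minimal sketch, assuming protection by full mirror copies (the function names and example numbers are ours):

```python
def raw_capacity_needed(usable_tb: float, copies: int) -> float:
    """Raw capacity required to store `usable_tb` of data in `copies` full copies."""
    if copies < 1:
        raise ValueError("at least one copy of the data is required")
    return usable_tb * copies


def usable_capacity(raw_tb: float, copies: int) -> float:
    """Usable capacity left when every block is stored `copies` times."""
    if copies < 1:
        raise ValueError("at least one copy of the data is required")
    return raw_tb / copies


print(raw_capacity_needed(50, 2))  # 100
print(usable_capacity(120, 3))     # 40.0
```

So a two-way mirror doubles the raw capacity that has to be purchased, and a three-way mirror triples it.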

When trying to expand a traditional storage system after 3-5 years of use, users face sharply rising costs that push them to buy a new storage system. As disparate devices from various manufacturers accumulate, control over the quality and reliability of the storage subsystem as a whole is lost, which, as a rule, greatly complicates solving business problems.

Software-defined storage lets you approach the problem of housing growing data from another angle: it preserves the performance and availability of information without having to maintain a zoo of storage systems from various manufacturers and generations, which makes working with data easier in every respect.

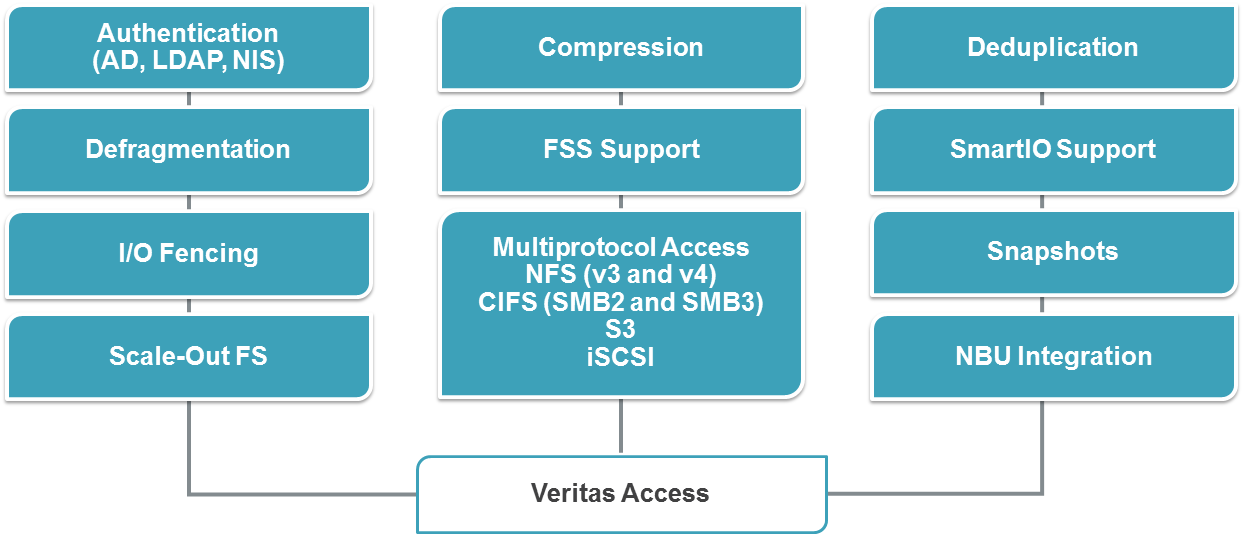

Key Business Benefits

- An affordable and reliable storage system that runs on your existing x86 hardware, both old and new.

- High performance that meets the requirements of unstructured data: an ideal solution for storing large numbers of small files with low latency, as well as for serving streaming workloads with high write speeds.

- High availability, thanks to a multi-node scalable file system that can fail over to another resource without interrupting access, whether the nodes are separated by local, metro or global distances.

- A single storage solution for new and legacy applications, with multi-protocol access (CIFS, NFS, iSCSI and S3).

- Easy-to-use web, SSH and RESTful API management.

- Flexible, scalable architecture. It is easy to add new nodes to increase performance, fault tolerance and capacity.

Key Specifications

- A highly available scalable file system that provides fault tolerance both locally and globally, through replication.

- Policy-based storage management that lets you flexibly adjust the level of availability, performance, data protection and volume security.

- Multiprotocol access to files and objects: NFS, SMB3/CIFS, iSCSI, FTP and S3, including reading and writing to the same volume over different protocols.

- Hybrid cloud:

  - OpenStack: support for Cinder and Manila

  - Amazon (AWS): support for the S3 interface and protocol.

- Centralized user interface: a single window for managing all nodes, with a simple and intuitive interface.

- Support for heterogeneous storage: DAS, SSD, SAN, as well as the cloud.

- Storage Tiering: support for tiering between different storage types: cloud, SAN, DAS and SSD.

- Read Caching: the ability to use SSDs as cache.

- Storage Optimization: deduplication and compression support.

- Snapshots.

- Direct Backup Ready: built-in NetBackup client and support for Veritas Enterprise Vault.

- Flexible authentication with support for LDAP, NIS and AD.

- Flexible management with support for web, SSH and RESTful API.

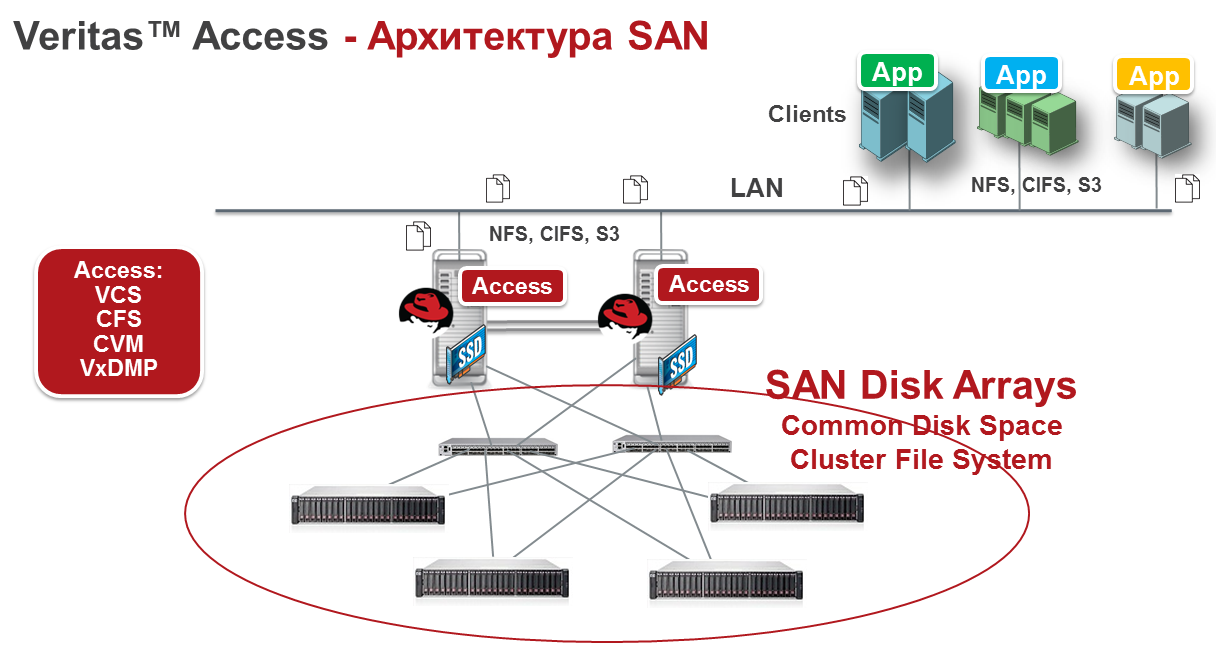

ARCHITECTURE

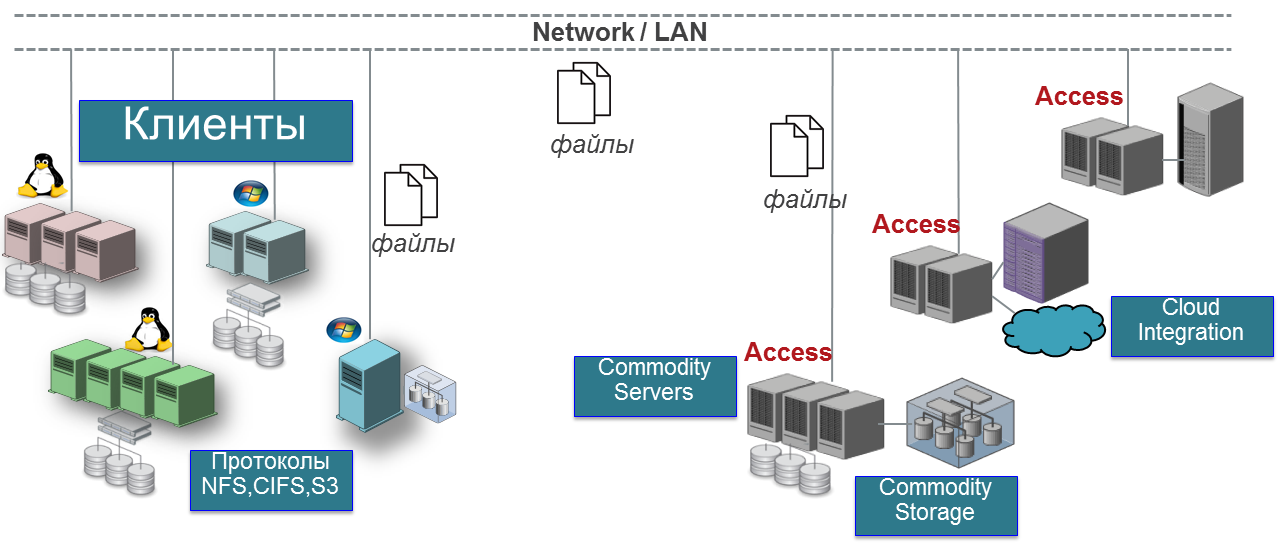

Veritas Access scales flexibly and easily by adding nodes. The solution is suited primarily to unstructured data, but also to other storage tasks. The demand for software-defined storage is driving a transition to multi-purpose, multi-protocol products that combine reliability, high performance and affordable cost.

A Veritas Access cluster consists of interconnected server nodes. Together they form a single cluster that shares all the main resources. The minimum reference architecture is a two-node converged storage solution.

The Foundation of Veritas Access: Veritas Storage Foundation

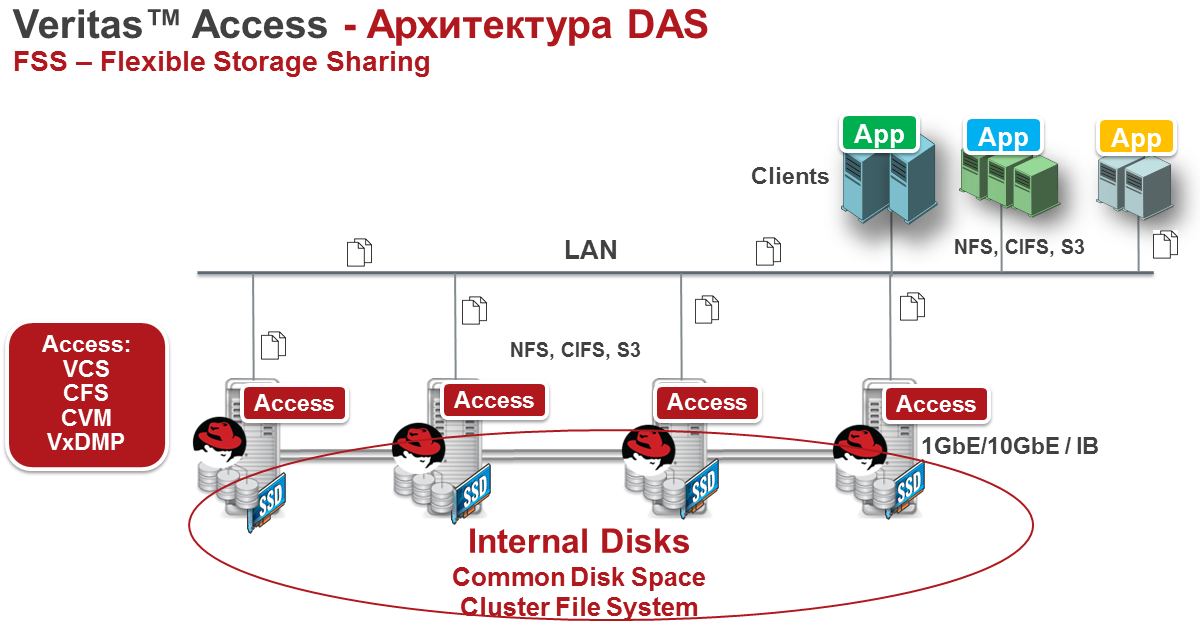

The experience accumulated over the life cycle of Veritas Storage Foundation has found a logical application in a modern SDS-class product, Veritas Access. The following components, in particular, live on in Access:

- Veritas Cluster Server (VCS) is a clustering system for application services.

- Veritas Cluster File System (CFS) is a clustered file system.

- Veritas Cluster Volume Manager (CVM) is a cluster logical volume manager.

- Flexible Storage Sharing (FSS) is a technology that allows you to combine all local storage resources of nodes into a single pool, accessible to any node in the cluster.

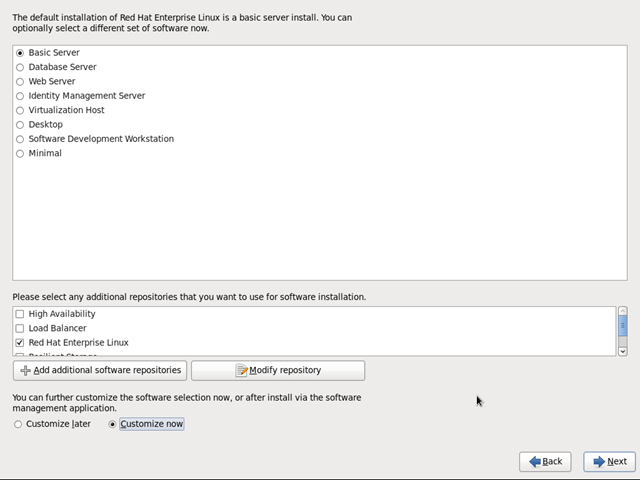

Veritas Access 7.3 nodes are x86 servers running Red Hat Enterprise Linux 6.6–6.8; starting with release 7.3.0.1, Red Hat 7.3–7.4. The nodes are joined into a cluster by VCS technology. Any available disks can be used for the storage pool. To add a node to the cluster, the server must have at least 4 Ethernet ports: at least 2 for client access and at least 2 for the inter-node interconnect. Veritas recommends InfiniBand for the interconnect; the minimum requirement is 1 Gbit/s Ethernet.

For RAM, the recommendation is 32 GB for production. Veritas Cluster File System (CFS) caches data in RAM, so any memory above 32 GB will not go to waste, but it is better not to use less. For testing, 8 GB is quite enough; there are no software-enforced restrictions.
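The requirements above are easy to turn into a pre-flight check. The following is a rough sketch: the thresholds are the ones quoted in the text, while the `NodeSpec` structure and the node names are hypothetical and have nothing to do with the Veritas installer.

```python
from dataclasses import dataclass
from typing import List

# Thresholds quoted in the text.
MIN_PUBLIC_PORTS = 2        # client access
MIN_INTERCONNECT_PORTS = 2  # inter-node interconnect
RECOMMENDED_RAM_GB = 32     # production recommendation


@dataclass
class NodeSpec:
    name: str
    public_ports: int
    interconnect_ports: int
    ram_gb: int


def check_node(node: NodeSpec, production: bool = True) -> List[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if node.public_ports < MIN_PUBLIC_PORTS:
        problems.append(f"{node.name}: needs at least {MIN_PUBLIC_PORTS} public ports")
    if node.interconnect_ports < MIN_INTERCONNECT_PORTS:
        problems.append(f"{node.name}: needs at least {MIN_INTERCONNECT_PORTS} interconnect ports")
    # RAM is a recommendation for production, not a hard software limit.
    if production and node.ram_gb < RECOMMENDED_RAM_GB:
        problems.append(f"{node.name}: {RECOMMENDED_RAM_GB} GB RAM recommended for production")
    return problems


print(check_node(NodeSpec("node1", 2, 2, 32)))
print(check_node(NodeSpec("node2", 1, 2, 8)))
```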

A three-node configuration is best avoided; we recommend starting with two nodes, or with four, five and so on. A single cluster is currently limited to 20 nodes. An InfoScale cluster supports 60, so 20 nodes is not a fundamental limit.

The VCS cluster provides fault-tolerant operation of data access services via NFS, CIFS, S3, FTP, Oracle Direct NFS protocols and the corresponding infrastructure on Veritas Access nodes, and in certain configurations it is possible to write and read the same data using different protocols.

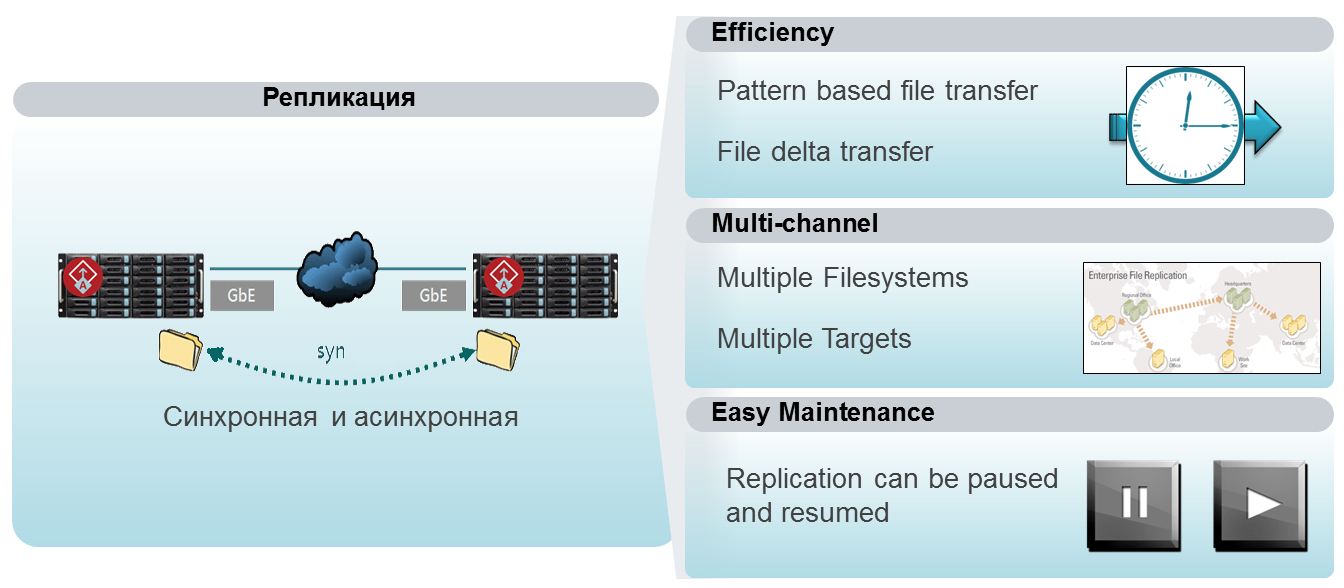

A scale-out file system can be used to build file systems up to 3 PB in size; among other things, it allows external cloud storage to be attached within a single namespace. When building distributed storage or disaster recovery systems, file replication can be configured between different Veritas Access storage systems. This technology asynchronously replicates a file system from the source cluster to a remote cluster at a minimum interval of 15 minutes, and the remote file system remains open for reading during replication. It supports load balancing between replication links, instant switching of the remote file system to write mode when the source becomes unavailable, and moving the replication service from one node to another in case of failure.

The number of concurrent replication operations is unlimited. It is important to note that the replication technology uses Veritas CFS / VxFS functionality (File Change Log and snapshots at the file system level) to quickly identify changes, which avoids the loss of time scanning the file system and significantly increases replication performance.
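Why a change log helps can be shown with a toy model. This sketch only illustrates the general idea of change-log-driven replication; it does not use or imitate the VxFS File Change Log API, and the dictionaries standing in for file systems, the paths and the function names are all our assumptions.

```python
from typing import Dict, Iterable


def sync_full_scan(source: Dict[str, str], target: Dict[str, str]) -> int:
    """Copy changed files by inspecting every source file (deletions omitted).

    Returns the number of files that had to be inspected.
    """
    inspected = 0
    for path, content in source.items():
        inspected += 1
        if target.get(path) != content:
            target[path] = content
    return inspected


def sync_from_change_log(source: Dict[str, str], target: Dict[str, str],
                         change_log: Iterable[str]) -> int:
    """Copy only the paths recorded in the change log.

    Returns the number of files that had to be inspected.
    """
    inspected = 0
    for path in set(change_log):      # a path may be logged more than once
        inspected += 1
        if path in source:
            target[path] = source[path]
        else:
            target.pop(path, None)    # the file was deleted on the source
    return inspected


# Toy file systems: 1000 files, of which one is modified and one deleted.
source = {f"/vx/fs/file{i}": "v1" for i in range(1000)}
target = dict(source)
source["/vx/fs/file7"] = "v2"
del source["/vx/fs/file9"]

print(sync_from_change_log(source, target, ["/vx/fs/file7", "/vx/fs/file9"]))
print(target == source)
```

With the change log, only the two touched paths are visited; a full scan would have to walk all remaining files to find the same differences.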

DISK HANDLING ARCHITECTURE

Diagram of the test bed built together with OCS:

Photo of the test bed at Open Technologies:


Download and Install

You can get the Red Hat Enterprise Linux distribution with a 30-day trial subscription at this link. Once the trial registration is completed, Red Hat distributions become available for download, including the required releases 6.6–6.8.

You can get the Veritas Access distribution at this link; to do so, fill out the trial form. During installation, the system itself will offer a 60-day key. You can add the main license later through the web administration panel, in the Settings → Licensing section.

All documentation for version 7.3 is available here.

Veritas Access installation can be roughly divided into two stages:

1. Installing and configuring Red Hat Enterprise Linux

2. Installing Veritas Access

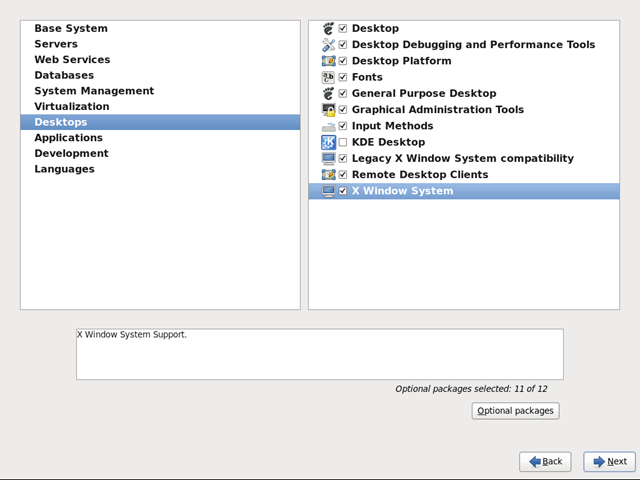

Installing Red Hat Enterprise Linux

This is a typical Linux installation and presents no difficulties.

Red Hat Requirements:

With a Red Hat subscription, the Veritas Access installer pulls in the RPMs it needs by itself. Starting with Access 7.3, all the necessary packages have been added to the installer's repository.

On the public interfaces, in addition to assigning IP addresses, you must enable "Connect automatically" or set ONBOOT=yes in the interface configuration file.
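Before launching the installer, it is worth verifying this flag on every node. Below is a minimal sketch that checks the text of an interface configuration file (on Red Hat these live under /etc/sysconfig/network-scripts/ as ifcfg-<interface>). The sample contents and the address in it are made up for illustration.

```python
def onboot_enabled(ifcfg_text: str) -> bool:
    """Return True if the ifcfg-style text contains ONBOOT=yes."""
    for raw_line in ifcfg_text.splitlines():
        line = raw_line.strip()
        # Skip blanks, comments and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.strip() == "ONBOOT":
            return value.strip().strip("\"'").lower() == "yes"
    return False  # no ONBOOT line at all


# Hypothetical contents of an ifcfg file for a public interface.
SAMPLE_IFCFG = """\
DEVICE=eth0
BOOTPROTO=none
IPADDR=192.0.2.11
NETMASK=255.255.255.0
ONBOOT=yes
"""

print(onboot_enabled(SAMPLE_IFCFG))
```

In practice you would read each public interface's file from disk and pass its contents to `onboot_enabled`.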

Veritas Access

Installation: Installation files must be hosted on the same site. Installation is started by the command:

# ./installaccess node1_ip node2_ip nodeN_ip

where node1_ip, node2_ip and so on are any of the IP addresses of the nodes' public interfaces.

There are several points to consider when installing Veritas Access:

- The Veritas Access installer expects a flawless installation. Any deviation is critical for it and may lead to an installation error. Enter addresses, masks and names carefully.

- Run the installation locally on one of the hosts or, if you have no physical access to the servers, through their remote management console; do not run it over ssh.

- If Veritas Access fails to install on the first attempt, I recommend reinstalling Red Hat and starting the Veritas Access installation from scratch. When an error occurs, the installer does not roll the system back to its pre-installation state, so a repeated installation runs on top of partially running Veritas Access services, which can cause problems.

After installing Veritas Access, 2 management consoles will be available:

• web - in our case, it is https://172.25.10.250:14161

• ssh - at 172.25.10.250 with the username master (default password is master) and root

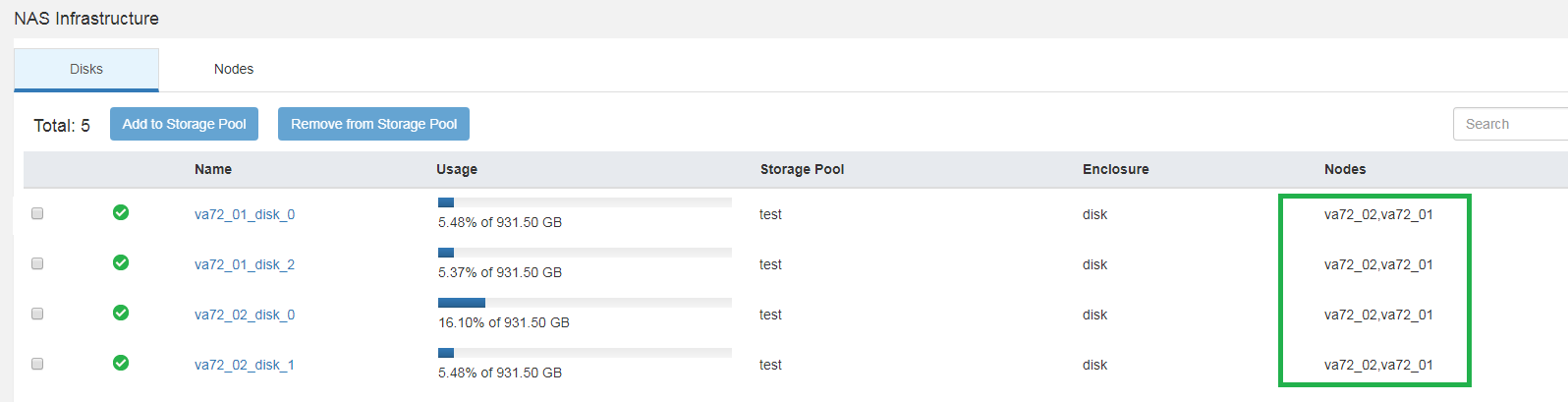

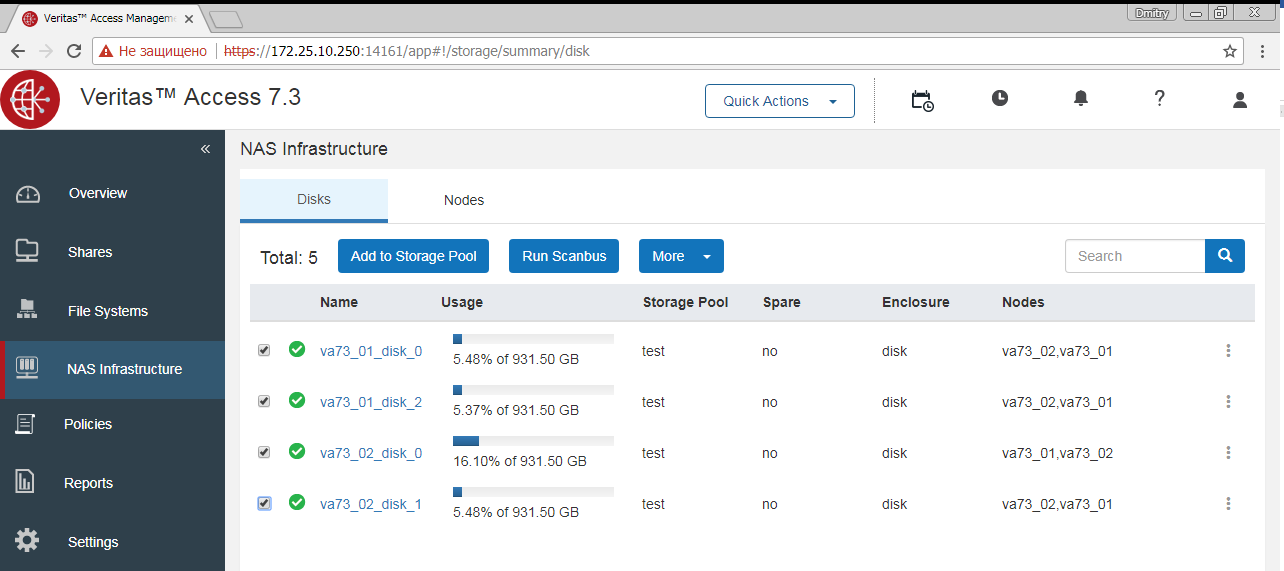

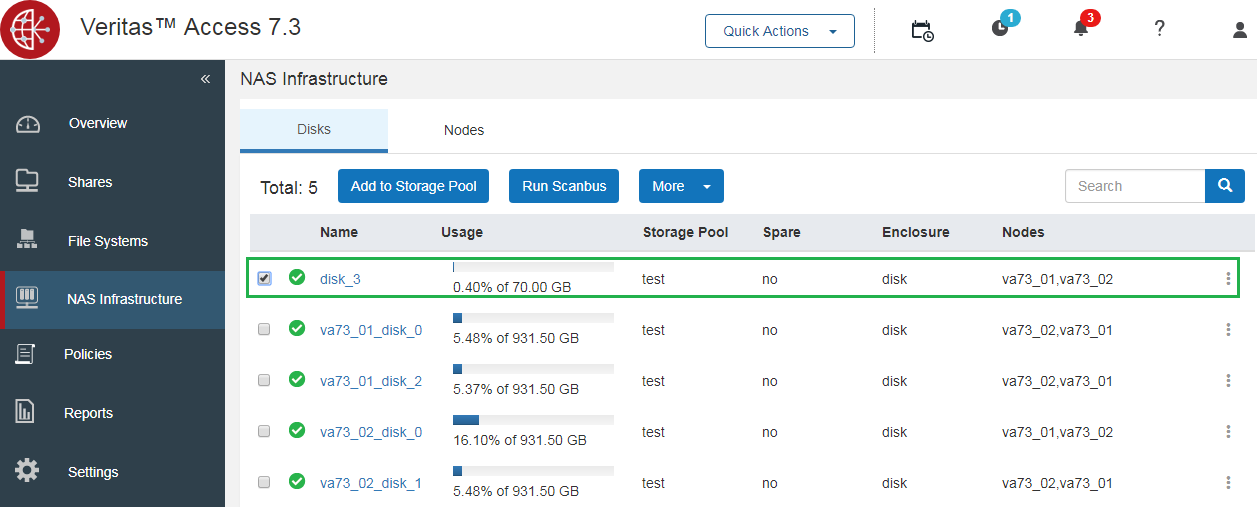

After installing Access, you must export to the cluster all the disks on each node that you plan to use for data. This does not happen automatically, since you may have other plans for some of the nodes' drives.

CLISH Commands:

# vxdisk list

# vxdisk export diskname1-N

# vxdisk scandisks

For "non-standard" server disks, you must specify the disk model and the address at which the disk's serial number is stored. In my case, for SATA disks, the command turned out to be:

# vxddladm addjbod vid=ATA pid="WDC WD1003FBYX-0" serialnum=18/131/16/12

This is a small drawback of SDS systems when it comes to hard drives that are not on the Hardware Compatibility List. Each vendor builds its own standards into its disks: they count a terabyte as a different number of bytes or sectors and place identifiers at different addresses. This is quite normal. If the SDS storage system does not detect the disks correctly, it needs a little help; for Access, the instructions are here.

And here is an advantage of Access: when a non-standard situation arises, there is a document describing its solution that can be found through Google, and the vxddladm utility itself has detailed built-in help. There is no need to dig through foreign forums or cobble together a fix with unpredictable results in production. If you cannot resolve the problem yourself, you can always contact technical support.

As a result, each disk must be accessible to each node of the cluster.

After installation, the folder with the installation files will be deleted.

How It Works

In our case, the cluster consists of two nodes:

Each node has physical and virtual IP addresses. Physical IPs are unique to each node, while virtual IPs are served by all nodes of the cluster. Through the physical interfaces, you can check whether a node is available and connect to it directly via ssh. If a node is down, its physical interfaces are unreachable. The virtual IPs remain active as long as at least one cluster node is alive. Clients work only with virtual addresses.
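The behavior of virtual addresses can be pictured with a toy model. The sketch below spreads virtual IPs round-robin over whichever nodes are alive; this placement rule is our simplifying assumption, not the actual VCS logic, and the addresses are placeholders.

```python
from typing import Dict, List


def assign_virtual_ips(virtual_ips: List[str], live_nodes: List[str]) -> Dict[str, str]:
    """Map every virtual IP to a live node, round-robin.

    Returns an empty mapping when no node is alive: only then do the
    virtual IPs become unreachable.
    """
    if not live_nodes:
        return {}
    nodes = sorted(live_nodes)
    return {vip: nodes[i % len(nodes)] for i, vip in enumerate(virtual_ips)}


vips = ["192.0.2.21", "192.0.2.22", "192.0.2.23", "192.0.2.24"]

# Both nodes alive: the virtual IPs are spread across them.
print(assign_virtual_ips(vips, ["va73_01", "va73_02"]))

# One node lost: every virtual IP moves to the survivor, clients keep working.
print(assign_virtual_ips(vips, ["va73_02"]))
```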

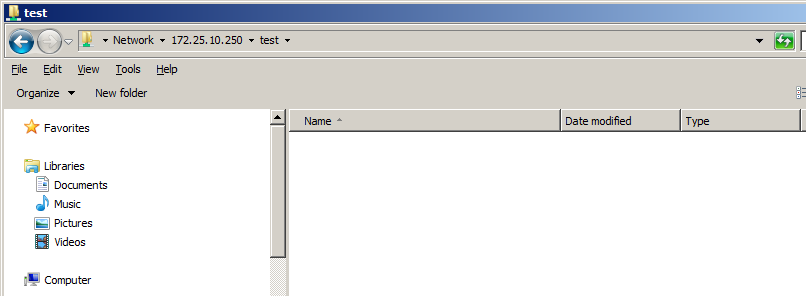

For each share, the console shows the IP addresses through which it is available.

At any given time, one node acts as the master; in the screenshot, this is node va73_02. The master node performs administrative tasks and load balancing. The master role can be transferred to, or taken over by, another node if the master becomes unavailable or if certain conditions built into the Veritas Access cluster logic are met. If the internal interconnect is lost, an unpleasant situation (split brain) may occur in which every node becomes a master, so the reliability of the internal interconnect deserves special attention.

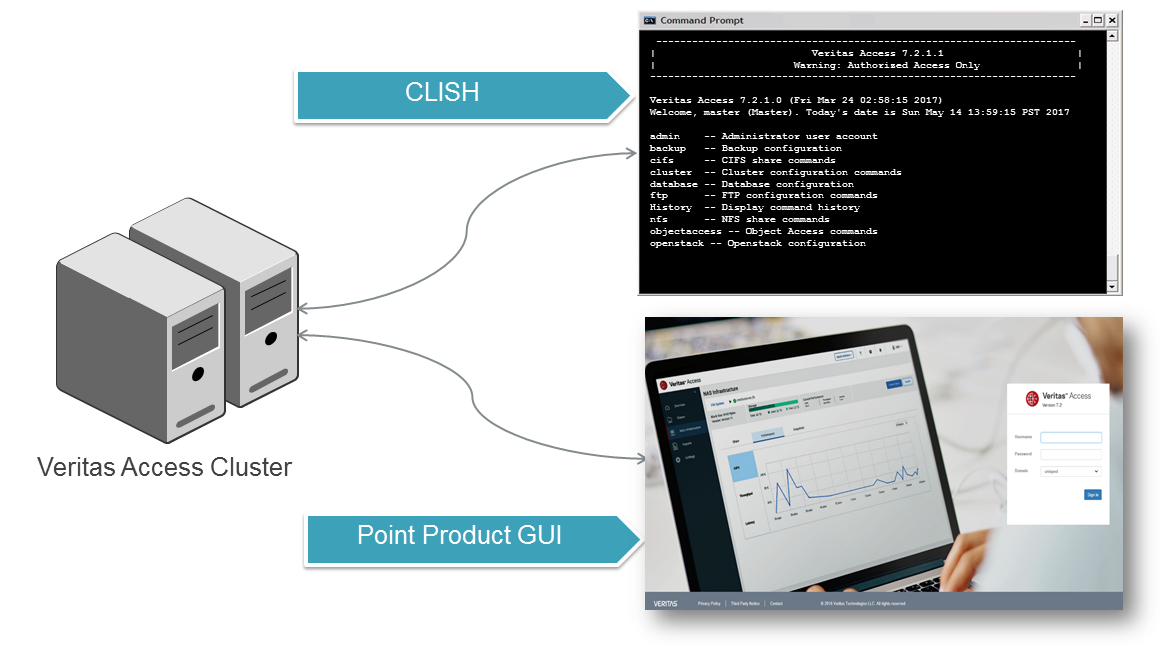

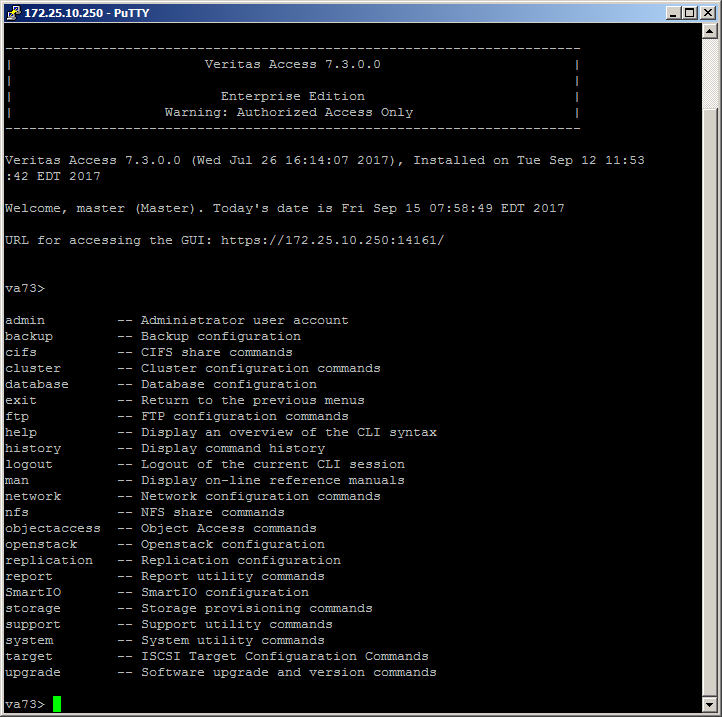

Management

Veritas Access has three management interfaces: CLISH over ssh (in root and master modes), web and REST API.


ssh master mode

The most comprehensive management console for a Veritas Access cluster. It is accessed via ssh at the management IP with the login master (default password: master). It offers an intuitive, simplified command set and detailed help.

ssh node mode

An ordinary Linux management console, accessed via ssh at the management IP with the root login.

WEB

The web management console for the cluster, accessed with the root or master login over https at the management IP, port 14161.

The web console translates actions into ssh master mode commands.

The web management capabilities expand with each release.

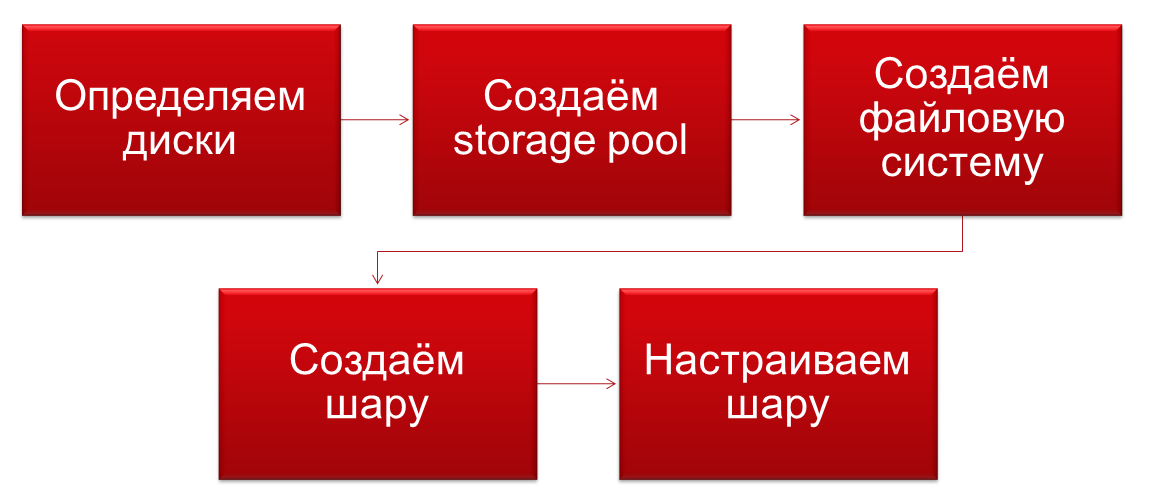

Initial setup

Just a few words on launching Veritas Access as a storage system.

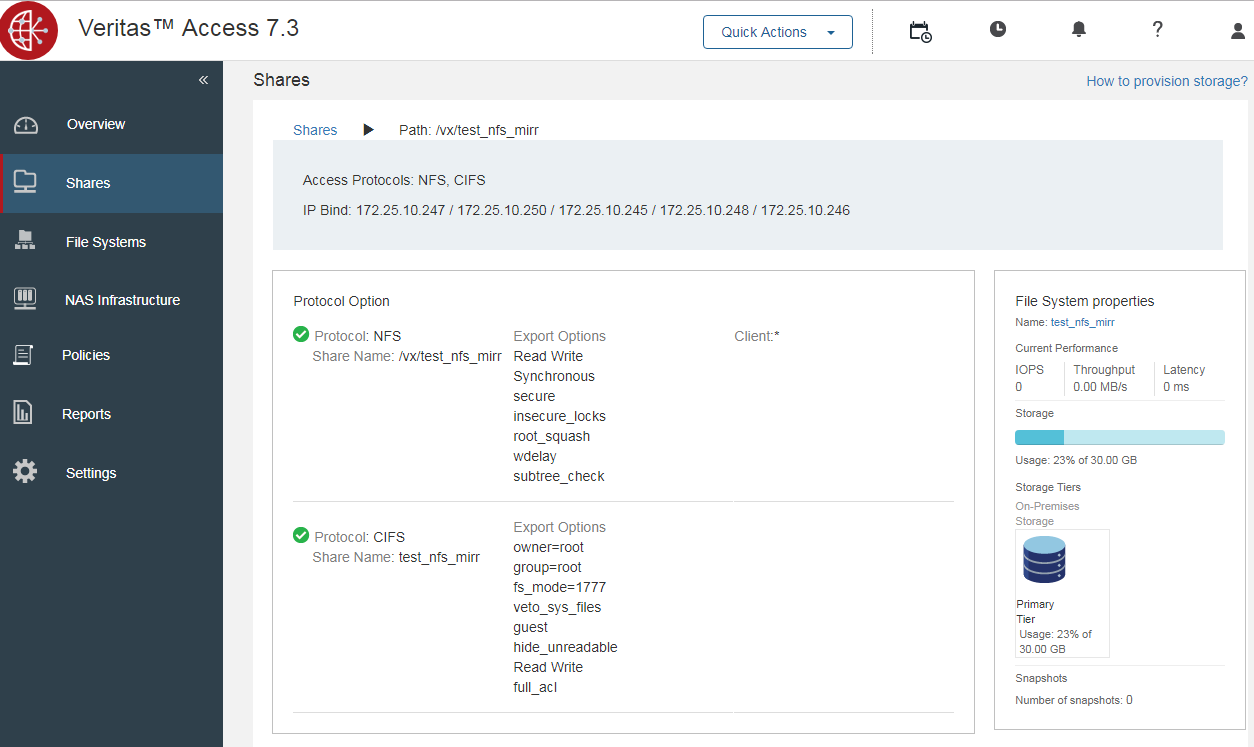

1. Combine the disks into a storage pool.

2. Create a file system according to the type of disks, the data and the required protection (analogous to RAID).

3. Share the file system over the required protocols. One file system can be shared over several protocols at once, so that, for example, the same files can be accessed via both NFS and CIFS, either temporarily or permanently.

4. Done. You are great.

Connecting External Storage Systems and Other Storage Devices via iSCSI

Veritas Access can use any third-party iSCSI storage volumes as storage. Drives from third-party storage systems should be presented individually, as raw devices. If that is not possible, use RAID 5 groups of 3 disks. Data protection is provided by the Veritas Access file system, as described in the section above. There is no need to protect data at the hardware RAID level: the Access file system settings specify the number of nodes on which your data is mirrored.

Volumes connected via iSCSI can be included in shared pools.

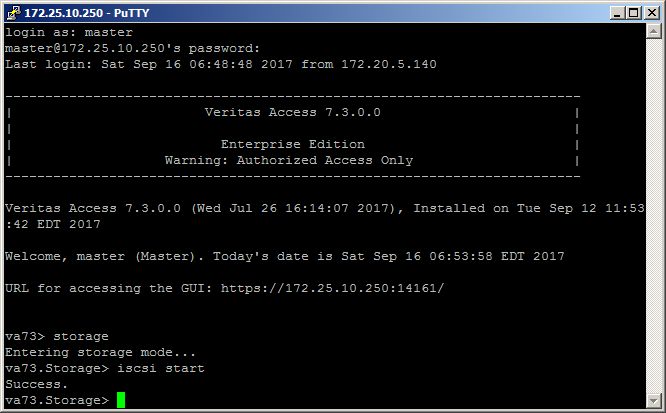

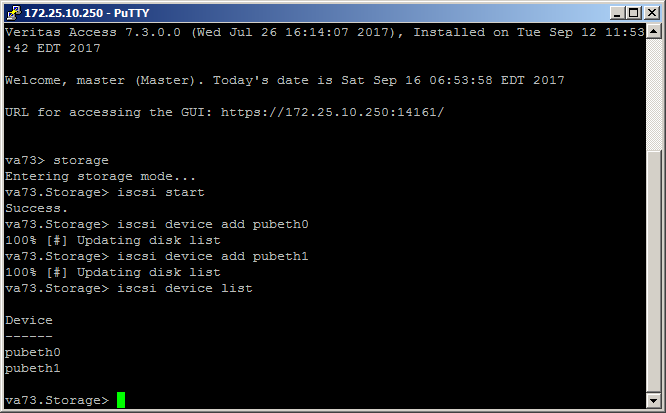

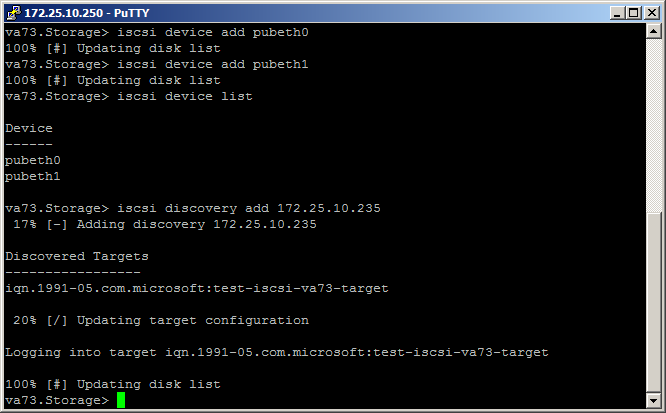

Adding an iSCSI disk looks like this:

1. Turn on iSCSI in the storage section:

2. Add iSCSI device:

3. Connect an iSCSI disk:

Windows Server 2012 serves as the test iSCSI disk

Serving Storage Volumes over iSCSI

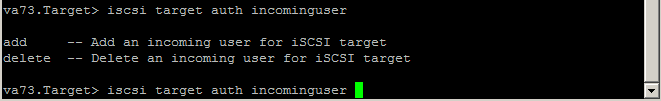

The configuration process in the current release is somewhat peculiar; you can read more about it on page 437 of the Command Reference Guide (iSCSI target service). For many commands in the current release there is no way to view the settings afterwards, so it is best to write all parameters down in a text file beforehand.

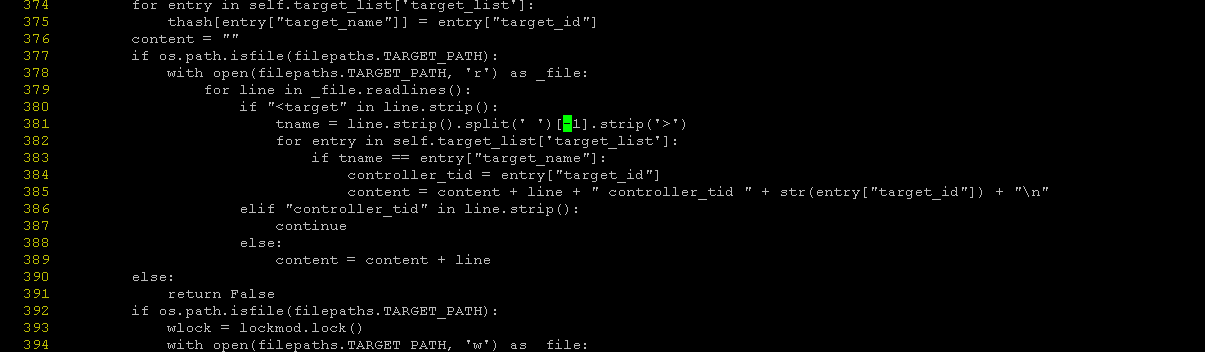

Important: version 7.3 has a bug that prevents the iSCSI service from starting!

It can be fixed as follows:

On the nodes, edit the file /opt/VRTSnas/pysnas/target/target_manager.py

On line 381, change [1] to [-1].

Starting to serve storage over iSCSI looks like this:

va73.Target> iscsi service start

ACCESS Target SUCCESS V-288-0 iSCSI Target service started

va73.Target> iscsi target portal add 172.25.10.247

ACCESS Target SUCCESS V-288-0 Portal add successful.

va73.Target> iscsi target create iqn.2017-09.com.veritas:target

ACCESS Target SUCCESS V-288-0 Target iqn.2017-09.com.veritas:target created successfull

va73.Target> iscsi target store add testiscsi iqn.2017-09.com.veritas:target

ACCESS Target SUCCESS V-288-0 FS testiscsi is added to iSCSI target iqn.2017-09.com.veritas:target.

va73.Target> iscsi lun create lun3 3 iqn.2017-09.com.veritas:target 250g

ACCESS Target SUCCESS V-288-0 Lun lun3 created successfully and added to target iqn.2017-09.com.veritas:target

va73.Target> iscsi service stop

ACCESS Target SUCCESS V-288-0 iSCSI Target service stopped

va73.Target> iscsi service start

ACCESS Target SUCCESS V-288-0 iSCSI Target service started

Another important note: the new settings are not applied until the iSCSI service is restarted!
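Since the settings are hard to view afterwards, it helps to keep the whole sequence in one place. Here is a small sketch that generates the CLISH commands from the session above out of a handful of parameters; the helper itself is our own convenience, not a Veritas tool, and it only prints the commands rather than executing them.

```python
from typing import List


def iscsi_target_commands(portal_ip: str, target_iqn: str, fs_name: str,
                          lun_name: str, lun_id: int, lun_size: str) -> List[str]:
    """Build the CLISH command sequence used in the session above."""
    return [
        "iscsi service start",
        f"iscsi target portal add {portal_ip}",
        f"iscsi target create {target_iqn}",
        f"iscsi target store add {fs_name} {target_iqn}",
        f"iscsi lun create {lun_name} {lun_id} {target_iqn} {lun_size}",
        # The restart is required: new settings are not applied without it.
        "iscsi service stop",
        "iscsi service start",
    ]


# The same values as in the session above.
for command in iscsi_target_commands(
    portal_ip="172.25.10.247",
    target_iqn="iqn.2017-09.com.veritas:target",
    fs_name="testiscsi",
    lun_name="lun3",
    lun_id=3,
    lun_size="250g",
):
    print(command)
```

Saving this output to a text file gives you the record of parameters that the current release does not let you query.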

If something goes wrong, the iSCSI target logs can be found in the following files:

/opt/VRTSnas/log/iscsi.target.log

/opt/VRTSnas/log/api.log

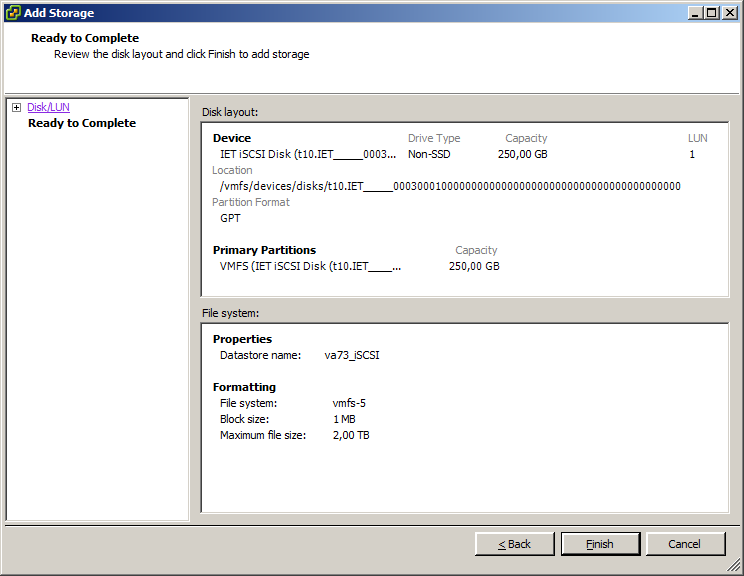

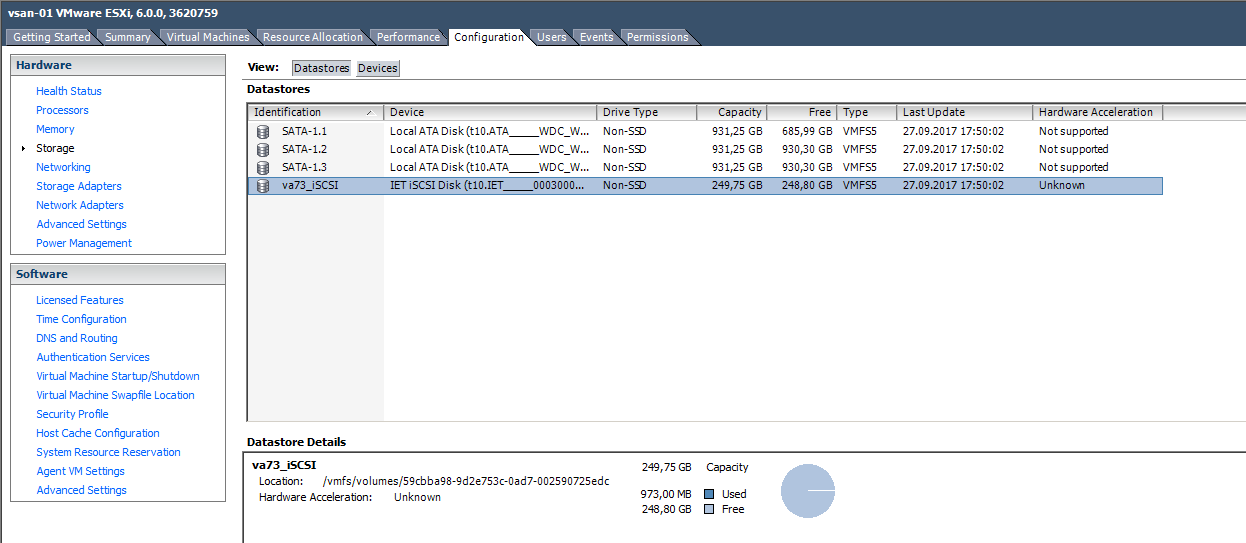

/var/log/messages

That, in fact, is all. We connect our iSCSI volume to VMware ESXi.

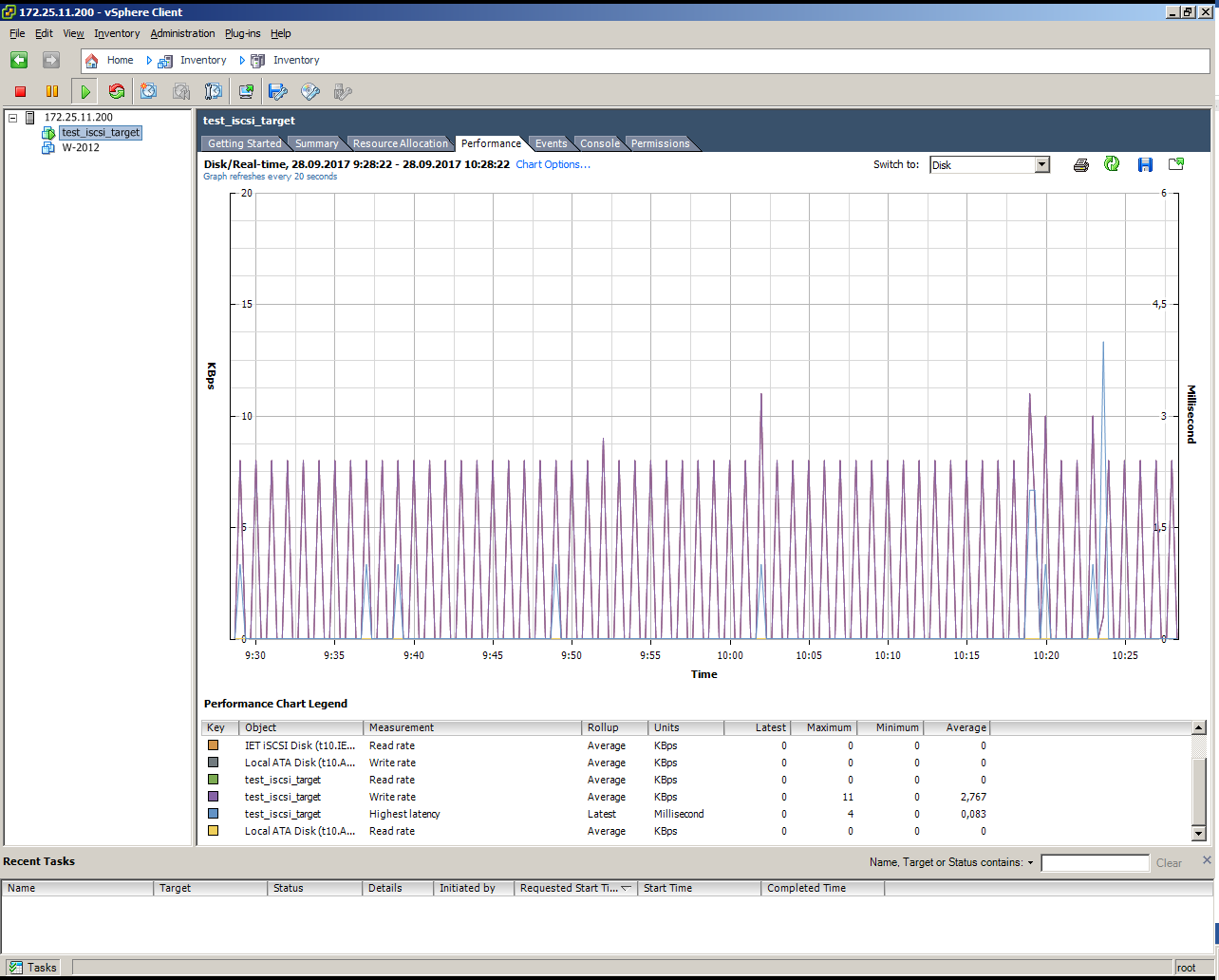

We install a virtual machine on this iSCSI volume. Operation without load:

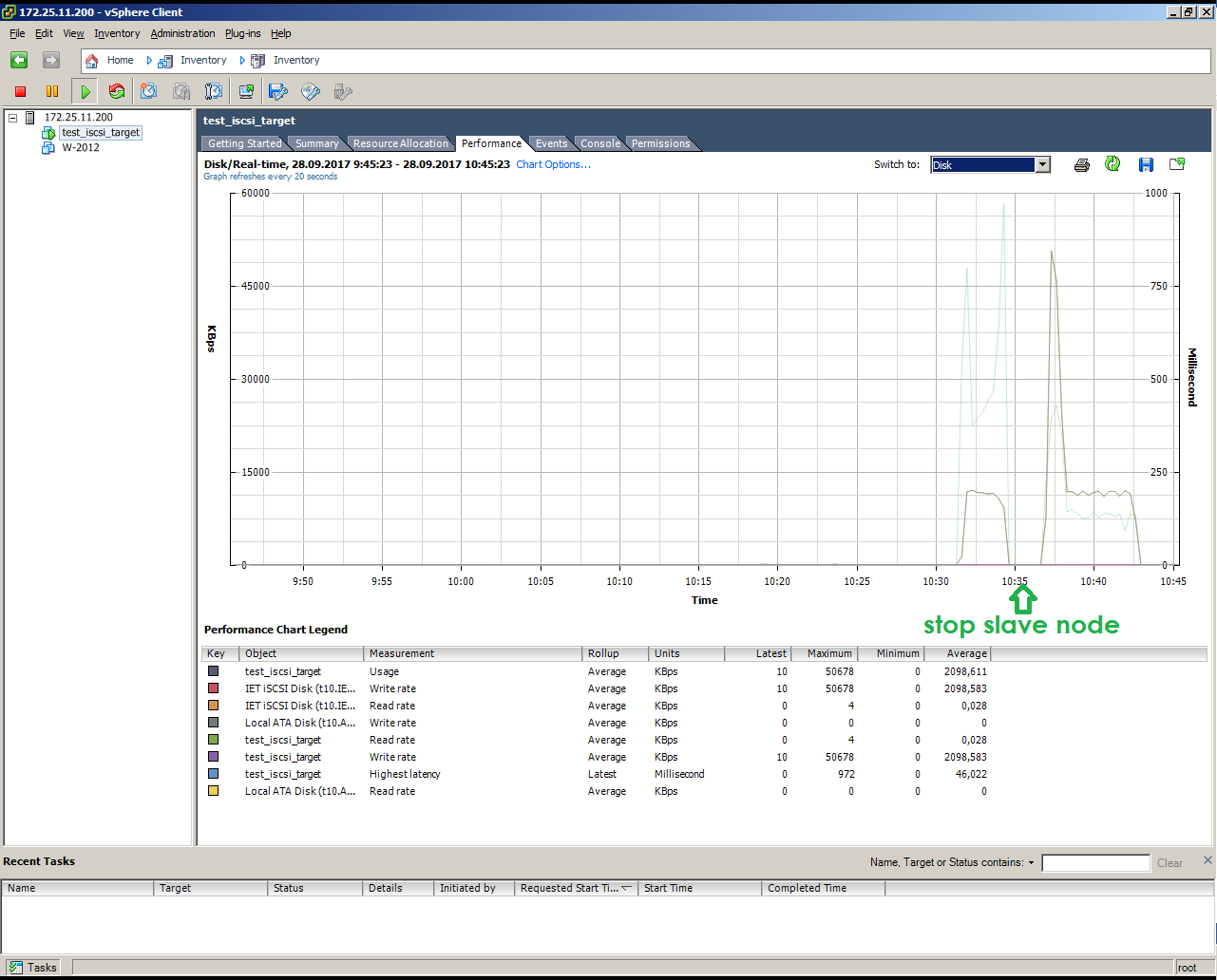

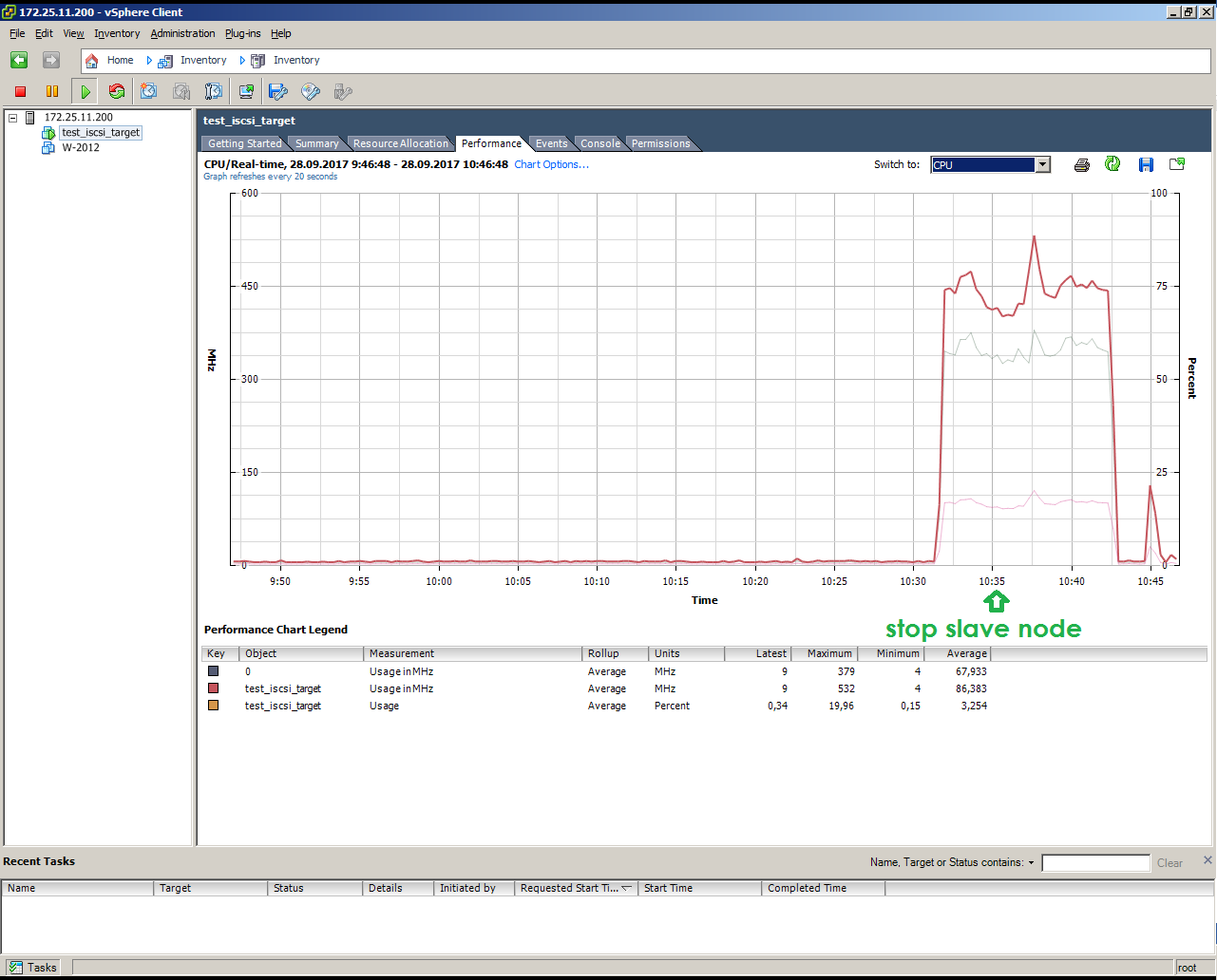

This is what a simulated failure of the SLAVE node under load (copying 10 GB) looks like:

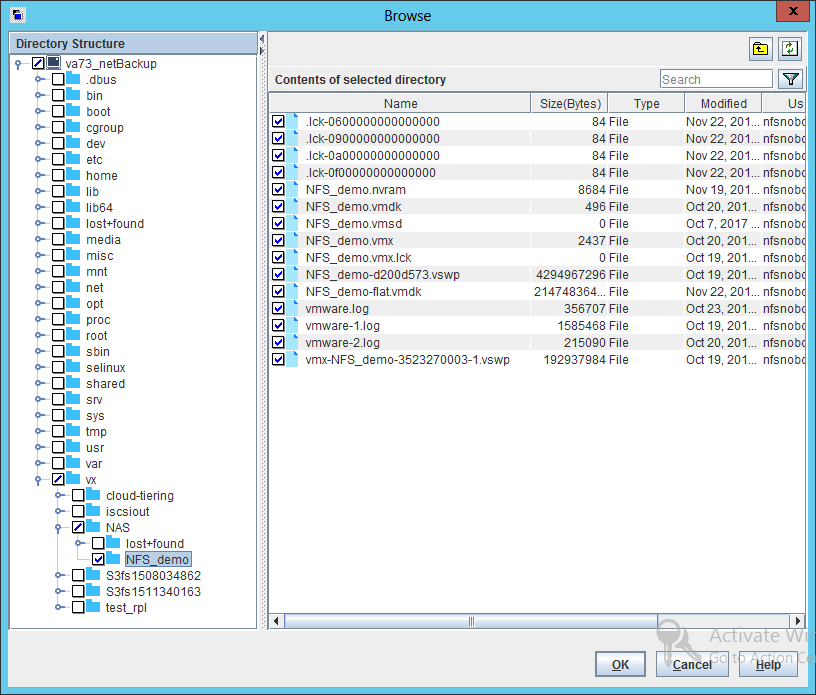

Veritas Access Integration with Veritas NetBackup

An important advantage of Veritas Access is its built-in integration with Veritas NetBackup backup software. It is provided by a NetBackup Client agent that is included by default and configured through the Veritas Access CLISH command interface. The following types of backup operations are available:

• full;

• differential incremental;

• cumulative incremental;

• snapshot of a checkpoint at the VxFS level.

Long-Term Backup Storage for NetBackup

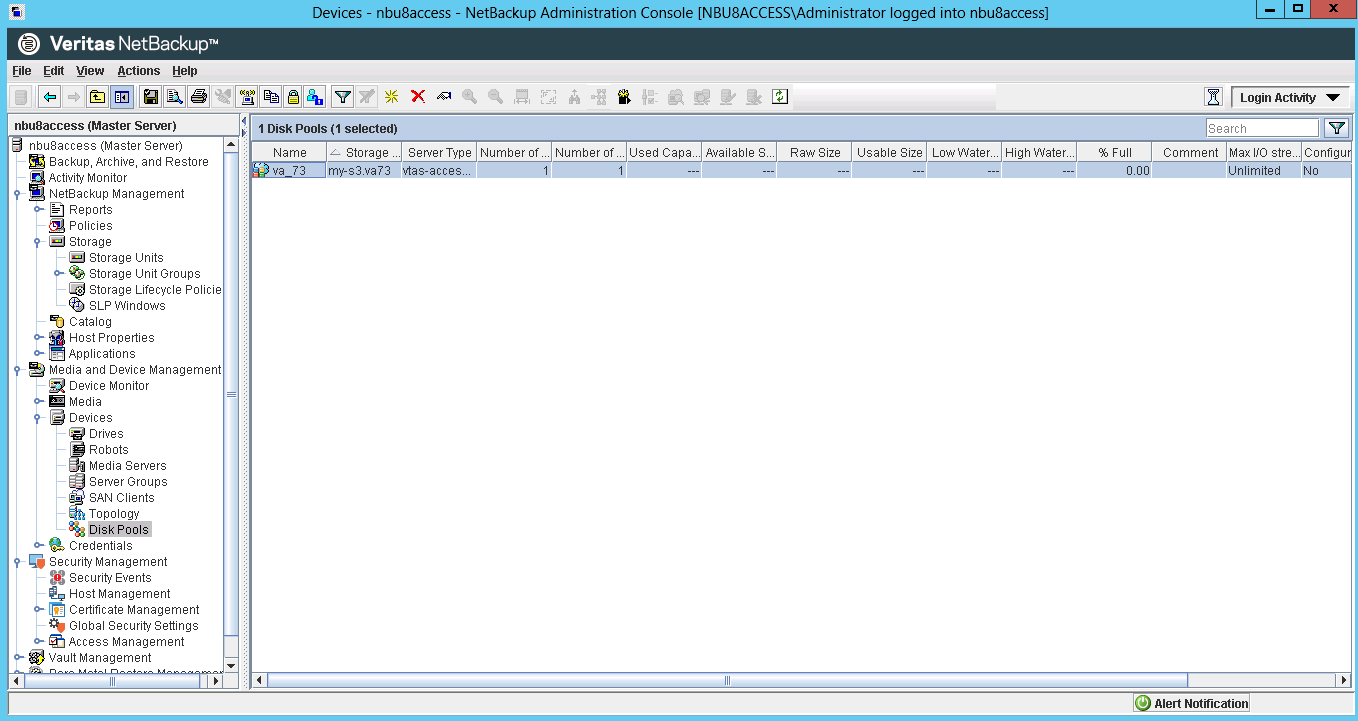

The NetBackup integration capabilities of Veritas Access make it a cheaper and simpler alternative to tape for long-term backup storage. In this case, integration can be done with the third-party open-source software OpenDedup, which is installed on the NetBackup media server and attached as a NetBackup Storage Unit logical device. OpenDedup is installed on a volume with its specialized SDFS file system, which resides inside an S3 bucket on the Veritas Access storage. When backups are duplicated, the NetBackup Storage Lifecycle Policy manages the writes to the NetBackup Storage Unit, and the data is sent in deduplicated form over the S3 protocol to the Veritas Access storage.

Everything is configured quite simply, but since version 8.1 certificates are required.

Storage Volumes:

NetBackup Interface:

Veritas Access Upgrade

We started studying and testing Veritas Access with version 7.2, but after a week of testing the new version 7.3 was released.

This raised an interesting case: the upgrade.

It is a relevant case that owners of SDS solutions will face sooner or later.

The upgrade option is available in the admin panel under the master login.

Veritas Access as Storage for VMware over NFS

The ease of use of NFS deserves a mention. It does not require building and maintaining a complex FC infrastructure, complicated zoning configuration or wrangling with iSCSI. Using NFS to access a datastore is also simple in that the unit of storage granularity is the VMDK file rather than the entire datastore, as is the case with block protocols. An NFS datastore is an ordinary network share mounted on the host, containing virtual machine disk files and their configs. This, in turn, simplifies backup and recovery, since the unit of backup and recovery is a plain file: a single virtual disk of a single virtual machine. It also matters that with NFS you automatically get thin provisioning, and that deduplication frees up space directly at the datastore level, where it is available to the administrator and to VM users, rather than at the storage level, as is the case with a LUN. All of this looks extremely attractive for a virtual infrastructure.

Finally, with an NFS datastore you are not bound by the 2 TB limit. This is very useful if, for example, you have to administer a large number of machines with relatively light I/O loads. All of them can be placed on one large datastore, which is much easier to back up and manage than a dozen disparate VMFS LUNs of 2 TB each.
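The "dozen LUNs" figure follows from simple division. As a quick sketch, taking the 2 TB per-LUN limit mentioned above as given (the 24 TB total is our example number):

```python
import math

VMFS_LUN_LIMIT_TB = 2  # per-LUN limit referred to in the text


def vmfs_luns_needed(total_tb: float) -> int:
    """Number of 2 TB VMFS LUNs needed to hold `total_tb` of VM data."""
    return math.ceil(total_tb / VMFS_LUN_LIMIT_TB)


print(vmfs_luns_needed(24))  # 12
print(vmfs_luns_needed(5))   # 3
```

The same 24 TB fits into a single NFS datastore, which means one object to back up and manage instead of twelve.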

In addition, you are free not only to grow a datastore but also to shrink it. This can be very useful in a dynamic infrastructure with a large number of heterogeneous VMs, such as a cloud provider environment where VMs are constantly created and deleted, so the datastore hosting them needs to be able to shrink as well as grow.

But there are also disadvantages:

First, you cannot use RDM (Raw Device Mapping), which may be needed, for example, to build an MS Cluster Service cluster. Second, you cannot boot from NFS (at least not in a simple and ordinary way, such as boot-from-SAN). Third, using NFS slightly increases the load on the storage system, since a number of operations that are handled on the host side with a block SAN, such as locking and access control, are handled by the storage system with NFS.

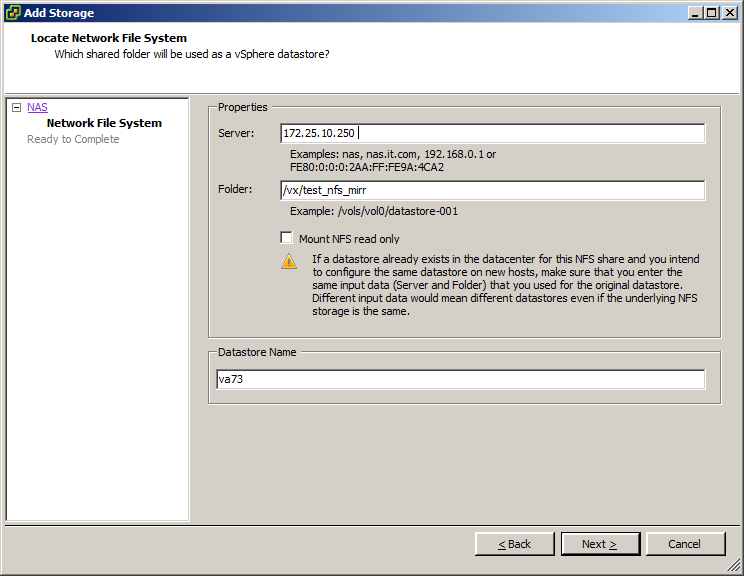

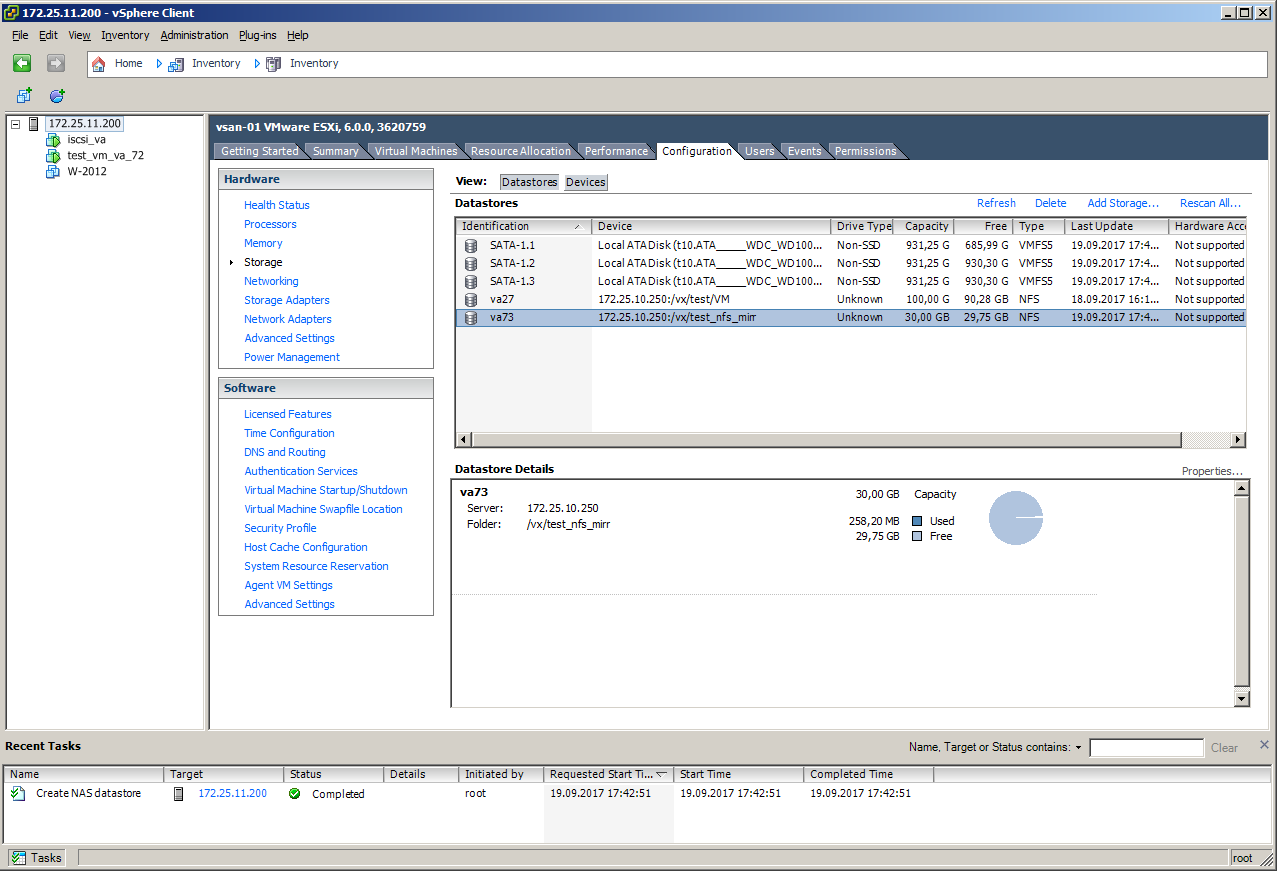

Connecting VMware to Veritas Access via NFS looks like this; as you can see, it is very simple:

To test fault tolerance and performance, we deployed a Windows Server 2008 R2 virtual machine on a share mirrored across two nodes.

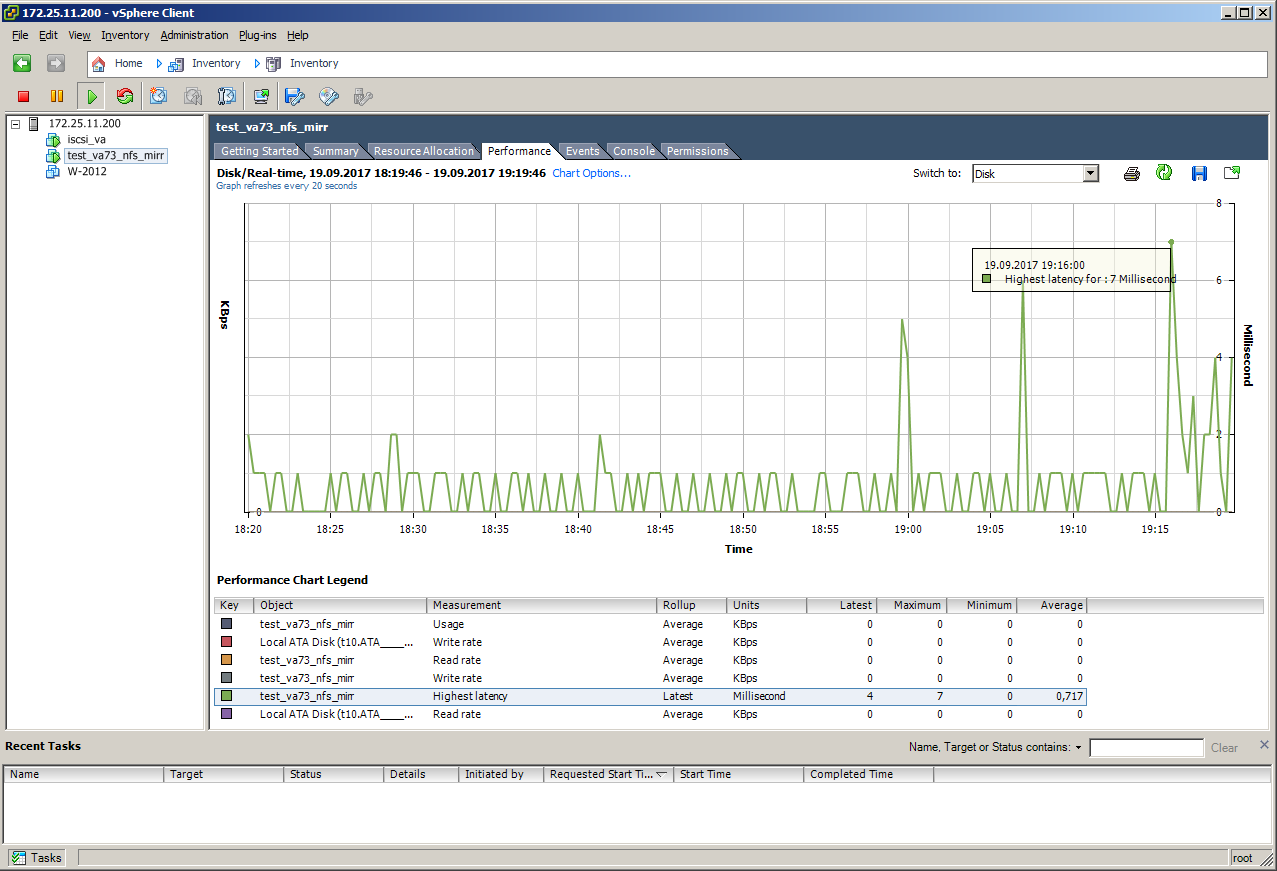

This is what a simulated failure of the master node without load looks like; at the moment the cable was pulled, latency rose from 0.7 to 7:

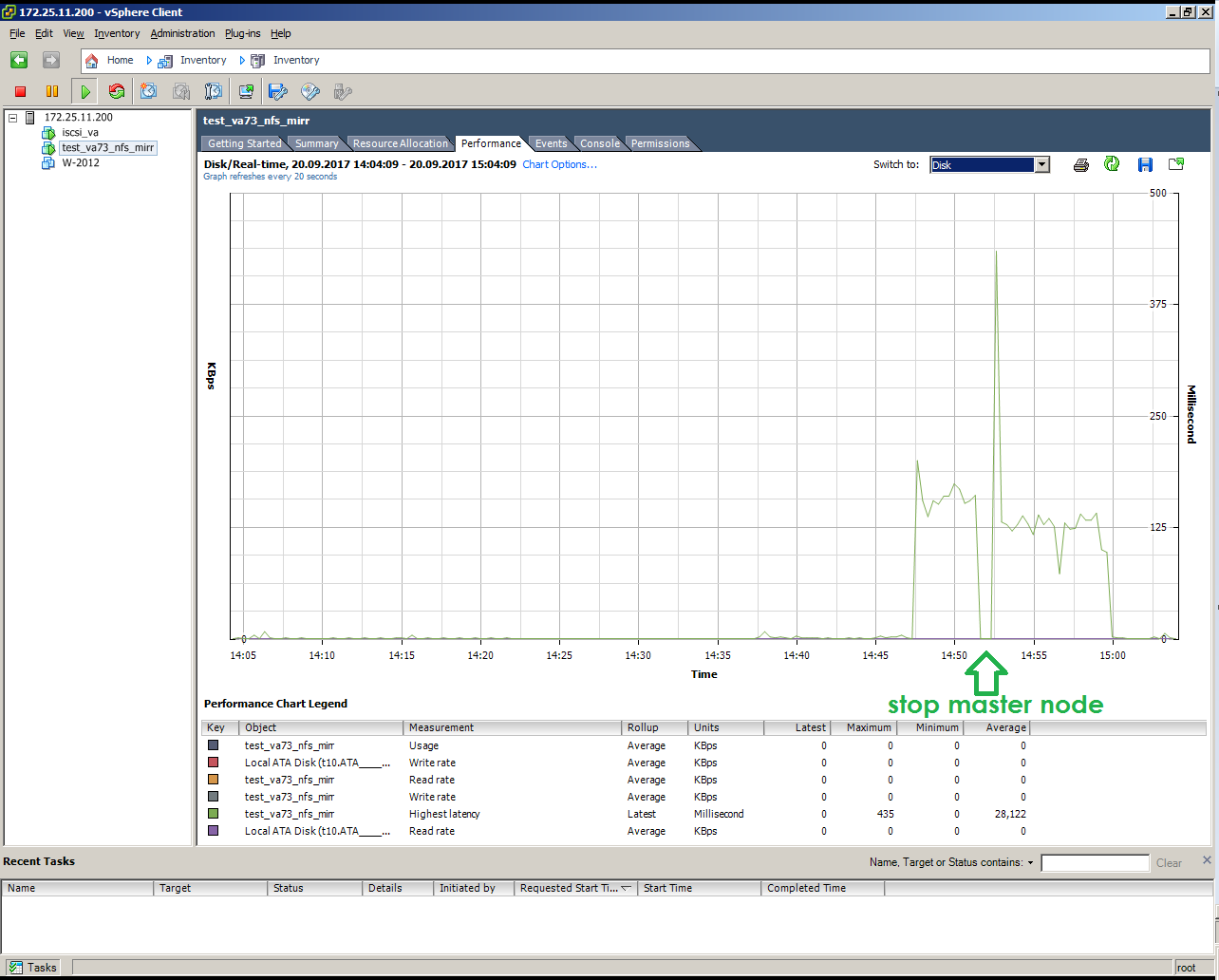

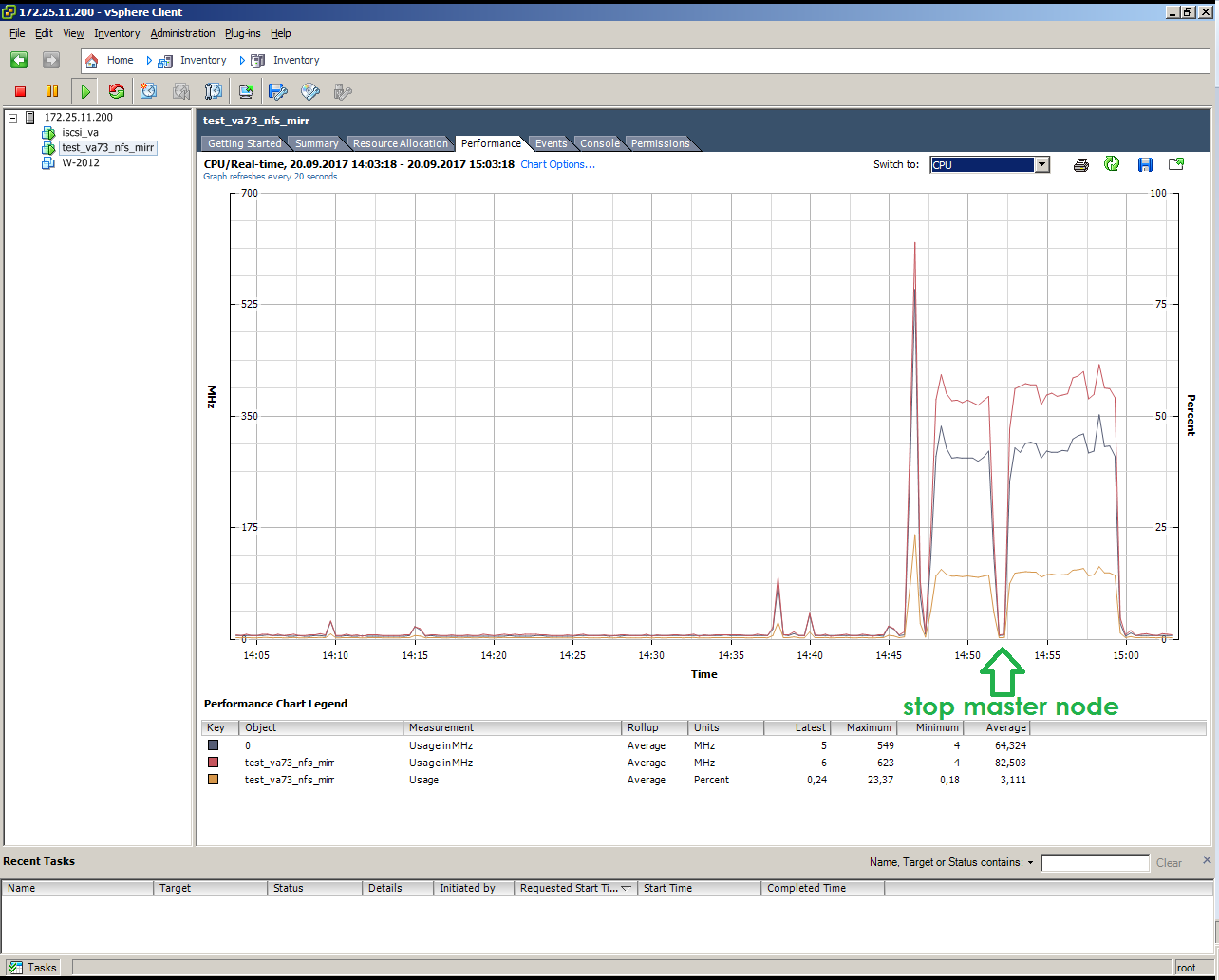

This is what a simulated failure of the master node under load (copying 10 GB) looks like:

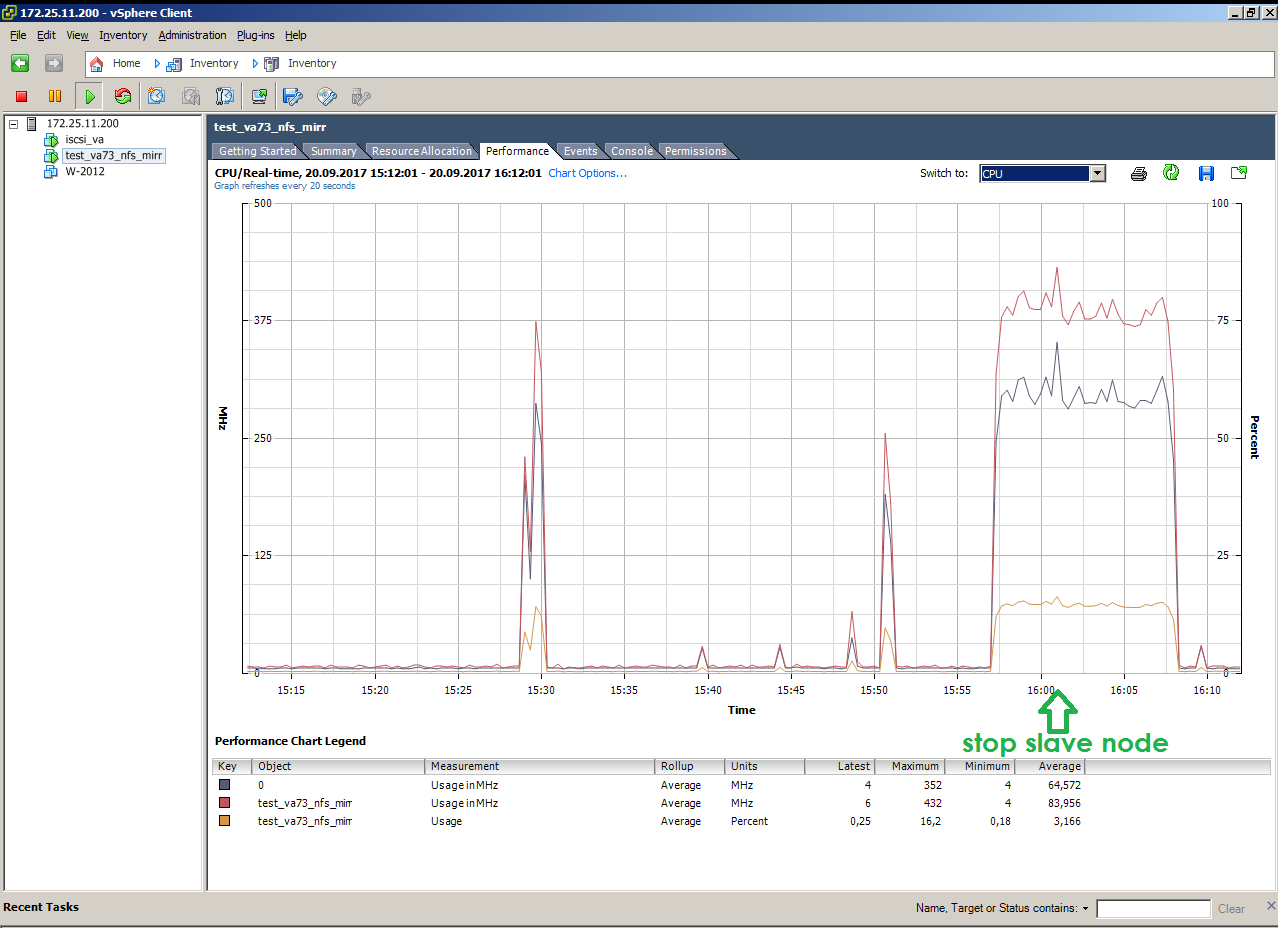

This is what a simulated failure of the SLAVE node under load (copying 10 GB) looks like:

S3 AND OBJECT STORAGE

One of the main disadvantages of NFS, iSCSI and CIFS is that they are difficult to use over long distances. Extending NFS shares to a neighboring city is an interesting task, to say the least, whereas with S3 it poses no difficulty. The popularity of object storage is growing, and more and more applications support object storage in general and S3 in particular.

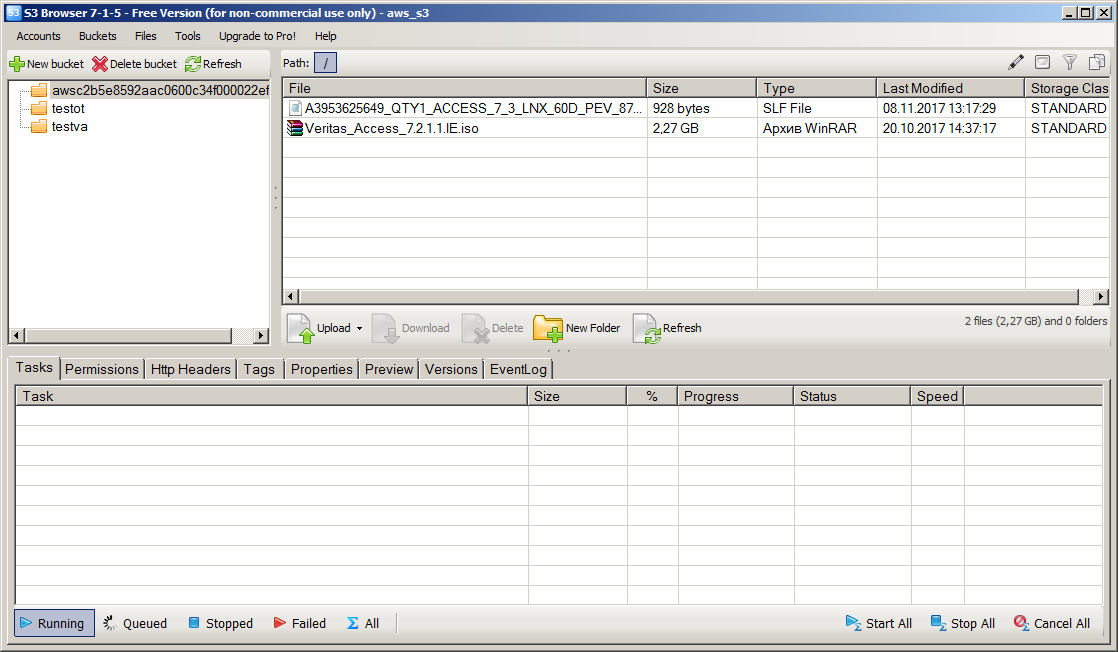

There is a convenient, free tool for configuring and testing S3: S3 Browser. Configuring S3 on Veritas Access is fairly simple, though unusual. To access storage via S3, you need an Access Key and Secret Key pair. Domain users can see their keys in the Access web interface; in the current release, the keys for the root user are obtained with scripts through the CLISH console.

NetBackup connected via S3:

Replication

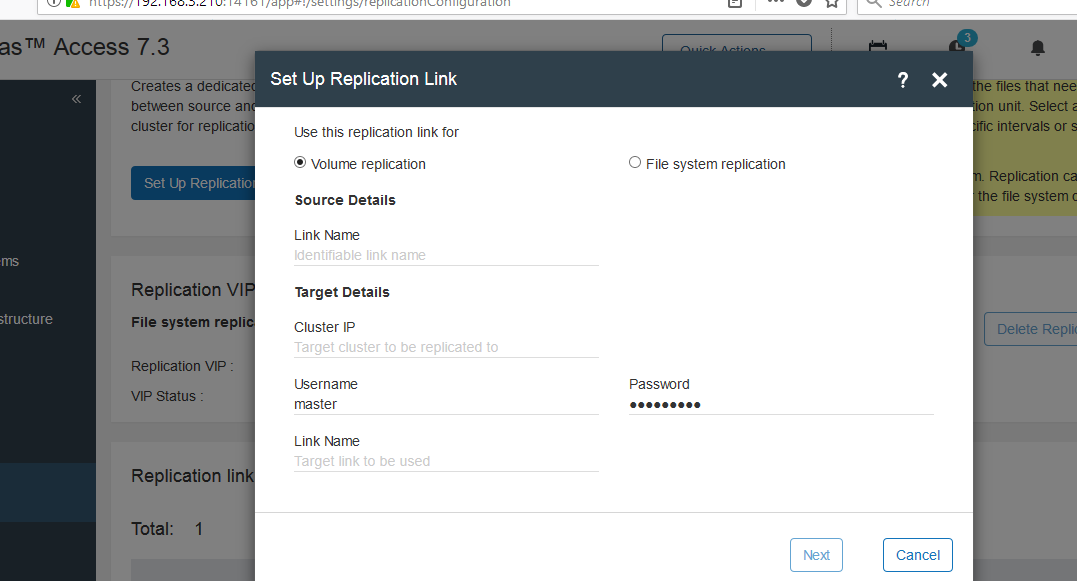

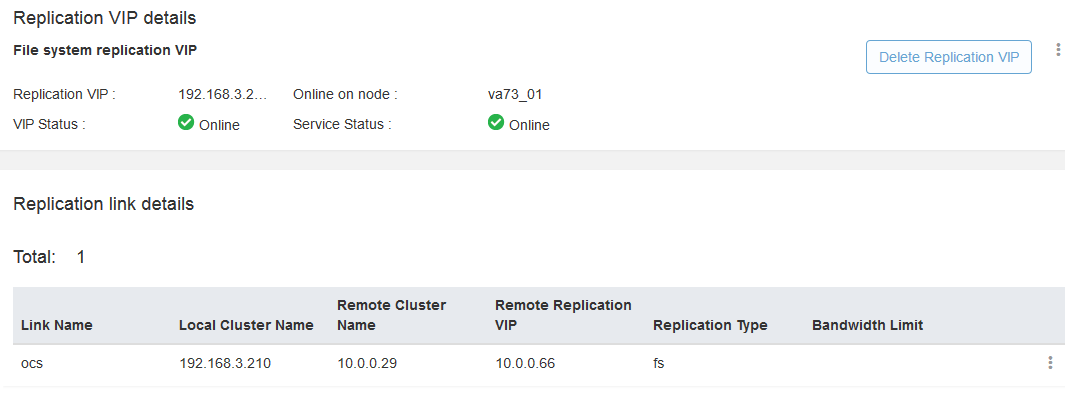

Veritas Access supports synchronous and asynchronous replication. Replication is part of the basic functionality, requires no additional licenses and is quite simple to configure. Asynchronous replication works at the file system level; synchronous replication works at the volume level. To test replication, we linked our Veritas Access cluster and the OCS distributor's cluster with file-system-level replication. An IPsec tunnel was set up for communication between the sites.

Once again, the test bed diagram:

Configuring replication in the web browser:

After successful authorization, the Replication link appears:

We checked that it works.

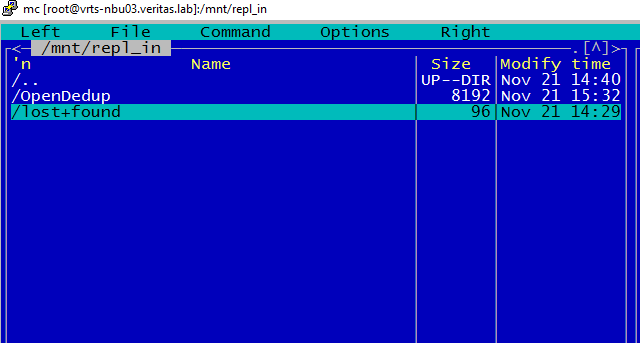

We mounted two shares, one from each cluster, into two folders:

[root@vrts-nbu03 mnt]# showmount -e vrts-access.veritas.lab

Export list for vrts-access.veritas.lab:

/vx/VMware-Test *

/vx/test_rpl_in *

[root@vrts-nbu03 mnt]# showmount -e 192.168.3.210

Export list for 192.168.3.210:

/vx/NAS *

/vx/test_rpl *

[root@vrts-nbu03 mnt]# mount -t nfs 192.168.3.210:/vx/test_rpl /mnt/repl_out/

[root@vrts-nbu03 mnt]# mount -t nfs vrts-access.veritas.lab:/vx/test_rpl_in /mnt/repl_in/

[root@vrts-nbu03 mnt]#

We copied the data to the source folder:

We started the replication job manually so as not to wait for the timer:

va73>

va73> replication job sync test_rpl_job

Please wait...

ACCESS replication SUCCESS V-288-0 Replication job sync 'test_rpl_job'.

va73>

The files appeared in the destination folder:

CONCLUSIONS

Veritas Access is an interesting Software-Defined Storage solution that one need not be ashamed to offer a customer. It is a truly affordable, easily scalable storage system with support for file, block and object access. Access makes it possible to build high-performance, cost-effective storage for unstructured data. Its integration with OpenStack, cloud providers and other Veritas technologies allows the solution to be applied in the following areas:

• Media holdings: storage of photo and video content;

• Public sector: storage of video archives for systems such as "safe city";

• Sports: storage of video archives and other important information by stadiums and other sports facilities;

• Telecommunications: storage of primary billing data, CDRs (Call Detail Records);

• Financial sector: storage of statements, payment documents, passport scans, etc.;

• Insurance companies: storage of documents, passport scans, photographs, etc.;

• Medical sector: storage of X-ray images, MRI images, test results, etc.;

• Cloud providers: storage for OpenStack;

• An alternative to tape storage systems.

Strengths:

• Easy scalability;

• Any x86 server;

• Relatively low cost.

Weaknesses:

• In the current release, the product demands extra attention from engineers;

• Weak documentation;

• CIFS, iSCSI operate in active-passive mode.

The Veritas Access team ships new releases regularly, according to the roadmap, fixing bugs and adding new features. Among the interesting features expected in Veritas Access 7.3.1, dated December 17, 2017: a full-fledged implementation of iSCSI, erasure coding and up to 32 nodes per cluster.

If you have questions about operation, functionality or configuration, write or call; we are always ready to help and cooperate.

Dmitry Smirnov,

Design Engineer,

Open Technologies

Tel: +7 495 787-70-27

dsmirnov@ot.ru