Developing skills for Alice: experience with voice interfaces and tips for beginners

Just a month ago, we decided to try our hand at creating a skill, an extension of Alice's functionality. Judging by our conversations in the Yandex.Dialogs support chat, we already have something to share with newcomers about the specifics of working on voice interfaces.

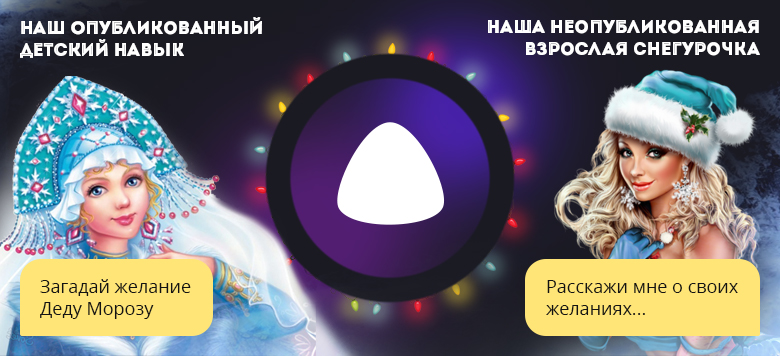

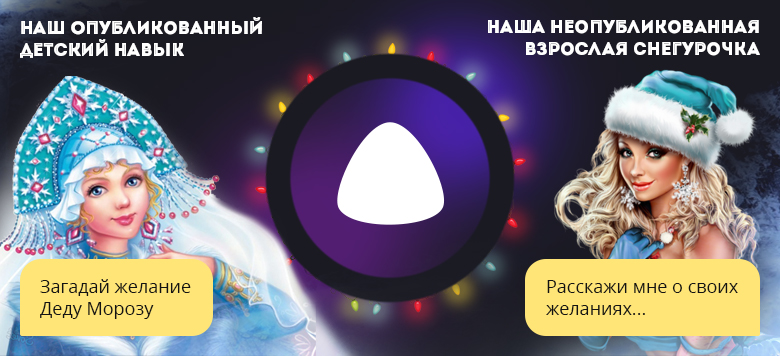

In this article I want to share my impressions from the first three weeks of running our children's New Year skill, Snow Maiden, as well as questions and answers from the Yandex.Dialogs developers' chat.

VUI professionals will not find anything new here, but practical advice and comments from experienced developers are welcome. This is my first article, so please do not judge too harshly.

- Why pay attention to Alice?

- Why all this: voice, skills?

- How to create a skill?

- General approach and common mistakes

- Current platform flaws

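Why pay attention to Alice?

While voice assistants abroad have already become commonplace and many hours of conversations with robots are already making their way online, in Russia they are still mostly used to talk to the car navigator, by children chatting with Alice, and by geeks pampering themselves with smart homes. Few of my friends take notes and set reminders with Siri, although in my opinion this is one of its most convenient uses. This situation will very likely change for the better in the coming year, because the foundation has already been laid:

- Alice has only just turned one. She is still learning, but she already knows a lot.

- Yandex.Station has been released, and I think it will gradually become smarter and more capable.

- In December, the first low-cost mini speakers from partner manufacturers went on sale.

- Alice's School for skill developers has been launched.

- Major improvements to the Yandex.Dialogs infrastructure have been announced, in particular skill discovery, a tool for easily finding and ranking skills from third-party developers.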

All this suggests that voice interfaces in Russia are at the very beginning of their development, which is why we decided to start studying these technologies.

Why all this: voice, skills?

I think many people already understand the advantages of voice interfaces in certain situations, but a reminder will not hurt: sometimes voice is the most suitable option. In the car, in the kitchen while cooking, or during any other activity that keeps your hands busy, it is more convenient to give commands by voice. Voice-controlled robotic nurses that assist in surgical operations, for example, have existed for a long time.

Voice is a natural way for people to interact. Older people and children easily master it for getting information and controlling gadgets.

For people with visual impairments, voice and hearing are an even more important channel for interacting with the world. Judging by the Yandex.Station chat, these users have greatly appreciated the arrival of a device that makes their lives easier.

I will not keep listing use cases; if you are interested, you can learn more from the literature.

A skill is a program that implements a dialogue of some kind: it is launched in Alice by a specific activation phrase and extends the capabilities of Yandex's voice assistant.

How to create a skill?

There are already a number of good third-party skills, but many niches are still open in which you can build a truly interesting and useful skill.

Several articles have been written about creating skills, including on Habr. There is documentation, and there are short general recommendations. I will not go deep into the technical details of implementation, since I would rather share general approaches for beginners.

Technically, a skill is very similar to a chat bot, except that it cannot send a message on its own initiative; it can only respond to the user's requests.
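To make this request/response model concrete, here is a minimal sketch of a webhook in PHP. It assumes the request and response format described in the Yandex.Dialogs documentation at the time of writing (`request.command`, `session.new`, `session.session_id`, `session.message_id`, `session.user_id`, `version`, and a `response` object with `text` and `end_session`); the field names should be checked against the current documentation. `handleRequest` is a hypothetical name and the replies are placeholders. Note that the script has no way to speak first: it only turns each incoming request into exactly one reply.

```php
<?php
// Minimal Alice skill webhook sketch. Field names assume the Yandex.Dialogs
// protocol as documented at the time; check them against the current docs.

function handleRequest(array $req): array
{
    $session = $req['session'];
    $command = $req['request']['command'] ?? '';

    if (!empty($session['new'])) {
        // First message of a session: greet the user.
        $text = 'Hello! I am the Snow Maiden. Would you like to hear a poem?';
    } elseif ($command === '') {
        $text = 'I did not catch that. Would you like to hear a poem?';
    } else {
        // Placeholder: real dialogue logic would go here.
        $text = 'You said: ' . $command;
    }

    return [
        'response' => [
            'text' => $text,
            'end_session' => false,
        ],
        // The protocol of the time expected these session fields echoed back.
        'session' => [
            'session_id' => $session['session_id'],
            'message_id' => $session['message_id'],
            'user_id' => $session['user_id'],
        ],
        'version' => $req['version'],
    ];
}

// Act as a webhook only when served over HTTP, not when run from the CLI.
if (PHP_SAPI !== 'cli') {
    $req = json_decode(file_get_contents('php://input'), true);
    header('Content-Type: application/json; charset=utf-8');
    if (!is_array($req)) {
        http_response_code(400);
        echo json_encode(['error' => 'invalid request']);
        exit;
    }
    echo json_encode(handleRequest($req), JSON_UNESCAPED_UNICODE);
}
```

Keeping the logic in a pure function that maps a decoded request to a response array makes it easy to test from the command line, without a tunnel or a real device.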

Here is a short list of resources to help you get started:

- Libraries and resources for Yandex.Dialogs.

- An informal FAQ on working with Yandex.Dialogs. It collects links, questions and answers, some still relevant and some less so.

- The Yandex.Dialogs chat mentioned above.

To start developing, you need a Yandex account, a server to host and run your program, a web server, and the application itself, written in any language you like, as long as it can be served over HTTPS.

I will not go into the implementation details of our skills here; if the community is interested, I will cover them in a separate article, especially since such materials already exist.

I will only leave an example of a simple PHP skill with comments, which I think will help a beginner get started quickly.

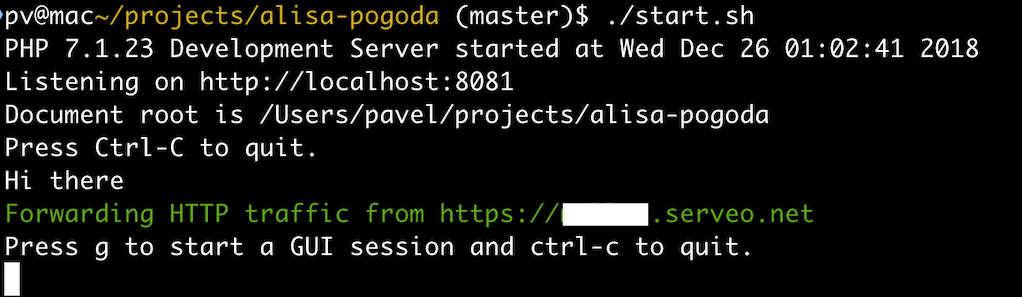

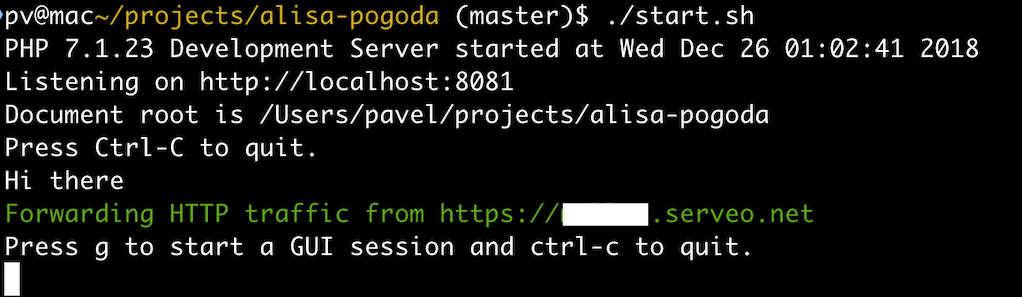

The repository contains a script that sets up a simple development environment: it starts PHP's built-in web server and uses the serveo.net service to make the local port accessible from the internet.

Save the URL https://******.serveo.net: this will be your webhook URL. Unlike with ngrok, this URL does not change over time, so you do not have to update it in the skill settings. You can check that the webhook is reachable by opening this URL in a browser: it should return JSON with an error. This is normal, since we did not pass the required parameters to the script.
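The error in the browser simply means the script found none of the fields a real Alice request carries. A check of that kind might look like the hypothetical sketch below; the list of required fields is an assumption based on the request format of the time, and `missingFields` and `errorResponse` are illustrative names, not part of any SDK.

```php
<?php
// Hypothetical check for the fields a real Alice request carries.
// The list is an assumption based on the request format of the time.
const REQUIRED_FIELDS = [
    ['request', 'command'],
    ['session', 'session_id'],
    ['session', 'message_id'],
    ['session', 'user_id'],
    ['version'],
];

// Returns the dotted paths of required fields missing from a decoded body.
function missingFields($body): array
{
    $missing = [];
    foreach (REQUIRED_FIELDS as $path) {
        $node = $body;
        foreach ($path as $key) {
            if (!is_array($node) || !array_key_exists($key, $node)) {
                $missing[] = implode('.', $path);
                continue 2; // go on with the next required field
            }
            $node = $node[$key];
        }
    }
    return $missing;
}

function errorResponse(array $missing): string
{
    return json_encode(['error' => 'missing required fields', 'fields' => $missing]);
}

// A browser visit sends no JSON body, so every field is reported missing.
if (PHP_SAPI !== 'cli') {
    $missing = missingFields(json_decode(file_get_contents('php://input'), true));
    if ($missing) {
        http_response_code(400);
        header('Content-Type: application/json');
        echo errorResponse($missing);
        exit;
    }
    // ...pass the valid request on to the skill logic...
}
```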

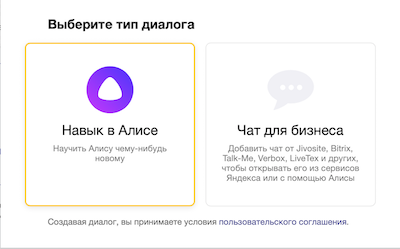

Then register the skill itself using the link:

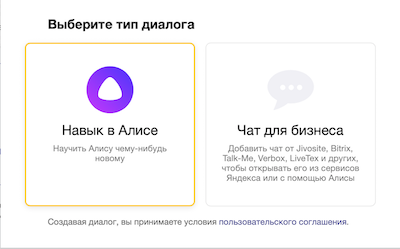

Select the item “Skill in Alice”.

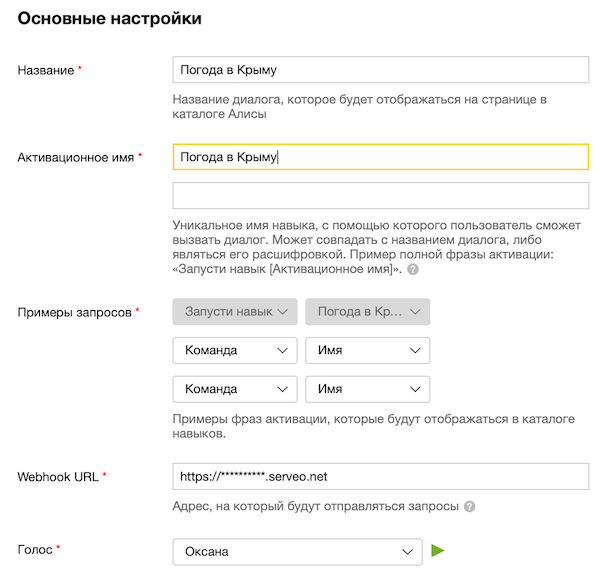

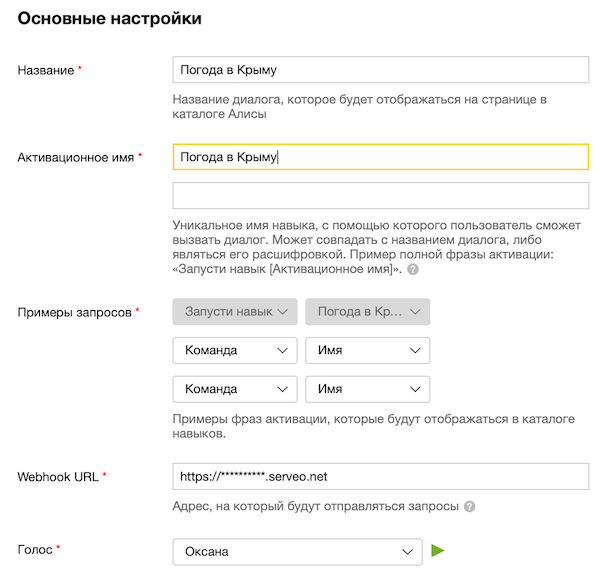

Fill in all the fields following the hints and the Yandex.Dialogs documentation:

In the Webhook URL field, enter the URL you obtained earlier.

Try saving: if you followed the instructions above, everything should work the first time.

As for implementation, the only advice I will give in this article is to pay special attention to logging user actions. I write every problematic interaction to the log, for example when I expect “yes” or “no” but get something else, and immediately forward it (as a separate process, so as not to slow down the webhook) to a Telegram channel so I can track it and respond quickly.
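A minimal sketch of this kind of logging is shown below. It appends one line per unexpected answer to a local file and posts the same text through the Telegram Bot API `sendMessage` method. The bot token, chat id, log path and function names are placeholders you would supply yourself; the request fields assume the Yandex.Dialogs format of the time.

```php
<?php
// Sketch: log an unexpected answer and forward it to a Telegram channel.
// Token, chat id and file path are placeholders supplied by the caller.

function formatUnexpected(array $req, string $expected): string
{
    return sprintf(
        '[%s] user=%s expected=%s got="%s"',
        date('Y-m-d H:i:s'),
        $req['session']['user_id'] ?? '?',
        $expected,
        $req['request']['original_utterance'] ?? ''
    );
}

function logUnexpected(string $line, string $file): void
{
    // LOCK_EX keeps concurrent webhook calls from interleaving lines.
    file_put_contents($file, $line . PHP_EOL, FILE_APPEND | LOCK_EX);
}

function sendToTelegram(string $botToken, string $chatId, string $text): bool
{
    // Telegram Bot API sendMessage, with a short timeout so it cannot hang.
    $ctx = stream_context_create(['http' => [
        'method' => 'POST',
        'header' => 'Content-Type: application/x-www-form-urlencoded',
        'content' => http_build_query(['chat_id' => $chatId, 'text' => $text]),
        'timeout' => 3,
    ]]);
    $url = 'https://api.telegram.org/bot' . $botToken . '/sendMessage';
    return @file_get_contents($url, false, $ctx) !== false;
}
```

To keep the Telegram call from delaying the reply, it should run outside the request path. Under PHP-FPM, one option is to echo the reply, call `fastcgi_finish_request()`, and only then call `sendToTelegram()`; a background process or a cron job that reads the log would work as well.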

General approach and common mistakes

As I expected, the approaches familiar from ordinary web development or Telegram bot development do not fit well here. The main difference is that answers are often unpredictable. The Dialogs platform lets you add buttons with explicit answers to a question, but users often say something completely different from what a programmer used to strict logic expects.

Example.

Do you want to learn another poem?

[yes] [no]

We expect the person to answer “yes” or “no”, but in practice we get many other answers:

- Yes.

- Yes, yes.

- Of course I want to.

- Yes, mom, and what are we going to eat?

- I'm tired of it.

- What is the weather in Novosibirsk?

There are several reasons for this. A person may use various affirmative phrases, such as “of course”, “well, yes”, “aha”, “come on”, and likewise various negative ones. Sometimes the person starts answering too early, before Alice is listening, and we only hear part of the phrase. Or, conversely, after answering, the person keeps talking to someone else in the room. The user may also simply not realize that they are inside a third-party skill and make ordinary requests to Alice without first leaving the skill with the “enough” command.

If we simply repeat the same question whenever we fail to recognize the answer, we can annoy the user and leave them disappointed in the skill and in voice interfaces in general. So pay special attention to error messages: it is often better to rephrase or clarify the question than to ask it again word for word. Asked the same question again, the user will most likely give the same answer, only louder.
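One way to follow this advice is to keep a counter of failed attempts and pick a different phrasing each time. The sketch below assumes the counter is stored per session somewhere on the server (for example, keyed by `session_id`; that storage is not shown) and that buttons are objects with `title` and `hide` fields, as in the Yandex.Dialogs documentation of the time. `askAgain` and the phrasings are illustrative.

```php
<?php
// Sketch: rephrase the question on each failed attempt instead of repeating it.
// The per-session attempt counter is assumed to be stored by the caller.

function askAgain(int $attempt): array
{
    $variants = [
        'Do you want to learn another poem?',
        'Sorry, I did not quite catch that. Shall I tell you one more poem? Just say "yes" or "no".',
        'Let me ask differently: should I read you a new poem?',
    ];
    // After the last variant, keep using it rather than running off the end.
    $text = $variants[min(max($attempt, 0), count($variants) - 1)];

    // Suggestion buttons that disappear once the user answers.
    $buttons = array_map(function (string $title): array {
        return ['title' => $title, 'hide' => true];
    }, ['yes', 'no']);

    return ['text' => $text, 'buttons' => $buttons, 'end_session' => false];
}
```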

You should also anticipate different phrasings of user answers and pick out the meaningful part. Depending on the situation, it is sometimes better to occasionally misrecognize an answer than to force the user to answer in a strict form. If we accept a pattern such as “yes *” as an expected answer, it will usually work as intended, and a reply like “yes, leave me alone” will be a rare exception that breaks nothing critical in the program's logic. If, however, misrecognizing an answer would have irreversible consequences in the system, we should get an explicit answer from the user. And if we are not sure of the answer, we can ask for the missing information.
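A minimal sketch of such tolerant matching: a phrase counts as an answer if it starts with one of the known words, which is the “yes *” pattern described above, so “yes, mom, and what are we going to eat?” is still read as “yes”. Negative words are checked first, and anything else is reported as unknown so the skill can rephrase. Real commands arrive in Russian; the English word lists here are only for illustration, and `classifyYesNo` is a hypothetical name.

```php
<?php
// Sketch of tolerant yes/no matching. Real commands arrive in Russian;
// these English word lists only illustrate the idea.
const NO_WORDS = ['no', 'nope', 'not now', "i'm tired"];
const YES_WORDS = ['yes', 'yeah', 'sure', 'of course', 'aha', 'come on', 'well yes', 'ok'];

// Returns 'yes', 'no' or 'unknown'.
function classifyYesNo(string $command): string
{
    $command = strtolower(trim($command));
    // Negatives first, so "no, yes" is read as "no".
    foreach (['no' => NO_WORDS, 'yes' => YES_WORDS] as $label => $words) {
        foreach ($words as $word) {
            // "yes *": the phrase starts with a known word. \b stops "no"
            // from matching "nope" or "not now" by accident.
            if (preg_match('/^' . preg_quote($word, '/') . '\b/', $command)) {
                return $label;
            }
        }
    }
    return 'unknown';
}
```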

Each of the interaction cases should be considered separately.

In the example above, it turned out that users ask for a shorter poem or one about a particular character (“is there a shorter one?”, “let's have one about the Snow Maiden”).

Here we have a choice: either add new menu levels, without forgetting to keep the dialogue “human”, or respond to such requests the way the user expects without explicitly offering them.

However carefully we designed the conversational part up front, we realized that real usage would reveal what users want better than we could, so we launched our first skill with minimal functionality and gradually added new abilities to our Snow Maiden, along with responses to popular requests we had not anticipated.

Using Third-Party APIs

We noticed that, to provide useful features in their skills, some developers rely too heavily on third-party APIs. Keep in mind, however, that your webhook must respond within 1.5 seconds. Otherwise users may get a frustrating experience: the skill answers correctly one moment and says “something went wrong” the next.

Named entity recognition quirks

Not all names and cities are recognized correctly, so it is worth planning for this in your skill. For now, so as not to leave a child without a name when Yandex fails to recognize it, we had to resort to some workarounds in conversations with the Snow Maiden. Take this into account from the start, so as not to upset users named Slava (“glory”) or Nadezhda (“hope”), for example. We hope Yandex will fix this soon.
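One possible workaround (not necessarily the one we used) is sketched below: trust the name entity when Alice recognizes one, and otherwise look the words up in your own list of names that Alice tends to miss. It assumes the `request.nlu.entities` array with entities of type `YANDEX.FIO` whose `value` holds `first_name`, as described in the Yandex.Dialogs documentation of the time. The name list is transliterated for readability; a real skill would list the names in Russian. `extractChildName` and `EXTRA_NAMES` are hypothetical.

```php
<?php
// One possible workaround: prefer the YANDEX.FIO entity, otherwise check the
// words against our own list of names easily mistaken for ordinary words.
// Names are transliterated here; a real skill would list them in Russian.
const EXTRA_NAMES = ['slava', 'nadezhda', 'nadya'];

function extractChildName(array $req): ?string
{
    foreach ($req['request']['nlu']['entities'] ?? [] as $entity) {
        if (($entity['type'] ?? '') === 'YANDEX.FIO'
            && !empty($entity['value']['first_name'])) {
            return $entity['value']['first_name'];
        }
    }
    // Fallback: split the command into words and look for a known name.
    $words = preg_split('/\s+/u', $req['request']['command'] ?? '', -1, PREG_SPLIT_NO_EMPTY) ?: [];
    foreach ($words as $word) {
        if (in_array($word, EXTRA_NAMES, true)) {
            return $word;
        }
    }
    return null;
}
```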

Pronunciation of words

The existing speech synthesis testing tool does not always match what you actually hear in the skill. Take the trouble to build in a way to add tts to any of your answers. We relied on the Yandex engine, and now some phrases in our skill are hard to mark up for better pronunciation without major changes to the code. We will take this into account in the future.
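A sketch of such a mechanism: every reply goes through one helper that fills in `tts`, either from an explicit argument or by applying a shared pronunciation table to the text, so a fix for a badly pronounced word becomes one table entry instead of a code change. The table entry is illustrative and relies on the Yandex TTS convention of putting `+` before the stressed vowel; `reply` and `TTS_FIXES` are hypothetical names.

```php
<?php
// Sketch: send every reply through one helper so a tts variant can be
// attached to any phrase later without touching the dialogue logic.
const TTS_FIXES = [
    // "Snegurochka" (Snow Maiden): stress on the "u", marked with '+'.
    'Снегурочка' => 'Снег+урочка',
];

function reply(string $text, ?string $tts = null, bool $end = false): array
{
    return [
        'text' => $text,
        // An explicit tts wins; otherwise apply the shared pronunciation table.
        'tts' => $tts ?? strtr($text, TTS_FIXES),
        'end_session' => $end,
    ];
}
```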

Simple but fairly effective PHP functions for fuzzy string comparison helped a lot: similar_text() and levenshtein(); other languages have similar tools. And, of course, regular expressions: where would we be without them.

For example, these functions let us accept a partially correct answer to a riddle: one that was recognized inexactly or came in a different grammatical case.
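Here is a minimal sketch of that idea, not the code from our skill: an answer is accepted if the expected word occurs in the phrase, if some word of the phrase is within a small Levenshtein distance of it, or if `similar_text()` reports a high enough similarity percentage. The thresholds are illustrative assumptions. Both built-ins compare bytes, not characters, so for Cyrillic text in UTF-8, where a letter takes two bytes, the distance threshold would need to be roughly doubled.

```php
<?php
// Sketch: accept a riddle answer that is close enough to the expected word.
// levenshtein() and similar_text() work on bytes; thresholds are illustrative.

function matchesAnswer(string $heard, string $expected, int $maxDistance = 2, float $minPercent = 80.0): bool
{
    $heard = strtolower(trim($heard));
    $expected = strtolower($expected);
    if (strpos($heard, $expected) !== false) {
        return true; // the expected word appears in the phrase as is
    }
    foreach ((preg_split('/\s+/', $heard, -1, PREG_SPLIT_NO_EMPTY) ?: []) as $word) {
        if (levenshtein($word, $expected) <= $maxDistance) {
            return true; // a misheard letter or a different ending
        }
        similar_text($word, $expected, $percent);
        if ($percent >= $minPercent) {
            return true;
        }
    }
    return false;
}
```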

Of course, to NLP (natural language processing) specialists this will look naive, but, I repeat, this article is for beginners.

Current platform flaws

I will briefly outline the main complaints about Yandex.Dialogs that developers have raised in the chat.

Long and often seemingly illogical moderation. Your skill may fail moderation because, say, its name is too generic, for example “Weather in Crimea”, even though a skill with a similar name, “Weather in Severodvinsk”, already exists. As I understand it, this is because the requirements for names and activation phrases used to be simpler: someone else got lucky back then, and you will not. Look for a different phrase or try using a brand name.

The documentation does not always match reality. The platform is evolving quickly, the documentation does not keep up, and users and Yandex representatives sometimes interpret it differently.

So far, the platform provides only basic tools for debugging skills.

When you add a skill in the developer console, your webhook must respond correctly, otherwise you get a validation error, and you cannot see what the error was, what JSON was sent or what came back. Once you have managed to add the skill, you get a minimal panel that shows only the last request and response, and that is all. There is no way to check how the skill will actually work and sound on a device before moderation. There is a third-party emulator, but it is rather unstable, does not work in all browsers, and does not follow the current protocol.

Developers have other wishes too: smart home integration, identifying the speaker by voice, a choice of better-quality voices for skills, payment system integration, Yandex account integration (including using a skill across different devices under one user), and a well-thought-out system of private skills. But these features require careful work on security and usability, and I think Yandex will offer them once it can implement them properly. Problems with moderation, debugging and sometimes outdated documentation, however, can make it much harder for beginners to get comfortable with the platform.

It helps that the support chat is consistently responsive, and an equally experienced and helpful community has formed there, which helped me a lot and will help you too. Support reacts fairly quickly to many complaints with messages like “noted”, “we know about the documentation, we will fix it”, and “we are working on moderation”. OK, we look forward to it.

For a quick start, I recommend publishing a private skill. Private skills are currently checked only against minimal requirements:

- The name and activation phrase are checked, since their database is shared by all developers.

- The webhook must respond to requests correctly.

- The welcome message must say that the skill is private, so that a user who launches it by accident is informed.

Chat tip:

Once you have one published skill, you can use it to test your other skills while they wait for moderation: proxy requests from the published skill's webhook to the one under development, filtering by user_id.
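A sketch of that tip, assuming PHP on the published skill's server: requests whose `session.user_id` is on a list of test accounts are forwarded as is to the webhook under development, and its reply is returned to Alice; everyone else gets the published skill as usual. The user id, the development URL and the function names are hypothetical placeholders. Keep the forwarding timeout well under the 1.5-second limit.

```php
<?php
// Sketch of the chat tip: the published skill's webhook forwards requests
// from test accounts to the skill under development.
// Both constants are hypothetical placeholders.
const DEV_USER_IDS = ['your-test-user-id'];
const DEV_WEBHOOK = 'https://dev.example.com/alice-webhook';

function shouldProxy(array $req, array $devUserIds): bool
{
    return in_array($req['session']['user_id'] ?? '', $devUserIds, true);
}

// Forwards the raw JSON body; returns the reply body, or null on failure.
function forward(string $rawBody, string $url, float $timeoutSec = 1.0): ?string
{
    $ctx = stream_context_create(['http' => [
        'method' => 'POST',
        'header' => 'Content-Type: application/json',
        'content' => $rawBody,
        'timeout' => $timeoutSec,
    ]]);
    $reply = @file_get_contents($url, false, $ctx);
    return $reply === false ? null : $reply;
}

if (PHP_SAPI !== 'cli') {
    $raw = file_get_contents('php://input');
    $req = json_decode($raw, true);
    if (is_array($req) && shouldProxy($req, DEV_USER_IDS)) {
        $reply = forward($raw, DEV_WEBHOOK);
        if ($reply !== null) {
            header('Content-Type: application/json');
            echo $reply;
            exit;
        }
    }
    // Otherwise the published skill handles the request as usual.
}
```

If the development webhook does not answer, the code falls through to the published skill's own handling, so testers still get a reply.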

Conclusion

Overall, voice interfaces turned out to be a very interesting topic that will be increasingly in demand. I plan to dive deeper into it by reading specialized literature and the guidelines and tips of similar foreign services (Google, Amazon, Siri, etc.).

Let me remind you once again about Yandex's Alice School, in case this topic interests you as much as it does me.

Some more links on the topic:

- Yandex.Dialogs blog

- Yandex.Dialogs YouTube channel

- Easy dialogue

- The book Designing Voice User Interfaces

- Natasha, a library for extracting named entities

- A Telegram channel announcing new skills

- An informal catalog of skills