Hilbert curve vs Z-order

Repeatedly heard the opinion that of all sweeping curves . it is the Hilbert curve that is most promising for spatial indexing. This is motivated by the fact that it does not contain gaps and therefore, in a sense, is “well arranged”. Is this really so, and what does spatial indexing have to do with it, let's figure it out under the cut.

The final article from the cycle:

- Why the straightforward approach does not work with

spatial indexing by sweeping curves - Algorithm Presentation

- 3D and obvious optimizations

- 8D and perspectives in OLAP

- 3D on a sphere how it looks

- 3D on a sphere, benchmarks

- What about indexing non-point features?

The Hilbert curve has a remarkable property - the distance between two consecutive points on it is always equal to unity (the step of the current detail, to be more precise). Therefore, data with not very different values of the Hilbert curve are not far from each other (the opposite, by the way, is incorrect - the neighboring points of the mogets differ greatly in value). For the sake of this property, it is used, for example, for

- downsizing ( color palette management )

- increase access locality in multidimensional indexing ( here )

- in antenna design ( here )

- table clustering ( DB2 )

As for spatial data, the properties of the Hilbert curve can increase the locality of links and increase the effectiveness of the DBMS cache, as here , where it is recommended to supplement the table with a column with the calculated value and sort by this column.

In spatial search, the Hilbert curve is present in Hilbert R-trees , where the main idea (very rough) - when it comes time to split the page into two descendants - we sort all the elements on the page by centroid values and divide in half.

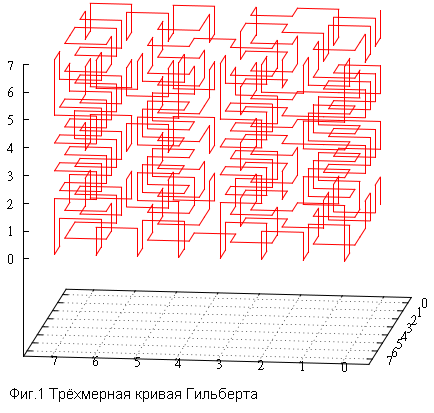

But we are more interested in another property of the Hilbert curve — this is a self-similar sweeping curve. That allows you to use it in an index based on a B-tree. We are talking about a way to number the discrete space.

- Let a discrete space of dimension N.

- Suppose also that we have some way of numbering all the points in this space.

- Then, for indexing point objects, it suffices to place the numbers of points at which these objects hit the index on the basis of the B-tree.

- The search in such a spatial index depends on the numbering method and ultimately comes down to sampling by index from the intervals of values.

- Noticeable curves are good for their self-similarity, which allows you to generate a relatively small number of such sub-intervals, while other methods (for example, the similarity of horizontal scanning) require a huge number of subqueries, which makes such spatial indexing ineffective.

So, where does this intuitive feeling come from that the Hilbert curve is good for spatial indexing? Yes, there are no gaps in it; the distance between any consecutive points is equal to the sampling step. So what? What is the connection between this fact and potentially high performance?

At the very beginning, we formulated a rule - a “good” numbering method minimizes the total perimeter of index pages. We are talking about the pages of the B-tree, which in the end will be data. In other words, index performance is determined by the number of pages you need to read. The fewer pages on average a reference size query, the higher the (potential) index performance.

From this point of view, the Hilbert curve looks very promising. But you cannot smear this intuitive feeling on bread, therefore we will conduct a numerical experiment.

- consider 2 and 3-dimensional spaces

- take square / cubic queries of different sizes

- for a series of random queries of the same type and size, we calculate the average / variance of the number of visible pages, in a series of 1000 queries

- we will proceed from the fact that a point lies in each node of space. You can sketch out random data and check on it, but then questions would arise about the quality of this random data. And so we have a completely objective indicator of “suitability”, albeit far from any real data set

- 200 elements are placed on the page, it is important that this is not a power of two, which would lead to a degenerate case

- compare the Hilbert curve and the Z-curve

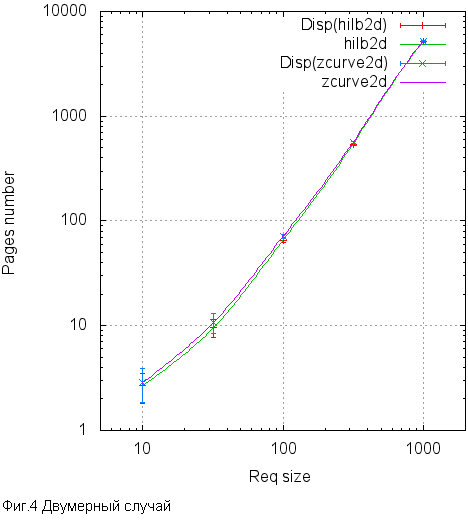

This is how it looks like graphs.

Now it’s worth at least out of curiosity to see how, with a fixed request size (2D 100X100), the number of read pages behaves depending on the page size.

Well visible “holes” in the Z-order, corresponding to the sizes of 128, 256 and 512. And the “holes” in 192, 384, 768. Not so big.

As we see, the difference can reach 10%, but almost everywhere it is within the statistical error. Nevertheless, everywhere the Hilbert curve behaves better than the Z-curve. Not much, but better. Apparently, these 10% are an upper estimate of the potential effect that can be achieved by going to the Hilbert curve. Is the game worth the candle? Let's try to find out.

Implementation.

The use of the Hilbert curve in spatial search encounters a number of difficulties. For example, the calculation of a key of 3 coordinates takes (the author) about 1000 cycles, about 20 times more than the same for zcurve. Perhaps there is some more effective method, in addition, there is an opinion that reducing the number of pages read pays for any complication of work. Those. a disk is a more expensive resource than a processor. Therefore, we will primarily focus on reading buffers, and we will just take note of the times.

Regarding the search algorithm . In short, it is based on two ideas -

- The index is a B-tree with its pagination. If we want logarithmic access to the first element of the output, we must use the “divide and conquer” strategy, like a binary search. Those. it is necessary to divide the request into subqueries on average in each iteration.

But unlike the binary search, in our case, the partition does not halve the number of elements to be disposed, but occurs according to the geometric principle.

It follows from the self-similarity of sweeping curves that if we take a square (cubic) lattice that leaves the origin with a step of two, then any elementary cube in this lattice contains one interval of values. Therefore, dissecting the search query into the nodes of this lattice, we can split the original arbitrary subquery into continuous intervals. Then each of these intervals is subtracted from the tree. - If this process is not stopped, it will cut the original request down to intervals of one element, this is not what we would like. The stopping criterion is as follows - the request is cut into subqueries until it fits entirely on one page of the B-tree. As soon as it fits, it’s easier to read the whole page and filter out the unnecessary. How to find out what fit? We have the minimum and maximum points of the subquery, as well as the current and last points of the page.

Indeed, at the beginning of processing the subquery, we do a search in the B-tree for the minimum point of the subquery and get a sheet page where the search stopped and the current position in it.

All this is equally true for the Hilbert curve and for the Z-order. However:

- the Hilbert curve is out of order - the lower left point of the query is not necessarily less than the upper right (for the two-dimensional case)

- not only that, the maximum and / or minimum of the subquery is not necessarily in one of its corners, it does not necessarily lie within the query

- therefore, the real range of values can be much wider than the search query, in the worst case, this is the entire extent of the layer, if you managed to get into the area around the center

This leads to the need to modify the original algorithm.

To begin with, the interface for working with the key has changed.

- bitKey_limits_from_extent - this method is called once at the beginning of the search to determine the real boundaries of the search. The input takes the coordinates of the search extent, gives the minimum and maximum values of the search range.

- bitKey_getAttr - on an input accepts an interval of values of a subquery and also initial coordinates of a search query. Returns the mask of the following values

- baSolid - this subquery lies entirely within the search extent, it does not need to be split, but values from the interval can be entirely placed in the output

- baReadReady - the minimum key of the sub-query interval lies inside the search extent, you can search in the B-tree. In the case of zcurve, this bit is always cocked.

- baHasSmth - if this bit is not cocked, this subquery is located entirely outside the search query and should be ignored. In the case of Z-order, this bit is always cocked.

The modified search algorithm looks like this:

- We start the queue of subqueries, initially in this queue there is one single element - the extent (as well as the minimum and maximum range keys) found by calling bitKey_limits_from_extent

- While the queue is not empty:

- We get the item from the top of the queue

- Get information about it using bitKey_getAttr

- If baHasSmth is not cocked , ignore this subquery

- If baSolid is cocked , we subtract the entire range of values directly in the resultset and proceed to the next subquery

- If baReadReady is cocked , we search in the B-tree for the minimum value in the subquery

- If baReadReady is not cocked or the stop criterion does not work for this request (it does not fit on one page)

- Calculate the minimum and maximum values of the subquery range

- Find the discharge of the key, which we will cut

- We get two subqueries

- We put them in the queue, first the one with the larger values, then the second

Consider an example request in the extent [524000,0,484000 ... 525000,1000,485000].

This is how the execution of the request for Z-order looks like - nothing more.

Subqueries - all generated subqueries, results - found points,

lookups - queries to the B-tree.

Because the range crosses 0x80000, the start extent is the size of the entire extent of the layer. All the most interesting merged in the center, we will consider in more detail.

The “clusters” of subqueries at the borders are noteworthy. The mechanism of their occurrence is approximately the following — for example, the next section cut off a narrow strip, for example, a width of 3, from the search extent extent. Moreover, this strip does not even have dots, but we don’t know that. And we can check only at the moment when the minimum value of the subquery range falls within the search extent. As a result, the algorithm will cut the strip down to subqueries of size 2 and 1, and only then will it be able to query the index to make sure that there is nothing there. Or there is.

This does not affect the number of pages read, all such passes through the B-tree fall on the cache, but the amount of work done is impressive.

Well, it remains to find out how many pages were actually read and how long it took. The following values were obtained on the same data and in the same manner as before . Those. 100 000 000 random points in [0 ... 1 000 000, 0 ... 0, 0 ... 1 000 000].

The measurements were carried out on a modest virtual machine with two cores and 4 GB of RAM, so the times do not have absolute value, but the numbers of pages read can still be trusted.

Times are shown on the second runs, on a hot server and virtual machine. The number of buffers read is on a freshly raised server.

| NPoints | Nreq | Type | Time (ms) | Reads | Hits |

|---|---|---|---|---|---|

| 1 | 100,000 | zcurve rtree hilbert | .36 .38 .63 | 1.13307 1.83092 1.16946 | 3.89143 3.84164 88.9128 |

| 10 | 100,000 | zcurve rtree hilbert | .38 .46 .80 | 1.57692 2.73515 1.61474 | 7.96261 4.35017 209.52 |

| 100 | 100,000 | zcurve rtree hilbert | .48 .50 ... 7.1 * 1.4 | 3.30305 6.2594 3.24432 | 31.0239 4.9118 465.706 |

| 1,000 | 100,000 | zcurve rtree hilbert | 1.2 1.1 ... 16 * 3.8 | 13.0646 24.38 11.761 | 165.853 7.89025 1357.53 |

| 10,000 | 10,000 | zcurve rtree hilbert | 5.5 4.2 ... 135 * 14 | 65.1069 150.579 59.229 | 651.405 27.2181 4108.42 |

| 100,000 | 10,000 | zcurve rtree hilbert | 53.3 35 ... 1200 * 89 | 442.628 1243.64 424.51 | 2198.11 186.457 12807.4 |

| 1,000,000 | 1,000 | zcurve rtree hilbert | 390 300 ... 10000 * 545 | 3629.49 11394 3491.37 | 6792.26 3175.36 36245.5 |

Npoints is the average number of points in the

Nreq output - the number of queries in the

Type series - ' zcurve ' is a fixed algorithm with hypercubes and numeric parsing using its internal structure ;; ' rtree '- gist only 2D rtree; ' hilbert ' - the same algorithm as for zcurve, but for the Hilbert curve, (*) - in order to compare the search time, it was necessary to reduce the series by 10 times so that the pages fit in the cache

Time (ms) - average execution time Request

Reads - The average number of reads per request. The most important column.

Shared hits - number of hits to buffers

* - the scatter of values is caused by high instability of the results, if the necessary buffers were successfully in the cache, the first number was observed, in the case of mass misses - the second. In fact, the second number is characteristic of the declared Nreq, the first is 5-10 times smaller.

conclusions

It is little surprise that the “knee-high” assessment of the benefits of the Hilbert curve gave a fairly accurate assessment of the real situation.

It can be seen that in queries where the size of the output is comparable to or larger than the page size, the Hilbert curve begins to behave better in the number of misses past the cache. The gain, however, is a few percent.

On the other hand, the integral operating time for the Hilbert curve is approximately twice worse than that of the Z-curve. Suppose the author did not choose the most efficient way to calculate the curve, for example, something could be done more accurately. But after all, the calculation of the Hilbert curve is objectively heavier than the Z-curve; to work with it, one really needs to take significantly more actions. The feeling is that to win back this slowdown with a few percent gain in reading pages will fail.

Yes, we can say that in a highly competitive environment, each page reading is more expensive than tens of thousands of key calculations. But the number of successful hits in the cache on the Hilbert curve is noticeably greater, and they go through serialization and are also worth something in a competitive environment.

Total

So the epic story about the possibility and features of spatial and / or multidimensional indices based on self-similar sweeping curves built on B-trees has come to an end.

During this time (besides the fact that such an index is realizable), we found out that in comparison with the R-tree traditionally used in this field

- it is more compact (objectively - due to better average page occupancy)

- builds faster

- does not use heuristics (i.e. this is an algorithm)

- not afraid of dynamic changes

- On small and medium queries it works faster.

It plays on large requests (only with an excess of memory for the page cache) - where hundreds of pages participate in the output.

In this case, the R-tree is faster because filtering occurs only on the perimeter, the processing of which sweeping curves is much harder.

Under normal conditions of competition for memory and on such queries, our index is noticeably faster than the R-tree. - Without changing the algorithm, it works with keys of any (reasonable) dimension

- In this case, it is possible to use heterogeneous data in one key, for example, time, object class and coordinates. In this case, you do not need to configure any heuristics, in the worst case, if the data ranges vary greatly, performance will drain.

- Spatial objects ((multidimensional) intervals) are treated as points, spatial semantics are processed within the boundaries of search extents.

- It is allowed to mix interval and exact data in one key, again, all the semantics are brought out, the algorithm does not change

- All this does not require intervention in the SQL syntax, the introduction of new types, ...

If you want, you can write a wrapping extension PostgreSQL. - And yes, it is laid out under the BSD license, which allows for almost complete freedom of action.