How the AI sensory system was created in Thief: The Dark Project

- Translation

“Senses” are a useful metaphor in game development for understanding, designing, and discussing the part of the AI that gathers information about important objects in a simulated game environment. Non-player characters in a realistic three-dimensional world are usually people, animals, or other creatures with sight and hearing, so the metaphor fits naturally.

However, the metaphor should not be taken too literally. Despite the apparently physical nature of an AI in a game world, the analogy is neither physical nor neurological. In a game, the line between “sensing” and “knowing” is blurry. Sensing covers perceiving the presence of other entities in the game as well as evaluating them and knowing about them, and game logic can be tied directly to those entities.

A game's sensory system must be built to serve the game's design and to be efficient to run. It does not need to be complicated; it only needs to be robust and to support interesting gameplay. Its results must be perceptible to the player and understandable by him. Few games require AI senses of taste, touch, or smell; sight and hearing are used most. Used well, senses can be an invaluable tool for producing more interesting behavior from simple finite state machines.

This article describes an approach to designing and implementing a high-fidelity sensory system for AI in a first-person game with an emphasis on stealth. The techniques come from developing the AI for Thief: The Dark Project and from studying the Half-Life code. The first part of the article outlines the basic concepts of AI senses, using Half-Life as a motivating example. The second part describes the stricter requirements that stealth game design places on a sensory system and the system built for Thief.

Case Study: Half-Life

Stealth and senses are not central to Half-Life. However, because of its strong emphasis on tactical combat, the game needs a logical sensory system, which makes it a good example for exploring the basics of AI senses. The AI in Half-Life has sight and hearing, plus a system for managing information about the entities it detects. The game contains interesting examples of simple senses being used to create compelling behavior.

In a simple sensory system, the AI periodically “looks” at and “listens” to the world. In the real world, stimuli reach the sense organs passively, whether or not their source intends it; in a game, sensing is an active operation. The AI queries the world according to its interests and uses a set of rules to decide whether it sees or hears another entity. These checks emulate real senses while keeping the amount of work down, so that most of the processing is spent on the aspects that matter to the game mechanics.

For example, the basic Half-Life sensory routine, which is called periodically, looks like this:

```
If I'm close to the player, then...

Begin looking
    Gather a list of objects within a given distance
    For each object found...
        If I want to look at it and
        It is within my view cone and
        A ray can be cast from my eyes to its eyes, then...
            If it is the player and
            I have been told not to see the player until he sees me and
            He does not see me
                End looking
            Otherwise
                Set various signals depending on my relationship with the object I saw
End looking

Begin listening
    For each sound being played...
        If the sound reaches my ears...
            Add the sound to the list of heard sounds
            If the sound is a real sound...
                Set a signal saying that I heard something
            If the sound is a "smell" pseudo-sound
                Set a signal saying that I smelled something
End listening
```

The first concept illustrated by this pseudocode is that senses are closely tied to the properties of the AI, its relationship to the object, and how relevant the AI is to the player's experience. This is partly motivated by optimization, but it is made possible by the game mechanics. In Half-Life's design, an AI that is far from the player does not matter and does not need to sense the world. Even when it is near the player, the AI only needs to look at objects it will later react to, with fear or hatred.

This logic also shows the vision system designed as a combination of view distance, view cone, line of sight, and eye position (Fig. 1). Each AI has a limited two-dimensional field of view, within which it casts rays toward objects of interest. An unobstructed ray means the object is visible.

Figure 1

Two points are worth noting here. First, the sensory checks run in order from cheapest to most expensive. Second, for the player's enjoyment, the vision system can be configured so that an enemy does not see the player until the player has seen it. In a first-person game the player has only a weak sense of his own body, so being noticed first by an opponent often feels like cheating.

The most interesting part is this restriction on the AI seeing the player before the player sees the AI. It exists only to make the game more fun, and it is an example of how high-level game goals can be achieved simply and elegantly with elementary techniques in low-level systems.
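As a rough illustration, the look pass from the pseudocode above can be sketched in Python. This is a minimal sketch under stated assumptions, not the Half-Life implementation: `Entity`, `in_view_cone`, `look`, and the callbacks `wants_to_look` and `ray_clear` are hypothetical names, the world is treated as 2D (top view), and the ray test is supplied by the caller because it depends on the engine. The checks run from cheapest to most expensive, and the “wait until seen” rule is modeled, by assumption, as skipping the player while the AI is outside the player's own view cone.

```python
import math
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Entity:
    name: str
    x: float
    y: float
    facing: float            # heading in radians (top-down 2D)
    is_player: bool = False


def in_view_cone(viewer: Entity, target: Entity, half_angle: float) -> bool:
    """True if target lies within half_angle of the viewer's facing direction."""
    dx, dy = target.x - viewer.x, target.y - viewer.y
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return True
    # Cosine of the angle between the facing vector and the direction to the target.
    cos_angle = (math.cos(viewer.facing) * dx + math.sin(viewer.facing) * dy) / dist
    return cos_angle >= math.cos(half_angle)


def look(me: Entity,
         candidates: Iterable[Entity],
         view_dist: float,
         half_angle: float,
         wants_to_look: Callable[[Entity], bool],
         ray_clear: Callable[[Entity, Entity], bool],
         wait_till_seen: bool = False) -> List[Entity]:
    """One look pass (hypothetical); returns the entities this AI currently sees."""
    seen = []
    for other in candidates:
        dx, dy = other.x - me.x, other.y - me.y
        if dx * dx + dy * dy > view_dist * view_dist:   # cheapest: squared distance
            continue
        if not wants_to_look(other):                     # cheap: relationship filter
            continue
        if not in_view_cone(me, other, half_angle):      # moderate: cone test
            continue
        if not ray_clear(me, other):                     # expensive: line-of-sight ray
            continue
        # Fairness rule: ignore the player until I am inside the player's view cone
        # (the player's cone reuses half_angle here, which is an assumption).
        if other.is_player and wait_till_seen and not in_view_cone(other, me, half_angle):
            continue
        seen.append(other)
    return seen
```

Because the raycast is the last test, most candidates are rejected by arithmetic before any engine query is made, which is the point of ordering the checks by cost.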

The hearing logic is much simpler than the vision logic. The core of the hearing component is defining and tuning what it means for a sound to be audible to the AI. In Half-Life, hearing is a straightforward heuristic: the sound's volume multiplied by the AI's “hearing sensitivity” gives the distance within which the AI hears the sound. More interesting is the use of hearing as a template for collecting general information about the world. In this example, the AI “hears” pseudo-sounds, that is, fictional smells coming from nearby corpses.
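The volume-times-sensitivity rule, and the reuse of hearing for smells, can be sketched as follows. This is a hedged illustration, not Half-Life code: `Sound`, `listen`, and the `is_smell` flag are hypothetical names, and distances are 2D for brevity.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sound:
    x: float
    y: float
    volume: float           # audible radius at sensitivity 1.0, in world units
    is_smell: bool = False  # pseudo-sound standing in for an odor (e.g. a corpse)


def listen(ear_x: float, ear_y: float, sensitivity: float,
           sounds: List[Sound]) -> Tuple[List[Sound], bool, bool]:
    """Return (heard sounds, 'heard something' signal, 'smelled something' signal)."""
    heard: List[Sound] = []
    heard_noise = smelled = False
    for s in sounds:
        hearing_range = s.volume * sensitivity   # volume x sensitivity = hearing distance
        if math.hypot(s.x - ear_x, s.y - ear_y) <= hearing_range:
            heard.append(s)
            if s.is_smell:
                smelled = True
            else:
                heard_noise = True
    return heard, heard_noise, smelled
```

Smells need no separate sense: they travel through the same code path and only set a different signal.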

Senses as a core gameplay element: Thief

Thief: The Dark Project and its sequels present a lightly scripted game world in which the central game mechanic, stealth, runs against the conventions of traditional first-person 3D games. The Thief player moves carefully, avoids conflict, and is penalized for killing people, yet can easily be killed. The gameplay centers on how the AI's sensory knowledge of the player changes as the player moves through the game space. The player must make his way through areas filled with stationary, wandering, and patrolling AIs, trying to go unnoticed by hiding in the shadows and staying quiet. Although the AI senses are built on the same basic principles as in Half-Life, the mechanics of stealth, evasion, and surprise attacks require a more sophisticated sensory system.

The main requirement was a very flexible sensory system that works across a wide range of conditions. On the surface, stealth gameplay is built around the themes of sneaking, slipping away, catching enemies by surprise, and light and shadow. One thing that makes it interesting is that it widens the gray zone between safety and danger, which is very thin in most first-person games. The game tries to sustain tension by keeping the player on the edge between states, and then escalates sharply once the line is crossed. This calls for a wide range of sensory states rather than the clear-cut extremes of “player seen” and “player not seen”.

The second requirement is that the sensory system must run much more often, and for many more objects, than in a typical first-person shooter. During play, the player can change the state of the world in ways the AI should notice, even when the player is not nearby. Some mechanics, such as hiding bodies, depend on a robust system. Together these two requirements create an interesting challenge, because they conflict with another requirement every game developer faces: performance.

Finally, both players and designers must understand the inputs and outputs of the sensory system, and the outputs must match what the inputs lead them to expect. This led to a design with a small number of inputs that the player can perceive and discretely evaluated results.

Expanding the range of senses

At its core, the sensory system described here is very similar to the one in Half-Life: a vision system based on visibility cones and raycasts, plus a simple hearing system, both of which support optimization, game mechanics, and pseudo-sensory data. As in Half-Life, most of the data gathering is independent of the decision-making that uses it. The system extends some of these basic principles and adds new ones.

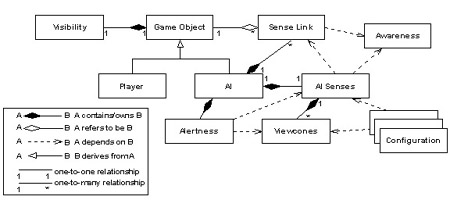

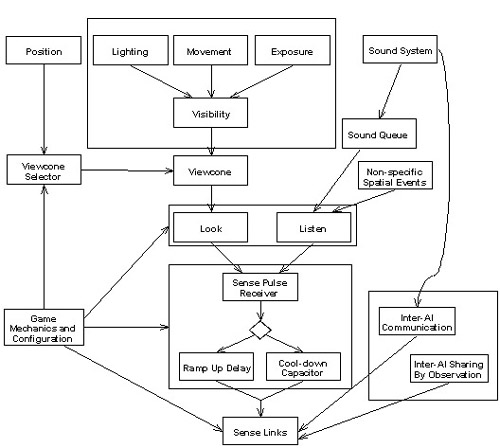

Figure 2. Main components and relationships

The design of the system, and the way data flows through it, aim at a flexible information-gathering system that produces stable, understandable output.

In this system, the AI senses are organized around “awareness”. Awareness is expressed as a set of discrete states that represent how aware the AI is of an object's presence, location, and identity. These discrete states are the only part of the system's internals exposed to designers. In the high-level AI they correlate with the character's alertness; in the Thief AI, the set of awareness states mirrors the set of alertness states. The AI's alertness state is fed back into the sensory system in various ways to change how it behaves.

Awareness is stored in sense links, which connect an AI to another entity in the game or to a position in space. These links hold the details of sensory information that matter to the game (time, position, line of sight, and so on), as well as cached values used to reduce computation between “thinking” cycles. In effect, sense links are the AI's primary memory. Through both spoken communication and observation, sense links can be propagated between AIs, with controls that limit how far knowledge cascades across a level. After core processing, they can also be manipulated by game logic.
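A sense link can be pictured as a small record per (AI, target) pair. The sketch below is hypothetical: the four `Awareness` levels, the `SenseLink` fields, and the `shared_copy` rule (drop one awareness level per hand-off and stop after `max_hops` hand-offs) are assumptions chosen to illustrate a capped knowledge cascade, not Thief's actual data layout.

```python
from dataclasses import dataclass, replace
from enum import IntEnum
from typing import Optional, Tuple


class Awareness(IntEnum):
    """Hypothetical discrete awareness states (the real Thief set is not listed here)."""
    NONE = 0
    LOW = 1        # something might be there
    MODERATE = 2   # something is there, roughly located
    HIGH = 3       # located and identified


@dataclass
class SenseLink:
    """One AI's memory of one entity or position (hypothetical fields)."""
    target: str
    awareness: Awareness
    last_time: float                            # when the target was last sensed
    last_pos: Tuple[float, float, float]        # where it was last sensed
    had_line_of_sight: bool
    hops: int = 0                               # AIs this knowledge has passed through
    cached_visibility: Optional[float] = None   # reused between think cycles

    def shared_copy(self, max_hops: int) -> Optional["SenseLink"]:
        """Copy handed to another AI by speech or observation, degraded one level.
        Returns None once the cascade limit is reached or nothing is known."""
        if self.hops >= max_hops or self.awareness == Awareness.NONE:
            return None
        return replace(self,
                       awareness=Awareness(self.awareness - 1),
                       had_line_of_sight=False,   # second-hand knowledge
                       hops=self.hops + 1,
                       cached_visibility=None)    # cache belongs to the original observer
```

Capping the hop count is one simple way to keep a single sighting from alerting an entire level.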

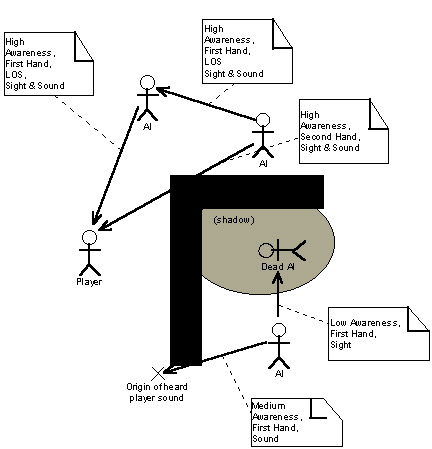

Figure 3. Sense links

Each object of interest in the game has its own visibility value, independent of any observer. Depending on the game state and the nature of the object, the level of detail and the update rate of this value are scaled, which reduces the CPU time spent computing it.

Visibility is determined by the object's lighting, movement, and exposure (size and separation from other objects). How it is computed is closely tied to gameplay requirements. For example, the player's lighting is tied to the light level of the floor under his feet, which gives the player an objective, visible way to judge how safe he is. These values, and the overall visibility obtained by summing them, are stored as analog values in the range 0..1.
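A minimal sketch of an observer-independent visibility value, assuming each factor is first normalized to 0..1 and then combined as a weighted sum clamped to 0..1. The weights, `RUN_SPEED`, and the names `VisibilityInputs` and `visibility` are hypothetical tuning choices; the article does not give the real formula.

```python
from dataclasses import dataclass


def clamp01(v: float) -> float:
    return max(0.0, min(1.0, v))


@dataclass
class VisibilityInputs:
    floor_light: float   # light level sampled at the floor under the object, 0..1
    speed: float         # current speed, world units per second
    exposure: float      # size / separation from nearby geometry, 0..1


# Hypothetical tuning constants (not from the article).
LIGHT_WEIGHT = 0.6
MOVE_WEIGHT = 0.25
EXPOSURE_WEIGHT = 0.15
RUN_SPEED = 10.0         # speed at which the movement term saturates


def visibility(inp: VisibilityInputs) -> float:
    """Observer-independent visibility in 0..1 (weighted sum of normalized factors)."""
    light = clamp01(inp.floor_light)
    movement = clamp01(inp.speed / RUN_SPEED)
    exposure = clamp01(inp.exposure)
    return clamp01(LIGHT_WEIGHT * light + MOVE_WEIGHT * movement + EXPOSURE_WEIGHT * exposure)
```

Because the value does not depend on any observer, it can be computed once per object and shared by every AI that looks at it.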

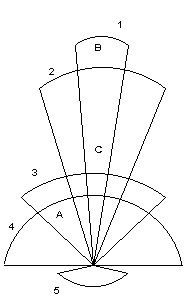

Visibility Cones

Instead of a single two-dimensional field of view, Thief implements an ordered set of three-dimensional visibility cones. Each cone is defined by an XY angle, a Z angle, a distance, and a set of parameters describing general visual acuity, sensitivity to different kinds of stimuli (for example, movement and light), and how these relate to the AI's alertness. The visibility cones are oriented along the direction the AI's head is facing.

At any given moment, only the first visibility cone that contains a noticed object is used in the sensory calculations for that object. To keep the system simple and tunable, each visibility cone is treated as producing the same output regardless of where in the cone the object is.

For example, the AI shown in Figure 4 has five visibility cones. An object at point A is evaluated with cone 3. Objects at points B and C are both evaluated with cone 1, so identical visibility values at those two points produce the same result.
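The “first containing cone wins” rule can be sketched as an ordered scan. This is an illustration under assumptions: `VisibilityCone`, `find_cone`, and the four-cone `CONES` set (including a motion-only rear cone) are hypothetical and do not reproduce the five cones in Figure 4.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VisibilityCone:
    name: str
    xy_half_angle: float      # horizontal half-angle, radians
    z_half_angle: float       # vertical half-angle, radians
    max_dist: float
    acuity: float             # overall sharpness, 0..1
    light_sens: float         # weight of lighting in this cone
    motion_sens: float        # weight of movement in this cone
    yaw_offset: float = 0.0   # 0 = along the head's facing, pi = looking backward


def wrap_angle(a: float) -> float:
    """Wrap an angle into [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def find_cone(head: Vec3, head_yaw: float, target: Vec3,
              cones: Sequence[VisibilityCone]) -> Optional[VisibilityCone]:
    """Return the first cone (in order) that contains the target, or None."""
    dx, dy, dz = target[0] - head[0], target[1] - head[1], target[2] - head[2]
    horiz = math.hypot(dx, dy)
    dist = math.hypot(horiz, dz)
    bearing = math.atan2(dy, dx)
    z_angle = abs(math.atan2(dz, horiz))
    for cone in cones:
        xy_angle = abs(wrap_angle(bearing - (head_yaw + cone.yaw_offset)))
        if dist <= cone.max_dist and xy_angle <= cone.xy_half_angle and z_angle <= cone.z_half_angle:
            return cone   # first match wins; position inside the cone is ignored
    return None


r = math.radians
CONES = [  # hypothetical cone set, ordered from most to least specific
    VisibilityCone("focus",      r(15), r(20), 30.0, 1.0, 1.0, 1.0),
    VisibilityCone("near",       r(45), r(30), 15.0, 0.8, 0.8, 1.0),
    VisibilityCone("peripheral", r(80), r(40), 10.0, 0.5, 0.4, 1.0),
    VisibilityCone("rear",       r(60), r(40),  3.0, 0.3, 0.0, 1.0, yaw_offset=math.pi),
]
```

The rear cone ignores light entirely and responds only to motion, which is one way to express the “spider sense” idea in data rather than in code.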

Figure 4. Cones of visibility, top view

When checking objects of interest in the world, the sensory system first determines which cone, if any, contains each object. The object's own visibility value and that cone are then passed through the “look” heuristic, which produces a discrete awareness value.
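One possible shape for the “look” heuristic is sketched below. It assumes the object's visibility is available as separate lighting and movement components in 0..1 and that the matching cone supplies acuity and sensitivity weights; the scoring formula, the `THRESHOLDS`, the alertness bonus, and the names `Awareness` and `look_heuristic` are all hypothetical, since the article treats the real heuristic as a tuned black box.

```python
from enum import IntEnum


class Awareness(IntEnum):
    NONE = 0
    LOW = 1
    MODERATE = 2
    HIGH = 3


# Hypothetical thresholds on the 0..1 score, checked from highest to lowest.
THRESHOLDS = ((0.7, Awareness.HIGH), (0.4, Awareness.MODERATE), (0.15, Awareness.LOW))


def look_heuristic(light: float, movement: float,
                   acuity: float, light_sens: float, motion_sens: float,
                   alertness: int = 0) -> Awareness:
    """Filter an object's visibility components through one cone into a discrete level.
    alertness: 0 (calm) .. 3 (searching); a more alert AI needs a lower raw score."""
    score = acuity * max(light_sens * light, motion_sens * movement)
    bonus = 0.05 * alertness
    for threshold, level in THRESHOLDS:
        if score + bonus >= threshold:
            return level
    return Awareness.NONE
```

Taking the stronger of the two stimuli means a well-lit but motionless player and a dark but running player can both be noticed, each only by cones that are sensitive to that stimulus.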

Multiple visibility cones are used to implement things like direct and peripheral vision, or to distinguish objects directly ahead and on the same Z plane from objects ahead but above or below. Cone 5 in the figure is a good example of using a low-level system to express a high-level concept. This “fake vision” cone points backward. It is tuned to be sensitive to movement and gives the AI a “spider sense”: the character can tell when someone moves too close behind him, even if the player is completely silent.

Information pipeline

The sensory system is organized as a series of components, each of which takes a limited, well-defined set of data and outputs an even more limited value. The processing cost of each stage can be scaled independently according to its importance to gameplay. In terms of performance, such a design of multiple scalable layers can be made very efficient.

Figure 5. Information pipeline

The core sensory system implements a heuristic that takes visibility information, sound events, existing awareness links, designer and programmer configuration data, and the AI's current state, and outputs a single awareness value for each object of interest. This heuristic can be treated as a “black box” that the AI programmer keeps tuning throughout development.

Vision is implemented by filtering the object's visibility value through the matching visibility cone, which adjusts the result according to each AI's properties. In most cases a single raycast is enough to check line of sight. In more interesting cases, such as computing the player's visibility, several rays are cast to determine the spatial relationship between the AI and the object, and the result is used to weight the object's visibility.
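A minimal sketch of multi-ray weighting, assuming the weight is simply the fraction of unobstructed rays to a few sample points on the target's body. `weighted_visibility`, `SAMPLE_HEIGHTS`, and the `ray_clear` callback (standing in for the engine's raycast) are hypothetical; the article does not say how Thief combines the rays.

```python
from typing import Callable, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical sample heights on the target's body, relative to its origin (head .. feet).
SAMPLE_HEIGHTS = (1.7, 1.2, 0.5, 0.05)


def weighted_visibility(eye: Vec3, target_origin: Vec3, base_visibility: float,
                        ray_clear: Callable[[Vec3, Vec3], bool],
                        heights: Sequence[float] = SAMPLE_HEIGHTS) -> float:
    """Scale the target's visibility by the fraction of unobstructed rays."""
    x, y, z = target_origin
    points = [(x, y, z + h) for h in heights]
    clear = sum(1 for p in points if ray_clear(eye, p))
    return base_visibility * clear / len(points)
```

With a low wall hiding the lower half of the body, for example, a target with visibility 0.8 would be weighted down to 0.4, so partial cover reduces how noticeable the player is rather than simply toggling it.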

Thief uses a complex sound system in which both sounds that are actually played and sounds that are never played are tagged with semantic data and propagated through the world's 3D geometry. When a sound “reaches” an AI's ears, it arrives from the direction it would come from in the real world and carries an attenuated awareness value. If the sound is speech, it sometimes carries information from other AIs. Such sounds join other awareness-changing inputs (like smell in Half-Life) as awareness relations to a position in space.

Awareness pulses

Once the vision and hearing operations are complete, their results, in the form of awareness levels, are passed to a method that receives periodic pulses of raw sensory input and turns them into a simple awareness relation, all of whose details are stored in the associated sense link. Unlike the analog data used earlier in the pipeline, the data at this stage is entirely discrete. The result of this process is that sense links are created, updated, or cleared with the correct awareness value.

This happens in three stages. First, the vision and hearing inputs are compared. One of them is declared dominant, and its value becomes the awareness value. The auxiliary data produced by each sense is cleaned up and merged into a single sense event.

Second, if the awareness pulse is higher than the current level, it is passed through a time filter that decides whether awareness actually rises. The delay is a property of the current state only, not of the target state. This is how reaction delays and player-forgiveness factors are implemented. Once the time threshold is passed, awareness jumps to the target state without passing through the intermediate states.

Third, if the new pulse is lower than the current level, a “capacitor” is used to lower awareness smoothly and gradually. Awareness decays over time, passing through every intermediate state. This smooths the AI's behavior after the object of interest is no longer sensed; the AI's alertness, however, is not governed by this mechanism.
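The three stages can be sketched as a small per-link state machine. This is a hedged illustration, not Thief's code: the `Awareness` levels, `AwarenessTracker`, and the timing constants `RISE_DELAY` and `DECAY_STEP` are hypothetical. It does follow the rules stated above: the stronger sense dominates, the rise delay is keyed by the current state and awareness jumps straight to the target, and decay steps down one level at a time.

```python
from dataclasses import dataclass
from enum import IntEnum


class Awareness(IntEnum):
    NONE = 0
    LOW = 1
    MODERATE = 2
    HIGH = 3


# Hypothetical tuning: seconds a stronger pulse must persist, keyed by the CURRENT state.
RISE_DELAY = {Awareness.NONE: 0.5, Awareness.LOW: 1.0,
              Awareness.MODERATE: 0.3, Awareness.HIGH: 0.0}
DECAY_STEP = 4.0   # hypothetical seconds per one-level drop


@dataclass
class AwarenessTracker:
    level: Awareness = Awareness.NONE
    rise_timer: float = 0.0
    decay_timer: float = 0.0

    def pulse(self, vision: Awareness, hearing: Awareness, dt: float) -> Awareness:
        # Stage 1: the stronger sense dominates.
        target = max(vision, hearing)
        if target > self.level:
            # Stage 2: time filter; the delay belongs to the current state, not the target.
            self.decay_timer = 0.0
            self.rise_timer += dt
            if self.rise_timer >= RISE_DELAY[self.level]:
                self.level = target          # jump straight to the target state
                self.rise_timer = 0.0
        elif target < self.level:
            # Stage 3: the "capacitor" drains awareness one level per DECAY_STEP.
            self.rise_timer = 0.0
            self.decay_timer += dt
            while self.decay_timer >= DECAY_STEP and self.level > target:
                self.decay_timer -= DECAY_STEP
                self.level = Awareness(self.level - 1)
        else:
            # Steady input: a brief glimpse that is not sustained is forgiven.
            self.rise_timer = self.decay_timer = 0.0
        return self.level
```

Resetting the rise timer whenever the pulse does not stay above the current level is what lets a player who is glimpsed only briefly go unnoticed.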

If the object of interest stops generating pulses, the senses are given a measure of “free knowledge” about it, scaled by the AI's state. This mechanism makes the AI appear to reason about where the object went after it leaves view, while hiding the cheat from the player.
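One way to picture “free knowledge” is a short grace window after contact is lost, during which the AI's remembered position quietly follows the target's true position. The sketch assumes this interpretation; `TrackedTarget`, `believed_position`, and the per-alertness `FREE_KNOWLEDGE_TIME` values are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical: seconds of free knowledge, indexed by alertness 0 (calm) .. 3 (searching).
FREE_KNOWLEDGE_TIME = (0.0, 0.5, 1.5, 3.0)


@dataclass
class TrackedTarget:
    last_sensed_pos: Vec3
    last_sensed_time: float   # updated by real sensing, not by free knowledge


def believed_position(track: TrackedTarget, true_pos: Vec3,
                      now: float, alertness: int) -> Vec3:
    """Where the AI thinks the target is after the target stopped generating pulses."""
    window = FREE_KNOWLEDGE_TIME[alertness]
    if now - track.last_sensed_time <= window:
        # Inside the window the AI is allowed to read the real position (the hidden cheat).
        track.last_sensed_pos = true_pos
    return track.last_sensed_pos
```

An alert guard therefore heads toward the corner the player actually ducked behind, while a calm one searches only where the player was last seen.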

Conclusion

The system described in this article was designed for a single-player game with a software renderer, so all information about game objects was available from the renderer. In a game engine with a client-server architecture and a purely hardware renderer, this may not be possible; for example, computing how brightly an object is lit may not be so straightforward. Such a system therefore has to be implemented carefully and deliberately, because it depends on information from other systems.

In addition, although it is efficient, the system was designed for a game that relies heavily on its output. In Thief it accounts for most of the CPU time allocated to all AI computation, so it can take time away from pathfinding, tactical analysis, and other decision-making processes.

Nevertheless, any game can benefit from a sensory system. By gathering and filtering information about the environment and processing it properly, a sensory system can produce interesting AI behavior without significantly complicating the decision-making state machines. A robust sensory system also makes it possible to implement “preconscious” behaviors by controlling and altering the underlying knowledge input. Finally, a multi-state sensory system gives the player an AI opponent or ally that shows varied, complex reactions and behaviors without adding unnecessary complexity to the underlying decision-making machines.

Further research

Because the Dark Engine, on which Thief was built, is heavily data-driven, most of the concepts presented here and all of the configuration details can be explored independently with the tools available at http://www.thief-thecircle.com.