In context: a news aggregator on Android with a backend. The Java web crawling library crawler4j

Introductory part (with links to all articles)

An integral part of the news gathering system is a robot for crawling sites (a crawler, or "spider"). Its job is to track changes on these sites and write new data to the system's database (DB).

No completely ready-made, suitable solution existed, so we had to choose from the existing projects one that met the following criteria:

- ease of setup;

- the ability to be configured to crawl multiple sites;

- low resource requirements;

- no additional infrastructure (work coordinators, a separate database for the spider, additional services, etc.).

The chosen solution was crawler4j, a fairly popular robot for crawling sites. It does pull in many libraries for analyzing the fetched content, but this affects neither its speed nor its resource consumption. It uses Berkeley DB as its link database, which is created in a configurable directory for each crawled site.

As the customization mechanism, the library's developer chose a hybrid of two behavioral patterns: “Strategy” (the client decides which links and sections of the site to analyze) and “Observer” (while the site is crawled, page information such as the address, format, content and metadata is passed to the client, which is free to decide what to do with it).

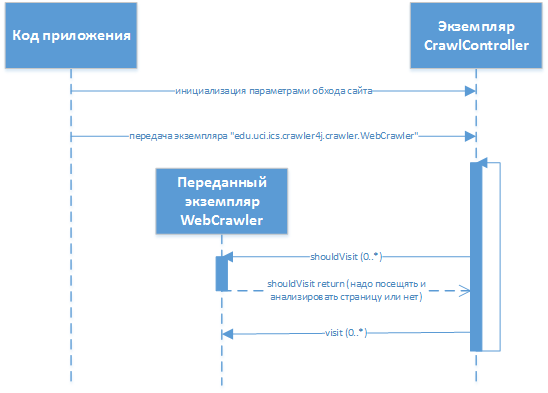

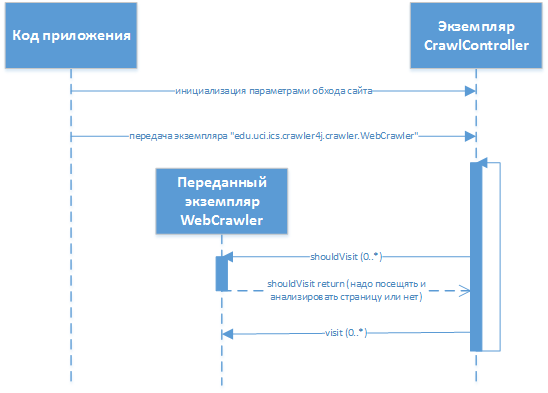
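To make the “Strategy” plus “Observer” hybrid more concrete, here is a minimal sketch in plain Java. It does not use the crawler4j API: «CrawlClient», «ToyCrawler» and «NewsOnlyClient» are hypothetical names, and the “site” is just an in-memory map in which every whitespace-separated token starting with «/» counts as a link.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical client contract (not the crawler4j API): one object plays both roles.
interface CrawlClient {
    // Strategy: the client decides whether a discovered link should be followed.
    boolean shouldVisit(String url);

    // Observer: the crawler reports every fetched page to the client.
    void visit(String url, String content);
}

// Toy crawler over an in-memory "site" (url -> page body).
final class ToyCrawler {
    private final Map<String, String> site;

    ToyCrawler(Map<String, String> site) {
        this.site = site;
    }

    void crawl(String seed, CrawlClient client) {
        Set<String> seen = new HashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        seen.add(seed);
        queue.add(seed);
        while (!queue.isEmpty()) {
            String url = queue.poll();
            String content = site.get(url);
            if (content == null) {
                continue; // broken link: nothing to report
            }
            client.visit(url, content);
            for (String token : content.split("\\s+")) {
                // A link is queued only if the client accepts it and it is new.
                if (token.startsWith("/") && client.shouldVisit(token) && seen.add(token)) {
                    queue.add(token);
                }
            }
        }
    }
}

// Example client: follows only the news section and records what it was shown.
final class NewsOnlyClient implements CrawlClient {
    final List<String> visited = new ArrayList<>();

    @Override
    public boolean shouldVisit(String url) {
        return url.startsWith("/news");
    }

    @Override
    public void visit(String url, String content) {
        visited.add(url);
    }
}
```

The crawler itself knows nothing about news: all site-specific decisions stay in the client, which is what makes it possible to version the client code separately.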

In fact, for a developer the “spider” looks like a library that is added to the project and given the interface implementations needed to customize its behavior. The developer extends the library class «edu.uci.ics.crawler4j.crawler.WebCrawler» (overriding the «shouldVisit» and «visit» methods), and the subclass is then passed to the library. The interaction during operation is shown in the diagram, where «edu.uci.ics.crawler4j.crawler.CrawlController» is the main class of the library through which all interaction takes place (crawl setup, passing in the crawler code, obtaining status information, start/stop).

We had not implemented site parsing before, so I immediately ran into a series of problems. While solving them, several decisions had to be made about the implementation and about how to process the crawl data:

- the code for analyzing the fetched content and the implementation of the “Strategy” pattern live in a separate project, versioned independently of the “spider” itself;

- the actual content and link analysis code is written in Groovy, which makes it possible to change the logic without restarting the spiders (the «recompileGroovySource» option of «org.codehaus.groovy.control.CompilerConfiguration» is enabled); the corresponding «edu.uci.ics.crawler4j.crawler.WebCrawler» implementation actually contains only the Groovy interpreter, which processes the data passed to it;

- data extraction in the “spider” is deliberately incomplete: only the comments, the “header” and the “footer” are removed from each page (there is no point in spending 50% of each page's volume on them), and everything else is stored in MongoDB for subsequent analysis (this allows the page analysis to be rerun without re-crawling the sites);

- the key fields of each news item (“date”, “heading”, “subject”, “author”, etc.) are extracted later, from the MongoDB database, and their completeness is monitored: when a certain number of errors is reached, a notification is sent that the scripts need adjusting (a sure sign that the structure of the site has changed).
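The completeness monitoring from the last item can be sketched roughly as follows. This is not the project's actual code: «CompletenessMonitor», its field list and its threshold are hypothetical, and a news item is modeled as a plain map instead of a MongoDB document.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical monitor: counts news items with missing key fields and sends
// a single notification once the error count reaches the threshold.
final class CompletenessMonitor {
    private final List<String> requiredFields;
    private final int errorThreshold;
    private final Consumer<String> notifier;
    private int errors;
    private boolean notified;

    CompletenessMonitor(List<String> requiredFields, int errorThreshold, Consumer<String> notifier) {
        this.requiredFields = requiredFields;
        this.errorThreshold = errorThreshold;
        this.notifier = notifier;
    }

    // Returns true if every required field is present and non-blank.
    boolean check(Map<String, String> item) {
        for (String field : requiredFields) {
            String value = item.get(field);
            if (value == null || value.trim().isEmpty()) {
                errors++;
                if (errors >= errorThreshold && !notified) {
                    notified = true;
                    notifier.accept("Extraction scripts may need adjusting: "
                            + errors + " incomplete items");
                }
                return false;
            }
        }
        return true;
    }

    int errorCount() {
        return errors;
    }
}
```

A caller would feed each extracted item to «check» and plug any notification channel (mail, chat, log) in as the «notifier».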

Change in library code

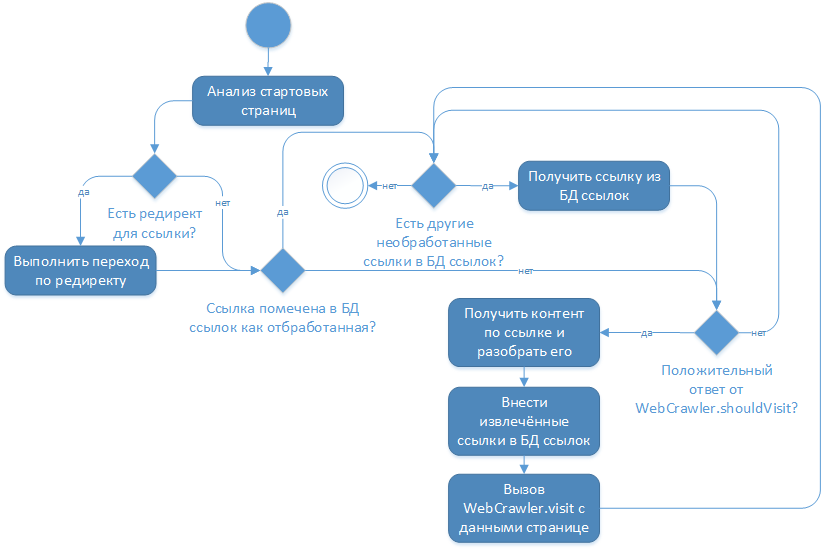

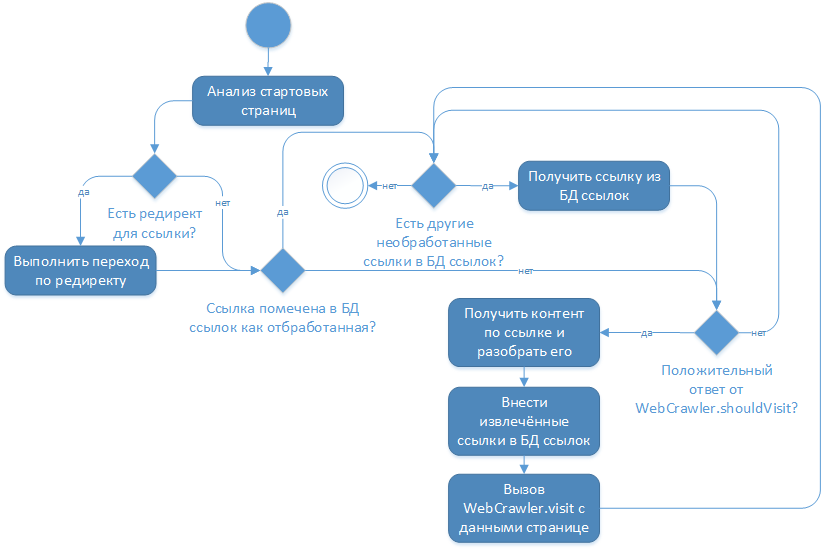

The main problem for me was an architectural decision by the crawler4j developer: the assumption that the pages of a site do not change. In other words, the crawler's logic is as follows: once a link is in the links database, its page is not fetched again.

Having studied the library's source code, I realized that no setting could change this logic, so I decided to fork the main project. In the fork, before the step “Add extracted links to the links database”, there is an additional check of whether a link should be added to the links database at all: the start pages of the site are never entered into it, so whenever they reach the main processing loop they are fetched and parsed again, yielding links to the latest news.
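The effect of that check can be shown with a toy model. This is a sketch, not code from the fork: «RecrawlSketch» is a hypothetical name, a «HashSet» stands in for the Berkeley DB link store, and skipping links that point back to a start page is my own simplification.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Toy model of the modified crawl loop (hypothetical, not the fork's code).
final class RecrawlSketch {
    // Stands in for the persistent links database; it survives between runs.
    private final Set<String> linkDb = new HashSet<>();
    private final Set<String> startPages;

    RecrawlSketch(Set<String> startPages) {
        this.startPages = startPages;
    }

    // One crawl run over the site (url -> outgoing links).
    // Returns the pages fetched during this run, in fetch order.
    List<String> run(Map<String, List<String>> site) {
        List<String> fetched = new ArrayList<>();
        // Start pages are queued on every run because they are never in linkDb.
        Deque<String> queue = new ArrayDeque<>(startPages);
        while (!queue.isEmpty()) {
            String url = queue.poll();
            fetched.add(url);
            for (String link : site.getOrDefault(url, List.of())) {
                if (startPages.contains(link)) {
                    continue; // the additional check: start pages never enter the DB
                }
                if (linkDb.add(link)) {
                    queue.add(link); // only links not seen in any earlier run
                }
            }
        }
        return fetched;
    }
}
```

On a second run only the start page and the links that appeared since the previous run are fetched; everything already in the links database is skipped.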

However, this modification required a change in how the library is used: the main methods now have to be launched periodically, which was easy to implement with the Quartz library. When there was no fresh news on the start pages, the method finished in a couple of seconds (after fetching the start pages, parsing them and finding only already visited links); otherwise it wrote the latest news to the database.
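The periodic launch itself is simple. The sketch below deliberately uses the JDK's «ScheduledExecutorService» as a stand-in for Quartz so that it runs without extra dependencies; «PeriodicCrawlSketch» and «runPeriodically» are hypothetical names, and the fixed number of runs exists only to make the example terminate.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// JDK-only stand-in for a Quartz job: runs one crawl pass at a fixed delay.
final class PeriodicCrawlSketch {

    // Runs crawlOnce `runs` times with delayMillis between the end of one
    // pass and the start of the next; returns the number of completed passes.
    static int runPeriodically(Runnable crawlOnce, int runs, long delayMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        CountDownLatch done = new CountDownLatch(runs);
        AtomicInteger completed = new AtomicInteger();
        scheduler.scheduleWithFixedDelay(() -> {
            if (done.getCount() == 0) {
                return; // enough passes already; wait for shutdown
            }
            try {
                crawlOnce.run();
            } catch (RuntimeException e) {
                // Swallowed so that one failed pass does not cancel the schedule.
            }
            completed.incrementAndGet();
            done.countDown();
        }, 0, delayMillis, TimeUnit.MILLISECONDS);
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
        } finally {
            scheduler.shutdownNow();
        }
        return completed.get();
    }
}
```

A fixed delay (rather than a fixed rate) means a slow pass with a lot of fresh news never overlaps with the next one.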

Thanks for your attention!