Zabbix Review: how to organize code review for monitoring configuration

Code review is an engineering practice that comes from agile development methodology. It is an analysis (inspection) of code intended to find errors, shortcomings, and style inconsistencies, and to check whether the code actually solves the problem.

Today I will talk about how we organized the review process for monitoring configuration in Zabbix. The article will be useful to anyone who works with the Zabbix monitoring system, whether in a large team or alone, even if your reaction is “ten hosts, what is there to review?”.

What problems we solve

To monitor our internal services and build infrastructure, we use Zabbix. We have a naming convention (we use a role model: Role templates for assigning roles, Profile templates for monitoring), but no dedicated monitoring team. There are senior engineers with a lot of monitoring experience, plus engineers and junior engineers. The infrastructure is about 500 hosts and about 150 templates: small, but very dynamic.

This infrastructure supports and automates the company's development processes. Besides maintaining it, we also develop automation and integration tools ourselves, so we have some experience with development processes and understand them from the inside.

As the number of employees and the number of changes made to the monitoring system grew, we ran more and more often into typical errors that were hard to track down:

- Items and triggers bound directly to hosts, outside the templates (so some of the hosts are left without monitoring).

- Wrong trigger thresholds (we agreed on 3 GB of free space, but a typo gives us a 34 GB trigger that never works as intended).

- Violations of the naming convention, which leave us with an incomprehensible trigger name such as Script failed (although it actually means that the update delivery system is not working) or a template called Gitlab Templates (monitoring what, the server or the agent?).

- A trigger disabled temporarily, for tests. As a result, we missed a warning about the infrastructure and work came to a halt.

In the world of programmers, all these problems are solved quite simply: linters and code review. So why not adopt these best practices for reviewing Zabbix configuration? Let's do it!

We have already written about the benefits of code review, with examples: “Introducing code inspections into the development process”, “A practical example of introducing code inspection”, and “Code inspection. Results”.

Why you might need to review the Zabbix configuration:

- To check that hosts and templates are named the way the team has agreed (the naming convention).

- To train new employees and check that they did the task as discussed.

- To transfer knowledge between experienced employees.

- To notice triggers that were disabled accidentally or temporarily.

- To notice wrong values in an item or trigger, such as last(0) instead of min(5m).

Add your own problems in the comments, and we will try to figure out together how a review could solve them.

How Zabbix handles change tracking

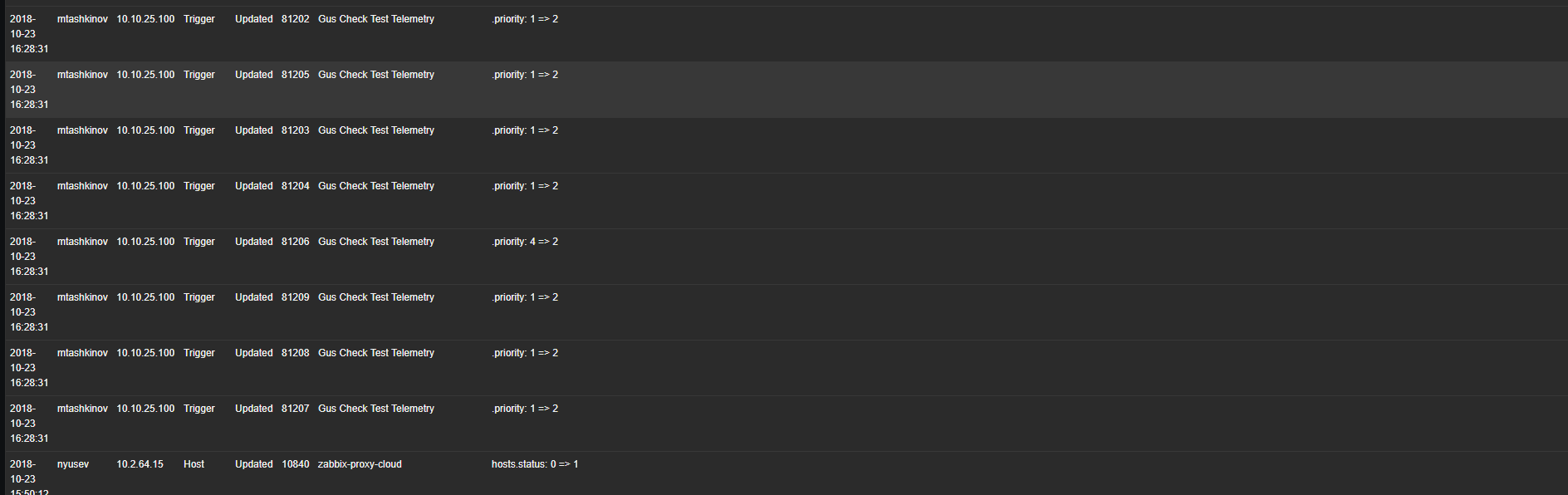

Zabbix has an Audit subsystem, which we use to see who made configuration changes. Its significant drawback is the sheer number of stored events, since it records every user action.

Imagine that every code change stayed in the git history: you spent an hour choosing a variable name, tried 40 options, and every one of them is now saved as a separate commit. Then you have to review the history of those commits without being able to compare the initial and final versions. Awful, right?

Zabbix Audit works exactly like this. You can track changes with it, but it does not let you quickly see the difference (diff) between two states of the system (say, at the beginning and at the end of the week). In addition, its actions are split by type: additions, changes, and deletions have to be viewed in separate windows. You can see an example on the Audit tab of your own Zabbix (or look at the screenshot). It is hard to tell what the initial state was, what the current state is, and what changed during the week. Things get worse when there are dozens of changes in a week.

We wanted a mechanism that would let us:

- Take a snapshot of the system state once a week, or after finishing a task that changes the monitoring logic.

- Compare the current configuration snapshot with the previous one (diff).

- Automatically check the naming convention.

- Check the quality of the work, give recommendations and tips, and discuss solutions.

- Check that the changes are legitimate, that is, everything was done in accordance with the task.

- Use tools familiar to developers: git, diff, merge requests.

- Roll back to a given state of the system without losing data (so a backup is not suitable).

- Cover the Zabbix entities: hosts, templates, actions, macros, screens, maps.
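
The automatic naming convention check from this list could start life as a very small linter over exported objects. The sketch below is only an illustration: the pattern (a Role or Profile prefix followed by dot-separated CamelCase parts) and the dictionary layout are assumptions made for this example, not the exact rules or data format we use.

```python
import re

# Hypothetical convention: "Role." or "Profile." followed by dot-separated
# CamelCase parts, e.g. "Profile.DevOps.Test". Adjust to your own rules.
TEMPLATE_NAME = re.compile(r"^(Role|Profile)(\.[A-Z][A-Za-z0-9]*)+$")


def lint_template(template):
    """Return a list of human-readable problems found in one template dict."""
    problems = []
    name = template.get("name", "")
    if not TEMPLATE_NAME.match(name):
        problems.append(f"template name {name!r} does not follow the convention")
    for trigger in template.get("triggers", []):
        # Assumed layout: status "1" marks a disabled trigger.
        if str(trigger.get("status", "0")) == "1":
            problems.append(f"trigger {trigger.get('name')!r} is disabled")
    return problems


if __name__ == "__main__":
    sample = {
        "name": "Gitlab Templates",
        "triggers": [{"name": "Script failed", "status": "1"}],
    }
    for problem in lint_template(sample):
        print(problem)
```

A check like this cannot judge whether a name is meaningful, so it complements the human review rather than replacing it.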

Now let's talk about how we implemented this mechanism and how it can be useful for your own Zabbix infrastructure.

Setting up a Zabbix configuration review

We store the Zabbix configuration in the following formats:

- Original XML: exported with the standard Zabbix Export. We use it for hosts, templates, and screens. It has some peculiarities:

  - XML is hard to read, and changes in it are hard to review;

  - it contains all fields, including empty ones;

  - it contains a date field with the export date, which we cut out.

- Raw JSON: Zabbix cannot export some object types (actions, media types), but they are important and we want to see their changes, so we take the raw data from the Zabbix API and save it as JSON.

- Readable YAML: we process the exported XML and the raw JSON and save them as plain, human-readable YAML, which makes large volumes of changes easy to work through. We apply a little processing along the way:

  - We delete fields that have no value. This is debatable, because we can overlook a field that is empty although it should be filled in, for example the trigger's problem description (trigger.description). After some discussion we decided that removing empty fields is still the better choice, since there are too many of them. If you like, you can add exceptions for certain empty fields so that they are not deleted.

  - We delete dates: they change on every export and would show up in the merge request as a change for every host.

  - Optionally, you can add other operations that enrich the data; for example, an action stores a userid instead of the user name.
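
The processing described above (dropping empty fields and dates, with optional exceptions) can be sketched as a recursive clean-up of already parsed data. This is a minimal illustration and not the code from our repository: the key names in VOLATILE_KEYS and KEEP_EMPTY and the sample data are assumptions chosen for the example.

```python
# Keys dropped unconditionally; "date" stands for the export timestamp.
VOLATILE_KEYS = {"date"}
# Hypothetical exceptions: empty fields we still want to see in the review.
KEEP_EMPTY = {"description"}


def clean(node):
    """Recursively drop volatile keys and empty values from exported data."""
    if isinstance(node, dict):
        result = {}
        for key, value in node.items():
            if key in VOLATILE_KEYS:
                continue
            value = clean(value)
            # Empty strings, None, and empty containers count as "no value".
            if value in ("", None, [], {}) and key not in KEEP_EMPTY:
                continue
            result[key] = value
        return result
    if isinstance(node, list):
        return [clean(item) for item in node]
    return node


if __name__ == "__main__":
    exported = {
        "date": "2019-01-01T00:00:00Z",
        "templates": [
            {"name": "Profile.DevOps.Test", "description": "", "url": "", "macros": []}
        ],
    }
    print(clean(exported))
```

Keeping the exception list explicit means that an empty description stays visible in the review while the rest of the empty fields disappear.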

We set up three git repositories (we use GitLab for storage, but any VCS will do):

- zabbix-review-export: holds the export code (Python scripts) and the parameters for the gitlab-ci jobs.

- zabbix-xml: stores the XML and JSON, all in a single branch. Reviewing it is hard and slow. It is used to restore the Zabbix configuration to its state at a given point in time.

- zabbix-yaml: our main repository. Here we open merge requests, look at the changes, discuss the decisions made, and merge into master if there are no comments.

We save configuration data in these repositories according to the following rules:

- Objects are grouped into folders by type; the full list is at https://gitlab.com/devopshq/zabbix-review-export#supported-objects

- One object, one file.
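
The two rules above can be sketched as follows. This is an illustration under assumptions, not our export script: the function names are hypothetical, JSON is used only so that the example needs nothing beyond the standard library, and each object is assumed to carry a name field.

```python
import json
import re
from pathlib import Path


def safe_filename(name):
    """Replace characters that are awkward in file names with underscores."""
    return re.sub(r"[^\w.\-]+", "_", name).strip("_")


def save_objects(root, object_type, objects):
    """Write each object to <root>/<object_type>/<name>.json and return the paths."""
    folder = Path(root) / object_type
    folder.mkdir(parents=True, exist_ok=True)
    written = []
    for obj in objects:
        path = folder / f"{safe_filename(obj['name'])}.json"
        # Sorted keys and a fixed indent keep the output stable between
        # exports, so the diff shows only real changes.
        text = json.dumps(obj, indent=2, sort_keys=True) + "\n"
        path.write_text(text, encoding="utf-8")
        written.append(path)
    return written


if __name__ == "__main__":
    for path in save_objects("export", "templates", [{"name": "Profile.DevOps.Test"}]):
        print(path)
```

A real export would also need to clear each folder before writing, so that files of objects deleted in Zabbix disappear from the repository.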

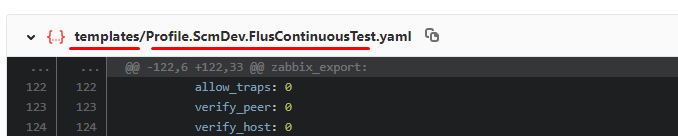

Now we can clearly see which type of object has changed and exactly which object it is; in the example below, the template Profile.ScmDev.FlusContinuousTest has changed.

Let's look at some examples

To view the changes, we use the merge request mechanism in GitLab.

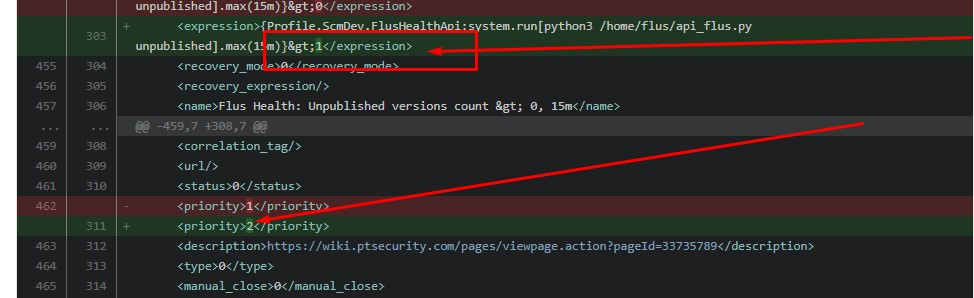

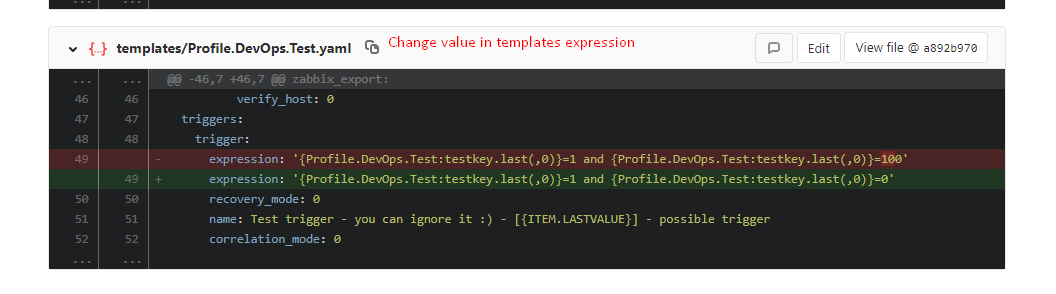

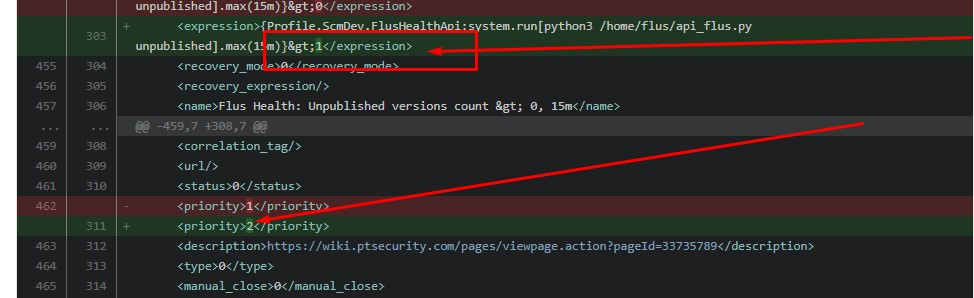

The template Profile.DevOps.Test was changed: its trigger expression is different. The template is in the templates folder:

The trigger's expression and priority were changed:

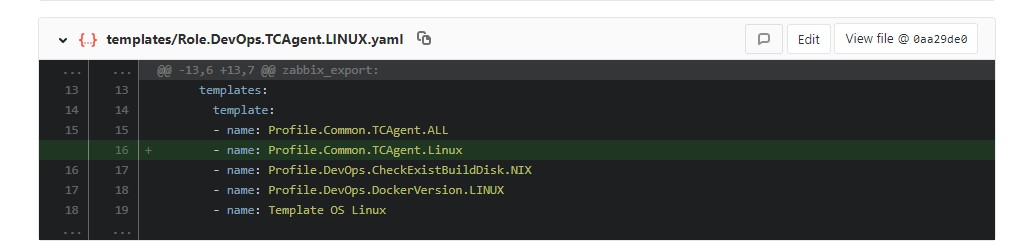

One template was linked to another:

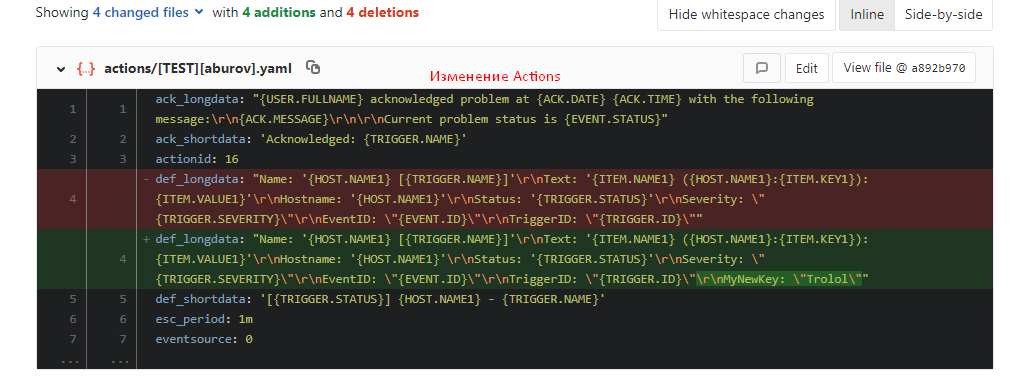

An action was changed: a new line was added at the end of the text:

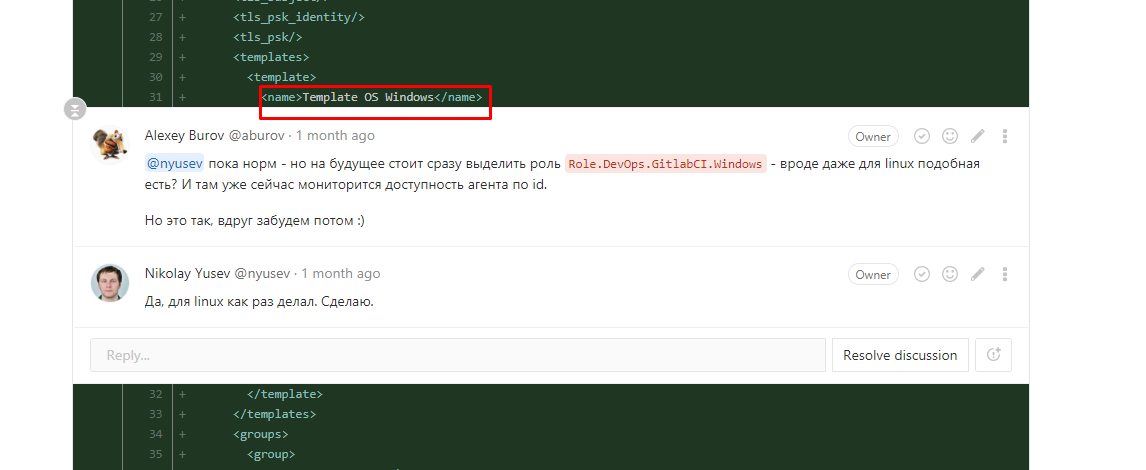

An example of a discussion in a merge request (just like programmers have!): you can see that a standard template was linked directly to a host, whereas it would be better to define a separate role for future use. The screenshot is from an old review, when we still used the XML representation of the configuration.

In general, everything is simple:

- A new host or other object was added: a new file appears.

- A host or other object was changed: we look at the diff.

- An object was deleted: its file is deleted.
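
In practice git and the merge request do this work for us, but the three cases above are easy to illustrate with the standard library. In the sketch below a snapshot is assumed to be a plain dictionary mapping a file path to its text; the paths and the trigger expressions are made up for the example.

```python
import difflib


def compare_snapshots(old, new):
    """Classify files as added, removed, or changed between two snapshots.

    Each snapshot is a dict mapping a file path to its text content.
    """
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    changed = sorted(p for p in set(old) & set(new) if old[p] != new[p])
    return added, removed, changed


def show_diff(path, old_text, new_text):
    """Return a unified diff for one changed file."""
    lines = difflib.unified_diff(
        old_text.splitlines(),
        new_text.splitlines(),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
        lineterm="",
    )
    return "\n".join(lines)


if __name__ == "__main__":
    old = {"templates/Profile.DevOps.Test.yaml": "expression: free<34G\n"}
    new = {
        "templates/Profile.DevOps.Test.yaml": "expression: free<3G\n",
        "hosts/build-01.yaml": "name: build-01\n",
    }
    added, removed, changed = compare_snapshots(old, new)
    print("added:", added, "removed:", removed)
    for path in changed:
        print(show_diff(path, old[path], new[path]))
```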

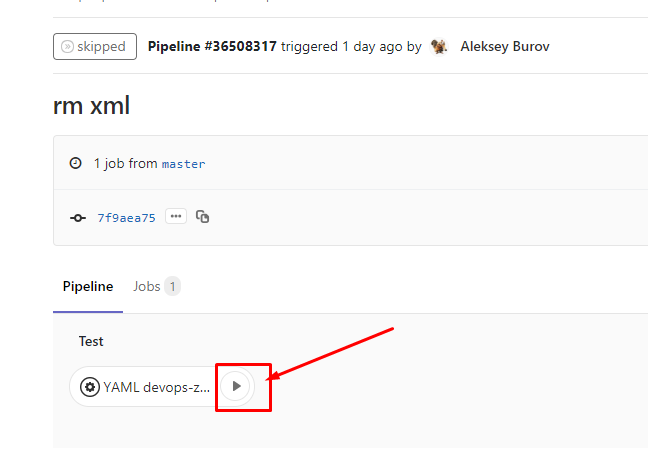

Suppose you have finished a task and want to ask a colleague to check that you have not forgotten anything. To request a review, go to the zabbix-review-export repository and start the manually triggered gitlab-ci job.

We assign the merge request to a colleague, who looks through, discusses, and corrects the monitoring infrastructure code.

Once a week a new review is started to catch small changes. A gitlab-ci schedule (Schedule) exports the configuration and saves it to the git repository as a new commit, and the monitoring guru reviews the changes.

Sounds nice, but you have to try it

Now we will explain how to set up this Zabbix configuration review system (we love open source and try to share our experience with the community).

There are two ways to use it:

- Run the export script by hand: run the script, look at the changes, then run git add * && git commit && git push. This option suits rare changes or a monitoring system that you work on alone.

- Automate with gitlab-ci: then you only need to click the start button (see the screenshot above). This option suits large, lazy teams or frequent changes.

Both options are described in the https://gitlab.com/devopshq/zabbix-review-export repository. Everything you need is stored there: the scripts, the gitlab-ci settings, and a README.md that explains how to set them up in your infrastructure.

To get started (or if you have no gitlab-ci infrastructure), try the first option in manual mode: run the zabbix-export.py script to export (back up) the configuration, then run git add * && git commit && git push on your work machine. When you get tired of that, move on to the second option and automate the automation!

Problems and possible improvements

At the moment the changes are anonymous: to find out who made a change, you have to use the Audit system, which is painful. It is not that bad, though: Audit is rarely needed, and a message in the team chat is usually enough to find the right person.

Another problem: if you change an item or trigger on a host, the change is not included in the XML. That means someone can disable all the triggers on a particular host, or lower their priority, and nobody will notice and correct it! We are waiting for a fix in https://support.zabbix.com/browse/ZBX-15175

We have not yet come up with an automatic restore mechanism. Suppose a template or host has been changed heavily, and we realize that the changes are wrong and everything has to be put back the way it was. Today we find the right XML for that host and import it manually through the UI, whereas we would like to simply click a button such as “Roll back template TemplateName to the state at commit commit-hash”.
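
Until such a button exists, the manual step of finding the right XML can at least be scripted, because git can print any file as it was at a given commit (git show commit:path). The sketch below assumes a local clone of the XML repository; the function names, the commit hash, and the file path are hypothetical, and the import into Zabbix itself still has to be done by hand.

```python
import subprocess


def git_show_args(commit, path):
    """Build the git command that prints a file as it was at a given commit."""
    # The path is relative to the repository root.
    return ["git", "show", f"{commit}:{path}"]


def read_file_at_commit(repo_dir, commit, path):
    """Return the content of `path` at `commit` in the clone at `repo_dir`."""
    result = subprocess.run(
        git_show_args(commit, path),
        cwd=repo_dir,
        check=True,  # raise CalledProcessError if git fails
        capture_output=True,
        text=True,
    )
    return result.stdout


if __name__ == "__main__":
    # Hypothetical commit hash and path, shown only to illustrate the call.
    print(" ".join(git_show_args("abc1234", "templates/TemplateName.xml")))
```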

Two-way synchronization is possible too: changes to the YAML would be applied to the Zabbix configuration, so you would not have to open the Zabbix web interface at all. We came across a similar project on GitHub, but it quickly faded and the community did not pick up the idea; apparently it is not so easy to express in YAML what you can click together in the web interface. So we settled on one-way interaction.

The ideal option would be to build this configuration-as-code storage into Zabbix itself, even if only in XML format, the way it is done in the TeamCity CI server: configurations edited through the UI are committed on behalf of the user who changed them. The result is a very handy tool for viewing changes, and it also solves the problem of anonymous changes.

Try it

Start exporting your Zabbix configuration, commit it to a repository (a local one is enough), wait a week, and run the export again. Now the changes are under your control! https://gitlab.com/devopshq/zabbix-review-export

If you would like to see this functionality in Zabbix out of the box, please vote for the issue https://support.zabbix.com/browse/ZBXNEXT-4862

100% uptime to everyone!