Support for Visual Studio 2017 and Roslyn 2.0 in PVS-Studio: sometimes using ready-made solutions is not so easy

In this article I want to talk about the problems that the PVS-Studio developers faced when supporting the new version of Visual Studio. I will also try to answer a question: why is supporting our C# analyzer, which is based on a "ready-made solution" (in this case, Roslyn), sometimes more expensive than supporting our "self-written" C++ analyzer?

With the release of Visual Studio 2017, Microsoft introduced a large number of innovations for its "flagship" IDE. These include:

- support for C# 7.0;

- new versions of .NET Core / .NET Standard;

- new features from the C++11 and C++14 standards;

- IntelliSense enhancements for many of the supported languages;

- "lightweight" loading of projects and new tools for tracking the performance of IDE extensions;

- a new modular installation system, and much more.

PVS-Studio 6.14, the version that supports Visual Studio 2017, was released just 10 days after the release of the IDE. We had started work on supporting the new VS much earlier, at the end of last year. Of course, far from all the innovations in Visual Studio affected PVS-Studio; nevertheless, the latest release of the IDE turned out to be especially labor-intensive for us in terms of supporting it in all components of our product. The most affected was not our "traditional" C++ analyzer (support for the new version of Visual C++ was added fairly quickly), but the components responsible for interacting with the MSBuild build system and the Roslyn platform (on which our C# analyzer is based).

In addition, this was the first new version of Visual Studio released since the C# analyzer appeared in PVS-Studio (we released it in parallel with the first Roslyn release in Visual Studio 2015) and since the C++ analyzer on Windows became more closely integrated with the MSBuild build system. Because of the difficulties encountered in updating these components, support for the new VS became the most costly in the history of our product.

What Microsoft solutions does PVS-Studio use

Most likely, you know that PVS-Studio is a static analyzer for C, C++ and C#, running on Windows and Linux. What does PVS-Studio consist of? First of all, of course, it is a cross-platform C++ analyzer and a set of (mostly) cross-platform utilities for integrating it into various build systems.

However, on Windows most of our users rely on the Microsoft software development stack, i.e. Visual C++ / C#, Visual Studio, MSBuild, etc. For them, we provide tools for working with the analyzer from the Visual Studio environment (an IDE plugin) and a command-line utility for checking C++ / C# MSBuild projects. Our VS plugin uses the same utility "under the hood". This utility analyzes the structure of projects directly, using the API provided by MSBuild itself. Our C# analyzer is based on the .NET Compiler Platform (Roslyn) and so far is available only to Windows users.

So, on the Windows platform PVS-Studio uses "native" Microsoft tools to integrate into Visual Studio, to analyze the build system and to analyze C# code. With the release of the new version of Visual Studio, all of these components were updated as well.

What has changed for us with the release of Visual Studio 2017

In addition to updated versions of MSBuild and Roslyn, Visual Studio 2017 contains a number of innovations that greatly affected our product. As it happened, several of our components stopped working, even though they had been used without significant changes across several previous releases of Visual Studio, and some of them had been working since Visual Studio 2005 (which we no longer support). Let us consider these changes in more detail.

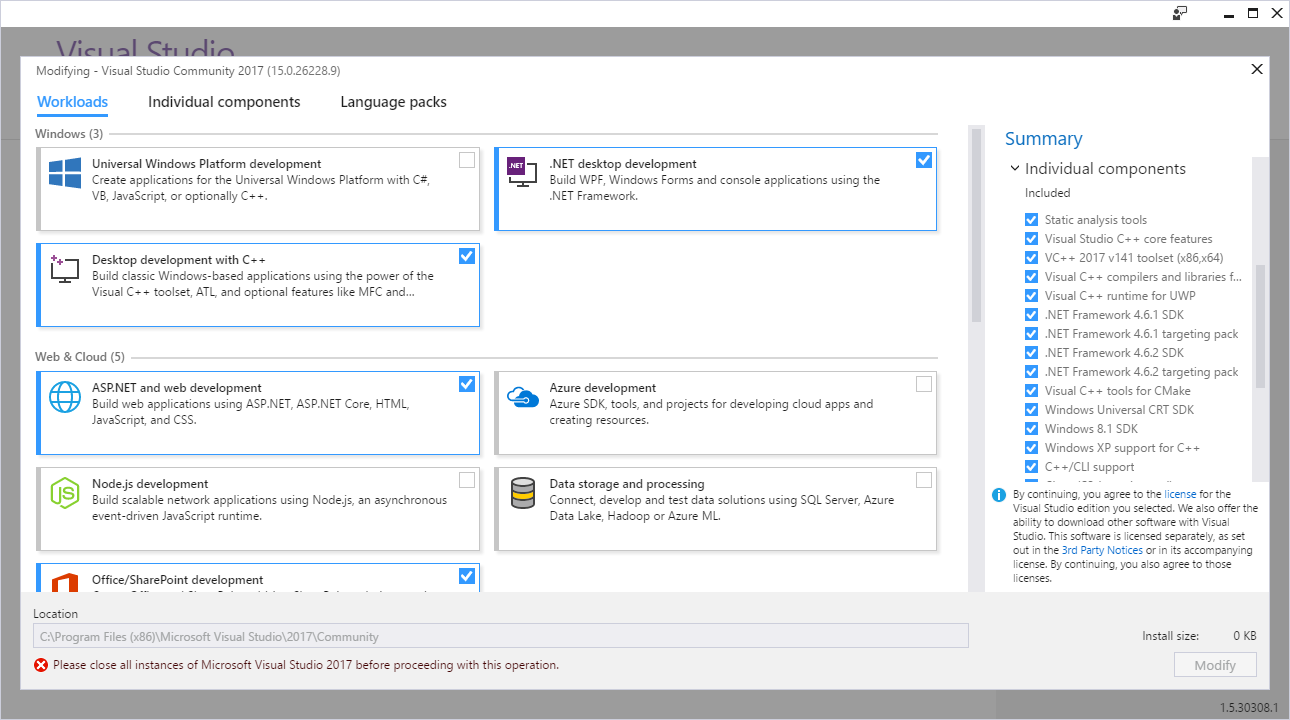

New installation order for Visual Studio 2017

The new modular installation system, which lets users select only the components they need, was completely "untied" from the Windows registry. In theory, this made the development environment more portable and made it possible to install several different editions of Visual Studio on the same system. However, it also significantly affected extension developers, because all the code that previously detected the presence of the installed environment or its individual components stopped working.

Figure 1 - The new Visual Studio installer.

To obtain information about the instances of Visual Studio installed on the system, developers are now invited to use COM interfaces, in particular ISetupConfiguration. I think many will agree that COM interfaces are less convenient to use than reading from the registry. And while wrappers for these interfaces at least already exist for C# code, we had to work hard to adapt our InnoSetup-based installer. In effect, Microsoft replaced one Windows-specific technology with another. In my opinion, the gain from such a transition is rather doubtful, especially since VS has not been able to give up the registry completely. At least not in this version.

A more significant consequence of this transition, beyond the fairly subjective matter of usability, was that it indirectly affected the operation of the MSBuild 15 libraries we use and their backward compatibility with previous versions of MSBuild. The reason is that the new version of MSBuild has also stopped using the registry. We were forced to update all the MSBuild components we use, because Roslyn depends on them directly. I will talk about the consequences of these changes in more detail a little later.

Changes in the C++ MSBuild Infrastructure

By the MSBuild infrastructure for C++, I primarily mean the layer responsible for invoking the compiler directly when building Visual C++ projects. In MSBuild this layer is called PlatformToolset, and it is responsible for preparing the environment in which the C++ compiler runs. The PlatformToolset system also provides backward compatibility with previous versions of the Visual C++ compilers: it lets the latest version of MSBuild build projects with earlier versions of the Visual C++ compiler.

For example, you can build a project with the C++ compiler from Visual Studio 2015 in MSBuild 15 / Visual Studio 2017, provided this version of the compiler is installed on the system. This can be useful because it lets you start using the new version of the IDE on a project immediately, without first porting the project to the new version of the compiler (which is not always easy).

PVS-Studio fully supports PlatformToolset and uses the "native" MSBuild API to prepare the environment of the C++ analyzer, which allows the analyzer to check the source code as closely as possible to the way it is compiled.

Such close integration with MSBuild previously allowed us to support new versions of Microsoft's C++ compiler quite easily. More precisely, to support its build environment, because support for the new compiler features themselves (for example, new syntax and header files that use it) is outside the scope of this article. We simply added the new PlatformToolset to the list of supported ones.

In the new version of Visual C++, the way the compiler environment is set up has changed noticeably, again "breaking" our code that had worked for all versions starting with Visual Studio 2010. And although the PlatformToolsets from previous compiler versions still work, we had to write a separate code branch to support the new toolset. By a strange coincidence (or perhaps not a coincidence), the MSBuild developers also changed the naming pattern for C++ toolsets: v100, v110, v120 and v140 in previous versions, and v141 in the latest version (while Visual Studio 2017 itself still has version 15.0).
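To illustrate why the renaming matters, here is a minimal sketch in Python. It assumes, purely for illustration, that a tool derives the toolset name from the IDE version number; the article does not say that PVS-Studio did this, and the function names are hypothetical. The version and toolset pairs are the publicly known ones for Visual Studio 2010 through 2017.

```python
# Product versions and platform toolset names per Visual Studio release.
IDE_VERSIONS = {
    "2010": "10.0",
    "2012": "11.0",
    "2013": "12.0",
    "2015": "14.0",
    "2017": "15.0",
}
PLATFORM_TOOLSETS = {
    "2010": "v100",
    "2012": "v110",
    "2013": "v120",
    "2015": "v140",
    "2017": "v141",
}


def naive_toolset_name(ide_version):
    """Hypothetical rule: glue the major and minor IDE version together."""
    major, minor = ide_version.split(".")
    return "v" + major + minor


def mismatched_releases():
    """Return the releases for which the naive rule gives the wrong name."""
    return [
        year
        for year, ide_version in IDE_VERSIONS.items()
        if naive_toolset_name(ide_version) != PLATFORM_TOOLSETS[year]
    ]


if __name__ == "__main__":
    # Only the newest release breaks the old pattern.
    print(mismatched_releases())
```

The rule holds for every release up to Visual Studio 2015 and fails only for 2017, which is why an explicit table of supported toolsets is the safer design.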

In the new version, the structure of the vcvars scripts, on which the deployment of the compiler environment relies, has been completely changed. These scripts set the environment variables required by the compiler, add the paths to binary directories and system C++ libraries to PATH, and so on. The analyzer requires an identical environment in order to work, in particular to preprocess the source files before the analysis itself starts.
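One generic way to see what such a script changes is to compare the output of the Windows `set` command before and after running it. The Python sketch below only parses and compares that output; it is an illustration and not how PVS-Studio prepares the environment (the article says it uses the MSBuild API for that). The function names and the sample paths are hypothetical.

```python
def parse_set_output(text):
    """Parse the NAME=value lines printed by the Windows `set` command."""
    env = {}
    for line in text.splitlines():
        name, sep, value = line.partition("=")
        if not sep or not name:
            # Skip blank lines and special entries that start with "=".
            continue
        # Windows treats variable names case-insensitively.
        env[name.upper()] = value
    return env


def environment_delta(before, after):
    """Return the variables that were added or changed."""
    return {
        name: value
        for name, value in after.items()
        if before.get(name) != value
    }


# Hypothetical sample data standing in for real `set` output.
BEFORE = "\n".join([
    r"Path=C:\Windows\system32",
    r"TEMP=C:\Temp",
])
AFTER = "\n".join([
    r"Path=C:\VC\bin;C:\Windows\system32",
    r"TEMP=C:\Temp",
    r"INCLUDE=C:\VC\include",
])

DELTA = environment_delta(parse_set_output(BEFORE), parse_set_output(AFTER))
```

Here `DELTA` contains the extended `PATH` and the new `INCLUDE` variable, which is the kind of information a preprocessor run depends on.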

We can say that the new version of the deployment scripts is, in a sense, "neater", and most likely easier to maintain and extend (it is possible that the scripts were updated because of the inclusion of clang as a compiler in the new version of Visual C++). From the point of view of the C++ analyzer developers, however, this added to our workload.

PVS-Studio C# analyzer

Roslyn 2.0 and MSBuild 15 were released together with Visual Studio 2017. It might seem that, to support these new versions in PVS-Studio C#, it would be enough to update the NuGet packages used in its projects. After that, all the "goodies" of the new versions, such as support for C# 7.0, new types of .NET Core projects, etc., would immediately become available to our analyzer.

Indeed, updating the packages we use and rebuilding the C# analyzer turned out to be quite simple. However, the very first run of the new version on our tests showed that "everything broke". Further experiments showed that the C# analyzer worked correctly only on a system where Visual Studio 2017 / MSBuild 15 was installed. It was not enough that our distribution contained the required versions of the Roslyn / MSBuild libraries. Releasing the new version of the C# analyzer "as is" would have degraded the analysis results for all our users working with previous versions of the C# compilers.

When we created the first version of the C# analyzer on Roslyn 1.0, we tried to make it as independent as possible, without requiring any third-party components to be installed on the system. The main requirement for the user's system is that the project being checked can be built: if the project builds, it can be checked by the analyzer. Obviously, on Windows, building Visual C# projects (csproj) requires at least MSBuild and a C# compiler.

We immediately abandoned the idea of requiring our users to install the latest versions of MSBuild and Visual C# along with the C# analyzer. If a user normally builds a project in, say, Visual Studio 2013 (which in turn uses MSBuild 12), a requirement to install MSBuild 15 would look redundant. On the contrary, we try to lower the "threshold" for starting to use our analyzer.

Microsoft's web installers also turned out to be very demanding in terms of download size: while our distribution was about 50 megabytes, the installer for Visual C++ 2017, for example (which is also needed for the C++ analyzer), estimated the amount of data to download at about 3 gigabytes. Moreover, as we found out later, the presence of these components would still not have been enough for the C# analyzer to work correctly.

How PVS-Studio interacts with Roslyn

When we were just starting to develop our C# analyzer, we had two ways to interact with the Roslyn platform.

The first option was to use the Diagnostics API, which was created specifically for developing .NET analyzers. This API lets you implement your own "diagnostics" by inheriting from the abstract class DiagnosticAnalyzer. With the CodeFixProvider class, you can also implement automatic fixes for such warnings.

The undoubted advantage of this approach is the full power of the existing Roslyn infrastructure. Diagnostic rules become immediately available in the Visual Studio code editor, and they can be applied automatically both while editing code in the IDE and when rebuilding the project. This approach does not require the analyzer developer to open project and source files manually: everything is done as part of the work of the "native" Roslyn-based compiler. Had we gone this way from the start, the problems with the transition to the new Roslyn would most likely not have arisen, at least not in their current form.

The second option was to implement a fully standalone analyzer, similar to how PVS-Studio C++ works. We chose it because we decided to make the C# analyzer infrastructure as close as possible to the existing tool for C/C++. Later, this allowed us to quickly adapt both the existing C++ diagnostics (not all of them, of course, but the part that was relevant for C#) and the more "advanced" analysis techniques.

Roslyn provides what is needed to implement this approach: we open the Visual C# project files ourselves, build syntax trees from the source code, and implement our own mechanism for traversing them. All this is done using the MSBuild and Roslyn APIs. Thus, we got full control over all stages of the analysis and do not depend on the operation of the compiler or the IDE.

No matter how tempting the "free" integration with the Visual Studio code editor may seem, we prefer to use our own IDE interface, as it offers many more features than the standard Error List (where such warnings would be shown). Using the Diagnostics API would also limit us to Roslyn-based compiler versions, i.e. those shipped with Visual Studio 2015 and 2017, while a standalone analyzer allowed us to support all previous versions.

While creating the C# analyzer, we discovered that Roslyn is very tightly tied to MSBuild. Of course, I am talking here about the Windows version of Roslyn. Unfortunately, we have not yet had a chance to work with the Linux version, so I cannot say how things are there.

I will say right away that Roslyn's API for working with MSBuild projects remains, in my opinion, rather raw even in version 2.0. When creating the C# analyzer, we had to write a lot of "workarounds", because Roslyn did some things incorrectly (incorrectly meaning "not the way MSBuild would have done when building the same projects"), which ultimately led to false positives and analysis errors when checking source files.

It was precisely this dependence of Roslyn on MSBuild that led to the problems we encountered when moving to Visual Studio 2017.

Roslyn and MSBuild

To work, the analyzer mostly needs two entities from Roslyn: the syntax tree of the code being checked and the semantic model of this tree, i.e. the meaning of the syntactic constructs represented by its nodes - types of class fields, return values and signatures of methods, etc. While a file with source code is enough for Roslyn to produce a syntax tree, generating a semantic model for that file requires compiling the project that includes it.

The upgrade of Roslyn to version 2.0 led to errors in the semantic model in our tests (the analyzer's V051 messages indicate this). Such errors usually show up in the analyzer results as false negatives and false positives, i.e. some useful messages disappear and wrong messages appear.

To obtain a semantic model, Roslyn provides the so-called Workspace API, which can open .NET MSBuild projects (in our case, csproj and vbproj) and get "compilations" of such projects. In this context, we are talking about an object of the Compilation helper class in Roslyn, which abstracts the preparation and invocation of the C# compiler. From such a "compilation" we can obtain the semantic model directly. Errors in the compilation ultimately lead to errors in the semantic model.

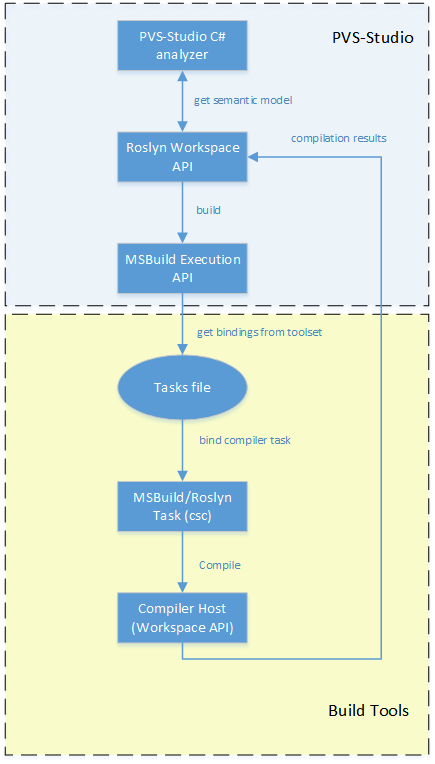

Now let's look at how Roslyn interacts with MSBuild to get a "compilation" of the project. The following is a diagram illustrating this interaction in a simplified form:

Figure 2 - Scheme of interaction between Roslyn and MSBuild

The diagram is divided into two segments: PVS-Studio and Build Tools. The PVS-Studio segment contains the components that ship with our analyzer distribution - the MSBuild and Roslyn libraries that implement the APIs we use. The Build Tools segment includes the build system infrastructure that must be present on the system for these APIs to work correctly.

After the analyzer requests a compilation object from the Workspace API (to obtain a semantic model), Roslyn starts building the project or, in MSBuild terminology, executing the csc task. Once the build starts, control passes to MSBuild, which performs all the preparatory steps according to its build scripts.

Note that this is not a "normal" build (it does not produce binary files) but the so-called design mode. The ultimate goal of this step is for Roslyn to obtain all the information that would be available to the compiler during a "real" build. If the build depends on pre-build steps (for example, running scripts that generate some of the source files), MSBuild will perform all such actions as well, as if it were a normal build.

After gaining control, MSBuild (or rather, the MSBuild library that is part of PVS-Studio) starts looking for toolsets installed on the system. Having found an appropriate toolset, it tries to instantiate the steps described in the build scripts. Toolsets correspond to the instances of MSBuild installed on the system. For example, MSBuild 14 (Visual Studio 2015) installs toolset 14.0, MSBuild 12 installs toolset 12.0, and so on.

A toolset contains all the standard build scripts for MSBuild projects. A project file (for example, csproj) usually contains only a list of build inputs (for example, source files). The toolset contains all the steps that need to be performed on these files, from compilation and linking to publishing the build results. We will not dwell on how MSBuild works; it is only important to understand that a project file and a project parser (that is, the MSBuild library that ships with PVS-Studio) are not enough to perform a build.
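The split between a project file and a toolset can be seen in a small example. The Python sketch below parses a deliberately minimal, made-up csproj in the classic (pre-.NET Core) format using only the standard library; the file names are hypothetical. The project lists its inputs and then imports the build logic from the toolset through the `$(MSBuildToolsPath)` property, which a plain XML parser cannot resolve.

```python
import xml.etree.ElementTree as ET

# XML namespace used by classic MSBuild project files.
NS = {"msb": "http://schemas.microsoft.com/developer/msbuild/2003"}

# A made-up minimal project: inputs only, build steps are imported.
CSPROJ = r"""<Project ToolsVersion="14.0" DefaultTargets="Build"
         xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <ItemGroup>
    <Compile Include="Program.cs" />
    <Compile Include="Properties\AssemblyInfo.cs" />
  </ItemGroup>
  <Import Project="$(MSBuildToolsPath)\Microsoft.CSharp.targets" />
</Project>"""


def list_sources(project_xml):
    """Return the source files listed as Compile items."""
    root = ET.fromstring(project_xml)
    return [e.get("Include") for e in root.findall("msb:ItemGroup/msb:Compile", NS)]


def list_imports(project_xml):
    """Return the unresolved paths of imported build scripts."""
    root = ET.fromstring(project_xml)
    return [e.get("Project") for e in root.findall("msb:Import", NS)]
```

`list_imports(CSPROJ)` returns a path that still contains `$(MSBuildToolsPath)`. Everything that actually happens during a build lives behind that import, so without an installed toolset the parser has a list of files and nothing to do with them.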

Let's move to the Build Tools segment of the diagram. We are interested in the csc build step. MSBuild needs to find the library in which this step is actually implemented, and it uses the tasks file of the selected toolset for this. A tasks file is an XML file containing paths to the libraries that implement standard build tasks. According to this file, the library containing the implementation of the csc task is found and loaded. This task prepares everything for invoking the compiler itself (usually a separate command-line utility, csc.exe). As we recall, our build is a "fake" one, so when everything is ready, the compiler is not invoked. Roslyn already has all the information needed to obtain a semantic model - all references to other projects and libraries are resolved (because types can be used in the code being tested,

Fortunately, if something "goes wrong" at one of these steps, Roslyn has a fallback mechanism for preparing a semantic model from the information known before compilation, i.e. before the transition to the MSBuild Execution API. Usually this is information from the evaluation of the project file (which is done with a separate API, the MSBuild Evaluation API). Often this information is not enough to build a complete semantic model. The most striking example is the new .NET Core project format, in which the project file itself contains almost nothing - not even a list of source files, let alone dependencies. But even with "ordinary" csproj files, we observed the loss of references to dependencies and of conditional compilation symbols (defines) after an unsuccessful compilation,

Something went wrong

Now that, I hope, it has become more or less clear what happens "inside" PVS-Studio when it checks a C# project, let's see what happened after Roslyn and MSBuild were updated. The diagram above clearly shows that, from the point of view of PVS-Studio, the Build Tools part is located "in the external environment" and is therefore not under our control. As described earlier, we abandoned the idea of shipping the whole of MSBuild in our distribution, so we have to rely on what is installed on the user's system. There can be many variants, because we support all versions of Visual C# starting with Visual Studio 2010. At the same time, Roslyn became the basis of the C# compiler only in the previous version of Visual Studio, 2015.

Consider the situation where MSBuild 15 is not installed on the system on which the analyzer runs. We run the analyzer to check a project for Visual Studio 2015 (MSBuild 14). Here we immediately run into the first flaw in Roslyn: when opening an MSBuild project, it does not specify the correct toolset. If no toolset is specified, MSBuild uses the default one, which corresponds to the version of the MSBuild library in use. And since Roslyn 2.0 is compiled against MSBuild 15, the library selects that version of the toolset.

Because this toolset is absent from the system, MSBuild instantiates it incorrectly: it produces a "mishmash" of nonexistent and incorrect paths pointing to toolset version 4. Why version 4? Because this toolset, along with MSBuild 4, is always available on the system as part of .NET Framework 4 (in later versions, MSBuild was decoupled from the framework). The result is the selection of an incorrect targets file and an incorrect csc task and, ultimately, errors in the compilation and in the semantic model.
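The selection logic described in the last two paragraphs can be summarized with a toy model in Python. This is a simplification of the behavior as the article describes it, not MSBuild's actual code: the function name and the boolean "consistent" flag are hypothetical, and the real failure is a mix of wrong paths rather than a clean fallback.

```python
# Toolset that ships with .NET Framework 4 and is therefore always present.
ALWAYS_AVAILABLE = "4.0"


def resolve_toolset(requested, library_default, installed):
    """Model of toolset selection.

    requested       -- toolset named by the caller, or None if not specified
    library_default -- default of the MSBuild library in use (e.g. "15.0")
    installed       -- set of toolset versions present on the system
    Returns (toolset, consistent), where consistent is False when the
    chosen toolset was missing and version 4.0 ended up being used.
    """
    chosen = requested if requested is not None else library_default
    if chosen in installed:
        return chosen, True
    # The chosen toolset is absent: paths end up pointing at toolset 4.0.
    return ALWAYS_AVAILABLE, False


# A machine with Visual Studio 2015 only, checked with Roslyn 2.0 libraries.
INSTALLED = {"4.0", "14.0"}
WITHOUT_TOOLSET = resolve_toolset(None, "15.0", INSTALLED)
WITH_TOOLSET = resolve_toolset("14.0", "15.0", INSTALLED)
```

In this model `WITHOUT_TOOLSET` is the broken case from the article, while passing the project's own toolset explicitly gives a consistent result.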

Why didn't we encounter this error with the old version of Roslyn? Firstly, judging by the analyzer usage statistics, most of our users have Visual Studio 2015, i.e. the correct (for Roslyn 1.0) version of MSBuild is already installed.

Secondly, the new version of MSBuild, as I mentioned earlier, stopped using the registry to store its configuration and, in particular, information about installed toolsets. While all previous versions of MSBuild stored their toolsets in the registry, MSBuild 15 stores them in the config file next to MSBuild.exe. The new MSBuild has also changed its "registration address": previous versions were installed uniformly in C:\Program Files (x86)\MSBuild\%VersionNumber%, whereas the new version is deployed by default to the Visual Studio installation directory (which has also changed compared to previous versions).
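The change of location can be expressed as a small path helper. The Python sketch below uses `PureWindowsPath` so it runs on any OS; the function name is hypothetical, the Visual Studio directory in the usage example is just one possible installation path, and only MSBuild 12, 14 and 15 are covered.

```python
from pathlib import PureWindowsPath

# Common root of standalone MSBuild versions before 15.
LEGACY_ROOT = PureWindowsPath(r"C:\Program Files (x86)\MSBuild")


def msbuild_exe_path(version, vs_install_dir=None):
    """Return the default MSBuild.exe location for the given version."""
    if version == "15.0":
        if vs_install_dir is None:
            # MSBuild 15 lives inside a Visual Studio instance, so the
            # caller has to know where that instance is installed.
            raise ValueError("MSBuild 15 requires the Visual Studio directory")
        root = PureWindowsPath(vs_install_dir) / "MSBuild"
    elif version in ("12.0", "14.0"):
        root = LEGACY_ROOT
    else:
        raise ValueError("unsupported MSBuild version: " + version)
    return root / version / "Bin" / "MSBuild.exe"


# Example installation directory; the edition and drive may differ.
VS2017_DIR = r"C:\Program Files (x86)\Microsoft Visual Studio\2017\Community"
```

For older versions the path is a constant, while for MSBuild 15 the function cannot answer without first discovering a Visual Studio instance, which is exactly where the registry-free installer makes things harder.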

This fact sometimes "hid" an incorrectly chosen toolset in previous versions: the semantic model was generated correctly with it. Moreover, even if the new toolset we need is present on the system, the library we use may fail to find it, because it is now registered in the app.config file of MSBuild.exe rather than in the registry, and the library is loaded not by the MSBuild.exe process but by PVS-Studio_Cmd.exe. The new MSBuild does have a fallback mechanism for this case. If a COM server that implements the ISetupConfiguration interface I mentioned earlier is installed on the system, MSBuild will try to find the toolset in the Visual Studio installation directory. However, the standalone MSBuild installer, of course, does not register this COM interface; only the Visual Studio installer does.

And finally, thirdly, probably the main reason was, unfortunately, insufficient testing of our analyzer on the various supported configurations, which did not allow us to identify the problem earlier. As it happened, Visual Studio 2015 / MSBuild 14 was installed on all the machines we use for daily testing of the analyzers. Fortunately, we were able to identify and fix this problem before our clients wrote to us about it.

Having understood why Roslyn did not work, we decided to try specifying the correct toolset when opening the project. Which toolset should be considered "correct" is a separate question. We asked ourselves the same question when we started using the same MSBuild API to open C++ projects for our C++ analyzer. Since an entire article could be devoted to this issue, we will not dwell on it now. Unfortunately, Roslyn does not provide a way to choose which toolset it will use, so we had to modify its code (an additional inconvenience for us, because it means we cannot simply take the ready-made NuGet packages). After that, the problems disappeared in some projects from our test base. However, new problems appeared in an even larger number of projects. What went wrong this time?

It is worth noting that everything shown in the diagram above happens within a single operating system process, PVS-Studio_Cmd.exe. It turned out that when the right toolset was chosen, a conflict occurred while loading DLL modules. Our test version uses Roslyn 2.0, which includes, for example, the Microsoft.CodeAnalysis.dll library, also of version 2.0. By the time the project analysis starts, this library is already loaded into the memory of the PVS-Studio_Cmd.exe process (our C# analyzer). Recall that we are checking a project for Visual Studio 2015, so when opening it we specify toolset 14.0. Next, MSBuild finds the correct tasks file and starts compiling. Since the C# compiler in this toolset (again, this is Visual Studio 2015) uses Roslyn version 1.3, MSBuild tries to load Microsoft.CodeAnalysis.dll version 1.3 into the process memory. And it fails at this step, because a higher version of the same module is already loaded in the process memory.
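The sequence of events can be replayed with a toy model in Python. It only mirrors the behavior described above (one version of a module per process, a second version is refused); it is not a description of the actual .NET assembly binding rules, and the class and exception names are hypothetical.

```python
class ModuleVersionConflict(Exception):
    """Raised when a different version of a loaded module is requested."""


class ProcessModules:
    """Toy model of the set of modules loaded into one process."""

    def __init__(self):
        self._loaded = {}

    def load(self, name, version):
        current = self._loaded.get(name)
        if current is None:
            self._loaded[name] = version
            return version
        if current != version:
            raise ModuleVersionConflict(
                "%s %s requested, but %s is already loaded" % (name, version, current)
            )
        return current


def replay_scenario():
    """Replay the steps from the article and return the error text."""
    process = ProcessModules()
    # The analyzer itself is built on Roslyn 2.0.
    process.load("Microsoft.CodeAnalysis.dll", "2.0")
    try:
        # Toolset 14.0 brings a csc task built on Roslyn 1.3.
        process.load("Microsoft.CodeAnalysis.dll", "1.3")
    except ModuleVersionConflict as error:
        return str(error)
    return None
```

The model makes the constraint visible: as long as the analyzer and the toolset's csc task share a process, they must agree on the Roslyn version.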

What can we do in this situation? Try to obtain the semantic model in a separate process or AppDomain? But obtaining the model requires Roslyn (i.e. all those libraries that cause the conflict), and transferring the model from one process or domain to another can be a nontrivial task, because this object holds references to the projects, compilations and workspaces from which it was obtained.

A better option would be to move the C# analyzer into a separate backend process, apart from the solution parser shared by our C++ and C# analyzers, and to make two versions of this backend, using Roslyn 1.0 and 2.0 respectively. But this solution also has significant drawbacks:

- the need to actually implement it in code (and thus additional development costs and a delay in releasing the new version of the analyzer);

- more complicated development of diagnostic rule code (ifdefs would have to be used in the code to support the new syntax from C# 7.0);

- and, probably the main one, this approach does not protect us from the release of new intermediate versions of Roslyn.

Let's look at the last item in more detail. During the lifetime of Visual Studio 2015, three updates were released, and each of them also updated the Roslyn compiler, from 1.0 to 1.3. If Roslyn is updated to, say, 2.1, then with a separate backend we would have to make separate versions of the analyzer for each minor update of the studio; otherwise, the version conflict could recur for users who do not have the latest version of Visual Studio.

I will also note that compilation failed when we tried to work with toolsets that do not use Roslyn, for example version 12.0 (Visual Studio 2013). The reason was different, but we did not dig deeper, because the problems already known were enough to reject this solution.

How we solved the problem of backward compatibility of the analyzer with old C # projects

Having understood the causes of the errors, we concluded that we needed to "ship" toolset version 15.0 with the analyzer. Its presence saves us from version conflicts between Roslyn components and allows us to check projects for all previous versions of Visual Studio (the latest version of the compiler is backward compatible with all previous versions of the C# language). I have already described above why we decided not to pull the "full-fledged" MSBuild 15 into our installer:

- the large download size of the MSBuild web installer;

- potential version conflicts after updates to Visual Studio 2017;

- the inability of the MSBuild libraries to find their own installation directory (with the toolset) when Visual Studio 2017 is not installed.

However, while investigating the problems Roslyn ran into when compiling projects, we realized that our distribution already contained almost all the Roslyn and MSBuild libraries needed for such a compilation (recall that Roslyn performs a "fake" compilation, so the csc.exe compiler itself is not needed). In fact, for a full-fledged toolset we were missing only a few props and targets files that describe this toolset. These are ordinary XML files in the MSBuild project format, weighing only a few megabytes in total, so including them in the distribution is not a problem for us.

The main problem was, in fact, the need to "trick" the MSBuild libraries into picking up "our" toolset as if it were their own. There is a comment in the MSBuild code: Running without any defined toolsets. Most functionality limited. Likely will not be able to build or evaluate a project. (e.g. reference to Microsoft.*.dll without a toolset definition or Visual Studio instance installed). This comment describes the mode in which the MSBuild library works when it is merely added to a project as a reference rather than used from MSBuild.exe. And the comment is not encouraging, especially the part saying that "most likely nothing will work".

How can the MSBuild 15 libraries be forced to use a third-party toolset? Recall that this toolset is declared in the app.config of MSBuild.exe. It turned out that you can add the contents of that config to the config of our application (PVS-Studio_Cmd.exe) and set the MSBUILD_EXE_PATH environment variable for our process to the path of our executable. And this method worked! At that point, the latest version of MSBuild was at the Release Candidate 4 stage. Just in case, we decided to see how things were going in the MSBuild master branch on GitHub. And, as if by Murphy's law, a check had been added to the toolset selection code in master: take the toolset from the app.config only if the name of the executable file is MSBuild.exe. So a zero-byte file named MSBuild.exe appeared in our distribution,
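The mechanics of such a workaround can be sketched in a few lines of Python. This is an illustration under assumptions rather than the PVS-Studio implementation: the function name is hypothetical, and pointing MSBUILD_EXE_PATH at the empty stub is an assumption made here, since the paragraph above only states that the variable is set for the process and that an empty MSBuild.exe is shipped.

```python
import os
from pathlib import Path


def prepare_msbuild_stub(directory):
    """Create an empty MSBuild.exe and advertise it to the current process.

    Returns the path of the stub file.
    """
    stub = Path(directory) / "MSBuild.exe"
    # A zero-byte file is enough: only its name and location matter here,
    # the file is never executed.
    stub.write_bytes(b"")
    # Assumption: the variable points at the stub so that a name check
    # for "MSBuild.exe" passes. It affects this process and its children.
    os.environ["MSBUILD_EXE_PATH"] = str(stub)
    return stub
```

The environment variable is set only for the running process, so nothing is changed system-wide and no real MSBuild installation is touched.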

Our adventures with MSBuild did not end there. It turned out that the toolset alone is not enough for projects that use MSBuild extensions, i.e. additional build steps. Such project types include, for example, WebApplication, Portable and .NET Core projects. When the corresponding Visual Studio component is installed, these extensions are placed in a separate directory next to MSBuild. Our "installation" of MSBuild, of course, had none of this. We found a solution thanks to the ability to freely edit "our own" toolset. We tied the search paths (the MSBuildExtensionsPath property) of our toolset to a special environment variable that the PVS-Studio_Cmd.exe process sets depending on the type of the project being checked. For example, if we have a WebApplication project for Visual Studio 2015, then, expecting that the user's project can be built, we look for the extensions for toolset version 14.0 and specify the path to them in our special environment variable. MSBuild needs these paths only to include additional props / targets files in the build script, so there are no problems with version conflicts.
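A minimal Python sketch of this idea follows. The environment variable name, the function name and the lookup table are all hypothetical; the article does not name the variable PVS-Studio uses. The sketch relies on the fact that MSBuild exposes environment variables as properties, so a toolset property could refer to the variable as `$(PVS_MSBUILD_EXTENSIONS_PATH)`.

```python
import os

# Hypothetical variable name referenced from the bundled toolset.
EXTENSIONS_ENV_VAR = "PVS_MSBUILD_EXTENSIONS_PATH"


def select_extensions_path(project_tools_version, known_extension_dirs):
    """Publish the extensions directory that matches the project's toolset.

    known_extension_dirs maps a toolset version to the directory where the
    user's own MSBuild / Visual Studio installation keeps its extensions.
    """
    path = known_extension_dirs.get(project_tools_version)
    if path is None:
        raise LookupError(
            "no extensions directory known for toolset " + project_tools_version
        )
    # Set for the current process only, before the project is opened.
    os.environ[EXTENSIONS_ENV_VAR] = path
    return path


# Example table; real values would be discovered on the user's machine.
EXAMPLE_DIRS = {"14.0": r"C:\Program Files (x86)\MSBuild"}
```

Because the variable is chosen per project, one bundled toolset can pull in the extension scripts of whichever Visual Studio version the project was written for.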

As a result, the C# analyzer can work on a system with any of the Visual Studio versions we support, regardless of which versions of MSBuild are present. A potential problem for us now is users who have their own modifications of the MSBuild build scripts, but because our toolset is independent, such modifications can also be added to PVS-Studio's toolset if necessary.

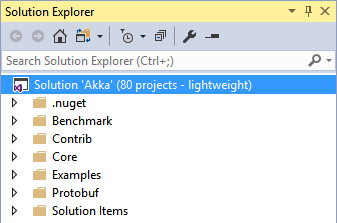

PVS-Studio plugin, Lightweight Solution Load

One of the innovations of Visual Studio 2017, which allows optimizing the work with solutions containing a large number of projects, is the delayed loading mode - “lightweight solution load”.

Figure 3 - lightweight solution load

This mode can be enabled in the IDE either for a single solution or forced for all solutions being loaded. In “lightweight solution load” mode, the Visual Studio Solution Explorer displays only the project tree, without loading the projects themselves. A project is loaded (its internal structure expanded and its files read) only on demand: after a corresponding user action (expanding the project node in the tree) or programmatically. A detailed description of the deferred loading mode is given in the documentation.
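The idea of on-demand loading can be modeled with a small conceptual sketch. It does not use or imitate the Visual Studio API; the class `DeferredProject` and its loader callback are hypothetical names chosen only to show why a tool that needs a project's file list has to trigger the load itself.

```python
from typing import Callable, List, Optional


class DeferredProject:
    """A project node that knows its name up front but reads its file list
    only when somebody asks for it (conceptual model, not a VS interface)."""

    def __init__(self, name: str, loader: Callable[[], List[str]]) -> None:
        self.name = name
        self._loader = loader
        self._files: Optional[List[str]] = None

    @property
    def is_loaded(self) -> bool:
        return self._files is not None

    @property
    def files(self) -> List[str]:
        # First access triggers the load; later accesses reuse the result.
        if self._files is None:
            self._files = self._loader()
        return self._files


if __name__ == "__main__":
    project = DeferredProject("Core", lambda: ["a.cs", "b.cs"])
    print(project.name, project.is_loaded)  # the tree can show the name already
    print(project.files, project.is_loaded)  # asking for files forces the load
```

In this model the solution tree can be drawn from names alone, while any consumer of `files`, such as an analyzer collecting sources to check, pays the loading cost at the moment of the request.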

In the process of supporting this mode, we encountered a number of difficulties:

- obvious issues related to the lack of necessary information about the files contained in the project until the project is loaded into the IDE;

- the need to use new methods to obtain the above information;

- a number of “pitfalls” related to the fact that we started work on supporting the PVS-Studio plug-in in Visual Studio 2017 long before its release.

Here I would like to note that the technical documentation on the deferred loading mode is, in my opinion, insufficient. In fact, all the documentation describing the internal mechanisms behind “lightweight solution load” in Visual Studio 2017 is limited to a single article.

As for the “pitfalls”: while finalizing the Visual Studio RC, Microsoft not only fixed defects but also renamed some methods in the newly added interfaces, including ones we were using. As a result, the seemingly debugged mechanism for supporting deferred project loading had to be reworked in the release version of PVS-Studio.

Why in the release version? One of the interfaces we use turned out to be declared in a library that ships with Visual Studio twice: once in the main Visual Studio installation and once as part of the Visual Studio SDK (the development package for Visual Studio extensions). For some reason, the Visual Studio SDK developers did not update the RC version of this library in the release of Visual Studio 2017. Because we had this SDK installed on almost all machines (including the machine that runs the nightly tests and also serves as the build server), we saw no errors during compilation or at run time. Unfortunately, we fixed this error only after the release of PVS-Studio, having received a bug report from a user. As for the article I wrote about above,

Conclusion

The release of Visual Studio 2017 turned out to be the most “expensive” to support in the entire history of PVS-Studio. Several factors coincided: significant changes in how MSBuild and Visual Studio work, and the arrival of the C# analyzer as part of PVS-Studio (which now also has to be supported).

When we started creating a static analyzer for C# a year and a half ago, we expected that the Roslyn platform would let us do it very quickly. These hopes were largely justified: the first version of the analyzer was released after 4 months. We also expected that, compared with our C++ analyzer, using a third-party solution would save us significant effort in supporting new features as the C# language evolves. This expectation was also confirmed. Despite all this, using a ready-made platform for static analysis turned out to be less “painless” than expected, as our experience with supporting new versions of Roslyn and Visual Studio has shown. While Roslyn solves compatibility with new C# features, its coupling to third-party components (in particular MSBuild and Visual Studio) creates difficulties in completely different areas. Roslyn's coupling to MSBuild makes it very hard to use in a standalone code analyzer.

We are often asked why we don't “rewrite” our C++ analyzer on top of some ready-made solution as well, for example Clang. Indeed, this would let us eliminate a number of existing problems in our C++ core. However, apart from the need to rewrite the existing mechanisms and diagnostics, one should not forget that a third-party solution always brings “pitfalls” that cannot be fully foreseen in advance.

If you want to share this article with an English-speaking audience, then please use the translation link: Paul Eremeev. Support of Visual Studio 2017 and Roslyn 2.0 in PVS-Studio: sometimes it's not that easy to use ready-made solutions as it may seem
