Scientists have cured AI of forgetfulness

Artificial neural networks differ from their biological counterparts in that they cannot “remember” previously learned skills while learning a new task. An AI trained to recognize dogs cannot tell people apart. To do that, it has to be retrained, but the network then “forgets” that dogs exist. The same goes for games: an AI that knows how to play poker will not win at chess.

This phenomenon is called catastrophic forgetting. However, researchers from DeepMind and Imperial College London have developed a training algorithm for deep neural networks that lets a network acquire new skills while preserving the “memory” of previous tasks.

Photo: Dean Hochman / CC

A neural network consists of many connections, each with its own weight. Each weight is assigned a parameter F that determines its significance. The higher the F value for a particular weight, the less likely that weight is to be overwritten during further training. In this way the network “remembers” the most important skills it has acquired.
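The role of F can be illustrated with a short sketch. It assumes the quadratic penalty form associated with EWC, in which each weight is pulled back toward the value it had after the old task with a force proportional to its F. The function names, the `strength` parameter, and the example numbers are illustrative assumptions, not code from the study.

```python
def ewc_penalty(weights, old_weights, importance, strength=1.0):
    """Extra loss term: 0.5 * strength * sum(F_i * (w_i - w_old_i)^2)."""
    return 0.5 * strength * sum(
        f * (w - w_old) ** 2
        for w, w_old, f in zip(weights, old_weights, importance)
    )


def ewc_penalty_gradient(weights, old_weights, importance, strength=1.0):
    """Gradient of the penalty: important weights are pulled back harder."""
    return [
        strength * f * (w - w_old)
        for w, w_old, f in zip(weights, old_weights, importance)
    ]


# Hypothetical numbers: the first weight mattered a lot for the old task,
# the second one hardly at all.
old_weights = [1.0, -2.0]
importance = [10.0, 0.1]      # the F values
new_weights = [1.5, -1.5]     # both weights moved by the same amount (0.5)

penalty = ewc_penalty(new_weights, old_weights, importance)
pull = ewc_penalty_gradient(new_weights, old_weights, importance)
print(penalty, pull)
```

Both weights moved equally far, but the pull on the first one is a hundred times stronger, so further training is far more likely to change the second, unimportant weight.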

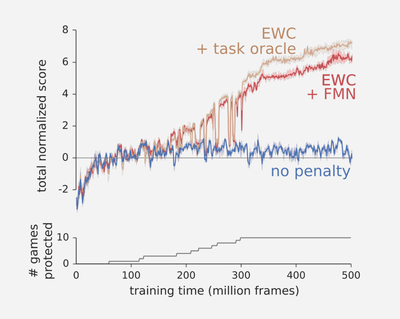

The technique is called Elastic Weight Consolidation (EWC). The algorithm was tested on Atari games. The researchers showed that without this consolidation of weights the program quickly forgot games once it stopped playing them (blue graph). With EWC, the neural network “remembered” the weights needed to perform all previous tasks. Although the EWC network scored lower than the classical algorithm on each individual game, it showed good results summed over all stages (red and brown graphs).
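The trade-off described here can be reproduced on a toy problem. The sketch below is not the Atari experiment: it assumes two hypothetical tasks with simple quadratic losses over two weights, and it uses the curvature of the first task's loss as a stand-in for F. All names and numbers are made up for illustration.

```python
def loss(theta, task):
    """Quadratic loss: 0.5 * sum(curvature_i * (theta_i - target_i)^2)."""
    return 0.5 * sum(
        c * (t - m) ** 2
        for t, m, c in zip(theta, task["target"], task["curvature"])
    )


def grad(theta, task):
    """Gradient of the quadratic loss above."""
    return [c * (t - m) for t, m, c in zip(theta, task["target"], task["curvature"])]


def train(theta, grad_fn, steps=5000, lr=0.05):
    """Plain gradient descent starting from theta."""
    theta = list(theta)
    for _ in range(steps):
        theta = [t - lr * g for t, g in zip(theta, grad_fn(theta))]
    return theta


# Task A depends strongly on weight 0; task B depends strongly on weight 1.
TASK_A = {"target": [1.0, 0.0], "curvature": [10.0, 0.01]}
TASK_B = {"target": [-1.0, 2.0], "curvature": [0.1, 1.0]}

theta_a = train([0.0, 0.0], lambda th: grad(th, TASK_A))
importance = TASK_A["curvature"]  # assumption: curvature plays the role of F


def ewc_grad(theta, strength=1.0):
    """Task B gradient plus the pull back toward the task A weights."""
    return [
        g + strength * f * (t - a)
        for g, f, t, a in zip(grad(theta, TASK_B), importance, theta, theta_a)
    ]


plain = train(theta_a, lambda th: grad(th, TASK_B))  # no protection
ewc = train(theta_a, ewc_grad)                       # weights consolidated

for name, theta in (("plain", plain), ("EWC", ewc)):
    a, b = loss(theta, TASK_A), loss(theta, TASK_B)
    print(f"{name:5s} task A loss={a:.3f}  task B loss={b:.3f}  total={a + b:.3f}")
```

In this toy setup the unprotected run ends with a large loss on task A, while the EWC run keeps it small at the cost of a slightly worse result on task B, so its total over both tasks is lower.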

The authors of the study note that the scientific community has already tried to create deep neural networks that can perform several tasks at once. However, earlier solutions were either not powerful enough or required large computational resources, because the networks were trained on one large combined dataset all at once rather than on several datasets in sequence. That approach did not bring the algorithms any closer to how the human brain works.

There are alternative neural network architectures for working with text, music, and long data series. They are called recurrent networks and have long-term and short-term memory, which lets them move from local to global problems (for example, from analyzing individual words to the stylistic rules of the language as a whole).
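What "memory" means for such a network can be shown with a minimal sketch. It assumes a single plain recurrent unit with a `tanh` activation, which is much simpler than the long short-term memory variants alluded to above. The function name and the weight values are hypothetical.

```python
import math


def run_recurrent_unit(inputs, w_in=1.0, w_rec=0.5, h0=0.0):
    """Feed a sequence through one recurrent unit and return every hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The new state depends on the current input AND the previous state.
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


# The two sequences differ only in their first element.
with_signal = run_recurrent_unit([1.0, 0.0, 0.0])
without_signal = run_recurrent_unit([0.0, 0.0, 0.0])
print(with_signal[-1], without_signal[-1])
```

The last two inputs are identical in both runs, yet the final states differ: the unit still carries a fading trace of the first input.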

Recurrent neural networks have memory, but they are inferior to deep networks at analyzing complex sets of features, such as those encountered in image processing. DeepMind's new solution should therefore make it possible in the future to build smart, general-purpose algorithms for software that solves problems requiring nonlinear transformations.

P.S. Some more interesting materials from our blog:

- Unboxing all-flash storage NetApp AFF A300: specifications and inside view

- Stretch Deploy for vCloud Connector: Features and Operation

- Three reasons why using VMware vSphere 6.0 is still relevant

- Cloud technologies for solving problems in the construction business

- Feasibility study on the use of IaaS services using the case of a large company as an example

- X-as-a-services: how not to get bogged down with cloud service abbreviations