Events, buses and data integration in the complex microservices world

Valentin Gogichashvili explains microservices. Here is a transcript of his talk at HighLoad++.

Good afternoon, I'm Valentin Gogichashvili. I made all the slides in the Latin alphabet; I hope that won't be a problem. I am from Zalando.

What is Zalando? You probably know Lamoda; Zalando was, in its day, Lamoda's dad. To understand what Zalando is, imagine Lamoda and scale it up several times.

Zalando is a clothes store. We started out selling shoes, very good ones by the way, and then began to expand more and more. From the outside the site looks very simple. Over the 6 years that I have been working at Zalando and the 8 years of its existence, the company was at one point one of the fastest growing in Europe. Six years ago, when I came to Zalando, it was growing at somewhere around 100%.

When I started 6 years ago, it was a small startup, I arrived quite late, there were already 40 people there. We started in Berlin, over the 6 years we have expanded Zalando Technology to many cities, including Helsinki and Dublin. Data science is in Dublin, mobile developers are in Helsinki.

Zalando Technology is growing. At the moment, we are hiring around 50 people a month, this is a terrible thing. Why? Because we want to build the coolest fashion platform in the world. Very ambitious, let's see what happens.

I want to go back a little bit in history and show you the old world in which you most likely were at some point in your career.

Zalando started as a small service that had 3 tiers: web application, backend and database. We used Magento. By the time I was called to Zalando, we were the largest Magento users in the world. We had huge headaches with MySQL.

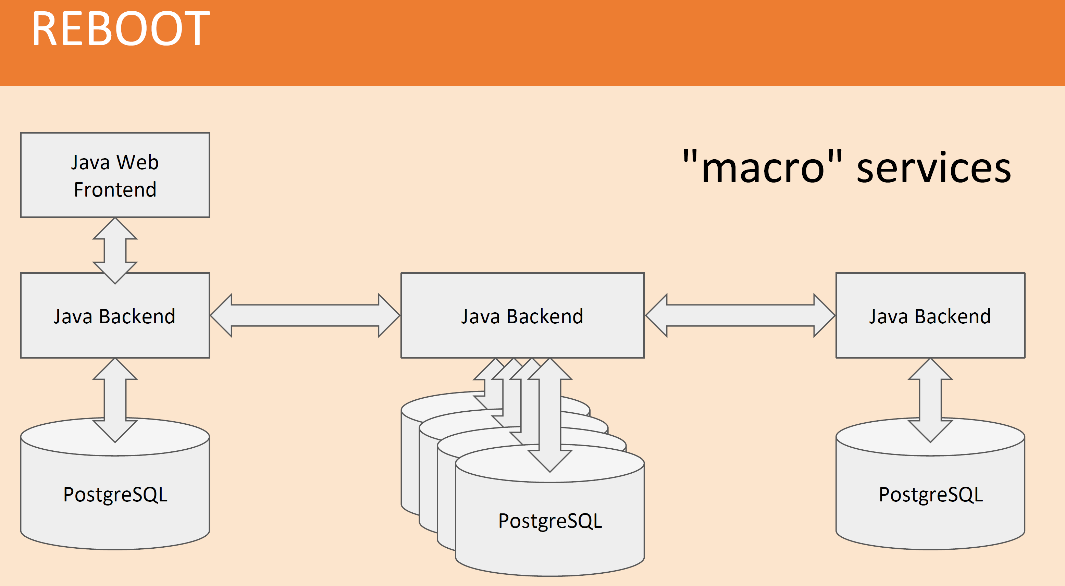

We started the REBOOT project. I came to this project 6 years ago.

What have we done?

We rewrote everything in Java because we knew Java. We installed PostgreSQL everywhere because I knew PostgreSQL. And Python for tooling, that goes without saying. Almost any sane person will back me up that Python is the only right choice for tooling (people from the Perl world, don't kill me). Python is a good thing for writing tooling.

We began to build the following architecture:

We had a system of macro services: a Java backend and PostgreSQL storage with PostgreSQL sharding. Two years ago, at this same conference, I talked about how we shard PostgreSQL, how we manage schemas and how we roll out versions without downtime; it was very interesting.

As I said, we all knew Java. SOAP was used to connect the macro services with each other. PostgreSQL gave us the opportunity to have very clean data. We had a schema, clean data, transactions, and stored procedures, which we taught to all the Java developers and to those who remained from the PHP world, whom we taught both Java and stored procedures.

One hint: if you are at a scale of less than 15 million users per month, you can use the Java SProc Wrapper to shard PostgreSQL automatically from Java. It is a very interesting thing that, in essence, turns PostgreSQL into an RPC system.

Everything was fine; we wrote and rewrote everything. We first bought a management system for our warehouses and then rewrote that too, because we had to move much faster than the people we bought the system from could.

Everything worked perfectly until the problems with personnel began. Our beautiful world began to crumble before our eyes. The standardization that we had introduced at the level of Java and SOAP began to crumble. People began to complain and leave, or simply did not come.

We told them: you have to write in Java; if you leave, what will we do? If you write something in Haskell or Clojure and leave, what will we do? And they answered us: fuck you.

We decided to take the matter seriously. We decided to rebuild not only the architecture but the entire organization. We began a restructuring of the organization of a kind that German industry had not seen before: we said that we were completely tearing down everything we had. It was an organization of around 900 people, and we were tearing down the hierarchical structure in the form in which it existed. We announced Radical Agility.

What does it mean?

We announced that our teams are autonomous and that they move forward with purpose. And, of course, we want the people doing the work to do it with mastery.

Autonomous teams.

They can choose their own technology stack. If a team decides that it will write in Haskell or Clojure, then so be it. But you have to pay for it: teams must support the services they wrote themselves and wake up at night themselves. This includes the choice of persistence stack. We taught you PostgreSQL; if you want to choose MongoDB... well no, stop, MongoDB we have blocked. We have a technology radar, for which we run monthly surveys, and technologies that we consider dangerous go into the red sector. This means that a team can choose these technologies, but it has only itself to blame if something goes wrong.

We said that teams would be isolated by their AWS accounts. Before that, we were in our own data centers; by choosing AWS, we made a deal with the devil. We said: we know it will cost more, but we will move faster. We will no longer have situations like before, in our own data centers, where ordering a single hard drive took 6 weeks. It was unbearable and impossible. We could not move forward.

Too many people believe that autonomy is anarchy. Autonomy is not anarchy. With autonomy comes a lot of responsibility, especially for Zalando, which is a publicly traded company. We are on the stock exchange and, as in any publicly traded company, auditors come to us and check how our systems work. We had to create some kind of structure that would allow our developers to work with AWS but still let us answer auditors' questions of the kind: “Why do you have this public IP address without identification?”

The result was the following system:

We wanted to make it as simple as possible, and it really is simple. But everyone swears when they see it.

A reminder: if you move to AWS for the speed and openness, and if you go with microservices or public services, you may have to pay for it, especially if you want a system that is secure and can answer the questions that auditors may ask.

Of course, we said that in order to support a diverse stack of technologies, we are raising the standardization level from Java and PostgreSQL to a higher level. We are raising the standardization level to the level of REST APIs.

What does this mean? I noted this in a previous talk: we needed an API description system, a description of how microservices communicate with each other. We need order. At some level, we need to standardize. We announced that we would be API first: before a team starts writing a service, it must come to the API guild and persuade it to accept the API as part of the approved APIs. We wrote REST API guidelines, which are very interesting; they have even been referenced by some other resources. There are API first libraries that allow you to use Swagger (OpenAPI) as the router for the server. For example, connexion is a router for Flask in Python, and play-swagger is a router for the Play framework in Scala. Clojure has a router of the same kind; it is very convenient. You write the Swagger file first, describe what
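To make the API first idea concrete, here is a minimal sketch that deliberately does not use any of the libraries named above. It assumes a hypothetical specification fragment (written as a Python dict rather than a Swagger file) and a hypothetical handler called `get_order`. The only point it illustrates is the direction of the dependency: the routes come from the specification, and the code is looked up by `operationId` afterwards.

```python
# Hypothetical, hand-written fragment of an OpenAPI (Swagger) description.
SPEC = {
    "paths": {
        "/orders/{order_id}": {
            "get": {"operationId": "get_order"},
        },
    },
}


def get_order(order_id):
    """Hypothetical handler; a real service would look the order up."""
    return {"order_id": order_id, "status": "created"}


# Handlers the service actually implements, keyed by operationId.
HANDLERS = {"get_order": get_order}


def build_routes(spec, handlers):
    """Build a (method, path template) -> handler table from the spec."""
    routes = {}
    for path, operations in spec["paths"].items():
        for method, operation in operations.items():
            operation_id = operation["operationId"]
            if operation_id not in handlers:
                # The spec is the source of truth: a missing handler is an error.
                raise LookupError(f"spec names unknown handler {operation_id!r}")
            routes[(method.upper(), path)] = handlers[operation_id]
    return routes


ROUTES = build_routes(SPEC, HANDLERS)
```

Because the table is derived from the specification, an endpoint that was never described (and so never reviewed) simply cannot be served.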

But there is a problem with microservices. I want to repeat this phrase several times: microservices are a response to organizational problems; they are not a technical answer. I would not advise microservices to anyone who is small. I would not recommend microservices to those who have no problems with a diverse technology base, who do not need to write one service in Scala and another in Python or Haskell. The number of problems with microservices is quite high. It is a barrier, and to get over it you need to have experienced a lot of pain beforehand, as we did.

One of the biggest problems in the world of microservices: microservices by definition block access to the data persistence layer. The databases are hidden inside the microservice.

Therefore, the classic extract transform load process does not work.

Let's take one step back and remember how we work in the classical world. What do we have? We have a classic world, we have developers, junior developers, senior developers, DBA and Business Intelligence.

How does it work?

In the simple case, we have business logic and a database; the ETL process takes our data directly from the database and pushes it into the Data Warehouse (DWH).

On a larger scale, we have many services, many databases and one process, which is most likely written by hand. Data is pulled from these databases and put into a special database for business analysts. It is very well structured, and business analysts understand what they are doing.
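As a rough illustration of that classic process, here is a sketch that uses two in-memory SQLite databases as stand-ins for a service database and the DWH. The table and column names are invented for the example. Note that `extract` reads the service's tables directly, which is exactly the access that a microservice hides.

```python
import sqlite3


def extract(service_db):
    """Read rows straight out of the service's own tables."""
    return service_db.execute("SELECT id, amount_cents FROM orders").fetchall()


def transform(rows):
    """Reshape rows for analysts: convert cents to currency units."""
    return [(order_id, amount_cents / 100) for order_id, amount_cents in rows]


def load(dwh, rows):
    """Write the reshaped rows into the warehouse table."""
    dwh.executemany("INSERT INTO fact_orders (order_id, amount) VALUES (?, ?)", rows)
    dwh.commit()


# Stand-in for a service database (hypothetical schema).
service_db = sqlite3.connect(":memory:")
service_db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
service_db.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 1999), (2, 500)])

# Stand-in for the data warehouse (hypothetical schema).
dwh = sqlite3.connect(":memory:")
dwh.execute("CREATE TABLE fact_orders (order_id INTEGER, amount REAL)")

load(dwh, transform(extract(service_db)))
```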

Of course, all of this is not without problems; it is all very difficult to automate. In the world of microservices, it does not work like this at all.

When we announced microservices, when we announced Radical Agility, when we announced all these great innovations for developers, business analysts were very unhappy.

How to collect data from a huge number of microservices?

This is not about dozens, but about hundreds or even thousands. Then Valentin rides in on a horse and says: we will write everything into a stream, into a queue. Then the architects say: why a queue? Someone will use Kafka, someone will use Rabbit; how will we integrate all this? Our security officers said: never in our lives, we will not allow it. Our business analysts said: if there is no schema there, we will hang ourselves; we will not be able to understand what is going on, and it will be just a gutter, not a data transport system.

We sat down to confer and decide what to do. Our main goals were these. The system should be easy to use. We want no single point of failure, no monster that takes everything down with it if it falls. The system must be secure, and it must satisfy the needs of business intelligence and of our data science. Ideally, it should also enable other services to use the data that flows through the bus.

Very simple. Event Bus.

From the Event Bus we can feed Business Intelligence or some data-heavy services. DDDM has been a favorite concept lately: data-driven decision making. Any manager will be delighted by such a word. Machine learning and DDDM.

What did we come up with?

Nakadi. You have probably gathered that my surname is quite Georgian. Nakadi means stream in Georgian, for example a mountain stream.

We started to build such a stream. Let me briefly go over the basic principles we built into it.

We said that we would have a standard HTTP API, RESTful if possible. We would build a centralized, or possibly not so centralized, event type registry. We would introduce different classes of event types. At the moment we support two classes: data capture events and business events. That is, if entities are changing, we can record a data capture event with all the necessary meta-information. If we just have the information that something happened in a business process, that is usually a much simpler case, and we can write a simpler event. But business analysts still require an organized structure that can be parsed automatically.
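The two classes of events could be modelled roughly as follows. This is only a sketch: the field names (`eid`, `occurred_at`, `data_type`, `data_op`) and the set of allowed operations are assumptions made for illustration, not something stated in the talk.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from uuid import uuid4


@dataclass
class Metadata:
    """Hypothetical meta-information attached to every event."""
    event_type: str
    eid: str = field(default_factory=lambda: str(uuid4()))
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


@dataclass
class BusinessEvent:
    """Something happened in a business process; the payload stays simple."""
    metadata: Metadata
    payload: dict


@dataclass
class DataCaptureEvent:
    """An entity changed; carries the operation and the entity's new state."""
    metadata: Metadata
    data_type: str  # which entity changed, e.g. "order"
    data_op: str    # assumed codes: "C" create, "U" update, "D" delete
    data: dict

    def __post_init__(self):
        if self.data_op not in {"C", "U", "D"}:
            raise ValueError(f"unknown operation {self.data_op!r}")
```

Keeping the metadata in one shared structure is what lets a consumer such as a data warehouse loader parse any event automatically before it knows anything about the payload.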

Having vast experience with PostgreSQL and with schemas, we know that nothing will work without support for schema versioning. That is, if we slide down to the level where programmers have to describe order created, then order created 1, 2, 3, we will essentially build a system similar to Microsoft Windows, and it will be very hard to understand how an entity evolves and how it is versioned. It is also very important that the interface allows data to be streamed, so that everyone who is waiting for a message can react to its arrival as soon as possible.
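One way to picture schema versioning is a registry that keeps every version under a single event type name, instead of a new name per change. The sketch below is hypothetical: it reduces a schema to a set of field names, and the compatibility rule (a new version may add fields but not remove them) is an assumption chosen for the example.

```python
class SchemaRegistry:
    """Keeps every version of each event type's schema (hypothetical design)."""

    def __init__(self):
        # event type name -> list of field sets, oldest version first
        self._versions = {}

    def register(self, event_type, fields):
        """Store a new version and return its 1-based version number."""
        fields = frozenset(fields)
        history = self._versions.setdefault(event_type, [])
        # Assumed rule: every field of the previous version must survive.
        if history and not history[-1] <= fields:
            removed = sorted(history[-1] - fields)
            raise ValueError(f"new version would remove fields: {removed}")
        history.append(fields)
        return len(history)

    def latest(self, event_type):
        """Return the number of the newest registered version."""
        return len(self._versions[event_type])
```

With this shape there is one `order_created` whose history can be inspected, which is what makes it possible to see how an entity evolved.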

We did not want to reinvent the wheel. Our goal is to build the most minimal system possible on top of existing systems. Therefore, at the moment, we have taken Kafka as the underlying system and PostgreSQL for storing metadata and schemas.

Nakadi Cluster is what we have now. It exists as an open source project. At the moment, it validates events against the schema that was registered beforehand. It can also write additional information into the event's metadata field: for example, the time of arrival, or, if the client did not create a unique id for the event, a unique id can be filled in there.
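The validate-then-enrich step can be sketched like this. It is not the project's actual code: the registered schema is reduced to a set of required fields, and the metadata keys `received_at` and `eid` are names assumed for the example.

```python
from datetime import datetime, timezone
from uuid import uuid4

# Hypothetical registry content: event type -> required payload fields.
REGISTERED = {"order_created": {"order_id", "customer_id"}}


def accept(event_type, event, now=None):
    """Validate an incoming event and return an enriched copy of it."""
    required = REGISTERED.get(event_type)
    if required is None:
        raise KeyError(f"event type {event_type!r} is not registered")
    missing = required - set(event)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")

    now = now or datetime.now(timezone.utc)
    metadata = dict(event.get("metadata", {}))
    metadata["received_at"] = now.isoformat()  # time of arrival
    metadata.setdefault("eid", str(uuid4()))   # only if the client sent none
    return {**event, "metadata": metadata}
```

An id supplied by the client is kept as it is, so a producer that retries a request keeps the same identity for the same event.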

We also thought that we needed to take control of the offsets. Who knows how Kafka works? Anybody? Good, but not the majority. Kafka is a pub/sub system in which a producer writes data sequentially but, unlike classic messaging systems, nothing is stored per client.

No separate copies of a message are created for each client; the only thing a client needs is an offset, that is, a position in this endless stream. You can imagine Kafka as an endless stream of data in which each line is numbered. If your client wants to read data, it says: read from position X, and Kafka gives it the data starting from position X. This guarantees the ordering of the data, and it also means that the server does not have to store a lot of per-client information, as is usually done in classic messaging systems, which allow you to commit part of the events you have read. The flip side is that here you cannot commit just a piece of a block you have read. I have drifted off topic now; I did not mean to talk about Kafka, sorry.
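The numbered-stream picture can be shown with a toy in-memory log. This is a conceptual sketch only, not Kafka's API: `Log`, `append` and `read` are names made up for the example.

```python
class Log:
    """An append-only stream in which every record has a sequential number."""

    def __init__(self):
        self._records = []

    def append(self, record):
        """Producer side: write a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def read(self, offset, limit=100):
        """Consumer side: return up to `limit` records starting at `offset`."""
        return self._records[offset:offset + limit]


log = Log()
for name in ["a", "b", "c", "d"]:
    log.append(name)

# Two independent consumers; the log itself keeps no per-consumer state.
fast_offset, slow_offset = 3, 1
```

Each consumer only has to remember one integer, and any consumer can re-read old data simply by asking for a smaller offset.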

A high-level interface makes reading from Kafka very easy for clients. Clients should not have to exchange information about which partition they read from or which offsets they store. The client simply comes and receives what it needs from the system. We took the path of least resistance. ZooKeeper already exists for Kafka; no matter how terrible ZooKeeper is, we already have it and already have to manage it, so we use it to store offsets and additional information. PostgreSQL is for metadata and schema storage.
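A sketch of what such a high-level reader means for the client: the position is remembered on the server side under a name, so a restarted client continues where the previous one stopped. Everything here is hypothetical; a plain list stands in for a Kafka partition and a plain dict stands in for the ZooKeeper-backed offset storage.

```python
class Subscription:
    """Hypothetical high-level reader: the server side remembers the position."""

    def __init__(self, events, offset_store, name):
        self._events = events         # stands in for a Kafka partition
        self._offsets = offset_store  # stands in for the offset storage
        self._name = name

    def next_batch(self, limit=2):
        """Return the next unread events and advance the stored position."""
        start = self._offsets.get(self._name, 0)
        batch = self._events[start:start + limit]
        self._offsets[self._name] = start + len(batch)
        return batch


events = ["e0", "e1", "e2"]
offset_store = {}
reader = Subscription(events, offset_store, "billing")
```

For brevity the sketch advances the position as soon as a batch is handed out; a real system would more likely wait for the client to confirm that it has processed the batch.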

Now I want to tell you in which direction we are moving.

We are moving very fast. Therefore, when I return to Berlin, some parts will already be done.

At the moment, we have Nakadi Cluster and we have Nakadi UI, which we started writing in Elm to attract other people's interest. Elm is cool, I love it.

As the next step, we want to be able to manage multiple clusters. We have already run into trouble when a new producer arrives and starts writing 10 thousand events per second without warning anyone.

Our cluster cannot scale in time. We want to have different clusters for different types of data. We standardized the interface specifically so that there would be no tie to Kafka.

We can switch from Kafka to Redis, and from Redis to Kinesis. Essentially, the idea is to choose depending on the needs of the service and the properties of the events it writes: if someone does not care about ordering, they can use a system that does not support ordering and is more efficient than Kafka. At the moment, our interface gives us the ability to abstract this away.
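The abstraction can be pictured as one interface with interchangeable backends. The sketch below is an assumption about the shape of such an interface, not the project's design; the two in-memory classes merely stand in for an ordered system and for one that makes no ordering promise.

```python
from abc import ABC, abstractmethod
from collections import Counter


class EventBackend(ABC):
    """What the bus needs from a storage backend (hypothetical interface)."""

    @abstractmethod
    def publish(self, event_type, event): ...

    @abstractmethod
    def read(self, event_type): ...


class OrderedBackend(EventBackend):
    """Keeps events in arrival order, as a log-based system would."""

    def __init__(self):
        self._topics = {}

    def publish(self, event_type, event):
        self._topics.setdefault(event_type, []).append(event)

    def read(self, event_type):
        return list(self._topics.get(event_type, []))


class UnorderedBackend(EventBackend):
    """Keeps every event but promises no order (events must be hashable)."""

    def __init__(self):
        self._topics = {}

    def publish(self, event_type, event):
        self._topics.setdefault(event_type, Counter())[event] += 1

    def read(self, event_type):
        return list(self._topics.get(event_type, Counter()).elements())


def backend_for(needs_ordering):
    """Pick a backend from the declared properties of an event type."""
    return OrderedBackend() if needs_ordering else UnorderedBackend()
```

Producers and consumers only ever see `publish` and `read`, so which backend serves a given event type becomes a deployment decision rather than a code change.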

Nakadi Schema Manager needs to be pulled out of the cluster, because it must be baked. The next step is the idea that our schemas are discovered. That is, a microservice starts up, publishes its Swagger file and publishes a list of its events in the same format as Swagger. A crawler picks all of this up automatically, which removes the need for developers to additionally inject the schema into the message bus before deployment.

And of course a topology manager, so that producers and consumers can somehow be routed to different clusters. They said that Kafka works like an elephant. No, not like an elephant, but like a steam locomotive. In our situation, this engine breaks down all the time. I don't know who built this engine, but managing Kafka in AWS turned out to be not so simple.

We wrote the Bubuku system, a very good name, very Russian.

What does Bubuku do?

I had a large slide that showed what Bubuku does, but it turned out to be very large. Everything can be viewed here.

In short, Bubuku's goal is to do what others do not do with Kafka. The main ideas are automatic repartitioning, automatic scaling and the ability to survive lightning strikes and crazy monkeys that kill instances.

By the way, Chaos Monkey tests our system, and it all works very well. I recommend that everyone who writes microservices always think about how the system survives Chaos Monkey. This is a Netflix system that randomly kills nodes or disconnects the network and generally breaks your system.
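The idea behind this kind of testing fits in a few lines. The sketch below is a toy model, not the Netflix tool: `Cluster` and `chaos_round` are hypothetical names, and "killing" an instance just removes it from a set.

```python
import random


class Cluster:
    """Hypothetical service made of several interchangeable instances."""

    def __init__(self, size):
        self.alive = set(range(size))

    def kill(self, instance):
        self.alive.discard(instance)

    def handle(self, request):
        """Serve the request from any instance that is still alive."""
        if not self.alive:
            raise RuntimeError("no instance left to serve the request")
        return f"instance {min(self.alive)} served {request}"


def chaos_round(cluster, rng):
    """Kill one randomly chosen live instance, as a chaos tool would."""
    victim = rng.choice(sorted(cluster.alive))
    cluster.kill(victim)
    return victim
```

A test then runs a few chaos rounds and asserts that requests are still answered; passing a seeded `random.Random` keeps a failing run reproducible.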

Whatever system you build, if you do not test it this way, it will not keep working when something breaks.

Concluding my superficial story, I want to say that what I talked about is now being developed as open source. Why open source? We even wrote about why Zalando does open source.

When people write open source, they write not for the company but partly for themselves. Therefore, we see that the quality of the products is better, and we see that the products are better isolated from the infrastructure. Nobody writes zalando.de into the code, and nobody commits keys to Git.

We have principles on how to do open source. Does the question of whether or not to open source come up in your company? We have an open source first principle. Before starting a project, we think about whether it is worth open sourcing. To answer that, you need to answer these questions:

- Who needs this?

- Do we need this?

- Do we want to do this as an open source project?

- Can we keep what we keep in this public tree?

There are cases when you should not open source:

- If your project contains domain knowledge, that is, what makes your company your company, it cannot be open source, of course.

This is the last slide, here are the projects that were mentioned today:

If you go to zalando.github.io, there are a lot of projects for PostgreSQL and a lot of libraries for both the backend and the frontend; I highly recommend it.

I'm out of time.

Report: Events, Buses, and Data Integration in the Challenging World of Microservices