Incremental analysis in PVS-Studio: now also on the build server

Introduction

When introducing a static analyzer into an existing development process, a team may run into certain difficulties. For example, it is very useful to check modified code before it gets into the version control system. However, static analysis in this case may take quite a long time, especially on projects with a large code base. In this article, we will look at the incremental analysis mode of the PVS-Studio static analyzer, which checks only modified files and thus significantly reduces the time required for code analysis. As a result, developers can run static analysis as often as needed and minimize the risk of erroneous code getting into the version control system. The reason for writing the article was, firstly,

Scenarios for using a static analyzer

Any approach to improving software quality assumes that defects should be detected as early as possible. Ideally, code should be written without any errors at all, but so far only one corporation has successfully put this practice into place:

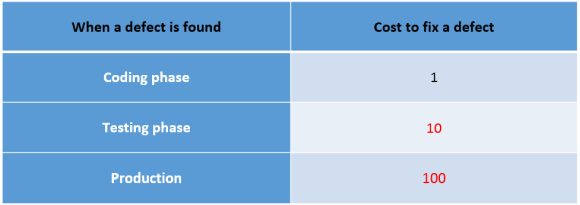

Why is it so important to detect and fix errors as early as possible? I will not dwell on such obvious things as the reputational risks that inevitably arise if users start finding defects in your software en masse. Let's focus on the economic side of fixing errors in code. We do not have statistics on the average cost of an error. Errors vary widely: they are detected at different stages of the software life cycle, and software is used in different domains, some critical to errors and some not. So, although the industry-average cost of fixing a defect at each stage of the software life cycle is unknown, you can estimate how this cost changes using the well-known "1-10-100" rule. Applied to software development, the rule states that if eliminating a defect at the development stage costs 1 unit, then after the code has been passed to testing it costs 10 units, and it grows to 100 units once the defective code has reached production.

There are many reasons for such a rapid increase in the price of fixing a defect, for example:

- Changes in one part of the code can affect many other parts of the application.

- Work that has already been done must be repeated: design changes, coding, documentation updates, etc.

- The corrected version must be delivered to users, who then have to be convinced to upgrade.

Understanding the importance of fixing errors at the earliest stages of the software life cycle, we offer our customers a two-level scheme for checking code with a static analyzer. The first level is checking the code on the developer's computer before it is committed to the version control system. That is, the developer writes a piece of code and immediately inspects it with the static analyzer. For this task, we have a plugin for Microsoft Visual Studio (all versions from 2010 to 2015 inclusive are supported). The plugin allows you to check one or more source files, one or more projects, or the entire solution.

The second level of protection is running static analysis during the nightly build of the project. This allows you to make sure that no new errors have been added to the version control system, or to take the necessary actions to fix them. To minimize the risk of errors reaching the later stages of the software life cycle, we suggest using both levels of static analysis: locally on developers' machines and on a centralized continuous integration server.

Such an approach, however, is not without drawbacks: it does not guarantee that errors the static analyzer can detect will stay out of the version control system or, at the very least, will be fixed before the build goes to testing. If developers are forced to run static analysis manually before every commit, this will certainly meet strong resistance. Firstly, nobody wants to sit and wait for a project with a large code base to be checked. Secondly, if a developer decides to save time and check only the files he has changed, he will have to keep track of those files, which, naturally, no one will do either. If we consider a build server on which, in addition to nightly builds, a build is also triggered whenever changes are detected in the version control system,

The incremental analysis mode, which checks only the most recently modified files, helps to solve these problems. Let us consider in more detail what advantages it brings when used on developers' computers and on a build server.

Incremental analysis on the developer's computer - a barrier to bugs in the version control system

If a development team decides to use static code analysis but performs it only on the build server, for example during nightly builds, sooner or later the developers will begin to perceive the static analyzer as an enemy. This is not surprising, because all team members will see the mistakes their colleagues make. We want all project participants to perceive the static analyzer as a friend and a useful tool that helps improve code quality. To keep errors that the static analyzer can detect from reaching the version control system and becoming visible to everyone, static analysis should also be performed on developers' computers, so that possible problems in the code are identified as early as possible.

As I already mentioned, manually running static analysis on the entire code base can take quite a while. Being forced to remember which source files you have worked on is also very annoying.

Incremental analysis both reduces the time needed for static analysis and eliminates the need to run the analysis manually. Incremental analysis starts automatically after a project or solution is built. We consider a build to be the most suitable trigger for the analysis: it is logical to make sure that the project builds before committing changes to the version control system. Thus, the incremental analysis mode removes the annoying chore of manually running static analysis on the developer's computer. Incremental analysis runs in the background, so the developer can keep working on the code without waiting for the check to complete.

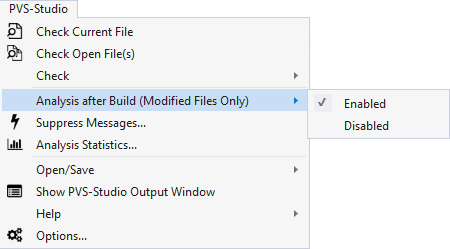

You can enable post-build incremental analysis via the PVS-Studio / Incremental Analysis After Build (Modified Files Only) menu item; this option is enabled by default:

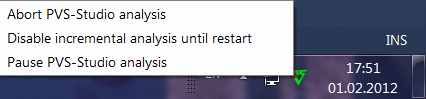

Once the incremental analysis mode is activated, PVS-Studio checks for modified files immediately after the project is built. If it detects such modifications, incremental analysis is launched automatically in the background, and an animated PVS-Studio icon appears in the Windows notification area.

Details of using incremental analysis are discussed in the article "PVS-Studio incremental analysis mode".

Incremental analysis on a continuous integration server - an additional barrier to bugs

The practice of continuous integration implies that the project is built on the build server after each commit to the version control system. As a rule, an existing set of unit tests is run in addition to the build. Many teams practicing continuous integration also use the build server for continuous quality control. For example, besides unit and integration tests, these processes may include static and dynamic analysis, performance measurement, etc.

One of the important requirements for tasks on a continuous integration server is that the project must be built and all additional steps completed quickly, so that the team can respond promptly to detected problems. Running static analysis on a large code base after each commit to the version control system contradicts this requirement, since it can take a very long time. We did not want to put up with this limitation, so we looked at our Visual Studio plugin, which has long had an incremental analysis mode, and asked ourselves: why not implement the same mode in the command-line module PVS-Studio_Cmd.exe?

No sooner said than done: our command-line module, which is designed to integrate the static analyzer into various build systems, now has an incremental analysis mode. This mode works the same way as incremental analysis in the plugin.

Thus, with incremental analysis support added to PVS-Studio_Cmd.exe, our static analyzer can now be used in a continuous integration system during numerous daily builds. Since only the files modified since the last code update from the version control system are checked, static analysis is performed very quickly and the build time of the project barely increases.

To activate the incremental analysis mode in the command-line module PVS-Studio_Cmd.exe, specify the --incremental flag and one of the following operation modes:

- Scan - analyze all dependencies to determine which files incremental analysis should be performed on. The analysis itself is not performed.

- Analyze - perform incremental analysis. This step must be run after the Scan step has completed, and it can be run either before or after the solution or project is built. Static analysis is performed only on the files modified since the last build.

- ScanAndAnalyze - analyze all dependencies to determine which files incremental analysis should be performed on, and immediately perform incremental analysis of the modified source files.
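To make the ordering constraint between Scan and Analyze concrete, here is a minimal C# sketch that only assembles the command lines of a hypothetical CI job; it does not run anything. Only the --incremental flag and the Scan and Analyze mode names come from the text above. The --target option (the solution to analyze), the msbuild step and the class name are assumptions for illustration, so check the options against the documentation for your PVS-Studio version.

```csharp
using System.Collections.Generic;

// Minimal sketch: assemble (but do not run) the commands of a CI job that
// splits incremental analysis into a Scan step and an Analyze step.
// Only "--incremental" and the mode names come from the article;
// "--target" and the msbuild step are assumptions for illustration.
public static class IncrementalPipelineSketch
{
    const string Analyzer = "PVS-Studio_Cmd.exe";

    static string Analyze(string solution, string mode) =>
        $"{Analyzer} --target \"{solution}\" --incremental {mode}";

    // Scan must run first; Analyze may run before or after the build,
    // and here it is placed after the build.
    public static List<string> SplitSteps(string solution) => new List<string>
    {
        Analyze(solution, "Scan"),
        $"msbuild \"{solution}\"",
        Analyze(solution, "Analyze"),
    };
}
```

A CI script would execute the returned commands in order and stop at the first failure.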

For more information about the incremental analysis mode in the command-line module PVS-Studio_Cmd.exe, refer to the articles "PVS-Studio Incremental Analysis Mode" and "Verifying Visual C++ (.vcxproj) and Visual C# (.csproj) projects from the command line with PVS-Studio".

I also want to note that the BlameNotifier utility included in the PVS-Studio distribution combines well with incremental analysis. This utility interacts with popular version control systems (currently Git, SVN and Mercurial are supported) to find out which developers committed code containing errors and sends notifications to those developers.

Therefore, we recommend the following scenario for using a static analyzer on a continuous integration server:

- for the numerous daily builds, perform incremental analysis to check the quality of the modified files only;

- for the nightly build, perform a full analysis of the entire code base to get complete information about defects in the code.
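The recommended policy boils down to one decision in the build script. In this minimal C# sketch, the BuildKind enum and the AnalysisPolicySketch class are hypothetical, and the --target option is an assumption; "--incremental ScanAndAnalyze" comes from the list of modes above. Omitting --incremental is taken to mean a full analysis of the whole target.

```csharp
using System;

// Hypothetical build categories on the CI server.
public enum BuildKind { Commit, Nightly }

// Minimal sketch of the recommended CI policy: incremental analysis for the
// many daytime builds, a full analysis for the nightly build.
// "--target" is an assumption; "--incremental ScanAndAnalyze" is from the article.
public static class AnalysisPolicySketch
{
    public static string AnalyzerArguments(BuildKind kind, string solution)
    {
        string args = $"--target \"{solution}\"";
        return kind switch
        {
            // Daytime builds: check only the modified files.
            BuildKind.Commit => args + " --incremental ScanAndAnalyze",
            // Nightly build: no --incremental flag, so the whole code base is analyzed.
            BuildKind.Nightly => args,
            _ => throw new ArgumentOutOfRangeException(nameof(kind)),
        };
    }
}
```

A nightly job would call AnalyzerArguments(BuildKind.Nightly, ...) and append the result to the PVS-Studio_Cmd.exe command line; commit-triggered jobs would pass BuildKind.Commit.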

Implementation details of the incremental analysis mode in PVS-Studio

As I already noted, the incremental analysis mode has existed in the PVS-Studio plugin for Visual Studio for a long time. In the plugin, the modified files for incremental analysis were determined using Visual Studio COM wrappers. This approach is not applicable at all to the command-line version of our analyzer, since that version is completely independent of Visual Studio's internal infrastructure. Maintaining different implementations of the same function in different components is not a good idea, so we immediately decided that the Visual Studio plugin and the command-line utility PVS-Studio_Cmd.exe would share a common code base.

Theoretically, detecting the files modified since the last build of the project is not particularly difficult. We need to obtain the modification time of the target binary file and the modification times of all files involved in building it. Source files modified later than the target file should be added to the list of files for incremental analysis. The real world, however, is much more complicated. In particular, for projects written in C or C++, it is very difficult to determine all the files involved in building the target file, for example, header files that are included directly in the code but are not listed in the project file. Here I want to note that under Windows both our plugin for Visual Studio (which is obvious) and the command-line version PVS-Studio_Cmd.exe support analysis of MSBuild projects only. This fact greatly simplified our task. It is also worth mentioning that incremental analysis can be used in the Linux version of PVS-Studio as well, where it works out of the box: when compilation monitoring is used, only the files that were actually compiled are analyzed. Accordingly, an incremental build will start incremental analysis; with direct integration into the build system (for example, into makefiles), the situation is similar.
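The naive timestamp comparison described at the beginning of the previous paragraph fits in a few lines. This C# sketch (the class and method names are hypothetical) is deliberately incomplete: it trusts the caller to pass every input file, which is exactly the hard part for C and C++ projects, where included headers may be missing from the project file.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Naive sketch of the "theoretical" approach: a source file needs
// re-analysis if it was written after the target binary. It ignores the
// hard part (discovering every header a .cpp file includes).
public static class NaiveIncrementalCheck
{
    public static List<string> ModifiedSince(string targetPath, IEnumerable<string> inputPaths)
    {
        // No target yet means the project was never built: analyze everything.
        if (!File.Exists(targetPath))
            return inputPaths.ToList();

        DateTime targetTime = File.GetLastWriteTimeUtc(targetPath);
        return inputPaths
            .Where(path => File.GetLastWriteTimeUtc(path) > targetTime)
            .ToList();
    }
}
```

If a header changes but is not passed in inputPaths, the .cpp files that include it are silently skipped; this is the gap that MSBuild's tracking logs close.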

MSBuild implements a mechanism for tracking file system access (File Tracking). For incremental builds of C and C++ projects, the correspondence between source files (for example, .cpp files and header files) and target files is recorded in *.tlog files. For example, for the CL task, the paths to all source files read by the compiler are written to the CL.read.{ID}.tlog file, and the paths to the target files are saved to the CL.write.{ID}.tlog file.
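To illustrate what the *.tlog files give us, here is a simplified C# sketch that reads the lines of a CL.read.*.tlog file into a dependency graph and flags the sources whose dependencies are newer than their outputs. The layout it assumes (a line starting with ^ names the root source files, separated by |, followed by the files read while compiling them) is how such files commonly look, not a documented contract, and the class is hypothetical; rather than parsing the files by hand, PVS-Studio relies on MSBuild's own classes for this.

```csharp
using System;
using System.Collections.Generic;

// Simplified sketch. Assumed CL.read.*.tlog layout (commonly observed, not a
// documented contract): a line starting with '^' names the root source
// file(s), separated by '|'; following lines list the files read for that root.
public static class TlogSketch
{
    // Builds a root -> dependencies map, ignoring case as Windows paths do.
    public static Dictionary<string, List<string>> ParseReadTlog(IEnumerable<string> lines)
    {
        var graph = new Dictionary<string, List<string>>(StringComparer.OrdinalIgnoreCase);
        List<string> current = null;
        foreach (var raw in lines)
        {
            var line = raw.Trim();
            if (line.Length == 0)
                continue;
            if (line[0] == '^')
            {
                // Root marker: all roots listed in it share the dependency list below.
                current = new List<string>();
                foreach (var root in line.Substring(1).Split('|'))
                    graph[root] = current;
            }
            else if (current != null)
            {
                current.Add(line);
            }
        }
        return graph;
    }

    // A root is stale if any file it read is newer than its output. The caller
    // supplies the timestamps, which keeps the sketch file-system independent.
    public static List<string> StaleRoots(
        Dictionary<string, List<string>> graph,
        Func<string, DateTime> inputTime,
        Func<string, DateTime> outputTime)
    {
        var stale = new List<string>();
        foreach (var entry in graph)
        {
            DateTime built = outputTime(entry.Key);
            if (entry.Value.Exists(dep => inputTime(dep) > built))
                stale.Add(entry.Key);
        }
        return stale;
    }
}
```

MSBuild's real logic also handles missing outputs, the write logs and composite rooting markers far more carefully, which is one reason to reuse it instead of a hand-written parser.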

So, the CL.*.tlog files already contain all the information about the compiled source files and the target files. The task is getting simpler. However, we would still have to iterate over all source and target files and compare their modification dates. Can it be simplified further? Of course! In the Microsoft.Build.Utilities namespace, we find the classes CanonicalTrackedInputFiles and CanonicalTrackedOutputFiles, which handle the CL.read.*.tlog and CL.write.*.tlog files, respectively. By creating instances of these classes and calling the CanonicalTrackedInputFiles.ComputeSourcesNeedingCompilation method, we get a list of source files that need to be compiled, based on the analysis of the target files and the dependency graph of the source files.

Here is an example of code that gets the list of files on which incremental analysis should be performed using our approach. In this example, sourceFiles is a collection of full normalized paths to all source files of the project, and tlogDirectoryPath is the path to the directory containing the *.tlog files:

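```csharp
using System.IO;
using System.Linq;
using Microsoft.Build.Framework;   // ITaskItem
using Microsoft.Build.Utilities;   // TaskItem, CanonicalTrackedInputFiles/OutputFiles

// sourceFiles: full normalized paths to all project source files;
// tlogDirectoryPath: directory containing the *.tlog files.

// Wrap every project source file in an MSBuild task item.
var sourceFileTaskItems = new ITaskItem[sourceFiles.Count];
for (var index = 0; index < sourceFiles.Count; index++)
    sourceFileTaskItems[index] = new TaskItem(sourceFiles[index]);

// Collect the compiler's write (output) and read (input) tracking logs.
var tLogWriteFiles = GetTaskItemsFromPath("CL.write.*", tlogDirectoryPath);
var tLogReadFiles = GetTaskItemsFromPath("CL.read.*", tlogDirectoryPath);

// Load the tracked outputs, then the tracked inputs together with the
// dependency graph recorded for each source file.
var trackedOutputFiles = new CanonicalTrackedOutputFiles(tLogWriteFiles);
var trackedInputFiles = new CanonicalTrackedInputFiles(tLogReadFiles,
    sourceFileTaskItems, trackedOutputFiles, false, false);

// Source files that MSBuild would recompile, i.e. the files to analyze.
ITaskItem[] sourcesForIncrementalBuild =
    trackedInputFiles.ComputeSourcesNeedingCompilation(true);

// A hypothetical implementation of the GetTaskItemsFromPath helper used
// above: wrap every file in the directory that matches the pattern.
static ITaskItem[] GetTaskItemsFromPath(string searchPattern, string directory) =>
    Directory.GetFiles(directory, searchPattern)
             .Select(path => (ITaskItem)new TaskItem(path))
             .ToArray();
```

Thus, by relying on standard MSBuild tools, we made the mechanism that selects files for incremental analysis identical to the internal mechanism MSBuild uses for incremental builds, which makes this approach highly reliable.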

Conclusion

In this article, we looked at the benefits of using incremental analysis on developers' computers and on a build server. We also looked under the hood and learned how to use MSBuild to determine which files incremental analysis should be performed on. I invite everyone interested in our product to download the trial version of the PVS-Studio analyzer and see what it can find in their projects. Quality code to everyone!

If you want to share this article with an English-speaking audience, then please use the link to the translation: Pavel Kuznetsov. Incremental analysis in PVS-Studio: now on the build server

Have you read the article and have a question?

Readers of our articles often ask the same questions. We have collected the answers here: Answers to questions from readers of articles about PVS-Studio, version 2015. Please take a look at the list.