The path to a contactless lie detector, or How to run a hackathon at maximum speed

Steve Jobs and Steve Wozniak once locked themselves in a garage and built the first Apple computer. It would be great if you could always lock programmers in a garage and get an MVP with great potential. However, if you add to the programmers a couple of people who can evaluate the user experience and look for something innovative, the chances of success grow.

Our team of five had an idea, and to take over the world just a little, we decided to run a hackathon of our own.

MVP Description

An application for HR managers that helps determine a candidate's psychotype and behavior patterns.

Required components:

- A database of questions and reaction types, plus a system for mapping them to a psychotype

- Recognition of human emotions based on AUs (action units), specific movements of the facial muscles

- Contactless pulse detection

- Determination of gaze direction

- Determination of blink rate

- A system that aggregates all of the data extracted above into a stress graph and other useful metadata

For us, the MVP is a safe island between the bare idea and the big "Anne" project we are heading toward.

How it went

To avoid any distractions during development, we decided to rent an apartment with meals included in the nearest resort town. We arrived on Sunday, set up our workspaces and immediately sat down for a discussion. On the very first night we identified the building blocks that definitely had to make it into the final MVP. While the other guys polished the idea and went into town to consult HR managers, the developers (myself included) were already laying the groundwork.

Technical part

We decided to recognize emotions strictly via FACS (Facial Action Coding System), since this method has solid scientific grounding compared to cruder approaches. Accordingly, the task was broken down into:

- Training a network that predicts 68 facial landmarks

- Normalization/filtering of the face image

- An algorithm that detects facial movements over time
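The article does not describe the movement-detection algorithm itself. As a hedged illustration of the general idea only, the sketch below turns each frame's 68 landmarks into a single scale-invariant number and tracks it over time. That number is a rough inner-brow-raise proxy. The index constants assume the common iBUG 300-W 68-point layout, which is an assumption on our part. The feature is a simplified geometric stand-in, not a FACS action-unit detector.

```python
import numpy as np

# Assumption: 0-based indices of the common 68-point (iBUG 300-W) layout.
RIGHT_EYE_OUTER, LEFT_EYE_OUTER = 36, 45
RIGHT_BROW_INNER, LEFT_BROW_INNER = 21, 22
RIGHT_EYE_TOP = [37, 38]  # upper-eyelid points of the right eye
LEFT_EYE_TOP = [43, 44]   # upper-eyelid points of the left eye


def inner_brow_raise(landmarks: np.ndarray) -> float:
    """Rough geometric proxy for an inner-brow raise in one frame.

    landmarks: (68, 2) array of (x, y) pixel coordinates, y pointing down.
    Returns the mean brow-to-eyelid gap divided by the interocular distance,
    so the value does not depend on how large the face is in the frame.
    """
    iod = np.linalg.norm(landmarks[LEFT_EYE_OUTER] - landmarks[RIGHT_EYE_OUTER])
    right_gap = landmarks[RIGHT_EYE_TOP, 1].mean() - landmarks[RIGHT_BROW_INNER, 1]
    left_gap = landmarks[LEFT_EYE_TOP, 1].mean() - landmarks[LEFT_BROW_INNER, 1]
    return float((right_gap + left_gap) / 2.0 / iod)


def feature_track(frames: np.ndarray) -> np.ndarray:
    """Apply the per-frame feature to a (T, 68, 2) landmark sequence -> (T,) signal."""
    return np.array([inner_brow_raise(f) for f in frames])
```

Dividing by the interocular distance is what makes the signal comparable across frames where the person leans closer to or further from the camera. Movements then show up as changes in the (T,) track.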

By the way, the training was done on a Radeon RX 580 with the help of PlaidML, which I already mentioned in my previous article. Special thanks go to the imgaug library, which can apply affine transformations simultaneously to an image and the points on it (in our case, the landmarks).

Some augmented images:

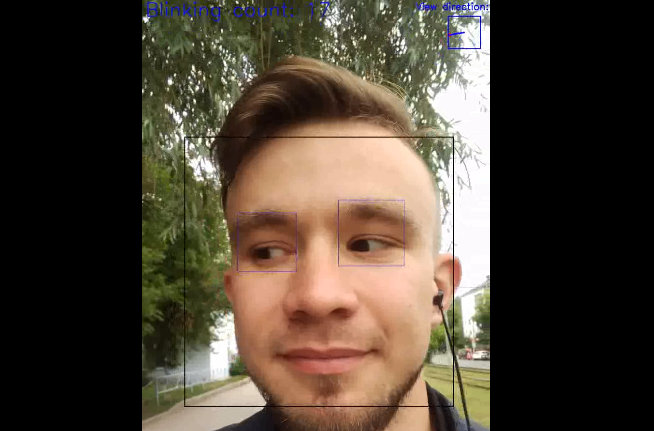
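What makes imgaug useful here is that the image and its keypoints are transformed with one and the same random affine matrix, so the labels stay aligned with the pixels. The hand-written NumPy sketch below reproduces that idea for a grayscale image. It is not imgaug code. The function names and the rotation and scale ranges in `augment` are illustrative assumptions.

```python
import numpy as np


def make_affine(angle_deg: float, scale: float, center: tuple) -> np.ndarray:
    """2x3 matrix that rotates by angle_deg and scales by `scale` around center (x, y)."""
    a = np.deg2rad(angle_deg)
    c, s = scale * np.cos(a), scale * np.sin(a)
    cx, cy = center
    # The translation column is chosen so that the center maps onto itself.
    return np.array([[c, -s, cx - c * cx + s * cy],
                     [s, c, cy - s * cx - c * cy]])


def transform_points(points: np.ndarray, m: np.ndarray) -> np.ndarray:
    """Apply a 2x3 affine matrix to an (N, 2) array of (x, y) landmarks."""
    return points @ m[:, :2].T + m[:, 2]


def warp_image(image: np.ndarray, m: np.ndarray) -> np.ndarray:
    """Warp a 2-D image with the same matrix (nearest neighbour, inverse mapping).

    Every output pixel looks up its source pixel, so the result has no holes;
    pixels whose source falls outside the image are set to 0.
    """
    h, w = image.shape
    inv = np.linalg.inv(np.vstack([m, [0.0, 0.0, 1.0]]))[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    dst = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)
    src = np.rint(transform_points(dst, inv)).astype(int)
    valid = (src[:, 0] >= 0) & (src[:, 0] < w) & (src[:, 1] >= 0) & (src[:, 1] < h)
    out = np.zeros(h * w, dtype=image.dtype)
    out[valid] = image[src[valid, 1], src[valid, 0]]
    return out.reshape(h, w)


def augment(image: np.ndarray, landmarks: np.ndarray, rng: np.random.Generator):
    """Random rotation/scale applied identically to the image and its landmarks."""
    h, w = image.shape
    m = make_affine(rng.uniform(-15, 15), rng.uniform(0.9, 1.1), (w / 2, h / 2))
    return warp_image(image, m), transform_points(landmarks, m)
```

The key design point is that `augment` builds the matrix once and hands it to both the image warp and the point transform. Sampling two separate random transforms would silently corrupt the landmark labels.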

To determine gaze direction, we originally used a classical computer vision algorithm that searched for the pupil in the eye region using HOG features. But we soon realized that the pupil is often not visible, and that gaze direction can be described not only by the pupil but also by the position of the eyelids. Because of these difficulties, we switched to a neural network approach. We cut and labeled the dataset ourselves: we ran it through the first algorithm and then manually corrected its mistakes.

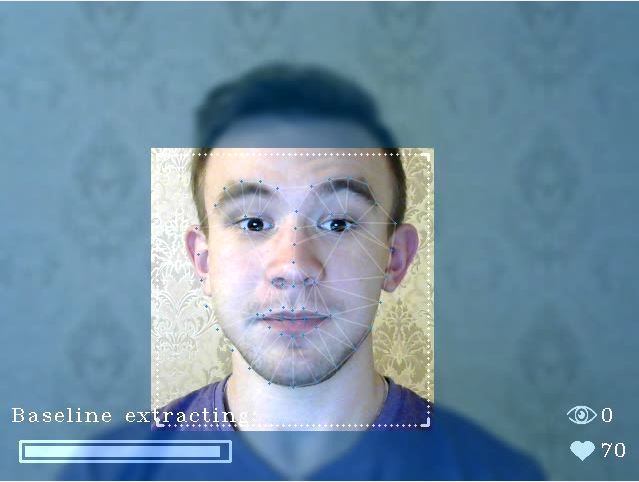
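The HOG-based detector itself is not shown in the article. As a stand-in, here is an even simpler classical technique: the centroid of the darkest blob in the eye crop. It is a swapped-in method, not the one the team used. It illustrates the failure mode described above. When the pupil is hidden, the crop has no dark blob, and the function has nothing to return. The thresholds are assumptions.

```python
import numpy as np


def locate_pupil(eye: np.ndarray, min_contrast: float = 40.0, min_pixels: int = 4):
    """Naive pupil finder for a grayscale eye crop of shape (H, W), values 0..255.

    Pixels darker than the midpoint between the darkest pixel and the median
    are treated as pupil. Returns the centroid as (x, y) normalised to 0..1
    within the crop, or None when there is no clearly dark blob
    (closed eye, pupil hidden by the eyelid, glare).
    """
    eye = eye.astype(float)
    darkest, typical = eye.min(), np.median(eye)
    if typical - darkest < min_contrast:
        return None
    ys, xs = np.nonzero(eye <= (darkest + typical) / 2.0)
    if len(xs) < min_pixels:
        return None
    h, w = eye.shape
    return float(xs.mean() / (w - 1)), float(ys.mean() / (h - 1))
```

A `None` result is exactly the case where a model that also looks at the eyelids can still say something about where the person is looking. That is the motivation for moving to a neural network.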

The first prototypes appeared in the summer and lived in a dirty Python script:

Blink rate is determined by combining parts of the two algorithms described above: the eyelid landmarks moving toward each other, and a downward gaze.
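One common way to express "eyelid landmarks moving toward each other" is the eye aspect ratio (EAR). The EAR is the ratio of the eye's vertical openings to its width. The sketch below counts blinks as short runs of low EAR. It assumes the gaze model supplies a per-frame `looking_down` flag, and that frames flagged this way are not counted as closed, because lowered eyelids during a downward glance also shrink the EAR. The threshold of 0.2 and the two-frame minimum are illustrative assumptions, not the team's values.

```python
import numpy as np


def eye_aspect_ratio(eye: np.ndarray) -> float:
    """EAR for six eye landmarks p1..p6 (indices 36-41 or 42-47 in the 68-point layout).

    p1/p4 are the eye corners, p2/p3 the upper lid, p6/p5 the lower lid.
    The value drops towards 0 as the eyelids close.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = np.linalg.norm(p2 - p6) + np.linalg.norm(p3 - p5)
    return float(vertical / (2.0 * np.linalg.norm(p1 - p4)))


def count_blinks(ear, looking_down=None, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold.

    Frames flagged in `looking_down` (assumed output of the gaze model) are
    not treated as closed, so a downward glance is not mistaken for a blink.
    """
    closed = np.asarray(ear, dtype=float) < threshold
    if looking_down is not None:
        closed &= ~np.asarray(looking_down, dtype=bool)
    blinks, run = 0, 0
    for is_closed in closed:
        if is_closed:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the sequence ended while the eye was still closed
        blinks += 1
    return blinks


def blink_rate_per_minute(blinks: int, n_frames: int, fps: float) -> float:
    """Convert a blink count over n_frames of video into blinks per minute."""
    return blinks * 60.0 * fps / n_frames
```

The minimum run length filters out single-frame landmark jitter. For example, 10 blinks over 600 frames at 30 fps (20 seconds) gives 30 blinks per minute.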

Pulse detection from the video stream is based on the fact that blood absorbs the green component of light. It is complemented by algorithms for tracking and extracting regions of interest (skin).

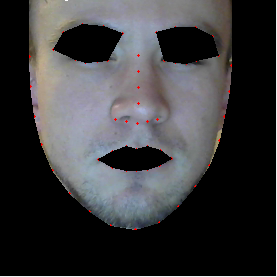
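Below is a minimal sketch of the green-channel idea in its simplest form. It averages the green channel over the skin region in every frame, removes slow drift, and picks the strongest frequency in a plausible heart-rate band of 0.7-4 Hz (42-240 BPM). This sketch makes several assumptions: the band, the linear detrending, the Hann window and the zero-padding. The team's tracking and skin-extraction steps are replaced here by a fixed boolean mask.

```python
import numpy as np


def green_trace(frames: np.ndarray, skin_mask: np.ndarray) -> np.ndarray:
    """Mean green value inside the skin mask for each frame.

    frames: (T, H, W, 3) RGB video; skin_mask: (H, W) boolean region of interest.
    """
    return frames[:, skin_mask, 1].mean(axis=1)


def estimate_bpm(trace, fps, low_hz=0.7, high_hz=4.0, pad_to=4096):
    """Dominant frequency of the trace within the heart-rate band, in beats per minute."""
    x = np.asarray(trace, dtype=float)
    t = np.arange(len(x))
    x = x - np.polyval(np.polyfit(t, x, 1), t)  # remove linear drift (lighting, slow motion)
    x = x * np.hanning(len(x))                  # taper the ends to reduce spectral leakage
    n = max(pad_to, len(x))                     # zero-padding gives a finer frequency grid
    spectrum = np.abs(np.fft.rfft(x, n=n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    return float(freqs[band][np.argmax(spectrum[band])] * 60.0)
```

A plain FFT peak like this is very sensitive to flickering light, whose frequency can fall inside the band. That matches one of the open problems listed further below.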

The mask looks eerie, of course:

In fact, building the blocks described above came down to implementing state-of-the-art algorithms, with modifications that improve accuracy in our particular case. Fortunately, there is arxiv.org.

The difficulties arose in building the logic for normalizing the face image and the algorithms for evaluating the obtained data. For example, face analysis commonly uses the Active Appearance Model: based on the detected points, the face is warped onto a common face texture. But what matters to us is the relative position of the points! One option is to filter out faces that are turned too far. Another is to warp onto the texture using only "anchors", key points that do not reflect muscle movement (for example, a point on the bridge of the nose and the edge of the face). This problem is currently one of the main ones and prevents us from obtaining reliable data when the face is rotated too far (we can compute the rotation angle, too!). The current permissible range is ±20° along both axes; beyond that, the face is simply not processed.
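One possible way to realize the "anchors only" idea is sketched below. Estimate a similarity transform (scale, rotation, translation) from the anchor points alone, using Umeyama's least-squares method, and apply it to all landmarks. Points moved by facial muscles then keep their displacement instead of being fitted away. The anchor indices, the reference anchor positions and the source of the yaw/pitch angles are hypothetical here. The pose check simply encodes the ±20° limit from the text.

```python
import numpy as np


def similarity_from_anchors(src: np.ndarray, dst: np.ndarray):
    """Least-squares similarity transform mapping src anchors onto dst anchors
    (Umeyama's method in 2-D). Returns (s, R, t) with dst ≈ s * src @ R.T + t."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    sc, dc = src - mu_s, dst - mu_d
    cov = dc.T @ sc / len(src)
    u, sig, vt = np.linalg.svd(cov)
    d = np.sign(np.linalg.det(u) * np.linalg.det(vt))  # guard against reflections
    D = np.diag([1.0, d])
    R = u @ D @ vt
    s = np.trace(np.diag(sig) @ D) / (sc ** 2).sum(axis=1).mean()
    t = mu_d - s * R @ mu_s
    return s, R, t


def normalize_face(landmarks, anchor_idx, reference_anchors):
    """Align all landmarks using only the anchor points (hypothetical anchor set)."""
    s, R, t = similarity_from_anchors(landmarks[anchor_idx], reference_anchors)
    return s * landmarks @ R.T + t


def within_pose_limits(yaw_deg, pitch_deg, limit_deg=20.0):
    """True if the head rotation is inside the permitted range (±20° in the article)."""
    return abs(yaw_deg) <= limit_deg and abs(pitch_deg) <= limit_deg
```

A similarity transform can only undo in-plane rotation, scale and shift. Out-of-plane rotation (yaw and pitch) distorts the relative point positions in a way it cannot correct. That is why frames outside the angle limit are dropped rather than normalized.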

Of course, there are other problems:

- Detecting landmarks when a person wears glasses

- Extracting the baseline when the person is grimacing

- Detecting the pulse under strongly flickering light

Oh yes, and what is the "baseline"? It is a fundamental concept in processing emotions with FACS methods: the person's neutral state, against which facial movements are compared. The baseline extraction algorithm is probably one of the most important pieces of know-how to come out of our hackathon.
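The team's baseline algorithm is its own know-how and is not described in the article. The sketch below is only a generic, hypothetical illustration of the concept. It takes per-frame features from a calibration window assumed to show the neutral state and stores a robust center (median) and spread (MAD) for each feature. Later frames are then scored by how far they depart from that baseline. The calibration window, the 3.0 threshold and the scale floor are assumptions.

```python
import numpy as np


def fit_baseline(features: np.ndarray, min_scale: float = 1e-3):
    """Per-feature baseline from frames assumed to show the person's neutral state.

    features: (T, F) array, e.g. normalised landmark distances per frame.
    Median and MAD are used instead of mean and std so that a few grimaces
    inside the calibration window barely move the baseline.
    """
    center = np.median(features, axis=0)
    mad = np.median(np.abs(features - center), axis=0)
    # 1.4826 * MAD estimates the std for Gaussian data; the floor avoids
    # dividing by ~0 for features that did not vary during calibration.
    scale = np.maximum(1.4826 * mad, min_scale)
    return center, scale


def deviation_scores(features, center, scale):
    """Robust z-scores of each frame's features relative to the baseline."""
    return (np.asarray(features) - center) / scale


def active_frames(features, center, scale, z_thresh=3.0):
    """True for frames where at least one feature departs strongly from the baseline."""
    return np.any(np.abs(deviation_scores(features, center, scale)) > z_thresh, axis=1)
```

The robust statistics are what address the "person is grimacing" problem listed above, but only partly. A single grimace in the calibration window is ignored. A person who grimaces most of the time still shifts the baseline.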

Besides the algorithms, there was another important point we could not forget: performance. And the performance ceiling is not even a desktop PC, but an ordinary laptop. As a result, all algorithms are kept as lightweight as possible, and the networks are iteratively shrunk while keeping acceptable accuracy.

The result is 30-40% CPU load on an Intel i5 at 15-20 fps. Clearly, this leaves some headroom, which will disappear as additional modules are added.
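To make the frame-rate figure concrete: at frame rate f, all processing for one frame must fit into a budget of 1/f. Assuming the modules run one after another on each frame, their individual times t_i must add up to no more than that budget. Every new module eats into the same 50-67 ms.

```latex
t_{\text{frame}} = \frac{1}{f}, \qquad
t_{\text{frame}}\big|_{f = 20\ \text{fps}} = 50\ \text{ms}, \qquad
t_{\text{frame}}\big|_{f = 15\ \text{fps}} \approx 66.7\ \text{ms}, \qquad
\sum_{i} t_i \le t_{\text{frame}}
```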

We plan to detect:

- Sore throat

- Changes in face color

- Respiratory rate

- Intensity of body movements

- Posture patterns

- A trembling voice

What else can we do?

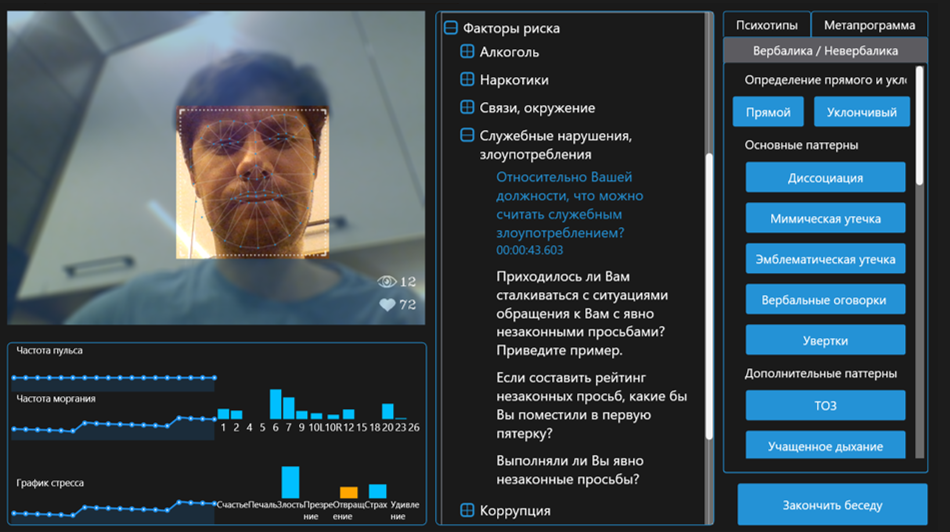

As a fan of computer vision and ML, I have told you a little about the algorithms used in our software. But since they are still incomplete, in this application these capabilities are more of a pleasant addition. The most important part is the system we developed for determining a person's psychotype. What is its essence? Unfortunately, my colleagues (and friends!) worked on it, and I would not be able to explain where it all comes from. But for a basic understanding, you can look at how the resulting software is used:

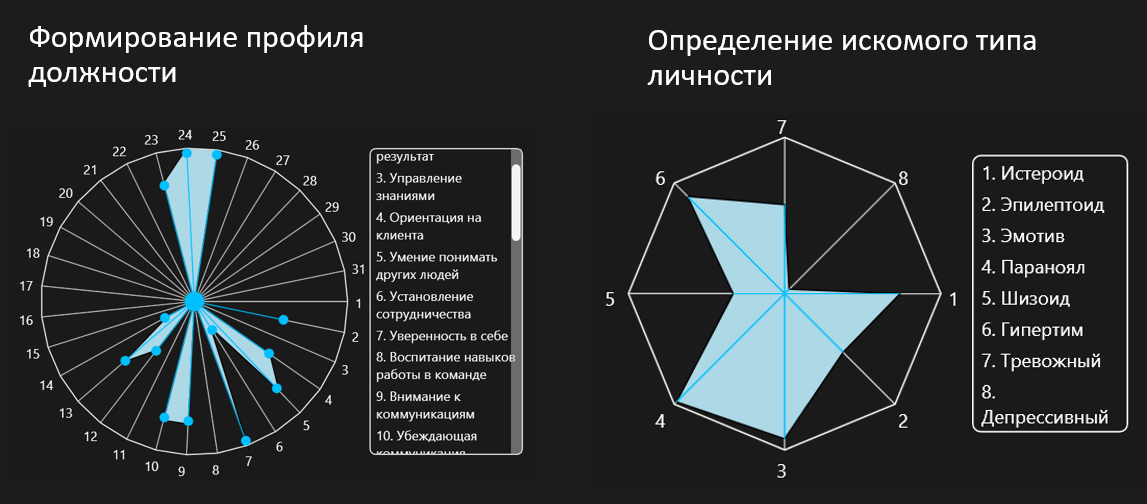

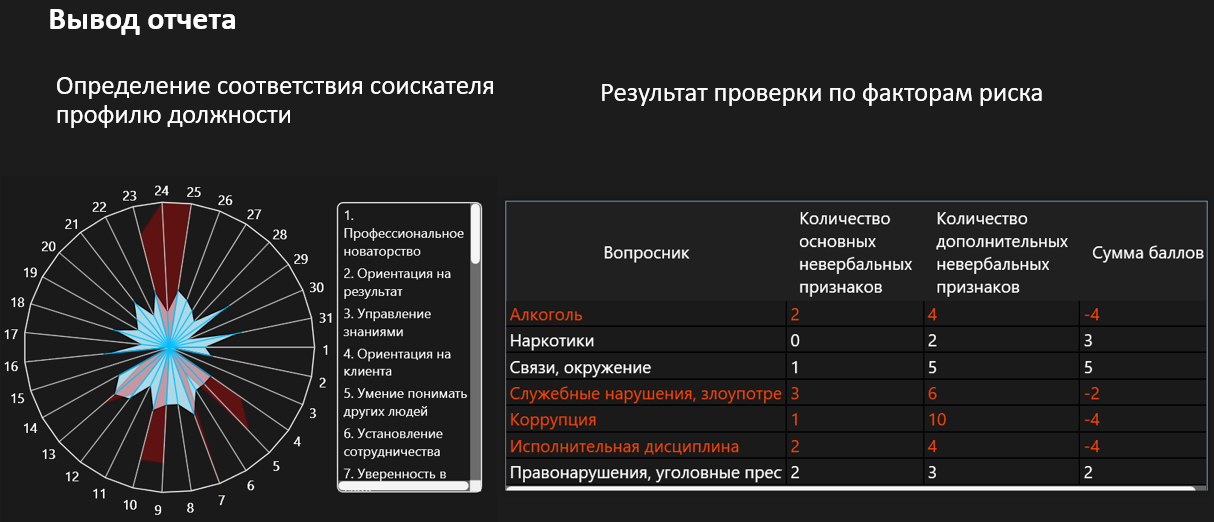

HR specifies the qualities that are especially important for the position in question:

HR conducts the interview, asking some of the questions from the prepared database (during the interview, HR also sees additional information about the candidate's emotions and stress level).

During or after the interview, HR fills in the answers to the questions and the observed behavior patterns:

Using the matrices we developed, the software builds an infographic showing how well the specified qualities match the detected ones:

After the interview, a recording remains that lets you return to the interview at any time and review any particular moment.
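The article does not disclose how the team's matrices work. Purely as a hypothetical illustration of "matching specified and detected qualities", the sketch below makes the following assumptions. HR's filled-in answers and patterns form a score vector. A non-negative weight matrix says how strongly each item speaks for each quality. The resulting profile is compared with the levels HR marked as required. All names, shapes and the capping rule are assumptions of this sketch.

```python
import numpy as np


def quality_profile(answers: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Map interview items to qualities (hypothetical stand-in for the team's matrices).

    answers: (n_items,) scores in [0, 1] filled in by HR.
    weights: (n_items, n_qualities) non-negative item-to-quality weights.
    Returns a (n_qualities,) profile in [0, 1].
    """
    totals = weights.sum(axis=0)
    return (answers @ weights) / np.where(totals > 0, totals, 1.0)


def match_report(profile: np.ndarray, required: np.ndarray) -> dict:
    """Compare the detected profile with the qualities HR marked as required.

    required: (n_qualities,) target levels in [0, 1]; 0 means "not needed".
    Coverage is capped at 1 so that an excess in one quality cannot
    compensate for a shortfall in another.
    """
    needed = required > 0
    coverage = np.minimum(profile[needed] / required[needed], 1.0)
    overall = float(coverage.mean()) if coverage.size else 1.0
    return {"per_quality": coverage, "overall": overall}
```

The per-quality coverage is the kind of data an infographic could plot directly. The single overall number is only a convenience summary.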

Summary

14 days x 12 hours + 3 developers + 2 lie detection specialists = a ready MVP. The immersion was total, to the point that at lunchtime we watched the TV series Lie to Me, which I highly recommend.

So as not to be unfounded, here is an example of how the application works now:

As well as a promotional video of the big "Anne" solution we are working toward.