Deep learning for beginners: fine tuning the neural network

- Transfer

- Tutorial

Introduction

Introducing the third (and final) article in a series designed to help you quickly understand deep learning technology ; we will move from basic principles to non-trivial features in order to get decent performance on two data sets: MNIST (classification of handwritten numbers) and CIFAR-10 (classification of small images into ten classes: plane, car, bird, cat, deer, dog, frog , horse, ship and truck).

Last time, we looked at a convolutional neural network model and showed how, using a simple but effective regularization method called dropout, you can quickly achieve 78.6% accuracy using the Keras deep learning network framework.

Now you have the basic skills necessary to apply deep learning to most interesting tasks (the exception is the task of processing non - linear time series , the consideration of which is beyond the scope of this guide and for the solution of which recurrent neural networks are usually preferable(RNN). The final part of this guide will contain something that is very important, but often missed in such articles - the tricks and tricks of fine-tuning the model in order to teach it to generalize better than the basic model with which you started.

This part of the manual assumes familiarity with the first and second articles of the cycle.

Hyperparameter setup and base model

Usually, the process of developing a neural network begins with the development of a simple network, either directly using those architectures that have already been successfully used to solve such problems, or using those hyperparameters that previously yielded good results. In the end, we hope that we will achieve a level of performance that will serve as a good starting point, after which we can try to change all the fixed parameters and extract maximum performance from the network. This process is commonly referred to as tuning hyperparameters , because it involves changing the network components that must be installed before training begins.

Although the method described here may provide more tangible benefits on CIFAR-10, due to the relative complexity of quickly prototyping on it in the absence of a graphics processor, we will focus on improving its performance on MNIST. Of course, if resources allow, I urge you to try these methods on CIFAR and see for yourself how much they benefit compared to the standard CNN approach.

The starting point for us will be the original CNN, presented below. If any code fragments seem incomprehensible to you, I suggest that you familiarize yourself with the previous two parts of this series, which describes all the basic principles.

Base Model Code

from keras.datasets import mnist # subroutines for fetching the MNIST dataset

from keras.models import Model # basic class for specifying and training a neural network

from keras.layers import Input, Dense, Flatten, Convolution2D, MaxPooling2D, Dropout

from keras.utils import np_utils # utilities for one-hot encoding of ground truth values

batch_size = 128 # in each iteration, we consider 128 training examples at once

num_epochs = 12 # we iterate twelve times over the entire training set

kernel_size = 3 # we will use 3x3 kernels throughout

pool_size = 2 # we will use 2x2 pooling throughout

conv_depth = 32 # use 32 kernels in both convolutional layers

drop_prob_1 = 0.25 # dropout after pooling with probability 0.25

drop_prob_2 = 0.5 # dropout in the FC layer with probability 0.5

hidden_size = 128 # there will be 128 neurons in both hidden layers

num_train = 60000 # there are 60000 training examples in MNIST

num_test = 10000 # there are 10000 test examples in MNIST

height, width, depth = 28, 28, 1 # MNIST images are 28x28 and greyscale

num_classes = 10 # there are 10 classes (1 per digit)

(X_train, y_train), (X_test, y_test) = mnist.load_data() # fetch MNIST data

X_train = X_train.reshape(X_train.shape[0], depth, height, width)

X_test = X_test.reshape(X_test.shape[0], depth, height, width)

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

X_train /= 255 # Normalise data to [0, 1] range

X_test /= 255 # Normalise data to [0, 1] range

Y_train = np_utils.to_categorical(y_train, num_classes) # One-hot encode the labels

Y_test = np_utils.to_categorical(y_test, num_classes) # One-hot encode the labels

inp = Input(shape=(depth, height, width)) # N.B. Keras expects channel dimension first

# Conv [32] -> Conv [32] -> Pool (with dropout on the pooling layer)

conv_1 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', activation='relu')(inp)

conv_2 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', activation='relu')(conv_1)

pool_1 = MaxPooling2D(pool_size=(pool_size, pool_size))(conv_2)

drop_1 = Dropout(drop_prob_1)(pool_1)

flat = Flatten()(drop_1)

hidden = Dense(hidden_size, activation='relu')(flat) # Hidden ReLU layer

drop = Dropout(drop_prob_2)(hidden)

out = Dense(num_classes, activation='softmax')(drop) # Output softmax layer

model = Model(input=inp, output=out) # To define a model, just specify its input and output layers

model.compile(loss='categorical_crossentropy', # using the cross-entropy loss function

optimizer='adam', # using the Adam optimiser

metrics=['accuracy']) # reporting the accuracy

model.fit(X_train, Y_train, # Train the model using the training set...

batch_size=batch_size, nb_epoch=num_epochs,

verbose=1, validation_split=0.1) # ...holding out 10% of the data for validation

model.evaluate(X_test, Y_test, verbose=1) # Evaluate the trained model on the test set!Training Listing

Train on 54000 samples, validate on 6000 samples

Epoch 1/12

54000/54000 [==============================] - 4s - loss: 0.3010 - acc: 0.9073 - val_loss: 0.0612 - val_acc: 0.9825

Epoch 2/12

54000/54000 [==============================] - 4s - loss: 0.1010 - acc: 0.9698 - val_loss: 0.0400 - val_acc: 0.9893

Epoch 3/12

54000/54000 [==============================] - 4s - loss: 0.0753 - acc: 0.9775 - val_loss: 0.0376 - val_acc: 0.9903

Epoch 4/12

54000/54000 [==============================] - 4s - loss: 0.0629 - acc: 0.9809 - val_loss: 0.0321 - val_acc: 0.9913

Epoch 5/12

54000/54000 [==============================] - 4s - loss: 0.0520 - acc: 0.9837 - val_loss: 0.0346 - val_acc: 0.9902

Epoch 6/12

54000/54000 [==============================] - 4s - loss: 0.0466 - acc: 0.9850 - val_loss: 0.0361 - val_acc: 0.9912

Epoch 7/12

54000/54000 [==============================] - 4s - loss: 0.0405 - acc: 0.9871 - val_loss: 0.0330 - val_acc: 0.9917

Epoch 8/12

54000/54000 [==============================] - 4s - loss: 0.0386 - acc: 0.9879 - val_loss: 0.0326 - val_acc: 0.9908

Epoch 9/12

54000/54000 [==============================] - 4s - loss: 0.0349 - acc: 0.9894 - val_loss: 0.0369 - val_acc: 0.9908

Epoch 10/12

54000/54000 [==============================] - 4s - loss: 0.0315 - acc: 0.9901 - val_loss: 0.0277 - val_acc: 0.9923

Epoch 11/12

54000/54000 [==============================] - 4s - loss: 0.0287 - acc: 0.9906 - val_loss: 0.0346 - val_acc: 0.9922

Epoch 12/12

54000/54000 [==============================] - 4s - loss: 0.0273 - acc: 0.9909 - val_loss: 0.0264 - val_acc: 0.9930

9888/10000 [============================>.] - ETA: 0s

[0.026324689089493085, 0.99119999999999997]As you can see, our model achieves 99.12% accuracy on the test set. This is slightly better than the results of the MLP, discussed in the first part, but we still have room to grow!

In this guide, we will share ways to improve such “basic” neural networks (without departing from the CNN architecture), and then evaluate the performance gain that we will get.

-regularization

-regularization

In a previous article, we said that one of the main problems of machine learning is the problem of overfitting , when the model, in pursuit of minimizing the cost of training, loses its ability to generalize.

As already mentioned, there is an easy way to keep retraining in check - the dropout method .

But there are other regularizers that can be applied to our network. Perhaps the most popular of these is

Please note that it is extremely important to choose the right λ. If the coefficient is too small, then the effect of regularization will be negligible, if it is too large, the model will reset all weights. Here we take λ = 0.0001; to add this regularization method to our model, we need one more import, after which it’s enough just to add the parameter

W_regularizer to each layer where we want to apply regularization.from keras.regularizers import l2 # L2-regularisation

# ...

l2_lambda = 0.0001

# ...

# This is how to add L2-regularisation to any Keras layer with weights (e.g. Convolution2D/Dense)

conv_1 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', W_regularizer=l2(l2_lambda), activation='relu')(inp)Network initialization

One of the points that we overlooked in the previous article is the principle of choosing the initial values of weights for the layers that make up the model. Obviously, this question is very important: setting all weights to 0 will be a serious obstacle to learning, since none of the weights will initially be active. Assigning weights to the values from the interval ± 1 is also usually not the best option - in fact, sometimes (depending on the task and the complexity of the model) it may depend on the correct initialization of the model, whether it will reach the highest performance or not converge at all. Even if the task does not involve such an extreme, a well-chosen method of initializing weights can significantly affect the model’s ability to learn, since it presets the model parameters taking into account the loss function.

Here are two of the most interesting methods. Xavier

initialization method (sometimes - Glorot's method). The main idea of this method is to simplify the passage of the signal through the layer during both forward and backward propagation of the error for the linear activation function (this method also works well for the sigmoid function, since the region where it is unsaturated also has a linear character). When calculating weights, this method relies on a probability distribution (uniform or normal) with a dispersion equal to , where and are the numbers of neurons in the previous and subsequent layers, respectively. Initialization Method Ge (He)

This is a variation of the Zawier’s method, more suitable for the ReLU activation function , compensating for the fact that this function returns zero for half of the domain of definition. Namely, in this case, in

order to obtain the required variance for Zawier’s initialization, we consider what happens to the dispersion of the output values of a linear neuron (without a bias component), assuming that the weights and input values do not correlate and have zero expectation :

It follows from this that in order to preserve the variance of the input data after passing through the layer, it is necessary that the variance be

These two methods are suitable for most of the examples that you come across (although the orthogonal initialization method also deserves research , especially with respect to recurrent networks). It is not difficult to specify the initialization method for the layer: you just need to specify the parameter

initas described below. We will use the uniform initialization of Ge ( he_uniform) for all ReLU layers and the uniform initialization of Zawier ( glorot_uniform) for the output softmax layer (since in essence it is a generalization of the logistic function to multiple similar data).# Add He initialisation to a layer

conv_1 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', init='he_uniform', W_regularizer=l2(l2_lambda), activation='relu')(inp)

# Add Xavier initialisation to a layer

out = Dense(num_classes, init='glorot_uniform', W_regularizer=l2(l2_lambda), activation='softmax')(drop)Batch normalization

Batch normalization is a deep learning acceleration method proposed by Ioffe and Szegedy at the beginning of 2015, already quoted on arXiv 560 times! The method solves the following problem that impedes the effective learning of neural networks: as the signal propagates through the network, even if we normalize it at the input, passing through the inner layers, it can be greatly distorted both by the mean and variance (this phenomenon is called internal covariance shift ), which is fraught with serious discrepancies between the gradients at different levels. Therefore, we have to use stronger regularizers, thereby slowing down the pace of learning.

Batch normalization offers a very simple solution to this problem: normalizeinput data in such a way as to obtain zero expectation and unit variance. Normalization is performed before entering each layer. This means that during the training we normalize the

batch_sizeexamples, and during testing we normalize the statistics obtained on the basis of the entire training set, since we cannot see test data in advance. Namely, we calculate the expectation and variance for a specific batch (package) Using these statistical characteristics, we transform the activation function in such a way that it has zero expectation and a single dispersion throughout the whole batch:

where ε> 0 is the parameter that protects us from dividing by 0 (in case the standard deviation of the batch is very small or even equal to zero). Finally, in order to get the final activation function y , we need to make sure that during normalization we did not lose the ability to generalize, and since we applied scaling and shifting operations to the original data, we can allow arbitrary scaling and shifting of normalized values to obtain the final function activation:

Where β and γ are the parameters of the batch normalization with which the systems can be trained (they can be optimized by the gradient descent method on the training data). This generalization also means that patch normalization can be useful to apply directly to the input of a neural network.

This method, when applied to deep convolution networks, almost always successfully reaches its goal - to accelerate learning. Moreover, it can happen to be an excellent regularizer , allowing you to choose the pace of training, the power of the

And finally, although the authors of the method recommend applying normalization to normal before the activation function of a neuron, recent studies show that if it is not more useful, then at least it is also beneficial to use it after activation, which we will do as part of this guide.

In Keras, adding batch normalization to your network is very simple: it is responsible for the layer

BatchNormalizationto which we will pass several parameters, the most important of which is axis(along which axis of the data the statistical characteristics will be calculated). In particular, while working with convolutional layers, we better normalize along individual channels, therefore, we choose axis=1.from keras.layers.normalization import BatchNormalization # batch normalisation

# ...

inp_norm = BatchNormalization(axis=1)(inp) # apply BN to the input (N.B. need to rename here)

# conv_1 = Convolution2D(...)(inp_norm)

conv_1 = BatchNormalization(axis=1)(conv_1) # apply BN to the first conv layerExtension of the training set (data augmentation)

While the methods described above concerned mainly fine-tuning the model itself , it can be useful to study data tuning options , especially when it comes to image recognition tasks.

Imagine that we trained a neural network to recognize handwritten numbers that were about the same size and neatly aligned. Now let's imagine what happens if someone gives this network testing slightly shifted numbers of different sizes and inclinations - then its confidence in the right class will drop sharply. Ideally, it would be nice to be able to train the network in such a way that it remains resistant to such distortions, but our model can be trained only on the basis of those samples that we have provided to her, despite the fact that she carries out some kind of statistical analysis of the training set and extrapolates it.

Fortunately, there is a solution for this problem that is simple but effective, especially on image recognition tasks: artificially expand the training data with distorted versions during training! This means the following: before setting an example for the input of the model, we apply all the transformations that we consider necessary to it, and then let the network directly observe what effect it has when applied to the data and teaching it to “behave well” on these examples. For example, here are a few examples of shifted, scaled, warped, tilted digits from the MNIST set.

Keras provides a wonderful interface for expanding the learning set - the class

ImageDataGenerator. We initialize the class, telling it what kind of transformation we want to apply to the images, and then run the training data through the generator, calling the method fit, and then the method flow, getting a continuously expanding iterator for the batch that we replenish. There is even a special method model.fit_generatorthat will teach our model using this iterator, which greatly simplifies the code. There is a small drawback: this is how we lose the parameter validation_split, which means that we will have to separate the validation subset of the data ourselves, but this only takes four lines of code. Here we will use random horizontal and vertical shifts.

ImageDataGeneratoralso provides us with the ability to perform random rotations, scaling, warping and mirroring. All these transformations are also worth a try, except perhaps a mirror image, since in real life we are unlikely to encounter manuscript figures expanded in this way.from keras.preprocessing.image import ImageDataGenerator # data augmentation

# ... after model.compile(...)

# Explicitly split the training and validation sets

X_val = X_train[54000:]

Y_val = Y_train[54000:]

X_train = X_train[:54000]

Y_train = Y_train[:54000]

datagen = ImageDataGenerator(

width_shift_range=0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.1) # randomly shift images vertically (fraction of total height)

datagen.fit(X_train)

# fit the model on the batches generated by datagen.flow()---most parameters similar to model.fit

model.fit_generator(datagen.flow(X_train, Y_train,

batch_size=batch_size),

samples_per_epoch=X_train.shape[0],

nb_epoch=num_epochs,

validation_data=(X_val, Y_val),

verbose=1)Ensembles

One interesting feature of neural networks that can be seen when they are used to distribute data into more than two classes is that, under different initial learning conditions, they are more easily allocated to one class, while others are confused. Using MNIST as an example, one can find that a single neural network can perfectly distinguish triples from fives, but does not learn how to separate units from sevens correctly, while the opposite is the case with dividing from another network.

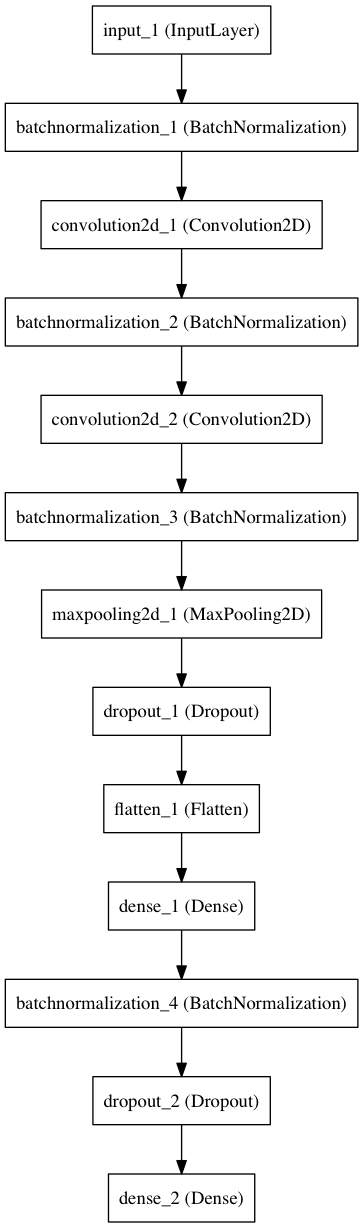

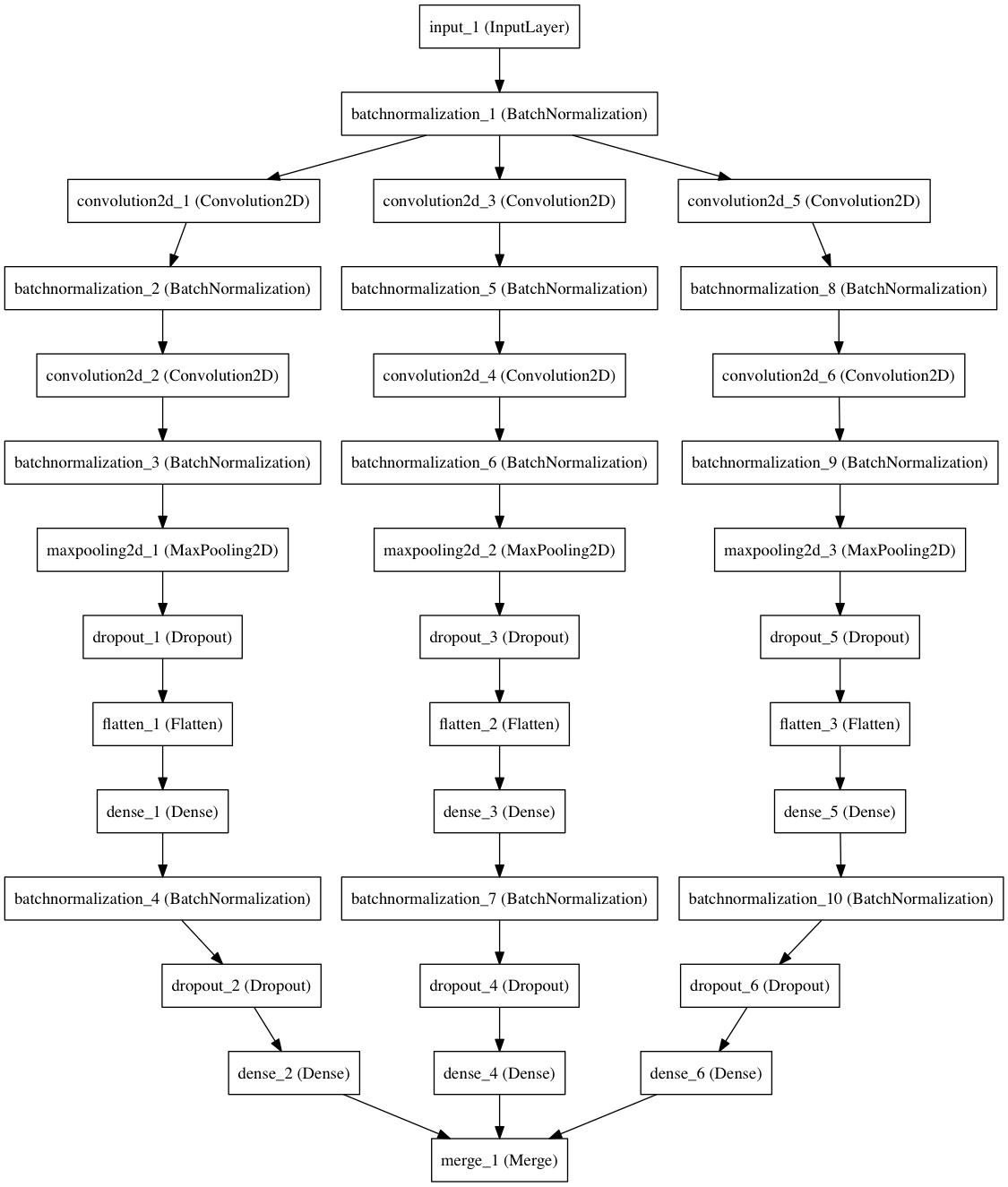

This discrepancy can be dealt with using the method of statistical ensembles - place several copies of one networkwith different initial values and calculate their average result on the same input data. Here we will build three separate models. The differences between them can easily be represented in the form of a diagram, also built in Keras.

Core network

Ensemble

And again, Keras allows you to implement your plan by adding a minimal amount of code - we’ll wrap up the method of constructing the components of the model in a cycle, combining their results in the last layer

merge.from keras.layers import merge # for merging predictions in an ensemble

# ...

ens_models = 3 # we will train three separate models on the data

# ...

inp_norm = BatchNormalization(axis=1)(inp) # Apply BN to the input (N.B. need to rename here)

outs = [] # the list of ensemble outputs

for i in range(ens_models):

# conv_1 = Convolution2D(...)(inp_norm)

# ...

outs.append(Dense(num_classes, init='glorot_uniform', W_regularizer=l2(l2_lambda), activation='softmax')(drop)) # Output softmax layer

out = merge(outs, mode='ave') # average the predictions to obtain the final outputEarly stopping

I will describe here another method as an introduction to the wider field of optimization of hyperparameters . So far, we have used a validated data set exclusively for monitoring the progress of training, which, no doubt, is not rational (since this data is not used for constructive purposes). In fact, the validation set can serve as a basis for evaluating network hyperparameters (such as depth, number of neurons / nuclei, regularization parameters, etc.). Imagine that a network is run with various combinations of hyperparameters, and then a decision is made based on their performance on the validation set. Please note that we do not need to know anything about the test set before finallylet's define hyperparameters, because otherwise the signs of the test set will involuntarily pour into the learning process. This principle is also known as the golden rule of machine learning , and has been violated in many early approaches.

Perhaps the easiest way to use the validation set is to set the number of “ eras ” (cycles) using a procedure known as early stop - just stop the learning process if the losses do not begin to decrease over a given number of eras (parameter patience). Since our data set is relatively small and quickly saturated, we will set patience to five epochs, and increase the maximum number of epochs to 50 (this number is unlikely to ever be reached).

The early stop mechanism is implemented in Keras through a class of callback functions

EarlyStopping. Callback functions are called after each training epoch using a parameter callbackspassed to fitor fit_generator. As usual, everything is very compact: our program grows by only one line of code.from keras.callbacks import EarlyStopping

# ...

num_epochs = 50 # we iterate at most fifty times over the entire training set

# ...

# fit the model on the batches generated by datagen.flow()---most parameters similar to model.fit

model.fit_generator(datagen.flow(X_train, Y_train,

batch_size=batch_size),

samples_per_epoch=X_train.shape[0],

nb_epoch=num_epochs,

validation_data=(X_val, Y_val),

verbose=1,

callbacks=[EarlyStopping(monitor='val_loss', patience=5)]) # adding early stoppingJust show me the code

After applying the six optimization techniques described above, the code of our neural network will look as follows.

The code

from keras.datasets import mnist # subroutines for fetching the MNIST dataset

from keras.models import Model # basic class for specifying and training a neural network

from keras.layers import Input, Dense, Flatten, Convolution2D, MaxPooling2D, Dropout, merge

from keras.utils import np_utils # utilities for one-hot encoding of ground truth values

from keras.regularizers import l2 # L2-regularisation

from keras.layers.normalization import BatchNormalization # batch normalisation

from keras.preprocessing.image import ImageDataGenerator # data augmentation

from keras.callbacks import EarlyStopping # early stopping

batch_size = 128 # in each iteration, we consider 128 training examples at once

num_epochs = 50 # we iterate at most fifty times over the entire training set

kernel_size = 3 # we will use 3x3 kernels throughout

pool_size = 2 # we will use 2x2 pooling throughout

conv_depth = 32 # use 32 kernels in both convolutional layers

drop_prob_1 = 0.25 # dropout after pooling with probability 0.25

drop_prob_2 = 0.5 # dropout in the FC layer with probability 0.5

hidden_size = 128 # there will be 128 neurons in both hidden layers

l2_lambda = 0.0001 # use 0.0001 as a L2-regularisation factor

ens_models = 3 # we will train three separate models on the data

num_train = 60000 # there are 60000 training examples in MNIST

num_test = 10000 # there are 10000 test examples in MNIST

height, width, depth = 28, 28, 1 # MNIST images are 28x28 and greyscale

num_classes = 10 # there are 10 classes (1 per digit)

(X_train, y_train), (X_test, y_test) = mnist.load_data() # fetch MNIST data

X_train = X_train.reshape(X_train.shape[0], depth, height, width)

X_test = X_test.reshape(X_test.shape[0], depth, height, width)

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

Y_train = np_utils.to_categorical(y_train, num_classes) # One-hot encode the labels

Y_test = np_utils.to_categorical(y_test, num_classes) # One-hot encode the labels

# Explicitly split the training and validation sets

X_val = X_train[54000:]

Y_val = Y_train[54000:]

X_train = X_train[:54000]

Y_train = Y_train[:54000]

inp = Input(shape=(depth, height, width)) # N.B. Keras expects channel dimension first

inp_norm = BatchNormalization(axis=1)(inp) # Apply BN to the input (N.B. need to rename here)

outs = [] # the list of ensemble outputs

for i in range(ens_models):

# Conv [32] -> Conv [32] -> Pool (with dropout on the pooling layer), applying BN in between

conv_1 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', init='he_uniform', W_regularizer=l2(l2_lambda), activation='relu')(inp_norm)

conv_1 = BatchNormalization(axis=1)(conv_1)

conv_2 = Convolution2D(conv_depth, kernel_size, kernel_size, border_mode='same', init='he_uniform', W_regularizer=l2(l2_lambda), activation='relu')(conv_1)

conv_2 = BatchNormalization(axis=1)(conv_2)

pool_1 = MaxPooling2D(pool_size=(pool_size, pool_size))(conv_2)

drop_1 = Dropout(drop_prob_1)(pool_1)

flat = Flatten()(drop_1)

hidden = Dense(hidden_size, init='he_uniform', W_regularizer=l2(l2_lambda), activation='relu')(flat) # Hidden ReLU layer

hidden = BatchNormalization(axis=1)(hidden)

drop = Dropout(drop_prob_2)(hidden)

outs.append(Dense(num_classes, init='glorot_uniform', W_regularizer=l2(l2_lambda), activation='softmax')(drop)) # Output softmax layer

out = merge(outs, mode='ave') # average the predictions to obtain the final output

model = Model(input=inp, output=out) # To define a model, just specify its input and output layers

model.compile(loss='categorical_crossentropy', # using the cross-entropy loss function

optimizer='adam', # using the Adam optimiser

metrics=['accuracy']) # reporting the accuracy

datagen = ImageDataGenerator(

width_shift_range=0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.1) # randomly shift images vertically (fraction of total height)

datagen.fit(X_train)

# fit the model on the batches generated by datagen.flow()---most parameters similar to model.fit

model.fit_generator(datagen.flow(X_train, Y_train,

batch_size=batch_size),

samples_per_epoch=X_train.shape[0],

nb_epoch=num_epochs,

validation_data=(X_val, Y_val),

verbose=1,

callbacks=[EarlyStopping(monitor='val_loss', patience=5)]) # adding early stopping

model.evaluate(X_test, Y_test, verbose=1) # Evaluate the trained model on the test set!Training Listing

Epoch 1/50

54000/54000 [==============================] - 30s - loss: 0.3487 - acc: 0.9031 - val_loss: 0.0579 - val_acc: 0.9863

Epoch 2/50

54000/54000 [==============================] - 30s - loss: 0.1441 - acc: 0.9634 - val_loss: 0.0424 - val_acc: 0.9890

Epoch 3/50

54000/54000 [==============================] - 30s - loss: 0.1126 - acc: 0.9716 - val_loss: 0.0405 - val_acc: 0.9887

Epoch 4/50

54000/54000 [==============================] - 30s - loss: 0.0929 - acc: 0.9757 - val_loss: 0.0390 - val_acc: 0.9890

Epoch 5/50

54000/54000 [==============================] - 30s - loss: 0.0829 - acc: 0.9788 - val_loss: 0.0329 - val_acc: 0.9920

Epoch 6/50

54000/54000 [==============================] - 30s - loss: 0.0760 - acc: 0.9807 - val_loss: 0.0315 - val_acc: 0.9917

Epoch 7/50

54000/54000 [==============================] - 30s - loss: 0.0740 - acc: 0.9824 - val_loss: 0.0310 - val_acc: 0.9917

Epoch 8/50

54000/54000 [==============================] - 30s - loss: 0.0679 - acc: 0.9826 - val_loss: 0.0297 - val_acc: 0.9927

Epoch 9/50

54000/54000 [==============================] - 30s - loss: 0.0663 - acc: 0.9834 - val_loss: 0.0300 - val_acc: 0.9908

Epoch 10/50

54000/54000 [==============================] - 30s - loss: 0.0658 - acc: 0.9833 - val_loss: 0.0281 - val_acc: 0.9923

Epoch 11/50

54000/54000 [==============================] - 30s - loss: 0.0600 - acc: 0.9844 - val_loss: 0.0272 - val_acc: 0.9930

Epoch 12/50

54000/54000 [==============================] - 30s - loss: 0.0563 - acc: 0.9857 - val_loss: 0.0250 - val_acc: 0.9923

Epoch 13/50

54000/54000 [==============================] - 30s - loss: 0.0530 - acc: 0.9862 - val_loss: 0.0266 - val_acc: 0.9925

Epoch 14/50

54000/54000 [==============================] - 31s - loss: 0.0517 - acc: 0.9865 - val_loss: 0.0263 - val_acc: 0.9923

Epoch 15/50

54000/54000 [==============================] - 30s - loss: 0.0510 - acc: 0.9867 - val_loss: 0.0261 - val_acc: 0.9940

Epoch 16/50

54000/54000 [==============================] - 30s - loss: 0.0501 - acc: 0.9871 - val_loss: 0.0238 - val_acc: 0.9937

Epoch 17/50

54000/54000 [==============================] - 30s - loss: 0.0495 - acc: 0.9870 - val_loss: 0.0246 - val_acc: 0.9923

Epoch 18/50

54000/54000 [==============================] - 31s - loss: 0.0463 - acc: 0.9877 - val_loss: 0.0271 - val_acc: 0.9933

Epoch 19/50

54000/54000 [==============================] - 30s - loss: 0.0472 - acc: 0.9877 - val_loss: 0.0239 - val_acc: 0.9935

Epoch 20/50

54000/54000 [==============================] - 30s - loss: 0.0446 - acc: 0.9885 - val_loss: 0.0226 - val_acc: 0.9942

Epoch 21/50

54000/54000 [==============================] - 30s - loss: 0.0435 - acc: 0.9890 - val_loss: 0.0218 - val_acc: 0.9947

Epoch 22/50

54000/54000 [==============================] - 30s - loss: 0.0432 - acc: 0.9889 - val_loss: 0.0244 - val_acc: 0.9928

Epoch 23/50

54000/54000 [==============================] - 30s - loss: 0.0419 - acc: 0.9893 - val_loss: 0.0245 - val_acc: 0.9943

Epoch 24/50

54000/54000 [==============================] - 30s - loss: 0.0423 - acc: 0.9890 - val_loss: 0.0231 - val_acc: 0.9933

Epoch 25/50

54000/54000 [==============================] - 30s - loss: 0.0400 - acc: 0.9894 - val_loss: 0.0213 - val_acc: 0.9938

Epoch 26/50

54000/54000 [==============================] - 30s - loss: 0.0384 - acc: 0.9899 - val_loss: 0.0226 - val_acc: 0.9943

Epoch 27/50

54000/54000 [==============================] - 30s - loss: 0.0398 - acc: 0.9899 - val_loss: 0.0217 - val_acc: 0.9945

Epoch 28/50

54000/54000 [==============================] - 30s - loss: 0.0383 - acc: 0.9902 - val_loss: 0.0223 - val_acc: 0.9940

Epoch 29/50

54000/54000 [==============================] - 31s - loss: 0.0382 - acc: 0.9898 - val_loss: 0.0229 - val_acc: 0.9942

Epoch 30/50

54000/54000 [==============================] - 31s - loss: 0.0379 - acc: 0.9900 - val_loss: 0.0225 - val_acc: 0.9950

Epoch 31/50

54000/54000 [==============================] - 30s - loss: 0.0359 - acc: 0.9906 - val_loss: 0.0228 - val_acc: 0.9943

10000/10000 [==============================] - 2s

[0.017431972888592554, 0.99470000000000003]Our model after updating achieves 99.47% accuracy on the test data set, and this is a significant increase compared to the initial performance of 99.12%. Of course, for a small and relatively simple dataset like MNIST, the benefits do not seem so significant. Applying the same techniques to the CIFAR-10 recognition task, if you have the necessary resources, you can get a more tangible advantage.

I suggest you continue to work with this model: in particular, try to use validation data not only to receive an early stop, but also to estimate the size and number of cores, sizes of hidden layers, optimization strategies, activation functions, the number of networks in the ensemble, and compare the results with the best of the best (at the time of writing this post, the best model was 99.79% accurate on MNIST).

Conclusion

In this article, we examined six techniques for fine-tuning neural networks described in previous posts:

Initialization

Batch-normalization

Expanding the training set

Ensemble method

Early stop

And successfully applied them to the deep convolutional network built in Keras, which allowed us to achieve a significant increase in accuracy on MNIST and took less than 90 lines of code.

This was the final article in the series. You can read the previous two parts here and here .

I hope that your knowledge will become the incentive that, combined with the necessary resources, will allow you to become the coolest engineers in deep learning networks.

Thanks!

Oh, and come to us to work? :)wunderfund.io is a young foundation that deals with high-frequency algorithmic trading . High-frequency trading is a continuous competition of the best programmers and mathematicians around the world. By joining us, you will become part of this fascinating battle.

We offer interesting and complex data analysis and low latency development tasks for enthusiastic researchers and programmers. A flexible schedule and no bureaucracy, decisions are quickly taken and implemented.

Join our team: wunderfund.io