Experience in building and operating a large file storage

Daniil Podolsky (Git in Sky)

What every engineer should do in his life after fathering a child, planting a tree and building a house: build his own file storage.

My talk is called "Experience in building and operating a large file storage." We have been building and operating a large file storage for the last three years. When I submitted the abstract, the talk was titled “At Night Through the Forest. Experience in building, blah blah blah, operating.” The program committee asked me to be more serious, but in fact this is still the talk “At Night Through the Forest”.

This image was coined by my colleague Chistyakov for the “Stachka” conference, where we both gave “At Night Through the Forest” talks: he spoke about Docker, and I about NewSQL databases.

Ever since I started going to conferences, it has been clear to me that I want to hear not success stories but stories of the horror and nightmare that await us all on the road to success. Someone else's success gives me nothing. Of course, if someone has already done something, merely knowing that it can be done helps me move forward, but what I really want to know is where the mines and traps are.

Another favorite image is the book “Roadside Picnic”. There is a research institute that studies the Zone. They have flying bots, automatic markers, this and that, robotic systems; and there are stalkers, who roam the Zone just like that, tossing nuts here and there. Well, it so happens that we work in a segment where stalkers are in demand, not the research institute. We work in the segment where highload matters most. We work in the pauper segment. Highload is, in fact, for paupers, because the grown-ups simply do not let their server load exceed 30%. If you have decided that your high watermark is 70%, then first, your highload has begun, and second, you are a pauper.

So. To begin with, what is a file storage, and why might it show up in our lives at all?

A file is a piece of information (that is its official definition) with a name by which the piece can be retrieved. But it is not the only named piece of information in the world, so how does a file differ from all the others? A file is too large to handle as a single piece. Look: suppose you want to support 100 thousand simultaneous connections (which is not that many) and you serve files of 1 MB each. If you treat each file as a single piece of information, you have to load all 100 thousand 1 MB files into memory, which is 100 GB of memory. Impossible. So you have to do something: either limit the number of simultaneous connections (normal for corporate applications), or treat the file as consisting of separate small pieces, chunks. The word "chunk" will be used throughout the rest of the talk.
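The arithmetic above, and the alternative of serving a file chunk by chunk, can be sketched as follows. This is only an illustration: the 64 KB chunk size and all function names are assumptions, not something stated in the talk.

```python
import io

CHUNK_SIZE = 64 * 1024  # hypothetical chunk size, chosen only for illustration


def whole_file_memory_bytes(connections: int, file_size: int) -> int:
    """Memory needed if every connection holds its whole file in RAM."""
    return connections * file_size


def chunked_memory_bytes(connections: int, chunk_size: int = CHUNK_SIZE) -> int:
    """Memory needed if every connection holds only one chunk at a time."""
    return connections * chunk_size


def iter_chunks(stream, chunk_size: int = CHUNK_SIZE):
    """Yield a file-like object piece by piece instead of reading it whole."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


if __name__ == "__main__":
    # 100 thousand connections, 1 MB (10**6 bytes) per file.
    print(whole_file_memory_bytes(100_000, 10**6) / 10**9, "GB for whole files")
    print(chunked_memory_bytes(100_000) / 10**9, "GB for one chunk each")
    for piece in iter_chunks(io.BytesIO(b"x" * 200_000)):
        print(len(piece))
```

With whole files the 100 thousand connections need 100 GB; with one 64 KB chunk per connection the same connections need roughly 6.5 GB.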

A file storage is the place where files are stored. In fact, something else may matter even more than the fact that files are stored there: it is the place from which access to the files is granted, from which they are served.

We now understand what a file storage is. What is a large file storage? Operating one, I found that "large" is not a property of the storage itself. For example, Vasya Pupkin has a 5 PB archive of teenage movies. Is that a large file storage? No, because nobody needs it and because Vasya Pupkin cannot watch all 5 PB at once. He watches one small movie at a time. This Pupkin storage has a few other characteristics too: for example, if he loses it, he will cry and download everything from the Internet again.

Lots of files. If many bytes do not make a storage large, perhaps many files do? No. There are stores with a great many records. We have databases with a billion rows, and yet they are not large. Why? Because if you have a billion rows in a database, you have convenient and reliable tools for managing those rows. There are no such tools for files. All the file systems in active use today are hierarchical. This means that to find out what is going on in our file system we have to walk it: open every directory and read it. Usually directories have no index, so we are forced to read each one from beginning to end to find the file we need. Everyone can picture this perfectly well.

So "large" describes the situation you find yourself in with your file storage, not the storage itself. Very often, and this is my favorite trick, you can turn a large file storage into a normal one simply by moving it to SSDs. SSDs deliver far more IOPS, and the standard file system management tools start working fast enough that managing such a storage is no longer a problem.

The paradox of file storage. From a business point of view, file storage is not needed at all. When you have a fairly large project that accepts files from users, serves files to users and shows users ads, everything is more or less clear. But why does about half of the project budget go to some obscure hardware holding some obscure bytes? The business understands in principle why that is, but in fact it does not need file storage. Business requirements never say "store files". Business requirements say "serve files". There is one requirements spec that does say "store files": the spec for a backup system. But that is a lie too. When we want a backup system, we do not really want a backup system; we want a disaster recovery system.

Unfortunately, the creators of file storages do not understand this. Perhaps only the creators of S3 arrived at this simple idea. Everyone else cares that the files are stored so that they can never be damaged; if there is a danger of damage, all activity must stop: never serve a file that might be corrupted, and never accept new files if existing data is at risk.

This is the traditional approach, yet it has nothing to do with what we do. File storage is necessary and unnecessary at once: the business does not need it, but you cannot do without it, because the files must live somewhere so that you can serve them.

The main source of my experience with file storage is the Setup.Ru project. Setup.Ru is a mass hosting service with a few special features: sites there are generated from templates. There is a template, the user fills it in and clicks “generate”, 20 to 200 files are generated, and they all go into the storage. Users also upload pictures and various other binary files. All in all, it is an unlimited source of files. Currently the Setup storage holds 450 million files spread across 1.5 million sites. That is quite a lot. How we came to live like this is the main content of my talk: how we moved to what we have now. 20 million files per day is the peak update volume of Setup today. In itself, 20 million is already a lot. The first time we ran into problems, we had 6.5 million files.

In 2012, the Setup file storage was organized very simply. Content generators published to two servers for fault tolerance. Synchronization: if one of these servers died, we took another identical one, copied the whole mass of files to it with rsync, and everything was fine. For hot content we used SSDs. All of this is at Hetzner, sorry. This goes back to why we are stalkers: Hetzner is such a funny place, such a Zone; from time to time we carry witches' jelly out of it, sell it on the black market and live off that.

What was wrong with this scheme when we first met it in 2012? When one of the duplicate servers dies, we live for a while without a second filer, so while rsync is running we have to pray and shake with fear. File system statistics were a problem too. On the SSDs, 60 GB each (Hetzner had 64 GB SSDs then and no others), it was still possible to collect them, but for the HDDs we quickly realized, by the spring of 2012, that we did not know what was on our drives and never would. True, at the time we did not think this would become a problem.

In the summer of 2012, another server died. With a practiced motion we ordered a new one and launched rsync, and it never finished. More precisely, the script that ran rsync in a loop until all files were copied never finished. It turned out that there were already a lot of files: walking the tree took six hours, and copying the files took another 12 on top of that. In those 18 hours the content changed so much that our copy was already out of date. “At night through the forest”: no one expected this branch to hit us in the eye. Now it seems obvious, but at the time we were very surprised: how come?

Then yours truly came up with the idea of putting the files into a database. Where did this stupid idea come from? We had, after all, collected some statistics: 95% of the files were smaller than 64 KB. 64 KB, even with 100 thousand simultaneous connections, is a perfectly manageable size to handle as a single piece. The small files went into database rows, and the remaining, large files went into BLOBs. Later I will explain why this was the main mistake of this design.

This was all built on Postgres. Back then we believed in master-master replication, and Postgres has no master-master replication (in fact, no DBMS really has it), so we wrote our own, which took into account the specifics of our content and of its updates and could function normally.

And in the spring of 2013 we finally ran into the problems of what we had written.

There were 25 million files by then. It turned out that at the update volume the system had reached, transactions took a significant amount of time. As a result, some shorter transactions managed to commit before longer ones that had started earlier. So the auto-increment counter that our master-master replication relied on had holes in it; that is, our master-master replication never saw some files. This was a big surprise for me personally. I drank for three days.

Then, at last, I came up with the idea that every time master-master replication starts, it should step back some distance from the last counter value. At first I had to step back by 1000, then 2000, then 10000. When I typed 25 thousand into that field, I realized something had to be done, but by then I did not know what. We had run into the same problem again: the content was changing faster than we could synchronize it.
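The hole in the counter and the "step back" workaround can be illustrated with a toy model. It is a sketch under assumptions: ids stand for auto-increment values, a set stands for the rows already committed and visible, and both function names are hypothetical. It does not reproduce the actual replication code.

```python
def replicate(visible_ids, last_seen):
    """Naive cursor: copy every committed row whose id is above the cursor."""
    copied = sorted(i for i in visible_ids if i > last_seen)
    new_cursor = copied[-1] if copied else last_seen
    return copied, new_cursor


def replicate_with_lookback(visible_ids, last_seen, already_copied, lookback):
    """Re-scan a window of `lookback` ids behind the cursor to catch late commits."""
    start = max(0, last_seen - lookback)
    copied = sorted(
        i for i in visible_ids if i > start and i not in already_copied
    )
    new_cursor = max([last_seen] + copied)
    return copied, new_cursor


if __name__ == "__main__":
    # Ids 1, 2, 3 were handed out, but the transaction holding id 2 is slow.
    committed = {1, 3}
    copied, cursor = replicate(committed, 0)
    print(copied, cursor)           # rows 1 and 3 are copied, cursor moves to 3

    committed.add(2)                # the long transaction finally commits
    print(replicate(committed, cursor))   # nothing is copied: row 2 is lost

    print(replicate_with_lookback(committed, cursor, {1, 3}, lookback=1000))
```

The lookback window only helps while it is wider than the longest gap between id allocation and commit, which is why the value kept growing from 1000 to 25 thousand.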

It turned out that our master-master replication was rather slow. In fact it was not the replication itself that was slow: inserts into Postgres are slow, and inserts into BLOBs are especially slow. So at some point during the night, when the number of publications dropped sharply, the databases converged. But during the day they were always slightly inconsistent. Our users noticed it like this: they upload a picture and want to see it right away, but it is not there, because they uploaded it to one server and requested it via round-robin from another. So we had to teach our servers, change the business logic, to fetch the picture from the same server it had been uploaded to. This later came back to haunt us when a server died: when a server is dead, we have to go to that router and change its routing parameters.

In the fall of 2013 there were 50 million files. Here we hit the fact that our database could no longer cope, because it took a rather complex join to serve the user exactly the latest version of the file he had uploaded. Our eight cores were no longer enough. We did not know what to do, but my colleague Chistyakov found a solution: he built us a materialized view on triggers. That is, on request a file from the view with the long join was moved to a separate table, from which it was served afterwards. It is clear how this works, and it all started working perfectly again. Master-master replication already worked very poorly by then, but we propped it up with this crutch, and everything seemed fine. Until the spring of 2014.

120 million files. The content no longer fit on one machine at Hetzner. We could not get machines with more than four 3 TB drives. So the following was devised: small files stayed in Postgres, large files moved to LeoFS. It turned out right away that LeoFS is a rather slow storage. We also tried various other cluster storages, and they are all rather slow. It also turned out that none of them is transactional. Surprise, right?

A transactional file system is needed in order to change a whole site at once. When a user publishes a new site, he does not want it to change file by file over half an hour. Even if publishing takes half an hour, the user wants the whole site to go live as a whole.

None of the storages we tested gave us this functionality. We could have implemented it on POSIX-compatible cluster file storages using symlinks, as is done on an ordinary file system, but none of them provided enough IOPS to serve our 500 requests per second. So small files stayed in Postgres, and in place of the large files in BLOBs there were now links into LeoFS. And everything was good again. Now it is clear that this was yet another crutch we shoved under our system, but for a while everything was good.
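The symlink technique mentioned here, as it is commonly done on an ordinary POSIX file system, might look like the sketch below. The directory layout (`<site>.<version>` directories plus a `<site>` symlink) and the function names are assumptions made for the example.

```python
import os
import tempfile


def publish(root: str, site: str, version: str, files: dict) -> None:
    """Write a complete site version into its own directory, then switch to it."""
    version_dir = os.path.join(root, f"{site}.{version}")
    os.makedirs(version_dir)
    for name, content in files.items():
        with open(os.path.join(version_dir, name), "wb") as fh:
            fh.write(content)
    # Create the new symlink under a temporary name and rename it over the
    # live one. rename() replaces the old link atomically on POSIX systems,
    # so readers see either the old site or the new one, never a mixture.
    tmp_link = os.path.join(root, f"{site}.tmp")
    os.symlink(version_dir, tmp_link)
    os.replace(tmp_link, os.path.join(root, site))


def read_live(root: str, site: str, name: str) -> bytes:
    """Read a file through the live symlink, as a web server would."""
    with open(os.path.join(root, site, name), "rb") as fh:
        return fh.read()


if __name__ == "__main__":
    root = tempfile.mkdtemp()
    publish(root, "example", "v1", {"index.html": b"first"})
    publish(root, "example", "v2", {"index.html": b"second"})
    print(read_live(root, "example", "index.html"))
```

However long it takes to write the files of a new version, visitors keep seeing the previous one until the single rename at the end.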

Early 2015. LeoFS ran out of space. There were already 400 million files; apparently our users keep picking up speed. We decided we needed to add a couple of nodes, and we added them. Rebalancing ran for some time, then seemed to finish, and it turned out that the master node no longer knew where its files were.

Well, we disconnected the two new nodes. It turned out that now it knew absolutely nothing. We connected the two new nodes again, and it turned out that now rebalancing did not run at all. So there you go.

The technical lead of the Setup team hired a Japanese interpreter, and we called Tokyo. At 8 am. To talk to Rakuten. Forgive me, I will scold them, because I have never been treated like this in my life, and I have been in the business for 20 years. Rakuten talked to us for an hour, listened carefully to our problems, read our bug reports in their tracker, and then said: "You know, we have run out of time, thanks, sorry." And then we realized that we were in deep trouble, because we had 400 million files and, with replication factor 3, more than 20 TB of content. In fact that is not quite accurate; the real content there was 8 TB. So what do we do?

At first we panicked, tried to instrument the LeoFS code with metrics, tried to understand what was happening; colleague Chistyakov did not sleep at night. We tried to find out whether any of the St. Petersburg Erlang developers wanted to poke it with a stick. The St. Petersburg Erlang developers turned out to be sensible people and did not.

Then came the idea: fine, so be it, but there are grown-ups, and grown-ups have storage systems. Let us take a storage system, put all our files on it and behave like grown-ups. We agreed on a budget. We went to Hetzner. We were not given a storage system. Then we figured we would take a nine-disk machine, put iSCSI on FreeBSD on it, forward it over the internal gigabit network to our serving servers, and bring back the old Postgres storage in the form it used to have. We even calculated that with 128 GB of RAM we would fit for another six months. It turned out that these nine-disk machines cannot be connected to our cluster over the internal network, because they stand in completely different racks. “At night through the forest”: no one expected this pit to open under our feet. No one expected that we were in the Zone, and that this was another trap. In short, on Hetzner's shared network the idea of a quasi-storage on iSCSI did not take off. The latency was too high, and our cluster file system kept falling apart.

Spring 2015. 450 million files. LeoFS had run out of space completely. Rakuten did not even talk to us. Thanks to them. But the real horror, the worst thing, happened to us in the spring of 2015. Yesterday everything was fine, and today disk utilization on the Postgres server rose to 70% and never came back down. A week later it rose to 80%, and before we switched to our latest solution it stayed between 95% and 99% all the time. This means, first, that the disks would soon die, and second, that serving speed and publication speed were very bad. Then we realized we had to take a step forward. The situation was such that nobody would fire us from the project anyway; the project would simply be closed, because those 450 million files are the whole project. In short, we realized we had to go all-in.

I will talk a little later about the solutions we developed and implemented in the spring of 2015, which are running now. In the meantime, what did we learn while operating all this?

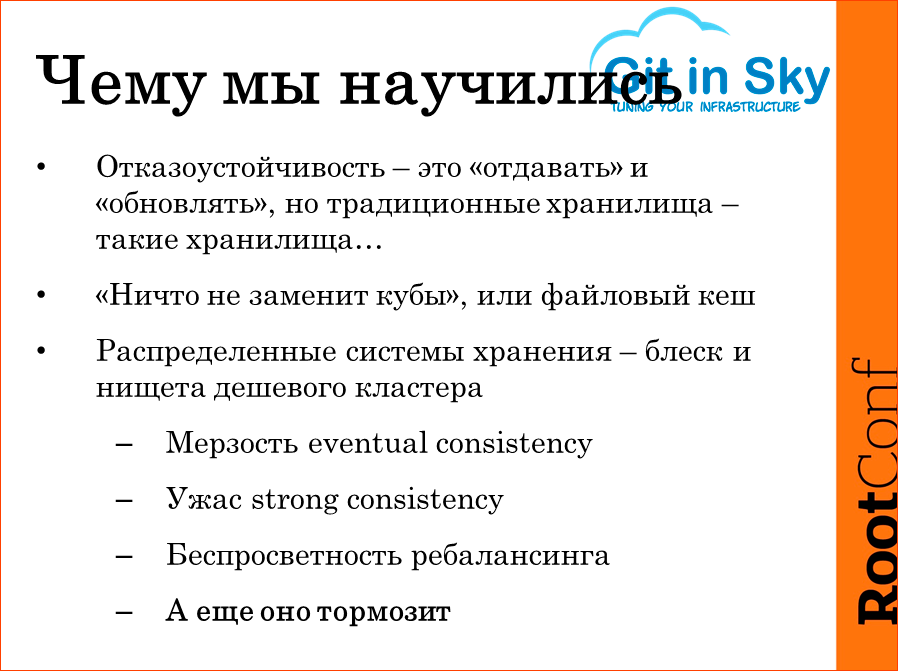

First of all, fault tolerance. Fault tolerance is not about storing files. Nobody cares that you have safely stored 450 million user files if the users cannot access them.

File cache. I treated it with some contempt until I discovered that under really high load there is no way around it: you have to keep the hot set in memory. The hot set is not that big. In fact it is about 70 thousand sites and about 10 million files. That is, if Hetzner offered a cheap machine with 1 TB of RAM, we would keep all of it in memory and would have no problems again, if we were grown-ups and not stalkers from the dump.

Distributed storage systems. If you have the money for a big storage system that can synchronize itself with another such system... When I last checked, the chassis cost about 50 thousand euros. And you cannot put just any disks into it. Maybe it has become more expensive by now, maybe cheaper; there are many players in this market: IBM, Hewlett-Packard, EMC... Instead, we tried several cheap solutions: Ceph, LeoFS and a couple of others whose names I do not remember.

If your storage is declared eventually consistent, you are in trouble. I have just described the problems we got with eventual consistency. And what problems do we get with strong consistency? We put a file there, for some reason it does not go in, and the operation fails. The user retries, and the publication goes into the queue. After a while the queue grows, and then it turns out that your file storage has collapsed under the load created by users who keep poking the button like monkeys. In a corporate environment you could explain that this should not be done, but these users have paid money and want to poke the button.

Rebalancing turned out to be a separate problem. The first thing people learn to do with Ceph is to throttle rebalancing so that, when it is needed, it does not eat 100% of the disk and internal network bandwidth.

And they are slow. All the distributed storage systems we tried are terribly slow. I mean, they deliver fewer than 100 IOPS. For a POSIX system that is basically a death sentence. For an object store it may be acceptable. We put Varnish in front of LeoFS so that at least the most popular content would be cached rather than requested from it every time.

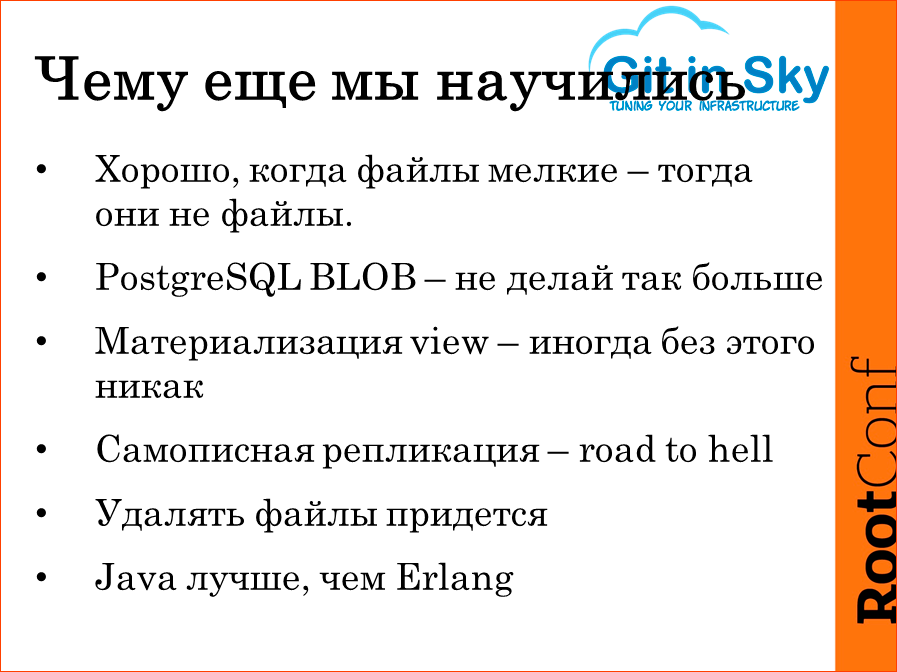

What else have we learned? Small files are not files. Small files are records in a database. Anything that can be taken from the storage in one piece and given to the user in one piece is not a file; it is content that is very convenient to handle.

PostgreSQL BLOBs. Never use them. Of course, I could have read about this in the documentation, but for some reason I did not. PostgreSQL BLOBs are not covered by the standard PostgreSQL replication mechanisms. Neither this one nor that one. A BLOB is actually stored in an internal Postgres table, where the file is split into pieces, but that table never gets into replication. Maybe this will be fixed, but we stumbled on it when we already had 4 TB of data in that Postgres, and it was too late to do anything.

Materialized views turned out to be a silver bullet. If you start slowing down on joins and the database is CPU-bound, view materialization can help you. It is very easy: 3-4 triggers and everything is fine. The main thing is not to forget to check whether the data has changed and to invalidate the data in the materialized view accordingly.
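A trigger-maintained materialized view of this kind can be sketched as follows. To keep the example self-contained it uses SQLite from the Python standard library as a stand-in for PostgreSQL, and the table, trigger and function names are hypothetical. The cache table is filled on the first read and cleared by triggers whenever the underlying rows change.

```python
import sqlite3

SCHEMA = """
CREATE TABLE file_versions (
    path    TEXT    NOT NULL,
    version INTEGER NOT NULL,
    body    BLOB    NOT NULL,
    PRIMARY KEY (path, version)
);
-- Cache table that plays the role of the materialized view.
CREATE TABLE latest_file (
    path    TEXT PRIMARY KEY,
    version INTEGER NOT NULL,
    body    BLOB    NOT NULL
);
-- Invalidate the cached row whenever the underlying data changes.
CREATE TRIGGER invalidate_on_insert AFTER INSERT ON file_versions
BEGIN
    DELETE FROM latest_file WHERE path = NEW.path;
END;
CREATE TRIGGER invalidate_on_update AFTER UPDATE ON file_versions
BEGIN
    DELETE FROM latest_file WHERE path IN (OLD.path, NEW.path);
END;
CREATE TRIGGER invalidate_on_delete AFTER DELETE ON file_versions
BEGIN
    DELETE FROM latest_file WHERE path = OLD.path;
END;
"""


def open_db():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def get_latest(conn, path):
    """Return (version, body) of the newest version, filling the cache on a miss."""
    row = conn.execute(
        "SELECT version, body FROM latest_file WHERE path = ?", (path,)
    ).fetchone()
    if row is not None:
        return row
    # Cache miss: run the expensive query once and remember its result.
    row = conn.execute(
        "SELECT version, body FROM file_versions "
        "WHERE path = ? ORDER BY version DESC LIMIT 1",
        (path,),
    ).fetchone()
    if row is not None:
        conn.execute(
            "INSERT OR REPLACE INTO latest_file (path, version, body) "
            "VALUES (?, ?, ?)",
            (path, row[0], row[1]),
        )
    return row
```

Here the "expensive" query is a trivial `ORDER BY`; in the situation described above it would be the long join, which is exactly why caching its result pays off.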

Homemade replication. I was very proud of it until I discovered how it actually works. Do not write your own replication. I had been told this since 2012. In the fall of 2012, at HighLoad++, I proudly described how wonderfully our Postgres-based file storage worked. And I was told right away that it would end badly. I knew it would end badly; I did not know it would be so soon.

When we started on all this, we knew that grown-ups like VKontakte never delete files. They only mark them as deleted. We thought we did not even need to mark them as deleted, that we would be fine as we were. Until Roskomnadzor came to us and asked us to remove this, this and this. We deleted the site; site removal was something we had provided for. But it turned out that search engines had stored direct links to the infringing images, and so a real nightmare began.

And again, hello to Rakuten: Java is better than Erlang. Not in the sense that Java itself is better than Erlang, but in the sense that Java programmers are not that sensible. Java programmers would have climbed into this cesspool and, maybe, fixed LeoFS for us.

What did we fail to learn? We did not get to learn big fault-tolerant storage systems. The customer simply has no such budget, and we understand that. And even if the customer found the budget, he would also have to find a budget for moving away from Hetzner, because Hetzner offered nothing of the kind.

Distributed POSIX-compliant file systems. In general, a POSIX-compatible file system is one that provides random access. In fact, that is what distinguishes it from object storage: random write access within a file. That is exactly what our tasks do not require, so we do not need it. And nobody uses it anyway; it is in beta.

Now about how we solved the problem, hopefully for at least the next year, maybe two. After that, of course, everything will start all over again.

- We took a clustered NoSQL DBMS. We took Aerospike, but you can take any. We took Aerospike because it has excellent latency. It became free last fall, so we took advantage of that.

- We cut the files into chunks ourselves and store each chunk separately, as a separate record in the database, and fetch it separately when we need it.

- We wrote versioning. That very transactionality. In fact, it is a modernization of the symlink idea: we have a transaction id under which all the files are uploaded, and then the link to the transaction is switched in the sites table.

- Homemade dedup. Since we cut the files into chunks ourselves, we can compute SHA1 for each chunk and store that SHA1 in the database as the access key for the chunk. Let me say more. We already had dedup, but at the file level: we computed a checksum per file, and duplicate files were stored in the database only once. Chunk-level dedup reduced the database size from 8 TB to 6 TB. This is the net amount of data, without replication.

- We wrote transactions. It is clear that distributed databases have no transactions. We had to write our own, tailored purely to the task we are solving.

- And, of course, we bolted on LZ4 compression.
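The combination of chunking, SHA1-keyed dedup and a transaction id switched per site can be sketched in a few lines. This is a minimal in-memory model, not the Aerospike-based implementation: plain dictionaries stand in for database records, `zlib` from the standard library stands in for LZ4, and the chunk size and all names are assumptions.

```python
import hashlib
import zlib

CHUNK_SIZE = 64 * 1024  # hypothetical; the talk does not state the chunk size


class ChunkStore:
    def __init__(self):
        self.chunks = {}     # sha1 hex digest -> compressed chunk bytes
        self.manifests = {}  # (txn_id, path) -> ordered list of chunk keys
        self.live_txn = {}   # site -> transaction id currently being served

    def put_file(self, txn_id, path, data):
        """Upload a file under a transaction id; nothing is visible yet."""
        keys = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            key = hashlib.sha1(chunk).hexdigest()
            # Identical chunks share one record: this is the dedup.
            if key not in self.chunks:
                self.chunks[key] = zlib.compress(chunk)  # zlib in place of LZ4
            keys.append(key)
        self.manifests[(txn_id, path)] = keys

    def commit(self, site, txn_id):
        """Switch the site to a new transaction with a single pointer update."""
        self.live_txn[site] = txn_id

    def get_file(self, site, path):
        """Reassemble a file from its chunks for the live transaction."""
        keys = self.manifests[(self.live_txn[site], path)]
        return b"".join(zlib.decompress(self.chunks[k]) for k in keys)
```

A short usage example: uploading under transaction 2 does not affect what visitors see until `commit("site", 2)` is called, which mirrors the symlink switch on an ordinary file system.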

So, it works. It is all in production now. It stores those same 450 million files and 1.5 million sites and answers 600 requests per second. And it is not fully loaded; it can do more. It also takes 20 million updates per day.

There are eight servers in this cluster, because the content does not fit on fewer. We have replication factor 3; the disks there are two of three. We are very brave guys, so they are in RAID 0. Accordingly, we have eight servers of 3 TB each. At the moment each server is already 66% full, so we are going to take two more, connect them to the same cluster and wait until rebalancing completes.

1.5K requests per second is what I tested at. And what I hit was, of course, not the limit of the eight-machine cluster but the performance of the single test server. I decided that 1.5K is 2.5 times more than we have now, so I did not continue testing.

But still, “At Night Through the Forest”. We were migrating users to our NoSQL database very smoothly, and everything was very good, until we had loaded 2 TB per server. Like something out of fiction: the cluster was working 5 minutes ago, and now it is not. Here it returns a 500 error, there a 404, there a 403. Publication does not work. The logs contain very strange messages: "I could not find such a sector."

Thanks to Aerospike support, I went without sleep for just one night. In the morning they replied: it turned out that a single low-level storage file in Aerospike cannot be configured larger than 2 TB. You can configure several files at once; it is now configured with three files of 1 TB each. But in our first Aerospike cluster each node was configured with a single 3 TB file. As soon as we reached 2 TB, it stopped functioning immediately. And in a very unpleasant way: we lost some amount of user-uploaded content, which was no longer in the old storage and was already corrupted in the new one.

This is the same “At Night Through the Forest”: you have fallen into a ravine and lie in the mud, you cannot see the sky, you do not know which way is out, and hellish creatures are already howling. A restart of one node takes three hours, because it builds the index in memory and has to read all the data from disk. 100 MB per second is a plain SATA drive. And then there is full rebalancing. We tested it: we took one node out, wiped its data and turned it back on. With one node replaced, restoring the cluster takes 60 hours. This means we cannot afford any replication factor lower than three.
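The relation between read throughput and restart time is simple arithmetic, sketched below. The function name is hypothetical, and the data volume in the example is derived from the two figures quoted above (three hours at 100 MB per second), not a number given in the talk.

```python
def full_scan_hours(data_bytes: float, bytes_per_second: float) -> float:
    """Hours needed to read `data_bytes` sequentially at the given throughput."""
    return data_bytes / bytes_per_second / 3600


if __name__ == "__main__":
    mb = 10**6
    # Three hours at 100 MB/s corresponds to reading about 1.08 TB.
    print(full_scan_hours(1_080_000 * mb, 100 * mb))
```

The same function shows why faster disks shorten restarts only linearly: doubling the throughput merely halves the scan time.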

This report is a transcript of one of the best talks at RootConf, a professional conference on operations and DevOps.