We use Check Knowledge Module (CKM) in projects based on Oracle Data Integrator

This post opens a series of materials devoted to the data validation module included in the ETL (or ELT - as ORACLE positions it) Oracle Oracle Integrator product. In our opinion, the functionality of the module is undeservedly ignored for the sake of more sophisticated and “intelligent” products of the Data Quality class. In this regard, we have a desire to look at CKM not as a kind of atavism, but as a holistic solution that allows us to provide basic control over the processed data.

For this we plan:

As an introduction, it is worth noting that all modules in ODI are proudly called the Knowledge Module, which, apparently, reflects the following facts:

CKM-module refers to the so-called template type of modules, i.e. involves (in addition to influencing the module’s own behavior through the installation of options) modification of the “body” or creation from scratch of its own modules of this class.

In this article we will begin the story with standard checks that are implemented in the module supplied with ODI CKM. Let's consider how they are set and what functionality can be implemented using them.

Immediately it is worth noting that it is possible to use the module in two modes (STATIC_CONTROL and FLOW_CONTROL): control of the source / end data or checking the correctness of the data obtained as a result of the integration mapping before placing them in the target table, respectively.

So, the "standard" includes five types of "speak for themselves" checks:

The verification data is set at the level of the data model description in ODI, but can be activated / deactivated in various places depending on the operating mode of the module.

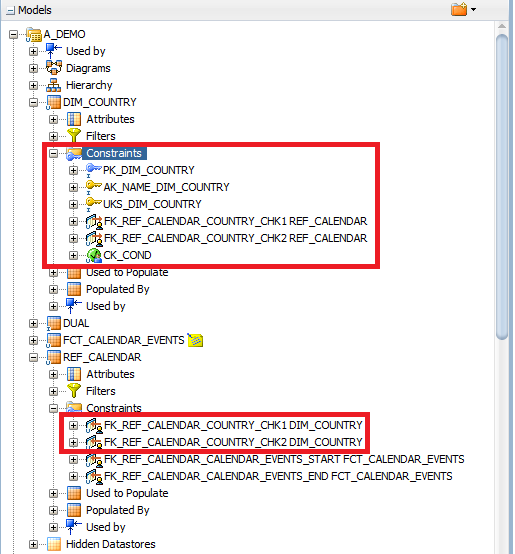

The first four types of checks are set at the Constarints level of a particular DataStore (DS - description of an entity - table, file, etc. - in the ODI metadata repository) and use separate records for each specified restriction.

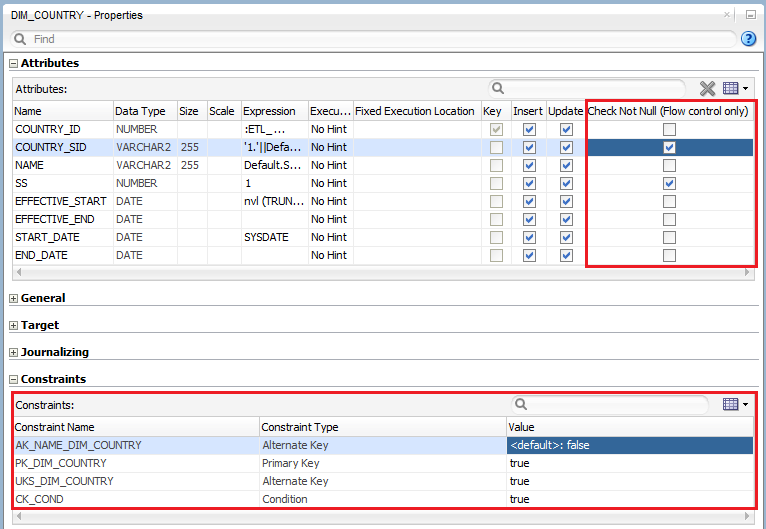

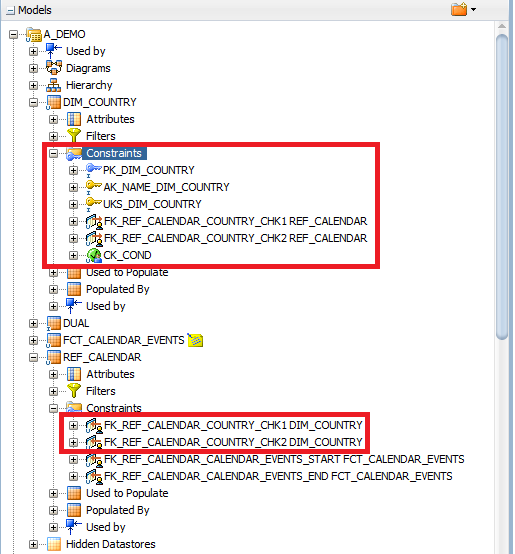

In the illustration, we see a set of checks defined for two Data Store: DIM_COUNTRY and REF_CALENDAR. Note that when creating under one of the DS, both foreign key checks become visible under the other DS, connected by the FK condition.

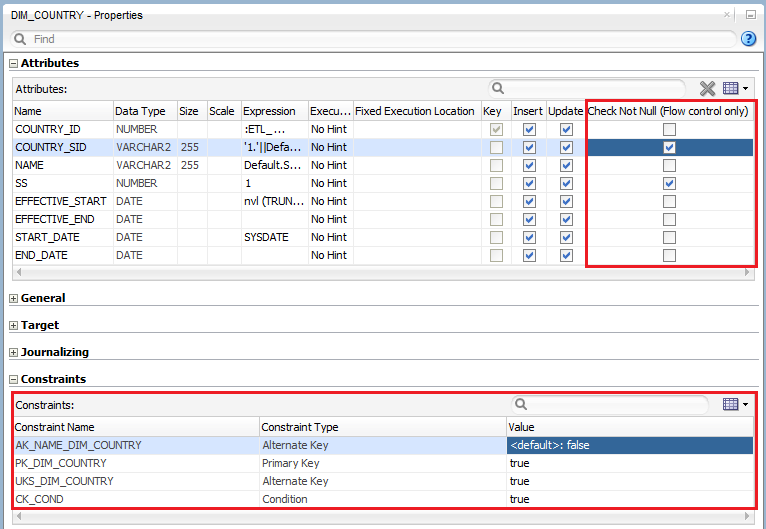

The final type of validation, Mandatory, is set at the DS field level:

Let's look at what general and private parameters are set for different types of checks.

1. The modes for which the considered data control (Static / Flow) can be used correspond to the CKM operating modes - STATIC_CONTROL / FLOW_CONTROL, respectively. In the picture below it is red.

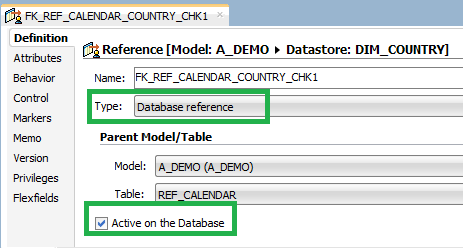

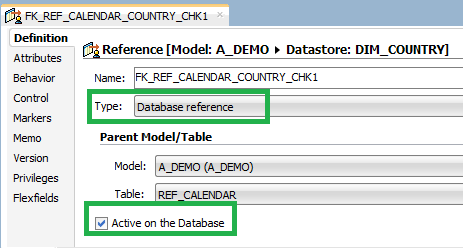

Flags in the green block (only for PK / AK / CK checks) indicate the need for the physical presence and activity of this restriction in the final system, respectively, if such a possibility is implied. The meaning of the Type = Database reference parameters and the Activate on Database flag to restrict the FK type carry the same meaning.

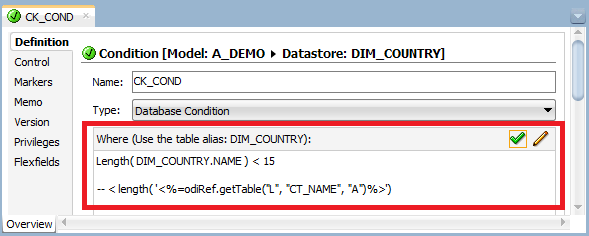

Or the value of the Database Condition to restrict the CK.

In fact, the parameters responsible for the physical presence and activity of the constraint are intended only to reflect the desired / existing state of the final system - their installation / removal does not directly affect the final system. These parameters are put down when building a data model based on the metadata of the final system (reverse engineering). Or, the corresponding definition of the constraint is added to the object generation script in the target system, which is automatically created based on the description of the data model - with an explicit request for creation.

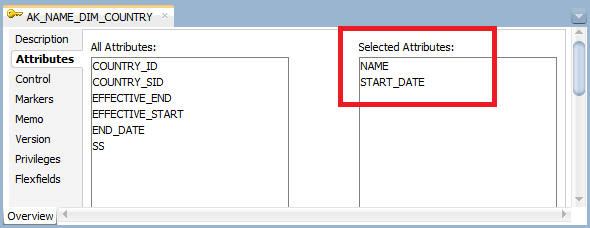

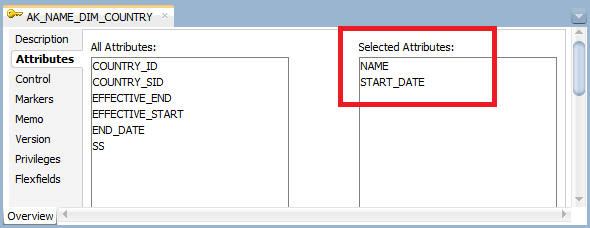

2. Attributes participating in the restriction condition for PK / AK specify the fields included in the key:

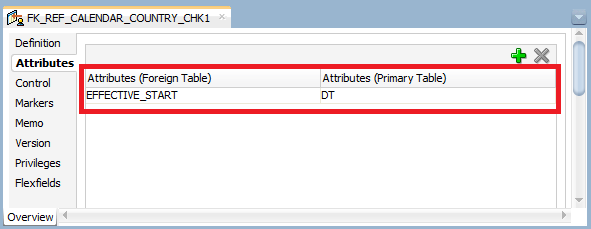

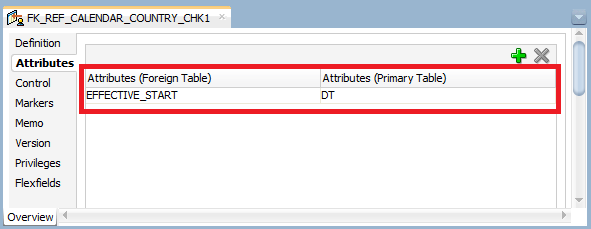

for FK - reference fields (left) and the corresponding key fields in the parent table (right):

If the FK constraint type is specified as Complex User Reference, then the table link condition is specified in the Expression field

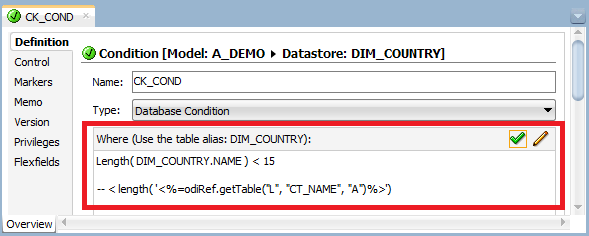

For CK, a condition is set that will be checked (it can be quite complicated).

Here it is worth saying that in the expression for both FK and CK it is allowed to use functions from the wildcard API (about them in the next article). However, for CK (under the condition Type = Oracle Data Integrator Condition), such a condition will pass the built-in check (green daw), but for FK it will not.

3. Individual settings.

The Primary Key or Alternate Key constraint type indicates the appropriate type of validation. The Not Unique Index option is used only as an indication of the need to create an additional index in order to increase productivity.

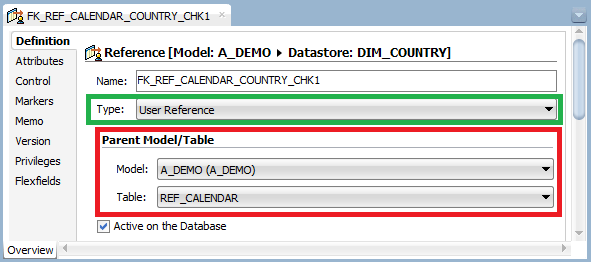

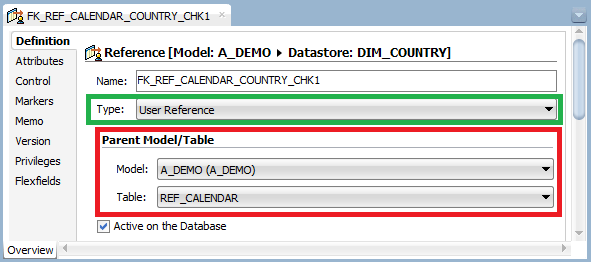

When determining the constraints, the data model and the parent table (more precisely DS) are selected from the available ones - in the figure below in red.

In the simple version (Type = User Reference / Database Reference), the connection condition sets the foreign key constraint; in the "advanced" version (Type = Complex User Reference), a more complex condition is allowed, as described above (the option is highlighted in green in the figure).

So, the limitations are spelled out in the data model - what's next? They should now be involved in the STATIC_CONTROL check. For FLOW_CONTROL there is an additional level of control of ACTIVATION of restrictions, which, when creating a mapping, is set in accordance with the settings available in the model, but can be redefined. Get to know him.

To do this, consider the Logical tab, selected when viewing a specific integration mapping.

It is necessary to select the resulting DS on it and go to its properties. Here you can activate / deactivate existing checks in the red blocks indicated.

But there are a number of nuances.

NN type checks can be activated / deactivated regardless of whether the Mandatory and Flow flags are set in the data model. Those. these settings are completely independent of the model and completely redefine them. Thus, changes made at the model level will not affect the existing mapping and will be taken into account only when creating a new one.

Checks like PK / AK / FK / CK can be activated / deactivated, but if the Flow flag is cleared in the model, then activating the restriction at the mapping level will not help - this check will not be performed in the FLOW_CONTROL mode, regardless of the value specified at the mapping level. The opposite works - the check can be disabled at the mapping level.

There is a feature of the behavior of ODI Studio 12c (version 12.1.3.0.0). When changing the Flow flag for PK / AK / FK / CK restrictions at the model level, this fact does NOT automatically appear in the existing mapping (the word does not appear / does not disappear in the Constarints window opposite the corresponding restriction). This will happen only when the flag value is re-selected in the interface. Therefore, in order to avoid misunderstandings in the behavior of the module, taking into account the previous nuance, when deactivating restrictions in the model, you must manually update all the mappings associated with it.

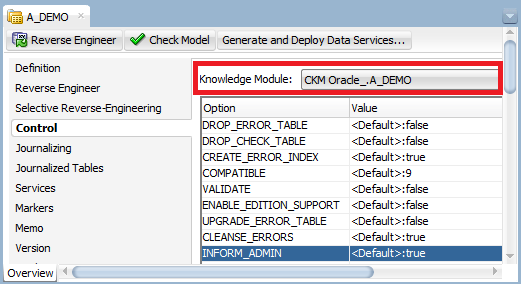

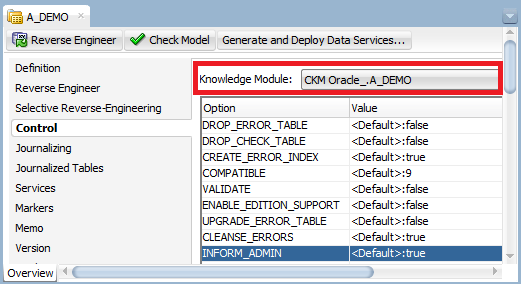

And the last thing that needs to be said in this article to complete the topic covered is how to use CKM in checks. For STATIC_CONTROL mode, the module must be specified in the data model settings.

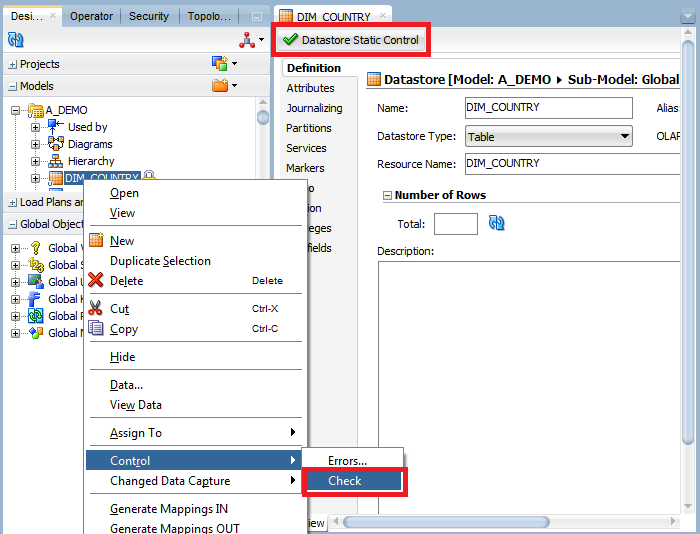

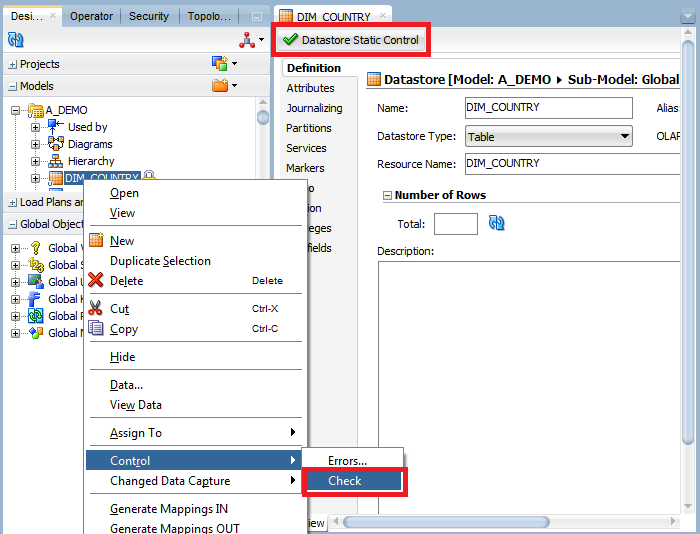

This will allow you to analyze the “purity” of data in any of the available DSs, for example, by selecting the appropriate context menu item on a specific DS or by clicking the Datastore Static Control button on the Definition tab when viewing DS.

To activate the STATIC_CONTROL / FLOW_CONTROL mode at the mapping level, you will need to specify the corresponding directive CKM_STATIC / CKM_FLOW in the code of the IKM module connected to the mapping.

Also, on the Physical tab in the mapping, specify the CKM module itself and make sure that when connecting IKM (highlighted in green), the FLOW_CONTROL option is activated.

This article was prepared by Alexei Polev, architect of the Jet Infosystems Applied Financial Systems Department.

For this we plan:

- talk about the types of checks included in the standard Oracle module and what settings you need to make in order to activate them;

- touch on the performance features, the possibilities to expand the standard module, the use of a substitution API, which is used to ensure the universality of the functionality being developed;

- using a specific example, consider the possibilities provided by Oracle Data Integrator Tools, and the option of transferring DEV-> PROD settings using the topology;

- evaluate the workplace of the operator who processes errors detected by the CKM module.

As an introduction, it is worth noting that all modules in ODI are proudly called the Knowledge Module, which, apparently, reflects the following facts:

- certain behavior is pre-wired into the module;

- the name of the module (Check, Loading, Integration, etc.) corresponds to the class of tasks solved by the module;

- within one class, specific modules can be selected or developed to solve specific types of problems.

CKM-module refers to the so-called template type of modules, i.e. involves (in addition to influencing the module’s own behavior through the installation of options) modification of the “body” or creation from scratch of its own modules of this class.

In this article we will begin the story with standard checks that are implemented in the module supplied with ODI CKM. Let's consider how they are set and what functionality can be implemented using them.

Immediately it is worth noting that it is possible to use the module in two modes (STATIC_CONTROL and FLOW_CONTROL): control of the source / end data or checking the correctness of the data obtained as a result of the integration mapping before placing them in the target table, respectively.

So, the "standard" includes five types of "speak for themselves" checks:

- Primary Key (PK) - the uniqueness of the primary key

- Alternate Key (AK) - key uniqueness

- Join (FK) - control the availability of related records

- Condition Check (CK) - Compliance

- Mandatory (NN) - required fields

The verification data is set at the level of the data model description in ODI, but can be activated / deactivated in various places depending on the operating mode of the module.

The first four types of checks are set at the Constarints level of a particular DataStore (DS - description of an entity - table, file, etc. - in the ODI metadata repository) and use separate records for each specified restriction.

In the illustration, we see a set of checks defined for two Data Store: DIM_COUNTRY and REF_CALENDAR. Note that when creating under one of the DS, both foreign key checks become visible under the other DS, connected by the FK condition.

The final type of validation, Mandatory, is set at the DS field level:

Let's look at what general and private parameters are set for different types of checks.

1. The modes for which the considered data control (Static / Flow) can be used correspond to the CKM operating modes - STATIC_CONTROL / FLOW_CONTROL, respectively. In the picture below it is red.

Flags in the green block (only for PK / AK / CK checks) indicate the need for the physical presence and activity of this restriction in the final system, respectively, if such a possibility is implied. The meaning of the Type = Database reference parameters and the Activate on Database flag to restrict the FK type carry the same meaning.

Or the value of the Database Condition to restrict the CK.

In fact, the parameters responsible for the physical presence and activity of the constraint are intended only to reflect the desired / existing state of the final system - their installation / removal does not directly affect the final system. These parameters are put down when building a data model based on the metadata of the final system (reverse engineering). Or, the corresponding definition of the constraint is added to the object generation script in the target system, which is automatically created based on the description of the data model - with an explicit request for creation.

2. Attributes participating in the restriction condition for PK / AK specify the fields included in the key:

for FK - reference fields (left) and the corresponding key fields in the parent table (right):

If the FK constraint type is specified as Complex User Reference, then the table link condition is specified in the Expression field

For CK, a condition is set that will be checked (it can be quite complicated).

Here it is worth saying that in the expression for both FK and CK it is allowed to use functions from the wildcard API (about them in the next article). However, for CK (under the condition Type = Oracle Data Integrator Condition), such a condition will pass the built-in check (green daw), but for FK it will not.

3. Individual settings.

A. For PK and AK.

The Primary Key or Alternate Key constraint type indicates the appropriate type of validation. The Not Unique Index option is used only as an indication of the need to create an additional index in order to increase productivity.

B. For FK

When determining the constraints, the data model and the parent table (more precisely DS) are selected from the available ones - in the figure below in red.

In the simple version (Type = User Reference / Database Reference), the connection condition sets the foreign key constraint; in the "advanced" version (Type = Complex User Reference), a more complex condition is allowed, as described above (the option is highlighted in green in the figure).

C. For CK and NN, options for existing settings were already covered when parsing other items

So, the limitations are spelled out in the data model - what's next? They should now be involved in the STATIC_CONTROL check. For FLOW_CONTROL there is an additional level of control of ACTIVATION of restrictions, which, when creating a mapping, is set in accordance with the settings available in the model, but can be redefined. Get to know him.

To do this, consider the Logical tab, selected when viewing a specific integration mapping.

It is necessary to select the resulting DS on it and go to its properties. Here you can activate / deactivate existing checks in the red blocks indicated.

But there are a number of nuances.

NN type checks can be activated / deactivated regardless of whether the Mandatory and Flow flags are set in the data model. Those. these settings are completely independent of the model and completely redefine them. Thus, changes made at the model level will not affect the existing mapping and will be taken into account only when creating a new one.

Checks like PK / AK / FK / CK can be activated / deactivated, but if the Flow flag is cleared in the model, then activating the restriction at the mapping level will not help - this check will not be performed in the FLOW_CONTROL mode, regardless of the value specified at the mapping level. The opposite works - the check can be disabled at the mapping level.

There is a feature of the behavior of ODI Studio 12c (version 12.1.3.0.0). When changing the Flow flag for PK / AK / FK / CK restrictions at the model level, this fact does NOT automatically appear in the existing mapping (the word does not appear / does not disappear in the Constarints window opposite the corresponding restriction). This will happen only when the flag value is re-selected in the interface. Therefore, in order to avoid misunderstandings in the behavior of the module, taking into account the previous nuance, when deactivating restrictions in the model, you must manually update all the mappings associated with it.

And the last thing that needs to be said in this article to complete the topic covered is how to use CKM in checks. For STATIC_CONTROL mode, the module must be specified in the data model settings.

This will allow you to analyze the “purity” of data in any of the available DSs, for example, by selecting the appropriate context menu item on a specific DS or by clicking the Datastore Static Control button on the Definition tab when viewing DS.

To activate the STATIC_CONTROL / FLOW_CONTROL mode at the mapping level, you will need to specify the corresponding directive CKM_STATIC / CKM_FLOW in the code of the IKM module connected to the mapping.

Also, on the Physical tab in the mapping, specify the CKM module itself and make sure that when connecting IKM (highlighted in green), the FLOW_CONTROL option is activated.

This article was prepared by Alexei Polev, architect of the Jet Infosystems Applied Financial Systems Department.