Night Sight: how Pixel phones see in the dark


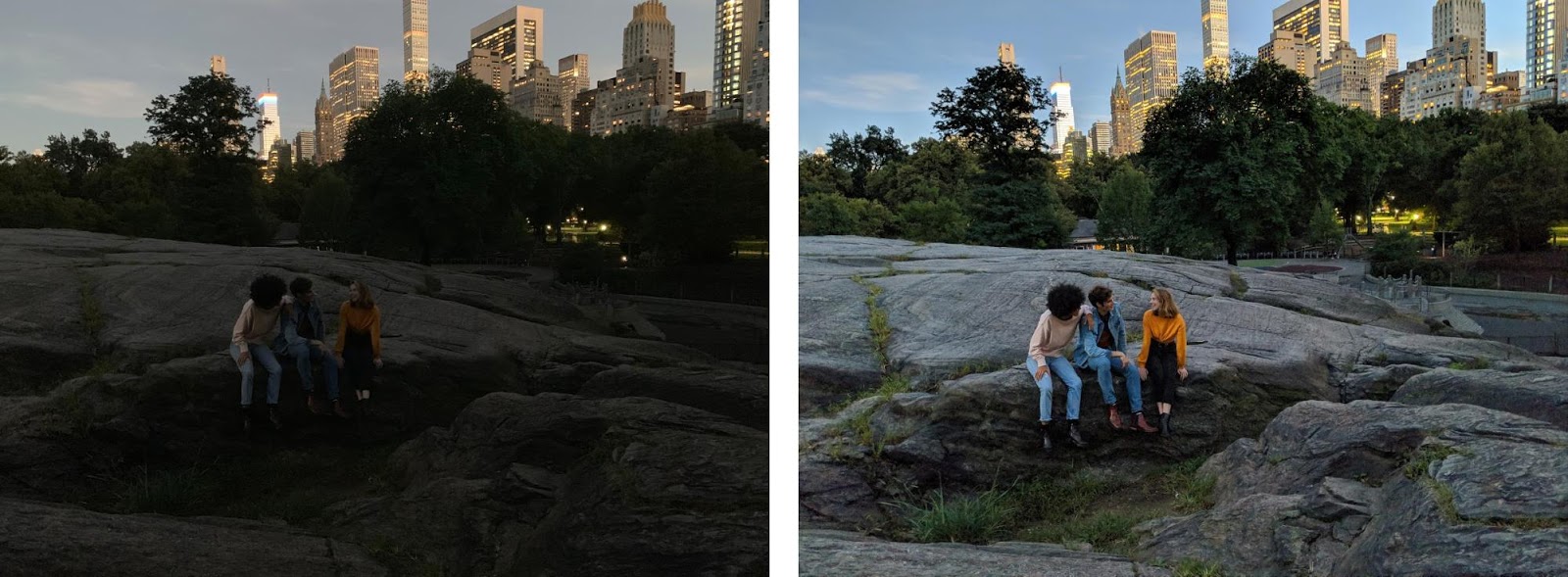

Left: iPhone XS (full-resolution photo). Right: Pixel 3 Night Sight (full-resolution photo).

Night Sight is a new feature of the Pixel Camera app that lets you take sharp, clean photos in very low light, even light so dim that you can hardly see anything with your own eyes. It works on the main and selfie cameras of all three generations of Pixel phones, and it requires neither a tripod nor a flash. In this article we explain why taking photos in low light is so hard, and we discuss the computational photography and machine learning techniques, built on top of HDR+, that make Night Sight work.

Why is it difficult to take photographs in poor light?

Anyone who has photographed a dimly lit scene is familiar with image noise, which looks like random variations in brightness from pixel to pixel. For smartphone cameras, with their small lenses and sensors, the main source of noise is the natural variation in the number of photons entering the lens, known as shot noise. Every camera suffers from it, and it would be present even with an ideal sensor. Real sensors are not ideal, however, so a second source of noise arises when the electric charge produced by light hitting each pixel is read out: read noise. These and other sources of randomness determine the signal-to-noise ratio (SNR), a measure of how strongly the image stands out from the noise. Fortunately, SNR grows in proportion to the square root of the exposure time (or faster), so the longer the exposure, the cleaner the picture. But in dim light it is hard to hold still long enough to take a good shot, and the subject may not hold still either.
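The square-root relationship can be checked with a small model. The sketch below assumes a simplified pixel with only two noise sources: shot noise (Poisson, so its standard deviation is the square root of the collected signal) and a fixed read noise. The photon rate and the read-noise figure are made-up illustrative values, not Pixel measurements.

```python
import math


def snr(signal_electrons: float, read_noise_electrons: float = 0.0) -> float:
    """SNR of one pixel: signal divided by combined shot and read noise."""
    shot_noise = math.sqrt(signal_electrons)  # Poisson: std = sqrt(mean)
    total_noise = math.sqrt(shot_noise ** 2 + read_noise_electrons ** 2)
    return signal_electrons / total_noise


photon_rate = 200.0  # hypothetical photoelectrons per second at one pixel
for exposure_s in (0.05, 0.2, 0.8):
    signal = photon_rate * exposure_s
    print(f"{exposure_s:.2f} s -> SNR {snr(signal, read_noise_electrons=3.0):.2f}")
```

With zero read noise, quadrupling the exposure exactly doubles the SNR. With read noise present the gain is somewhat larger, which corresponds to the "or faster" case.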

In 2014 we introduced HDR+, a computational photography technology that improves this situation by capturing a burst of frames, aligning them in software, and merging them. The main purpose of HDR+ is to improve dynamic range, that is, the ability to photograph scenes that span a wide range of brightness (for example, sunsets or backlit portraits). All generations of Pixel phones use HDR+. It turns out that merging multiple frames also reduces the effect of shot noise and read noise, so it improves SNR in dim light. To keep images sharp despite hand shake and subject motion, we use short exposures. We also discard parts of frames for which no good alignment can be found. This allows HDR+ to produce sharp images.
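A quick simulation illustrates why merging helps. It is only a sketch: noise is modeled as Gaussian rather than as true shot noise, the frames are assumed to be perfectly aligned already, and the merge is a plain average rather than the actual HDR+ merge.

```python
import random
import statistics


def noisy_frame(true_value, noise_std, n_pixels, rng):
    """One simulated frame of a flat gray scene with additive Gaussian noise."""
    return [rng.gauss(true_value, noise_std) for _ in range(n_pixels)]


def merge_frames(frames):
    """Average already-aligned frames pixel by pixel."""
    return [sum(pixel_stack) / len(frames) for pixel_stack in zip(*frames)]


def noise_reduction(n_frames, n_pixels=20000, seed=0):
    """Ratio of single-frame noise to merged-frame noise."""
    rng = random.Random(seed)
    frames = [noisy_frame(100.0, 10.0, n_pixels, rng) for _ in range(n_frames)]
    single = statistics.pstdev(frames[0])
    merged = statistics.pstdev(merge_frames(frames))
    return single / merged


print(f"9 frames reduce noise by a factor of about {noise_reduction(9):.2f}")
```

Averaging N frames with independent noise lowers the noise by roughly the square root of N, so nine frames give about a threefold improvement.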

How dark is dark?

But if capturing and merging multiple frames gives cleaner photos in low light, why not use HDR+ to merge several dozen frames so that we can, in effect, see in the dark? Let's start by defining "dark." When photographers discuss the light level of a scene, they often measure it in lux. Lux is the amount of light arriving at a surface per unit area, measured in lumens per square meter. To give you a rough feel for different light levels, here is a handy reference:

- 30,000 lux: sidewalk lit by direct sunlight.

- 10,000 lux: sidewalk on a clear day, in the shade.

- 1,000 lux: sidewalk on an overcast day.

- 300 lux: office lighting.

- 150 lux: desk lighting at home.

- 50 lux: an average restaurant.

- 20 lux: a restaurant with atmospheric lighting.

- 10 lux: the minimum for finding a matching pair of socks.

- 3 lux: sidewalk lit by street lamps.

- 1 lux: the limit for reading a newspaper.

- 0.6 lux: sidewalk lit by the full moon.

- 0.3 lux: you can't find your keys on the floor.

- 0.1 lux: you can't move around the house without a flashlight.

Smartphone cameras that take a single picture begin to struggle at about 30 lux. Phones that capture and merge several frames can cope down to about 3 lux, but in darker scenes they fall short and rely on the flash. With Night Sight, we set out to improve photo quality in the range from 3 lux down to 0.3 lux using a smartphone, a single press of the shutter button, and no flash. Making this work requires several key elements, the most important of which is capturing more frames.
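The thresholds in this paragraph can be written down as a small lookup. This is only a restatement of the approximate figures quoted above; the function name and the regime labels are illustrative, and real cameras do not switch modes at sharp boundaries.

```python
def capture_regime(lux: float) -> str:
    """Map a scene light level to the capture approach described in the text."""
    if lux >= 30:
        return "single frame"
    if lux >= 3:
        return "multi-frame merge"
    if lux >= 0.3:
        return "Night Sight target range"
    return "below Night Sight target range"


for level in (300, 20, 1, 0.1):
    print(f"{level} lux -> {capture_regime(level)}")
```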

Capturing the data

Increasing the exposure time of each frame raises the SNR and gives a cleaner image, but it causes two problems. First, the default shooting mode on Pixel phones uses a zero shutter lag (ZSL) protocol, which limits exposure time. The camera app starts capturing frames as soon as you open it and stores them in a circular buffer that constantly discards old frames to make room for new ones. When you press the shutter button, the camera sends the most recent 9 to 15 frames to the HDR+ or Super Res Zoom software. This lets you capture exactly the moment you want, hence the name "zero shutter lag." However, because these same frames are shown on the screen to help you aim, HDR+ limits exposures to at most 66 ms no matter how dim the scene is, which lets the viewfinder maintain a rate of at least 15 frames per second. For dimmer scenes where longer exposures are needed, Night Sight uses positive shutter lag (PSL), which waits until you press the shutter button before it starts capturing frames. With PSL you need to hold the phone still for a short time after pressing the button, but this mode allows longer exposures, which improves SNR at the lowest light levels.
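The circular buffer behind zero shutter lag can be sketched with a fixed-size deque. The class and method names below are hypothetical, and the frames are stand-in integers rather than image data; the point is only that the newest frames are already in memory when the shutter is pressed, and that a 66 ms exposure cap corresponds to roughly 15 frames per second.

```python
from collections import deque


class ZslBuffer:
    """Keeps only the most recent frames; old ones are dropped automatically."""

    def __init__(self, capacity: int = 15):
        self._frames = deque(maxlen=capacity)

    def on_new_frame(self, frame) -> None:
        # Called continuously while the viewfinder is running.
        self._frames.append(frame)

    def on_shutter_press(self, count: int = 9) -> list:
        # Hand the most recent `count` frames to the merge step.
        return list(self._frames)[-count:]


def viewfinder_fps(max_exposure_s: float) -> float:
    """Highest frame rate possible if every frame uses the maximum exposure."""
    return 1.0 / max_exposure_s


buffer = ZslBuffer(capacity=15)
for frame_id in range(100):
    buffer.on_new_frame(frame_id)
print(buffer.on_shutter_press(9))
print(f"{viewfinder_fps(0.066):.1f} frames per second at 66 ms")
```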

The second problem with increasing the per-frame exposure time is motion blur, caused either by hand shake or by objects moving in the scene. Optical image stabilization (OIS), available on Pixel 2 and 3, reduces the effect of hand shake for moderate exposure times (up to about 1/8 s), but it does not help with longer exposures or with moving objects. To combat the blur that OIS cannot fix, the default mode on Pixel 3 uses motion metering, which applies optical flow to measure recent scene motion and chooses an exposure time that minimizes blur. Pixel 1 and 2 do not use motion metering in their default mode, but all three phones use it in Night Sight mode, increasing the per-frame exposure time to as much as 333 ms when there is no motion. On Pixel 1, which has no OIS, we increase the exposure time less (and for the selfie cameras, which also lack OIS, the increase is more modest still). If the camera is stabilized (braced against a wall or mounted on a tripod), the exposure of each frame can be increased to as much as one second. In addition to varying the per-frame exposure, we also vary the number of frames, from 6 if the phone is on a tripod to 15 if it is handheld. These frame limits prevent user fatigue (and the need for a “cancel” button). So, depending on how

An example of using shorter exposures when motion is detected:

Left: a burst of 15 frames captured by one of two Pixel 3 phones placed side by side. Center: a Night Sight shot with motion metering disabled, which causes the phone to use 73 ms exposures. The dog's head is blurred. Right: a Night Sight shot with motion metering enabled, which uses 48 ms exposures. The blur is noticeably smaller.

An example of using a long exposure when the phone is on a tripod:

Left: crop from a handheld Night Sight shot of the night sky (full photo). My hands were shaking slightly, so Night Sight chose 333 ms × 15 frames = 5.0 seconds. Right: tripod shot (full photo). No shake was detected, so Night Sight used 1.0 s × 6 frames = 6.0 seconds. The sky is cleaner, with less noise and more visible stars.
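The trade-off between exposure time and blur can be sketched as follows. The text gives only the limits (333 ms handheld, one second when stabilized, 6 to 15 frames), not the selection rule, so the rule here is an assumption: motion is expressed as a hypothetical speed in pixels per second, and the exposure is shortened until the blur stays within a hypothetical budget of a few pixels.

```python
HANDHELD_MAX_S = 0.333    # per-frame limit quoted in the text when handheld
STABILIZED_MAX_S = 1.0    # per-frame limit quoted in the text on a tripod


def choose_exposure(motion_px_per_s: float, stabilized: bool,
                    blur_budget_px: float = 2.0) -> float:
    """Longest exposure that keeps blur within the budget (assumed rule)."""
    limit = STABILIZED_MAX_S if stabilized else HANDHELD_MAX_S
    if motion_px_per_s <= 0:
        return limit
    return min(limit, blur_budget_px / motion_px_per_s)


def capture_plan(motion_px_per_s: float, stabilized: bool):
    """Return (exposure per frame, frame count, total capture time)."""
    exposure = choose_exposure(motion_px_per_s, stabilized)
    frames = 6 if stabilized else 15  # the two extremes given in the text
    return exposure, frames, exposure * frames


print(capture_plan(0.0, stabilized=False))   # still scene, handheld
print(capture_plan(0.0, stabilized=True))    # still scene, tripod
print(capture_plan(40.0, stabilized=False))  # moving subject, handheld
```

With no motion, the two plans reproduce the totals in the captions above: about 5 seconds handheld and 6 seconds on a tripod.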

Align and merge

The idea of averaging frames to reduce noise is as old as digital imaging itself. In astrophotography it is called exposure stacking. Although the technique itself is simple, the hard part is aligning the frames correctly when the camera is handheld. We began working on this problem in 2010 with the Synthcam app. It captured frames continuously, aligned and merged them in real time at low resolution, and displayed the merged result, which became cleaner the longer you watched.

Night Sight uses a similar principle, but at full sensor resolution and not in real time. On Pixel 1 and 2 we use the HDR+ merging algorithm, modified and retuned to strengthen its ability to detect and reject misaligned parts of frames, even in very noisy scenes. On Pixel 3 we use Super Res Zoom, which is applied whether or not you are zooming. Although Super Res Zoom was developed for super-resolution, it also reduces noise, because it averages several frames together. For some night scenes Super Res Zoom gives better results than HDR+, but it requires the faster processor of the Pixel 3.
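The align, reject, and merge idea can be shown on one-dimensional signals. This is a deliberately simplified stand-in, not the HDR+ or Super Res Zoom algorithm: alignment is a brute-force search over whole-sample shifts, and rejection is a fixed threshold (an arbitrary value chosen for illustration) on the difference from the reference frame.

```python
def best_shift(reference, frame, max_shift=3):
    """Integer shift s for which frame[i + s] best matches reference[i]."""
    def cost(shift):
        diffs = [abs(reference[i] - frame[i + shift])
                 for i in range(len(reference))
                 if 0 <= i + shift < len(frame)]
        return sum(diffs) / len(diffs)
    return min(range(-max_shift, max_shift + 1), key=cost)


def align_and_merge(reference, frames, max_shift=3, reject_threshold=20.0):
    """Average aligned samples, skipping those that disagree with the reference."""
    shifts = [best_shift(reference, f, max_shift) for f in frames]
    merged = []
    for i, ref_value in enumerate(reference):
        samples = [ref_value]
        for frame, shift in zip(frames, shifts):
            j = i + shift
            if 0 <= j < len(frame) and abs(frame[j] - ref_value) <= reject_threshold:
                samples.append(frame[j])
        merged.append(sum(samples) / len(samples))
    return merged


reference = [0, 0, 10, 50, 100, 50, 10, 0, 0, 0]
shifted = [0, 0] + reference[:-2]   # same scene, moved by two samples
with_mover = list(shifted)
with_mover[6] = 255                 # something moved through this frame
print(best_shift(reference, shifted))
print(align_and_merge(reference, [shifted, with_mover]))
```

The bright outlier in the third frame is excluded from the average, so the merged result keeps the reference value at that position instead of showing a ghost.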

By the way, all of this happens on the phone in a few seconds. If you quickly switch to the photo gallery (after the capture has finished!), you can watch the image “develop” as HDR+ or Super Res Zoom completes its work.

Other difficulties

Although the basic ideas described above sound simple, some aspects of developing Night Sight proved very difficult when there is little light:

1. Auto white balance (AWB) fails in low light

People are good at perceiving the true colors of things even under colored lighting (or through sunglasses), an ability called color constancy. However, this process often breaks down when we take a photo under one kind of lighting and view it under another; the photo looks tinted to us. To correct for this effect, cameras adjust the colors of images to partially or fully compensate for the dominant color of the illumination (sometimes called color temperature), in effect shifting the colors so that the scene appears to be lit by neutral white light. This process is called auto white balance.

The trouble is that this is what mathematicians call an ill-posed problem. Is the snow captured by the camera actually blue? Or is it white snow lit by a blue sky? Probably the latter. This ambiguity makes white balancing hard. The AWB algorithm used in modes other than Night Sight works well, but in dim or colored lighting (for example, under sodium vapor lamps) it has trouble working out the color of the illumination.
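To make the ambiguity concrete, here is the classic gray-world heuristic. It is not the algorithm used by the Pixel camera; it is a textbook method that resolves the ambiguity by assuming the scene averages out to neutral gray, and it goes wrong whenever that assumption does (a scene that really is mostly blue gets "corrected" anyway). Pixels are plain RGB tuples and every channel is assumed to have a nonzero mean.

```python
def gray_world_gains(pixels):
    """Per-channel gains that make the average color of the image neutral."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    return tuple(gray / m for m in means)


def apply_gains(pixels, gains):
    """Scale each channel by its gain, clipping to the 8-bit range."""
    return [tuple(min(255.0, value * gain) for value, gain in zip(p, gains))
            for p in pixels]


# A gray scene photographed under warm (orange-tinted) light.
tinted = [(200, 150, 100), (100, 75, 50)]
gains = gray_world_gains(tinted)
print(gains)
print(apply_gains(tinted, gains))
```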

To solve these problems, we developed a learning-based AWB algorithm, trained to distinguish a well-balanced image from a poorly balanced one. When a photo is poorly balanced, the algorithm can suggest how to shift its colors so that the lighting looks more neutral. Training the algorithm required photographing many different scenes with Pixel phones and then correcting their white balance by hand while viewing the photos on a color-calibrated monitor. You can see the algorithm at work by comparing two photos of the same dimly lit scene, taken with a Pixel 3 in different ways:

Left: the white balancer in the default camera mode does not know how yellow the light on this shack on the Vancouver coast was (full photo). Right: our learning-based algorithm does a better job (full photo).

2. Tone mapping of scenes that are too dark to see

The goal of Night Sight is to photograph scenes so dark that you can barely make them out with the naked eye, a kind of superpower! A related problem is that in very dim light people stop seeing in color, because the cones in our retinas stop working, leaving only the rods, which cannot distinguish different wavelengths of light. Scenes do not lose their colors at night; we simply cannot see them. We want Night Sight photos to be in color. That too is part of the superpower, and another potential source of conflict. Finally, our rods have limited spatial acuity, which is why things look blurry at night. We want Night Sight photos to be sharp, with more detail than you can see with your own eyes.

For example, if you put a DSLR on a tripod and take a very long exposure (several minutes), or stack several shorter exposures, a night photo will look like a daytime photo. Shadows will show detail, and the scene will be colorful and sharp. Look at the photo below, taken with a DSLR: stars are visible, so it must be night, but the grass is green, the sky is blue, and the moon casts tree shadows that look like shadows cast by the sun. The effect is pleasant, but it is not always what you want, and if you share such a photo with a friend, they will be at a loss as to how you took it.

Yosemite Valley at night. Canon DSLR, 28mm f/4 lens, 3-minute exposure, ISO 100 (full photo).

Artists have known for hundreds of years how to make a picture look like a night shot:

“A Philosopher Explaining the Model of the Solar System,” Joseph Wright, 1766. The artist uses the full range of tones, from white to black, yet the scene depicted is clearly dark. How did he do it? He increased the contrast, surrounded the scene with darkness, and let the shadows drop to black, with no visible detail.

In Night Sight we use similar tricks, in particular an S-shaped curve in tone mapping. However, it is hard to strike a balance between giving you “magic superpowers” and still reminding you when the photo was taken. Below is a photo that partially succeeds:

Pixel 3, 6 seconds with Night Sight, on a tripod (full photo).
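An S-shaped tone curve can be illustrated with the smoothstep polynomial. The curve actually used by Night Sight is not given in the text, so this particular formula is only an assumed example of the shape: it pushes shadows toward black and highlights toward white, which raises contrast in the midtones.

```python
def s_curve(x: float) -> float:
    """Smoothstep S-curve on brightness values in the range 0..1."""
    x = min(1.0, max(0.0, x))      # clamp out-of-range input
    return x * x * (3.0 - 2.0 * x)


def tone_map(pixels):
    """Apply the curve to a list of normalized brightness values."""
    return [s_curve(p) for p in pixels]


for value in (0.0, 0.2, 0.5, 0.8, 1.0):
    print(f"{value:.1f} -> {s_curve(value):.3f}")
```

Values below the midpoint come out darker than they went in and values above it come out brighter, while black, mid-gray, and white stay fixed.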

How dark can it be for shooting with Night Sight?

Below about 0.3 lux, autofocus begins to fail. If you can't see your car keys on the floor, your smartphone can't focus either. To address this, we added two manual focus buttons to Night Sight on Pixel 3: “Close” focuses at a distance of just over a meter, and “Far” at 4 meters. The latter is the hyperfocal distance of the lens, which means that everything from half that distance (2 m) to infinity should be in focus. We are also working to improve Night Sight's ability to autofocus in low light. You can still take great photos below 0.3 lux, and even astrophotography, as demonstrated in this article, but you will need a tripod, manual focus, and a special app that uses the Android Camera2 API.
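The "half the hyperfocal distance to infinity" rule follows from the standard depth-of-field approximations for a lens focused at distance s with hyperfocal distance H (valid when both are much larger than the focal length). The sketch below uses the 4 m figure from the text; the 1.2 m value for the “Close” button is an assumed stand-in for "just over a meter."

```python
import math


def near_limit(focus_m: float, hyperfocal_m: float) -> float:
    """Closest distance in acceptable focus: H * s / (H + s)."""
    return hyperfocal_m * focus_m / (hyperfocal_m + focus_m)


def far_limit(focus_m: float, hyperfocal_m: float) -> float:
    """Farthest distance in acceptable focus: H * s / (H - s), or infinity."""
    if focus_m >= hyperfocal_m:
        return math.inf
    return hyperfocal_m * focus_m / (hyperfocal_m - focus_m)


HYPERFOCAL_M = 4.0  # the "Far" focus distance given in the text

print(near_limit(4.0, HYPERFOCAL_M), far_limit(4.0, HYPERFOCAL_M))
print(near_limit(1.2, HYPERFOCAL_M), far_limit(1.2, HYPERFOCAL_M))
```

Focusing at the hyperfocal distance gives the deepest zone of sharpness, which is why it is a sensible default when the camera cannot see well enough to focus on its own.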

How far can we go? Eventually, at a low enough light level, read noise swamps the signal from the photons collected by the sensor. There are other sources of noise as well, including dark current, which grows in proportion to exposure time and depends on temperature. To avoid such effects, biologists cool their cameras to below -20 °C when photographing fluorescent specimens, but we don't recommend doing this with Pixel phones! Very noisy images are also hard to align. And even if we solved all of these problems, the wind blows, the trees sway, and the stars and clouds move. Taking photos with very long exposures is hard.

How to get the most out of Night Sight

Night Sight doesn't just take great pictures in low light; it is also fun to use, because it takes pictures even when you can see almost nothing. We show a suggestion chip on the screen when the scene is dark enough that Night Sight would give a better photo, but don't limit yourself to those cases. Just after sunset, at a concert, or in the city, Night Sight takes clean, low-noise photos and makes them brighter than reality. Here are some examples of photos taken with Night Sight, along with A/B comparisons. And here are some tips for using Night Sight:

- Night Sight does not work in complete darkness, so choose a scene with at least some light.

- Soft, uniform lighting works better than harsh lighting that creates dark shadows.

- To avoid glare, try to keep bright light sources out of the field of view.

- To increase exposure, tap on objects in the scene, then move the exposure slider. Tap again to cancel.

- To decrease exposure, take the photo and darken it afterwards in a photo editor; it will have less noise.

- If the scene is so dark that autofocus fails, tap on a high-contrast edge or on the edge of a light source.

- If that doesn't work, use the “Close” and “Far” buttons.

- To maximize sharpness, brace the phone against a wall or tree, or rest it on a table or rock.

- Night Sight also works for selfies, as shown in the A/B album, with optional illumination from the screen.

Manual focus buttons (Pixel 3 only).

Night Sight works best on Pixel 3. We also made it available on Pixel 2 and the original Pixel, although the latter uses shorter exposures because it lacks optical image stabilization. Also, the learning-based white balancer is trained for Pixel 3, so it is less accurate on older phones. By the way, we brighten the viewfinder in Night Sight mode to make it easier to frame your shot, but the viewfinder runs at an exposure of 1/15 s, so it will look noisy and is not a fair indication of the quality of the final photo. So give it a chance: frame your shot and press the shutter. You will often be pleasantly surprised!