96 cores and code optimization of ant route search algorithm

- Transfer

Today we’ll talk about code optimization, which implements an ant algorithm for finding optimal paths on graphs. We will look for bottlenecks in the program using Intel VTune Amplifier XE 2016 Update 2, and optimize it using MPI , OpenMP and the Intel Threading Building Blocks library.

Our goal is to ensure the effective operation of the program on a computer with four Intel Xeon E7-8890 v4 processors . The system is equipped with 512 GB of RAM, Linux 3.10.0-327.el7.x86_64 is installed on it, the code was compiled using Intel Parallel Studio XE 2016 U2.

The problem of finding the optimal route in the transport network is known as the “traveling salesman problem”. In practice, this is, for example, finding the best ways to transport goods. Initially, “efficiency” in tasks of this kind meant choosing the cheapest way, but over the past few decades the concept of “route cost” has expanded. Now, this includes environmental impact, the price of energy, and time. In addition to this, the globalization of business and supply chains has led to the fact that the size and complexity of transport networks, and hence the models on which the calculations are based, have grown significantly. Typical route optimization problems are now classified as NP-hard. Typically, deterministic methods are not suitable for solving such problems.

With the development of distributed and multi-core computing systems, heuristic methods for solving problems have been developed and successfully applied, in particular, the so-called ant algorithm (Ant Colony Optimization, ACO). Now we will look at the process of analyzing the basic implementation of ACO and talk about the phased improvement of the code. Looking ahead, we note that our optimization technique allowed us to bring the program to performance and scalability levels close to theoretically achievable.

Let's talk about the algorithm that is used in our program. It is based on the behavior of an ant colony. Insects are looking for food sources, marking the paths passed by pheromones, which attract other ants. Over time, pheromones evaporate, that is, longer paths become less attractive than shorter ones, or those that can be traveled quickly. As a result, the shorter or faster the path, the more ants it is able to interest, while each of them, passing along the path, makes it even more attractive.

The figure below shows an example of a transport network. Solid lines mark direct routes between nodes, and dotted lines indicate indirect routes.

An example of a transport network

Simple computer agents are able, using a probabilistic approach, to find solutions to transport problems using an ant algorithm. Parallel implementations of this algorithm, differing, however, by some limitations, have already been investigated in the past.

So, in 2002, Marcus Randall et al published a material (A Parallel Implementation of Ant Colony Optimization, Journal of Parallel and Distributed Computing 62), which shows an approach to parallelizing a task, which led to an acceptable acceleration of calculations. However, in this implementation, to maintain the pheromone matrix, a large number of interactions between the “ants” were required, which acted in parallel, each of which was an independent unit of the model. As a result, the performance of the solution was limited by the Message Passing Interface (MPI) between the ants.

In 2015, material was published (Veluscek, M., T. Kalganova, P. Broomhead, A. Grichnik, Composite goal methods for transportation network optimization, Expert Systems with Applications 42), which describes the methodology for optimizing the transport network using technology OpenMP and shared memory. However, this approach is only suitable for systems with a relatively small number of processing cores and threads.

Here is a block diagram of the underlying architecture for the parallel implementation of the ant algorithm. It was with her that we started experiments.

Scheme of an unoptimized implementation of an ant algorithm

. This diagram shows how many iterative processes are launched every “month”. In each of them, a group of “ants” is released to the network, which build pheromone matrices. Each iterative process is completely independent, it is executed in its own thread.

It uses a static distribution of tasks, each OpenMP thread does its part, finding a local solution. After all threads have completed execution, the main thread compares the local solutions they found and selects the best that becomes global.

One of the fastest ways to find out if an application scales effectively with an increase in the number of processing cores available to it is as follows. First get a basic performance indicator on one processor (NUMA node). Then this indicator is compared with the results of measuring the performance at startup on several processors, both with and without Hyper-Threading technology. In an ideal scenario, assuming that the performance depends only on the number of processing cores, a system with two sockets should show a performance that is double that of a system with one. Accordingly, four sockets should give a fourfold increase in performance.

In the figure below you can see the test results of the basic version of the application. Now our code is far from ideal. After the number of sockets exceeded two (48 cores), the program does not scale very well. On four sockets with Hyper-Threading enabled (192 logical cores), performance is even lower than when using a single socket.

Testing the basic non-optimized implementation of the algorithm

This is not at all what we need, so it's time to explore the program using VTune Amplifier.

In order to find out what prevents the application from working normally on multiple processors, we will use the VTune Amplifier XE 2016 Hotspot analysis. We will look for the most loaded sections of the program. In the VTune Amplifier measurements, a reduced workload (384 iterative processes) was used to limit the size of the data being collected. Other trials used full load (1000 iterations).

The figure below shows the VTune report. In particular, we are interested in the indicators in the Top Hotspots group and the Serial Time indicator, which allows us to find out the time taken for sequential execution of the code.

Top Hotspots Report

It can be seen from the report that the application spends a lot of time sequentially executing code, which directly affects the parallel use of system resources. The most loaded part of the program is the memory allocation module from the standard library for working with strings, which does not scale well enough with a large number of cores. This is an important find. The fact is that OpenMP uses one shared memory pool, so a huge number of parallel calls from different threads to the string constructor or to the memory allocation module for objects (using the new operator) make memory a bottleneck. If you look at the CPA Usage indicator below, you can find that the application, although it uses all 96 available cores, does it inefficiently, loading them only in short periods of time.

Using the processor

If we look at what the threads are doing, we will see that the load on them is not balanced.

Unbalanced load

So, the main thread (Master) at the end of each "month" performs calculations, and the remaining threads do nothing useful at this time.

Now, after analyzing the code, we’ll take care of its optimization.

In order to get rid of the large set of OpenMP streams that is present in the base implementation, we used the standard “master-slave” approach and added another level of parallelism to our application. Namely, now MPI processes that are executed in parallel, each of which, in turn, contains a certain number of OpenMP threads, are responsible for the calculations in separate iterations. Now the loads associated with allocating memory for strings and objects are distributed across MPI processes. Such a hybrid MPI-OpenMP implementation of the ACO algorithm is shown in the flowchart below.

Optimized Implementation # 1

Testing What We've Got Using VTune Amplifier

We are exploring an optimized version of the application using the same methodology as we studied its basic version. The figure below shows the Top Hotspots report, which shows that the program now spends less time on memory allocation operations for strings.

Top Hotspots report

And here are the histograms of processor usage in the base (left) and optimized version of the program.

CPU Usage Histograms

Here's how thread loading now looks. It can be seen that it is balanced much better than before.

Balanced load

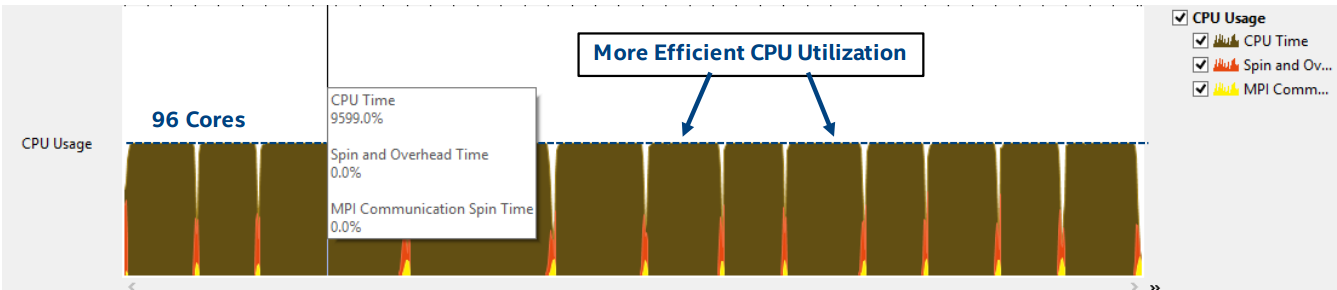

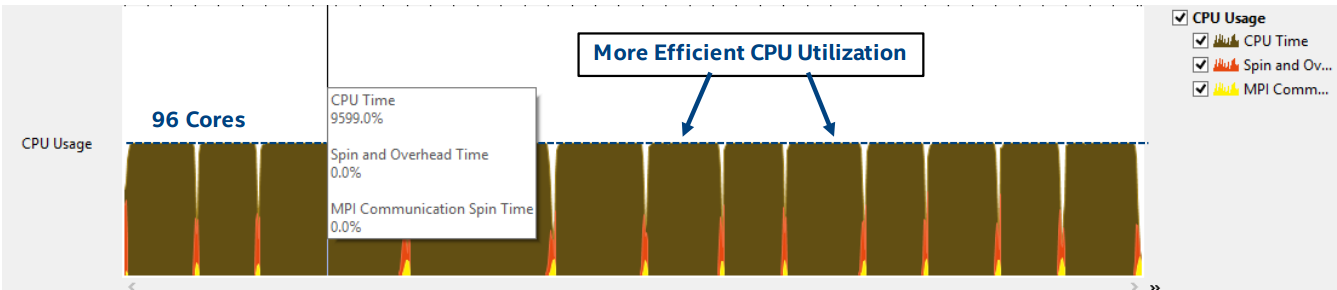

In the figure below, you can see that all available 96 cores are almost completely loaded.

CPU usage

Unfortunately, there is still too much time spent waiting in OpenMP streams and when exchanging MPI data, when the MPI process that finds the best solution sends data to the main process to update the results file. We assumed that this is due to the fact that the system is overloaded with MPI communication operations.

MPI uses a distributed memory interface, with each process working with a separate memory pool. As a result, the modification of objects and data structures by one process is not visible to another, but at the same time, data between processes must be transmitted using the MPI Send and Receive mechanisms. The same applies to the transfer to the main process of the best solution found in the current “month”.

The found global solution is a complex C ++ object, which consists of a number of objects of derived classes, smart pointers with data and other objects from the STL template. Since MPI communication operations do not support the exchange of complex C ++ objects by default, using Send and Receive mechanisms requires serialization, during which the objects are converted to byte streams before sending, and then, after receiving, the streams are converted to objects again.

The load created by serialization is constant. It occurs at most once a “month” (or does not occur at all if the main process, rank 0, finds the best solution that will be recognized as global), regardless of the number of running MPI processes. This is very important in order to minimize the MPI communication operations during the transition to program execution on multiple cores.

In the above figure, additional loads are highlighted in yellow (MPI communication operations) and red (standby and overload).

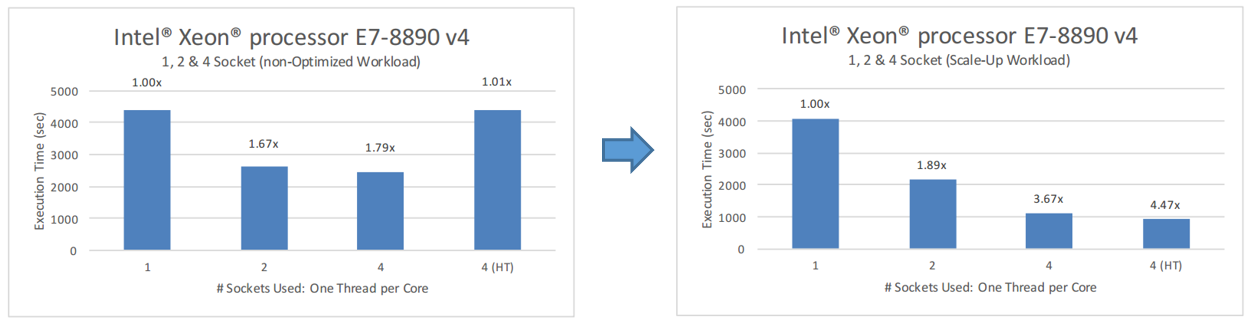

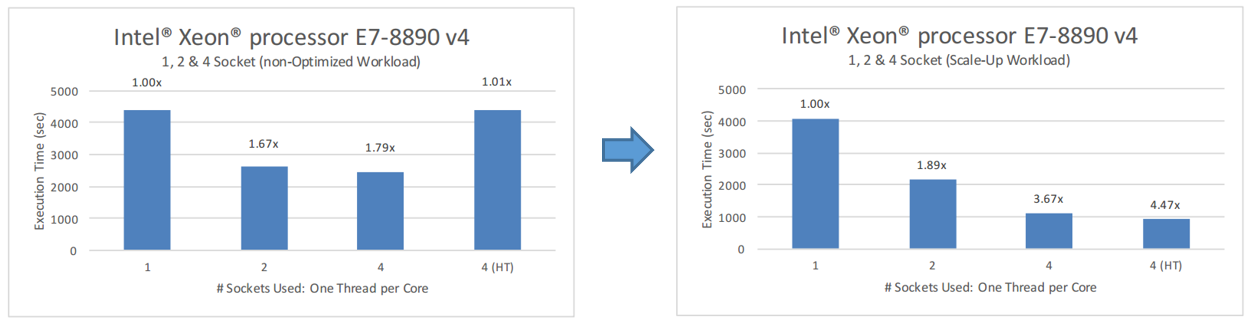

The hybrid MPI-OpenMP version of the program showed much better results in terms of load balancing between MPI processes and OpenMP threads. She also demonstrated a much more efficient use of the large number of cores available on systems with Intel Xeon E7-8890 processors. Here is what the test results of the current version of the program look like in comparison with the base one.

Comparison of the results of the base and optimized versions of the program

Here you can see that the program scales much better with the increase in the number of available cores. Performance gains are also seen when Hyper-Threading is enabled.

We have achieved good results, but the optimization work has not yet been completed. We will use the Intel TBB library to further improve the performance of our code.

Studying the most loaded sections of code for the hybrid MPI-OpenMP application implementation, we noticed that a significant proportion of the execution time still falls on the standard library for working with strings. We decided to check whether the use of the dynamic memory allocation library from Intel TBB would improve the situation . This library offers several memory allocation templates that are similar to the standard std: allocator class from STL, and also include scalable_allocator and cache_aligned_allocator. These patterns help solve two critical groups of concurrent programming problems.

The first group is the problem of scaling. They arise due to the fact that memory allocation mechanisms are sometimes forced to compete for a single common pool, moreover, due to the initial serial device device, only one thread can allocate memory at a time.

The second group of problems is related to shared access to resources. For example, a situation is possible when two threads try to access different words of the same cache line. Since the smallest unit of information exchange between processor caches is a line, it will be transmitted between processors even when each of them works with different words in this line. False sharing can hurt performance, as moving a cache line can take hundreds of clock cycles.

One of the easiest ways to find out if Intel TBB is good for the application is to replace the standard dynamic memory allocation function with a function from the Intel TBB library libtbbmalloc_proxy.so.2. To do this, just load the library at program startup using the LB_PRELOAD environment variable (without changing the executable file) or associate the executable file with the library.

Solving the most important scaling problem that arises when using standard memory allocation mechanisms, the Intel TBB dynamic memory allocation library provides an additional 6% performance compared to the hybrid MPI-OpenMP version of the application.

Performance Improvement with Intel TBB

At this stage, we decided to study the impact on the performance of various combinations of MPI processes and OpenMP threads under the same load. The experiment used all 192 available logical cores, that is, 4 processors were involved and Hyper-Threading technology was enabled. During the tests, we found the optimal ratio of MPI processes and OpenMP streams. Namely, the best result was achieved using 64 MPI processes, each of which executed 3 OpenMP streams.

Performance comparison for various combinations of MPI processes and OpenMP streams.

A study of the basic parallel implementation of the ant algorithm allowed us to identify scaling problems associated with memory allocation mechanisms for strings and object constructors.

The first stage of optimization, thanks to the application of a hybrid approach using MPI and OpenMP, allowed to achieve better use of processor resources, which significantly increased productivity. However, the program still spent too much time allocating memory.

At the second stage, thanks to the library for dynamic memory allocation from Intel TBB, it was possible to increase productivity by another 6%.

During the third stage of performance improvement, it was found out that for our program a combination of 64 MPI processes is best suited, each of which runs 3 OpenMP streams. At the same time, the code works well on all 192 logical cores. Here are the final optimization results.

Optimization results

As a result, after all the improvements, the program worked 5.3 times faster than its basic version. We believe this is a worthy result, which is worth the effort spent on research and code optimization.

Our goal is to ensure the effective operation of the program on a computer with four Intel Xeon E7-8890 v4 processors . The system is equipped with 512 GB of RAM, Linux 3.10.0-327.el7.x86_64 is installed on it, the code was compiled using Intel Parallel Studio XE 2016 U2.

The problem of finding the optimal route in the transport network is known as the “traveling salesman problem”. In practice, this is, for example, finding the best ways to transport goods. Initially, “efficiency” in tasks of this kind meant choosing the cheapest way, but over the past few decades the concept of “route cost” has expanded. Now, this includes environmental impact, the price of energy, and time. In addition to this, the globalization of business and supply chains has led to the fact that the size and complexity of transport networks, and hence the models on which the calculations are based, have grown significantly. Typical route optimization problems are now classified as NP-hard. Typically, deterministic methods are not suitable for solving such problems.

With the development of distributed and multi-core computing systems, heuristic methods for solving problems have been developed and successfully applied, in particular, the so-called ant algorithm (Ant Colony Optimization, ACO). Now we will look at the process of analyzing the basic implementation of ACO and talk about the phased improvement of the code. Looking ahead, we note that our optimization technique allowed us to bring the program to performance and scalability levels close to theoretically achievable.

About the ant algorithm

Let's talk about the algorithm that is used in our program. It is based on the behavior of an ant colony. Insects are looking for food sources, marking the paths passed by pheromones, which attract other ants. Over time, pheromones evaporate, that is, longer paths become less attractive than shorter ones, or those that can be traveled quickly. As a result, the shorter or faster the path, the more ants it is able to interest, while each of them, passing along the path, makes it even more attractive.

The figure below shows an example of a transport network. Solid lines mark direct routes between nodes, and dotted lines indicate indirect routes.

An example of a transport network

Simple computer agents are able, using a probabilistic approach, to find solutions to transport problems using an ant algorithm. Parallel implementations of this algorithm, differing, however, by some limitations, have already been investigated in the past.

So, in 2002, Marcus Randall et al published a material (A Parallel Implementation of Ant Colony Optimization, Journal of Parallel and Distributed Computing 62), which shows an approach to parallelizing a task, which led to an acceptable acceleration of calculations. However, in this implementation, to maintain the pheromone matrix, a large number of interactions between the “ants” were required, which acted in parallel, each of which was an independent unit of the model. As a result, the performance of the solution was limited by the Message Passing Interface (MPI) between the ants.

In 2015, material was published (Veluscek, M., T. Kalganova, P. Broomhead, A. Grichnik, Composite goal methods for transportation network optimization, Expert Systems with Applications 42), which describes the methodology for optimizing the transport network using technology OpenMP and shared memory. However, this approach is only suitable for systems with a relatively small number of processing cores and threads.

Basic implementation of the algorithm

Here is a block diagram of the underlying architecture for the parallel implementation of the ant algorithm. It was with her that we started experiments.

Scheme of an unoptimized implementation of an ant algorithm

. This diagram shows how many iterative processes are launched every “month”. In each of them, a group of “ants” is released to the network, which build pheromone matrices. Each iterative process is completely independent, it is executed in its own thread.

It uses a static distribution of tasks, each OpenMP thread does its part, finding a local solution. After all threads have completed execution, the main thread compares the local solutions they found and selects the best that becomes global.

Basic Test Results

One of the fastest ways to find out if an application scales effectively with an increase in the number of processing cores available to it is as follows. First get a basic performance indicator on one processor (NUMA node). Then this indicator is compared with the results of measuring the performance at startup on several processors, both with and without Hyper-Threading technology. In an ideal scenario, assuming that the performance depends only on the number of processing cores, a system with two sockets should show a performance that is double that of a system with one. Accordingly, four sockets should give a fourfold increase in performance.

In the figure below you can see the test results of the basic version of the application. Now our code is far from ideal. After the number of sockets exceeded two (48 cores), the program does not scale very well. On four sockets with Hyper-Threading enabled (192 logical cores), performance is even lower than when using a single socket.

Testing the basic non-optimized implementation of the algorithm

This is not at all what we need, so it's time to explore the program using VTune Amplifier.

Analysis of the basic implementation of the algorithm using VTune Amplifier XE

In order to find out what prevents the application from working normally on multiple processors, we will use the VTune Amplifier XE 2016 Hotspot analysis. We will look for the most loaded sections of the program. In the VTune Amplifier measurements, a reduced workload (384 iterative processes) was used to limit the size of the data being collected. Other trials used full load (1000 iterations).

The figure below shows the VTune report. In particular, we are interested in the indicators in the Top Hotspots group and the Serial Time indicator, which allows us to find out the time taken for sequential execution of the code.

Top Hotspots Report

It can be seen from the report that the application spends a lot of time sequentially executing code, which directly affects the parallel use of system resources. The most loaded part of the program is the memory allocation module from the standard library for working with strings, which does not scale well enough with a large number of cores. This is an important find. The fact is that OpenMP uses one shared memory pool, so a huge number of parallel calls from different threads to the string constructor or to the memory allocation module for objects (using the new operator) make memory a bottleneck. If you look at the CPA Usage indicator below, you can find that the application, although it uses all 96 available cores, does it inefficiently, loading them only in short periods of time.

Using the processor

If we look at what the threads are doing, we will see that the load on them is not balanced.

Unbalanced load

So, the main thread (Master) at the end of each "month" performs calculations, and the remaining threads do nothing useful at this time.

Now, after analyzing the code, we’ll take care of its optimization.

Optimization number 1. Sharing MPI and OpenMP

In order to get rid of the large set of OpenMP streams that is present in the base implementation, we used the standard “master-slave” approach and added another level of parallelism to our application. Namely, now MPI processes that are executed in parallel, each of which, in turn, contains a certain number of OpenMP threads, are responsible for the calculations in separate iterations. Now the loads associated with allocating memory for strings and objects are distributed across MPI processes. Such a hybrid MPI-OpenMP implementation of the ACO algorithm is shown in the flowchart below.

Optimized Implementation # 1

Testing What We've Got Using VTune Amplifier

Analysis of optimized algorithm implementation using VTune Amplifier XE

We are exploring an optimized version of the application using the same methodology as we studied its basic version. The figure below shows the Top Hotspots report, which shows that the program now spends less time on memory allocation operations for strings.

Top Hotspots report

And here are the histograms of processor usage in the base (left) and optimized version of the program.

CPU Usage Histograms

Here's how thread loading now looks. It can be seen that it is balanced much better than before.

Balanced load

In the figure below, you can see that all available 96 cores are almost completely loaded.

CPU usage

Unfortunately, there is still too much time spent waiting in OpenMP streams and when exchanging MPI data, when the MPI process that finds the best solution sends data to the main process to update the results file. We assumed that this is due to the fact that the system is overloaded with MPI communication operations.

MPI uses a distributed memory interface, with each process working with a separate memory pool. As a result, the modification of objects and data structures by one process is not visible to another, but at the same time, data between processes must be transmitted using the MPI Send and Receive mechanisms. The same applies to the transfer to the main process of the best solution found in the current “month”.

The found global solution is a complex C ++ object, which consists of a number of objects of derived classes, smart pointers with data and other objects from the STL template. Since MPI communication operations do not support the exchange of complex C ++ objects by default, using Send and Receive mechanisms requires serialization, during which the objects are converted to byte streams before sending, and then, after receiving, the streams are converted to objects again.

The load created by serialization is constant. It occurs at most once a “month” (or does not occur at all if the main process, rank 0, finds the best solution that will be recognized as global), regardless of the number of running MPI processes. This is very important in order to minimize the MPI communication operations during the transition to program execution on multiple cores.

In the above figure, additional loads are highlighted in yellow (MPI communication operations) and red (standby and overload).

Optimization Results No. 1

The hybrid MPI-OpenMP version of the program showed much better results in terms of load balancing between MPI processes and OpenMP threads. She also demonstrated a much more efficient use of the large number of cores available on systems with Intel Xeon E7-8890 processors. Here is what the test results of the current version of the program look like in comparison with the base one.

Comparison of the results of the base and optimized versions of the program

Here you can see that the program scales much better with the increase in the number of available cores. Performance gains are also seen when Hyper-Threading is enabled.

We have achieved good results, but the optimization work has not yet been completed. We will use the Intel TBB library to further improve the performance of our code.

Optimization number 2. Intel TBB Application

Studying the most loaded sections of code for the hybrid MPI-OpenMP application implementation, we noticed that a significant proportion of the execution time still falls on the standard library for working with strings. We decided to check whether the use of the dynamic memory allocation library from Intel TBB would improve the situation . This library offers several memory allocation templates that are similar to the standard std: allocator class from STL, and also include scalable_allocator and cache_aligned_allocator. These patterns help solve two critical groups of concurrent programming problems.

The first group is the problem of scaling. They arise due to the fact that memory allocation mechanisms are sometimes forced to compete for a single common pool, moreover, due to the initial serial device device, only one thread can allocate memory at a time.

The second group of problems is related to shared access to resources. For example, a situation is possible when two threads try to access different words of the same cache line. Since the smallest unit of information exchange between processor caches is a line, it will be transmitted between processors even when each of them works with different words in this line. False sharing can hurt performance, as moving a cache line can take hundreds of clock cycles.

Features of work with Intel TBB

One of the easiest ways to find out if Intel TBB is good for the application is to replace the standard dynamic memory allocation function with a function from the Intel TBB library libtbbmalloc_proxy.so.2. To do this, just load the library at program startup using the LB_PRELOAD environment variable (without changing the executable file) or associate the executable file with the library.

Настройка связи файла с библиотекой:

-ltbbmalloc_proxy

Установка переменной окружения LD_PRELOAD для загрузки библиотеки

$ export LD_PRELOAD=libtbbmalloc_proxy.so.2Optimization Results No. 2

Solving the most important scaling problem that arises when using standard memory allocation mechanisms, the Intel TBB dynamic memory allocation library provides an additional 6% performance compared to the hybrid MPI-OpenMP version of the application.

Performance Improvement with Intel TBB

Optimization number 3. Finding the Best Combination of MPI Processes and OpenMP Streams

At this stage, we decided to study the impact on the performance of various combinations of MPI processes and OpenMP threads under the same load. The experiment used all 192 available logical cores, that is, 4 processors were involved and Hyper-Threading technology was enabled. During the tests, we found the optimal ratio of MPI processes and OpenMP streams. Namely, the best result was achieved using 64 MPI processes, each of which executed 3 OpenMP streams.

Performance comparison for various combinations of MPI processes and OpenMP streams.

Summary

A study of the basic parallel implementation of the ant algorithm allowed us to identify scaling problems associated with memory allocation mechanisms for strings and object constructors.

The first stage of optimization, thanks to the application of a hybrid approach using MPI and OpenMP, allowed to achieve better use of processor resources, which significantly increased productivity. However, the program still spent too much time allocating memory.

At the second stage, thanks to the library for dynamic memory allocation from Intel TBB, it was possible to increase productivity by another 6%.

During the third stage of performance improvement, it was found out that for our program a combination of 64 MPI processes is best suited, each of which runs 3 OpenMP streams. At the same time, the code works well on all 192 logical cores. Here are the final optimization results.

Optimization results

As a result, after all the improvements, the program worked 5.3 times faster than its basic version. We believe this is a worthy result, which is worth the effort spent on research and code optimization.