How to evaluate the effectiveness of the team

A cool startup at the beginning of its journey is like a Sapsan, the high-speed train. A small team rapidly gains momentum and rushes into the future, carrying a bunch of tasks into production. If the project turns out to be promising, like Skyeng, then in a few years there will be many more teams, and some of them may turn out to be steam locomotives, where you have to keep throwing firewood into the firebox for anything at all to reach the users.

Watch or read Alexey Kataev's talk from Saint TeamLead Conf if you do not know which formal signs show whether you have a cool team, or if you want to measure technical debt in hours rather than in categories like “just a little”, “some”, and “a terrible lot”. If your product manager thinks that a team of three will complete 60 tasks in a month, show them this article. If your manager has been bombarded with development metrics and wants you to act on results like “34% think the team has a problem with planning”, this talk is for you.

About the speaker: Alexey Kataev (deusdeorum) has been working in web development for 15 years. He has worked as a backend, frontend, and full-stack developer and as a team lead. He now works on optimizing development processes at Skyeng. Team leads may know him from his talk on distributed teams.

Now, finally, over to the speaker. Let's start with the context and gradually move on to the main problem.

I joined Skyeng in 2015 as one of five developers; that was every developer in the company.

A little more than three years have passed, and now we have 15 teams with 68 developers.

All developers work remotely; they are scattered around the world.

Let's look at the problems that inevitably arise when a company scales from 5 to 68 developers.

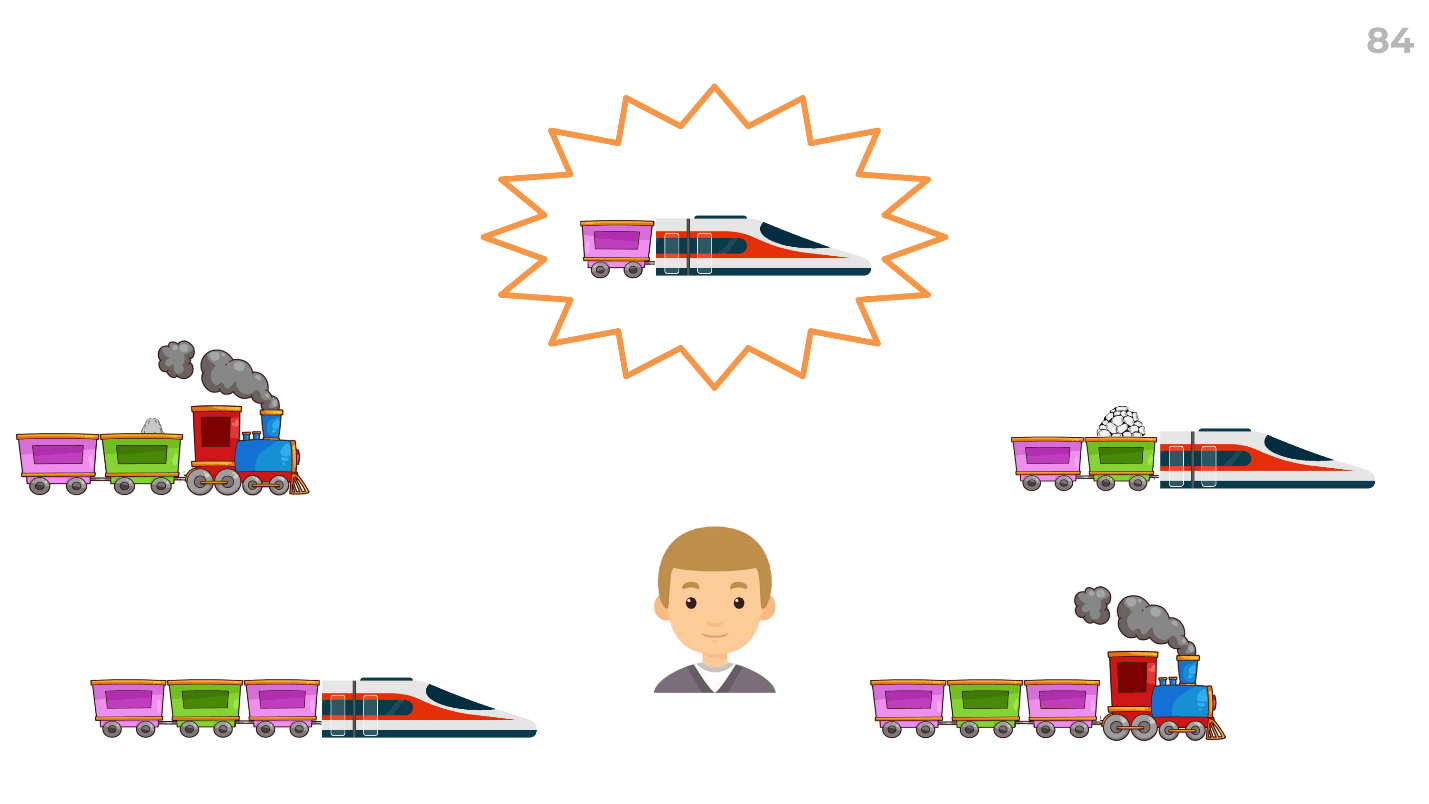

In the picture, Sergey is the head of development.

Once we had one team, and it was a Sapsan that rushed into the future and carried a bunch of tasks to production. And not only tasks, but also a bit of technical debt. Then, at some point, unexpectedly for us, there were many more teams.

The first question Sergey faces is whether all of our teams are Sapsans, or whether some of them are steam locomotives that need firewood thrown into the firebox.

This question is important because the following situation may arise: a product manager or business representative comes to Sergey and says that deadlines are being missed and the team is working poorly. But in fact the problem may lie not only in the team. It may be in the relationship between the team and the business, or in the business itself: it may set tasks poorly or have overly optimistic plans.

We need to understand whether the team is cool.

The second question is the team's resources: how many tasks can we deliver to production? This question matters because the product side always has a lot of plans for the near future. We need to understand whether the team will cope with these plans or whether half of the tasks should be thrown out. Or maybe it is worth hiring a few more people to get everything done.

The third question is how much technical debt we are carrying. This is critical, because if the debt exceeds some limit, our train will eventually be unable to go anywhere at all. We would have to disband the team and start the project from scratch, and we do not want to let that happen.

Finally, how can we make all the teams Sapsans rather than steam locomotives?

1. Determine how cool our teams are.

Of course, the first thing that comes to mind is to measure some metrics! I will tell you about our experience and how we got it wrong over and over again.

First, we tried to track velocity: how many tasks we ship per sprint. But it turned out that half of our teams have no sprints; they use Kanban. Where there are sprints, tasks are estimated in hours in some teams and in story points in others. And there are a couple of teams that just do tasks: they have no Kanban and do not know what Scrum is.

This is inconsistent data. We are trying to compute one number from completely different data. Introducing sprints everywhere so that all teams look identical would be very expensive: to calculate a single metric, we would have to change processes.

We thought it over, tried a few more options, and arrived at a simple metric: plan fulfillment. This is also costly, but only the product plans have to be made uniform and consistent. Some keep them in Jira, some in Google Spreadsheets, some draw charts; bringing these to one format is much cheaper than changing processes in the teams.

Every quarter we look at whether the team meets the business requirements: how many of the planned tasks were completed.
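A minimal sketch of the plan fulfillment metric, assuming the product plans have already been brought to one format: a flat list of planned tasks with a team name and a "done" flag. The `PlannedTask` type, the team names, and the task titles are hypothetical and only illustrate the calculation.

```python
from dataclasses import dataclass


@dataclass
class PlannedTask:
    team: str
    title: str
    done: bool  # True if the task was delivered within the quarter


def plan_fulfillment(tasks: list[PlannedTask]) -> dict[str, float]:
    """Return the share of planned tasks completed per team (0.0 to 1.0)."""
    planned: dict[str, int] = {}
    completed: dict[str, int] = {}
    for task in tasks:
        planned[task.team] = planned.get(task.team, 0) + 1
        completed[task.team] = completed.get(task.team, 0) + int(task.done)
    return {team: completed[team] / planned[team] for team in planned}


# Hypothetical quarterly plan for two teams.
quarter_plan = [
    PlannedTask("platform", "video call reconnect", True),
    PlannedTask("platform", "exercise sync rewrite", False),
    PlannedTask("billing", "new tariff", True),
    PlannedTask("billing", "invoice export", True),
    PlannedTask("billing", "refund flow", True),
    PlannedTask("billing", "promo codes", False),
]
fulfillment = plan_fulfillment(quarter_plan)
```

The point of the design is that only the input format is shared between teams; how each team plans and tracks its work stays untouched.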

Counting the number of incidents did not work either.

We log every failed release or bug that caused damage to the company, and hold a post-mortem. Sergey came to me, as the team lead, and said: “Your team causes the most outages. Why is that?” We thought about it, looked into it, and it turned out that our team was the most conscientious and the only one that logged every outage. The rest followed the principle “what is picked up quickly does not count as dropped”.

The problem is again inconsistent data and an insufficient sample. Some of our teams only have a landing page, and it never goes down. We cannot say such a team is better just because its project is more stable.

The second problem, and my favorite topic, is cognitive bias. Cognitive ease is when we reach a conclusion that seems simple and immediately start to believe it. We do not switch on critical thinking: lots of outages means a bad team.

We ended up with exactly the same metric, the number of incidents, but changed how it is collected. We set up a process in which all outages are logged. At the end of each month, we ask people who have no interest in hiding incidents, namely QA and product managers, whether this is the complete list of problems caused by the team. They remember when we broke something and add to the list.

Surveys have problems too. They seem like a universal tool: we ask everyone (product managers, team leads, customers) what problems our teams have, build charts from the answers, and learn everything. But there are a lot of problems.

First, if you ask closed questions, no conclusions can be drawn from the data. For example, we ask: “Is there a problem with planning in the team?” 34% answer that there is, and what do we do with that? If you ask open questions, such as “What infrastructure problems does the team have?”, you get empty answers, because everyone is too lazy to write anything. No conclusions can be drawn from that data either.

We developed the idea: first we run surveys as a screening, and then conduct interviews to understand what the problem is. We will talk about interviews a little later.

I have given three examples; in fact, we tried dozens of metrics.

Now we only use:

- Plan fulfillment.

- The number of incidents.

- Surveys + interviews.

I would be lying if I said that we measure even these simple things in all teams, because the most expensive part is the rollout. Especially when there are 15 different teams and a product manager says: “We don't need this at all! We need to ship our task, we have no time for that now!” It is very hard to measure one number across all teams.

Interview

A brief digression about interviews with developers. I read several articles and took a product management course. There is a lot there about user research and customer development. I took a few practices from there, and they worked very well with developers.

If your goal is to find out what problems the team has, first write down the target questions you want answered. That is, rather than throwing 30 random questions at people, choose 2-3 questions you are looking for an answer to. For example: does the team have infrastructure problems? How is communication between the business and the team organized?

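The questions you actually ask should be:

- Open. The answer “Yes!” to the question “Is there a problem?” will tell you nothing.

- Neutral. Asking directly about a problem is a bad question. It is better to say: “Tell me about the planning process in your team.” The person describes the process, and then you start asking deeper questions.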

Another very good approach is prioritization. You have different aspects of the team's life. You ask the person which of them, in their opinion, are the best and should stay as they are, and which could be improved.

There is another approach that I took from the chapter on interviews in the book “Who. Solve Your Problem Number One”: ask questions like “If I asked the product manager, what problems do you think he would name?” This makes the developer look at the whole picture rather than only from their own position, and see all the problems.

2. Evaluate resources

Now let's talk about resource assessment.

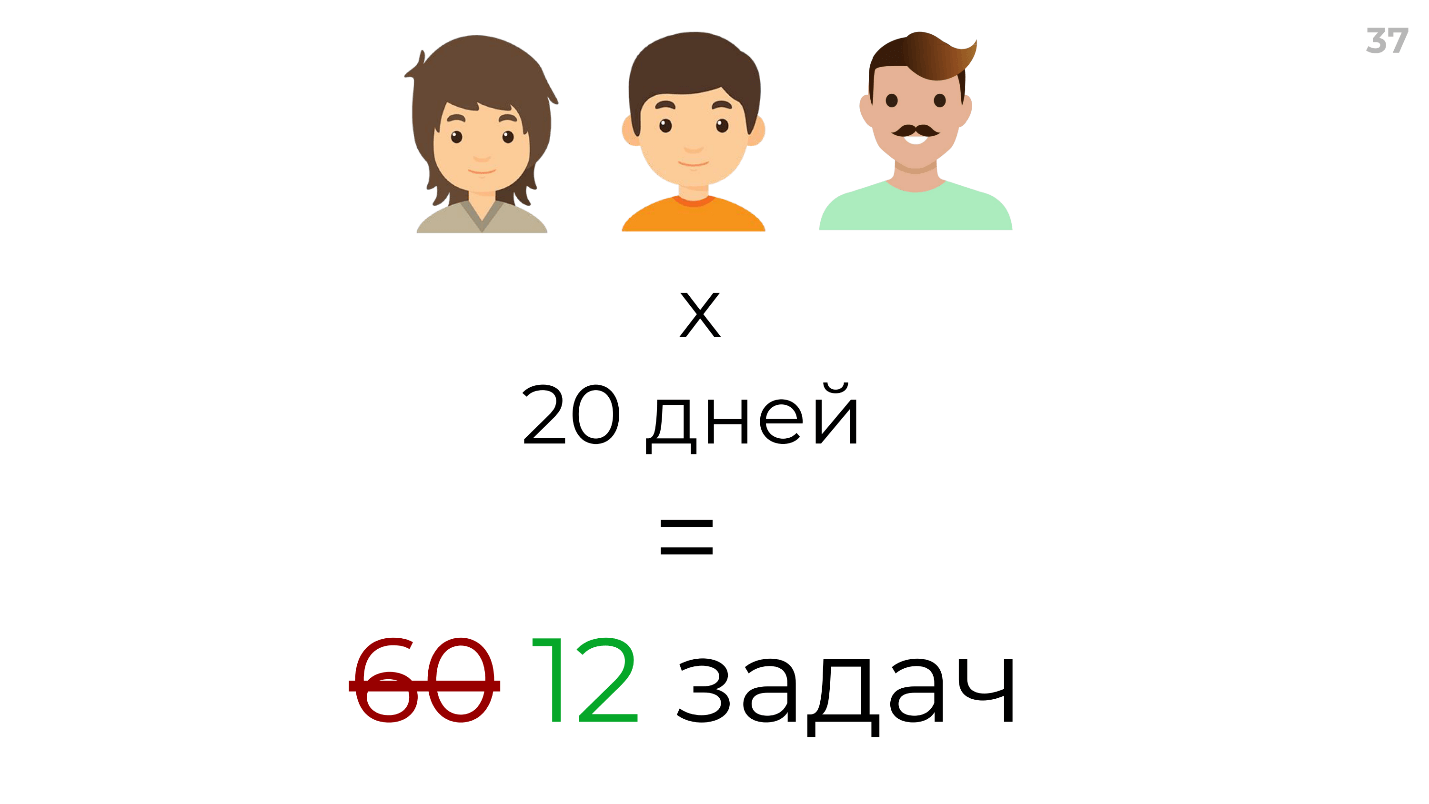

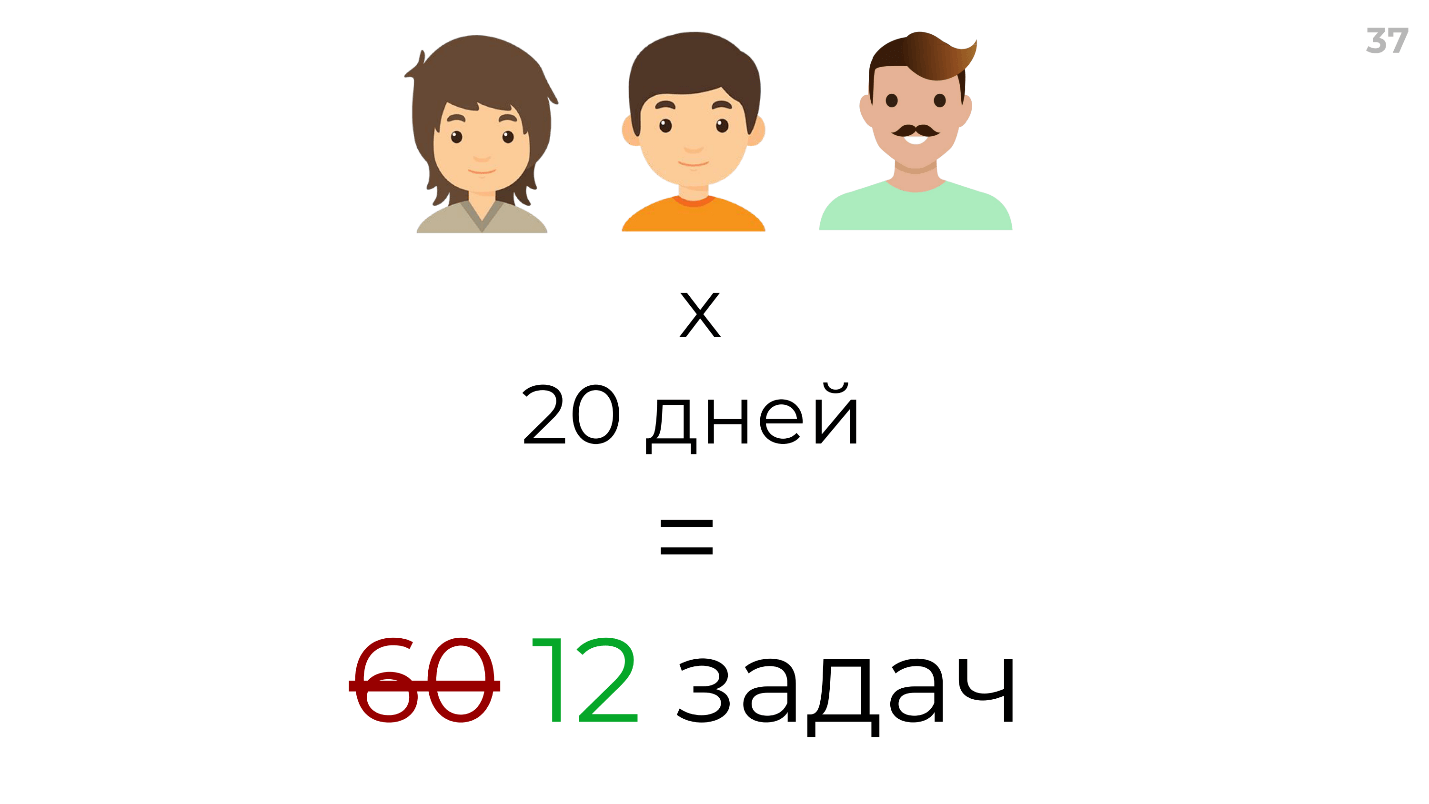

Let's start with the product manager's approach: how he usually estimates his team's resources. There are 3 people and 20 working days in a month; multiply people by days and you get 60 tasks.

Of course, I am exaggerating; a sensible product manager will read this as 60 days of development. But that is also wrong: no one ever ships tasks estimated at 60 days in 60 days. Scrum practice even recommends computing a focus factor, that is, multiplying by some magic number, for example 0.2. In practice, from iteration to iteration we will ship 12 tasks, then 17, then 10. I think this is a very rough estimate.

I have my own approach to estimating resources. First, we compute the team's total resource in working hours: multiply hours by developers and subtract vacations and time off. Suppose we get 750. But not all development time is spent directly on development.

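Here is real data from one of the teams:

- 36.9% of the time is spent on communication: meetings, discussions, technical reviews, code reviews, etc.

- 63.1% is spent directly on tasks.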

Can the product manager count on these 63.1%? No, because product tasks are only a part of them. There is also a quota (about 20%) for bug fixes and refactoring. The team lead allocates this time, and the product manager cannot count on it.

Even among product tasks, not all are the ones this product manager planned. There are product tasks from other teams that ask for urgent help because of a release. We estimate that about 8–10% of tasks come from other teams.

And now we are at 287 hours! Everything would be great if we always met our estimates. But for this team the average overrun factor was 1.51; that is, a task took on average one and a half times longer than estimated.

In total, out of 750 hours, 189 are left for the main tasks. Of course, this is an approximation, but the formula has variables that can be changed. For example, if you devote a month to refactoring, you can substitute that value and see what you can count on.
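The formula can be sketched as a small function. This is only an illustration under stated assumptions: the function and parameter names are hypothetical, and the shares are applied one after another (multiplicatively), which the talk does not spell out. Because the intermediate figures in the talk (287 and 189 hours) come from the team's actual data, this sketch is not expected to reproduce them exactly.

```python
def planned_capacity_hours(
    total_hours: float,
    communication_share: float,
    quota_share: float,
    other_teams_share: float,
    overrun_factor: float,
) -> float:
    """Estimate the hours of planned product work a team can deliver.

    Assumption: each share is taken from what is left after the previous
    step; the exact order used in the talk is not known.
    """
    # Time left after meetings, discussions, reviews, and so on.
    task_hours = total_hours * (1 - communication_share)
    # Time left after the team lead's quota for bug fixes and refactoring.
    product_hours = task_hours * (1 - quota_share)
    # Time left after urgent requests from other teams.
    own_product_hours = product_hours * (1 - other_teams_share)
    # Tasks take longer than estimated, so divide by the overrun factor.
    return own_product_hours / overrun_factor


# Shares taken from the text; 0.09 is the midpoint of "8-10%".
capacity = planned_capacity_hours(750, 0.369, 0.20, 0.09, 1.51)

# "What if we devote the whole month to refactoring?": set the quota to 100%.
refactoring_month = planned_capacity_hours(750, 0.369, 1.0, 0.09, 1.51)
```

Keeping every share as a parameter is what makes the estimate useful in planning conversations: changing the refactoring quota or the overrun factor immediately shows how many hours of planned work remain.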

I spent a whole day on this: I took the tasks, computed the average time in Excel, analyzed it, spent a lot of time, and decided that I would never do that again. I need a fast way to do this that does not involve manual work every time.

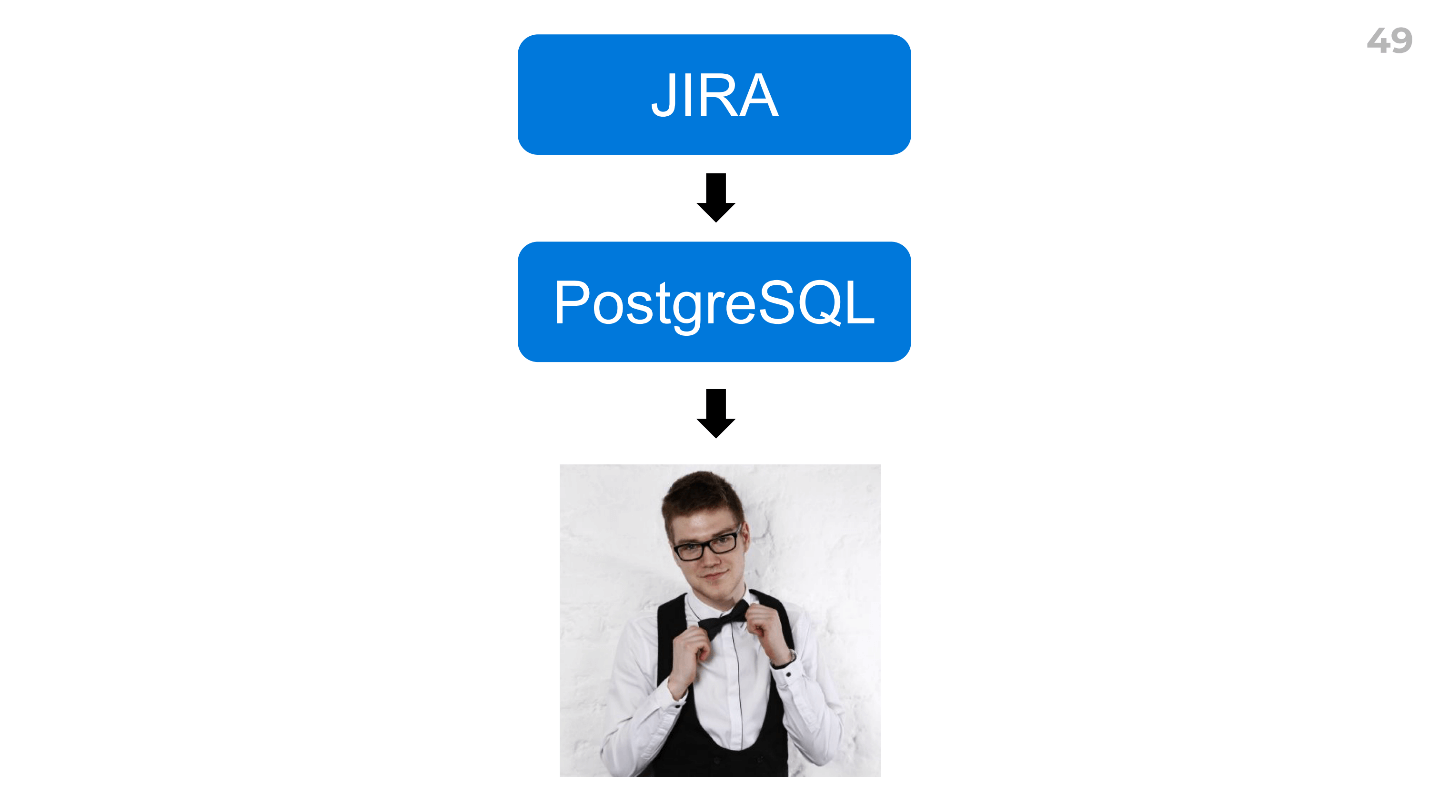

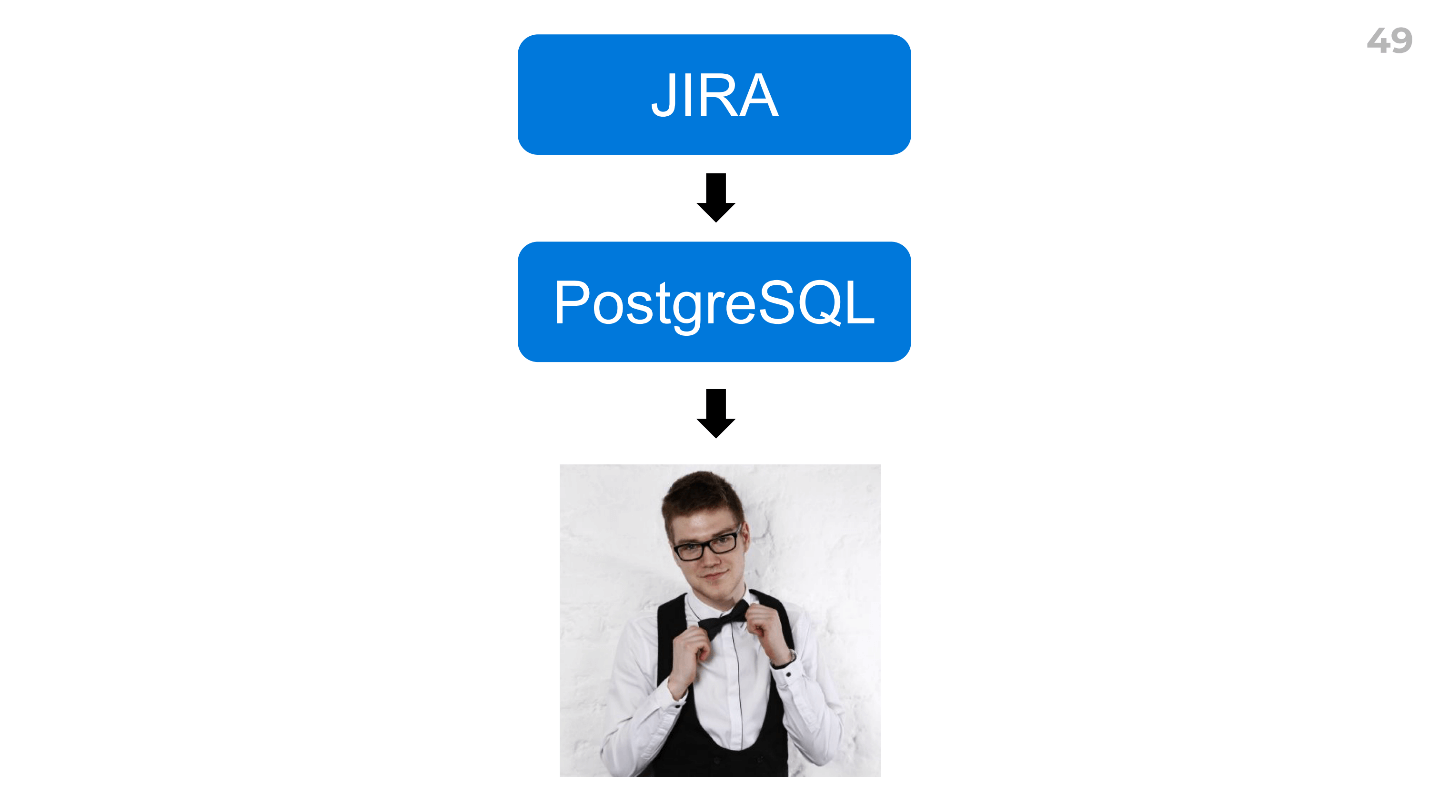

I was advised to use the eazyBI plugin for Jira. Either it is a super complex tool or I was not capable enough, but I gave up halfway.

I found a way that lets you build any report quickly.

You export the data from your task tracker into any database you know (PostgreSQL in our case) and then ask an analyst to calculate everything. Ours is Dima, and he calculated everything in 2 hours.

On top of that, he produced a lot of additional data: overruns by developer, overruns by task type, and various coefficients. He also shared his insights and new hypotheses. It is great and it scales: you can apply it to all teams at once.
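As a rough sketch of this approach, the example below loads a few tasks into a database and computes the overrun factor overall, by task type, and by developer. It uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so that it runs anywhere. The table layout, column names, and sample rows are hypothetical, and the overrun is computed as total time spent divided by total estimate, which is one possible definition and not necessarily the one used in the talk.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tasks (task_key TEXT, assignee TEXT, task_type TEXT,"
    " estimate_hours REAL, spent_hours REAL)"
)
# Hypothetical export from the task tracker.
rows = [
    ("PLAT-1", "anna", "feature", 4.0, 6.0),
    ("PLAT-2", "anna", "bug", 2.0, 2.0),
    ("PLAT-3", "boris", "feature", 8.0, 14.0),
    ("PLAT-4", "boris", "bug", 1.0, 1.0),
]
conn.executemany("INSERT INTO tasks VALUES (?, ?, ?, ?, ?)", rows)


def overrun_by(column: str) -> dict[str, float]:
    """Overrun factor (spent / estimated) grouped by a whitelisted column."""
    if column not in {"assignee", "task_type"}:
        raise ValueError(f"unsupported grouping column: {column}")
    query = (
        f"SELECT {column}, SUM(spent_hours) / SUM(estimate_hours) "
        f"FROM tasks GROUP BY {column}"
    )
    return dict(conn.execute(query).fetchall())


overall = conn.execute(
    "SELECT SUM(spent_hours) / SUM(estimate_hours) FROM tasks"
).fetchone()[0]
by_type = overrun_by("task_type")
by_dev = overrun_by("assignee")
```

Once the data sits in a database, each new question (per developer, per task type, per team) is one more `GROUP BY` rather than another day in a spreadsheet.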

How to increase the resource

Now about how to speed up the team: how to increase its resource without adding developers.

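First of all, to speed something up you need to measure it. We usually look at two metrics:

- The total initial estimate of the tasks shipped in an iteration, in hours. For example, in a week we shipped tasks estimated at 100 hours.

- Sometimes also the average delivery time: the time from the moment a task enters development until it is in production. This is more of a business metric, interesting to the product manager rather than the developer.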

I never tire of talking about Arseny, a bot I wrote over a weekend. Every week it posts a digest of how many tasks we shipped.
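A weekly digest of this kind could be computed roughly as follows. This is not the bot's actual code: the `ShippedTask` type, its fields, and the message format are assumptions, and the sketch covers only the calculation, not posting to a chat. It reports the total initial estimate of the tasks shipped during the week and their average delivery time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ShippedTask:
    estimate_hours: float
    started_at: datetime   # the task entered development
    released_at: datetime  # the task reached production


def weekly_digest(tasks: list[ShippedTask], week_start: datetime) -> str:
    """Summarize the tasks released in the 7 days starting at week_start."""
    week_end = week_start + timedelta(days=7)
    shipped = [t for t in tasks if week_start <= t.released_at < week_end]
    if not shipped:
        return "No tasks shipped this week."
    total_estimate = sum(t.estimate_hours for t in shipped)
    lead_days = [
        (t.released_at - t.started_at).total_seconds() / 86400 for t in shipped
    ]
    average_lead = sum(lead_days) / len(lead_days)
    return (
        f"Shipped {len(shipped)} tasks estimated at {total_estimate:.0f} h; "
        f"average delivery time {average_lead:.1f} days."
    )


# Hypothetical data: two tasks released in the week of 4 March 2019, one earlier.
tasks = [
    ShippedTask(8, datetime(2019, 2, 28), datetime(2019, 3, 5)),
    ShippedTask(4, datetime(2019, 3, 4), datetime(2019, 3, 6)),
    ShippedTask(12, datetime(2019, 2, 20), datetime(2019, 3, 1)),
]
digest = weekly_digest(tasks, datetime(2019, 3, 4))
```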

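There are two interesting things here:

- What I call the observer effect: by measuring an indicator, we already change it. As soon as we started using this bot, the number of tasks we complete per iteration went up.

- The metric should be motivating. I started by showing how much of the sprint we fail to finish. It turned out we were falling short by 10%, 20%. That does not motivate at all, and there will be no observer effect.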

Speed is a lagging metric; we cannot influence it directly. It shows something, but there are other metrics through which we can influence speed. In my opinion, there are two main ones:

1. Accuracy of task estimates.

Here our energetic analyst Dima helped us again: he calculated the actual time spent on a task as a function of the initial estimate.

This is real data. The chart shows the actual time to complete a task at the top and our estimate at the bottom.

Joel Spolsky claims that 16 hours is the limit for a task estimate. For us it is clear that beyond 12 hours an estimate makes no sense; the variance is too large. We actually introduced a soft limit and try not to estimate any task at more than 12 hours. Beyond that, we either decompose the task or do additional research.
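One way to look at estimate accuracy is to group tasks by their initial estimate and compare the spread of the actual time in each group. The sketch below is an assumption about how such an analysis might be done, not the analyst's actual calculation; the function names and sample numbers are hypothetical, and only the 12-hour soft limit comes from the text.

```python
from collections import defaultdict
from statistics import mean, pstdev

SOFT_LIMIT_HOURS = 12  # soft limit on a single task estimate, from the text


def spread_by_estimate(
    tasks: list[tuple[float, float]],
) -> dict[float, tuple[float, float]]:
    """Map each estimate to (mean actual hours, std deviation of actual hours)."""
    actual_by_estimate: dict[float, list[float]] = defaultdict(list)
    for estimate, actual in tasks:
        actual_by_estimate[estimate].append(actual)
    return {
        estimate: (mean(actuals), pstdev(actuals))
        for estimate, actuals in sorted(actual_by_estimate.items())
    }


def needs_decomposition(estimate_hours: float) -> bool:
    """True if the estimate exceeds the soft limit and should be split or researched."""
    return estimate_hours > SOFT_LIMIT_HOURS


# Hypothetical (estimate, actual) pairs in hours.
history = [(2.0, 2.0), (2.0, 4.0), (8.0, 8.0), (8.0, 16.0), (16.0, 10.0), (16.0, 50.0)]
spread = spread_by_estimate(history)
```

In this made-up sample the standard deviation grows from 1 hour for 2-hour estimates to 20 hours for 16-hour estimates, which is the kind of pattern that would justify a soft limit.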

2. Time waste, that is, when developers spend time on something they could have avoided.

In one of our teams, communication took up to 50% of the time. We started analyzing to find the reason. It turned out that the problem was in the processes: all customers wrote directly to developers with their questions. We changed the processes a little, reduced this time, and improved the speed figures.

In your case it will not necessarily be communication; it may be, for example, time spent on deployment or infrastructure. But the prerequisite is that all your developers log their time. If no one logs time in Jira, introducing this will obviously be very expensive.
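If time is logged with some kind of category, the share of each category is a short calculation. The sketch below assumes a hypothetical export of work logs as (category, hours) pairs; the category names and numbers are made up for illustration.

```python
from collections import Counter


def time_shares(worklogs: list[tuple[str, float]]) -> dict[str, float]:
    """Return the share of total logged time per category (0.0 to 1.0)."""
    totals: Counter = Counter()
    for category, hours in worklogs:
        totals[category] += hours
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: hours / grand_total for category, hours in totals.items()}


# Hypothetical work logs for one team over one iteration.
worklogs = [
    ("development", 20.0),
    ("communication", 35.0),
    ("development", 10.0),
    ("deployment", 20.0),
    ("communication", 15.0),
]
shares = time_shares(worklogs)
```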

3. Understand the technical debt

When I say “technical debt”, I picture something blurry: it is not clear how it can be measured.

If I tell you that one of our teams has exactly 648 hours of technical debt, you won't believe me. But I will describe the algorithm we use to measure it.

Once a quarter we get the whole team together (we call it a refactoring meetup) and write cards: hacks (ours and other people's) that people have seen in the code, dubious decisions, and other bad things, for example old library versions, anything at all. Once we have generated a pile of cards, we write a resolution for each: what to do about it. Maybe nothing, because it is not technical debt at all; or the library version needs updating, or something needs refactoring, and so on. Then we create tickets in Jira where we write: “Here is the problem, and here is its solution.”

And now we have 150 tickets in Jira. What do we do with them?

After that we run a survey that takes each developer 10 minutes. Each developer gives a rating from 1 to 5, where 1 is “We will do it someday, in the next life” and 5 is “We need to do this right now, it slows us down a lot”. The rating is entered right in the Jira ticket: we created a custom field called “Priority of refactoring”, and sorting by it gives us a prioritized list of our technical debt. By estimating the first 10–20 tickets and then multiplying the tail by the average estimate (we are too lazy to estimate all 150 tickets), we get the technical debt in hours.
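The calculation can be sketched in a few lines. The function names and sample data are hypothetical, and combining several developers' ratings by taking their mean is an assumption; the text only says that each developer rates each ticket from 1 to 5.

```python
from statistics import mean


def prioritize(ratings: dict[str, list[int]]) -> list[str]:
    """Order ticket keys by the mean developer rating, highest priority first."""
    return sorted(ratings, key=lambda ticket: mean(ratings[ticket]), reverse=True)


def technical_debt_hours(estimated_hours: list[float], total_tickets: int) -> float:
    """Extrapolate total debt from the hour estimates of the top-priority tickets.

    The estimated tickets are summed as they are; every remaining ticket
    (the tail) is assumed to cost the average of the estimated ones.
    """
    if not estimated_hours or total_tickets < len(estimated_hours):
        raise ValueError("need at least one estimate and a consistent ticket count")
    tail_size = total_tickets - len(estimated_hours)
    return sum(estimated_hours) + tail_size * mean(estimated_hours)


# Hypothetical ratings from three developers for three tickets.
ratings = {"TD-1": [5, 4, 5], "TD-2": [1, 2, 1], "TD-3": [3, 4, 3]}
order = prioritize(ratings)

# Hypothetical hour estimates for the top tickets out of 150 in total.
debt = technical_debt_hours([8.0, 4.0, 6.0], total_tickets=150)
```

The resulting number is an approximation, since the top-priority tickets may not be representative of the tail, but it is computed the same way every quarter, which is what makes the quarter-to-quarter comparison meaningful.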

Why do we need this? We run this assessment once a quarter. If we had 700 hours in the first quarter and then, say, 800, everything is fine. But if it becomes 1400, there is a threat and we need to increase the refactoring quota: agree with the business that we will now spend a whole month, or 40% of the total time, on refactoring.

So, we have dealt with technical debt; it is under control.

But let's talk about the causes of technical debt. Most often it is bad code review.

Bad code review

One of the main causes of bad code review is the bus factor. We learned to formalize the bus factor. We take a team and write out the list of areas relevant to it; for the platform team, for example, these are video calls, exercise synchronization, and tools. Each developer gives each area a rating from 1 to 3.

Interestingly, for some areas there was not a single expert on the team: no one knew how they worked.

How did we solve the bus factor problem?

Next, we calculated the median value for each area and held a series of talks at regular team meetings, in which experts told the whole team about those areas. Of course, this method does not scale very well, but, first, there are video recordings of these talks, and second, it is very fast. The whole team now knows more or less how video calls work. For some areas we have not written documentation yet, but we have created a task to write it. We will write it someday.
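A minimal sketch of this bus factor survey, assuming that 3 is the top of the 1 to 3 scale and means "expert" (the meaning of each rating is not given in the text). The developer names and ratings are hypothetical; the area names are the ones mentioned for the platform team.

```python
from statistics import median

EXPERT = 3  # assumption: the highest rating on the 1-3 scale means "expert"


def bus_factor_report(ratings: dict[str, dict[str, int]]) -> dict[str, dict[str, float]]:
    """For each area, return the median rating and the number of experts.

    ratings maps an area name to {developer: rating from 1 to 3}.
    """
    report = {}
    for area, by_developer in ratings.items():
        scores = list(by_developer.values())
        report[area] = {
            "median": median(scores),
            "experts": sum(1 for score in scores if score == EXPERT),
        }
    return report


# Hypothetical survey results for the platform team.
survey = {
    "video calls": {"anna": 3, "boris": 1, "vera": 1},
    "exercise synchronization": {"anna": 2, "boris": 2, "vera": 1},
    "tools": {"anna": 3, "boris": 3, "vera": 2},
}
report = bus_factor_report(survey)
areas_without_experts = [area for area, row in report.items() if row["experts"] == 0]
```

Areas with no experts or a low median are natural candidates for the internal talks and for the documentation backlog.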

Now about code review when there is not enough time. We are currently running an experiment in which we review the code reviews. At the end of each month we select random pull requests and ask developers to review them again and find as many problems as possible. When you know that someone will recheck your work, you approach the review more responsibly. We also added checklists (did you look at this, this, and this?); in general, we are trying to improve the quality of code review.
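Picking the pull requests for a second review can be as simple as a random sample. The sketch below is an illustration only: the function name, the pull request identifiers, and the optional seed (useful for making the monthly draw reproducible) are assumptions.

```python
import random
from typing import Optional


def pick_for_second_review(
    pull_requests: list[str], count: int, seed: Optional[int] = None
) -> list[str]:
    """Choose up to `count` distinct pull requests at random."""
    rng = random.Random(seed)
    return rng.sample(pull_requests, min(count, len(pull_requests)))


# Hypothetical list of pull requests merged during the month.
merged_this_month = [f"PR-{number}" for number in range(101, 121)]
selected = pick_for_second_review(merged_this_month, count=3, seed=2019)
```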

That is all about technical debt. One last question remains: how to make all the teams Sapsans.

4. Turn all teams into Sapsans

The easiest time to do this is at the start. When a new team is formed, especially when a new team lead arrives and recruits people, we do the same kind of cross-review for all processes. That is, we bring in an expert team lead who checks everything: planning, the first technical decisions, the technology stack, all the processes. This sets the right direction for a team that is just starting out. It is very expensive in terms of time, but it pays off.

There is a prerequisite here as well: using Google Suite, we record all meetings on video, including all planning sessions and all retrospectives, so they can always be rewatched.

The second, cheap, way is of course checklists.

The above is an example of a checklist, but in fact it is much longer. For each team we mark in a huge table whether the team has autotests, Continuous Integration, and so on.

But checklists have a problem: they become outdated the day after you fill them in. Administrative assistants help us with this; these are people to whom you can assign any routine task. We assign the task, and they update what they can themselves and, where they cannot, ask the team lead to update it. This keeps the checklists up to date.

Let's sum up

We talked about:

Some materials did not make it into the talk; Alexey is willing to share them on request.

Message Alexey on Telegram (@ax8080) or Facebook to get these lists and learn more about the effectiveness of teams.

View or read Alexey Kataev's report on Saint TeamLead Confif you do not know for what formal signs to determine if you have a cool team. If you want to be able to measure technical debt in hours, and not to operate with the categories “just a little bit”, “some”, “terribly much”. If your product manager thinks that a team of three people per month will do 60 tasks - show him this article. If your supervisor has been bombarded with development metrics and invites you to take action based on results like: “34% think the team has a problem with planning,” this report is for you.

About speaker: Alexey Kataev ( deusdeorum ) has been working in web development for 15 years. I managed to work backend, frontend, fullstack-developer and teamlide. Now he is engaged in the optimization of development processes in Skyeng . May be familiar to the team leaders on the performance of the distributed team.

Now finally we pass the word to the speaker. Let's start with the context and gradually move on to the main problem.

I joined Skyeng in 2015 and was one of five developers — all of them were developers at the company.

A little more than three years have passed, and now we have 15 teams - these are 68 developers .

All developers work remotely , they are scattered around the world.

Let's look at the problems that inevitably arise when the company is scaled from 5 to 68 employees.

In the picture Sergey is the head of development.

Once we had one team, and it was the Sapsan, who rushed into the future and carried with him a bunch of tasks in production. But not only tasks, but also a bit more technical debt. Then, at some point, unexpectedly for us, the teams became much more.

The first question that Sergey faces is whether all of our teams are Sapsans , or among us there are steam locomotives where you need to throw firewood in the furnace.

This question is important because such a situation may arise: a product manager or business representative will come to Sergey and say that we don’t have time, the team is not working well. But in fact, the problem may not be only in the team. The problem may be in the relationship between the team and the business or in the business itself - it can set tasks poorly or have too optimistic plans.

Need to understand - whether the team is cool .

The second question is the resources of the team : how many tasks can we take to the prod. This question is important because the product always has a lot of plans for the near future. It is important to understand whether the team will cope with these plans, or if you need to throw out half of the tasks. Or maybe it is worth hiring a few more people to accomplish all the tasks.

The third question - how much technical debt are we taking with us? This is a critical question, because if its quantity exceeds the limit value, then in the end our train will not be able to go anywhere at all. We'll have to dismiss the team, start the project from the beginning, but we don’t want to allow it.

Finally, how can we make all the teams Sapsans , not trains?

1. Determine how cool our teams are.

Of course, the first thing that comes to mind is to measure some metrics! Now I will tell you about our experience and how we were wrong over and over again.

First of all, we tried to follow the velocity - how many tasks we roll out for the sprint. But we found out that half of our teams have no sprints, they have Kanban. And where there are sprints, tasks are evaluated in hours or in story points. There are still a couple of teams that just do the tasks - they don't have Kanban, they don't know what Scrum is.

This is inconsistency data. We are trying to count one number on completely different data. At the same time, to make sprints everywhere, so that all teams are identical, it will be very expensive. In order to calculate one metric, one would have to change processes.

We thought, tried a few more options and came up with a simple metric - fulfillment of plans . This is also expensive, but it is necessary to make identical, consistent only product plans. Someone leads them to Jira, someone to Google Spreadsheets, someone builds charts - to lead to one format is much cheaper than changing processes in teams.

Every quarter we look at whether the team fulfills the business requirements, how many planned tasks are fulfilled.

Count the number of incidents also failed.

We log every unsuccessful rollout or bug that caused damage to the company, and carry out post-mortem. As a team leader, Sergei came to me and said: “Your team allows the most falls. Why so? ”We thought, looked and it turned out that our team is the most responsible and the only one who logs all the falls. The rest act on the principle of quickly raised, not considered to be fallen.

The problem is again the inconsistency of data and insufficient sampling. We have teams that simply have a landing page - it never falls at all. We can not say that this team is better, because it has a more stable project.

The second is my favorite topic is cognitive distortion.. Cognitive ease is when we draw some conclusion that seems simple to us, and we immediately begin to believe in it. We do not include critical thinking: once many falls, it means a bad team.

We came to the exact same metric for the number of incidents, but only revised its implementation. We have done a process in which all drops are logged . At the end of each month, we ask people who are not interested in hiding incidents - these are QA and products, is this a complete list of problems that have occurred through the fault of the team. They remember when we dropped something, and supplement this list.

There are also problems with surveys. It would seem that super universal means - we will interrogate everyone (products, team leads, customers) what problems we have in our teams. According to their answers, we will build graphics, and we will learn everything. But a lot of problems.

First, if you ask closed questions, then no conclusions can be drawn from this data. For example, we ask: “Is there a problem with planning in the team?” And 34% answer what is - and what to do about it? If you do open questions: “What are the problems in the team with the infrastructure?” - then you will get empty answers, because everyone is too lazy to write anything. From this data it is impossible to draw any conclusions .

We developed this idea - first we conduct surveys as a screening, and then interviews in order to understand what the problem is. We will talk about the interview a little later.

I gave three examples, in fact, we tried dozens of metrics .

Now we only use:

- Execution plans.

- The number of incidents.

- Polls + interviews.

I take liking if I say that we even measure these simple things in all teams, because the most expensive thing is implementation . Especially when there are 15 different teams, when the product says: “Yes, we don’t need it at all! We would roll out our task, now is not up to that! ” It is very difficult to measure one number in all teams.

Interview

We make a brief digression about interviews with developers . I read several articles, passed the grocery course. There is a lot about user research and customer development. I took a few practices from there, and they very well communicated with the developers.

If your goal is to find out what problems the team has, then first of all you should write targeted questions that you will be looking for the answer to. That is, not just throwing 30 questions of some kind, you choose 2-3 questions that you are looking for an answer to. For example: does the team have infrastructure problems; how communication is established between the business and the team.

In this case, questions should be:

- Open . The answer to the question: “Is there a problem?” - “Yes!” - will not tell you anything.

- Neutral . A question about a problem is a bad question. It is better to ask: "Tell me about the planning process in your team." The person talks about the process, and you are already starting to ask him deeper questions.

Another very good approach is prioritization . You have different aspects of the team’s life. You ask the employee, which, in his opinion, the coolest and should remain the same, and which, perhaps, should be improved.

There is another approach that I took from the interview chapter in the book “Who. Solve your problem number one ”- ask questions like this:“ If I asked the product, what do you think he would call the problems? ”This forces the developer to look at the whole picture, and not from his position, to see all the problems.

2. Evaluate resources

Now let's talk about resource assessment .

Let's start with the product approach - how he usually evaluates the resources of his team. There are 3 people, 20 working days in a month - we multiply people by days, we get 60 tasks.

Of course, I'm exaggerating, a normal product will multiply this as 60 days of development. But this is also wrong, no one will ever roll out tasks rated for 60 days in 60 days. Even in Scrum, it is advised to count the focus factor and multiply by a certain magic number, for example, 0.2. In fact, from iteration to iteration, we will roll out the 12, then 17, then 10 tasks. I think this is a very rough estimate.

I have my own approach to resource assessment. To begin with, we consider the team’s shared resource in working hours. We multiply the clock by the developers, we take off vacations and time off. Suppose it turned out 750.But not all development is development directly.

The above is real data on the example of one of the commands:

- 36.9% of the time is spent on communication - these are meetings, discussions, technical reviews, code reviews, etc.

- 63.1% - to solve problems directly.

Can the product count on these 63.1%? No, it cannot, because grocery tasks are only a part. There is also a quota (about 20%) for bug fixes and refactoring . This is what timlid distributes, and the product no longer counts on this time.

Of the product tasks, too, not all tasks are those that the product has planned. There are grocery tasks of other teams that ask for urgent help, because the release. We estimate about 8–10% of the tasks that come from other teams .

And here we have 287 hours! Everything would be great if we always fit in our assessments. But in this team, the average overspent was calculated - 1.51, that is, on average, the task took one and a half times more time than it was estimated.

A total of 750 left 189 hours to complete the main tasks . Of course, this is an approximation, but this formula has variables that can be changed. For example, if you devote a month to refactoring, you can substitute this value and find out what you can count on.

I devoted a whole day to it - I took puzzles, counted the average time in Excel, analyzed it - spent a lot of time and decided that I would never do that again. I need a quick approach for this, so as not to do it every time with my hands.

I was advised to plug in for Jira and EazyBI . This is a super complex tool, or I didn’t have enough abilities, but I gave up in the middle.

I found a way that allows you to quickly build any reports.

You upload data from your task tracker to any database you know (PostgreSQL for us), and then ask the analyst to calculate everything. We have this Dima, and he considered everything in 2 hours.

At the same time, he gave a bunch of additional data - overtime by developers, overtime by task types, some coefficients, told us about his insights and new hypotheses - cool and scalable: you can use it at once in all teams.

How to increase the resource

Now about how to speed up the team - to increase its resource without increasing the number of developers.

First of all, to speed up something, you need to measure it first. Usually we consider two metrics:

- The initial total assessment of tasks for the iteration in hours. For example, we rolled out tasks for 100 hours in a week.

- And sometimes the average rolling time is the time from the moment when the task came into development, before it turns out to be in production. This is a more business metric and is interesting for the product, not for the developer.

I’m not tired of telling Arseny’s bot, which I wrote over the weekend. Every week he posts a digest - how many tasks we rolled out.

There are two interesting things here:

- What I call the observer effect — by evaluating some indicator, we are already changing it. As soon as we started using this bot, we increased the number of tasks that we do during the iteration.

- The metric should be motivating . I began by showing how we do not have time to do a sprint. It turned out that we do not have time for 10%, 20%. This does not motivate at all; there will be no observer effect.

Speed is a lagging metric, we cannot directly influence it. It shows something, but there are metrics, by affecting which, we can influence the speed. In my opinion, there are two main ones:

1. Accuracy of the assessment of tasks.

Here, our charged analyst Dima helped us again, who calculated the actual time of the task, depending on the initial assessment.

This is real data. Above is a chart of the actual time to complete the task, and below is our estimate.

Joel Spolsky claims that 16 hours is the limit for a task estimate. For us it is clear that beyond 12 hours an estimate makes no sense: the dispersion is too large. We actually introduced a soft limit and try not to estimate any task at more than 12 hours. Anything larger needs either decomposition or additional research.
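
To make the idea of dispersion concrete, here is a small Python sketch that groups actual time by initial estimate and reports the mean and standard deviation per group. The `history` pairs are made-up numbers, not the data from the talk, and the function names are hypothetical; only the 12-hour soft limit comes from the text.

```python
from collections import defaultdict
from statistics import mean, pstdev

SOFT_LIMIT_HOURS = 12  # soft limit mentioned in the text

# Made-up (estimate_h, actual_h) pairs for illustration only.
history = [(2, 2), (2, 3), (4, 4), (4, 6), (8, 7), (8, 13), (16, 12), (16, 40)]

def spread_by_estimate(pairs):
    """Return {estimate: (mean actual, std deviation of actual)}."""
    buckets = defaultdict(list)
    for estimate, actual in pairs:
        buckets[estimate].append(actual)
    return {est: (mean(acts), pstdev(acts)) for est, acts in sorted(buckets.items())}

def needs_decomposition(estimate_h, limit=SOFT_LIMIT_HOURS):
    """True if the estimate exceeds the soft limit."""
    return estimate_h > limit

for est, (avg, std) in spread_by_estimate(history).items():
    flag = " (decompose or research)" if needs_decomposition(est) else ""
    print(f"estimate {est}h: mean actual {avg}h, std {std:.1f}h{flag}")
```

If the standard deviation grows sharply past some estimate size, that size is a natural candidate for a team's own soft limit.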

2. Wasted time, that is, time developers spend on things they could have avoided.

In one of our teams, communication took up to 50% of the time. We started digging into the reason. It turned out that the problem was in the processes: all customers wrote directly to the developers with their questions. We changed the processes a little, reduced this time, and improved the speed figures.

In your case it will not necessarily be communication; it may be, for example, time spent on deployment or on infrastructure. But the prerequisite is that all your developers log their time. If no one logs time in Jira, introducing it will obviously be very expensive.
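
Once time is logged, finding where it goes is a simple aggregation. The sketch below assumes each worklog entry carries a category label; the categories and hours are invented for the example and do not reflect a real Jira export.

```python
from collections import Counter

# Hypothetical worklog entries: (category, hours logged).
worklog = [
    ("development", 30),
    ("communication", 40),
    ("development", 10),
    ("communication", 10),
    ("deployment", 10),
]

def time_shares(entries):
    """Return the fraction of total logged time spent in each category."""
    totals = Counter()
    for category, hours in entries:
        totals[category] += hours
    grand_total = sum(totals.values())
    return {category: hours / grand_total for category, hours in totals.items()}

for category, share in sorted(time_shares(worklog).items(), key=lambda kv: -kv[1]):
    print(f"{category}: {share:.0%}")
```

A category that takes an unexpectedly large share, as communication did in the team described above, is the place to look at processes first.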

3. Understanding technical debt

When I say "technical debt", I picture something blurry: it is not clear how it could be measured.

If I tell you that one of our teams has a technical debt of exactly 648 hours, you won’t believe me. But let me describe the algorithm we use to measure it.

Once a quarter we get the whole team together (we call it a refactoring meetup) and write cards: hacks (ours and other people's) that people have seen in the code, dubious decisions, and other bad things such as old library versions, anything at all. Once we have a pile of cards, we write a resolution for each one: what to do about it. The resolution may be to do nothing, because it is not technical debt at all, or to update the library version, refactor, and so on. Then we create tickets in Jira in which we write: “Here is the problem, here is its solution.”

So now we have 150 tickets in Jira. What do we do with them?

Next, we run a survey that takes each developer 10 minutes. Each developer rates every ticket from 1 to 5, where 1 means “Someday, maybe in the next life” and 5 means “We need to do this right now, it slows us down a lot”. We put this rating right on the ticket in Jira: we created a custom field called “Priority of refactoring”, and sorting by it gives us a prioritized list of our technical debt. We estimate the first 10–20 tickets in hours and, for the tail, multiply the number of remaining tickets by the average estimate (we are too lazy to estimate all 150 tickets). The result is technical debt in hours.
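
The arithmetic can be sketched in a few lines of Python. The ticket data is invented, and averaging the developers' ratings to rank tickets is an assumption: the text does not say how individual ratings are combined into the “Priority of refactoring” field.

```python
from statistics import mean

# Hypothetical tickets: ratings are the 1..5 scores from each developer.
# Only the tickets that end up at the top of the list get an hour estimate.
tickets = [
    {"key": "A", "ratings": [5, 4, 5], "estimate_h": 6},
    {"key": "B", "ratings": [4, 4, 3], "estimate_h": 2},
    {"key": "C", "ratings": [2, 1, 2]},
    {"key": "D", "ratings": [3, 2, 2]},
    {"key": "E", "ratings": [1, 1, 1]},
]

def prioritize(tickets):
    """Sort tickets by mean rating, highest first (assumed aggregation)."""
    return sorted(tickets, key=lambda t: mean(t["ratings"]), reverse=True)

def debt_hours(ranked, top_n):
    """Sum the estimates of the top_n tickets, then extrapolate the tail
    as (number of remaining tickets) * (average estimate of the top ones)."""
    top_estimates = [t["estimate_h"] for t in ranked[:top_n]]
    tail_count = len(ranked) - len(top_estimates)
    return sum(top_estimates) + tail_count * mean(top_estimates)

ranked = prioritize(tickets)
print([t["key"] for t in ranked])
print(debt_hours(ranked, top_n=2))
```

The extrapolation is crude on purpose: the goal is a number that can be compared quarter to quarter, not a precise plan.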

Why do we need this? We run this assessment once a quarter. If we had 700 hours in the first quarter and then, say, 800 in the next, everything is fine. If it becomes 1400, there is a threat and we need to increase the refactoring quota: agree with the business that we will now spend a whole month, or 40% of our total time, on refactoring.

So, we have dealt with technical debt: it is now under control.

But let's talk about the reasons it appears in the first place. Most often the cause is bad code review.

Bad code review

One of the main causes of bad code review is the bus factor. We learned to formalize the bus factor. We take a team and write out a list of the areas relevant to it; for the platform team, for example, these are video calling, exercise synchronization, and tools. Each developer gives each area a rating from 1 to 3:

- 1 - "I do not understand what it is, I heard a couple of times."

- 2 - "I can do tasks from this area."

- 3 - "I am a super expert in this field."

Interestingly, for some areas there was not a single expert in the team, no one knew how it worked.

How did we solve the problem with the bus factor?

We calculated the median rating for each area and then held a series of talks at regular team meetings, in which the experts told the whole team about those areas. Of course, this method does not scale very well, but, first, the talks are recorded on video, and second, it is very fast. The whole team now knows, more or less, how video calls work. For some areas we have not written documentation yet, but we have created a task to write it. We will write it someday.
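
A minimal Python sketch of this bus-factor survey follows. The area names come from the text; the developer names and ratings are invented, and counting the number of experts next to the median is an addition made for the example.

```python
from statistics import median

# Hypothetical survey results: area -> {developer: rating from 1 to 3}.
ratings = {
    "video calling": {"Anna": 3, "Boris": 1, "Vera": 1},
    "exercise synchronization": {"Anna": 2, "Boris": 2, "Vera": 1},
    "tools": {"Anna": 3, "Boris": 3, "Vera": 2},
}

def area_summary(ratings):
    """For each area, return the median rating and the number of experts (rating 3)."""
    summary = {}
    for area, by_developer in ratings.items():
        scores = list(by_developer.values())
        summary[area] = {
            "median": median(scores),
            "experts": sum(1 for score in scores if score == 3),
        }
    return summary

for area, stats in area_summary(ratings).items():
    print(area, stats)
```

Areas with a low median or zero experts are the first candidates for an internal talk.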

Now about code review when there is not enough time. We are currently running an experiment in which we review the code reviews themselves. At the end of each month we select random pull requests and ask developers to review them again and find as many problems as possible. When you know that someone will recheck your work, you approach the review more responsibly. We also added checklists (did you look at this, this, and this?). In general, we are trying to raise the quality of code review.
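
Picking the pull requests for the monthly second review can be as simple as a random sample. The sketch below uses Python's standard `random` module; the pull request identifiers and the function name are hypothetical, and the optional seed is only there to make a draw repeatable.

```python
import random

def pick_for_second_review(pull_requests, k, seed=None):
    """Return up to k pull requests chosen uniformly at random, without repeats."""
    rng = random.Random(seed)
    return rng.sample(pull_requests, min(k, len(pull_requests)))

# Hypothetical list of pull requests merged during the month.
merged_this_month = ["PR-101", "PR-102", "PR-103", "PR-104", "PR-105", "PR-106"]

print(pick_for_second_review(merged_this_month, k=3))
```

Sampling at random rather than by author or size keeps the draw unpredictable, and that unpredictability is what makes every review worth doing carefully.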

That's all about technical debt. One last question remains: how to turn all teams into Sapsans.

4. Turn all teams into Sapsans

The easiest time to do this is at the start. When a new team is formed, especially when a new team lead joins and recruits people, we do the same kind of cross review, but of all processes. That is, we bring in an expert team lead who checks everything: planning, the first technical decisions, the technology stack, all the processes. This sets the right direction for the team as it starts work. It is very expensive in terms of time, but it pays off.

There is a prerequisite here too: we record all meetings on video through Google Suite, including all planning sessions and all retrospectives, so they can always be rewatched.

The second, cheap option is, of course, checklists.

Above is an example of a checklist; the real one is much longer. For each team we mark in a huge table whether the team has autotests, Continuous Integration, and so on.

But there is a problem with checklists: they become outdated the day after you fill them in. Administrative assistants help us solve this. These are people to whom you can assign any routine task. We assign the task, and they update what they can themselves; where they cannot, they ask the team lead to update it. This is how we keep the checklists up to date.

Let's sum up

We talked about:

- How to determine which teams work well and which work badly.

- How to evaluate team resources and sometimes even speed up some of the teams.

- How to evaluate the technical debt that these teams carry with them.

- How to make all new teams Sapsans.

Bonus

The report did not include some materials that Alexey is willing to share on request.

- QA metrics: more than 10 different testing metrics that help make all teams cool.

- A list of all the metrics that anyone at Skyeng has ever tried. This is not just a list: it comes with experience and comments, plus some categorization of the metrics.

- The bot Arseny will soon be released as open source; you can find out as soon as it happens.

Message Alexey on Telegram (@ax8080) or Facebook to get these lists and learn more about team effectiveness.

A reminder: the Call for Papers for TeamLead Conf 2019, the conference for team leads that will be held February 25-26 in Moscow, is already open. Here is a brief guide on how to submit a talk and the conditions for participation, and this is a link to the application form.

And stay with us! We will continue to publish articles and videos on managing development teams, and in the newsletter we will put together selections of useful materials from the past few weeks and write about conference news.