Personal experience of using contactless sensors in development

Hello everyone! My name is Maxim, and I am a lead developer at Habilect.

In each programmer’s life, there comes a time when I want to share what 2/3 of my life is spent on - description of work and projects :)

Historically, the development of systems based on contactless sensors has become the main field of my work.

For almost 7 years, many options have been tried - Microsoft Kinect (both the XBOX 360 version and Kinect One for Windows), Intel RealSense (starting with the first version available on the market - F200, continuing the SR300 and, at the moment, the last released - D435), Orbbec (Astra and Persee), Leap Motion (about which even here once wrote about the processing of custom-gestures). Naturally, it was not without ordinary webcams - image analysis, OpenCV, etc.

Those who are interested - I ask under the cat.

Just in case, a brief educational program on this topic of contactless sensors:

Imagine a situation that it is necessary to programmatically track the actions of a particular person.

Of course, you can use a regular webcam, which can be bought at any store. But with this use case, it is necessary to analyze the images, which is resource intensive, and, frankly, important (the problem of memory allocation in the same OpenCV is still relevant).

Therefore, it is better to recognize people not as part of the camera image, but as a “skeleton” obtained by a specialized sensor using active range finders. This allows you to both track a person (or several people) and work with individual parts of the "skeleton".

In the terminology of Microsoft Kinect SDK, these individual parts are called "Joints". In the case of a sensor, by default, their status is updated every 30 ms.

Each joint contains:

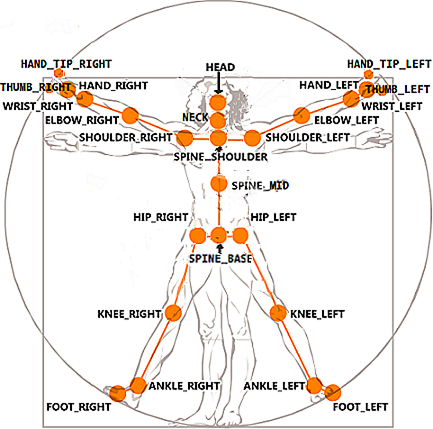

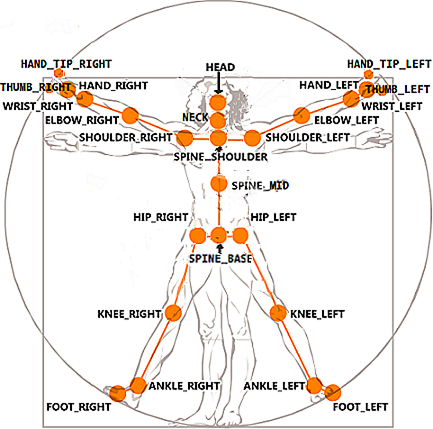

The figure shows the Joint's, processed by Kinect v2 on the example of Leonardo Da Vinci's painting “Vitruvian Man”.

Thus, working with a contactless sensor, the developer gets the opportunity to analyze the actions of a person or a group of people (for example, Kinect can simultaneously track 6 people in a frame). And what to do with the resulting data array depends on the specific application.

The areas of application of such sensors are endless. The main areas of my work are advertising and medicine.

Consider the advertising direction. Now, for the work of interactive advertising stands, several basic modules are needed, namely:

If everything is clear with the advertising materials themselves, then sensors are used to collect statistics. For example, any advertiser is interested in how many people paid attention to his advertising? Men, women or children? What time do they most often stop? How much time they look at advertising, and not just stand next to the stand, digging into the phone? With the help of the sensor it can be traced and made a report in a form convenient for the advertiser.

For the remote configuration, the most probably simple and obvious model is used - Google Calendar. Each calendar is tied to a specific stand, and, being in St. Petersburg, you can manage stands anywhere in the world.

And the attracting effect is the same functionality, because of which the stand is called interactive. You can make a game controlled by a person with a sponsor's logo, realize a welcome sound when a person appears in the sensor's field of view, make a QR code with a discount coupon in the advertiser's store if, for example, a person stood watching an advertisement for a minute. Options are limited mostly to fantasy, not technology.

Why use sensors in medicine ? The answer to this question is trivial and simple - it reduces the cost of examinations and rehabilitation while maintaining accuracy.

One of the most striking examples is the development in the field of stabilometry - the science of center of gravity abnormalities when walking, which affects the posture, the spine, and proper muscle work. You can go to special centers for special simulators, which is a very expensive procedure, which, however, is not everywhere provided. And you can buy home sensor and software, connect to the TV and perform diagnostic tests, the results of which are automatically sent to your doctor.

Another example is home rehabilitation. It is not a secret for anyone that in rehabilitation there are two main problems - an expensive call to the doctor at home (which needs to be done several times a week) and the reluctance of the person being rehabilitated to perform the same type of exercises for a long time. To this end, several basic tenets of the system were developed, namely:

Such a system makes it possible to reduce both time (for both the doctor and the patient to travel to each other), and to maintain the accuracy of the exercises necessary for progress.

For the implementation of projects using the stack technology. NET. I work Windows-only :). Yes, because of this, I had to write wrappers for the native SDK several times (as a vivid example, I made adapters for the Intel RealSense SDK, since at that time it was only unmanaged code). It cost a considerable amount of time, nerves and gray hair, but allowed to connect the library to different projects developed on the Windows platform.

It is worth noting that the main sensors (Kinect and RealSense) require x64 bit capacity for development.

To simplify the work of client applications, a separate service was implemented that works with the sensor, packing data in a convenient format for us and sending data to subscribers using a TCP protocol. By the way, this allowed us to create a system that works with several sensors, which can significantly improve the recognition accuracy.

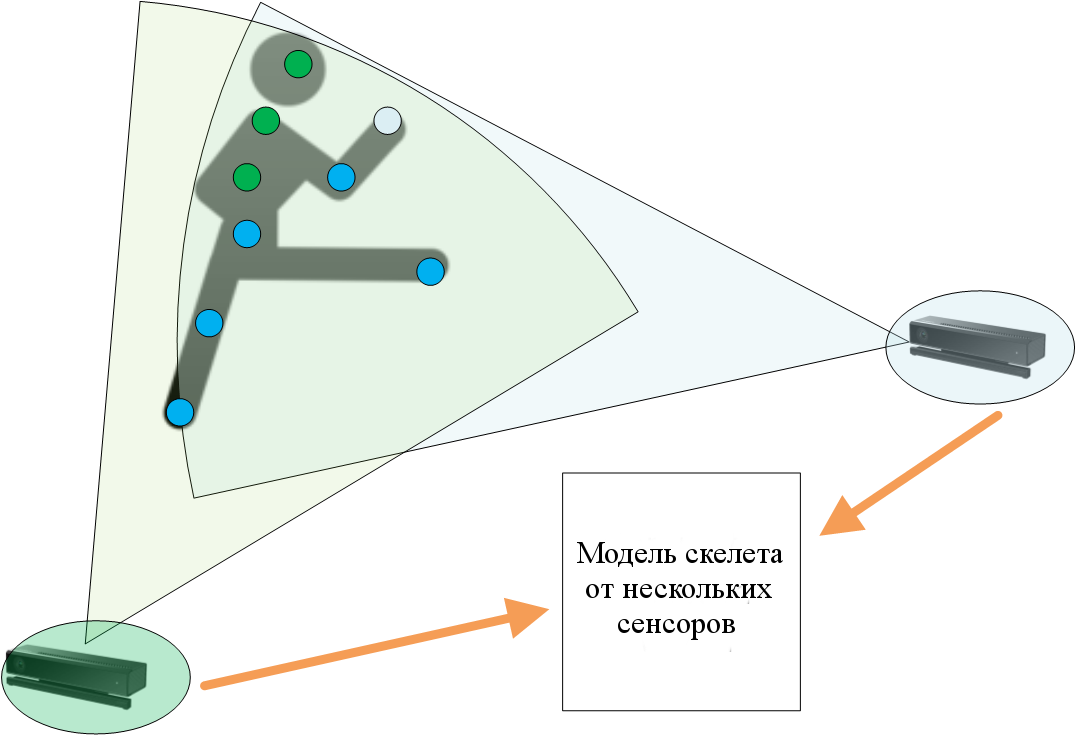

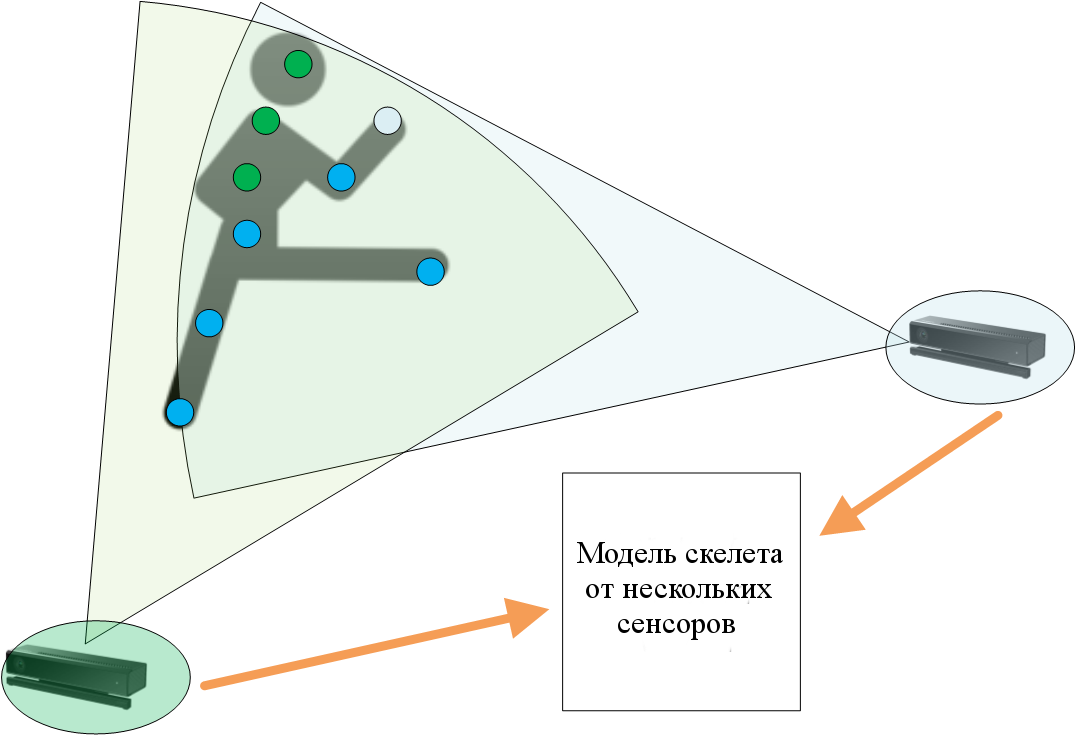

The diagram in simplified form shows the advantages of using multiple sensors to track an object. Blue points (joints) are stably determined by the first and second sensors, green ones - only by the second. Naturally, the developed solution is implemented under the "N-sensor model".

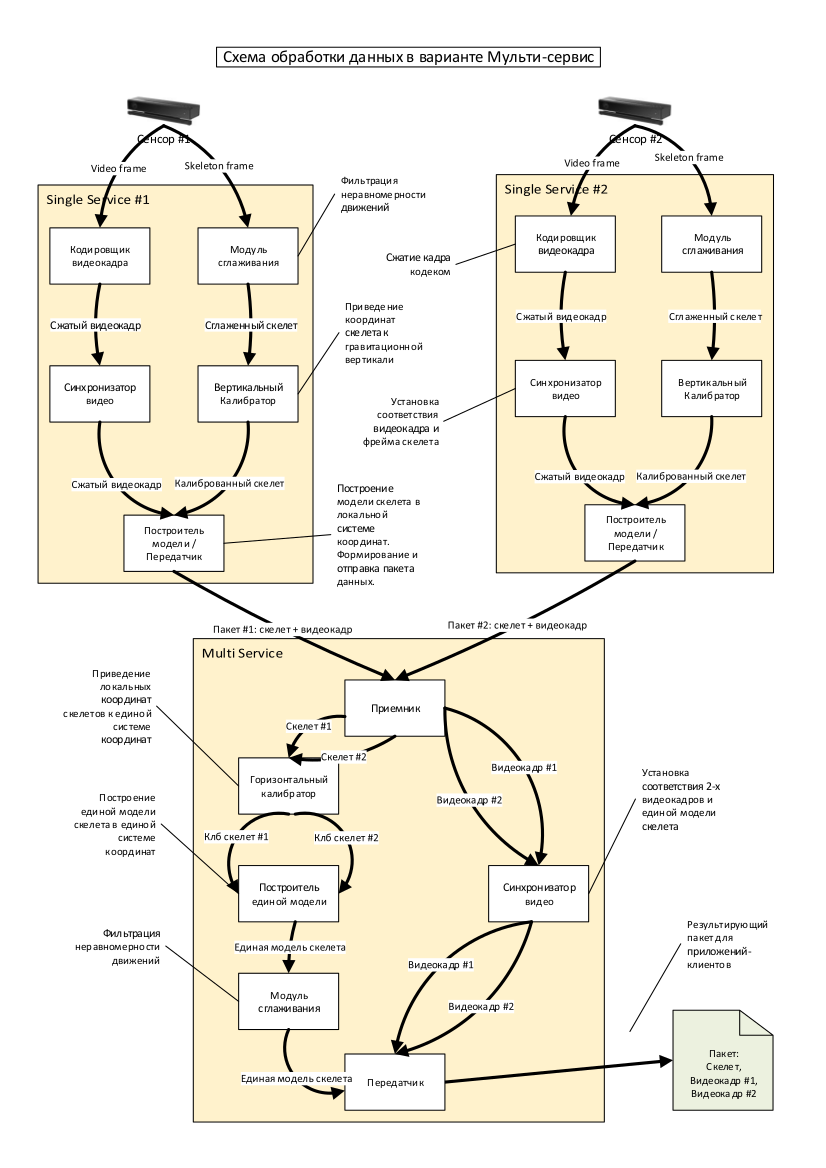

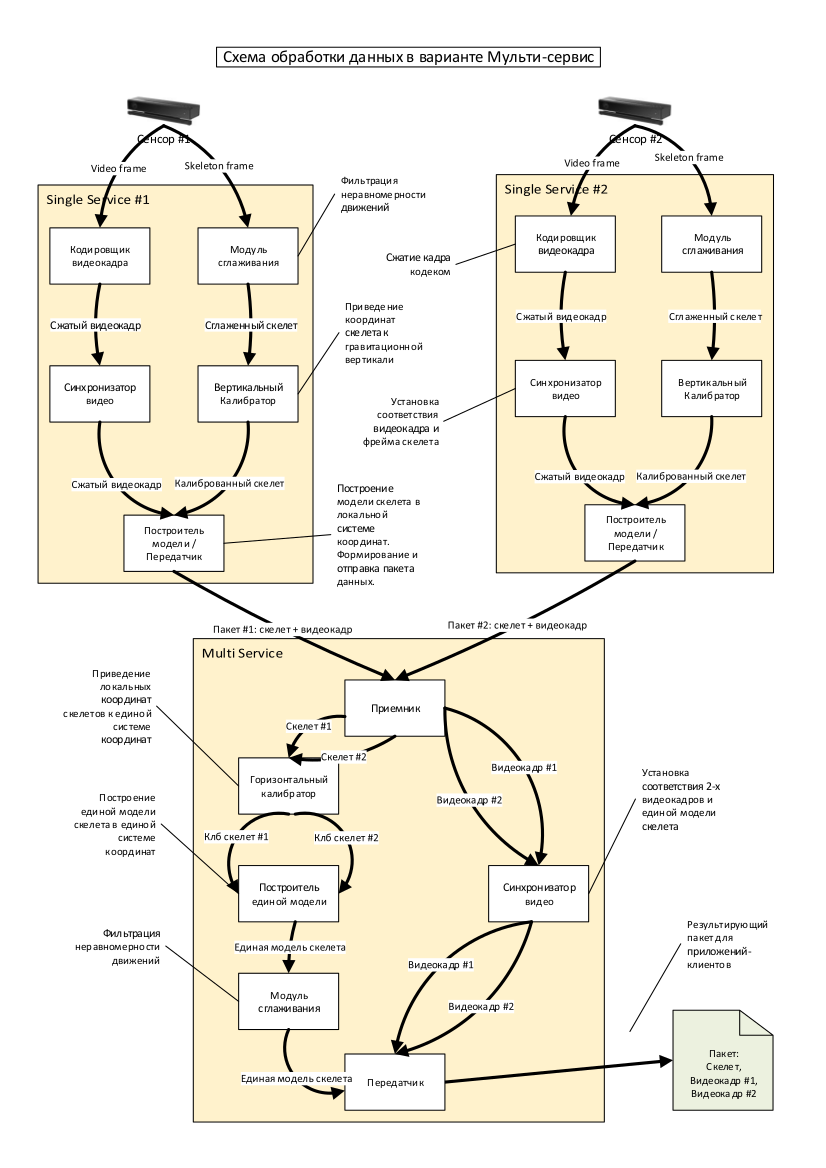

Detailed processing of data from several sensors is presented in the diagram above.

With almost any development, one of the main criteria for the contractor is interest. After several years in a row, I implemented similar web applications on ASP.NET and PHP, I wanted something new and more, let's say, smart. In web development (with rare exceptions) everything has already been invented. Well, the site, well, the service, well, the database. In the case of working with sensors, interesting and ambiguous tasks always arise, for example, “how do you transmit hd-video from a sensor over TCP so that it doesn’t hang the system?” (Life example, although it was done a long time ago). And it is difficult to predict that for the improvement and development of the system will need the following. It is clear that there is a main development sprint where global and pre-planned improvements are described. But such local subtasks are one of the most interesting in the craft of a programmer.

Such work is not exactly called routine. If it became interesting to someone to learn in more detail, or someone wants to know where to start in this area - I am open to communication. Ready to answer any questions :) And may the force be with you!

In every programmer's life there comes a time when you want to write about what takes up two-thirds of your life: your work and your projects :)

Historically, developing systems based on contactless sensors has become the main focus of my work.

Over almost 7 years I have tried many options:

- Microsoft Kinect, both the Xbox 360 version and Kinect One / Kinect for Windows v2.
- Intel RealSense, starting with the first model on the market (the F200), then the SR300, and, at the time of writing, the latest released model (the D435).
- Orbbec (Astra and Persee).
- Leap Motion. I once wrote here about processing custom gestures with it.

Naturally, ordinary webcams were part of it too: image analysis, OpenCV, and so on.

If you are interested, read on.

Just in case, here is a brief primer on contactless sensors.

Imagine that you need to track the actions of a particular person programmatically.

Of course, you can use a regular webcam from any store. But then you have to analyze the images yourself, which is resource-intensive and, frankly, troublesome (memory allocation problems in OpenCV, for example, are still relevant).

That is why it is better to recognize a person not as part of the camera image but as a "skeleton". The skeleton comes from a specialized sensor that measures depth using active rangefinding. This lets you track a person (or several people) and work with individual parts of the "skeleton".

In Microsoft Kinect SDK terminology, these individual parts are called "joints". By default, the sensor updates their state 30 times per second, that is, about every 33 ms.

Each joint contains:

- data on the position of the corresponding point of the skeleton in space (X-, Y- and Z-coordinates) relative to the optical axis of the sensor,

- the state of visibility of the point (determined, not determined, the position is calculated by the sensor),

- rotation quaternion relative to the previous point

The figure shows the joints tracked by Kinect v2, using Leonardo da Vinci's “Vitruvian Man” as an example.
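To make the description of a joint concrete, here is a minimal Python sketch of one tracked body. It is an illustration, not the Kinect SDK API. The class names (`TrackingState`, `Joint`, `Body`), the joint names, and the use of meters are assumptions chosen to mirror the three items listed above.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field
from enum import Enum


class TrackingState(Enum):
    """Visibility of a joint (illustrative names, not the SDK's own enum)."""
    NOT_TRACKED = 0  # the sensor could not determine the position
    INFERRED = 1     # the position was estimated (calculated) by the sensor
    TRACKED = 2      # the position was measured directly


@dataclass
class Joint:
    name: str
    # X, Y, Z in the sensor's coordinate system (meters assumed).
    position: tuple[float, float, float]
    state: TrackingState
    # Rotation quaternion (w, x, y, z) relative to the parent joint; identity by default.
    orientation: tuple[float, float, float, float] = (1.0, 0.0, 0.0, 0.0)


@dataclass
class Body:
    tracking_id: int
    joints: dict[str, Joint] = field(default_factory=dict)

    def reliable_joints(self) -> list[Joint]:
        """Joints whose positions were measured rather than inferred or missing."""
        return [j for j in self.joints.values() if j.state is TrackingState.TRACKED]


def distance(a: Joint, b: Joint) -> float:
    """Euclidean distance between two joints, e.g. to estimate a limb length."""
    return math.dist(a.position, b.position)


# The body stream runs at 30 frames per second: one skeleton update every ~33 ms.
FRAME_INTERVAL_MS = 1000 / 30
```

In practice, inferred joints are usually still displayed but treated with less trust in any calculation.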

Thus, a contactless sensor lets the developer analyze the actions of a person or a group of people (Kinect v2, for example, can track up to 6 people in the frame simultaneously). What to do with the resulting data depends on the specific application.

The range of applications for such sensors is practically endless. My own work focuses mainly on advertising and medicine.

Let us start with advertising. An interactive advertising stand needs several basic modules:

- The advertising content itself

- Statistics on how well a particular advertisement attracts visitors' attention

- Remote configuration of the stand

- An eye-catching effect that sets an interactive stand apart from a regular TV showing commercials or a printed banner

The advertising content itself is straightforward. The sensors are used to collect statistics. Any advertiser wants answers to questions like these:

- How many people paid attention to the ad?
- Were they men, women, or children?
- At what times do people stop most often?
- How long do they actually look at the ad, rather than just standing next to the stand staring at their phones?

The sensor makes it possible to track all of this and produce a report in whatever form suits the advertiser.
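Here is a minimal sketch of how the "how long did they stay" statistic might be computed. It assumes the sensor service delivers, for each frame, a timestamp in seconds and the set of tracking IDs currently in view. The function names and the 5-second "engaged" threshold are hypothetical. Real attention detection would also check head orientation rather than mere presence.

```python
def dwell_times(frames):
    """Return {tracking_id: seconds in view} from (timestamp_s, ids) frames.

    Assumes frames are sorted by timestamp. Each frame lasts until the next one,
    and that duration is credited to every person visible in it.
    """
    totals = {}
    for i, (t, ids) in enumerate(frames):
        # Duration of this frame = gap to the next one (0 for the last frame).
        dt = frames[i + 1][0] - t if i + 1 < len(frames) else 0.0
        for person in ids:
            totals[person] = totals.get(person, 0.0) + dt
    return totals


def attention_report(frames, min_seconds=5.0):
    """Count all visitors and those who stayed at least `min_seconds` (hypothetical threshold)."""
    times = dwell_times(frames)
    engaged = [p for p, s in times.items() if s >= min_seconds]
    return {"visitors": len(times), "engaged": len(engaged)}
```

Grouping the same totals by hour of day would answer the "what time do they stop" question.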

For remote configuration we use what is probably the simplest and most obvious model: Google Calendar. Each calendar is tied to a specific stand, so from St. Petersburg you can manage stands anywhere in the world.
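The Google Calendar API calls are not shown here. This sketch assumes the events of a stand's calendar have already been fetched into simple `(start, end, content)` tuples, a hypothetical format in which the event title names what to play. It only illustrates how a stand might decide what to show at a given moment.

```python
from datetime import datetime


def active_content(events, now: datetime, default: str = "idle-loop") -> str:
    """Pick what a stand should show at `now`.

    `events` is a list of (start, end, content) tuples read from the stand's
    calendar (hypothetical format). An event covers [start, end).
    If several events overlap, the one that started most recently wins.
    If none covers `now`, the hypothetical `default` playlist is returned.
    """
    current = [e for e in events if e[0] <= now < e[1]]
    if not current:
        return default
    return max(current, key=lambda e: e[0])[2]


# Example: an all-day campaign with a lunchtime special layered on top.
schedule = [
    (datetime(2019, 1, 1, 9), datetime(2019, 1, 1, 18), "coffee-promo"),
    (datetime(2019, 1, 1, 12), datetime(2019, 1, 1, 14), "lunch-offer"),
]
print(active_content(schedule, datetime(2019, 1, 1, 13)))  # lunch-offer
```

Letting the most recently started event win means an operator can override a long campaign simply by adding a shorter event on top of it.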

The eye-catching effect is the very functionality that makes the stand interactive. For example, you can:

- build a game with the sponsor's logo that is controlled by body movements;
- play a welcome sound when a person enters the sensor's field of view;
- show a QR code with a discount coupon for the advertiser's store if, say, a person has watched the ad for a minute.

The options are limited mostly by imagination, not technology.
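The triggers mentioned above (a greeting when someone appears, a coupon after a minute of watching) can be modeled as a small per-person state machine. This is an illustrative sketch. The event names and the `on_frame` interface are assumptions. The 60-second threshold comes from the example in the text.

```python
class StandTriggers:
    """Emit interactive events from per-frame sets of visible tracking IDs.

    Event names ("welcome", "show_qr_coupon") are hypothetical placeholders.
    """

    def __init__(self, coupon_after_s=60.0):
        self.coupon_after_s = coupon_after_s
        self.first_seen = {}      # tracking_id -> timestamp of appearance
        self.coupon_sent = set()  # ids that already received a QR coupon

    def on_frame(self, t, ids):
        events = []
        for person in ids:
            if person not in self.first_seen:
                self.first_seen[person] = t
                events.append(("welcome", person))
            elif (person not in self.coupon_sent
                  and t - self.first_seen[person] >= self.coupon_after_s):
                self.coupon_sent.add(person)
                events.append(("show_qr_coupon", person))
        # Forget people who left, so a returning visitor is greeted again.
        for person in list(self.first_seen):
            if person not in ids:
                del self.first_seen[person]
                self.coupon_sent.discard(person)
        return events
```

One caveat: sensors may assign a new tracking ID when a person briefly leaves the frame. A production version would likely tolerate short gaps before forgetting someone.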

Why use sensors in medicine? The answer is simple: they reduce the cost of examinations and rehabilitation while maintaining accuracy.

One of the most striking examples is work in stabilometry. This is the study of deviations of the body's center of gravity, which affect posture, the spine, and proper muscle function. There are two ways to get such an examination:

- Go to a specialized center with dedicated equipment. This is very expensive and not available everywhere.
- Buy a home sensor and software, connect them to your TV, and run diagnostic tests whose results are sent to your doctor automatically.
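As a rough illustration of the kind of computation involved, this sketch uses one joint as a proxy for the center of gravity. The joint could be, for example, the base of the spine. The sketch computes two simple sway measures over a recording:

- the path length of the joint's horizontal projection;
- the RMS deviation from its mean position.

Treating a single joint as the center of gravity, the choice of these two metrics, and the assumption that Y is the vertical axis (so X and Z span the floor plane) are all simplifications for illustration. This is not a clinical method.

```python
import math


def sway_metrics(positions):
    """Return (path_length, rms_deviation) for horizontal (x, z) samples in meters.

    `positions` holds one sample per frame of the joint used as a
    center-of-gravity proxy (an assumption, not a clinical standard).
    """
    if len(positions) < 2:
        raise ValueError("need at least two samples")
    # Total distance travelled by the point between consecutive frames.
    path = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    # Root-mean-square distance from the average position.
    n = len(positions)
    cx = sum(p[0] for p in positions) / n
    cz = sum(p[1] for p in positions) / n
    rms = math.sqrt(sum((x - cx) ** 2 + (z - cz) ** 2 for x, z in positions) / n)
    return path, rms
```

At 30 frames per second, a 30-second test yields about 900 samples. Comparing the metrics between sessions is more meaningful than reading any single value.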

Another example is home rehabilitation. It is no secret that rehabilitation has two main problems:

- the cost of the doctor's home visits, which are needed several times a week;
- the patient's reluctance to perform the same monotonous exercises for a long time.

To address these, the system was built on several basic principles:

- Remote preparation of rehabilitation programs by a doctor, with remote monitoring of how they are performed. For example, a doctor in St. Petersburg can monitor the exercises prescribed to a patient in Vladivostok. If necessary, the doctor can change the exercises, increase or decrease the difficulty and intensity of sessions, and review statistics in real time.

- A gamified exercise process. Conventional rehabilitation equipment mainly focuses on basic movements that develop damaged muscles and joints. An interactive rehabilitation system, by contrast, lets the patient exercise, quite literally, by playing. For example, the patient can play the well-known Tetris, controlled not by buttons but by movements the doctor assigns: raising the left arm, lifting the right leg, tilting the head forward, and so on.

- Enforced exercise criteria. When working with a patient in person, the doctor controls the exercise with verbal cues and physical assistance. For example, the elbow must not bend while the arm is being raised to the side, since this changes how the shoulder joint works. The interactive system evaluates the relative angles between joints. If an angle goes beyond the allowed range, it warns the user and does not count the incorrectly performed repetition.
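The angle check described in the last item can be sketched as follows. The three points used (shoulder, elbow, wrist), the 160° threshold for a "straight" arm, and validating a repetition frame by frame are assumptions for illustration. A real system would take the allowed ranges from the doctor's exercise settings.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint `b` formed by 3D points a-b-c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("coincident joints")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def check_repetition(frames, min_elbow_deg=160.0):
    """Validate one arm-raise repetition.

    `frames` is a list of dicts with "shoulder", "elbow" and "wrist" positions
    (hypothetical keys). The threshold is an assumed example value.
    Returns (counted, warning).
    """
    for i, f in enumerate(frames):
        angle = joint_angle(f["shoulder"], f["elbow"], f["wrist"])
        if angle < min_elbow_deg:
            return False, f"frame {i}: elbow bent to {angle:.0f} degrees, keep the arm straight"
    return True, None
```

A straight arm gives an elbow angle close to 180°. Any frame below the threshold rejects the whole repetition and produces the warning shown to the user.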

Such a system saves time, since neither the doctor nor the patient has to travel. It also preserves the exercise accuracy needed for progress.

We implement our projects on the .NET stack, and I work Windows-only :). Because of this, I have had to write wrappers for native SDKs several times. For example, I wrote adapters for the Intel RealSense SDK, which at the time offered only unmanaged code. It cost a considerable amount of time, nerves, and gray hair, but it let us use the library in many different Windows projects.

Note that the main sensors (Kinect and RealSense) require an x64 (64-bit) platform for development.

To simplify client applications, we implemented a separate service that:

- works with the sensor;
- packs the data into a format convenient for us;
- sends it to subscribers over TCP.

This also let us build a system that works with several sensors at once, which can significantly improve recognition accuracy.
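The article does not describe the wire format. What follows is only a sketch of one way such a service could pack skeleton data for TCP subscribers. Each message is length-prefixed, because TCP is a byte stream and the receiver must know where one frame ends. Each message carries a sensor ID, a timestamp, and the tracking state and coordinates of each joint. The layout, field sizes, and function names are hypothetical.

```python
import struct

# Hypothetical wire format, little-endian, no padding.
LENGTH = struct.Struct("<I")     # byte length of the rest of the message
HEADER = struct.Struct("<HQH")   # sensor id, timestamp in ms, joint count
JOINT = struct.Struct("<BBfff")  # joint index, tracking state, x, y, z (float32)


def pack_frame(sensor_id, timestamp_ms, joints):
    """joints: list of (index, state, x, y, z). Returns bytes ready for sock.sendall()."""
    body = HEADER.pack(sensor_id, timestamp_ms, len(joints))
    body += b"".join(JOINT.pack(*j) for j in joints)
    return LENGTH.pack(len(body)) + body


def read_exact(sock, n):
    """Read exactly n bytes from a socket-like object with recv()."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-frame")
        buf += chunk
    return bytes(buf)


def read_frame(sock):
    """Inverse of pack_frame: read one message from the stream."""
    (length,) = LENGTH.unpack(read_exact(sock, LENGTH.size))
    body = read_exact(sock, length)
    sensor_id, timestamp_ms, count = HEADER.unpack_from(body, 0)
    joints = [JOINT.unpack_from(body, HEADER.size + i * JOINT.size) for i in range(count)]
    return sensor_id, timestamp_ms, joints
```

Including the sensor ID in every message is what later allows one subscriber to receive frames from several sensors over the same kind of connection.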

The diagram in simplified form shows the advantages of using multiple sensors to track an object. Blue points (joints) are stably determined by the first and second sensors, green ones - only by the second. Naturally, the developed solution is implemented under the "N-sensor model".

Detailed processing of data from several sensors is presented in the diagram above.
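Here is a minimal sketch of a multi-sensor fusion step. It assumes each sensor's joints have already been transformed into a shared coordinate system; the calibration that produces that transform is not shown. For each joint:

- positions reported as tracked by any sensor are averaged;
- inferred positions are used only when no sensor tracks the joint;
- joints that no sensor sees are dropped.

Plain averaging is an illustrative choice, and the dictionary format is hypothetical.

```python
TRACKED, INFERRED = "tracked", "inferred"  # hypothetical state labels


def fuse_joints(observations):
    """Merge per-sensor joints into one skeleton.

    observations: list (one entry per sensor) of {joint_name: (state, (x, y, z))},
    all in a common coordinate frame. Returns {joint_name: (state, (x, y, z))}.
    """
    names = set().union(*observations) if observations else set()
    fused = {}
    for name in names:
        seen = [obs[name] for obs in observations if name in obs]
        tracked = [pos for state, pos in seen if state == TRACKED]
        inferred = [pos for state, pos in seen if state == INFERRED]
        candidates, state = (tracked, TRACKED) if tracked else (inferred, INFERRED)
        if not candidates:
            continue  # no sensor has a usable position for this joint
        # Component-wise mean of the candidate positions.
        fused[name] = (state, tuple(sum(c) / len(candidates) for c in zip(*candidates)))
    return fused
```

In terms of the blue/green example above, a "blue" joint gets an average of two measurements. A "green" joint is taken from the second sensor alone, even if the first sensor only inferred it.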

In almost any project, one of the main motivators for the developer is interest. After several years in a row of building similar web applications in ASP.NET and PHP, I wanted something new and, let's say, smarter. In web development (with rare exceptions) everything has already been invented: a site, a service, a database.

Working with sensors, on the other hand, constantly raises interesting and non-trivial problems. For example: "how do you stream HD video from a sensor over TCP without freezing the system?" (a real-life example, although solved long ago). It is also hard to predict what the system will need next to improve and evolve. Of course, there is a main development sprint covering global, pre-planned improvements. But these local subtasks are among the most interesting parts of a programmer's craft.
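The article does not say how the HD-video problem was solved. One common way to keep a slow TCP consumer from stalling the capture loop is to decouple the two with a buffer that keeps only the newest frames and drops stale ones. This sketch shows that technique with the standard library. The class name and the capacity of 2 frames are assumptions.

```python
import threading
from collections import deque


class LatestFrames:
    """Bounded frame buffer (hypothetical helper): the producer never blocks,
    and the oldest frames are dropped when the consumer falls behind."""

    def __init__(self, capacity=2):
        self._frames = deque(maxlen=capacity)  # deque discards the oldest item when full
        self._cond = threading.Condition()
        self.dropped = 0

    def put(self, frame):
        """Called by the capture thread for every new frame."""
        with self._cond:
            if len(self._frames) == self._frames.maxlen:
                self.dropped += 1
            self._frames.append(frame)
            self._cond.notify()

    def get(self, timeout=None):
        """Called by the sending thread; returns None if nothing arrives in time."""
        with self._cond:
            if not self._cond.wait_for(lambda: self._frames, timeout):
                return None
            return self._frames.popleft()
```

The capture thread calls `put` and never waits on the network. A separate sending thread calls `get` and then `sendall`. The trade-off is that a slow subscriber sees a lower frame rate instead of an ever-growing backlog, which is usually acceptable for live video.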

This work is certainly not routine. If anyone wants to learn more, or to know where to start in this area, I am open to communication and ready to answer any questions :) And may the Force be with you!
