Overview and testing of the IBM FlashSystem 900 flash storage system

Photos, basic principles and some synthetic tests inside ...

Modern information systems, and the pace at which they evolve, set their own rules for IT infrastructure. Solid-state storage has long since gone from a luxury to a practical means of meeting the SLAs you need. So here it is: a system capable of delivering more than a million IOPS.

Basic principles

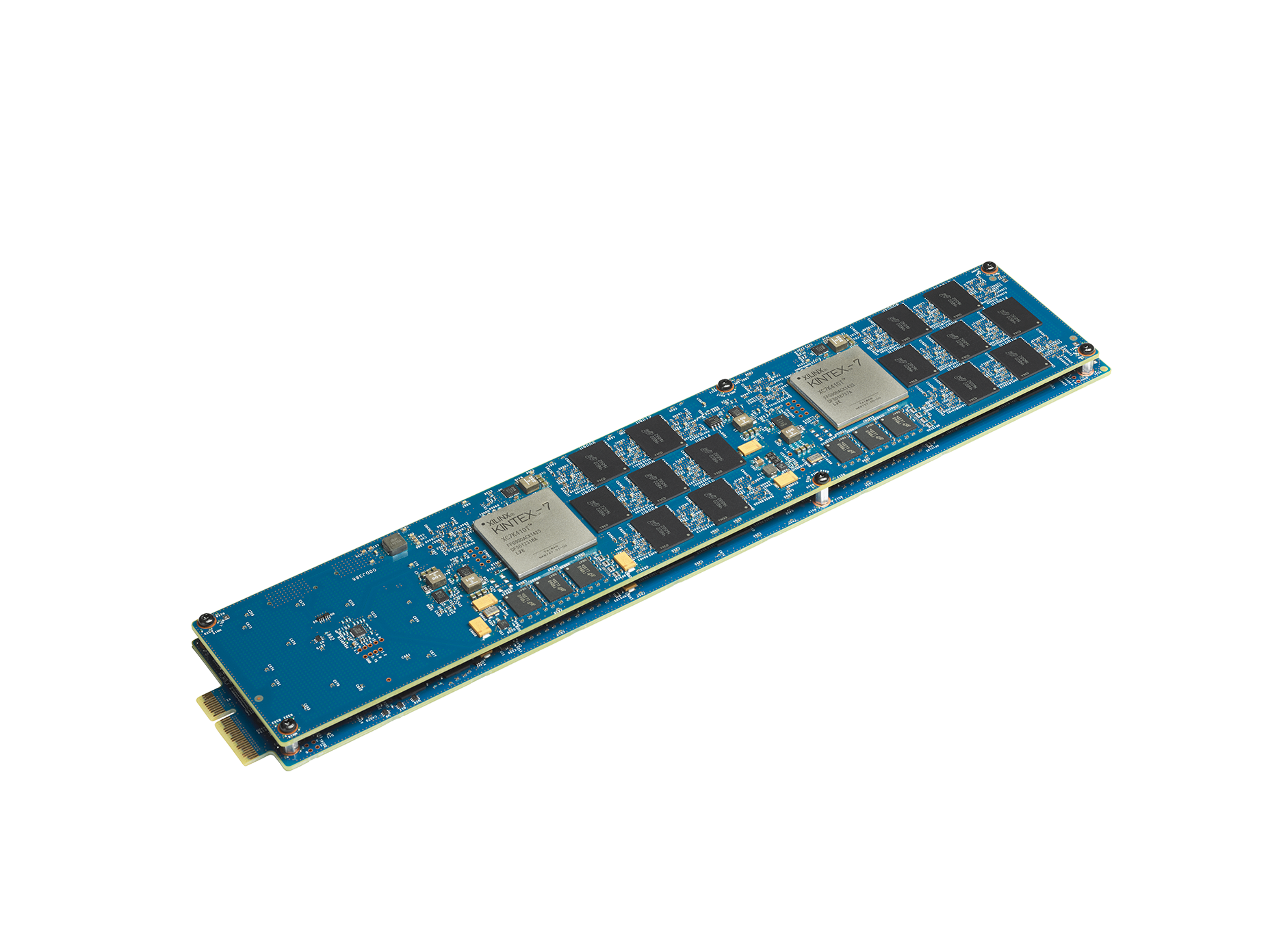

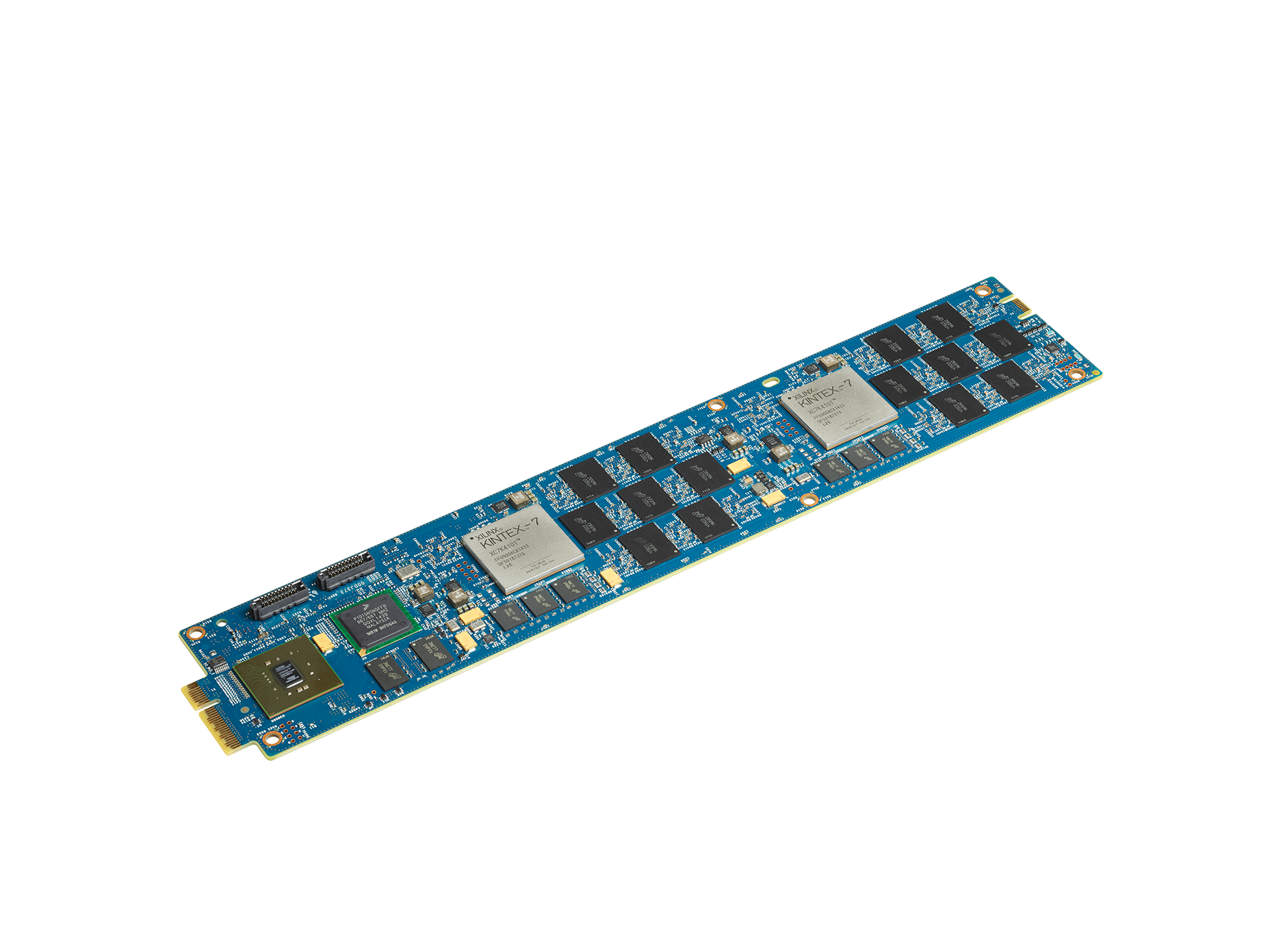

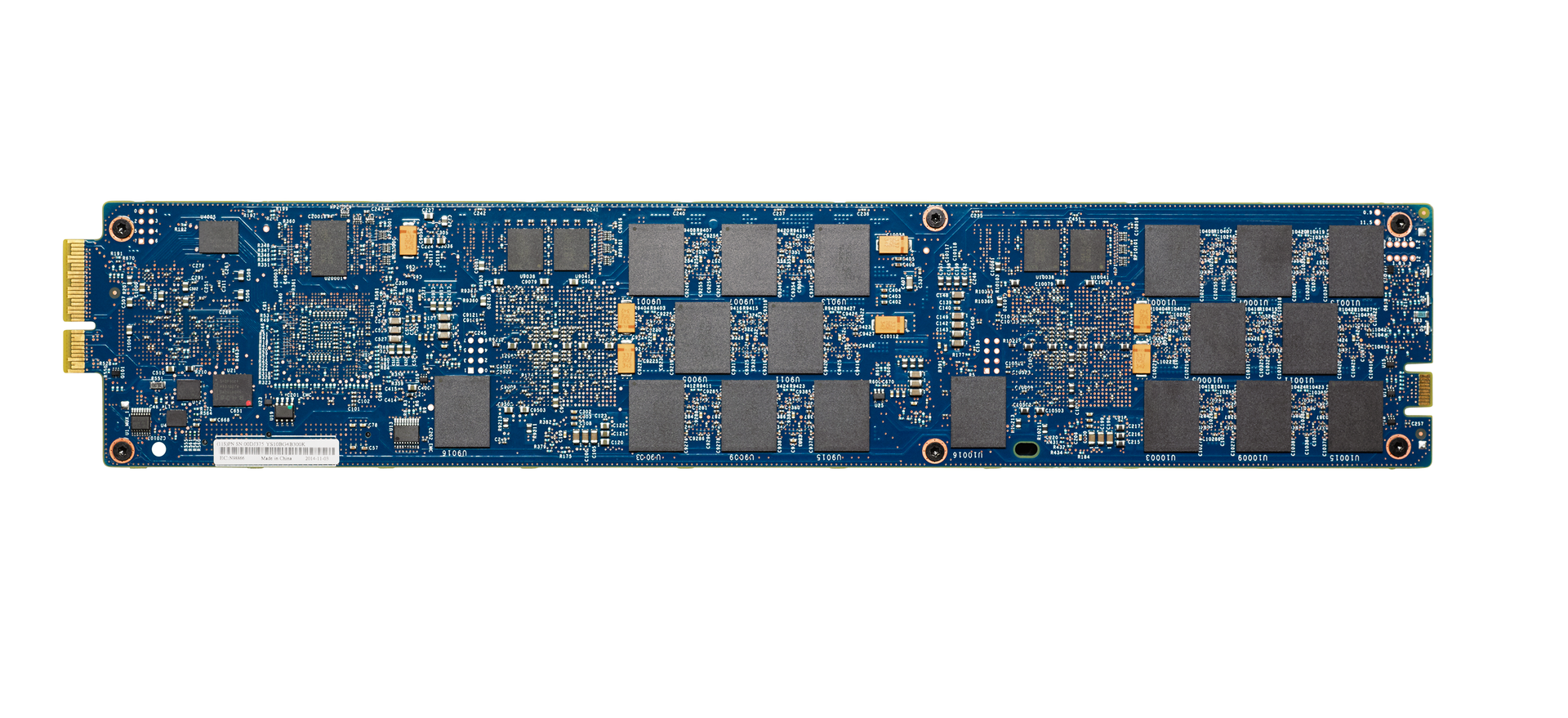

This storage system is a flash array with increased performance due to the use of MicroLatency modules and optimization of MLC technology.

When we asked our presale what technologies are used to ensure fault tolerance and how many gigabytes are actually hidden inside (IBM claims 11.4 TB of clean space), he answered evasively.

As it turned out, everything is not so simple. Inside each module there are memory chips and 4 FPGA controllers; Raid with variable stripe (Variable Stripe RAID, VSR) is built on them.

Each module chip is divided into so-called layers. On each N-layer of all the chips inside the module, Raid5 of variable length is built.

If one layer on the chip fails, the stripe length decreases, and the beaten memory cell is no longer used. Due to the excessive number of memory cells, the usable volume is saved. As it turned out, the system has a lot more than 20 Tb of raw flash, i.e. almost at the Raid10 level, and due to redundancy, we do without rebuilding the entire array when a single chip fails.

With Raid at the module level, FlashSystem integrates the modules into standard Raid5.

Thus, to achieve the desired level of fault tolerance, from a system with 12 modules of 1.2 TB each (marking on the module) we get a little more than 10 TB.

Testing

After receiving the system from a partner, we install the system in a rack and connect to the current infrastructure.

We connect the storage system, according to a pre-agreed scheme, configure the zoning and check the availability from the virtualization environment. Next - prepare the laboratory stand. The stand consists of 4 blade servers connected to the tested storage system by two independent 16 Gb optical factories.

Since IT Park leases virtual machines, the test will evaluate the performance of one virtual machine and a whole cluster of virtual machines running vSphere 5.5.

We optimize our hosts a bit: set up multithreading (roundrobin and the limit on the number of requests), and also increase the queue depth on the FC HBA driver.

On each blade server, we create one virtual machine (16 GHz, 8 GB RAM, 50 GB system disk). We connect 4 hard drives to each machine (each on its own Flash moon and on its Paravirtual controller).

In testing, we consider synthetic testing with a small 4K block (read / write) and a large 256K block (read / write). SHD stably gave 750k IOPS, which looked very good for us, despite the 1.1M IOPS space figure announced by the manufacturer. Do not forget that everything is pumped through the hypervisor and OS drivers.

Also note that, like all well-known vendors, the declared performance is achieved only in greenhouse laboratory conditions (a huge number of SAN uplinks, a specific LUN breakdown, the use of dedicated servers with RISK architecture and specially configured load generator programs).

Conclusions

Pros : huge performance, easy setup, user-friendly interface.

Minuses: outside the capacity of one system, scaling is carried out by additional shelves. “Advanced” functionality (snapshots, replication, compression) is moved to the storage virtualization layer. IBM has built a clear hierarchy of storage systems, led by a storage virtualizer (SAN Volume Controller or Storwize v7000), which will provide multi-level, virtualization and centralized management of your storage network.

Bottom line : IBM Flashsystem 900 performs its tasks of processing hundreds of thousands of IOs. In the current test infrastructure, we managed to get 68% of the performance declared by the manufacturer, which gives an impressive performance density on TB.

Modern information systems and the pace of their development dictate their own rules for the development of IT infrastructure. Solid state storage systems have long evolved from luxury to a means of achieving the SLA drive you need. So, here it is - a system capable of issuing more than a million IOPS.

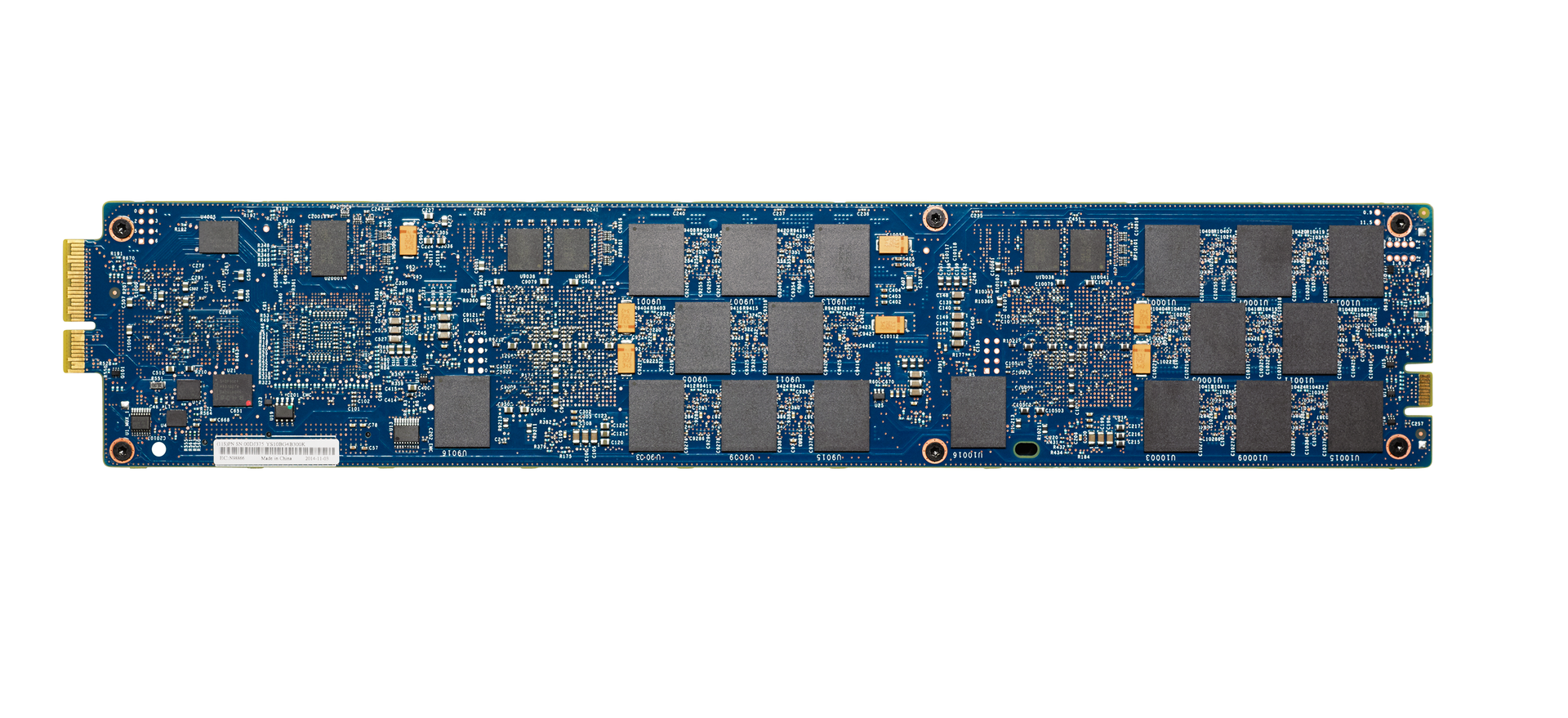

Specifications

Basic principles

This storage system is an all-flash array whose high performance comes from IBM MicroLatency modules and an optimized use of MLC flash.

When we asked our presales engineer which technologies provide fault tolerance and how much capacity is actually hidden inside (IBM claims 11.4 TB of usable space), he gave an evasive answer.

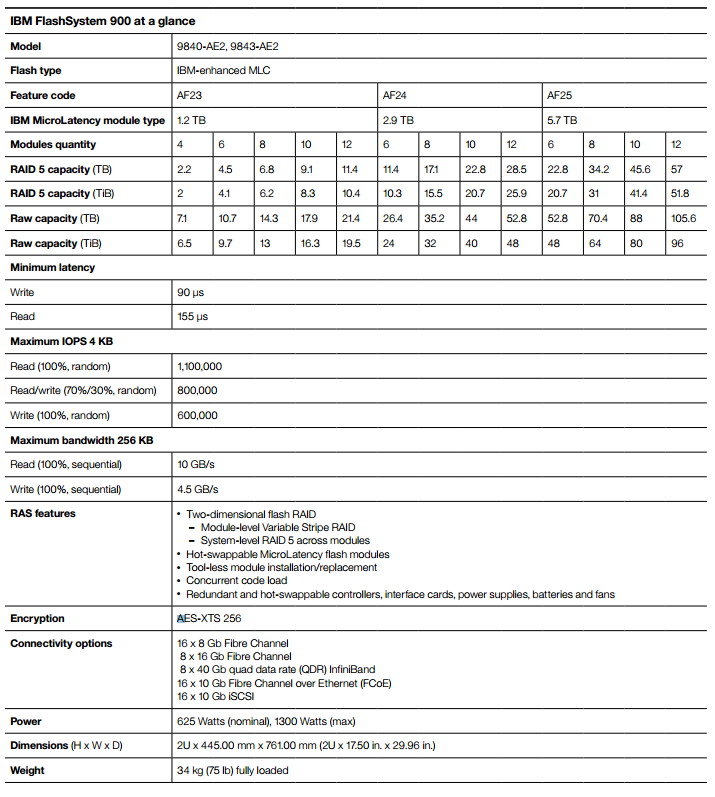

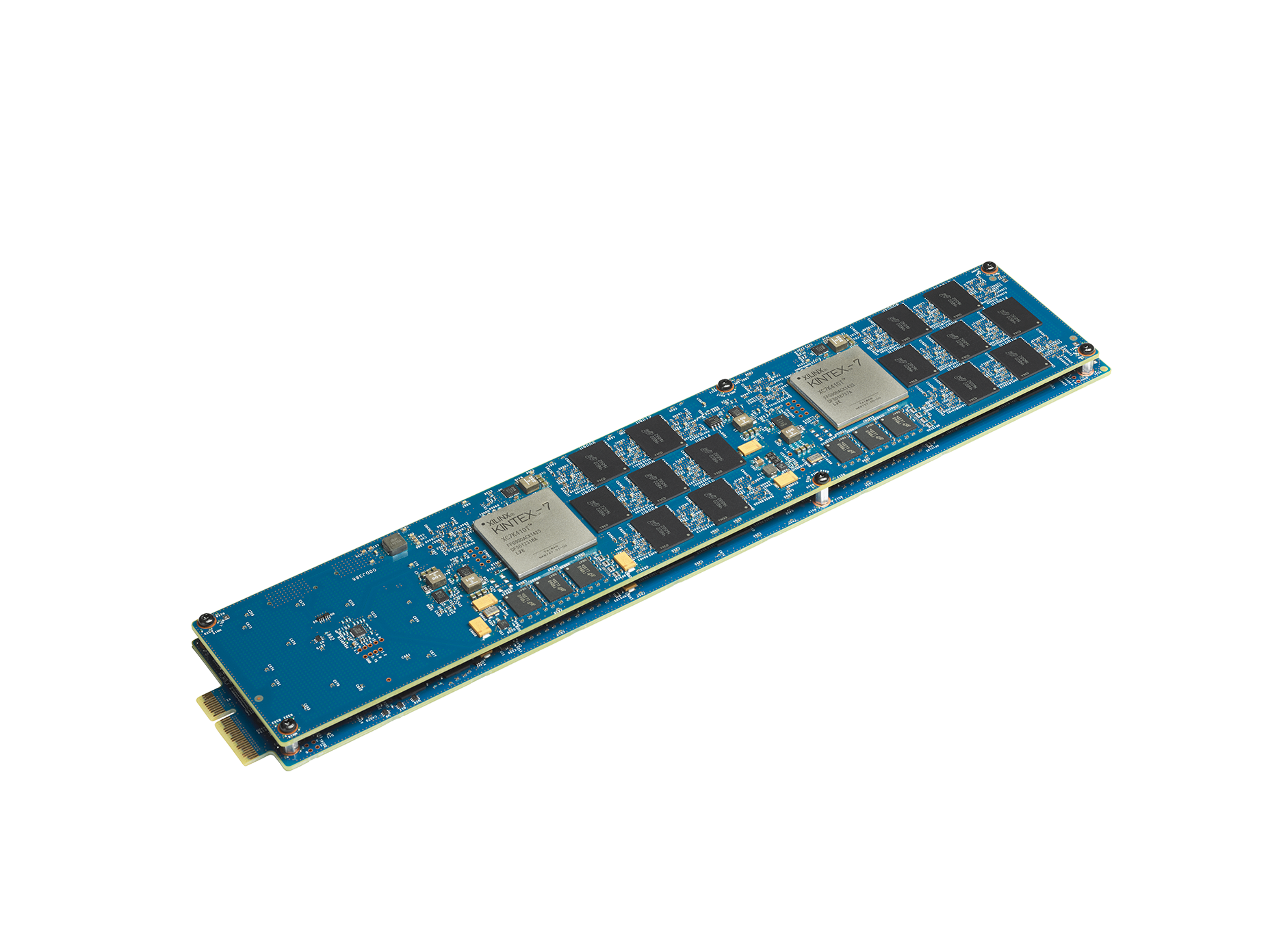

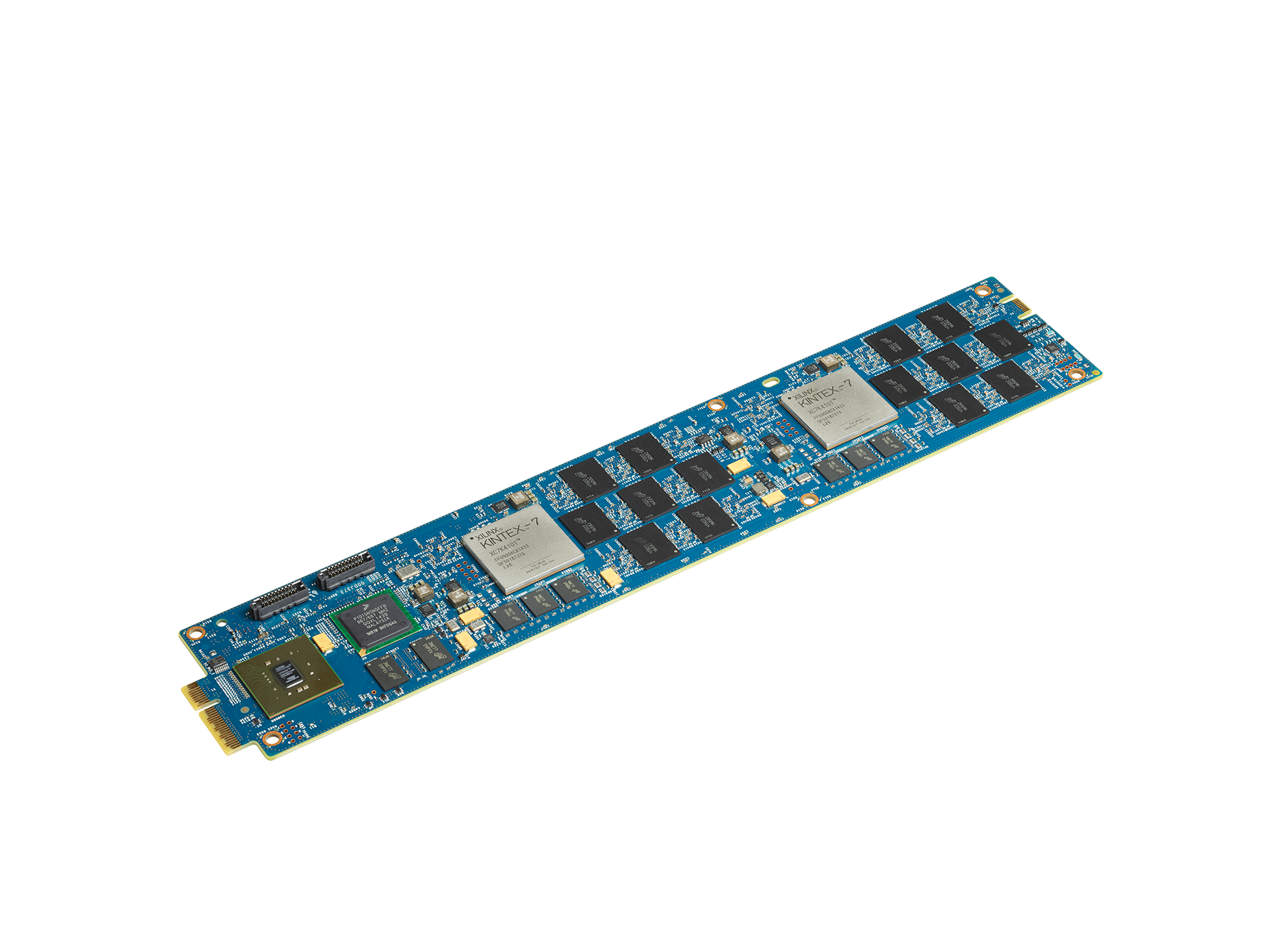

As it turned out, things are not so simple. Each module contains memory chips and four FPGA controllers, on top of which a RAID with a variable stripe (Variable Stripe RAID, VSR) is built.

Module internals, two double-sided boards

Each chip in a module is divided into so-called layers. Across layer N of all the chips inside a module, a variable-length RAID 5 stripe is built.

If one layer on a chip fails, the stripe length shrinks and the failed memory region is no longer used. Because the modules carry surplus memory cells, usable capacity is preserved. As it turned out, the system holds well over 20 TB of raw flash, i.e. an overhead close to that of RAID 10, and thanks to this redundancy the array does not need a full rebuild when a single chip fails.
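The stripe-shrinking idea can be illustrated with a toy model. This is a minimal sketch assuming plain XOR parity (as in ordinary RAID 5) and one block per chip layer; the function names are hypothetical, and this is not IBM's actual VSR implementation, whose internals are not described here.

```python
from functools import reduce


def xor_blocks(blocks):
    """Bytewise XOR of equally sized blocks, i.e. RAID 5 parity."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def build_stripe(data_blocks):
    """RAID 5 stripe: the data blocks followed by one parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def reconstruct(stripe, failed):
    """Recover the member at index `failed` by XOR-ing all surviving members."""
    return xor_blocks([blk for i, blk in enumerate(stripe) if i != failed])


def shrink_stripe(stripe, failed):
    """Toy 'variable stripe' step after one chip layer fails.

    The lost member is rebuilt from the survivors, then the data is re-striped
    over one fewer chip. The narrower stripe holds one data block less, so the
    last data block is returned separately, to be placed in spare capacity.
    """
    members = list(stripe)
    members[failed] = reconstruct(stripe, failed)
    data = members[:-1]  # the last member is parity
    return build_stripe(data[:-1]), data[-1]


# Hypothetical stripe over 4 chip layers: 3 data blocks + 1 parity block.
stripe = build_stripe([b"\x01\x02", b"\x0f\x00", b"\xf0\x10"])
narrower, displaced = shrink_stripe(stripe, failed=1)
print(len(stripe), len(narrower))  # 4 3
```

In this model the data share of a stripe of width w drops from (w-1)/w to (w-2)/(w-1) after a failure (3/4 to 2/3 above), which is why surplus cells are needed to keep the usable capacity unchanged.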

On top of the RAID inside each module, FlashSystem combines the modules themselves into a standard RAID 5.

Thus, with this level of fault tolerance, a system of 12 modules rated at 1.2 TB each (per the module label) yields a little more than 10 TB.
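The arithmetic behind that figure can be roughly reconstructed as follows. This is a sketch under explicit assumptions not stated in the text: besides RAID 5 parity, one module is held back as a hot spare, and "a little more than 10 TB" is read in binary units (TiB).

```python
MODULES = 12
MODULE_GB = 1200      # capacity printed on each module (decimal GB)
PARITY_MODULES = 1    # RAID 5 across modules: one module's worth of parity
SPARE_MODULES = 1     # assumption: one module kept as a hot spare


def capacity_breakdown():
    """Return nominal GB, usable GB, and usable TiB under the assumptions above."""
    nominal_gb = MODULES * MODULE_GB
    data_modules = MODULES - PARITY_MODULES - SPARE_MODULES
    usable_gb = data_modules * MODULE_GB
    usable_tib = usable_gb * 10**9 / 2**40  # decimal GB -> binary TiB
    return nominal_gb, usable_gb, usable_tib


nominal, usable, usable_tib = capacity_breakdown()
print(f"nominal {nominal / 1000:.1f} TB, usable {usable / 1000:.1f} TB = {usable_tib:.2f} TiB")
# nominal 14.4 TB, usable 12.0 TB = 10.91 TiB
```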

Web interface

Yes, it turned out to be an old friend (hello, v7k clusters), complete with the dreadful habit of picking up its interface language from the browser locale. FlashSystem's management interface looks like Storwize's, but the two differ significantly in functionality. The FlashSystem software is used for configuration and monitoring; the virtualization software layer is not available, so the array cannot act as a virtualization gateway, because the two systems are designed for different tasks.

Testing

After receiving the system from a partner, we install it in a rack and connect it to our existing infrastructure.

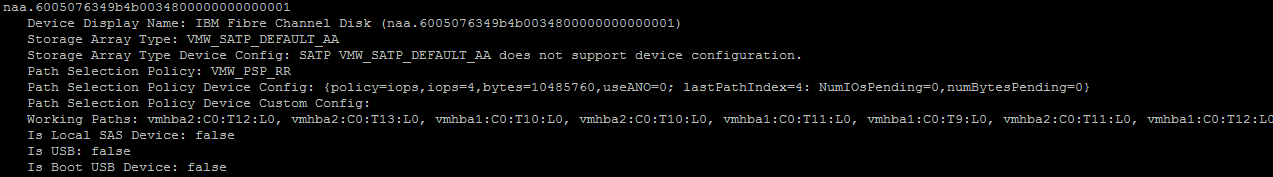

We cable the storage system according to a pre-agreed scheme, configure zoning, and check that it is visible from the virtualization environment. Next, we prepare the test bench: four blade servers connected to the storage system under test through two independent 16 Gb Fibre Channel fabrics.

Wiring diagram

Since IT Park leases out virtual machines, the test evaluates the performance of both a single virtual machine and a whole cluster of virtual machines running on vSphere 5.5.

We tune the hosts a little: configure multipathing (the Round Robin path selection policy and its limit on the number of I/Os per path) and increase the queue depth in the FC HBA driver.
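To make the Round Robin setting concrete, here is a toy model of how such a policy spreads I/O across paths: a fixed number of consecutive I/Os goes down one path before the host switches to the next. The function and path names are hypothetical; on ESXi the real knob is the I/O operation limit of the VMW_PSP_RR policy, whose default is 1000. The exact value used in this test is not stated.

```python
from itertools import cycle


def assign_paths(num_ios, paths, iops_limit):
    """Toy Round Robin: send `iops_limit` consecutive I/Os down one path, then switch."""
    if iops_limit < 1:
        raise ValueError("iops_limit must be at least 1")
    order = cycle(paths)
    current = next(order)
    sent_on_current = 0
    assignment = []
    for _ in range(num_ios):
        if sent_on_current == iops_limit:
            current = next(order)  # limit reached: move to the next path
            sent_on_current = 0
        assignment.append(current)
        sent_on_current += 1
    return assignment


print(assign_paths(5, ["fabric-A", "fabric-B"], iops_limit=2))
# ['fabric-A', 'fabric-A', 'fabric-B', 'fabric-B', 'fabric-A']
```

With a large limit, a burst of small I/Os tends to stay on one path; a lower limit interleaves the paths more finely, which is why it is often lowered for flash arrays.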

ESXi Settings

Our settings may differ from yours!

On each blade server we create one virtual machine (16 GHz CPU, 8 GB RAM, 50 GB system disk) and attach four virtual disks to it, each on its own FlashSystem LUN and its own Paravirtual SCSI controller.

VM settings

We ran synthetic tests with a small 4K block (read and write) and a large 256K block (read and write). The storage system steadily delivered 750k IOPS, which looked very good to us even against the sky-high 1.1M IOPS announced by the manufacturer. Keep in mind that all the I/O passes through the hypervisor and the guest OS drivers.
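For a sense of scale, IOPS translate into bandwidth as IOPS times block size. A quick sketch, assuming the 4K block means 4 KiB (4096 bytes) and that both the measured 750k and the declared 1.1M IOPS refer to that block size:

```python
BLOCK_BYTES = 4 * 1024  # assumption: "4K" means 4 KiB


def bandwidth_gb_per_s(iops, block_bytes=BLOCK_BYTES):
    """Throughput in decimal GB/s for a given IOPS rate and block size."""
    return iops * block_bytes / 1e9


measured = bandwidth_gb_per_s(750_000)
declared = bandwidth_gb_per_s(1_100_000)
print(f"measured {measured:.2f} GB/s, declared {declared:.2f} GB/s")
# measured 3.07 GB/s, declared 4.51 GB/s
```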

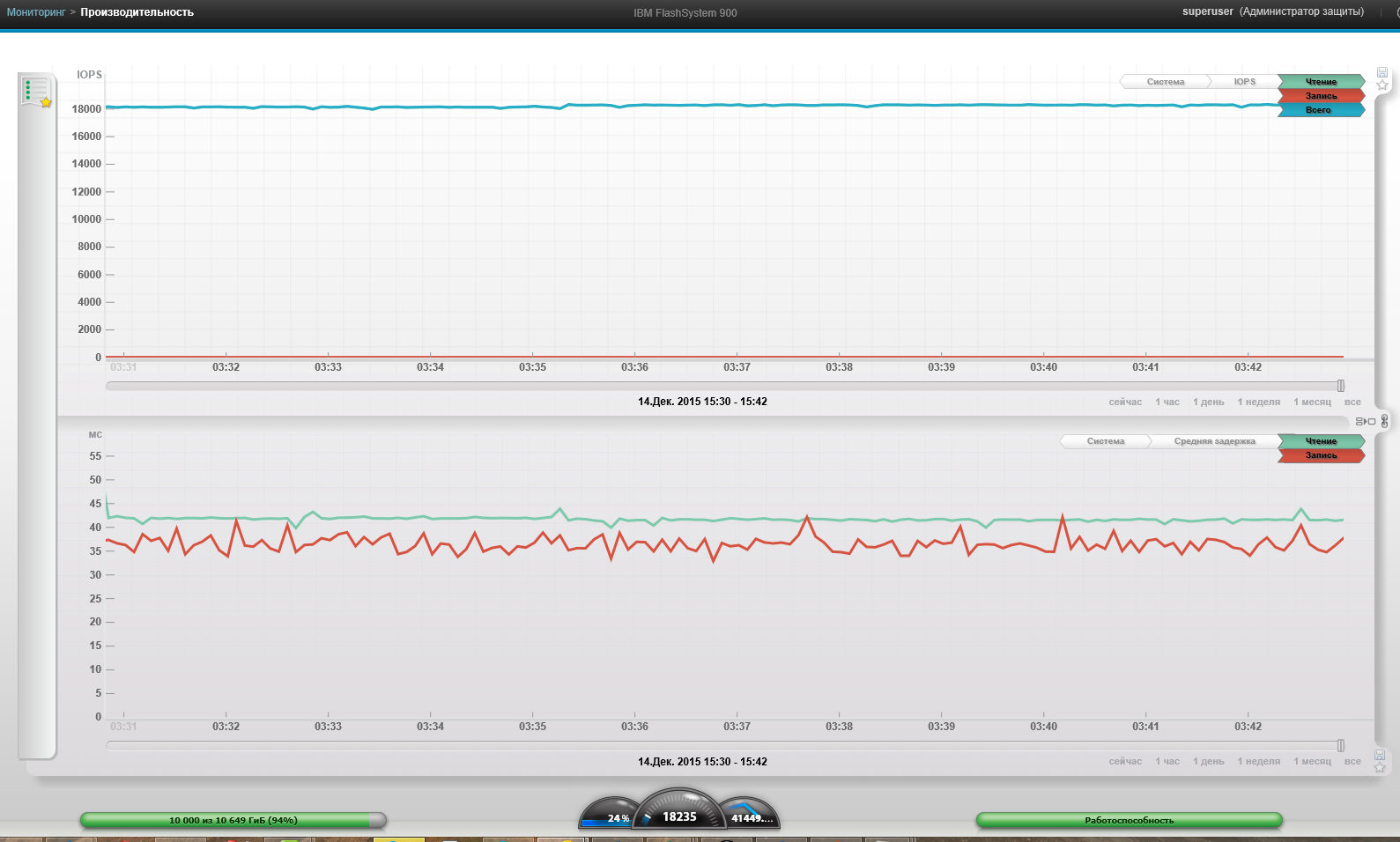

Graphs of IOPS and latency and, as it seems to me, notrim

1 VM, 4K block, 100% read, 100% random. When the whole load came from a single virtual machine, the performance graph was uneven, jumping between 300k and 400k IOPS. On average we got about 400k IOPS.

4 VMs, 4K block, 100% read, 100% random

4 VMs, 4K block, 0% read, 100% random

4 VMs, 4K block, 0% read, 100% random, after 12 hours. We saw no drops in performance.

1 VM, 256K block, 0% read, 0% random

4 VMs, 256K block, 100% read, 0% random

4 VMs, 256K block, 0% read, 0% random

Maximum system throughput (4 VMs, 256K block, 100% read, 0% random)

Also note that, as with all well-known vendors, the declared performance is achieved only under ideal lab conditions (a huge number of SAN uplinks, a specific LUN layout, dedicated servers with RISC architecture, and specially tuned load generators).

Conclusions

Pros: huge performance, easy setup, user-friendly interface.

Cons: scaling beyond the capacity of a single system is done only with additional shelves. "Advanced" functionality (snapshots, replication, compression) is moved to the storage virtualization layer. IBM has built a clear hierarchy of storage systems headed by a storage virtualizer (SAN Volume Controller or Storwize V7000), which provides multi-tier storage, virtualization, and centralized management of your storage network.

Bottom line: IBM FlashSystem 900 does its job of handling hundreds of thousands of I/Os per second. On our test infrastructure we achieved 68% of the performance declared by the manufacturer, which still gives an impressive performance density per TB.
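The 68% figure follows directly from the measured and declared IOPS, and performance density can be estimated the same way. A rough sketch, assuming 750k measured IOPS, 1.1M declared IOPS, and about 10 TB of usable capacity (the "a little more than 10 TB" above, rounded down, so the density comes out slightly high):

```python
MEASURED_IOPS = 750_000
DECLARED_IOPS = 1_100_000
USABLE_TB = 10  # assumption: "a little more than 10 TB", rounded down

share_of_declared = MEASURED_IOPS / DECLARED_IOPS
iops_per_tb = MEASURED_IOPS / USABLE_TB
print(f"{share_of_declared:.0%} of declared, ~{iops_per_tb:,.0f} IOPS per TB")
# 68% of declared, ~75,000 IOPS per TB
```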